Commit 6b78b29 · Parent: 463b2ce · Update README.md

README.md (changed, `@@ -1,3 +1,66 @@`)


---
license: llama2
---

!!! THIS IS A PLACEHOLDER !!!
!!! MODEL COMING SOON !!!

# CyberBase 8k - (llama-2-13b - lmsys/vicuna-13b-v1.5-16k)

A base cybersecurity model intended for further fine-tuning; it is not recommended for use on its own.

- **CyberBase** is [lmsys/vicuna-13b-v1.5-16k](https://huggingface.co/lmsys/vicuna-13b-v1.5-16k) fine-tuned with QLoRA on [CyberNative/github_cybersecurity_READMEs](https://huggingface.co/datasets/CyberNative/github_cybersecurity_READMEs)
- **sequence_len:** 8192
- **lora_r:** 128
- **lora_alpha:** 16
- **num_epochs:** 2
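
The `lora_r` and `lora_alpha` values above combine in the standard LoRA formulation: the frozen weight's output is augmented by a low-rank update `B @ A` scaled by `lora_alpha / lora_r` (here 16 / 128 = 0.125). The pure-Python sketch below illustrates that arithmetic and the trainable-parameter count for one adapted projection. It is not the CyberBase training code: it ignores the 4-bit quantization of the base weights that QLoRA adds, and the 5120 x 5120 projection shape is an assumption based on Llama-2-13B's hidden size.

```python
"""Minimal pure-Python sketch of a LoRA-adapted linear layer.

Assumption: standard LoRA, where the frozen weight W is augmented by a
low-rank update scaled by lora_alpha / lora_r. The 4-bit quantization
that QLoRA applies to W is not modeled here.
"""
from __future__ import annotations

LORA_R = 128      # lora_r from the list above
LORA_ALPHA = 16   # lora_alpha from the list above


def lora_scale(r: int, alpha: int) -> float:
    """Scaling factor applied to the low-rank update B @ A."""
    return alpha / r


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, r, alpha):
    """Compute W x + (alpha / r) * B (A x).

    w: d_out x d_in frozen weight, a: r x d_in, b: d_out x r.
    """
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    s = lora_scale(r, alpha)
    return [y + s * u for y, u in zip(base, update)]


def adapter_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters added by one adapter: A (r x d_in) plus B (d_out x r)."""
    return r * d_in + d_out * r


if __name__ == "__main__":
    print(lora_scale(LORA_R, LORA_ALPHA))        # 0.125
    # Hypothetical 5120 x 5120 projection (assumed Llama-2-13B hidden size).
    print(adapter_params(5120, 5120, LORA_R))     # 1310720
    # Toy example: r = 2, alpha = 4 (scale 2.0) on a 2-dimensional input.
    w = [[1.0, 0.0], [0.0, 1.0]]
    a = [[1.0, 1.0], [0.0, 0.0]]
    b = [[1.0, 0.0], [0.0, 0.0]]
    print(lora_forward(w, a, b, [1.0, 2.0], r=2, alpha=4))  # [7.0, 2.0]
```

Since the scale is `alpha / r`, the configuration above (a large rank with a small alpha) applies a comparatively small multiplier to the learned update.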

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

---
inference: false
license: llama2
---

# Vicuna Model Card

## Model Details

Vicuna is a chat assistant trained by fine-tuning Llama 2 on user-shared conversations collected from ShareGPT.

- **Developed by:** [LMSYS](https://lmsys.org/)
- **Model type:** An auto-regressive language model based on the transformer architecture
- **License:** Llama 2 Community License Agreement
- **Finetuned from model:** [Llama 2](https://arxiv.org/abs/2307.09288)

### Model Sources

- **Repository:** https://github.com/lm-sys/FastChat
- **Blog:** https://lmsys.org/blog/2023-03-30-vicuna/
- **Paper:** https://arxiv.org/abs/2306.05685
- **Demo:** https://chat.lmsys.org/

## Uses

The primary use of Vicuna is research on large language models and chatbots.
The primary intended users of the model are researchers and hobbyists in natural language processing, machine learning, and artificial intelligence.

## How to Get Started with the Model

- Command line interface: https://github.com/lm-sys/FastChat#vicuna-weights
- APIs (OpenAI API, Hugging Face API): https://github.com/lm-sys/FastChat/tree/main#api
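
The FastChat tools linked above handle prompt formatting automatically. For calling the weights directly, the sketch below assembles a prompt in the style of FastChat's `vicuna_v1.1` conversation template, which is assumed here to also apply to v1.5: a fixed system message, `USER:`/`ASSISTANT:` turns, a space after each user turn and `</s>` after each assistant reply, and a trailing `ASSISTANT:` for the model to complete. The exact strings are an assumption to verify against the FastChat repository, and whether CyberBase (fine-tuned on README text) still expects this format is not stated in this card.

```python
"""Sketch of a Vicuna v1.1-style prompt builder.

Assumption: mirrors FastChat's "vicuna_v1.1" template (system message,
"USER"/"ASSISTANT" roles, separators " " and "</s>"). Verify against
FastChat before relying on it.
"""
from __future__ import annotations

from typing import Optional

SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)
ROLES = ("USER", "ASSISTANT")
SEPS = (" ", "</s>")  # appended after user turns and after assistant turns


def build_prompt(turns: list[tuple[str, Optional[str]]]) -> str:
    """turns: (role, message) pairs; a message of None marks the slot to generate."""
    prompt = SYSTEM + SEPS[0]
    for i, (role, message) in enumerate(turns):
        if message:
            prompt += f"{role}: {message}{SEPS[i % 2]}"
        else:
            prompt += f"{role}:"
    return prompt


def single_turn(user_message: str) -> str:
    """Prompt for one user message, leaving the assistant reply open."""
    return build_prompt([(ROLES[0], user_message), (ROLES[1], None)])


if __name__ == "__main__":
    print(single_turn("What is a CVE?"))
    # ...polite answers to the user's questions. USER: What is a CVE? ASSISTANT:
```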

## Training Details

Vicuna v1.5 (16k) is fine-tuned from Llama 2 with supervised instruction fine-tuning and linear RoPE scaling.
The training data is around 125K conversations collected from ShareGPT.com. These conversations are packed into sequences that contain 16K tokens each.
See more details in the "Training Details of Vicuna Models" section in the appendix of this [paper](https://arxiv.org/pdf/2306.05685.pdf).
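
Linear RoPE scaling can be sketched as dividing each token position by a constant factor before computing the rotary angles, so that positions up to 16K map back into the range Llama 2 saw during pre-training. The sketch below assumes a factor of 4 (16,384 / 4,096, Llama 2's original context length), a rotary base of 10,000, and a head dimension of 128; these values are illustrative assumptions, not read from the model's configuration.

```python
"""Sketch of rotary position embedding (RoPE) angles with linear scaling.

Assumptions (illustrative, not read from the model config): rotary base
10000, head dimension 128, linear scaling factor 4.0 (16384 / 4096).
"""
from __future__ import annotations

import math

BASE = 10000.0
HEAD_DIM = 128
SCALE = 16384 / 4096  # 4.0


def inv_frequencies(head_dim: int = HEAD_DIM, base: float = BASE) -> list[float]:
    """theta_i = base^(-2i/d) for each rotated pair i = 0 .. d/2 - 1."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def rope_angles(position: int, scale: float = 1.0) -> list[float]:
    """Rotation angle for each pair: (position / scale) * theta_i.

    scale = 1.0 is plain RoPE; scale > 1.0 is linear scaling, which
    interpolates positions back into the pre-training range.
    """
    p = position / scale
    return [p * theta for theta in inv_frequencies()]


def rotate_pair(x0: float, x1: float, angle: float) -> tuple[float, float]:
    """Apply a 2-D rotation by `angle` to one (x0, x1) feature pair."""
    c, s = math.cos(angle), math.sin(angle)
    return x0 * c - x1 * s, x0 * s + x1 * c


if __name__ == "__main__":
    # With scale 4, position 16380 gets the same angles as position 4095 unscaled.
    scaled = rope_angles(16380, SCALE)
    plain = rope_angles(4095)
    print(all(math.isclose(a, b) for a, b in zip(scaled, plain)))  # True
```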

| 57 |

+

|

| 58 |

+

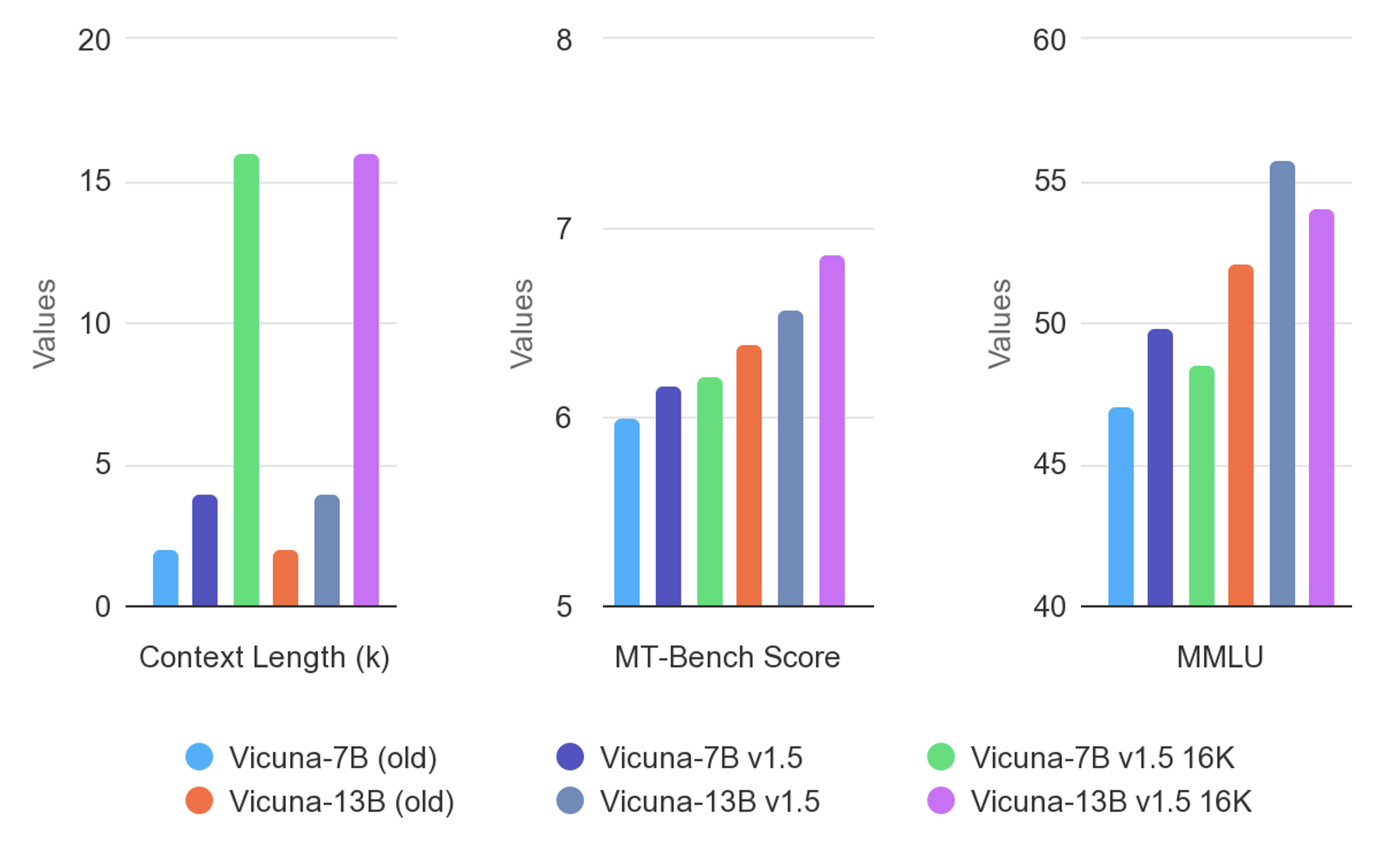

## Evaluation

|

Vicuna is evaluated with standard benchmarks, human preference, and LLM-as-a-judge. See more details in this [paper](https://arxiv.org/pdf/2306.05685.pdf) and [leaderboard](https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard).
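
The leaderboard linked above ranks models from pairwise preference votes. As one illustration of how such votes can become ratings, the sketch below applies the classic online Elo update; the K-factor of 32 and the initial rating of 1000 are assumptions, the model names are hypothetical, and the leaderboard's actual rating method may differ (for example, a Bradley-Terry fit).

```python
"""Sketch of online Elo updates from pairwise preference votes.

Assumptions: K-factor 32, initial rating 1000, ties scored as 0.5.
Model names in the example are hypothetical.
"""
from __future__ import annotations

K = 32.0
INITIAL = 1000.0


def expected_score(r_a: float, r_b: float) -> float:
    """Expected score of A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update(ratings: dict[str, float], a: str, b: str, outcome: float) -> None:
    """outcome: 1.0 if A wins, 0.0 if B wins, 0.5 for a tie."""
    r_a = ratings.setdefault(a, INITIAL)
    r_b = ratings.setdefault(b, INITIAL)
    e_a = expected_score(r_a, r_b)
    ratings[a] = r_a + K * (outcome - e_a)
    # B's actual and expected scores are the complements of A's.
    ratings[b] = r_b + K * ((1.0 - outcome) - (1.0 - e_a))


if __name__ == "__main__":
    ratings: dict[str, float] = {}
    votes = [("model-x", "model-y", 1.0), ("model-x", "model-y", 0.5)]
    for a, b, outcome in votes:
        update(ratings, a, b, outcome)
    print(ratings)
```

Each update moves the two ratings by equal and opposite amounts, so the total rating is conserved.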

## Differences between Vicuna versions

See [vicuna_weights_version.md](https://github.com/lm-sys/FastChat/blob/main/docs/vicuna_weights_version.md)