---
license: apache-2.0
tags:
- code
- text-generation-inference
- pretrained
language:
- en
pipeline_tag: text-generation
---

# KAI-7B

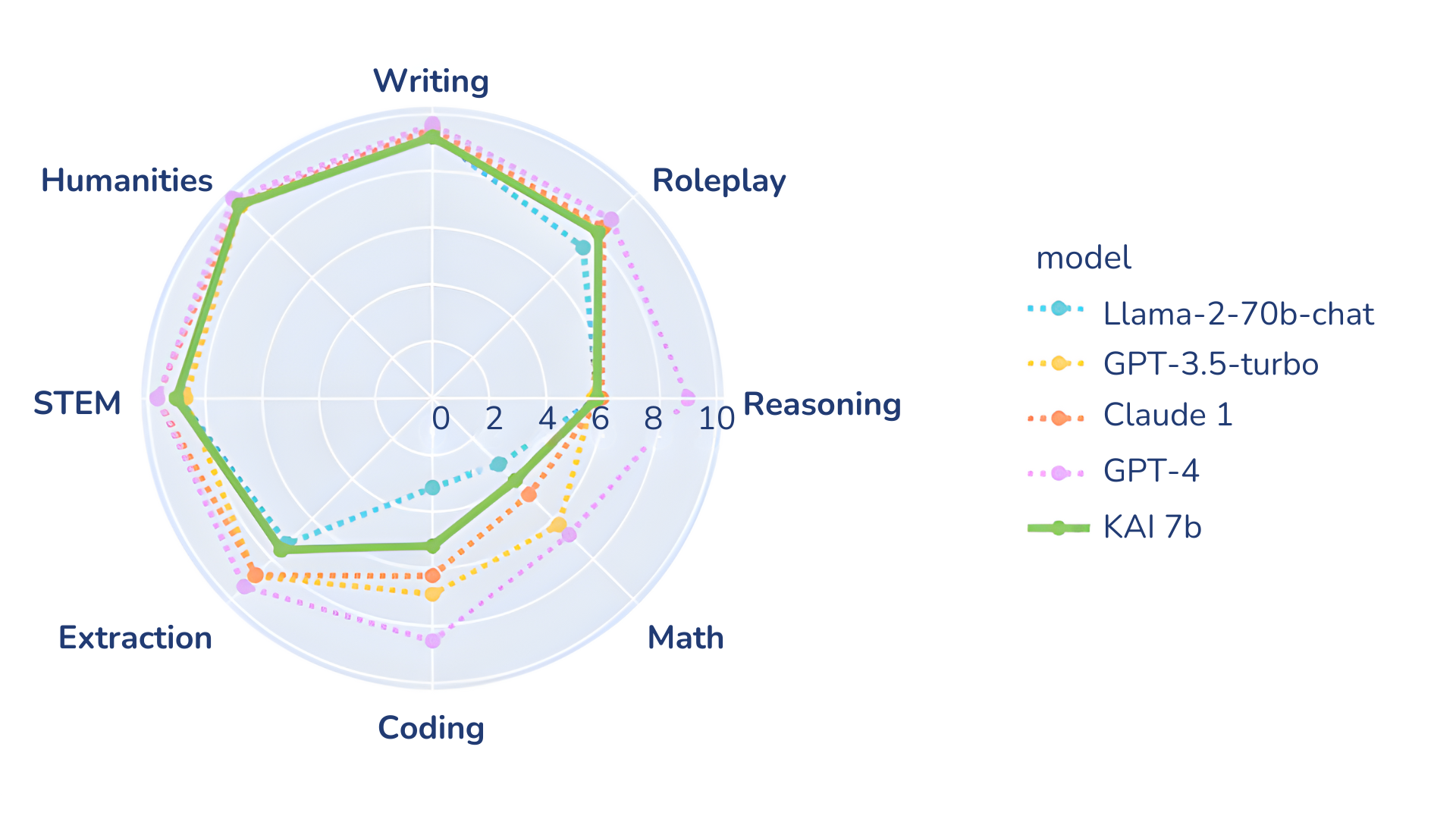

The KAI-7B Large Language Model (LLM) is a fine-tuned generative text model based on Mistral 7B. With over 7 billion parameters, KAI-7B outperforms its closest competitor, Meta's Llama 2 7B, in all benchmarks we tested.


As the benchmark above shows, KAI-7B excels in STEM but needs work in math and coding.


## Notice

KAI-7B is a pretrained base model and therefore does not have any moderation mechanisms.
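
Because the model ships without moderation, applications may want to screen prompts and completions themselves. The following is a minimal sketch of one way to do that, not part of KAI-7B or any library: `BLOCKED_TERMS`, `is_allowed`, and `moderated_generate` are hypothetical names, and the keyword list is only a stand-in for a real moderation model or service. The `generate` argument is assumed to be any callable that maps a prompt string to a completion string.

```python
from typing import Callable, Iterable

# Hypothetical placeholder terms (assumption). A real deployment would likely
# use a dedicated moderation model or service instead of a keyword list.
BLOCKED_TERMS = ("example-blocked-term",)


def is_allowed(text: str, blocked_terms: Iterable[str] = BLOCKED_TERMS) -> bool:
    """Return False if any blocked term occurs in the text (case-insensitive)."""
    lowered = text.lower()
    return not any(term.lower() in lowered for term in blocked_terms)


def moderated_generate(
    generate: Callable[[str], str],
    prompt: str,
    refusal: str = "[output withheld by moderation filter]",
) -> str:
    """Screen both the prompt and the completion around a generation callable."""
    if not is_allowed(prompt):
        return refusal
    completion = generate(prompt)
    return completion if is_allowed(completion) else refusal
```

In practice, `generate` would wrap whatever inference call the application already uses for KAI-7B. Keeping the filter separate from the model call makes it easy to swap the keyword check for a stronger classifier later.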