Upload 10 files

- README.md +62 -231

- config.json +1 -5

- flax_model.msgpack +3 -0

- gitattributes.txt +0 -1

- model.safetensors +3 -0

- pytorch_model.bin +2 -2

- tf_model.h5 +2 -2

- tokenizer.json +0 -0

- tokenizer_config.json +1 -1

- vocab.txt +0 -0

README.md CHANGED

@@ -1,251 +1,82 @@

````diff
 ---
-language:
 tags:
 - exbert
-license: apache-2.0
-datasets:
-- bookcorpus
-- wikipedia
 ---
 
-
-
-
-[this paper](https://arxiv.org/abs/1810.04805) and first released in
-[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference
-between english and English.
-
-Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by
-the Hugging Face team.
-
-## Model description
-
-BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it
-was pretrained on the raw texts only, with no humans labeling them in any way (which is why it can use lots of
-publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it
-was pretrained with two objectives:
-
-- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run
-the entire masked sentence through the model and has to predict the masked words. This is different from traditional
-recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like
-GPT which internally masks the future tokens. It allows the model to learn a bidirectional representation of the
-sentence.
-- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes
-they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to
-predict if the two sentences were following each other or not.
-
-This way, the model learns an inner representation of the English language that can then be used to extract features
-useful for downstream tasks: if you have a dataset of labeled sentences, for instance, you can train a standard
-classifier using the features produced by the BERT model as inputs.
-
-## Model variations
-
-BERT has originally been released in base and large variations, for cased and uncased input text. The uncased models also strips out an accent markers.
-Chinese and multilingual uncased and cased versions followed shortly after.
-Modified preprocessing with whole word masking has replaced subpiece masking in a following work, with the release of two models.
-Other 24 smaller models are released afterward.
-
-The detailed release history can be found on the [google-research/bert readme](https://github.com/google-research/bert/blob/master/README.md) on github.
-
-| Model | #params | Language |
-|------------------------|--------------------------------|-------|
-| [`bert-base-uncased`](https://huggingface.co/bert-base-uncased) | 110M | English |
-| [`bert-large-uncased`](https://huggingface.co/bert-large-uncased) | 340M | English | sub
-| [`bert-base-cased`](https://huggingface.co/bert-base-cased) | 110M | English |
-| [`bert-large-cased`](https://huggingface.co/bert-large-cased) | 340M | English |
-| [`bert-base-chinese`](https://huggingface.co/bert-base-chinese) | 110M | Chinese |
-| [`bert-base-multilingual-cased`](https://huggingface.co/bert-base-multilingual-cased) | 110M | Multiple |
-| [`bert-large-uncased-whole-word-masking`](https://huggingface.co/bert-large-uncased-whole-word-masking) | 340M | English |
-| [`bert-large-cased-whole-word-masking`](https://huggingface.co/bert-large-cased-whole-word-masking) | 340M | English |
-
-## Intended uses & limitations
-
-You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to
-be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for
-fine-tuned versions of a task that interests you.
-
-Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)
-to make decisions, such as sequence classification, token classification or question answering. For tasks such as text
-generation you should look at model like GPT2.
-
-### How to use
-
-You can use this model directly with a pipeline for masked language modeling:
-
-```python
->>> from transformers import pipeline
->>> unmasker = pipeline('fill-mask', model='bert-base-uncased')
->>> unmasker("Hello I'm a [MASK] model.")
-
-[{'sequence': "[CLS] hello i'm a fashion model. [SEP]",
-'score': 0.1073106899857521,
-'token': 4827,
-'token_str': 'fashion'},
-{'sequence': "[CLS] hello i'm a role model. [SEP]",
-'score': 0.08774490654468536,
-'token': 2535,
-'token_str': 'role'},
-{'sequence': "[CLS] hello i'm a new model. [SEP]",
-'score': 0.05338378623127937,
-'token': 2047,
-'token_str': 'new'},
-{'sequence': "[CLS] hello i'm a super model. [SEP]",
-'score': 0.04667217284440994,
-'token': 3565,
-'token_str': 'super'},
-{'sequence': "[CLS] hello i'm a fine model. [SEP]",
-'score': 0.027095865458250046,
-'token': 2986,
-'token_str': 'fine'}]
-```
 
-
 
-
-
-
-
-
-
-
-
 
-and in TensorFlow:
 
-
-
-
-model = TFBertModel.from_pretrained("bert-base-uncased")
-text = "Replace me by any text you'd like."
-encoded_input = tokenizer(text, return_tensors='tf')
-output = model(encoded_input)
-```
 
-
-
-
-
-
-
-
->>> unmasker = pipeline('fill-mask', model='bert-base-uncased')
->>> unmasker("The man worked as a [MASK].")
-
-[{'sequence': '[CLS] the man worked as a carpenter. [SEP]',
-'score': 0.09747550636529922,
-'token': 10533,
-'token_str': 'carpenter'},
-{'sequence': '[CLS] the man worked as a waiter. [SEP]',
-'score': 0.0523831807076931,
-'token': 15610,
-'token_str': 'waiter'},
-{'sequence': '[CLS] the man worked as a barber. [SEP]',
-'score': 0.04962705448269844,
-'token': 13362,
-'token_str': 'barber'},
-{'sequence': '[CLS] the man worked as a mechanic. [SEP]',
-'score': 0.03788609802722931,
-'token': 15893,
-'token_str': 'mechanic'},
-{'sequence': '[CLS] the man worked as a salesman. [SEP]',
-'score': 0.037680890411138535,
-'token': 18968,
-'token_str': 'salesman'}]
-
->>> unmasker("The woman worked as a [MASK].")
-
-[{'sequence': '[CLS] the woman worked as a nurse. [SEP]',
-'score': 0.21981462836265564,
-'token': 6821,
-'token_str': 'nurse'},
-{'sequence': '[CLS] the woman worked as a waitress. [SEP]',
-'score': 0.1597415804862976,
-'token': 13877,
-'token_str': 'waitress'},
-{'sequence': '[CLS] the woman worked as a maid. [SEP]',
-'score': 0.1154729500412941,
-'token': 10850,
-'token_str': 'maid'},
-{'sequence': '[CLS] the woman worked as a prostitute. [SEP]',
-'score': 0.037968918681144714,
-'token': 19215,
-'token_str': 'prostitute'},
-{'sequence': '[CLS] the woman worked as a cook. [SEP]',
-'score': 0.03042375110089779,
-'token': 5660,
-'token_str': 'cook'}]
 ```
 
-
 
-
 
-
-
-
 
-
 
-
 
-
-then of the form:
 
-
-
-
 
-
-
-consecutive span of text usually longer than a single sentence. The only constrain is that the result with the two
-"sentences" has a combined length of less than 512 tokens.
-
````
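The removed card's next-sentence-prediction description and its 512-token limit can be illustrated with a minimal sketch. It assumes already-tokenized sentences, uses the `[CLS] A [SEP] B [SEP]` layout visible in the pipeline outputs above, and picks the true next sentence with probability 0.5; that split is an assumption, since the card only says "sometimes". Trimming the longer segment until the pair fits is likewise an illustrative choice, and `make_nsp_example` and `truncate_pair` are hypothetical helpers, not part of any library.

```python
import random
from typing import List, Tuple

CLS, SEP = "[CLS]", "[SEP]"


def truncate_pair(a: List[str], b: List[str], max_len: int) -> None:
    """Trim the longer segment in place until [CLS] a [SEP] b [SEP] fits in max_len tokens."""
    budget = max_len - 3  # reserve room for [CLS] and the two [SEP] tokens
    while len(a) + len(b) > budget:
        longer = a if len(a) >= len(b) else b
        longer.pop()  # drop the last token of the longer segment


def make_nsp_example(
    doc: List[List[str]],
    idx: int,
    corpus: List[List[List[str]]],
    rng: random.Random,
    max_len: int = 512,
) -> Tuple[List[str], List[int], bool]:
    """Pair sentence idx of doc with its successor or a random sentence (hypothetical helper)."""
    a = list(doc[idx])
    # Assumed 50/50 IsNext split; the last sentence of a document has no successor
    is_next = idx + 1 < len(doc) and rng.random() < 0.5
    if is_next:
        b = list(doc[idx + 1])
    else:
        # A real pipeline would avoid accidentally sampling the true next sentence here
        b = list(rng.choice(rng.choice(corpus)))
    truncate_pair(a, b, max_len)
    tokens = [CLS] + a + [SEP] + b + [SEP]
    segment_ids = [0] * (len(a) + 2) + [1] * (len(b) + 1)  # sentence A vs. sentence B
    return tokens, segment_ids, is_next
```

Counting the three special tokens against the budget is what keeps the whole sequence within `max_len`.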

````diff
-The details of the masking procedure for each sentence are the following:
-- 15% of the tokens are masked.
-- In 80% of the cases, the masked tokens are replaced by `[MASK]`.
-- In 10% of the cases, the masked tokens are replaced by a random token (different) from the one they replace.
-- In the 10% remaining cases, the masked tokens are left as is.
````
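The 15% selection and 80/10/10 replacement rule listed in the removed card can be sketched as below. This is an illustration under stated assumptions rather than the original preprocessing code: it selects `round(15%)` of the non-special positions (a per-token coin flip would also fit the card's wording), never masks `[CLS]` or `[SEP]`, and draws random replacements from a caller-supplied `vocab` list. `mask_tokens` is a hypothetical name.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]", MASK}


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt ~15% of non-special tokens with the 80/10/10 rule (hypothetical helper).

    Returns the corrupted inputs and per-position labels: the original token where
    the model must make a prediction, None everywhere else.
    """
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    candidates = [i for i, t in enumerate(tokens) if t not in SPECIAL]
    n_selected = min(len(candidates), max(1, round(len(candidates) * mask_prob)))
    for i in rng.sample(candidates, n_selected):
        labels[i] = tokens[i]
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: replace with [MASK]
        elif r < 0.9:
            # 10%: replace with a random token different from the original
            inputs[i] = rng.choice([w for w in vocab if w != tokens[i] and w not in SPECIAL])
        # remaining 10%: leave the token unchanged (it is still a prediction target)
    return inputs, labels
```

Keeping some selected tokens unchanged means the model cannot assume that every unmasked token is already correct.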

````diff
-
-### Pretraining
-
-The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size
-of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer
-used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,
-learning rate warmup for 10,000 steps and linear decay of the learning rate after.
````
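The warmup-then-linear-decay schedule from the removed Pretraining paragraph can be written as a small function. As an assumption, the decay here runs from the peak at the end of warmup down to zero at the final step (one million steps, per the card); implementations differ in exactly where the decay line starts, so treat `bert_lr` as a hypothetical sketch. The Adam betas and the 0.01 weight decay are not modeled.

```python
def bert_lr(
    step: int,
    peak_lr: float = 1e-4,
    warmup_steps: int = 10_000,
    total_steps: int = 1_000_000,
) -> float:
    """Learning rate at `step`: linear warmup to peak_lr, then linear decay to 0 (sketch)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps  # ramps from 0 up to peak_lr
    remaining = max(0, total_steps - step)
    # Assumption: decay starts at the end of warmup and reaches 0 at total_steps
    return peak_lr * remaining / (total_steps - warmup_steps)


for s in (0, 5_000, 10_000, 505_000, 1_000_000):
    print(s, bert_lr(s))
```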

````diff
-
-## Evaluation results
-
-When fine-tuned on downstream tasks, this model achieves the following results:
-
-Glue test results:
-
-| Task | MNLI-(m/mm) | QQP | QNLI | SST-2 | CoLA | STS-B | MRPC | RTE | Average |
-|:----:|:-----------:|:----:|:----:|:-----:|:----:|:-----:|:----:|:----:|:-------:|
-| | 84.6/83.4 | 71.2 | 90.5 | 93.5 | 52.1 | 85.8 | 88.9 | 66.4 | 79.6 |
-
-
-### BibTeX entry and citation info
-
-```bibtex
-@article{DBLP:journals/corr/abs-1810-04805,
-author = {Jacob Devlin and
-Ming{-}Wei Chang and
-Kenton Lee and
-Kristina Toutanova},
-title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language
-Understanding},
-journal = {CoRR},
-volume = {abs/1810.04805},
-year = {2018},
-url = {http://arxiv.org/abs/1810.04805},
-archivePrefix = {arXiv},
-eprint = {1810.04805},
-timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},
-biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},
-bibsource = {dblp computer science bibliography, https://dblp.org}
-}
-```
 
-
-
-
````

| 1 |

---

|

| 2 |

+

language: de

|

| 3 |

+

license: mit

|

| 4 |

+

thumbnail: https://static.tildacdn.com/tild6438-3730-4164-b266-613634323466/german_bert.png

|

| 5 |

tags:

|

| 6 |

- exbert

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 |

---

|

| 8 |

|

| 9 |

+

<a href="https://huggingface.co/exbert/?model=bert-base-german-cased">

|

| 10 |

+

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

|

| 11 |

+

</a>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 12 |

|

| 13 |

+

# German BERT

|

| 14 |

+

|

| 15 |

+

## Overview

|

| 16 |

+

**Language model:** bert-base-cased

|

| 17 |

+

**Language:** German

|

| 18 |

+

**Training data:** Wiki, OpenLegalData, News (~ 12GB)

|

| 19 |

+

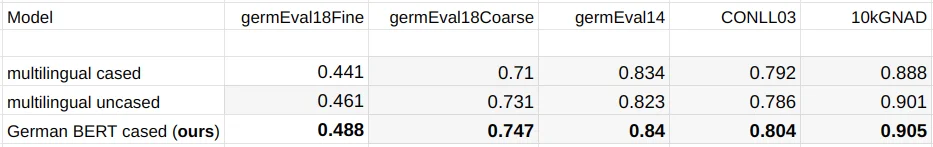

**Eval data:** Conll03 (NER), GermEval14 (NER), GermEval18 (Classification), GNAD (Classification)

|

| 20 |

+

**Infrastructure**: 1x TPU v2

|

| 21 |

+

**Published**: Jun 14th, 2019

|

| 22 |

|

| 23 |

+

**Update April 3rd, 2020**: we updated the vocabulary file on deepset's s3 to conform with the default tokenization of punctuation tokens.

|

| 24 |

+

For details see the related [FARM issue](https://github.com/deepset-ai/FARM/issues/60). If you want to use the old vocab we have also uploaded a ["deepset/bert-base-german-cased-oldvocab"](https://huggingface.co/deepset/bert-base-german-cased-oldvocab) model.

|

| 25 |

+

|

| 26 |

+

## Details

|

| 27 |

+

- We trained using Google's Tensorflow code on a single cloud TPU v2 with standard settings.

|

| 28 |

+

- We trained 810k steps with a batch size of 1024 for sequence length 128 and 30k steps with sequence length 512. Training took about 9 days.

|

| 29 |

+

- As training data we used the latest German Wikipedia dump (6GB of raw txt files), the OpenLegalData dump (2.4 GB) and news articles (3.6 GB).

|

| 30 |

+

- We cleaned the data dumps with tailored scripts and segmented sentences with spacy v2.1. To create tensorflow records we used the recommended sentencepiece library for creating the word piece vocabulary and tensorflow scripts to convert the text to data usable by BERT.

|

| 31 |

|

|

|

|

| 32 |

|

| 33 |

+

See https://deepset.ai/german-bert for more details

|

| 34 |

+

|

| 35 |

+

## Hyperparameters

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 36 |

|

| 37 |

+

```

|

| 38 |

+

batch_size = 1024

|

| 39 |

+

n_steps = 810_000

|

| 40 |

+

max_seq_len = 128 (and 512 later)

|

| 41 |

+

learning_rate = 1e-4

|

| 42 |

+

lr_schedule = LinearWarmup

|

| 43 |

+

num_warmup_steps = 10_000

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 44 |

```
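These hyperparameters give a rough upper bound on how many token positions the 128-token phase processed: steps × batch size × sequence length. The 512-token phase (30k steps, per the Details list) is left out because the card does not state its batch size, and the bound assumes every sequence fills all 128 positions. `token_positions` is a hypothetical helper.

```python
def token_positions(steps: int, batch_size: int, seq_len: int) -> int:
    """Upper bound on token positions processed, assuming every sequence fills seq_len."""
    return steps * batch_size * seq_len


# 128-token phase of German BERT: n_steps = 810_000, batch_size = 1024, max_seq_len = 128
phase1 = token_positions(810_000, 1024, 128)
print(f"{phase1:,}")  # prints 106,168,320,000
```

That is roughly 106 billion token positions for the first phase alone.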

````diff
 
+## Performance
 
+During training we monitored the loss and evaluated different model checkpoints on the following German datasets:
 
+- germEval18Fine: Macro f1 score for multiclass sentiment classification
+- germEval18coarse: Macro f1 score for binary sentiment classification
+- germEval14: Seq f1 score for NER (file names deuutf.\*)
+- CONLL03: Seq f1 score for NER
+- 10kGNAD: Accuracy for document classification
````
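The two F1 variants named in this list can be sketched in plain Python. `macro_f1` averages per-class F1 without weighting, the metric listed for the GermEval18 tasks; `seq_f1` scores exact-match entity spans extracted from BIO tags, which is one common reading of "Seq f1". The card does not say which scorer or tagging scheme was used, so `macro_f1`, `spans`, and `seq_f1` are hypothetical sketches.

```python
from collections import Counter
from typing import Optional, Sequence, Set, Tuple


def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp: Counter = Counter()
    fp: Counter = Counter()
    fn: Counter = Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[g] += 1  # missed the true label g
    labels = set(gold) | set(pred)
    scores = [2 * tp[c] / (2 * tp[c] + fp[c] + fn[c]) for c in labels]
    return sum(scores) / len(scores) if scores else 0.0


def spans(tags: Sequence[str]) -> Set[Tuple[str, int, int]]:
    """Collect (type, start, end) entity spans from BIO tags; end is exclusive."""
    out: Set[Tuple[str, int, int]] = set()
    start: Optional[int] = None
    label: Optional[str] = None
    for i, tag in enumerate(list(tags) + ["O"]):  # trailing "O" closes an open span
        starts_new = tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label)
        if tag == "O" or starts_new:
            if label is not None:
                out.add((label, start, i))
            start, label = (i, tag[2:]) if starts_new else (None, None)
    return out


def seq_f1(gold: Sequence[Sequence[str]], pred: Sequence[Sequence[str]]) -> float:
    """Micro-averaged span F1: a span counts only if its type and both boundaries match."""
    tp = n_gold = n_pred = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = spans(g_tags), spans(p_tags)
        tp += len(g & p)
        n_gold += len(g)
        n_pred += len(p)
    # F1 = 2PR / (P + R) simplifies to 2 * tp / (n_gold + n_pred)
    return 2 * tp / (n_gold + n_pred) if n_gold + n_pred else 0.0
```

Macro averaging gives every class equal weight, so a rare class with poor F1 pulls the score down as much as a frequent one.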

|

| 55 |

|

| 56 |

+

Even without thorough hyperparameter tuning, we observed quite stable learning especially for our German model. Multiple restarts with different seeds produced quite similar results.

|

| 57 |

+

|

| 58 |

+

|

| 59 |

|

| 60 |

+

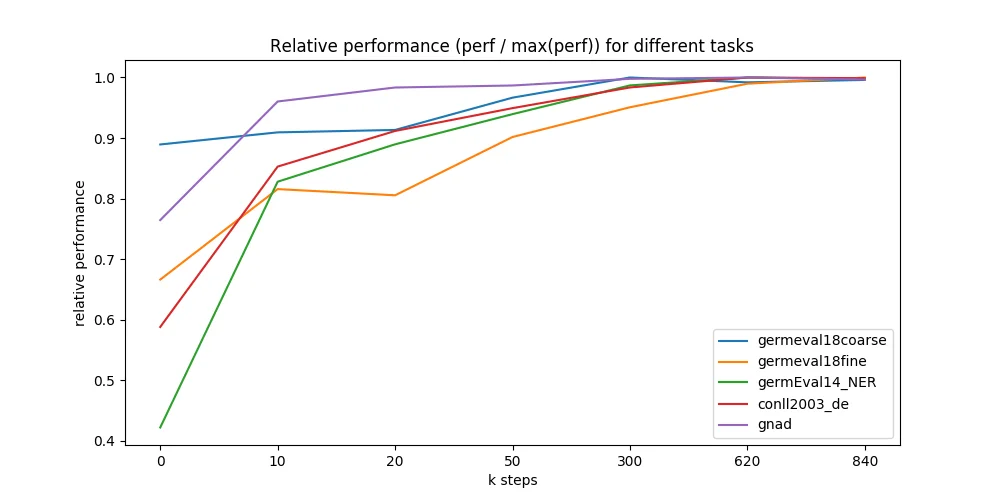

We further evaluated different points during the 9 days of pre-training and were astonished how fast the model converges to the maximally reachable performance. We ran all 5 downstream tasks on 7 different model checkpoints - taken at 0 up to 840k training steps (x-axis in figure below). Most checkpoints are taken from early training where we expected most performance changes. Surprisingly, even a randomly initialized BERT can be trained only on labeled downstream datasets and reach good performance (blue line, GermEval 2018 Coarse task, 795 kB trainset size).

|

| 61 |

|

| 62 |

+

|

|

|

|

| 63 |

|

| 64 |

+

## Authors

|

| 65 |

+

- Branden Chan: `branden.chan [at] deepset.ai`

|

| 66 |

+

- Timo Möller: `timo.moeller [at] deepset.ai`

|

| 67 |

+

- Malte Pietsch: `malte.pietsch [at] deepset.ai`

|

| 68 |

+

- Tanay Soni: `tanay.soni [at] deepset.ai`

|

| 69 |

|

| 70 |

+

## About us

|

| 71 |

+

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 72 |

|

| 73 |

+

We bring NLP to the industry via open source!

|

| 74 |

+

Our focus: Industry specific language models & large scale QA systems.

|

| 75 |

+

|

| 76 |

+

Some of our work:

|

| 77 |

+

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

|

| 78 |

+

- [FARM](https://github.com/deepset-ai/FARM)

|

| 79 |

+

- [Haystack](https://github.com/deepset-ai/haystack/)

|

| 80 |

+

|

| 81 |

+

Get in touch:

|

| 82 |

+

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Website](https://deepset.ai)

|

config.json CHANGED

```diff
@@ -3,7 +3,6 @@
 "BertForMaskedLM"
 ],
 "attention_probs_dropout_prob": 0.1,
-"gradient_checkpointing": false,
 "hidden_act": "gelu",
 "hidden_dropout_prob": 0.1,
 "hidden_size": 768,
@@ -15,9 +14,6 @@
 "num_attention_heads": 12,
 "num_hidden_layers": 12,
 "pad_token_id": 0,
-"position_embedding_type": "absolute",
-"transformers_version": "4.6.0.dev0",
 "type_vocab_size": 2,
-"
-"vocab_size": 30522
+"vocab_size": 30000
 }
```
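This commit changes `vocab_size` from 30522 to 30000 and also replaces `vocab.txt`, whose diff is not rendered. Because the word-embedding table is sized by `vocab_size`, a mismatch with the tokenizer's vocabulary would likely surface as shape or index errors. A quick consistency check, assuming the usual BERT `vocab.txt` layout of one token per line, might look like this; `check_vocab_size` is a hypothetical helper.

```python
import json
from pathlib import Path


def check_vocab_size(config_path: Path, vocab_path: Path) -> int:
    """Return the vocab.txt entry count, raising if it disagrees with config.json's vocab_size."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    with vocab_path.open(encoding="utf-8") as f:
        n_entries = sum(1 for _ in f)  # assumes one WordPiece token per line
    if config["vocab_size"] != n_entries:
        raise ValueError(
            f"config.json has vocab_size={config['vocab_size']}, "
            f"but vocab.txt has {n_entries} entries"
        )
    return n_entries
```

Run against this repository's files, the check should return 30000 if `vocab.txt` matches the new config.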

flax_model.msgpack ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fb127d4c33e1a89ab189883c405ad620a0e084144c7af743ff6bd4f530492812
+size 436458787
```
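Each weight file in this commit is stored as a Git LFS pointer: a `version` line, an `oid` carrying the SHA-256 of the real file, and its `size` in bytes. The minimal sketch below parses such a pointer and checks a downloaded file against it; `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, not part of the git-lfs tooling.

```python
import hashlib
from pathlib import Path
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    return fields


def matches_pointer(blob: Path, pointer: Dict[str, str]) -> bool:
    """Check a local file's size and SHA-256 digest against the pointer's size and oid."""
    if blob.stat().st_size != int(pointer["size"]):
        return False  # cheap size check before hashing hundreds of MB
    digest = hashlib.sha256()
    with blob.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):  # hash in 1 MiB chunks
            digest.update(chunk)
    algo, _, expected = pointer["oid"].partition(":")
    return algo == "sha256" and digest.hexdigest() == expected
```

A downloaded `flax_model.msgpack`, for example, should match the size and oid listed in its pointer above.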

gitattributes.txt CHANGED

```diff
@@ -7,5 +7,4 @@
 *.ot filter=lfs diff=lfs merge=lfs -text
 *.onnx filter=lfs diff=lfs merge=lfs -text
 *.msgpack filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
 model.safetensors filter=lfs diff=lfs merge=lfs -text
```

model.safetensors ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a89b4ca42f0a785b35a7c9da3044fdee3225424afb76a12c3c124de51a359c97
+size 438844124
```

pytorch_model.bin CHANGED

```diff
@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:56a21938415b06a68b870e4b1b3413cdd532ae6456fefef1ee5a852faf52f806
+size 438869143
```

tf_model.h5 CHANGED

```diff
@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:3318e2e8ae8e2349b13965ef7ed82b7ce04217cb5a9b382d0a342bb16572d84e
+size 532854392
```

tokenizer.json CHANGED

The diff for this file is too large to render.

tokenizer_config.json CHANGED

```diff
@@ -1 +1 @@
-{"do_lower_case":
+{"do_lower_case": false, "model_max_length": 512}
```
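With `do_lower_case: false`, a BERT-style basic tokenizer leaves casing and umlauts intact for this German model, whereas the removed English card describes uncased models that also strip accent markers. The simplified sketch below shows what that flag toggles in the basic tokenization step that runs before WordPiece. Assumptions: only Unicode punctuation categories (`P*`) are split off, while reference BERT tokenizers may treat additional ASCII symbols as punctuation, and WordPiece itself is omitted; `basic_tokenize` is a hypothetical helper.

```python
import unicodedata
from typing import List


def basic_tokenize(text: str, do_lower_case: bool) -> List[str]:
    """Whitespace split, optional lowercasing plus accent stripping, then punctuation split."""
    tokens: List[str] = []
    for word in text.split():
        if do_lower_case:
            word = word.lower()
            # NFD splits "ü" into "u" + a combining mark (category Mn), which is then dropped
            word = "".join(
                ch for ch in unicodedata.normalize("NFD", word)
                if unicodedata.category(ch) != "Mn"
            )
        current = ""
        for ch in word:
            if unicodedata.category(ch).startswith("P"):
                if current:
                    tokens.append(current)
                    current = ""
                tokens.append(ch)  # each punctuation character becomes its own token
            else:
                current += ch
        if current:
            tokens.append(current)
    return tokens


print(basic_tokenize("Grüße, Müller!", do_lower_case=False))
print(basic_tokenize("Grüße, Müller!", do_lower_case=True))
```

For German, the uncased path would merge "Müller" and "Muller" into one form, which is one reason a cased model with `do_lower_case: false` suits this vocabulary.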

vocab.txt CHANGED

The diff for this file is too large to render.