Update README.md

README.md

CHANGED

|

@@ -11,14 +11,25 @@ pipeline_tag: text-generation

|

|

| 11 |

|

| 12 |

Unreleased, untested, unfinished beta.

|

| 13 |

|

| 14 |

# Evaluations

|

| 15 |

|

| 16 |

We've only done very limited testing as yet. The [epoch 4.5 checkpoint](https://huggingface.co/Open-Orca/oo-phi-1_5/commit/aa05eb2596d6d11951695d2e327616188d768880) scores above 5 on MT-Bench (better than Alpaca-13B, worse than Llama2-7b-chat), while preliminary benchmarks suggest peak average performance was achieved roughly at epoch 4.

|

| 17 |

|

| 18 |

|

| 19 |

|

| 20 |

|

| 21 |

-

MT-bench

|

| 22 |

```

|

| 23 |

Mode: single

|

| 24 |

Input file: data/mt_bench/model_judgment/gpt-4_single.jsonl

|

|

@@ -39,6 +50,7 @@ model

|

|

| 39 |

oo-phi-1_5 5.03125

|

| 40 |

```

|

| 41 |

|

|

|

|

| 42 |

# Training

|

| 43 |

|

| 44 |

Trained with full-parameter fine-tuning on 8x RTX A6000-48GB (Ampere) for 5 epochs over 62 hours (12.5h/epoch) at a commodity cost of $390 ($80/epoch).

|

|

@@ -47,6 +59,12 @@ We did not use [MultiPack](https://github.com/imoneoi/multipack_sampler) packing

|

|

| 47 |

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

|

| 48 |

|

| 49 |

|

| 50 |

# Inference

|

| 51 |

|

| 52 |

Remove *`.to('cuda')`* to run without GPU acceleration.

|

|

@@ -123,4 +141,40 @@ In terms of programming tasks, I am particularly skilled in:

|

|

| 123 |

10. Fraud Detection: I can detect and prevent fraudulent activities, protecting users' financial information and ensuring secure transactions.

|

| 124 |

|

| 125 |

These programming tasks showcase my ability to understand and process vast amounts of information while adapting to different contexts and user needs. As an AI, I continuously learn and evolve to become even more effective in assisting users.<|im_end|>

|

| 126 |

```

|

|

|

|

| 11 |

|

| 12 |

Unreleased, untested, unfinished beta.

|

| 13 |

|

| 14 |

+

We've trained Microsoft Research's [phi-1.5](https://huggingface.co/microsoft/phi-1_5), a 1.3B-parameter model, on the same OpenOrca dataset that we used for our [OpenOrcaxOpenChat-Preview2-13B](https://huggingface.co/Open-Orca/OpenOrcaxOpenChat-Preview2-13B) model.

|

| 15 |

+

|

| 16 |

+

This model doesn't dramatically improve on the base model's general task performance, but the instruction tuning has made the model reliably handle the ChatML prompt format.

|

| 17 |

+

|

| 18 |

+

|

| 19 |

# Evaluations

|

| 20 |

|

| 21 |

We've only done very limited testing as yet. The [epoch 4.5 checkpoint](https://huggingface.co/Open-Orca/oo-phi-1_5/commit/aa05eb2596d6d11951695d2e327616188d768880) scores above 5 on MT-Bench (better than Alpaca-13B, worse than Llama2-7b-chat), while preliminary benchmarks suggest peak average performance was achieved roughly at epoch 4.

|

| 22 |

|

| 23 |

+

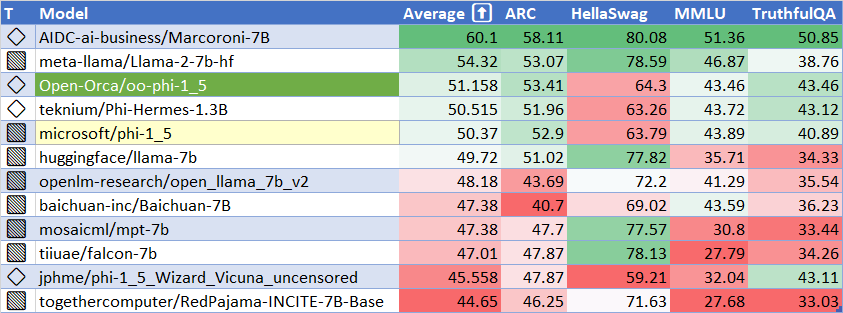

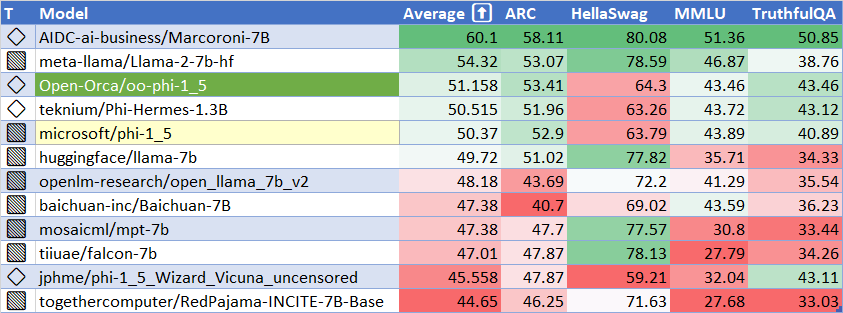

## HuggingFaceH4 Open LLM Leaderboard Performance

|

| 24 |

+

|

| 25 |

+

The only significant improvement was with TruthfulQA.

|

| 26 |

+

|

| 27 |

|

| 28 |

|

| 29 |

|

| 30 |

+

## MT-bench Performance

|

| 31 |

+

|

| 32 |

+

Epoch 4.5 result:

|

| 33 |

```

|

| 34 |

Mode: single

|

| 35 |

Input file: data/mt_bench/model_judgment/gpt-4_single.jsonl

|

|

|

|

| 50 |

oo-phi-1_5 5.03125

|

| 51 |

```

|

| 52 |

|

| 53 |

+

|

| 54 |

# Training

|

| 55 |

|

| 56 |

Trained with full-parameter fine-tuning on 8x RTX A6000-48GB (Ampere) for 5 epochs over 62 hours (12.5h/epoch) at a commodity cost of $390 ($80/epoch).
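The per-epoch figures in the line above look like rounded values. As a rough check, the sketch below divides the stated totals evenly across epochs and GPUs. The even split and the variable names are assumptions for illustration; the README does not state an hourly rate.

```python
# Totals as stated in the Training section.
total_hours = 62
total_cost_usd = 390
epochs = 5
gpus = 8

# Assumption: time and cost are spread evenly across epochs and GPUs.
hours_per_epoch = total_hours / epochs          # 12.4 h, quoted as ~12.5h/epoch
cost_per_epoch = total_cost_usd / epochs        # 78 USD, quoted as ~$80/epoch
node_rate = total_cost_usd / total_hours        # USD per hour for the 8-GPU node
gpu_rate = node_rate / gpus                     # USD per GPU-hour

print(f"{hours_per_epoch:.1f} h/epoch, ${cost_per_epoch:.0f}/epoch")
print(f"${node_rate:.2f}/h for the node, ${gpu_rate:.2f} per GPU-hour")
```

Under these assumptions the implied rental price is a little under $0.80 per GPU-hour.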

|

|

|

|

| 59 |

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

|

| 60 |

|

| 61 |

|

| 62 |

+

# Prompt Template

|

| 63 |

+

|

| 64 |

+

We used [OpenAI's Chat Markup Language (ChatML)](https://github.com/openai/openai-python/blob/main/chatml.md) format, with `<|im_start|>` and `<|im_end|>` tokens added to support this.

|

| 65 |

+

This means that, e.g., in [oobabooga](https://github.com/oobabooga/text-generation-webui/) the `MPT-Chat` instruction template should work.
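As a minimal sketch of what a ChatML prompt looks like, the helper below renders a list of messages using the `<|im_start|>` and `<|im_end|>` tokens mentioned above. The function name `format_chatml` and the exact newline placement are assumptions based on the general ChatML convention, not code taken from this repository.

```python
def format_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts as a ChatML string.

    Hypothetical helper for illustration; whitespace follows the common
    ChatML layout and is assumed rather than confirmed for this model.
    """
    parts = []
    for message in messages:
        # Each turn is wrapped as: <|im_start|>role\ncontent<|im_end|>\n
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = format_chatml(
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, who are you?"},
    ]
)
print(prompt)
```

The resulting string can be passed to the tokenizer as ordinary text; generation would then normally stop at the `<|im_end|>` token.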

|

| 66 |

+

|

| 67 |

+

|

| 68 |

# Inference

|

| 69 |

|

| 70 |

Remove *`.to('cuda')`* to run without GPU acceleration.

|

|

|

|

| 141 |

10. Fraud Detection: I can detect and prevent fraudulent activities, protecting users' financial information and ensuring secure transactions.

|

| 142 |

|

| 143 |

These programming tasks showcase my ability to understand and process vast amounts of information while adapting to different contexts and user needs. As an AI, I continuously learn and evolve to become even more effective in assisting users.<|im_end|>

|

| 144 |

+

```

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

# Citation

|

| 148 |

+

|

| 149 |

+

```bibtex

|

| 150 |

+

@software{lian2023oophi15,

|

| 151 |

+

title = {OpenOrca oo-phi-1.5: Phi-1.5 1.3B Model Instruct-tuned on Filtered OpenOrcaV1 GPT-4 Dataset},

|

| 152 |

+

author = {Wing Lian and Bleys Goodson and Guan Wang and Eugene Pentland and Austin Cook and Chanvichet Vong and "Teknium"},

|

| 153 |

+

year = {2023},

|

| 154 |

+

publisher = {HuggingFace},

|

| 155 |

+

journal = {HuggingFace repository},

|

| 156 |

+

howpublished = {\url{https://huggingface.co/Open-Orca/oo-phi-1_5}},

|

| 157 |

+

}

|

| 158 |

+

@article{textbooks2,

|

| 159 |

+

title={Textbooks Are All You Need II: \textbf{phi-1.5} technical report},

|

| 160 |

+

author={Li, Yuanzhi and Bubeck, S{\'e}bastien and Eldan, Ronen and Del Giorno, Allie and Gunasekar, Suriya and Lee, Yin Tat},

|

| 161 |

+

journal={arXiv preprint arXiv:2309.05463},

|

| 162 |

+

year={2023}

|

| 163 |

+

}

|

| 164 |

+

@misc{mukherjee2023orca,

|

| 165 |

+

title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},

|

| 166 |

+

author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},

|

| 167 |

+

year={2023},

|

| 168 |

+

eprint={2306.02707},

|

| 169 |

+

archivePrefix={arXiv},

|

| 170 |

+

primaryClass={cs.CL}

|

| 171 |

+

}

|

| 172 |

+

@misc{longpre2023flan,

|

| 173 |

+

title={The Flan Collection: Designing Data and Methods for Effective Instruction Tuning},

|

| 174 |

+

author={Shayne Longpre and Le Hou and Tu Vu and Albert Webson and Hyung Won Chung and Yi Tay and Denny Zhou and Quoc V. Le and Barret Zoph and Jason Wei and Adam Roberts},

|

| 175 |

+

year={2023},

|

| 176 |

+

eprint={2301.13688},

|

| 177 |

+

archivePrefix={arXiv},

|

| 178 |

+

primaryClass={cs.AI}

|

| 179 |

+

}

|

| 180 |

```

|