Upload folder using huggingface_hub (#2)

- e6c68e93a28adc079b58231ba80da60b9ff60b0fb940223c82d1c4daac04a3db (6b074cb063a69c1935adb35d139ff826f6b62181)

- base_results.json +19 -0

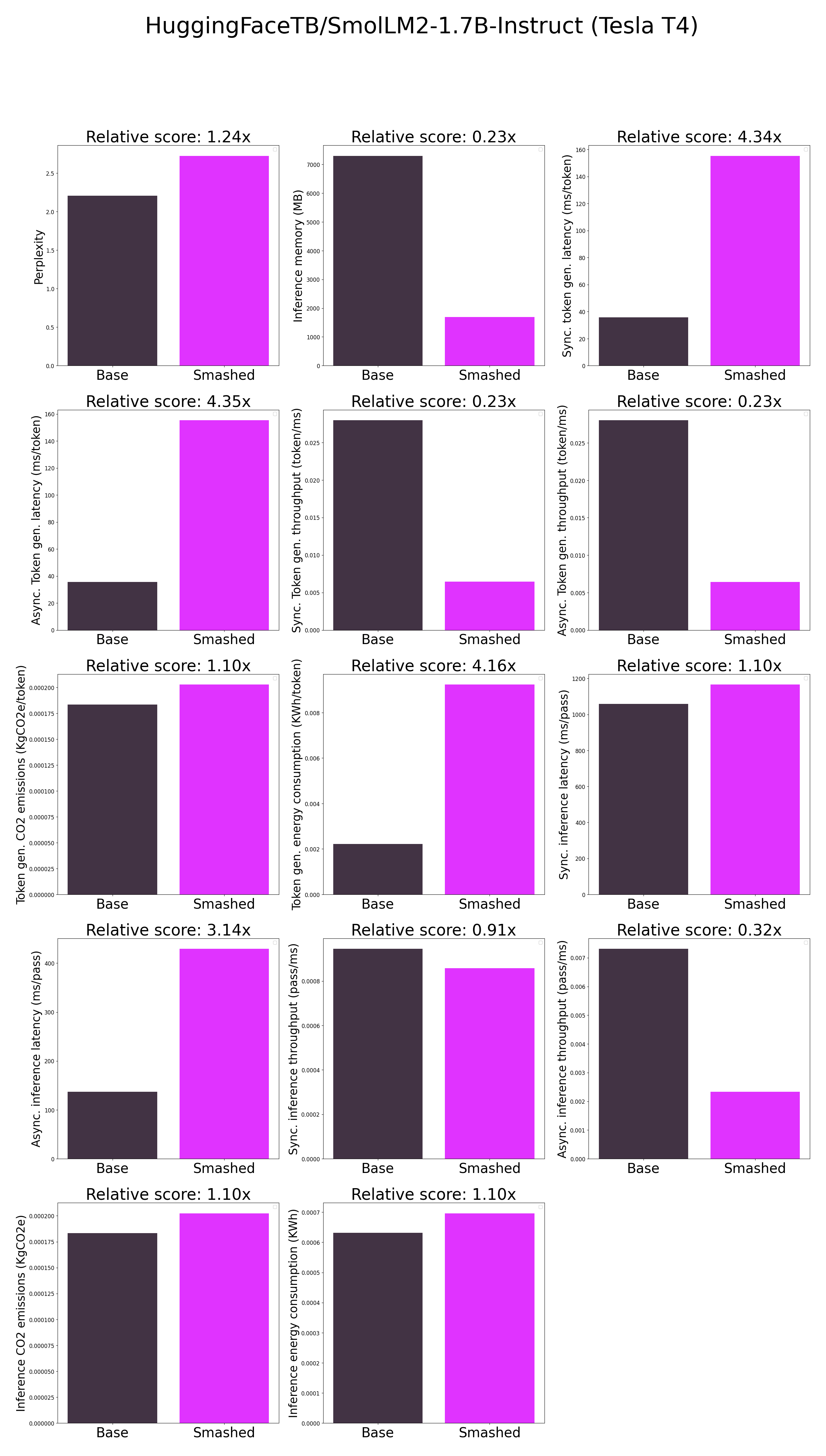

- plots.png +0 -0

- smashed_results.json +19 -0

base_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 2.2062017917633057,
    +    "memory_inference_first": 7298.0,
    +    "memory_inference": 7298.0,
    +    "token_generation_latency_sync": 35.75132808685303,
    +    "token_generation_latency_async": 35.67290659993887,
    +    "token_generation_throughput_sync": 0.027970988869857785,
    +    "token_generation_throughput_async": 0.02803247885614439,
    +    "token_generation_CO2_emissions": 0.00018359090889950798,
    +    "token_generation_energy_consumption": 0.002221255088823526,
    +    "inference_latency_sync": 1058.6026702880858,
    +    "inference_latency_async": 136.83900833129883,
    +    "inference_throughput_sync": 0.000944641486430279,
    +    "inference_throughput_async": 0.007307857694926547,
    +    "inference_CO2_emissions": 0.00018326470280637688,
    +    "inference_energy_consumption": 0.000631884688384694
    +}
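The throughput fields in base_results.json appear to be the reciprocals of the matching latency fields. The file does not state units or how the benchmark derived these numbers, so the following is only a sanity check on the committed values. The helper name `reciprocal_matches` is hypothetical, not part of any benchmarking tool.

```python
import math

# Values copied from base_results.json in this commit.
base = {
    "token_generation_latency_sync": 35.75132808685303,
    "token_generation_throughput_sync": 0.027970988869857785,
    "token_generation_latency_async": 35.67290659993887,
    "token_generation_throughput_async": 0.02803247885614439,
    "inference_latency_sync": 1058.6026702880858,
    "inference_throughput_sync": 0.000944641486430279,
    "inference_latency_async": 136.83900833129883,
    "inference_throughput_async": 0.007307857694926547,
}


def reciprocal_matches(results, stem, mode, rel_tol=1e-6):
    """Return True if <stem>_throughput_<mode> is close to 1 / <stem>_latency_<mode>.

    Hypothetical helper: it assumes only the key naming seen in the file.
    """
    latency = results[f"{stem}_latency_{mode}"]
    throughput = results[f"{stem}_throughput_{mode}"]
    return math.isclose(1.0 / latency, throughput, rel_tol=rel_tol)


if __name__ == "__main__":
    for stem in ("token_generation", "inference"):
        for mode in ("sync", "async"):
            print(stem, mode, reciprocal_matches(base, stem, mode))
```

If the check holds, throughput carries no information beyond latency, so comparing either one between runs is sufficient.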

plots.png

ADDED


smashed_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 2.7251362800598145,
    +    "memory_inference_first": 1690.0,
    +    "memory_inference": 1690.0,
    +    "token_generation_latency_sync": 155.3000717163086,
    +    "token_generation_latency_async": 155.34533187747002,
    +    "token_generation_throughput_sync": 0.006439147058648696,
    +    "token_generation_throughput_async": 0.0064372710007711,
    +    "token_generation_CO2_emissions": 0.00020285744923935242,
    +    "token_generation_energy_consumption": 0.009233308010788546,
    +    "inference_latency_sync": 1166.3823852539062,
    +    "inference_latency_async": 429.13405895233154,
    +    "inference_throughput_sync": 0.0008573517678615431,
    +    "inference_throughput_async": 0.0023302741396041943,
    +    "inference_CO2_emissions": 0.000202412501568058,
    +    "inference_energy_consumption": 0.0006962767357209158
    +}
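To read the two result files side by side, one option is to compute the smashed-to-base ratio for each metric. This is a minimal sketch with a few values copied from the files in this commit. The function name `smashed_to_base_ratios` is hypothetical, and the units of each metric are not stated in the files, so the ratios are unitless comparisons only.

```python
# Selected values copied from base_results.json and smashed_results.json.
base = {
    "perplexity": 2.2062017917633057,
    "memory_inference": 7298.0,
    "token_generation_latency_sync": 35.75132808685303,
    "inference_latency_sync": 1058.6026702880858,
}
smashed = {
    "perplexity": 2.7251362800598145,
    "memory_inference": 1690.0,
    "token_generation_latency_sync": 155.3000717163086,
    "inference_latency_sync": 1166.3823852539062,
}


def smashed_to_base_ratios(base_results, smashed_results):
    """Return {metric: smashed / base} for metrics present in both dicts.

    Hypothetical helper: a ratio below 1 means the smashed run reported a
    smaller value for that metric, a ratio above 1 a larger one.
    """
    return {
        metric: smashed_results[metric] / base_results[metric]
        for metric in base_results
        if metric in smashed_results and base_results[metric] != 0
    }


if __name__ == "__main__":
    for metric, ratio in smashed_to_base_ratios(base, smashed).items():
        print(f"{metric}: {ratio:.2f}x")
```

On these values the smashed run reports lower inference memory but higher perplexity and higher per-token latency than the base run. The full files could be loaded with `json.load` instead of the inline dictionaries.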