c13081bac9221145b156ee9fd5f9ac9ef90eada16325157de61a61ce80429718

Browse files- base_results.json +19 -0

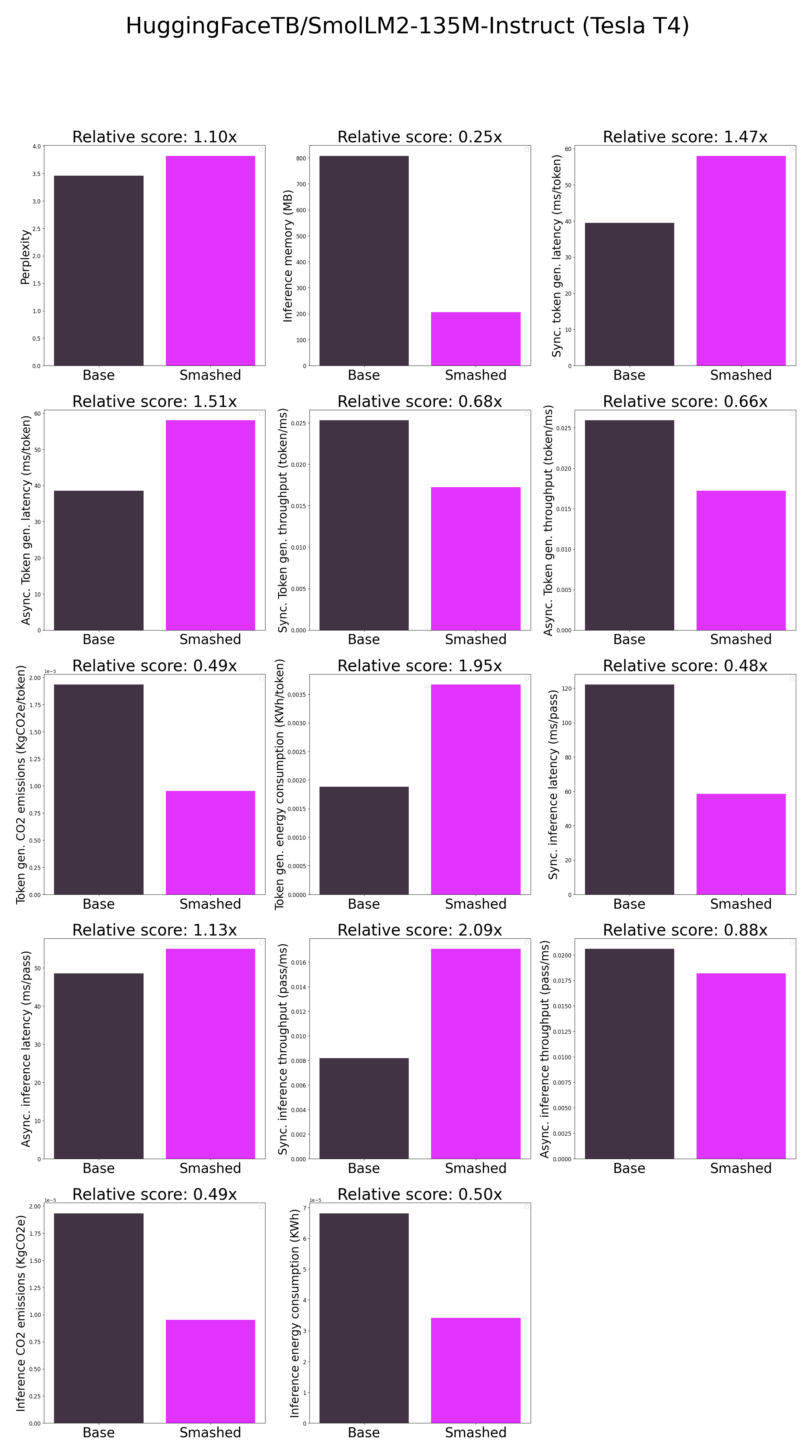

- plots.png +0 -0

- smashed_results.json +19 -0

base_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 3.4586403369903564,
    +    "memory_inference_first": 808.0,
    +    "memory_inference": 808.0,
    +    "token_generation_latency_sync": 39.485802841186526,
    +    "token_generation_latency_async": 38.54773212224245,
    +    "token_generation_throughput_sync": 0.025325558252469627,
    +    "token_generation_throughput_async": 0.025941863371593512,
    +    "token_generation_CO2_emissions": 1.9341882246324658e-05,
    +    "token_generation_energy_consumption": 0.0018858868968200813,
    +    "inference_latency_sync": 122.10734748840332,
    +    "inference_latency_async": 48.58226776123047,
    +    "inference_throughput_sync": 0.008189515377811079,
    +    "inference_throughput_async": 0.02058364185292351,
    +    "inference_CO2_emissions": 1.933046505752413e-05,
    +    "inference_energy_consumption": 6.813219116923538e-05
    +}

plots.png

ADDED


smashed_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 3.8198323249816895,
    +    "memory_inference_first": 206.0,
    +    "memory_inference": 206.0,
    +    "token_generation_latency_sync": 57.987845993041994,
    +    "token_generation_latency_async": 58.05423278361559,
    +    "token_generation_throughput_sync": 0.017244993030435907,
    +    "token_generation_throughput_async": 0.01722527285352785,
    +    "token_generation_CO2_emissions": 9.527744243728705e-06,
    +    "token_generation_energy_consumption": 0.003671008174955222,
    +    "inference_latency_sync": 58.546810150146484,
    +    "inference_latency_async": 54.94275093078613,
    +    "inference_throughput_sync": 0.017080349850580167,
    +    "inference_throughput_async": 0.018200763213690286,
    +    "inference_CO2_emissions": 9.524661982723253e-06,
    +    "inference_energy_consumption": 3.420485454644485e-05
    +}
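
The two result files share the same keys, so one way to read them together is a per-metric ratio of the smashed value to the base value. The sketch below is a hypothetical helper (`compare` is not part of this commit). It copies a subset of values verbatim from the two files and makes no assumption about the units each metric uses.

```python
# Values copied verbatim from base_results.json and smashed_results.json.
# Only a subset of keys is included; `compare` is a hypothetical helper.
BASE = {
    "perplexity": 3.4586403369903564,
    "memory_inference": 808.0,
    "token_generation_latency_sync": 39.485802841186526,
    "inference_latency_sync": 122.10734748840332,
    "inference_energy_consumption": 6.813219116923538e-05,
}

SMASHED = {
    "perplexity": 3.8198323249816895,
    "memory_inference": 206.0,
    "token_generation_latency_sync": 57.987845993041994,
    "inference_latency_sync": 58.546810150146484,
    "inference_energy_consumption": 3.420485454644485e-05,
}


def compare(base: dict, smashed: dict) -> dict:
    """Return smashed / base for every metric present in both dicts.

    A ratio below 1.0 means the smashed model reported a smaller value
    for that metric; zero-valued base entries are skipped.
    """
    return {
        key: smashed[key] / base[key]
        for key in base
        if key in smashed and base[key] != 0
    }


if __name__ == "__main__":
    # Print one aligned line per metric, e.g. "memory_inference ... 0.255x".
    for name, ratio in compare(BASE, SMASHED).items():
        print(f"{name:35s} {ratio:.3f}x")
```

With these numbers, `memory_inference` comes out near 0.255 (206 / 808), while `perplexity` is slightly above 1.0. To compare every key instead of a subset, the two files could be read with `json.load` and passed to the same function.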