Upload folder using huggingface_hub (#3)

d3481fb04b7c4b3a656ed0794f499ed0f5db551dc2476cbcc840dd2b5611f126 (c878a1590ab6f671d5792bddcd643c676b6c6788)

- config.json +1 -1

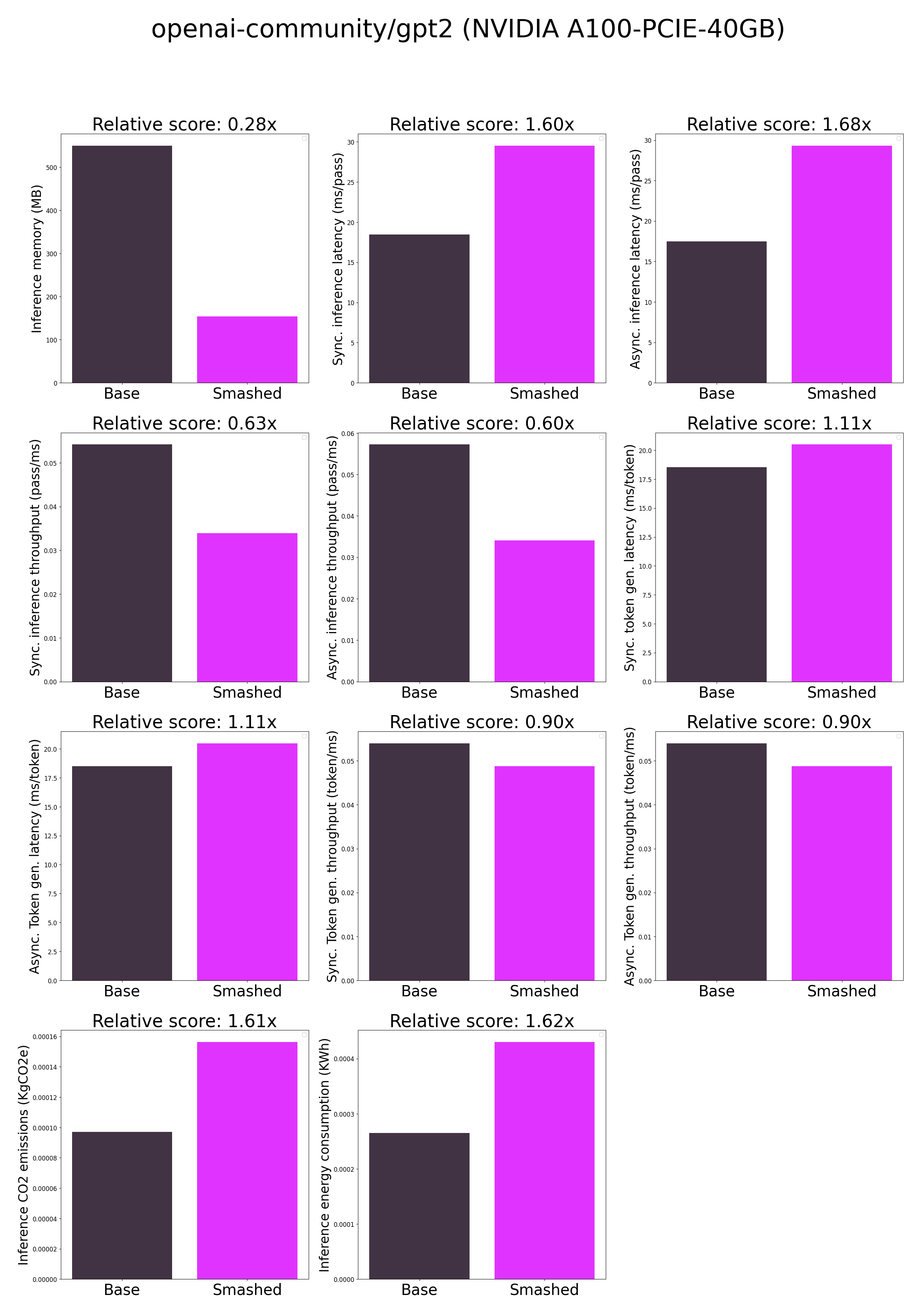

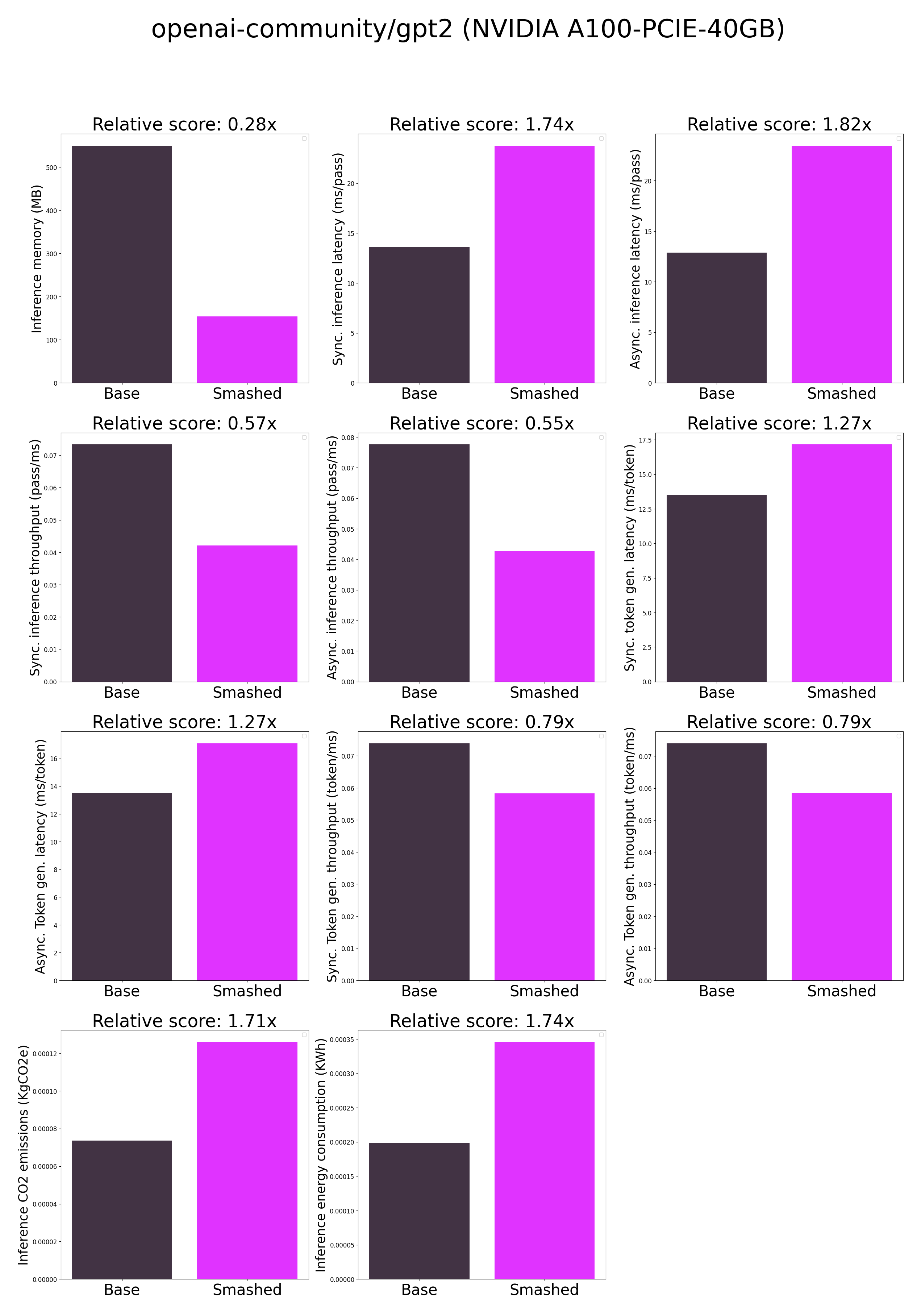

- plots.png +0 -0

- smash_config.json +1 -1

config.json

CHANGED

```diff
@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "/tmp/
+  "_name_or_path": "/tmp/tmpl_miijf3",
   "activation_function": "gelu_new",
   "architectures": [
     "GPT2LMHeadModel"
```

plots.png

CHANGED


smash_config.json

CHANGED

```diff
@@ -8,7 +8,7 @@
   "compilers": "None",
   "task": "text_text_generation",
   "device": "cuda",
-  "cache_dir": "/ceph/hdd/staff/charpent/.cache/
+  "cache_dir": "/ceph/hdd/staff/charpent/.cache/models55vp7gu9",
   "batch_size": 1,
   "model_name": "openai-community/gpt2",
   "pruning_ratio": 0.0,
```