Update README.md


README.md

@@ -222,22 +222,24 @@ print(outputs[0]["generated_text"][len(prompt):])

|

|

| 222 |

|

| 223 |

| | Clinical KG | Medical Genetics | Anatomy | Pro Medicine | College Biology | College Medicine | MedQA 4 opts | PubMedQA | MedMCQA | Avg |

|

| 224 |

|--------------------|-------------|------------------|---------|--------------|-----------------|------------------|--------------|----------|---------|-------|

|

| 225 |

-

| **OpenBioLLM-70B**

|

| 226 |

-

| Med-PaLM-2

|

| 227 |

-

| GPT-4 | 86.04 | 91 | 80 | 93.01 | **95.14** | 76.88 | 78.87 | 75.2 | 69.52 | 82.85 |

|

| 228 |

-

|

|

| 229 |

-

|

|

| 230 |

-

|

|

| 231 |

-

| GPT-3.5 Turbo 1106

|

| 232 |

-

| Meditron-70B

|

| 233 |

-

| gemma-7b

|

| 234 |

-

| Mistral-7B-v0.1

|

| 235 |

-

|

|

| 236 |

-

|

|

| 237 |

-

|

|

| 238 |

-

|

|

| 239 |

-

|

| 240 |

-

|

|

|

|

|

|

|

| 241 |

|

| 242 |

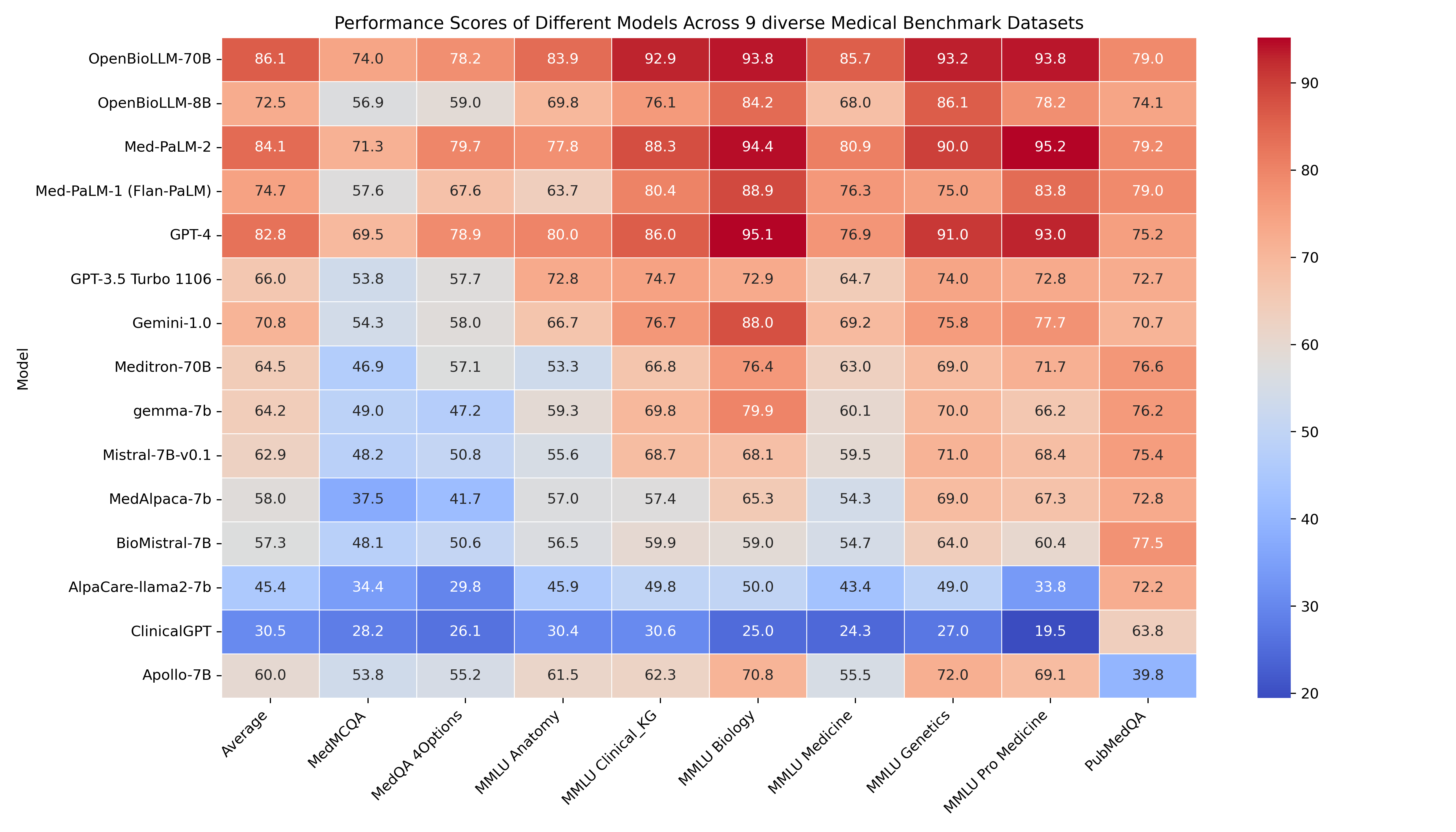

## Detailed Medical Subjectwise accuracy

|

| 243 |

|

|

|

|

| 222 |

|

| 223 |

| | Clinical KG | Medical Genetics | Anatomy | Pro Medicine | College Biology | College Medicine | MedQA 4 opts | PubMedQA | MedMCQA | Avg |

|

| 224 |

|--------------------|-------------|------------------|---------|--------------|-----------------|------------------|--------------|----------|---------|-------|

|

| 225 |

+

| **OpenBioLLM-70B** | **92.93** | **93.197** | **83.904** | **93.75** | **93.827** | **85.749** | 78.162 | 78.97 | **74.014** | **86.05588** |

|

| 226 |

+

| Med-PaLM-2 | 88.3 | 90 | 77.8 | **95.2** | 94.4 | 80.9 | **79.7** | **79.2** | 71.3 | 84.08 |

|

| 227 |

+

| **GPT-4** | 86.04 | 91 | 80 | 93.01 | **95.14** | 76.88 | 78.87 | 75.2 | 69.52 | 82.85 |

|

| 228 |

+

| Med-PaLM-1 (Flan-PaLM) | 80.4 | 75 | 63.7 | 83.8 | 88.9 | 76.3 | 67.6 | 79 | 57.6 | 74.7 |

|

| 229 |

+

| **OpenBioLLM-8B** | 76.101 | 86.1 | 69.829 | 78.21 | 84.213 | 68.042 | 58.993 | 74.12 | 56.913 | 72.502 |

|

| 230 |

+

| Gemini-1.0 | 76.7 | 75.8 | 66.7 | 77.7 | 88 | 69.2 | 58 | 70.7 | 54.3 | 70.79 |

|

| 231 |

+

| GPT-3.5 Turbo 1106 | 74.71 | 74 | 72.79 | 72.79 | 72.91 | 64.73 | 57.71 | 72.66 | 53.79 | 66 |

|

| 232 |

+

| Meditron-70B | 66.79 | 69 | 53.33 | 71.69 | 76.38 | 63 | 57.1 | 76.6 | 46.85 | 64.52 |

|

| 233 |

+

| gemma-7b | 69.81 | 70 | 59.26 | 66.18 | 79.86 | 60.12 | 47.21 | 76.2 | 48.96 | 64.18 |

|

| 234 |

+

| Mistral-7B-v0.1 | 68.68 | 71 | 55.56 | 68.38 | 68.06 | 59.54 | 50.82 | 75.4 | 48.2 | 62.85 |

|

| 235 |

+

| Apollo-7B | 62.26 | 72 | 61.48 | 69.12 | 70.83 | 55.49 | 55.22 | 39.8 | 53.77 | 60 |

|

| 236 |

+

| MedAlpaca-7b | 57.36 | 69 | 57.04 | 67.28 | 65.28 | 54.34 | 41.71 | 72.8 | 37.51 | 58.03 |

|

| 237 |

+

| BioMistral-7B | 59.9 | 64 | 56.5 | 60.4 | 59 | 54.7 | 50.6 | 77.5 | 48.1 | 57.3 |

|

| 238 |

+

| AlpaCare-llama2-7b | 49.81 | 49 | 45.92 | 33.82 | 50 | 43.35 | 29.77 | 72.2 | 34.42 | 45.36 |

|

| 239 |

+

| ClinicalGPT | 30.56 | 27 | 30.37 | 19.48 | 25 | 24.27 | 26.08 | 63.8 | 28.18 | 30.52 |

|

| 240 |

+

|

| 241 |

+

|

| 242 |

+

|

| 243 |

|

| 244 |

## Detailed Medical Subjectwise accuracy

|

| 245 |

|
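The README does not state how the Avg column is computed. For 13 of the 15 rows in the updated table it matches the unweighted mean of the nine benchmark scores to within 0.01 (for OpenBioLLM-70B, 774.503 / 9 ≈ 86.056). The Python sketch below assumes that definition and lists any row whose stated Avg differs from the recomputed mean by more than a tolerance; `ROWS`, `parse_row`, `check_averages`, and the 0.05 threshold are hypothetical names chosen for illustration, not part of the repository.

```python
from __future__ import annotations

# Rows copied verbatim from the updated (+) side of the README table.
ROWS = [
    "| **OpenBioLLM-70B** | **92.93** | **93.197** | **83.904** | **93.75** | **93.827** | **85.749** | 78.162 | 78.97 | **74.014** | **86.05588** |",
    "| Med-PaLM-2 | 88.3 | 90 | 77.8 | **95.2** | 94.4 | 80.9 | **79.7** | **79.2** | 71.3 | 84.08 |",
    "| **GPT-4** | 86.04 | 91 | 80 | 93.01 | **95.14** | 76.88 | 78.87 | 75.2 | 69.52 | 82.85 |",
    "| Med-PaLM-1 (Flan-PaLM) | 80.4 | 75 | 63.7 | 83.8 | 88.9 | 76.3 | 67.6 | 79 | 57.6 | 74.7 |",
    "| **OpenBioLLM-8B** | 76.101 | 86.1 | 69.829 | 78.21 | 84.213 | 68.042 | 58.993 | 74.12 | 56.913 | 72.502 |",
    "| Gemini-1.0 | 76.7 | 75.8 | 66.7 | 77.7 | 88 | 69.2 | 58 | 70.7 | 54.3 | 70.79 |",
    "| GPT-3.5 Turbo 1106 | 74.71 | 74 | 72.79 | 72.79 | 72.91 | 64.73 | 57.71 | 72.66 | 53.79 | 66 |",
    "| Meditron-70B | 66.79 | 69 | 53.33 | 71.69 | 76.38 | 63 | 57.1 | 76.6 | 46.85 | 64.52 |",
    "| gemma-7b | 69.81 | 70 | 59.26 | 66.18 | 79.86 | 60.12 | 47.21 | 76.2 | 48.96 | 64.18 |",
    "| Mistral-7B-v0.1 | 68.68 | 71 | 55.56 | 68.38 | 68.06 | 59.54 | 50.82 | 75.4 | 48.2 | 62.85 |",
    "| Apollo-7B | 62.26 | 72 | 61.48 | 69.12 | 70.83 | 55.49 | 55.22 | 39.8 | 53.77 | 60 |",
    "| MedAlpaca-7b | 57.36 | 69 | 57.04 | 67.28 | 65.28 | 54.34 | 41.71 | 72.8 | 37.51 | 58.03 |",
    "| BioMistral-7B | 59.9 | 64 | 56.5 | 60.4 | 59 | 54.7 | 50.6 | 77.5 | 48.1 | 57.3 |",
    "| AlpaCare-llama2-7b | 49.81 | 49 | 45.92 | 33.82 | 50 | 43.35 | 29.77 | 72.2 | 34.42 | 45.36 |",
    "| ClinicalGPT | 30.56 | 27 | 30.37 | 19.48 | 25 | 24.27 | 26.08 | 63.8 | 28.18 | 30.52 |",
]

# Hypothetical tolerance: the stated averages are rounded or truncated to
# between 0 and 5 decimal places, so exact equality is not expected.
TOLERANCE = 0.05


def parse_row(row: str) -> tuple[str, list[float], float]:
    """Split one markdown table row into (model name, nine scores, stated Avg).

    Bold markers (**...**) are stripped before the numbers are parsed.
    """
    cells = [cell.strip().strip("*") for cell in row.strip().strip("|").split("|")]
    name, *values = cells
    numbers = [float(value) for value in values]
    return name, numbers[:-1], numbers[-1]


def check_averages(rows: list[str], tol: float = TOLERANCE) -> list[tuple[str, float, float]]:
    """Return (name, stated Avg, recomputed mean) for rows that disagree by more than tol.

    Assumes Avg is the unweighted mean of the nine benchmark columns.
    """
    mismatches = []
    for row in rows:
        name, scores, stated = parse_row(row)
        recomputed = sum(scores) / len(scores)
        if abs(recomputed - stated) > tol:
            mismatches.append((name, stated, recomputed))
    return mismatches


if __name__ == "__main__":
    for name, stated, recomputed in check_averages(ROWS):
        print(f"{name}: stated Avg {stated}, recomputed mean {recomputed:.2f}")
```

A flagged row may reflect a typo or a different averaging scheme; the diff alone does not say which.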