Take Control of What Your LLM Knows and Does — with the EasyEdit Tool Series

This article is also available in Chinese 简体中文.

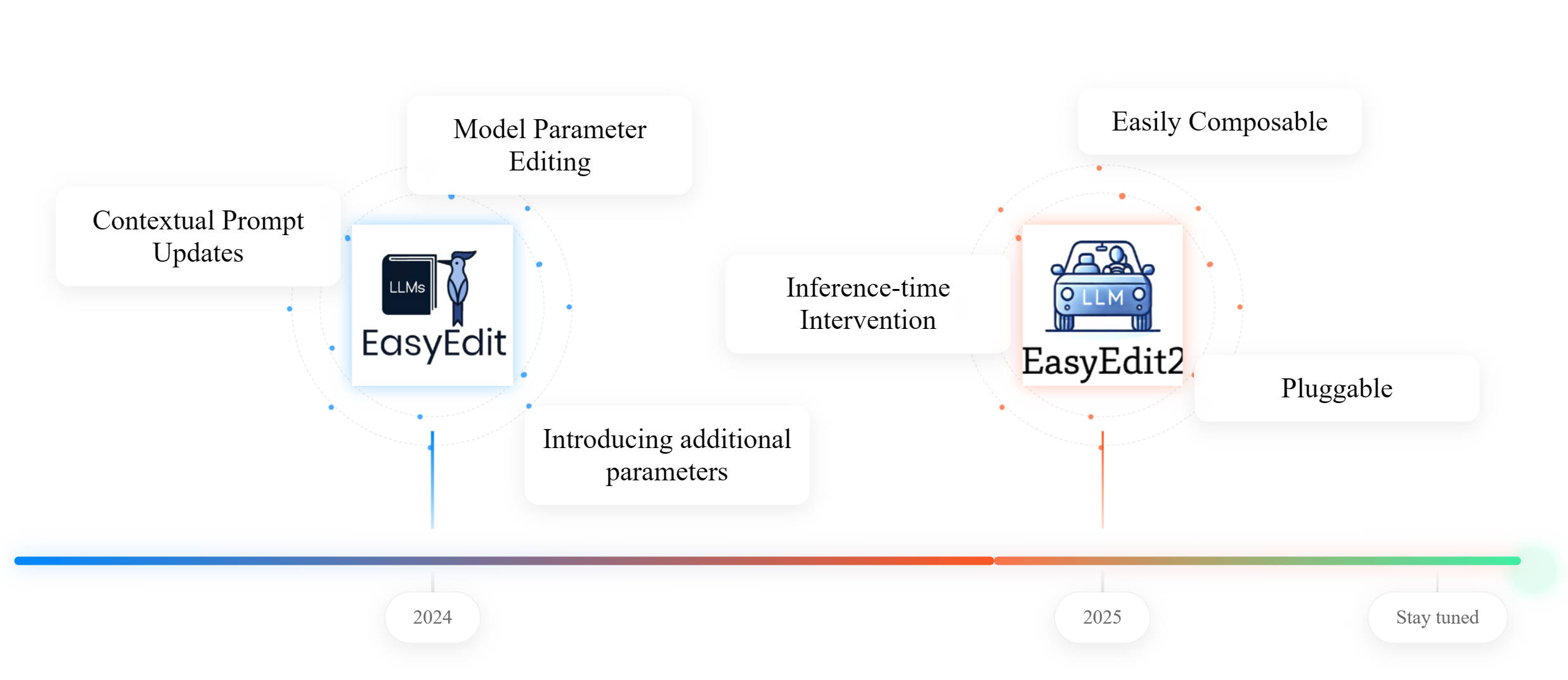

As large language models become more widely applied, the controllability and editability of model behavior are becoming crucial. Today, we introduce the two major versions of the EasyEdit series: the classic EasyEdit1 and the newly released EasyEdit2, ushering you into a new era of knowledge editing and inference-time intervention!

📌 Related reading: Want to dive deeper into community reflections and discussions on knowledge editing? Check out this dedicated blog post → Reflection on Knowledge Editing: Charting the Next Steps 🔍

Why Knowledge Editing and Inference-Time Intervention?

With the wide adoption of large language models like GPT, Qwen, and LLaMA, models are demonstrating unprecedented capabilities in various NLP tasks. However, when deployed in real-world production, two fundamental challenges quickly emerge:

- 1️⃣ Knowledge Cutoff — Pretrained models only retain static knowledge up to their training date and know nothing about new events.

- 2️⃣ Incorrect or Biased Knowledge — Large-scale training corpora inevitably contain noise, bias, or misinformation, causing models to produce outputs that seem plausible but are factually wrong or harmful.

Knowledge Editing was born to address this “knowledge updatability” problem: by surgically locating and modifying a small number of parameters, you can update or override a single fact in seconds without retraining the whole model — saving compute costs and minimizing disruption to existing capabilities.
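To make "modifying a small number of parameters" concrete, here is a minimal NumPy sketch of the locate-then-edit idea behind methods such as ROME. It treats one MLP weight matrix W as a key-value memory and applies a rank-one update so that a chosen key vector k now maps to a new value v_new. This is a simplified illustration, not EasyEdit's implementation: it assumes an identity key covariance (ROME weights the update by an estimated key covariance), and the matrices are random toy values rather than real model weights.

```python
import numpy as np

def rank_one_edit(W: np.ndarray, k: np.ndarray, v_new: np.ndarray) -> np.ndarray:
    """Return W' = W + (v_new - W k) k^T / (k^T k), so that W' @ k == v_new.

    Simplified locate-then-edit update (identity key covariance assumed).
    Any input orthogonal to k is mapped exactly as before.
    """
    residual = v_new - W @ k                 # what the layer currently gets "wrong" for key k
    return W + np.outer(residual, k) / (k @ k)

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 3))                  # toy weight: 3-dim keys -> 4-dim values
k = np.array([1.0, 0.0, 0.0])                # key standing in for the edited subject
v_new = np.array([0.5, -1.0, 2.0, 0.0])      # value encoding the new fact (toy numbers)

W_edited = rank_one_edit(W, k, v_new)
unrelated = np.array([0.0, 1.0, -2.0])       # orthogonal to k: output should not change

print(np.allclose(W_edited @ k, v_new))                  # True: the edit took effect
print(np.allclose(W_edited @ unrelated, W @ unrelated))  # True: unrelated input untouched
```

Only the component of the weights along k changes, which is why this kind of edit can leave unrelated inputs intact; real methods must also find the right layer, key, and value, which is the "locating" part.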

However, knowledge editing alone is not enough. In many real scenarios, users also need to intervene in model outputs during inference:

- Prevent generating sensitive or harmful content (Safety)

- Switch tone, emotion, or even persona across dialogues and scenarios (Personalization)

- Temporarily steer reasoning paths, e.g., enforce step-by-step reasoning or rewrite the output in a different style

This is Inference-Time Intervention (Steering/Intervention): without modifying model weights, you inject composable steering vectors or prompt structures to achieve instant, tunable control over generation.

The Dual Solution: EasyEdit

Therefore, we release the EasyEdit series:

- EasyEdit1: precise knowledge editing, to overwrite, update, and fix individual facts in large models.

- EasyEdit2: plug-and-play, inference-time intervention, to flexibly adjust outputs for safety, style, personalization, and more.

With this toolkit, you can transform your large model at minimal cost, in an interpretable way, without retraining.

EasyEdit1 vs. EasyEdit2 Comparison

How It Works

- EasyEdit1: Modifies internal model parameters for “permanent” knowledge edits

- EasyEdit2: Injects steering vectors during inference, without changing model weights

Granularity

- EasyEdit1: One-shot, instance-level static edits

- EasyEdit2: Adjustable strength, fine-grained from mild to strong interventions

Use Cases

- Both can correct factual outputs

- EasyEdit2 can also steer reasoning, emotion, style, and other abstract behaviors

1. EasyEdit1: Precise Knowledge Editing Tool

EasyEdit1 is a toolkit for precise editing of factual knowledge in large models. It supports multiple mainstream editing paradigms, enabling you to locate, modify, or inject knowledge without retraining at scale — flexibly fix facts, remove bias, or update or erase specific information.

Background & Positioning

- Goal: Efficiently and precisely edit specific knowledge (facts/bias/sensitive info) “stored” in large models.

- Use Cases: Fix outdated facts, inject new info, remove sensitive data, strip toxic outputs.

Core Features

- Multiple Editing Paradigms:

- Multi-Model Compatibility: GPT, LLaMA, T5, ChatGLM, InternLM, Qwen, Mistral, and more (1B–65B).

- Support for Multiple Types of Knowledge Editing Datasets:

  - Triplet format: ZsRE, CounterFact, KnowEdit

  - Unstructured long-text format: AKEW, LEME, CKnowEdit

  - Other scenarios: multi-hop editing (MQuAKE), hallucination evaluation for knowledge editing (HalluEditBench), and lifelong knowledge editing (WikiBigEdit)

- Support for Multiple Evaluation Methods:

  - Traditional teacher forcing-based evaluation

  - Autoregressive open-ended generation evaluation via LLM-as-a-Judge

- One-Shot Editing: Instantly update a single input-output pair.
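The teacher forcing-based evaluation listed above roughly works like this: the prompt plus the gold target tokens are fed to the model in one pass, and at each target position the model's top-1 prediction is compared with the gold token. The plain-Python sketch below computes that token-level accuracy from precomputed logits; the function name and input layout are illustrative assumptions, not EasyEdit's internal API.

```python
from typing import Sequence

def teacher_forcing_accuracy(logits: Sequence[Sequence[float]], target_ids: Sequence[int]) -> float:
    """Fraction of target positions where argmax(logits) equals the gold token.

    logits[i] is the (hypothetical) next-token score vector predicted just
    before target token i, given the gold prefix (teacher forcing).
    """
    if not target_ids or len(logits) != len(target_ids):
        raise ValueError("need one logit vector per (non-empty) target token")
    correct = 0
    for scores, gold in zip(logits, target_ids):
        predicted = max(range(len(scores)), key=scores.__getitem__)  # argmax
        correct += int(predicted == gold)
    return correct / len(target_ids)

# Toy vocabulary of 4 tokens, 3-token target
logits = [
    [0.1, 2.0, 0.3, 0.0],  # argmax -> 1
    [1.5, 0.2, 0.1, 0.0],  # argmax -> 0
    [0.0, 0.1, 0.2, 3.0],  # argmax -> 3
]
print(teacher_forcing_accuracy(logits, [1, 0, 2]))  # 2 of 3 tokens match
```

Because the gold prefix is always supplied, one wrong token does not derail later positions, which is what distinguishes this from open-ended autoregressive evaluation.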

Environment Setup

```shell
git clone https://github.com/zjunlp/EasyEdit.git
cd EasyEdit
conda create -n easyedit python=3.9.7
conda activate easyedit
pip install -r requirements.txt
```

Usage Steps

EasyEdit is modular and flexible. Here’s an example with the MEND method:

Step1: Define the Target Model

Choose the pretrained language model (PLM) to edit. EasyEdit currently supports several Hugging Face models (T5, GPT-J, GPT-Neo, LLaMA, etc.). Hyperparameters live in hparams/<method>/<model>.yaml, e.g., hparams/MEND/gpt2-xl.yaml; the model_name field specifies the target model.

```yaml
model_name: gpt2-xl
model_class: GPT2LMHeadModel
tokenizer_class: GPT2Tokenizer
tokenizer_name: gpt2-xl
model_parallel: false # true for multi-GPU editing
```

Step2: Choose an Editing Method

Import and load the corresponding hyperparameter config, e.g., for MEND:

```python
from easyeditor import MENDHyperParams

# Load config from hparams/MEND/gpt2-xl.yaml
hparams = MENDHyperParams.from_hparams('./hparams/MEND/gpt2-xl.yaml')
```

Step3: Provide Edit Descriptor & Target

- prompts: input prompts to edit (Edit Descriptor)

- ground_truth: the original output, or None

- target_new: the desired new output (Edit Target)

```python
prompts = [
    'What university did Watts Humphrey attend?',
    'Which family does Ramalinaceae belong to',
    'What role does Denny Herzig play in football?'
]
ground_truth = ['Illinois Institute of Technology', 'Lecanorales', 'defender']
target_new = ['University of Michigan', 'Lamiinae', 'winger']
```
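The three lists are parallel: the i-th prompt, ground truth, and target together describe one edit. A small pre-flight check like the one below catches length mismatches before a long editing run. It is a hypothetical helper, not part of EasyEdit, and it assumes that "None" means the whole ground_truth argument is omitted.

```python
from typing import List, Optional

def check_edit_requests(
    prompts: List[str],
    ground_truth: Optional[List[str]],
    target_new: List[str],
) -> int:
    """Hypothetical pre-flight check: return the number of edits or raise ValueError."""
    n = len(prompts)
    if n == 0:
        raise ValueError("prompts is empty")
    if len(target_new) != n:
        raise ValueError(f"target_new has {len(target_new)} items, expected {n}")
    # ground_truth may be omitted entirely (None); otherwise it must align with prompts
    if ground_truth is not None and len(ground_truth) != n:
        raise ValueError(f"ground_truth has {len(ground_truth)} items, expected {n}")
    return n

print(check_edit_requests(
    ['What university did Watts Humphrey attend?'],
    None,
    ['University of Michigan'],
))  # 1
```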

Step4: Initialize Editor and Edit

EasyEdit provides a unified method from_hparams, similar to Hugging Face’s style.

```python
from easyeditor import BaseEditor

# Load hyperparams and construct editor
editor = BaseEditor.from_hparams(hparams)
```

Step5: Optional — Locality & Portability Eval

Provide custom eval data in dict format:

```python
locality_inputs = {
    'neighborhood': {
        'prompt': [
            'Joseph Fischhof, the',
            'Larry Bird is a professional',
            'In Forssa, they understand'
        ],
        'ground_truth': ['piano', 'basketball', 'Finnish']
    },
    'distracting': {
        'prompt': [
            'Ray Charles, the violin Hauschka plays the instrument',
            'Grant Hill is a professional soccer Magic Johnson is a professional',
            'The law in Ikaalinen declares the language Swedish In Loviisa, the language spoken is'
        ],
        'ground_truth': ['piano', 'basketball', 'Finnish']
    }
}
```

This evaluates the method’s performance under “neighborhood” and “distracting” contexts.
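Portability inputs use the same nested layout: a key of your choice (which reappears as YOUR_PORTABILITY_KEY or YOUR_LOCALITY_KEY in the metrics) mapping to parallel prompt and ground_truth lists. The helper below is a hypothetical convenience, not an EasyEdit function; it builds that dict from (prompt, answer) pairs and assumes, as in the example above, one entry per edit.

```python
from typing import Dict, List, Tuple

def build_eval_inputs(
    groups: Dict[str, List[Tuple[str, str]]], num_edits: int
) -> Dict[str, Dict[str, List[str]]]:
    """Hypothetical helper: {key: [(prompt, answer), ...]} ->
    {key: {'prompt': [...], 'ground_truth': [...]}}, one pair per edit."""
    out = {}
    for key, pairs in groups.items():
        if len(pairs) != num_edits:
            raise ValueError(f"group '{key}' has {len(pairs)} pairs, expected {num_edits}")
        out[key] = {
            'prompt': [prompt for prompt, _ in pairs],
            'ground_truth': [answer for _, answer in pairs],
        }
    return out

locality_inputs = build_eval_inputs(
    {'neighborhood': [('Larry Bird is a professional', 'basketball')]},
    num_edits=1,
)
print(locality_inputs)
# {'neighborhood': {'prompt': ['Larry Bird is a professional'], 'ground_truth': ['basketball']}}
```

Keeping each (prompt, answer) pair together while authoring, and only splitting into parallel lists at the end, makes misaligned answers much harder to introduce.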

Step6: Edit & Evaluate

Run the edit and get metrics:

```python
metrics, edited_model, _ = editor.edit(
    prompts=prompts,
    # rephrase_prompts=rephrase_prompts,
    ground_truth=ground_truth,
    target_new=target_new,
    locality_inputs=locality_inputs,
    # portability_inputs=portability_inputs,
    sequential_edit=False  # Set True for sequential edits
)
# Returns metrics (edit success, rephrase success, locality, etc.) and edited_model
```

Evaluation Metrics

After editing, metrics return in this dict format:

```
{
  "post": {
    "rewrite_acc": ...,   // Reliability after edit
    "rephrase_acc": ...,  // Generalization after edit
    "locality": {
      "YOUR_LOCALITY_KEY": ...
    },
    "portability": {
      "YOUR_PORTABILITY_KEY": ...
    }
  },
  "pre": {
    "rewrite_acc": ...,
    "rephrase_acc": ...,
    "portability": {
      "YOUR_PORTABILITY_KEY": ...
    }
  }
}
```

- rewrite_acc → Reliability: the success rate of the edit on the given edit descriptor (prompt)

- rephrase_acc → Generalization: the success rate of the edit on rephrased prompts within the editing scope

- locality → Locality: whether the model's outputs on unrelated inputs stay unchanged after editing

- portability → Portability: the success rate of the edit on reasoning/application prompts (one-hop, synonym, logical generalization)

Note:

- Reliability: requires only prompts and target_new

- Generalization: also needs rephrase_prompts

- Locality & Portability: define a metric_key and provide prompt + ground_truth
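When you edit many facts at once, it helps to average each metric across edits. The sketch below assumes, for illustration, that metrics is a list with one dict per edit in the format shown above, and that a metric value may be either a number or a list of scores; check the actual structure returned by your EasyEdit version before relying on it. The summarize helper is hypothetical, and the numbers in the example are made up for illustration, not real results.

```python
from statistics import mean
from typing import Any, Dict, List, Sequence, Union

Score = Union[int, float, Sequence[float]]

def _as_score(value: Score) -> float:
    """Collapse a scalar or a list of scores into one float."""
    if isinstance(value, (list, tuple)):
        return float(mean(value))
    return float(value)

def summarize(metrics: List[Dict[str, Any]], stage: str = "post") -> Dict[str, float]:
    """Average top-level and nested (locality/portability) metrics over all edits."""
    collected: Dict[str, List[float]] = {}
    for record in metrics:
        for name, value in record[stage].items():
            if isinstance(value, dict):  # e.g. "locality": {"neighborhood": ...}
                for key, sub in value.items():
                    collected.setdefault(f"{name}/{key}", []).append(_as_score(sub))
            else:
                collected.setdefault(name, []).append(_as_score(value))
    return {name: mean(scores) for name, scores in collected.items()}

# Illustrative numbers only
metrics = [
    {"post": {"rewrite_acc": [1.0], "locality": {"neighborhood": [1.0, 0.5]}}},
    {"post": {"rewrite_acc": [0.5], "locality": {"neighborhood": [1.0, 1.0]}}},
]
print(summarize(metrics))  # {'rewrite_acc': 0.75, 'locality/neighborhood': 0.875}
```

Passing stage="pre" gives the corresponding pre-edit averages, so the two can be compared side by side.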

2. EasyEdit2: Real-Time Inference-Time Steering

EasyEdit2 is a toolkit for real-time behavioral control of large models, offering a flexible, extensible framework for generating and applying steering vectors — precisely adjust model output without touching its weights.

Background & Positioning

- Goal: Achieve real-time, controllable inference-time intervention without modifying model weights.

- Use Cases: Flexibly adjust safety, emotion, reasoning, language features, persona, and other behaviors for personalization.

Core Features

- Supports Multiple Steering Paradigms:

  - Activation-based (CAA, LM-Steer, SAE, STA…)

  - Prompt-based (manual/auto prompt)

  - Decoding-based (in progress)

- Flexible Combination & Adjustment: Stack methods, merge vectors, adjust strength, select layers.

- Diverse Applications: Safety, style, reasoning flow, language features, persona shaping.

- Pretrained Vector Library: Plug-and-play steering vectors for common scenarios.
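At its core, "stack methods, merge vectors, adjust strength" is vector arithmetic on a layer's hidden states. Below is a minimal NumPy sketch, assuming every steering vector has the model's hidden size and that merging is a weighted sum of vectors with per-vector multipliers. The vector names and weights are hypothetical, and EasyEdit2's own merge logic may differ.

```python
import numpy as np

def merge_steering_vectors(vectors, multipliers):
    """Hypothetical merge rule: weighted sum  sum_i m_i * v_i."""
    if len(vectors) != len(multipliers):
        raise ValueError("need one multiplier per vector")
    return sum(m * np.asarray(v, dtype=float) for v, m in zip(vectors, multipliers))

def steer(hidden, vector):
    """Add a steering vector to every token's hidden state (h' = h + v)."""
    return np.asarray(hidden, dtype=float) + vector  # broadcasts over tokens

positive_tone = np.array([1.0, 0.0, 0.0, 0.0])  # hypothetical "sentiment" direction
formal_style = np.array([0.0, 1.0, 0.0, 0.0])   # hypothetical "style" direction

# Strong sentiment push, mild style push
merged = merge_steering_vectors([positive_tone, formal_style], [2.0, 0.5])
hidden = np.zeros((3, 4))                        # 3 tokens x hidden size 4 (toy)
print(steer(hidden, merged)[0].tolist())         # [2.0, 0.5, 0.0, 0.0]
```

Setting a multiplier to 0 switches that behavior off and a negative multiplier pushes in the opposite direction, which is what makes strength "adjustable" without touching any weights.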

Environment Setup

```shell
git clone https://github.com/zjunlp/EasyEdit.git
cd EasyEdit
conda create -n easyedit2 python=3.10
conda activate easyedit2
pip install -r requirements_2.txt
```

For safety/fluency evaluation, install NLTK data:

```python
import nltk
nltk.download('punkt')
```

Usage Steps

1. All-in-One

```shell
python steering.py \
    --config-path hparams/Steer/ \
    --config-name config.yaml
```

One command handles vector generation, application, and text generation. Hyperparameters are configured in hparams/Steer/config.yaml.

2. Step-by-Step (Recommended)

Step1: Generate Steering Vectors

```shell
python vectors_generate.py \
    --config-path hparams/Steer/ \
    --config-name vector_generate.yaml
```

- In hparams/Steer/vector_generate.yaml:

  - Specify the model name (e.g., LLaMA, Gemma, Qwen, GPT series)

  - Configure the vector generation method and dataset

Step2: Apply Steering Vectors

```shell
python vectors_apply.py \
    --config-path hparams/Steer/ \
    --config-name vector_applier.yaml
```

- In hparams/Steer/vector_applier.yaml:

  - List apply_steer_hparam_paths

  - Set steer_vector_load_dir

  - Configure HF generation params (max_new_tokens, temperature, do_sample, etc.)

Simple Example (Full Process)

Here’s the step-by-step version, covering vector generation and application. Or just use the all-in-one approach by combining configs and running steering.py.

Vector Generation (Generator)

1️⃣ Choose steering method, e.g., hparams/Steer/caa_hparams/generate_caa.yaml:

```yaml
alg_name: caa
layers: [17]
multiple_choice: false
```

2️⃣ Top-level config hparams/Steer/vector_generate.yaml:

```yaml
model_name_or_path: your_model_path
torch_dtype: bfloat16
device: cuda:0
use_chat_template: false
system_prompt: ''
steer_train_hparam_paths:
  - hparams/Steer/caa_hparams/generate_caa.yaml
steer_train_dataset:
  - your_train_data
steer_vector_output_dir: vectors/your_output_dir/
```

3️⃣ Provide custom input or use dataset from config:

```python
# top_cfg: the top-level config loaded from hparams/Steer/vector_generate.yaml

# Custom input example
# datasets={'your_dataset_name':[{'input':'hello'},{'input':'how are you'}]}

# Or load dataset from config
datasets = prepare_generation_datasets(top_cfg)
```

4️⃣ Run generation:

```python
vector_generator = BaseVectorGenerator(top_cfg)
vectors = vector_generator.generate_vectors(datasets)
```
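For intuition about what the caa generator computes: Contrastive Activation Addition builds a steering vector as the mean difference between a layer's activations on examples that exhibit the target behavior and on paired examples that do not. The NumPy sketch below assumes you already have those activations (random toy arrays stand in for layer-17 hidden states); in practice EasyEdit2 extracts them from the model for you, and details such as which token positions are used may differ.

```python
import numpy as np

def caa_vector(pos_acts: np.ndarray, neg_acts: np.ndarray) -> np.ndarray:
    """Contrastive Activation Addition: mean of (positive - negative) activations.

    pos_acts, neg_acts: (num_pairs, hidden_size) activations from one layer,
    row i of each coming from the same prompt with / without the target behavior.
    """
    if pos_acts.shape != neg_acts.shape:
        raise ValueError("expected paired activations with identical shapes")
    return (pos_acts - neg_acts).mean(axis=0)

rng = np.random.default_rng(0)
hidden_size = 8
direction = np.zeros(hidden_size)
direction[0] = 1.0                          # toy "behavior" direction

neg = rng.normal(size=(100, hidden_size))   # stand-in for layer-17 activations
pos = neg + 3.0 * direction                 # positives shifted along the direction

v = caa_vector(pos, neg)
print(np.round(v, 3))  # about 3.0 in the first coordinate, 0.0 elsewhere
```

Averaging over many pairs cancels prompt-specific variation and keeps only the shared behavior direction, which is why CAA vectors transfer to new prompts.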

Vector Application (Applier)

1️⃣ Configure each method’s application file, e.g., hparams/Steer/caa_hparams/apply_caa.yaml:

```yaml
alg_name: caa
layers: [17]
multipliers: [1.0]
```

2️⃣ Top-level config hparams/Steer/vector_applier.yaml:

```yaml
apply_steer_hparam_paths:
  - hparams/Steer/caa_hparams/apply_caa.yaml
steer_vector_load_dir:
  - vectors/your_output_dir/
generation_data:
  - your_test_data
generation_data_size: null # null/-1 means use all data
generation_output_dir: generations/your_output/
num_responses: 1
# Generation params
generation_params:
  max_new_tokens: 100
  temperature: 0.9
  do_sample: True
```

3️⃣ Provide custom input or use dataset from config:

```python
# top_cfg: the top-level config loaded from hparams/Steer/vector_applier.yaml

# Custom input example
# datasets={'your_dataset_name':[{'input':'hello'},{'input':'how are you'}]}

# Or load dataset from config
datasets = prepare_generation_datasets(top_cfg)
```

4️⃣ Apply & generate:

```python
vector_applier = BaseVectorApplier(top_cfg)
vector_applier.apply_vectors()
results = vector_applier.generate(datasets)
```
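Under the hood, applying a CAA vector with layers: [17] and multipliers: [1.0] amounts to adding multiplier × vector to that layer's output during the forward pass. The PyTorch sketch below shows the mechanism with a forward hook on a tiny stand-in model (an nn.Sequential of linear layers, not a real transformer); it assumes nothing about EasyEdit2's internals. Real decoder blocks in Hugging Face models usually return tuples, so a production hook would modify output[0] instead.

```python
import torch
import torch.nn as nn

def add_steering_hook(layer: nn.Module, vector: torch.Tensor, multiplier: float):
    """Register a forward hook that adds multiplier * vector to `layer`'s output.

    A forward hook that returns a value replaces the module's output, so the
    weights are never touched; call .remove() on the returned handle to undo it.
    This toy version assumes the layer returns a plain tensor.
    """
    def hook(module, inputs, output):
        return output + multiplier * vector
    return layer.register_forward_hook(hook)

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(4, 4), nn.ReLU(), nn.Linear(4, 4))  # toy stand-in
x = torch.randn(2, 4)                          # batch of 2 toy inputs
vector = torch.tensor([1.0, 0.0, 0.0, 0.0])    # hypothetical steering vector

with torch.no_grad():
    baseline = model(x)
    # Steer the last layer so the shift is directly visible in the output
    handle = add_steering_hook(model[2], vector, multiplier=1.0)
    steered = model(x)
    handle.remove()                            # steering off again
    restored = model(x)

print(torch.allclose(steered - baseline, vector.expand_as(baseline)))  # True
print(torch.allclose(restored, baseline))                              # True
```

Steering an intermediate layer works the same way; the shift is then transformed by the later layers instead of appearing verbatim in the output.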

Pretrained Vector Library

EasyEdit2 provides scenario-based pretrained vectors for safety, emotion, and more. See README_2.md for details.

Evaluation

Multi-dimensional eval in steer/evaluate/evaluate.py:

- LLM Evaluation (Concept relevance, Instruction relevance, Fluency)

- Rule-Based (PPL, Distinctness, Fluency, GSM)

- Classifier-Based (Sentiment, SafeEdit, Toxigen, RealToxicityPrompts)
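Of the rule-based metrics, distinctness is simple enough to sketch: distinct-n is the number of unique n-grams divided by the total number of n-grams across the generated texts, so higher values mean less repetitive output. This plain-Python sketch assumes whitespace tokenization; EasyEdit2's evaluator may tokenize and aggregate differently.

```python
from typing import Iterable

def distinct_n(texts: Iterable[str], n: int) -> float:
    """Unique n-grams / total n-grams over all texts (whitespace tokens)."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0

generations = ["the cat sat on the mat", "the cat sat again"]
print(distinct_n(generations, 1))  # 6 unique / 10 total = 0.6
print(distinct_n(generations, 2))  # 6 unique / 8 total = 0.75
```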

Example:

```shell
python steer/evaluate/evaluate.py \
    --generation_dataset_path results/your_results.json \
    --eval_methods ppl distinctness safeedit \
    --model_name_or_path your_model \
    --device cuda
```

👉 For More Details:

- EasyEdit1: README.md

- EasyEdit2: README_2.md

- For questions, visit our GitHub Issues page