Commit f8b1523 · Parent(s): 18c4885

Add more formats

- README.md +338 -1

- assets/eval_long_form.png +0 -0

- assets/eval_short_form.png +0 -0

- ctranslate2/config.json +280 -0

- ctranslate2/model.bin +3 -0

- ctranslate2/preprocessor_config.json +14 -0

- ctranslate2/tokenizer.json +0 -0

- ctranslate2/vocabulary.json +0 -0

- ggml-model-q5_0.bin +3 -0

- ggml-model.bin +3 -0

- original_model.pt +3 -0

README.md

CHANGED

@@ -25,7 +25,7 @@ Compared to [v0.1](https://huggingface.co/collections/bofenghuang/french-whisper

The model uses [openai/whisper-large-v3](https://huggingface.co/openai/whisper-large-v3) as the teacher model while keeping the encoder architecture unchanged. This makes it suitable as a draft model for speculative decoding: because it adds only 2 extra decoder layers and the encoder runs just once, it can potentially double inference speed while producing identical outputs. It can also serve as a standalone model that trades some accuracy for efficiency, running 5.8x faster with only 49% of the parameters. This [paper](https://arxiv.org/abs/2311.00430) also suggests that the distilled model may actually produce fewer hallucinations than the full model during long-form transcription.

|

| 27 |

|

| 28 |

-

The model has been converted into multiple formats to ensure broad compatibility across libraries including transformers, openai-whisper,

|

| 29 |

|

| 30 |

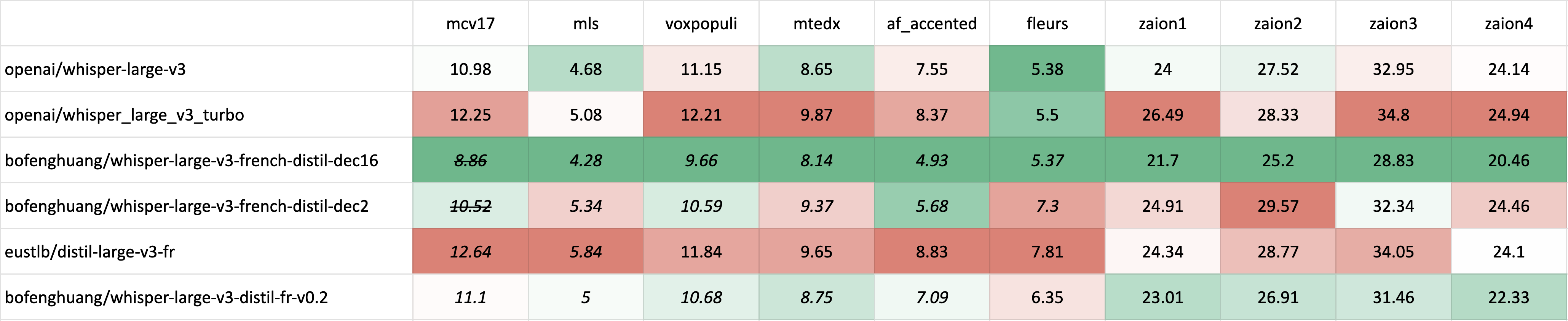

## Performance

|

| 31 |

|

|

@@ -70,3 +70,340 @@ Long-form transcription evaluation used the 🤗 Hugging Face [`pipeline`](https

|

|

| 70 |

| [distil-large-v3-fr](https://huggingface.co/eustlb/distil-large-v3-fr) | 11.31 | 11.34 | 10.36 | 10.52 | 31.38 | 30.32 | 28.05 | 26.43 |

|

| 71 |

| whisper-large-v3-distil-fr-v0.2 | 9.44 | 9.84 | *8.94* | *9.03* | 29.40 | 28.54 | 26.17 | 23.75 | -->

|

| 72 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 25 |

|

| 26 |

The model uses [openai/whisper-large-v3](https://huggingface.co/openai/whisper-large-v3) as the teacher model while keeping the encoder architecture unchanged. This makes it suitable as a draft model for speculative decoding, potentially getting 2x inference speed while maintaining identical outputs by only adding 2 extra decoder layers and running the encoder just once. It can also serve as a standalone model to trade some accuracy for better efficiency, running 5.8x faster while using only 49% of the parameters. This [paper](https://arxiv.org/abs/2311.00430) also suggests that the distilled model may actually produce fewer hallucinations than the full model during long-form transcription.

|

| 27 |

|

| 28 |

+

The model has been converted into multiple formats to ensure broad compatibility across libraries including transformers, openai-whisper, faster-whisper, whisper.cpp, candle, mlx.

|

| 29 |

|

| 30 |

## Performance

|

| 31 |

|

|

|

|

| 70 |

| [distil-large-v3-fr](https://huggingface.co/eustlb/distil-large-v3-fr) | 11.31 | 11.34 | 10.36 | 10.52 | 31.38 | 30.32 | 28.05 | 26.43 |

|

| 71 |

| whisper-large-v3-distil-fr-v0.2 | 9.44 | 9.84 | *8.94* | *9.03* | 29.40 | 28.54 | 26.17 | 23.75 | -->

|

| 72 |

## Usage

### Hugging Face Pipeline

The model can be used directly with the 🤗 Hugging Face [`pipeline`](https://huggingface.co/docs/transformers/main_classes/pipelines#transformers.AutomaticSpeechRecognitionPipeline) class for audio transcription. For long-form audio (over 30 seconds), the pipeline performs sequential decoding as described in OpenAI's paper. If you need faster inference, set the `chunk_length_s` argument to enable [chunked parallel decoding](https://huggingface.co/blog/asr-chunking), which provides 9x faster inference but may slightly reduce accuracy compared to OpenAI's sequential algorithm.

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-distil-fr-v0.2"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    # chunk_length_s=30,  # for chunked decoding
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
```
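To make the chunked mode more concrete, here is a minimal, library-free sketch of how a long waveform can be cut into overlapping 30-second windows. The overlapping edges ("strides") give each window some context and are discarded when the per-window transcripts are merged. `chunk_windows` is a hypothetical helper, and the default stride of `chunk_length_s / 6` is an assumption modelled on the pipeline's default. This is not the transformers implementation.

```python
from typing import List, Optional, Tuple


def chunk_windows(
    num_samples: int,
    sampling_rate: int = 16000,
    chunk_length_s: float = 30.0,
    stride_length_s: Optional[float] = None,
) -> List[Tuple[int, int, int, int]]:
    """Split a waveform into overlapping windows for parallel decoding.

    Returns (start, end, left_stride, right_stride) tuples, all in samples.
    The strides mark the overlapping context on each side that is dropped
    when the per-window transcripts are stitched back together.
    """
    if stride_length_s is None:
        stride_length_s = chunk_length_s / 6  # assumed default overlap
    chunk_len = int(round(chunk_length_s * sampling_rate))
    stride = int(round(stride_length_s * sampling_rate))
    step = chunk_len - 2 * stride
    if step <= 0:
        raise ValueError("stride_length_s is too large for chunk_length_s")

    windows = []
    for start in range(0, num_samples, step):
        end = min(start + chunk_len, num_samples)
        is_last = start + chunk_len >= num_samples
        left = 0 if start == 0 else stride
        right = 0 if is_last else stride
        windows.append((start, end, left, right))
        if is_last:
            break
    return windows


if __name__ == "__main__":
    # 60 s of 16 kHz audio is split into overlapping 30 s windows
    for window in chunk_windows(60 * 16000):
        print(window)
```

Each window is decoded independently, so the windows can be batched together (the pipeline call accepts a `batch_size` argument). That is where the speed-up comes from. The trade-off is that words near window boundaries are reconciled heuristically, rather than with the full left context that sequential decoding carries forward.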

### Hugging Face Low-level APIs

You can also use the 🤗 Hugging Face low-level APIs for transcription. They give you greater control over the process, as demonstrated below:

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-distil-fr-v0.2"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Extract features (the feature extractor pads or truncates audio to 30 s,
# so this example covers short-form audio only)
input_features = processor(
    sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"
).input_features

# Generate tokens
predicted_ids = model.generate(
    input_features.to(dtype=torch_dtype).to(device), max_new_tokens=128
)

# Detokenize to text
transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)[0]
print(transcription)
```
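The shape of `input_features` follows from the values in `preprocessor_config.json`, which is added in this commit. Audio is padded or truncated to `chunk_length * sampling_rate` samples, then converted into a log-Mel spectrogram with `feature_size` Mel bins and one frame every `hop_length` samples. The sketch below reproduces that arithmetic in plain Python. `pad_or_trim` and `expected_feature_shape` are illustrative helpers, not transformers functions. The sketch also assumes that, as in Whisper's feature extractors, the final STFT frame is dropped, so the frame count is exactly `n_samples / hop_length`.

```python
from typing import List, Sequence, Tuple

# Values copied from ctranslate2/preprocessor_config.json in this commit
CONFIG = {
    "chunk_length": 30,
    "feature_size": 128,
    "hop_length": 160,
    "n_samples": 480000,
    "nb_max_frames": 3000,
    "sampling_rate": 16000,
}


def pad_or_trim(audio: Sequence[float], n_samples: int, padding_value: float = 0.0) -> List[float]:
    """Right-pad with `padding_value`, or truncate, so the clip has exactly n_samples samples."""
    clipped = list(audio[:n_samples])
    return clipped + [padding_value] * (n_samples - len(clipped))


def expected_feature_shape(config: dict, batch_size: int = 1) -> Tuple[int, int, int]:
    """(batch, mel_bins, frames) of the log-Mel features fed to the encoder."""
    n_samples = config["chunk_length"] * config["sampling_rate"]
    frames = n_samples // config["hop_length"]  # last STFT frame assumed dropped
    return batch_size, config["feature_size"], frames


if __name__ == "__main__":
    print(expected_feature_shape(CONFIG))  # (1, 128, 3000)
    print(len(pad_or_trim([0.1] * 1000, CONFIG["n_samples"])))  # 480000
```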

### Speculative Decoding

[Speculative decoding](https://huggingface.co/blog/whisper-speculative-decoding) can be achieved using a draft model, essentially a distilled version of Whisper. This approach guarantees outputs identical to those of the main Whisper model alone, offers about 2x faster inference, and adds only a slight memory overhead.

Since the distilled Whisper has the same encoder as the original, only its decoder needs to be loaded, and the encoder outputs are shared between the main and draft models during inference.

Using speculative decoding with the Hugging Face pipeline is simple: pass the `assistant_model` through `generate_kwargs`.

```python
import torch
from datasets import load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoModelForSpeechSeq2Seq,
    AutoProcessor,
    pipeline,
)

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "openai/whisper-large-v3"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Load draft model (decoder only; the encoder outputs come from the main model)
assistant_model_name_or_path = "bofenghuang/whisper-large-v3-distil-fr-v0.2"
assistant_model = AutoModelForCausalLM.from_pretrained(
    assistant_model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
assistant_model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    generate_kwargs={"assistant_model": assistant_model},
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
```
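Why are the outputs identical? With greedy decoding, every token that ends up in the transcript is the one the main model would have chosen. The draft model only proposes candidates, and the main model checks several of them in a single forward pass. The toy sketch below illustrates that accept/reject loop using two hypothetical deterministic "models": plain Python functions that map a token prefix to the next token. It is a simplified illustration of greedy speculative decoding, not the transformers implementation. For example, it counts one verification round per proposal instead of actually batching the main model's calls.

```python
from typing import Callable, List, Tuple

# A "model" here is any function mapping a token prefix to its greedy next token.
Model = Callable[[List[int]], int]


def greedy_decode(model: Model, prompt: List[int], max_new_tokens: int, eos: int) -> List[int]:
    """Reference: plain token-by-token greedy decoding with the main model."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = model(tokens)
        tokens.append(next_token)
        if next_token == eos:
            break
    return tokens


def speculative_decode(
    main: Model, draft: Model, prompt: List[int], max_new_tokens: int, eos: int, k: int = 4
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, number of verification rounds)."""
    tokens = list(prompt)
    rounds = 0

    def budget_left() -> bool:
        return len(tokens) - len(prompt) < max_new_tokens

    while budget_left() and (len(tokens) == len(prompt) or tokens[-1] != eos):
        # 1. The cheap draft model proposes up to k tokens.
        proposal, context = [], list(tokens)
        for _ in range(k):
            guess = draft(context)
            proposal.append(guess)
            context.append(guess)
            if guess == eos:
                break

        # 2. The main model verifies the proposal (one batched pass in a real system).
        rounds += 1
        bonus_allowed = True
        for guess in proposal:
            expected = main(tokens)
            tokens.append(expected)  # always keep the main model's choice
            if expected != guess or expected == eos or not budget_left():
                bonus_allowed = False  # mismatch, end of sequence, or budget exhausted
                break

        # 3. If every guess matched, the same pass also yields one extra token.
        if bonus_allowed and budget_left():
            tokens.append(main(tokens))
    return tokens, rounds


def main_model(prefix: List[int]) -> int:
    return (sum(prefix) * 7 + len(prefix)) % 10


def draft_model(prefix: List[int]) -> int:
    # Agrees with the main model except at every third position.
    guess = main_model(prefix)
    return (guess + 1) % 10 if len(prefix) % 3 == 0 else guess


if __name__ == "__main__":
    prompt = [1, 2]
    reference = greedy_decode(main_model, prompt, max_new_tokens=20, eos=9)
    fast, rounds = speculative_decode(main_model, draft_model, prompt, max_new_tokens=20, eos=9)
    print(reference == fast, rounds)
```

The closer the draft's guesses are to the main model's, the more tokens are accepted per round and the fewer expensive main-model passes are needed. A mismatch only costs speed, never accuracy.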

### OpenAI Whisper

You can also use the sequential long-form decoding algorithm with a sliding window and temperature fallback, as described by OpenAI in their original [paper](https://arxiv.org/abs/2212.04356).

First, install the [openai-whisper](https://github.com/openai/whisper) package:

```bash
pip install -U openai-whisper
```

Then, download the converted model:

```bash
huggingface-cli download --include original_model.pt --local-dir ./models/whisper-large-v3-distil-fr-v0.2 bofenghuang/whisper-large-v3-distil-fr-v0.2
```

Now you can transcribe audio files by following the usage instructions provided in the repository:

```python
import whisper
from datasets import load_dataset

# Load model
model_name_or_path = "./models/whisper-large-v3-distil-fr-v0.2/original_model.pt"
model = whisper.load_model(model_name_or_path)

# Example audio (openai-whisper treats a NumPy array as 16 kHz mono float32 audio)
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

# Transcribe
result = model.transcribe(sample, language="fr")
print(result["text"])
```
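The temperature fallback mentioned above works roughly as follows. Each 30-second window is first decoded at temperature 0. If the result looks degenerate, it is re-decoded at progressively higher temperatures. A result counts as degenerate if it is too repetitive (measured by a zlib compression ratio) or too unlikely (measured by the average log-probability). Below is a simplified, self-contained sketch of that control flow. The thresholds mirror openai-whisper's `transcribe()` defaults (`compression_ratio_threshold=2.4`, `logprob_threshold=-1.0`, temperatures from 0.0 to 1.0 in steps of 0.2). However, `decode_with_fallback` and the `decode_fn` callback are hypothetical stand-ins for the library's internals, and the no-speech check is omitted.

```python
import zlib
from typing import Callable, Sequence, Tuple

# decode_fn(temperature) -> (text, average log-probability per token)
DecodeFn = Callable[[float], Tuple[str, float]]


def compression_ratio(text: str) -> float:
    """Raw UTF-8 size divided by zlib-compressed size; high values mean repetitive text."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decode_with_fallback(
    decode_fn: DecodeFn,
    temperatures: Sequence[float] = (0.0, 0.2, 0.4, 0.6, 0.8, 1.0),
    compression_ratio_threshold: float = 2.4,
    logprob_threshold: float = -1.0,
) -> Tuple[float, str, float]:
    """Return (temperature, text, avg_logprob) of the first acceptable decode,
    or of the last attempt if none passes the quality checks."""
    result = None
    for temperature in temperatures:
        text, avg_logprob = decode_fn(temperature)
        result = (temperature, text, avg_logprob)
        too_repetitive = compression_ratio(text) > compression_ratio_threshold
        too_unlikely = avg_logprob < logprob_threshold
        if not (too_repetitive or too_unlikely):
            break
    return result


if __name__ == "__main__":
    # Hypothetical decoder: loops at temperature 0 and recovers once sampling is enabled.
    def fake_decode(temperature: float) -> Tuple[str, float]:
        if temperature == 0.0:
            return "merci " * 50, -0.2
        return "Bonjour à tous et bienvenue.", -0.4

    print(decode_with_fallback(fake_decode))
```

In openai-whisper, the text of previous windows is also fed as a prompt to the next window (the `condition_on_previous_text` option). This is part of what makes the algorithm sequential.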

### Faster Whisper

Faster Whisper is a reimplementation of OpenAI's Whisper models and the sequential long-form decoding algorithm using [CTranslate2](https://github.com/OpenNMT/CTranslate2), a fast inference engine for Transformer models.

Compared to openai-whisper, it offers up to 4x faster inference while using less memory. Additionally, the model can be quantized to int8, further improving its efficiency on both CPU and GPU.

First, install the [faster-whisper](https://github.com/SYSTRAN/faster-whisper) package:

```bash
pip install faster-whisper
```

Then, download the model converted to the CTranslate2 format:

```bash
python -c "from huggingface_hub import snapshot_download; snapshot_download(repo_id='bofenghuang/whisper-large-v3-distil-fr-v0.2', local_dir='./models/whisper-large-v3-distil-fr-v0.2', allow_patterns='ctranslate2/*')"
```

Now you can transcribe audio files by following the usage instructions provided in the repository:

```python
from datasets import load_dataset
from faster_whisper import WhisperModel

# Load model
model_name_or_path = "./models/whisper-large-v3-distil-fr-v0.2/ctranslate2"
model = WhisperModel(model_name_or_path, device="cuda", compute_type="float16")  # Run on GPU with FP16
# model = WhisperModel(model_name_or_path, device="cpu", compute_type="int8")  # or run on CPU with INT8

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

segments, info = model.transcribe(sample, beam_size=5, language="fr")

# `segments` is a generator: transcription runs as you iterate over it
for segment in segments:
    print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))
```
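To give an idea of what int8 quantization does to the weights, here is a minimal sketch of symmetric per-row int8 quantization. Each row of a weight matrix is stored as 8-bit integers plus one floating-point scale. This takes a quarter of the space of float32 (half that of float16), at the cost of a small rounding error. This is a generic illustration under that assumption: CTranslate2's actual quantization kernels and scale layout may differ, and `quantize_int8_rows` is a hypothetical helper.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_int8_rows(weights: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric per-row int8 quantization: w ~= q * scale with q in [-127, 127]."""
    quantized, scales = [], []
    for row in weights:
        max_abs = max(abs(w) for w in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero on all-zero rows
        quantized.append([max(-127, min(127, round(w / scale))) for w in row])
        scales.append(scale)
    return quantized, scales


def dequantize_rows(quantized: List[List[int]], scales: List[float]) -> Matrix:
    """Approximate reconstruction of the original float weights."""
    return [[q * scale for q in row] for row, scale in zip(quantized, scales)]


if __name__ == "__main__":
    weights = [[0.5, -1.0, 0.25], [2.0, 0.0, -0.1]]
    q, s = quantize_int8_rows(weights)
    restored = dequantize_rows(q, s)
    max_error = max(abs(a - b) for ra, rb in zip(weights, restored) for a, b in zip(ra, rb))
    print(q, max_error)
```

In faster-whisper, int8 is selected through the `compute_type` argument, for example `compute_type="int8"` on CPU or `compute_type="int8_float16"` on GPU.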

### Whisper.cpp

Whisper.cpp is a reimplementation of OpenAI's Whisper models written in plain C/C++ without any dependencies. It supports a variety of backends and platforms.

Additionally, the model can be quantized to either 4-bit or 5-bit integers, further improving its efficiency.

First, clone and build the [whisper.cpp](https://github.com/ggerganov/whisper.cpp) repository:

```bash
git clone https://github.com/ggerganov/whisper.cpp.git
cd whisper.cpp

# build the main example
make
```

Next, download the converted ggml weights from the Hugging Face Hub:

```bash
# Download model quantized with Q5_0 method
python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-distil-fr-v0.2', filename='ggml-model-q5_0.bin', local_dir='./models/whisper-large-v3-distil-fr-v0.2')"
```

Now you can transcribe an audio file using the following command:

```bash
./main -m ./models/whisper-large-v3-distil-fr-v0.2/ggml-model-q5_0.bin -l fr -f /path/to/audio/file --print-colors
```
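The Q5_0 file downloaded above is roughly a third of the size of the unquantized `ggml-model.bin`. The sketch below illustrates the idea behind block-wise 5-bit quantization, modelled loosely on ggml's Q5_0 layout. Weights are split into blocks of 32, and each block stores one 16-bit scale plus a 5-bit signed integer per weight. That works out to (32 × 5 + 16) / 32 = 5.5 bits per weight, compared with 16 for float16. Several points are assumptions:

- The rounding details and bit-packing of the real ggml kernels differ.
- `quantize_block_5bit` is a hypothetical helper.
- The unquantized file is taken to hold float16 weights.

The file sizes come from the Git LFS pointers in this commit. Because the files also contain some non-quantized data, the observed ratio is only close to the theoretical one.

```python
from typing import List, Tuple

BLOCK_SIZE = 32
SCALE_BITS = 16  # one fp16 scale per block (assumed layout)
VALUE_BITS = 5


def quantize_block_5bit(values: List[float]) -> Tuple[float, List[int]]:
    """Map one block of floats to signed 5-bit integers in [-16, 15] sharing one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 15 if max_abs > 0 else 1.0
    return scale, [max(-16, min(15, round(v / scale))) for v in values]


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    return [c * scale for c in codes]


def bits_per_weight(block_size: int = BLOCK_SIZE) -> float:
    """Storage cost per weight, including the amortized per-block scale."""
    return (block_size * VALUE_BITS + SCALE_BITS) / block_size


if __name__ == "__main__":
    block = [((i * 37) % 23 - 11) / 10 for i in range(BLOCK_SIZE)]
    scale, codes = quantize_block_5bit(block)
    print(bits_per_weight())  # 5.5
    # Sizes (bytes) from the Git LFS pointers in this commit
    q5_size, f16_size = 537_819_875, 1_519_521_155
    print(round(q5_size / f16_size, 3), round(bits_per_weight() / 16, 3))
```

The same formula gives 4.5 bits per weight for a 4-bit variant with the same block layout.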

### Candle

[Candle-whisper](https://github.com/huggingface/candle/tree/main/candle-examples/examples/whisper) is a reimplementation of OpenAI's Whisper models built on Candle, a lightweight ML framework written in Rust.

First, clone the [candle](https://github.com/huggingface/candle) repository:

```bash
git clone https://github.com/huggingface/candle.git
cd candle/candle-examples/examples/whisper
```

Transcribe an audio file using the following command:

```bash
cargo run --example whisper --release -- --model large-v3 --model-id bofenghuang/whisper-large-v3-distil-fr-v0.2 --language fr --input /path/to/audio/file
```

To use CUDA, add `--features cuda` to the command:

```bash
cargo run --example whisper --release --features cuda -- --model large-v3 --model-id bofenghuang/whisper-large-v3-distil-fr-v0.2 --language fr --input /path/to/audio/file
```

### MLX

[MLX-Whisper](https://github.com/ml-explore/mlx-examples/tree/main/whisper) is a reimplementation of OpenAI's Whisper models built on [MLX](https://github.com/ml-explore/mlx), an ML framework for Apple silicon. MLX supports features such as lazy computation and unified memory management.

First, clone the [MLX Examples](https://github.com/ml-explore/mlx-examples) repository:

```bash
git clone https://github.com/ml-explore/mlx-examples.git
cd mlx-examples/whisper
```

Next, install the dependencies:

```bash
pip install -r requirements.txt
```

Download the PyTorch checkpoint in the original OpenAI format and convert it to the MLX format. We haven't included the converted version here, since the repository is already large and the conversion is very fast:

```bash
# Download
python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-distil-fr-v0.2', filename='original_model.pt', local_dir='./models/whisper-large-v3-distil-fr-v0.2')"
# Convert into .npz
python convert.py --torch-name-or-path ./models/whisper-large-v3-distil-fr-v0.2/original_model.pt --mlx-path ./mlx_models/whisper-large-v3-distil-fr-v0.2
```

Now you can transcribe audio with:

```python
# Run from mlx-examples/whisper: this imports its local `whisper` package, not openai-whisper
import whisper

result = whisper.transcribe("/path/to/audio/file", path_or_hf_repo="mlx_models/whisper-large-v3-distil-fr-v0.2", language="fr")
print(result["text"])
```

## Training details

We built a French speech recognition dataset of over 22,000 hours of annotated and semi-annotated speech. After decoding this dataset with Whisper Large V3 and filtering out segments with a WER above 20%, we retained approximately 10,000 hours of high-quality audio.

| Dataset | Total Duration (h) | Filtered Duration (h, WER < 20%) |
|---|---:|---:|
| mcv | 800.37 | 687.02 |
| mls | 1076.58 | 1043.87 |
| voxpopuli | 199.03 | 177.11 |
| mtedx | 170.31 | 147.48 |
| african_accented_french | 7.69 | 7.69 |
| yodas-fr000 | 2395.82 | 1502.82 |
| yodas-fr100 | 4978.16 | 1887.36 |
| yodas-fr101 | 4966.07 | 1882.39 |
| yodas-fr102 | 4992.84 | 1877.40 |
| yodas-fr103 | 3161.39 | 1189.32 |
| total | 22748.26 | 10402.46 |
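The filtering step described above can be sketched as follows. For each segment, compute the word error rate (WER) between its reference transcript and the teacher's (Whisper Large V3) output, and keep only segments under the threshold. `word_error_rate` is a plain word-level Levenshtein implementation. `filter_segments` and the segment dict keys are hypothetical. The text normalization applied before scoring is not described here, and keeping a segment at exactly 20% is an assumption. The block also re-checks the totals in the table.

```python
from typing import Dict, List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    previous = list(range(len(hyp) + 1))  # edit distances for the empty reference prefix
    for i, ref_word in enumerate(ref, start=1):
        current = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            current[j] = min(
                previous[j] + 1,  # deletion
                current[j - 1] + 1,  # insertion
                previous[j - 1] + (ref_word != hyp_word),  # substitution or match
            )
        previous = current
    return previous[-1] / max(len(ref), 1)


def filter_segments(segments: List[Dict[str, str]], max_wer: float = 0.2) -> List[Dict[str, str]]:
    """Keep segments whose teacher transcript is within max_wer of the reference."""
    return [s for s in segments if word_error_rate(s["reference"], s["teacher"]) <= max_wer]


# Durations in hours from the table: dataset -> (total, kept after filtering)
DURATIONS = {
    "mcv": (800.37, 687.02),
    "mls": (1076.58, 1043.87),
    "voxpopuli": (199.03, 177.11),
    "mtedx": (170.31, 147.48),
    "african_accented_french": (7.69, 7.69),
    "yodas-fr000": (2395.82, 1502.82),
    "yodas-fr100": (4978.16, 1887.36),
    "yodas-fr101": (4966.07, 1882.39),
    "yodas-fr102": (4992.84, 1877.40),
    "yodas-fr103": (3161.39, 1189.32),
}

if __name__ == "__main__":
    total = sum(t for t, _ in DURATIONS.values())
    kept = sum(k for _, k in DURATIONS.values())
    print(f"{total:.2f} h total, {kept:.2f} h kept ({kept / total:.1%})")
```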

Most data were first concatenated into 30-second segments, mainly preserving the same speaker, and then decoded together. 50% of segments were trained with timestamps to ensure good timestamp prediction. Only 20% were trained with previous context, since we don't expect the 2-layer decoder to excel at this task.
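Here is a minimal sketch of the segment preparation described above, under stated assumptions. Consecutive utterances are greedily packed into segments of at most 30 seconds, and a new segment starts whenever the speaker changes. The text only says the same speaker is *primarily* preserved, so this strict rule is a simplification. Each segment is then randomly flagged for timestamp training (50%) and previous-context training (20%). `pack_utterances`, `assign_training_options` and the dict keys are hypothetical names, not part of the Distil-Whisper codebase.

```python
import random
from typing import Dict, List


def pack_utterances(utterances: List[Dict], max_seconds: float = 30.0) -> List[List[Dict]]:
    """Greedily concatenate consecutive same-speaker utterances into <= max_seconds segments.

    An utterance longer than max_seconds on its own still becomes a (too long) segment.
    """
    segments: List[List[Dict]] = []
    current: List[Dict] = []
    current_duration = 0.0
    for utt in utterances:
        same_speaker = bool(current) and utt["speaker"] == current[-1]["speaker"]
        fits = same_speaker and current_duration + utt["duration"] <= max_seconds
        if current and not fits:
            segments.append(current)
            current, current_duration = [], 0.0
        current.append(utt)
        current_duration += utt["duration"]
    if current:
        segments.append(current)
    return segments


def assign_training_options(
    num_segments: int, timestamp_prob: float = 0.5, prev_context_prob: float = 0.2, seed: int = 0
) -> List[Dict[str, bool]]:
    """Randomly decide, per segment, whether to train with timestamps and with previous context."""
    rng = random.Random(seed)
    return [
        {
            "with_timestamps": rng.random() < timestamp_prob,
            "with_prev_context": rng.random() < prev_context_prob,
        }
        for _ in range(num_segments)
    ]
```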

This model was trained for a fairly long schedule of 160 epochs using aggressive data augmentation, and the eval WER was still decreasing. Some hyperparameter choices were made to favor long-form over short-form transcription. For further details, please refer to the [Distil-Whisper](https://github.com/huggingface/distil-whisper) repository.

All model training was conducted on the [Jean-Zay supercomputer](http://www.idris.fr/eng/jean-zay/jean-zay-presentation-eng.html) at GENCI. Special thanks to the IDRIS team for their excellent support throughout this project.

## Acknowledgements

- OpenAI for developing and open-sourcing the [Whisper](https://arxiv.org/abs/2212.04356) model
- 🤗 Hugging Face for implementing Whisper in the [Transformers](https://github.com/huggingface/transformers) library and creating [Distil-Whisper](https://github.com/huggingface/distil-whisper)
- [GENCI](https://genci.fr/) for generously providing the GPU computing resources for this project

assets/eval_long_form.png

CHANGED

assets/eval_short_form.png

CHANGED

ctranslate2/config.json

ADDED

@@ -0,0 +1,280 @@

{
  "alignment_heads": [
    [1, 0], [1, 1], [1, 2], [1, 3], [1, 4], [1, 5], [1, 6], [1, 7], [1, 8], [1, 9],
    [1, 10], [1, 11], [1, 12], [1, 13], [1, 14], [1, 15], [1, 16], [1, 17], [1, 18], [1, 19]
  ],
  "lang_ids": [
    50259, 50260, 50261, 50262, 50263, 50264, 50265, 50266, 50267, 50268,
    50269, 50270, 50271, 50272, 50273, 50274, 50275, 50276, 50277, 50278,
    50279, 50280, 50281, 50282, 50283, 50284, 50285, 50286, 50287, 50288,
    50289, 50290, 50291, 50292, 50293, 50294, 50295, 50296, 50297, 50298,
    50299, 50300, 50301, 50302, 50303, 50304, 50305, 50306, 50307, 50308,
    50309, 50310, 50311, 50312, 50313, 50314, 50315, 50316, 50317, 50318,
    50319, 50320, 50321, 50322, 50323, 50324, 50325, 50326, 50327, 50328,
    50329, 50330, 50331, 50332, 50333, 50334, 50335, 50336, 50337, 50338,
    50339, 50340, 50341, 50342, 50343, 50344, 50345, 50346, 50347, 50348,
    50349, 50350, 50351, 50352, 50353, 50354, 50355, 50356, 50357, 50358
  ],
  "suppress_ids": [
    1, 2, 7, 8, 9, 10, 14, 25, 26, 27,
    28, 29, 31, 58, 59, 60, 61, 62, 63, 90,
    91, 92, 93, 359, 503, 522, 542, 873, 893, 902,
    918, 922, 931, 1350, 1853, 1982, 2460, 2627, 3246, 3253,
    3268, 3536, 3846, 3961, 4183, 4667, 6585, 6647, 7273, 9061,
    9383, 10428, 10929, 11938, 12033, 12331, 12562, 13793, 14157, 14635,
    15265, 15618, 16553, 16604, 18362, 18956, 20075, 21675, 22520, 26130,
    26161, 26435, 28279, 29464, 31650, 32302, 32470, 36865, 42863, 47425,
    49870, 50254, 50258, 50359, 50360, 50361, 50362, 50363
  ],
  "suppress_ids_begin": [
    220,
    50257
  ]
}
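The `suppress_ids` and `suppress_ids_begin` lists above are token IDs that are masked out during decoding. In openai-whisper, the equivalent logic lives in the `SuppressTokens` and `SuppressBlank` logit filters. There, the "begin" set (here presumably 220, the space token, and 50257, end-of-text) is blocked only at the first generated position, so a transcript cannot start out blank. The sketch below shows the masking idea on a plain list of logits. `suppress_logits` is a hypothetical helper, not CTranslate2's API.

```python
import math
from typing import List, Sequence

SUPPRESS_IDS_BEGIN = (220, 50257)  # copied from "suppress_ids_begin" above


def suppress_logits(
    logits: List[float], step: int, suppress_ids: Sequence[int], suppress_ids_begin: Sequence[int]
) -> List[float]:
    """Return a copy of `logits` with forbidden token IDs set to -inf.

    `suppress_ids` are blocked at every step; `suppress_ids_begin` only at step 0.
    """
    masked = list(logits)
    blocked = set(suppress_ids) | (set(suppress_ids_begin) if step == 0 else set())
    for token_id in blocked:
        if 0 <= token_id < len(masked):
            masked[token_id] = -math.inf
    return masked


def greedy_pick(logits: List[float]) -> int:
    """Index of the highest logit (first one on ties)."""
    return max(range(len(logits)), key=logits.__getitem__)
```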

ctranslate2/model.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ffc4fbe33beb84a944dfef7f50f586443cf63e9ec7b6aa97cba2e4581e40f85a
size 1512927906

ctranslate2/preprocessor_config.json

ADDED

@@ -0,0 +1,14 @@

{
  "chunk_length": 30,
  "feature_extractor_type": "WhisperFeatureExtractor",
  "feature_size": 128,
  "hop_length": 160,
  "n_fft": 400,
  "n_samples": 480000,
  "nb_max_frames": 3000,
  "padding_side": "right",
  "padding_value": 0.0,
  "processor_class": "WhisperProcessor",
  "return_attention_mask": false,
  "sampling_rate": 16000
}

ctranslate2/tokenizer.json

ADDED

The diff for this file is too large to render.

ctranslate2/vocabulary.json

ADDED

The diff for this file is too large to render.

ggml-model-q5_0.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:785404fd5bd992de0363b7c242a6a6061d0674e4f58e3fbd6135d8faf912637e
size 537819875

ggml-model.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:7375e0a909cf0fc28e29d1e0ad0c9cd0d3fbf8198400ecacbc9b58939bb0baf7
size 1519521155

original_model.pt

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:1d28fcd2cb0d636eda5420aec766f5f8ac6e5a9bc9fae5c6dd0a2cbf243635b9
size 1513002965
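The large binaries in this commit (`ctranslate2/model.bin`, the two ggml files and `original_model.pt`) are stored as Git LFS pointer files. Each pointer has three `key value` lines: the spec version, the SHA-256 object ID and the size in bytes. After downloading a file, you can check it against its pointer. The sketch below assumes the pointer text is available as a string and uses only the standard library. `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, not part of `huggingface_hub`.

```python
import hashlib
import os
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse the 'key value' lines of a Git LFS pointer into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(path: str, pointer: Dict[str, str], chunk_size: int = 1 << 20) -> bool:
    """Check a local file's size and SHA-256 digest against a parsed pointer."""
    algo, _, expected_hex = pointer["oid"].partition(":")
    if algo != "sha256" or os.path.getsize(path) != int(pointer["size"]):
        return False
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):  # stream large files
            digest.update(chunk)
    return digest.hexdigest() == expected_hex


if __name__ == "__main__":
    pointer = parse_lfs_pointer(
        "version https://git-lfs.github.com/spec/v1\n"
        "oid sha256:1d28fcd2cb0d636eda5420aec766f5f8ac6e5a9bc9fae5c6dd0a2cbf243635b9\n"
        "size 1513002965\n"
    )
    print(pointer["size"])  # 1513002965
    path = "./models/whisper-large-v3-distil-fr-v0.2/original_model.pt"  # download path used in the README
    if os.path.exists(path):
        print(matches_pointer(path, pointer))
```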