Commit

·

9e826e6

1

Parent(s):

49e917d

Initial Commit

Browse files- .gitignore +1 -0

- README.md +188 -0

- ckpt/bpe_vocab +0 -0

- ckpt/codes.bpe.32000 +0 -0

- data-bin/dict.en.txt +0 -0

- data-bin/dict.zh.txt +0 -0

- data-bin/preprocess.log +4 -0

- data-bin/test.en-zh.en +1 -0

- data-bin/test.en-zh.zh +1 -0

- data-bin/test.zh-en.en +1 -0

- data-bin/test.zh-en.zh +1 -0

- docs/img.png +0 -0

- eval.sh +166 -0

- examples/configs/eval_benchmarks.yml +80 -0

- examples/configs/parallel_mono_12e12d_contrastive.yml +44 -0

- mcolt/__init__.py +4 -0

- mcolt/__pycache__/__init__.cpython-310.pyc +0 -0

- mcolt/arches/__init__.py +1 -0

- mcolt/arches/__pycache__/__init__.cpython-310.pyc +0 -0

- mcolt/arches/__pycache__/transformer.cpython-310.pyc +0 -0

- mcolt/arches/transformer.py +380 -0

- mcolt/criterions/__init__.py +1 -0

- mcolt/criterions/__pycache__/__init__.cpython-310.pyc +0 -0

- mcolt/criterions/__pycache__/label_smoothed_cross_entropy_with_contrastive.cpython-310.pyc +0 -0

- mcolt/criterions/label_smoothed_cross_entropy_with_contrastive.py +123 -0

- mcolt/data/__init__.py +1 -0

- mcolt/data/__pycache__/__init__.cpython-310.pyc +0 -0

- mcolt/data/__pycache__/subsample_language_pair_dataset.cpython-310.pyc +0 -0

- mcolt/data/subsample_language_pair_dataset.py +124 -0

- mcolt/tasks/__init__.py +2 -0

- mcolt/tasks/__pycache__/__init__.cpython-310.pyc +0 -0

- mcolt/tasks/__pycache__/translation_w_langtok.cpython-310.pyc +0 -0

- mcolt/tasks/__pycache__/translation_w_mono.cpython-310.pyc +0 -0

- mcolt/tasks/translation_w_langtok.py +476 -0

- mcolt/tasks/translation_w_mono.py +214 -0

- requirements.txt +5 -0

- scripts/load_config.sh +48 -0

- scripts/utils.py +116 -0

- test/input.en +1 -0

- test/input.zh +1 -0

- test/output +0 -0

- test/output.en.no_bpe +1 -0

- test/output.en.no_bpe.moses +1 -0

- test/output.zh +3 -0

- test/output.zh.no_bpe +1 -0

- test/output.zh.no_bpe.moses +1 -0

- train_w_mono.sh +56 -0

.gitignore

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

.idea

|

README.md

ADDED

|

@@ -0,0 +1,188 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

```bash

|

| 2 |

+

conda install pytorch torchvision torchaudio pytorch-cuda=11.6 -c pytorch -c nvidia

|

| 3 |

+

```

|

| 4 |

+

|

| 5 |

+

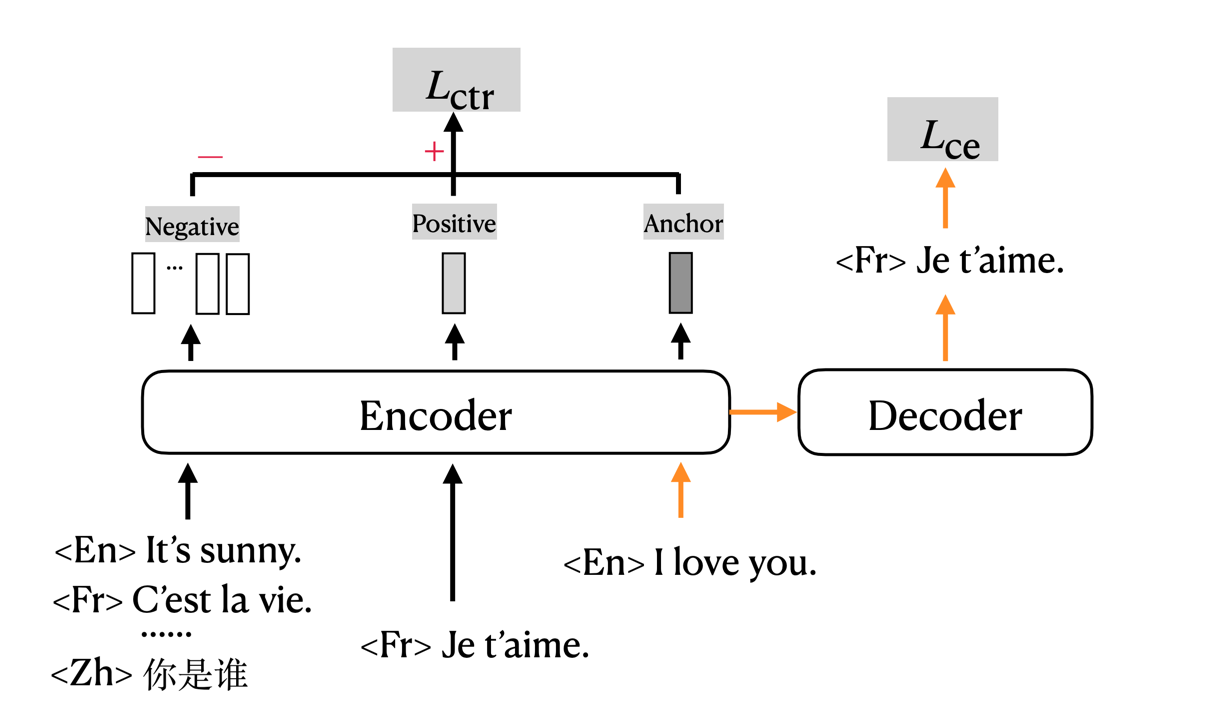

# Contrastive Learning for Many-to-many Multilingual Neural Machine Translation(mCOLT/mRASP2), ACL2021

|

| 6 |

+

The code for training mCOLT/mRASP2, a multilingual neural machine translation training method, implemented based on [fairseq](https://github.com/pytorch/fairseq).

|

| 7 |

+

|

| 8 |

+

**mRASP2**: [paper](https://arxiv.org/abs/2105.09501) [blog](https://medium.com/@panxiao1994/mrasp2-multilingual-nmt-advances-via-contrastive-learning-ac8c4c35d63)

|

| 9 |

+

|

| 10 |

+

**mRASP**: [paper](https://www.aclweb.org/anthology/2020.emnlp-main.210.pdf),

|

| 11 |

+

[code](https://github.com/linzehui/mRASP)

|

| 12 |

+

|

| 13 |

+

---

|

| 14 |

+

## News

|

| 15 |

+

We have released two versions, this version is the original one. In this implementation:

|

| 16 |

+

- You should first merge all data, by pre-pending language token before each sentence to indicate the language.

|

| 17 |

+

- AA/RAS muse be done off-line (before binarize), check [this toolkit](https://github.com/linzehui/mRASP/blob/master/preprocess).

|

| 18 |

+

|

| 19 |

+

**New implementation**: https://github.com/PANXiao1994/mRASP2/tree/new_impl

|

| 20 |

+

|

| 21 |

+

* Acknowledgement: This work is supported by [Bytedance](https://bytedance.com). We thank [Chengqi](https://github.com/zhaocq-nlp) for uploading all files and checkpoints.

|

| 22 |

+

|

| 23 |

+

## Introduction

|

| 24 |

+

|

| 25 |

+

mRASP2/mCOLT, representing multilingual Contrastive Learning for Transformer, is a multilingual neural machine translation model that supports complete many-to-many multilingual machine translation. It employs both parallel corpora and multilingual corpora in a unified training framework. For detailed information please refer to the paper.

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

## Pre-requisite

|

| 30 |

+

```bash

|

| 31 |

+

pip install -r requirements.txt

|

| 32 |

+

# install fairseq

|

| 33 |

+

git clone https://github.com/pytorch/fairseq

|

| 34 |

+

cd fairseq

|

| 35 |

+

pip install --editable ./

|

| 36 |

+

```

|

| 37 |

+

|

| 38 |

+

## Training Data and Checkpoints

|

| 39 |

+

We release our preprocessed training data and checkpoints in the following.

|

| 40 |

+

### Dataset

|

| 41 |

+

|

| 42 |

+

We merge 32 English-centric language pairs, resulting in 64 directed translation pairs in total. The original 32 language pairs corpus contains about 197M pairs of sentences. We get about 262M pairs of sentences after applying RAS, since we keep both the original sentences and the substituted sentences. We release both the original dataset and dataset after applying RAS.

|

| 43 |

+

|

| 44 |

+

| Dataset | #Pair |

|

| 45 |

+

| --- | --- |

|

| 46 |

+

| [32-lang-pairs-TRAIN](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_parallel/download.sh) | 197603294 |

|

| 47 |

+

| [32-lang-pairs-RAS-TRAIN](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_parallel_ras/download.sh) | 262662792 |

|

| 48 |

+

| [mono-split-a](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_split_a/download.sh) | - |

|

| 49 |

+

| [mono-split-b](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_split_b/download.sh) | - |

|

| 50 |

+

| [mono-split-c](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_split_c/download.sh) | - |

|

| 51 |

+

| [mono-split-d](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_split_d/download.sh) | - |

|

| 52 |

+

| [mono-split-e](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_split_e/download.sh) | - |

|

| 53 |

+

| [mono-split-de-fr-en](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_de_fr_en/download.sh) | - |

|

| 54 |

+

| [mono-split-nl-pl-pt](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_mono_nl_pl_pt/download.sh) | - |

|

| 55 |

+

| [32-lang-pairs-DEV-en-centric](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_dev_en_centric/download.sh) | - |

|

| 56 |

+

| [32-lang-pairs-DEV-many-to-many](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bin_dev_m2m/download.sh) | - |

|

| 57 |

+

| [Vocab](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/bpe_vocab) | - |

|

| 58 |

+

| [BPE Code](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/emnlp2020/mrasp/pretrain/dataset/codes.bpe.32000) | - |

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

### Checkpoints & Results

|

| 62 |

+

* **Please note that the provided checkpoint is sightly different from that in the paper.** In the following sections, we report the results of the provided checkpoints.

|

| 63 |

+

|

| 64 |

+

#### English-centric Directions

|

| 65 |

+

We report **tokenized BLEU** in the following table. Please click the model links to download. It is in pytorch format. (check eval.sh for details)

|

| 66 |

+

|

| 67 |

+

|Models | [6e6d-no-mono](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/6e6d_no_mono.pt) | [12e12d-no-mono](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/12e12d_no_mono.pt) | [12e12d](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/12e12d_last.pt) |

|

| 68 |

+

| --- | --- | --- | --- |

|

| 69 |

+

| en2cs/wmt16 | 21.0 | 22.3 | 23.8 |

|

| 70 |

+

| cs2en/wmt16 | 29.6 | 32.4 | 33.2 |

|

| 71 |

+

| en2fr/wmt14 | 42.0 | 43.3 | 43.4 |

|

| 72 |

+

| fr2en/wmt14 | 37.8 | 39.3 | 39.5 |

|

| 73 |

+

| en2de/wmt14 | 27.4 | 29.2 | 29.5 |

|

| 74 |

+

| de2en/wmt14 | 32.2 | 34.9 | 35.2 |

|

| 75 |

+

| en2zh/wmt17 | 33.0 | 34.9 | 34.1 |

|

| 76 |

+

| zh2en/wmt17 | 22.4 | 24.0 | 24.4 |

|

| 77 |

+

| en2ro/wmt16 | 26.6 | 28.1 | 28.7 |

|

| 78 |

+

| ro2en/wmt16 | 36.8 | 39.0 | 39.1 |

|

| 79 |

+

| en2tr/wmt16 | 18.6 | 20.3 | 21.2 |

|

| 80 |

+

| tr2en/wmt16 | 22.2 | 25.5 | 26.1 |

|

| 81 |

+

| en2ru/wmt19 | 17.4 | 18.5 | 19.2 |

|

| 82 |

+

| ru2en/wmt19 | 22.0 | 23.2 | 23.6 |

|

| 83 |

+

| en2fi/wmt17 | 20.2 | 22.1 | 22.9 |

|

| 84 |

+

| fi2en/wmt17 | 26.1 | 29.5 | 29.7 |

|

| 85 |

+

| en2es/wmt13 | 32.8 | 34.1 | 34.6 |

|

| 86 |

+

| es2en/wmt13 | 32.8 | 34.6 | 34.7 |

|

| 87 |

+

| en2it/wmt09 | 28.9 | 30.0 | 30.8 |

|

| 88 |

+

| it2en/wmt09 | 31.4 | 32.7 | 32.8 |

|

| 89 |

+

|

| 90 |

+

#### Unsupervised Directions

|

| 91 |

+

We report **tokenized BLEU** in the following table. (check eval.sh for details)

|

| 92 |

+

|

| 93 |

+

| | 12e12d |

|

| 94 |

+

| --- | --- |

|

| 95 |

+

| en2pl/wmt20 | 6.2 |

|

| 96 |

+

| pl2en/wmt20 | 13.5 |

|

| 97 |

+

| en2nl/iwslt14 | 8.8 |

|

| 98 |

+

| nl2en/iwslt14 | 27.1 |

|

| 99 |

+

| en2pt/opus100 | 18.9 |

|

| 100 |

+

| pt2en/opus100 | 29.2 |

|

| 101 |

+

|

| 102 |

+

#### Zero-shot Directions

|

| 103 |

+

* row: source language

|

| 104 |

+

* column: target language

|

| 105 |

+

We report **[sacreBLEU](https://github.com/mozilla/sacreBLEU)** in the following table.

|

| 106 |

+

|

| 107 |

+

| 12e12d | ar | zh | nl | fr | de | ru |

|

| 108 |

+

| --- | --- | --- | --- | --- | --- | --- |

|

| 109 |

+

| ar | - | 32.5 | 3.2 | 22.8 | 11.2 | 16.7 |

|

| 110 |

+

| zh | 6.5 | - | 1.9 | 32.9 | 7.6 | 23.7 |

|

| 111 |

+

| nl | 1.7 | 8.2 | - | 7.5 | 10.2 | 2.9 |

|

| 112 |

+

| fr | 6.2 | 42.3 | 7.5 | - | 18.9 | 24.4 |

|

| 113 |

+

| de | 4.9 | 21.6 | 9.2 | 24.7 | - | 14.4 |

|

| 114 |

+

| ru | 7.1 | 40.6 | 4.5 | 29.9 | 13.5 | - |

|

| 115 |

+

|

| 116 |

+

## Training

|

| 117 |

+

```bash

|

| 118 |

+

export NUM_GPU=4 && bash train_w_mono.sh ${model_config}

|

| 119 |

+

```

|

| 120 |

+

* We give example of `${model_config}` in `${PROJECT_REPO}/examples/configs/parallel_mono_12e12d_contrastive.yml`

|

| 121 |

+

|

| 122 |

+

## Inference

|

| 123 |

+

* You must pre-pend the corresponding language token to the source side before binarize the test data.

|

| 124 |

+

```bash

|

| 125 |

+

fairseq-generate ${test_path} \

|

| 126 |

+

--user-dir ${repo_dir}/mcolt \

|

| 127 |

+

-s ${src} \

|

| 128 |

+

-t ${tgt} \

|

| 129 |

+

--skip-invalid-size-inputs-valid-test \

|

| 130 |

+

--path ${ckpts} \

|

| 131 |

+

--max-tokens ${batch_size} \

|

| 132 |

+

--task translation_w_langtok \

|

| 133 |

+

${options} \

|

| 134 |

+

--lang-prefix-tok "LANG_TOK_"`echo "${tgt} " | tr '[a-z]' '[A-Z]'` \

|

| 135 |

+

--max-source-positions ${max_source_positions} \

|

| 136 |

+

--max-target-positions ${max_target_positions} \

|

| 137 |

+

--nbest 1 | grep -E '[S|H|P|T]-[0-9]+' > ${final_res_file}

|

| 138 |

+

|

| 139 |

+

python fairseq/fairseq_cli/preprocess.py --dataset-impl raw --srcdict ckpt/bpe_vocab --tgtdict ckpt/bpe_vocab --testpref test/input -s zh -t en

|

| 140 |

+

|

| 141 |

+

python fairseq/fairseq_cli/interactive.py /mnt/data2/siqiouyang/demo/mRASP2/data-bin \

|

| 142 |

+

--user-dir mcolt \

|

| 143 |

+

-s en \

|

| 144 |

+

-t zh \

|

| 145 |

+

--skip-invalid-size-inputs-valid-test \

|

| 146 |

+

--path ckpt/12e12d_last.pt \

|

| 147 |

+

--max-tokens 1024 \

|

| 148 |

+

--task translation_w_langtok \

|

| 149 |

+

--lang-prefix-tok "LANG_TOK_"`echo "zh " | tr '[a-z]' '[A-Z]'` \

|

| 150 |

+

--max-source-positions 1024 \

|

| 151 |

+

--max-target-positions 1024 \

|

| 152 |

+

--nbest 1 \

|

| 153 |

+

--bpe subword_nmt \

|

| 154 |

+

--bpe-codes ckpt/codes.bpe.32000 \

|

| 155 |

+

--post-process --tokenizer moses \

|

| 156 |

+

--input ./test/input.en | grep -E '[D]-[0-9]+' > test/output.zh.no_bpe.moses

|

| 157 |

+

|

| 158 |

+

python3 ${repo_dir}/scripts/utils.py ${res_file} ${ref_file} || exit 1;

|

| 159 |

+

```

|

| 160 |

+

|

| 161 |

+

## Synonym dictionaries

|

| 162 |

+

We use the bilingual synonym dictionaries provised by [MUSE](https://github.com/facebookresearch/MUSE).

|

| 163 |

+

|

| 164 |

+

We generate multilingual synonym dictionaries using [this script](https://github.com/linzehui/mRASP/blob/master/preprocess/tools/ras/multi_way_word_graph.py), and apply

|

| 165 |

+

RAS using [this script](https://github.com/linzehui/mRASP/blob/master/preprocess/tools/ras/random_alignment_substitution_w_multi.sh).

|

| 166 |

+

|

| 167 |

+

| Description | File | Size |

|

| 168 |

+

| --- | --- | --- |

|

| 169 |

+

| dep=1 | [synonym_dict_raw_dep1](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/synonym_dict_raw_dep1) | 138.0 M |

|

| 170 |

+

| dep=2 | [synonym_dict_raw_dep2](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/synonym_dict_raw_dep2) | 1.6 G |

|

| 171 |

+

| dep=3 | [synonym_dict_raw_dep3](https://lf3-nlp-opensource.bytetos.com/obj/nlp-opensource/acl2021/mrasp2/synonym_dict_raw_dep3) | 2.2 G |

|

| 172 |

+

|

| 173 |

+

## Contact

|

| 174 |

+

Please contact me via e-mail `[email protected]` or via [wechat/zhihu](https://fork-ball-95c.notion.site/mRASP2-4e9b3450d5aa4137ae1a2c46d5f3c1fa) or join [the slack group](https://mrasp2.slack.com/join/shared_invite/zt-10k9710mb-MbDHzDboXfls2Omd8cuWqA)!

|

| 175 |

+

|

| 176 |

+

## Citation

|

| 177 |

+

Please cite as:

|

| 178 |

+

```

|

| 179 |

+

@inproceedings{mrasp2,

|

| 180 |

+

title = {Contrastive Learning for Many-to-many Multilingual Neural Machine Translation},

|

| 181 |

+

author= {Xiao Pan and

|

| 182 |

+

Mingxuan Wang and

|

| 183 |

+

Liwei Wu and

|

| 184 |

+

Lei Li},

|

| 185 |

+

booktitle = {Proceedings of ACL 2021},

|

| 186 |

+

year = {2021},

|

| 187 |

+

}

|

| 188 |

+

```

|

ckpt/bpe_vocab

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

ckpt/codes.bpe.32000

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

data-bin/dict.en.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

data-bin/dict.zh.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

data-bin/preprocess.log

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Namespace(no_progress_bar=False, log_interval=100, log_format=None, log_file=None, aim_repo=None, aim_run_hash=None, tensorboard_logdir=None, wandb_project=None, azureml_logging=False, seed=1, cpu=False, tpu=False, bf16=False, memory_efficient_bf16=False, fp16=False, memory_efficient_fp16=False, fp16_no_flatten_grads=False, fp16_init_scale=128, fp16_scale_window=None, fp16_scale_tolerance=0.0, on_cpu_convert_precision=False, min_loss_scale=0.0001, threshold_loss_scale=None, amp=False, amp_batch_retries=2, amp_init_scale=128, amp_scale_window=None, user_dir=None, empty_cache_freq=0, all_gather_list_size=16384, model_parallel_size=1, quantization_config_path=None, profile=False, reset_logging=False, suppress_crashes=False, use_plasma_view=False, plasma_path='/tmp/plasma', criterion='cross_entropy', tokenizer=None, bpe=None, optimizer=None, lr_scheduler='fixed', scoring='bleu', task='translation', source_lang='en', target_lang='zh', trainpref=None, validpref=None, testpref='test/input', align_suffix=None, destdir='data-bin', thresholdtgt=0, thresholdsrc=0, tgtdict='ckpt/bpe_vocab', srcdict='ckpt/bpe_vocab', nwordstgt=-1, nwordssrc=-1, alignfile=None, dataset_impl='raw', joined_dictionary=False, only_source=False, padding_factor=8, workers=1, dict_only=False)

|

| 2 |

+

Wrote preprocessed data to data-bin

|

| 3 |

+

Namespace(no_progress_bar=False, log_interval=100, log_format=None, log_file=None, aim_repo=None, aim_run_hash=None, tensorboard_logdir=None, wandb_project=None, azureml_logging=False, seed=1, cpu=False, tpu=False, bf16=False, memory_efficient_bf16=False, fp16=False, memory_efficient_fp16=False, fp16_no_flatten_grads=False, fp16_init_scale=128, fp16_scale_window=None, fp16_scale_tolerance=0.0, on_cpu_convert_precision=False, min_loss_scale=0.0001, threshold_loss_scale=None, amp=False, amp_batch_retries=2, amp_init_scale=128, amp_scale_window=None, user_dir=None, empty_cache_freq=0, all_gather_list_size=16384, model_parallel_size=1, quantization_config_path=None, profile=False, reset_logging=False, suppress_crashes=False, use_plasma_view=False, plasma_path='/tmp/plasma', criterion='cross_entropy', tokenizer=None, bpe=None, optimizer=None, lr_scheduler='fixed', scoring='bleu', task='translation', source_lang='zh', target_lang='en', trainpref=None, validpref=None, testpref='test/input', align_suffix=None, destdir='data-bin', thresholdtgt=0, thresholdsrc=0, tgtdict='ckpt/bpe_vocab', srcdict='ckpt/bpe_vocab', nwordstgt=-1, nwordssrc=-1, alignfile=None, dataset_impl='raw', joined_dictionary=False, only_source=False, padding_factor=8, workers=1, dict_only=False)

|

| 4 |

+

Wrote preprocessed data to data-bin

|

data-bin/test.en-zh.en

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

LANG_TOK_EN Hello my friend!

|

data-bin/test.en-zh.zh

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

LANG_TOK_ZH

|

data-bin/test.zh-en.en

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

LANG_TOK_EN Hello my friend!

|

data-bin/test.zh-en.zh

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

LANG_TOK_ZH 你好!

|

docs/img.png

ADDED

|

eval.sh

ADDED

|

@@ -0,0 +1,166 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env bash

|

| 2 |

+

|

| 3 |

+

# repo_dir: root directory of the project

|

| 4 |

+

repo_dir="$( cd "$( dirname "$0" )" && pwd )"

|

| 5 |

+

echo "==== Working directory: ====" >&2

|

| 6 |

+

echo "${repo_dir}" >&2

|

| 7 |

+

echo "============================" >&2

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

test_config=$1

|

| 11 |

+

source ${repo_dir}/scripts/load_config.sh ${test_config} ${repo_dir}

|

| 12 |

+

model_dir=$2

|

| 13 |

+

choice=$3 # all|best|last

|

| 14 |

+

|

| 15 |

+

model_dir=${repo_dir}/model

|

| 16 |

+

data_dir=${repo_dir}/data

|

| 17 |

+

res_path=${model_dir}/results

|

| 18 |

+

|

| 19 |

+

mkdir -p ${model_dir} ${data_dir} ${res_path}

|

| 20 |

+

|

| 21 |

+

testset_name=data_testset_1_name

|

| 22 |

+

testset_path=data_testset_1_path

|

| 23 |

+

testset_ref=data_testset_1_ref

|

| 24 |

+

testset_direc=data_testset_1_direction

|

| 25 |

+

i=1

|

| 26 |

+

testsets=""

|

| 27 |

+

while [[ ! -z ${!testset_path} && ! -z ${!testset_direc} ]]; do

|

| 28 |

+

dataname=${!testset_name}

|

| 29 |

+

mkdir -p ${data_dir}/${!testset_direc}/${dataname} ${data_dir}/ref/${!testset_direc}/${dataname}

|

| 30 |

+

cp ${!testset_path}/* ${data_dir}/${!testset_direc}/${dataname}/

|

| 31 |

+

cp ${!testset_ref}/* ${data_dir}/ref/${!testset_direc}/${dataname}/

|

| 32 |

+

if [[ $testsets == "" ]]; then

|

| 33 |

+

testsets=${!testset_direc}/${dataname}

|

| 34 |

+

else

|

| 35 |

+

testsets=${testsets}:${!testset_direc}/${dataname}

|

| 36 |

+

fi

|

| 37 |

+

i=$((i+1))

|

| 38 |

+

testset_name=testset_${i}_name

|

| 39 |

+

testset_path=testset_${i}_path

|

| 40 |

+

testset_ref=testset_${i}_ref

|

| 41 |

+

testset_direc=testset_${i}_direction

|

| 42 |

+

done

|

| 43 |

+

|

| 44 |

+

IFS=':' read -r -a testset_list <<< ${testsets}

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

bleu () {

|

| 48 |

+

src=$1

|

| 49 |

+

tgt=$2

|

| 50 |

+

res_file=$3

|

| 51 |

+

ref_file=$4

|

| 52 |

+

if [[ -f ${res_file} ]]; then

|

| 53 |

+

f_dirname=`dirname ${res_file}`

|

| 54 |

+

python3 ${repo_dir}/scripts/utils.py ${res_file} ${ref_file} || exit 1;

|

| 55 |

+

input_file="${f_dirname}/hypo.out.nobpe"

|

| 56 |

+

output_file="${f_dirname}/hypo.out.nobpe.final"

|

| 57 |

+

# form command

|

| 58 |

+

cmd="cat ${input_file}"

|

| 59 |

+

lang_token="LANG_TOK_"`echo "${tgt} " | tr '[a-z]' '[A-Z]'`

|

| 60 |

+

if [[ $tgt == "fr" ]]; then

|

| 61 |

+

cmd=$cmd" | sed -Ee 's/\"([^\"]*)\"/« \1 »/g'"

|

| 62 |

+

elif [[ $tgt == "zh" ]]; then

|

| 63 |

+

tokenizer="zh"

|

| 64 |

+

elif [[ $tgt == "ja" ]]; then

|

| 65 |

+

tokenizer="ja-mecab"

|

| 66 |

+

fi

|

| 67 |

+

[[ -z $tokenizer ]] && tokenizer="none"

|

| 68 |

+

cmd=$cmd" | sed -e s'|${lang_token} ||g' > ${output_file}"

|

| 69 |

+

eval $cmd || { echo "$cmd FAILED !"; exit 1; }

|

| 70 |

+

cat ${output_file} | sacrebleu -l ${src}-${tgt} -tok $tokenizer --short "${f_dirname}/ref.out" | awk '{print $3}'

|

| 71 |

+

else

|

| 72 |

+

echo "${res_file} not exist!" >&2 && exit 1;

|

| 73 |

+

fi

|

| 74 |

+

}

|

| 75 |

+

|

| 76 |

+

# monitor

|

| 77 |

+

# ${ckptname}/${direction}/${testname}/orig.txt

|

| 78 |

+

(inotifywait -r -m -e close_write ${res_path} |

|

| 79 |

+

while read path action file; do

|

| 80 |

+

if [[ "$file" =~ .*txt$ ]]; then

|

| 81 |

+

tmp_str="${path%/*}"

|

| 82 |

+

testname="${tmp_str##*/}"

|

| 83 |

+

tmp_str="${tmp_str%/*}"

|

| 84 |

+

direction="${tmp_str##*/}"

|

| 85 |

+

tmp_str="${tmp_str%/*}"

|

| 86 |

+

ckptname="${tmp_str##*/}"

|

| 87 |

+

src_lang="${direction%2*}"

|

| 88 |

+

tgt_lang="${direction##*2}"

|

| 89 |

+

res_file=$path$file

|

| 90 |

+

ref_file=${data_dir}/ref/${direction}/${testname}/dev.${tgt_lang}

|

| 91 |

+

bleuscore=`bleu ${src_lang} ${tgt_lang} ${res_file} ${ref_file}`

|

| 92 |

+

bleu_str="$(date "+%Y-%m-%d %H:%M:%S")\t${ckptname}\t${direction}/${testname}\t$bleuscore"

|

| 93 |

+

echo -e ${bleu_str} # to stdout

|

| 94 |

+

echo -e ${bleu_str} >> ${model_dir}/summary.log

|

| 95 |

+

fi

|

| 96 |

+

done) &

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

if [[ ${choice} == "all" ]]; then

|

| 100 |

+

filelist=`ls -la ${model_dir} | sort -k6,7 -r | awk '{print $NF}' | grep .pt$ | tr '\n' ' '`

|

| 101 |

+

elif [[ ${choice} == "best" ]]; then

|

| 102 |

+

filelist="${model_dir}/checkpoint_best.pt"

|

| 103 |

+

elif [[ ${choice} == "last" ]]; then

|

| 104 |

+

filelist="${model_dir}/checkpoint_last.pt"

|

| 105 |

+

else

|

| 106 |

+

echo "invalid choice!" && exit 2;

|

| 107 |

+

fi

|

| 108 |

+

|

| 109 |

+

N=${NUM_GPU}

|

| 110 |

+

#export CUDA_VISIBLE_DEVICES=$(seq -s ',' 0 $(($N - 1)) )

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

infer_test () {

|

| 114 |

+

test_path=$1

|

| 115 |

+

ckpts=$2

|

| 116 |

+

gpu=$3

|

| 117 |

+

final_res_file=$4

|

| 118 |

+

src=$5

|

| 119 |

+

tgt=$6

|

| 120 |

+

gpu_cmd="CUDA_VISIBLE_DEVICES=$gpu "

|

| 121 |

+

lang_token="LANG_TOK_"`echo "${tgt} " | tr '[a-z]' '[A-Z]'`

|

| 122 |

+

[[ -z ${max_source_positions} ]] && max_source_positions=1024

|

| 123 |

+

[[ -z ${max_target_positions} ]] && max_target_positions=1024

|

| 124 |

+

command=${gpu_cmd}"fairseq-generate ${test_path} \

|

| 125 |

+

--user-dir ${repo_dir}/mcolt \

|

| 126 |

+

-s ${src} \

|

| 127 |

+

-t ${tgt} \

|

| 128 |

+

--skip-invalid-size-inputs-valid-test \

|

| 129 |

+

--path ${ckpts} \

|

| 130 |

+

--max-tokens 1024 \

|

| 131 |

+

--task translation_w_langtok \

|

| 132 |

+

${options} \

|

| 133 |

+

--lang-prefix-tok ${lang_token} \

|

| 134 |

+

--max-source-positions ${max_source_positions} \

|

| 135 |

+

--max-target-positions ${max_target_positions} \

|

| 136 |

+

--nbest 1 | grep -E '[S|H|P|T]-[0-9]+' > ${final_res_file}

|

| 137 |

+

"

|

| 138 |

+

echo "$command"

|

| 139 |

+

}

|

| 140 |

+

|

| 141 |

+

export -f infer_test

|

| 142 |

+

i=0

|

| 143 |

+

(for ckpt in ${filelist}

|

| 144 |

+

do

|

| 145 |

+

for testset in "${testset_list[@]}"

|

| 146 |

+

do

|

| 147 |

+

ckptbase=`basename $ckpt`

|

| 148 |

+

ckptname="${ckptbase%.*}"

|

| 149 |

+

direction="${testset%/*}"

|

| 150 |

+

testname="${testset##*/}"

|

| 151 |

+

src_lang="${direction%2*}"

|

| 152 |

+

tgt_lang="${direction##*2}"

|

| 153 |

+

|

| 154 |

+

((i=i%N)); ((i++==0)) && wait

|

| 155 |

+

test_path=${data_dir}/${testset}

|

| 156 |

+

|

| 157 |

+

echo "-----> "${ckptname}" | "${direction}/$testname" <-----" >&2

|

| 158 |

+

if [[ ! -d ${res_path}/${ckptname}/${direction}/${testname} ]]; then

|

| 159 |

+

mkdir -p ${res_path}/${ckptname}/${direction}/${testname}

|

| 160 |

+

fi

|

| 161 |

+

final_res_file="${res_path}/${ckptname}/${direction}/${testname}/orig.txt"

|

| 162 |

+

command=`infer_test ${test_path} ${model_dir}/${ckptname}.pt $((i-1)) ${final_res_file} ${src_lang} ${tgt_lang}`

|

| 163 |

+

echo "${command}"

|

| 164 |

+

eval $command &

|

| 165 |

+

done

|

| 166 |

+

done)

|

examples/configs/eval_benchmarks.yml

ADDED

|

@@ -0,0 +1,80 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

data_testset_1:

|

| 2 |

+

direction: en2de

|

| 3 |

+

name: wmt14

|

| 4 |

+

path: data/binarized/en_de/en2de/wmt14

|

| 5 |

+

ref: data/dev/en2de/wmt14

|

| 6 |

+

data_testset_10:

|

| 7 |

+

direction: ru2en

|

| 8 |

+

name: newstest2019

|

| 9 |

+

path: data/binarized/en_ru/ru2en/newstest2019

|

| 10 |

+

ref: data/dev/ru2en/newstest2019

|

| 11 |

+

data_testset_11:

|

| 12 |

+

direction: en2fi

|

| 13 |

+

name: newstest2017

|

| 14 |

+

path: data/binarized/en_fi/en2fi/newstest2017

|

| 15 |

+

ref: data/dev/en2fi/newstest2017

|

| 16 |

+

data_testset_12:

|

| 17 |

+

direction: fi2en

|

| 18 |

+

name: newstest2017

|

| 19 |

+

path: data/binarized/en_fi/fi2en/newstest2017

|

| 20 |

+

ref: data/dev/fi2en/newstest2017

|

| 21 |

+

data_testset_13:

|

| 22 |

+

direction: en2cs

|

| 23 |

+

name: newstest2016

|

| 24 |

+

path: data/binarized/en_cs/en2cs/newstest2016

|

| 25 |

+

ref: data/dev/en2cs/newstest2016

|

| 26 |

+

data_testset_14:

|

| 27 |

+

direction: cs2en

|

| 28 |

+

name: newstest2016

|

| 29 |

+

path: data/binarized/en_cs/cs2en/newstest2016

|

| 30 |

+

ref: data/dev/cs2en/newstest2016

|

| 31 |

+

data_testset_15:

|

| 32 |

+

direction: en2et

|

| 33 |

+

name: newstest2018

|

| 34 |

+

path: data/binarized/en_et/en2et/newstest2018

|

| 35 |

+

ref: data/dev/en2et/newstest2018

|

| 36 |

+

data_testset_16:

|

| 37 |

+

direction: et2en

|

| 38 |

+

name: newstest2018

|

| 39 |

+

path: data/binarized/en_et/et2en/newstest2018

|

| 40 |

+

ref: data/dev/et2en/newstest2018

|

| 41 |

+

data_testset_2:

|

| 42 |

+

direction: de2en

|

| 43 |

+

name: wmt14

|

| 44 |

+

path: data/binarized/en_de/de2en/wmt14

|

| 45 |

+

ref: data/dev/de2en/wmt14

|

| 46 |

+

data_testset_3:

|

| 47 |

+

direction: en2fr

|

| 48 |

+

name: newstest2014

|

| 49 |

+

path: data/binarized/en_fr/en2fr/newstest2014

|

| 50 |

+

ref: data/dev/en2fr/newstest2014

|

| 51 |

+

data_testset_4:

|

| 52 |

+

direction: fr2en

|

| 53 |

+

name: newstest2014

|

| 54 |

+

path: data/binarized/en_fr/fr2en/newstest2014

|

| 55 |

+

ref: data/dev/fr2en/newstest2014

|

| 56 |

+

data_testset_5:

|

| 57 |

+

direction: en2ro

|

| 58 |

+

name: wmt16

|

| 59 |

+

path: data/binarized/en_ro/en_ro/wmt16

|

| 60 |

+

ref: data/dev/en_ro/wmt16

|

| 61 |

+

data_testset_6:

|

| 62 |

+

direction: ro2en

|

| 63 |

+

name: wmt16

|

| 64 |

+

path: data/binarized/en_ro/en_ro/wmt16

|

| 65 |

+

ref: data/dev/en_ro/wmt16

|

| 66 |

+

data_testset_7:

|

| 67 |

+

direction: en2zh

|

| 68 |

+

name: wmt17

|

| 69 |

+

path: data/binarized/en_zh/en2zh/wmt17

|

| 70 |

+

ref: data/dev/en2zh/wmt17

|

| 71 |

+

data_testset_8:

|

| 72 |

+

direction: zh2en

|

| 73 |

+

name: wmt17

|

| 74 |

+

path: data/binarized/en_zh/zh2en/wmt17

|

| 75 |

+

ref: data/dev/zh2en/wmt17

|

| 76 |

+

data_testset_9:

|

| 77 |

+

direction: en2ru

|

| 78 |

+

name: newstest2019

|

| 79 |

+

path: data/binarized/en_ru/en2ru/newstest2019

|

| 80 |

+

ref: data/dev/en2ru/newstest2019

|

examples/configs/parallel_mono_12e12d_contrastive.yml

ADDED

|

@@ -0,0 +1,44 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model_dir: model/pretrain/lab/multilingual/l2r/multi_bpe32k/parallel_mono_contrastive_1/transformer_big_t2t_12e12d

|

| 2 |

+

data_1: data/multilingual/bin/merged_deduped_ras

|

| 3 |

+

data_mono_1: data/multilingual/bin/mono_only/splitaa

|

| 4 |

+

data_mono_2: data/multilingual/bin/mono_only/splitab

|

| 5 |

+

data_mono_3: data/multilingual/bin/mono_only/splitac

|

| 6 |

+

data_mono_4: data/multilingual/bin/mono_only/splitad

|

| 7 |

+

data_mono_5: data/multilingual/bin/mono_only/splitae

|

| 8 |

+

data_mono_6: data/multilingual/bin/mono_only/mono_de_fr_en

|

| 9 |

+

data_mono_7: data/multilingual/bin/mono_only/mono_nl_pl_pt

|

| 10 |

+

source_lang: src

|

| 11 |

+

target_lang: trg

|

| 12 |

+

task: translation_w_mono

|

| 13 |

+

parallel_ratio: 0.2

|

| 14 |

+

mono_ratio: 0.07

|

| 15 |

+

arch: transformer_big_t2t_12e12d

|

| 16 |

+

share_all_embeddings: true

|

| 17 |

+

encoder_learned_pos: true

|

| 18 |

+

decoder_learned_pos: true

|

| 19 |

+

max_source_positions: 1024

|

| 20 |

+

max_target_positions: 1024

|

| 21 |

+

dropout: 0.1

|

| 22 |

+

criterion: label_smoothed_cross_entropy_with_contrastive

|

| 23 |

+

contrastive_lambda: 1.0

|

| 24 |

+

temperature: 0.1

|

| 25 |

+

lr: 0.0003

|

| 26 |

+

clip_norm: 10.0

|

| 27 |

+

optimizer: adam

|

| 28 |

+

adam_eps: 1e-06

|

| 29 |

+

weight_decay: 0.01

|

| 30 |

+

warmup_updates: 10000

|

| 31 |

+

label_smoothing: 0.1

|

| 32 |

+

lr_scheduler: polynomial_decay

|

| 33 |

+

min_lr: -1

|

| 34 |

+

max_tokens: 1536

|

| 35 |

+

update_freq: 30

|

| 36 |

+

max_update: 5000000

|

| 37 |

+

no_scale_embedding: true

|

| 38 |

+

layernorm_embedding: true

|

| 39 |

+

save_interval_updates: 2000

|

| 40 |

+

skip_invalid_size_inputs_valid_test: true

|

| 41 |

+

log_interval: 500

|

| 42 |

+

num_workers: 1

|

| 43 |

+

fp16: true

|

| 44 |

+

seed: 33122

|

mcolt/__init__.py

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .arches import *

|

| 2 |

+

from . criterions import *

|

| 3 |

+

from .data import *

|

| 4 |

+

from .tasks import *

|

mcolt/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (222 Bytes). View file

|

|

|

mcolt/arches/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

from .transformer import *

|

mcolt/arches/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (179 Bytes). View file

|

|

|

mcolt/arches/__pycache__/transformer.cpython-310.pyc

ADDED

|

Binary file (9.16 kB). View file

|

|

|

mcolt/arches/transformer.py

ADDED

|

@@ -0,0 +1,380 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from fairseq.models import register_model_architecture

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

@register_model_architecture('transformer', 'transformer_bigger')

|

| 5 |

+

def transformer_bigger(args):

|

| 6 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.3)

|

| 7 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.3)

|

| 8 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 9 |

+

args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 10 |

+

args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 11 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 12 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 13 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

@register_model_architecture('transformer', 'transformer_bigger_16384')

|

| 17 |

+

def transformer_bigger_16384(args):

|

| 18 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.1)

|

| 19 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.1)

|

| 20 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 21 |

+

args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 16384)

|

| 22 |

+

args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 16384)

|

| 23 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 24 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 25 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

@register_model_architecture('transformer', 'transformer_bigger_no_share')

|

| 29 |

+

def transformer_bigger_no_share(args):

|

| 30 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.3)

|

| 31 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.3)

|

| 32 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 33 |

+

args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 34 |

+

args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 35 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', False)

|

| 36 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 37 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

@register_model_architecture('transformer', 'transformer_deeper')

|

| 41 |

+

def transformer_deeper(args):

|

| 42 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 15)

|

| 43 |

+

args.dense = False

|

| 44 |

+

args.bottleneck_component = getattr(args, 'bottleneck_component', 'mean_pool')

|

| 45 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.1)

|

| 46 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.1)

|

| 47 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 48 |

+

# args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 49 |

+

# args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 50 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 51 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 52 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

@register_model_architecture('transformer', 'transformer_deeper_no_share')

|

| 56 |

+

def transformer_deeper_no_share(args):

|

| 57 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 15)

|

| 58 |

+

args.dense = False

|

| 59 |

+

args.bottleneck_component = getattr(args, 'bottleneck_component', 'mean_pool')

|

| 60 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.1)

|

| 61 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.1)

|

| 62 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 63 |

+

# args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 64 |

+

# args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 65 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', False)

|

| 66 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 67 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

@register_model_architecture('transformer', 'transformer_deeper_dense')

|

| 71 |

+

def transformer_deeper_no_share(args):

|

| 72 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 15)

|

| 73 |

+

args.dense = True

|

| 74 |

+

args.bottleneck_component = 'mean_pool'

|

| 75 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.1)

|

| 76 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.1)

|

| 77 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 78 |

+

# args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 79 |

+

# args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 80 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 81 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 82 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

@register_model_architecture('transformer', 'transformer_deeper_dense_no_share')

|

| 86 |

+

def transformer_deeper_no_share(args):

|

| 87 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 15)

|

| 88 |

+

args.dense = True

|

| 89 |

+

args.bottleneck_component = 'mean_pool'

|

| 90 |

+

args.attention_dropout = getattr(args, 'attention_dropout', 0.1)

|

| 91 |

+

args.activation_dropout = getattr(args, 'activation_dropout', 0.1)

|

| 92 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 93 |

+

# args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 15000)

|

| 94 |

+

# args.decoder_ffn_embed_dim = getattr(args, 'decoder_ffn_embed_dim', 15000)

|

| 95 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', False)

|

| 96 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 97 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

@register_model_architecture('transformer', 'transformer_big')

|

| 101 |

+

def transformer_big(args):

|

| 102 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 103 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 104 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 105 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

@register_model_architecture('transformer', 'transformer_big_emb512')

|

| 109 |

+

def transformer_big_emb512(args):

|

| 110 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 111 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 112 |

+

args.encoder_embed_dim = getattr(args, 'encoder_embed_dim', 512)

|

| 113 |

+

args.decoder_embed_dim = getattr(args, 'decoder_embed_dim', 512)

|

| 114 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 115 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

@register_model_architecture('transformer', 'transformer_big_no_share')

|

| 119 |

+

def transformer_big_no_share(args):

|

| 120 |

+

args.dropout = getattr(args, 'dropout', 0.1)

|

| 121 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', False)

|

| 122 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 123 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

@register_model_architecture('transformer', 'transformer_big_16e4d')

|

| 127 |

+

def transformer_big_16e4d(args):

|

| 128 |

+

args.dropout = getattr(args, 'dropout', 0.2)

|

| 129 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 16)

|

| 130 |

+

args.decoder_layers = getattr(args, 'decoder_layers', 4)

|

| 131 |

+

args.encoder_embed_dim = getattr(args, 'encoder_embed_dim', 1024)

|

| 132 |

+

args.decoder_embed_dim = getattr(args, 'decoder_embed_dim', 1024)

|

| 133 |

+

args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 4096)

|

| 134 |

+

args.encoder_attention_heads = getattr(args, 'encoder_attention_heads', 16)

|

| 135 |

+

args.decoder_attention_heads = getattr(args, 'decoder_attention_heads', 16)

|

| 136 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 137 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 138 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

@register_model_architecture('transformer', 'transformer_big_16e6d')

|

| 142 |

+

def transformer_big_16e6d(args):

|

| 143 |

+

args.dropout = getattr(args, 'dropout', 0.2)

|

| 144 |

+

args.encoder_layers = getattr(args, 'encoder_layers', 16)

|

| 145 |

+

args.decoder_layers = getattr(args, 'decoder_layers', 6)

|

| 146 |

+

args.encoder_embed_dim = getattr(args, 'encoder_embed_dim', 1024)

|

| 147 |

+

args.decoder_embed_dim = getattr(args, 'decoder_embed_dim', 1024)

|

| 148 |

+

args.encoder_ffn_embed_dim = getattr(args, 'encoder_ffn_embed_dim', 4096)

|

| 149 |

+

args.encoder_attention_heads = getattr(args, 'encoder_attention_heads', 16)

|

| 150 |

+

args.decoder_attention_heads = getattr(args, 'decoder_attention_heads', 16)

|

| 151 |

+

args.share_decoder_input_output_embed = getattr(args, 'share_decoder_input_output_embed', True)

|

| 152 |

+

from fairseq.models.transformer import transformer_wmt_en_de_big_t2t

|

| 153 |

+

transformer_wmt_en_de_big_t2t(args)

|

| 154 |

+

|