---

license: creativeml-openrail-m

tags:

- coreml

- stable-diffusion

- text-to-image

---

# Core ML Converted Model:

- This model was converted to [Core ML for use on Apple Silicon devices](https://github.com/apple/ml-stable-diffusion). Conversion instructions can be found [here](https://github.com/godly-devotion/MochiDiffusion/wiki/How-to-convert-ckpt-or-safetensors-files-to-Core-ML).

- Provide the model to an app such as **Mochi Diffusion** [GitHub](https://github.com/godly-devotion/MochiDiffusion) / [Discord](https://discord.gg/x2kartzxGv) to generate images.

- `split_einsum` version is compatible with all compute unit options including Neural Engine.

- `original` version is only compatible with the `CPU & GPU` option.

- Custom resolution versions are tagged accordingly.

- The `vae-ft-mse-840000-ema-pruned.ckpt` VAE is embedded into the model.

- This model was converted with a `vae-encoder` for use with `image2image`.

- This model is `fp16`.

- Descriptions are posted as-is from original model source.

- Not all features and/or results may be available in Core ML format.

- This model does not have the [unet split into chunks](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).

- This model does not include a `safety checker` (for NSFW content).

- This model can be used with ControlNet.
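The compatibility notes above amount to a small lookup table. The Python sketch below only illustrates that rule; it is not code from Mochi Diffusion or Apple's ml-stable-diffusion. The `ComputeUnits` enum, its labels, and `is_compatible` are hypothetical names, and the enum covers only the compute unit choices mentioned in this card plus an "All" option, not every Core ML setting.

```python
from enum import Enum


class ComputeUnits(Enum):
    # Hypothetical labels loosely mirroring Core ML compute unit choices.
    CPU_AND_GPU = "CPU & GPU"
    CPU_AND_NEURAL_ENGINE = "CPU & Neural Engine"
    ALL = "All"


# Hypothetical compatibility table derived from the notes in this card:
# split_einsum works with every option, original only with CPU & GPU.
COMPATIBLE_UNITS = {
    "split_einsum": set(ComputeUnits),
    "original": {ComputeUnits.CPU_AND_GPU},
}


def is_compatible(variant: str, units: ComputeUnits) -> bool:
    """Return True if the model variant supports the chosen compute units."""
    try:
        return units in COMPATIBLE_UNITS[variant]
    except KeyError:
        raise ValueError(f"unknown variant: {variant!r}") from None
```

For example, `is_compatible("original", ComputeUnits.CPU_AND_NEURAL_ENGINE)` returns `False`, which is why the `split_einsum` version is the one to pick when targeting the Neural Engine.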

<br>

# toonYou-beta5pruned_cn:

Source(s): [CivitAI](https://civitai.com/models/30240/toonyou)<br>

## ToonYou - Beta 5 is up!

Silly, stylish, and... kind of cute? 😅

A bit of detail with a cartoony feel, it keeps getting better!

With your support, ToonYou has come this far. Thanks!<br>

⬇ Read the info below to get images of the same quality 🙏

### Recommended Settings - VAE is included!

- Clip skip: 2

- Hires. fix: R-ESRGAN 4x+ Anime6B / Upscale by: 1.5+ / Hires steps: 14 / Denoising strength: 0.4

- Adetailer: face_yolov8n

- Sampler: DPM++ SDE Karras

- CFG: 8 / Steps: 25+

- Prompts: (best quality, masterpiece)

- Neg: (worst quality, low quality, letterboxed)
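For scripting or bookkeeping, the recommended settings can be kept in one place. This is a minimal Python sketch under stated assumptions: the `ToonYouSettings` field names, `hires_size`, and `build_prompt` are hypothetical and not part of any particular UI's API. `hires_size` assumes the upscaled size is rounded down to a multiple of 8 (Stable Diffusion latents are 1/8 of the pixel resolution), which may differ from how a given app rounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToonYouSettings:
    # Values copied from the Recommended Settings list; field names are hypothetical.
    clip_skip: int = 2
    sampler: str = "DPM++ SDE Karras"
    cfg_scale: float = 8.0
    steps: int = 25                      # 25 or more
    hires_upscaler: str = "R-ESRGAN 4x+ Anime6B"
    hires_scale: float = 1.5             # 1.5 or more
    hires_steps: int = 14
    denoising_strength: float = 0.4
    positive_prefix: str = "(best quality, masterpiece)"
    negative_prompt: str = "(worst quality, low quality, letterboxed)"


def hires_size(width: int, height: int, scale: float) -> tuple[int, int]:
    """Target size after Hires. fix, rounded down to a multiple of 8 (assumed)."""

    def snap(value: int) -> int:
        return int(value * scale) // 8 * 8

    return snap(width), snap(height)


def build_prompt(settings: ToonYouSettings, subject: str) -> str:
    """Prepend the recommended quality tags to a subject prompt."""
    return f"{settings.positive_prefix}, {subject}"
```

With an example 512×768 base image (not a size recommended by the author), `hires_size(512, 768, 1.5)` gives `(768, 1152)`, and raising **Upscale by** to 2, as suggested for face problems below, gives `(1024, 1536)`.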

### Why is my image different from yours?

- Use prompts that match Danbooru tags

- Keywords like Realistic, HDR, and others sometimes force changes to the characteristics of the model

- Use a tag autocomplete extension

- Use Neg only when necessary

- Piling on negative embeddings is not always the answer

- If you're having trouble with your character's face (especially the eyes), set Hires. fix "Upscale by" to 2 or higher. If you don't have enough VRAM, Adetailer is an alternative

- Different samplers will produce different results

- I can't provide solutions to errors or any other technical help

### Do you like my work? Check out my profile to see what else I've made!

**And [a cup of coffee would be nice! 😉](https://ko-fi.com/bradcatt)**

For anything other than general personal use, please be sure to contact me.

You are solely responsible for any legal liability resulting from unethical use of this model<br><br>

,%201girl,%20collarbone,%20wavy%20hair,%20looking%20at%20viewer,%20blurry,%20upper%20body,%20necklace,%20suspenders,%20floral%20p.jpeg)

,%201girl,%20blonde,%20freckles,%20crop%20top,%20choker,%20looking%20at%20viewer,%20upper%20body,%20blurry,%20earrings,%20street,.jpeg)

,%201boy,%20explorer,%20jungle,%20sitting,%20boots,%20hat,%20backpack.jpeg)

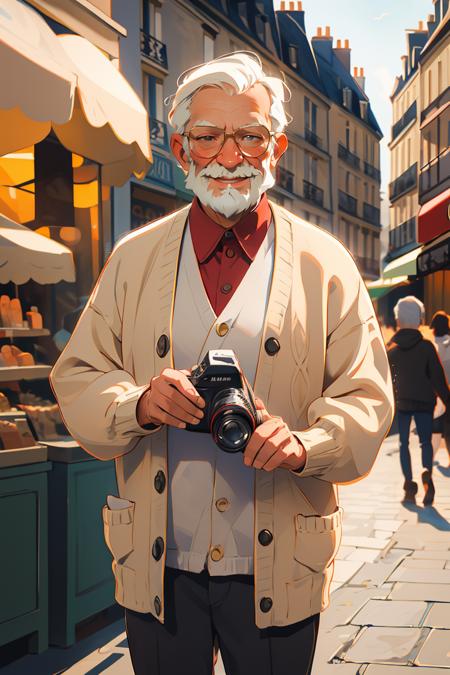

,%20old%20man,%20beard,%20blonde,%20oversized%20shirt,%20happy,%20street,%20holding%20camera,%20open%20cardigan,%20blurry,%20pari.jpeg) |