| issue_owner_repo | issue_body | issue_title | issue_comments_url | issue_comments_count | issue_created_at | issue_updated_at | issue_html_url | issue_github_id | issue_number |
|---|---|---|---|---|---|---|---|---|---|

[

"hwchase17",

"langchain"

]

| I have two tools for managing bank credits: one calculates loan payments and the other gets the interest rate from a table.

```

from langchain.agents import Tool
from langchain.tools import BaseTool

# sql_chain and the `tools` list are defined elsewhere in the script
sql_tool = Tool(
    name="Interest rate DB",
    func=sql_chain.run,
    description="Useful for when you need to answer questions about interest rate of credits",
)


class CalculateLoanPayments(BaseTool):
    name = "Loan Payments calculator"
    description = "use this tool when you need to calculate loan payments"

    def _run(self, parameters: str):
        # NOTE: principal, interest_rate and num_years are not defined in this
        # snippet; they have to be parsed out of `parameters` (the raw
        # Action Input string the agent passes to the tool).

        # Convert annual interest rate to monthly rate
        monthly_rate = interest_rate / 12.0
        # Calculate total number of monthly payments
        num_payments = num_years * 12.0
        # Calculate monthly payment amount using formula for present value of annuity
        # where PV = A * [(1 - (1 + r)^(-n)) / r]
        # A = monthly payment amount
        # r = monthly interest rate
        # n = total number of payments
        payment = (principal * monthly_rate) / (1 - (1 + monthly_rate) ** (-num_payments))
        return payment

    async def _arun(self, parameters: str):
        raise NotImplementedError("This tool does not support async")


tools.append(CalculateLoanPayments())

```
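A self-contained version of the same annuity formula may make the tool easier to test on its own. This is a minimal sketch, not the poster's code: it assumes (hypothetically) that the tool input arrives as a string like `"2000000, 0.12, 2"` (principal without thousands separators, annual rate as a decimal, years), and `monthly_payment` and `parse_loan_input` are illustrative names. The 12% rate is only an example value, not one from this issue.

```python
from typing import Tuple


def monthly_payment(principal: float, annual_rate: float, years: float) -> float:
    """Payment A such that PV = A * (1 - (1 + r) ** -n) / r equals the principal."""
    monthly_rate = annual_rate / 12.0
    num_payments = years * 12.0
    if monthly_rate == 0:
        # The annuity formula divides by r; with no interest, split the principal evenly
        return principal / num_payments
    return principal * monthly_rate / (1 - (1 + monthly_rate) ** (-num_payments))


def parse_loan_input(tool_input: str) -> Tuple[float, float, float]:
    """Parse 'principal, annual_rate, years' (hypothetical input format)."""
    principal, annual_rate, years = (float(part) for part in tool_input.split(","))
    return principal, annual_rate, years


payment = monthly_payment(*parse_loan_input("2000000, 0.12, 2"))
print(round(payment, 2))
```

Inside the tool, `_run` could call `parse_loan_input(parameters)` and pass the result to `monthly_payment`, which keeps the arithmetic testable without an agent.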

I am asking:

I need to calculate the monthly payments for a 2-year loan of 2 million pesos with a commercial credit

The result is:

```
I need to use a loan payments calculator to calculate the monthly payments
Action: Loan Payments calculator
Action Input: Loan amount: 2,000,000, Loan term: 2 years, Interest rate: I need to look up the interest rate in the Interest rate DB
Action: Interest rate DB
Action Input: Commercial credit interest rate
```

One of the parameters requires another action, so I tried:

```

import re
from typing import List, Union

from langchain.agents import AgentOutputParser
from langchain.schema import AgentAction, AgentFinish

# Same final-answer marker the zero-shot (MRKL) agent prompt uses
FINAL_ANSWER_ACTION = "Final Answer:"


class LeoOutputParser(AgentOutputParser):
    def parse(self, text: str) -> Union[AgentAction, List[AgentAction], AgentFinish]:
        if FINAL_ANSWER_ACTION in text:
            return AgentFinish(
                {"output": text.split(FINAL_ANSWER_ACTION)[-1].strip()}, text
            )
        actions = []
        # \s matches against tab/newline/whitespace
        regex = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"
        match1 = re.search(regex, text, re.DOTALL)
        if not match1:
            raise ValueError(f"Could not parse LLM output: `{text}`")
        action1 = match1.group(1).strip()
        action_input1 = match1.group(2).strip(" ").strip('"')
        first = AgentAction(action1, action_input1, text)
        actions.append(first)
        # Look for a second action nested inside the first action's input
        match2 = re.search(regex, action_input1, re.DOTALL)
        if match2:
            action2 = match2.group(1).strip()
            action_input2 = match2.group(2).strip(" ").strip('"')
            second = AgentAction(action2, action_input2, action_input1)
            # Run the nested action before the outer one
            actions.insert(0, second)
        return actions

```

```python
from langchain.agents import initialize_agent

zero_shot_agent = initialize_agent(
    agent="zero-shot-react-description",
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3,
    agent_kwargs={"output_parser": LeoOutputParser()},
)
```

It executes the action that gets the parameter, but does not pass the result to the calculator.

I propose sending the previous result to the tool:

```

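# Proposed change inside AgentExecutor: also pass the previous result to the tool.
# `previous_result` is a hypothetical keyword, not an existing BaseTool.run parameter.
observation = tool.run(
    agent_action.tool_input,
    verbose=self.verbose,
    color=color,
    previous_result=result,
    **tool_run_kwargs,
)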

```
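To make the proposal concrete outside of LangChain's executor, here is a minimal pure-Python sketch. It assumes the model nests the second `Action:`/`Action Input:` block inside the first action's input, as in the output above, and uses the same regex as `LeoOutputParser`. It runs the innermost action first, then strips the nested block from the outer input and appends the inner result. `split_nested_actions`, `run_chained`, the `"Previous result:"` marker and the fake tools are all illustrative, not LangChain APIs.

```python
import re
from typing import Callable, Dict, List, Tuple

# Same pattern as LeoOutputParser: "Action: <tool>\nAction Input: <input>"
ACTION_RE = re.compile(
    r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)", re.DOTALL
)


def split_nested_actions(text: str) -> List[Tuple[str, str]]:
    """Return (tool, tool_input) pairs, innermost (first to run) first."""
    actions: List[Tuple[str, str]] = []
    remaining = text
    while True:
        match = ACTION_RE.search(remaining)
        if match is None:
            break
        tool = match.group(1).strip()
        tool_input = match.group(2).strip(" ").strip('"')
        actions.insert(0, (tool, tool_input))
        # Any nested action lives inside this action's input (always shorter)
        remaining = tool_input
    return actions


def run_chained(text: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Run nested actions innermost-first, feeding each result to the next tool."""
    result = ""
    for position, (tool, tool_input) in enumerate(split_nested_actions(text)):
        if position > 0:
            # Drop the nested "Action: ..." block and append the previous result
            head = tool_input.split("\nAction", 1)[0].rstrip()
            tool_input = f"{head}\nPrevious result: {result}"
        result = tools[tool](tool_input)
    return result


llm_output = (
    "Action: Loan Payments calculator\n"
    "Action Input: Loan amount: 2,000,000, Loan term: 2 years, Interest rate: "
    "I need to look up the interest rate in the Interest rate DB\n"
    "Action: Interest rate DB\n"
    "Action Input: Commercial credit interest rate"
)
fake_tools = {
    "Interest rate DB": lambda query: "12%",  # stand-in for sql_chain.run
    "Loan Payments calculator": lambda params: params,  # echoes what it receives
}
print(run_chained(llm_output, fake_tools))
```

Substituting the observation at the parsing level avoids changing `tool.run`, at the cost of relying on the model's nesting format.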

Thanks in advance

| Execute Actions when response has two actions | https://api.github.com/repos/langchain-ai/langchain/issues/3666/comments | 4 | 2023-04-27T17:44:40Z | 2023-08-31T17:06:46Z | https://github.com/langchain-ai/langchain/issues/3666 | 1,687,296,784 | 3,666 |

[

"hwchase17",

"langchain"

]

| **Issue**

Sometimes, when running a similarity search through the Chroma wrapper, I get the following error:

`RuntimeError('Cannot return the results in a contigious 2D array. Probably ef or M is too small')`

**Some background info:**

ChromaDB is a library for performing similarity search on high-dimensional data. It uses an approximate nearest neighbor (ANN) search algorithm called Hierarchical Navigable Small World (HNSW) to find the most similar items to a given query. The parameters `ef` and `M` are related to the HNSW algorithm and have an impact on the search quality and performance.

1. `ef`: This parameter controls the size of the dynamic search list used by the HNSW algorithm. A higher value for `ef` results in a more accurate search but slower search speed. A lower value will result in a faster search but less accurate results.

2. `M`: This parameter determines the number of bi-directional links created for each new element during the construction of the HNSW graph. A higher value for `M` results in a denser graph, leading to higher search accuracy but increased memory consumption and construction time.

This error message indicates that one or both of these parameters are too small for the current dataset, which prevents the search results from being returned in a contiguous 2D array. To resolve it, you can try increasing `ef` and `M` in the ChromaDB configuration or at query time.

It's important to note that the optimal values for `ef` and `M` can depend on the specific dataset and use case. You may need to experiment with different values to find the best balance between search accuracy, speed, and memory consumption for your application.

**My proposal**

3 possibilities:

- Simple one: add optional `ef` and `M` parameters to `similarity_search`

- More complex one: build a retry system into `similarity_search` that tries a range of `ef` and `M` values when the error occurs

- Very complex one: compute the optimal `ef` and `M` inside `similarity_search`
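The second option could look roughly like the sketch below. It is pure Python and assumes a hypothetical `query_fn(ef)` callable that runs the search with a given query-time `ef` and raises `RuntimeError` with this message when it fails. Only `ef` is retried: in hnswlib, `M` is fixed when the index is built, while `ef` can be changed at query time (`Index.set_ef`). `search_with_ef_retry` and the default `ef` values are illustrative, not part of the Chroma or LangChain API.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")

# Substring of the hnswlib message (including its "contigious" spelling)
HNSW_ERROR = "Cannot return the results in a contigious 2D array"


def search_with_ef_retry(
    query_fn: Callable[[int], T],
    ef_values: Iterable[int] = (10, 50, 100, 200, 400),
) -> T:
    """Call query_fn(ef) with increasing ef until the HNSW error stops occurring."""
    last_error: Optional[RuntimeError] = None
    for ef in ef_values:
        try:
            return query_fn(ef)
        except RuntimeError as error:
            if HNSW_ERROR not in str(error):
                raise  # unrelated error: do not swallow it
            last_error = error
    if last_error is None:
        raise ValueError("ef_values must not be empty")
    # Every ef value failed: surface the last HNSW error to the caller
    raise last_error
```

In a real wrapper, `query_fn` would set `ef` on the underlying index before querying; the retry loop itself stays independent of the vector store.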

| Chroma DB : Cannot return the results in a contiguous 2D array | https://api.github.com/repos/langchain-ai/langchain/issues/3665/comments | 5 | 2023-04-27T16:44:01Z | 2024-06-27T09:44:47Z | https://github.com/langchain-ai/langchain/issues/3665 | 1,687,201,707 | 3,665 |

[

"hwchase17",

"langchain"

]

| Got the following error when running today:

```
File "venv/lib/python3.11/site-packages/langchain/__init__.py", line 6, in <module>

from langchain.agents import MRKLChain, ReActChain, SelfAskWithSearchChain

File "venv/lib/python3.11/site-packages/langchain/agents/__init__.py", line 2, in <module>

from langchain.agents.agent import (

File "venv/lib/python3.11/site-packages/langchain/agents/agent.py", line 17, in <module>

from langchain.chains.base import Chain

File "venv/lib/python3.11/site-packages/langchain/chains/__init__.py", line 2, in <module>

from langchain.chains.api.base import APIChain

File "venv/lib/python3.11/site-packages/langchain/chains/api/base.py", line 8, in <module>

from langchain.chains.api.prompt import API_RESPONSE_PROMPT, API_URL_PROMPT

File "venv/lib/python3.11/site-packages/langchain/chains/api/prompt.py", line 2, in <module>

from langchain.prompts.prompt import PromptTemplate

File "venv/lib/python3.11/site-packages/langchain/prompts/__init__.py", line 14, in <module>

from langchain.prompts.loading import load_prompt

File "venv/lib/python3.11/site-packages/langchain/prompts/loading.py", line 14, in <module>

from langchain.utilities.loading import try_load_from_hub

File "venv/lib/python3.11/site-packages/langchain/utilities/__init__.py", line 5, in <module>

from langchain.utilities.bash import BashProcess

File "venv/lib/python3.11/site-packages/langchain/utilities/bash.py", line 7, in <module>

import pexpect

ModuleNotFoundError: No module named 'pexpect'

```

Does this need to be added to the project dependencies? | import error when importing `from langchain import OpenAI` on 0.0.151 | https://api.github.com/repos/langchain-ai/langchain/issues/3664/comments | 21 | 2023-04-27T16:24:30Z | 2023-04-28T17:54:02Z | https://github.com/langchain-ai/langchain/issues/3664 | 1,687,175,750 | 3,664 |

[

"hwchase17",

"langchain"

]

| When I use a different embedding model, the vector dimension is always wrong, so I replaced `ADA_TOKEN_COUNT` with `None`.

The number of dimensions is then computed automatically the first time an embedding model is used.

I tested this with 'GanymedeNil/text2vec-large-chinese' and it worked.

So I changed this:

`embedding: Vector = sqlalchemy.Column(Vector(ADA_TOKEN_COUNT))`

to this:

`embedding: Vector = sqlalchemy.Column(Vector(None))`
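An alternative to an unconstrained column is to size it from the model itself. A minimal sketch, assuming the embeddings object follows LangChain's `Embeddings` interface (`embed_query`); `infer_embedding_dimension` and the probe string are illustrative. pgvector indexes may require a fixed dimension, so `Vector(None)` could limit indexing later. Because the ORM model in the pgvector module is defined at import time, using a computed size would also mean building that column after the embedding model is known.

```python
from typing import Callable, List


def infer_embedding_dimension(embed_query: Callable[[str], List[float]]) -> int:
    """Embed a short probe text once and return the vector length."""
    vector = embed_query("dimension probe")
    if not vector:
        raise ValueError("embedding model returned an empty vector")
    return len(vector)


# Usage (assumes `embeddings` is a LangChain Embeddings instance and pgvector's
# SQLAlchemy `Vector` type is imported):
# dim = infer_embedding_dimension(embeddings.embed_query)
# embedding: Vector = sqlalchemy.Column(Vector(dim))
```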

| pgvector embedding length error | https://api.github.com/repos/langchain-ai/langchain/issues/3660/comments | 3 | 2023-04-27T15:57:33Z | 2023-10-07T16:07:39Z | https://github.com/langchain-ai/langchain/issues/3660 | 1,687,134,800 | 3,660 |

[

"hwchase17",

"langchain"

]

| I have no idea what's wrong: I just installed LangChain and ran the code below, and this error popped up. Any ideas?

```python

from langchain.document_loaders import UnstructuredPDFLoader, OnlinePDFLoader, UnstructuredImageLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter
```

```
---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

<ipython-input-2-756b21b77eab> in <module>

----> 1 from langchain.document_loaders import UnstructuredPDFLoader, OnlinePDFLoader, UnstructuredImageLoader

2 from langchain.text_splitter import RecursiveCharacterTextSplitter

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/__init__.py in <module>

4 from typing import Optional

5

----> 6 from langchain.agents import MRKLChain, ReActChain, SelfAskWithSearchChain

7 from langchain.cache import BaseCache

8 from langchain.callbacks import (

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/agents/__init__.py in <module>

1 """Interface for agents."""

----> 2 from langchain.agents.agent import (

3 Agent,

4 AgentExecutor,

5 AgentOutputParser,

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/agents/agent.py in <module>

15 from langchain.agents.tools import InvalidTool

16 from langchain.callbacks.base import BaseCallbackManager

---> 17 from langchain.chains.base import Chain

18 from langchain.chains.llm import LLMChain

19 from langchain.input import get_color_mapping

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/chains/__init__.py in <module>

1 """Chains are easily reusable components which can be linked together."""

----> 2 from langchain.chains.api.base import APIChain

3 from langchain.chains.api.openapi.chain import OpenAPIEndpointChain

4 from langchain.chains.combine_documents.base import AnalyzeDocumentChain

5 from langchain.chains.constitutional_ai.base import ConstitutionalChain

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/chains/api/base.py in <module>

6 from pydantic import Field, root_validator

7

----> 8 from langchain.chains.api.prompt import API_RESPONSE_PROMPT, API_URL_PROMPT

9 from langchain.chains.base import Chain

10 from langchain.chains.llm import LLMChain

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/chains/api/prompt.py in <module>

1 # flake8: noqa

----> 2 from langchain.prompts.prompt import PromptTemplate

3

4 API_URL_PROMPT_TEMPLATE = """You are given the below API Documentation:

5 {api_docs}

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/prompts/__init__.py in <module>

1 """Prompt template classes."""

2 from langchain.prompts.base import BasePromptTemplate, StringPromptTemplate

----> 3 from langchain.prompts.chat import (

4 AIMessagePromptTemplate,

5 BaseChatPromptTemplate,

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/prompts/chat.py in <module>

8 from pydantic import BaseModel, Field

9

---> 10 from langchain.memory.buffer import get_buffer_string

11 from langchain.prompts.base import BasePromptTemplate, StringPromptTemplate

12 from langchain.prompts.prompt import PromptTemplate

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/memory/__init__.py in <module>

21 from langchain.memory.summary_buffer import ConversationSummaryBufferMemory

22 from langchain.memory.token_buffer import ConversationTokenBufferMemory

---> 23 from langchain.memory.vectorstore import VectorStoreRetrieverMemory

24

25 __all__ = [

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/memory/vectorstore.py in <module>

8 from langchain.memory.utils import get_prompt_input_key

9 from langchain.schema import Document

---> 10 from langchain.vectorstores.base import VectorStoreRetriever

11

12

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/vectorstores/__init__.py in <module>

1 """Wrappers on top of vector stores."""

----> 2 from langchain.vectorstores.analyticdb import AnalyticDB

3 from langchain.vectorstores.annoy import Annoy

4 from langchain.vectorstores.atlas import AtlasDB

5 from langchain.vectorstores.base import VectorStore

/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/langchain/vectorstores/analyticdb.py in <module>

9 from sqlalchemy import REAL, Index

10 from sqlalchemy.dialects.postgresql import ARRAY, JSON, UUID

---> 11 from sqlalchemy.orm import Mapped, Session, declarative_base, relationship

12 from sqlalchemy.sql.expression import func

13

ImportError: cannot import name 'Mapped' from 'sqlalchemy.orm' (/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/sqlalchemy/orm/__init__.py)

``` | ImportError: cannot import name 'Mapped' from 'sqlalchemy.orm' (/home/nvme2/kunzhong/anaconda3/lib/python3.8/site-packages/sqlalchemy/orm/__init__.py) | https://api.github.com/repos/langchain-ai/langchain/issues/3655/comments | 6 | 2023-04-27T14:55:35Z | 2023-09-24T16:07:06Z | https://github.com/langchain-ai/langchain/issues/3655 | 1,687,033,336 | 3,655 |

[

"hwchase17",

"langchain"

]

|

If the returned text is not a `str`, the error contains no helpful information. | logging Generation text type error | https://api.github.com/repos/langchain-ai/langchain/issues/3654/comments | 3 | 2023-04-27T14:48:41Z | 2023-09-10T16:26:05Z | https://github.com/langchain-ai/langchain/issues/3654 | 1,687,020,237 | 3,654 |

[

"hwchase17",

"langchain"

]

| Hi there,

I'm using LangChain with the Azure OpenAI API. On top of that, I'm trying to use the SQL agent to run queries against a PostgreSQL (15.2) table. In many cases it works fine, but once in a while I get this error:

```

Must provide an 'engine' or 'deployment_id' parameter to create a <class 'openai.api_resources.completion.Completion'>

```

The llm instance gets initiated as:

```

llm = AzureOpenAI(deployment_name=settings.OPENAI_ENGINE, model_name="code-davinci-002")

```

Here's an example of the Agent output:

```

....

> Entering new AgentExecutor chain...

Action: list_tables_sql_db

Action Input: ""

Observation: app_organisationadvertiser, app_transaction, app_publisher, app_basketproduct

Thought: I need to query the app_organisationadvertiser table to get the list of brands

Action: query_sql_db

Action Input: SELECT name FROM app_organisationadvertiser LIMIT 10

Observation: [('Your brand nr 1',)]

Thought: I should check my query before executing it

Action: query_checker_sql_db

Action Input: SELECT name FROM app_organisationadvertiser LIMIT 10

```

The final query looks good and is a valid SQL query, but the agent returns an exception with the error as described above.

Any ideas how to deal with that?

| AzureOpenAi - Sql Agent: must provide an `engine` or `deployment_id` | https://api.github.com/repos/langchain-ai/langchain/issues/3649/comments | 4 | 2023-04-27T12:28:05Z | 2023-04-28T14:14:46Z | https://github.com/langchain-ai/langchain/issues/3649 | 1,686,748,777 | 3,649 |

[

"hwchase17",

"langchain"

]

| Hi, the text-embedding-ada-002 model provided by Azure OpenAI doesn't seem to be working for me. Any fixes? | Azure OpenAI Embeddings model not working | https://api.github.com/repos/langchain-ai/langchain/issues/3648/comments | 5 | 2023-04-27T12:03:40Z | 2023-05-04T03:53:17Z | https://github.com/langchain-ai/langchain/issues/3648 | 1,686,708,535 | 3,648 |

[

"hwchase17",

"langchain"

]

| When using my custom LLM, I get this warning:

Token indices sequence length is longer than the specified maximum sequence length for this model (1266 > 1024). Running this sequence through the model will result in indexing errors

My model supports up to 8k tokens.

Does anyone know what this means?

```python

loader = SeleniumURLLoader(urls=urls)

data = loader.load()

print(data)

llm = MyLLM()

chain = load_summarize_chain(llm, chain_type="map_reduce")

print(chain.run(data))

``` | Token indices sequence length is longer than the specified maximum sequence length for this model | https://api.github.com/repos/langchain-ai/langchain/issues/3647/comments | 2 | 2023-04-27T11:59:19Z | 2023-10-05T16:10:38Z | https://github.com/langchain-ai/langchain/issues/3647 | 1,686,700,270 | 3,647 |

[

"hwchase17",

"langchain"

]

| I am using the LangChain package to connect to a remote DB. The problem is that creating the SQLDatabase object takes a lot of time (sometimes more than 3 minutes). To shorten that, I specify that only one table should be loaded, but it still takes up to a minute. Here is the code:

```python

from langchain import OpenAI

from langchain.sql_database import SQLDatabase

from sqlalchemy import create_engine

# already loaded environment vars

llm = OpenAI(temperature=0)

engine = create_engine("postgresql+psycopg2://{user}:{passwd}@{host}:{port}/chatdatabase")

include_tables=['table_1']

db = SQLDatabase(engine, include_tables=include_tables)

...

```

As the documentation says, LangChain uses SQLAlchemy in the background to make connections and load tables, so I tried making a connection with pure SQLAlchemy, without LangChain:

```python

from sqlalchemy import create_engine, text

engine = create_engine("postgresql+psycopg2://{user}:{passwd}@{host}:{port}/chatdatabase")

with engine.connect() as con:
    # SQLAlchemy 2.0 no longer accepts plain SQL strings; text() works on 1.4 and 2.0
    rs = con.execute(text("select * from table_1 limit 10"))
    for row in rs:
        print(row)

```

Surprisingly, it takes just a few seconds.

Is there any way, or any documentation I could read (I've searched without luck), to make this process faster? | Langchain connection to remote DB takes a lot of time | https://api.github.com/repos/langchain-ai/langchain/issues/3645/comments | 27 | 2023-04-27T11:35:12Z | 2024-07-30T09:27:42Z | https://github.com/langchain-ai/langchain/issues/3645 | 1,686,665,722 | 3,645 |

[

"hwchase17",

"langchain"

]

| I think I have found an issue with using ChatVectorDBChain together with HuggingFacePipeline that uses Hugging Face Accelerate.

First, I successfully load and use a ~10GB model pipeline on an ~8GB GPU (setting it to use only ~5GB by specifying `device_map` and `max_memory`), and initialize the vectorstore:

```python

from transformers import pipeline

pipe = pipeline(model='declare-lab/flan-alpaca-xl', device_map='auto', model_kwargs={'max_memory': {0: "5GiB", "cpu": "20GiB"}})

pipe("How are you?")

# [{'generated_text': "I'm doing well. I'm doing well, thank you. How about you?"}]

import faiss

import getpass

import os

from langchain.vectorstores.faiss import FAISS

from langchain.text_splitter import CharacterTextSplitter

from langchain.chains import ChatVectorDBChain

from langchain import HuggingFaceHub, HuggingFacePipeline

from langchain.embeddings import HuggingFaceEmbeddings

model_name = "sentence-transformers/all-mpnet-base-v2"

embeddings = HuggingFaceEmbeddings(model_name=model_name)

!nvidia-smi

# Thu Apr 27 10:14:26 2023

# +-----------------------------------------------------------------------------+

# | NVIDIA-SMI 510.73.05 Driver Version: 510.73.05 CUDA Version: 11.6 |

# |-------------------------------+----------------------+----------------------+

# | GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

# | Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

# | | | MIG M. |

# |===============================+======================+======================|

# | 0 Quadro RTX 4000 Off | 00000000:00:05.0 Off | N/A |

# | 30% 47C P0 33W / 125W | 5880MiB / 8192MiB | 0% Default |

# | | | N/A |

# +-------------------------------+----------------------+----------------------+

# +-----------------------------------------------------------------------------+

# | Processes: |

# | GPU GI CI PID Type Process name GPU Memory |

# | ID ID Usage |

# |=============================================================================|

# +-----------------------------------------------------------------------------+

with open('data/made-up-story.txt') as f:

text = f.read()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=20)

texts = text_splitter.split_text(text)

vectorstore = FAISS.from_texts(texts, embeddings)

```

So far so good. The issue arises when I try to load ChatVectorDBChain:

```python

llm = HuggingFacePipeline(pipeline=pipe)

qa = ChatVectorDBChain.from_llm(llm, vectorstore) # Produces RuntimeError: CUDA out of memory.

```

Full output:

```

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Input In [9], in <cell line: 4>()

1 from transformers import pipeline

3 llm = HuggingFacePipeline(pipeline=pipe)

----> 4 qa = ChatVectorDBChain.from_llm(llm, vectorstore)

File /usr/local/lib/python3.9/dist-packages/langchain/chains/conversational_retrieval/base.py:240, in ChatVectorDBChain.from_llm(cls, llm, vectorstore, condense_question_prompt, chain_type, combine_docs_chain_kwargs, **kwargs)

238 """Load chain from LLM."""

239 combine_docs_chain_kwargs = combine_docs_chain_kwargs or {}

--> 240 doc_chain = load_qa_chain(

241 llm,

242 chain_type=chain_type,

243 **combine_docs_chain_kwargs,

244 )

245 condense_question_chain = LLMChain(llm=llm, prompt=condense_question_prompt)

246 return cls(

247 vectorstore=vectorstore,

248 combine_docs_chain=doc_chain,

249 question_generator=condense_question_chain,

250 **kwargs,

251 )

File /usr/local/lib/python3.9/dist-packages/langchain/chains/question_answering/__init__.py:218, in load_qa_chain(llm, chain_type, verbose, callback_manager, **kwargs)

213 if chain_type not in loader_mapping:

214 raise ValueError(

215 f"Got unsupported chain type: {chain_type}. "

216 f"Should be one of {loader_mapping.keys()}"

217 )

--> 218 return loader_mapping[chain_type](

219 llm, verbose=verbose, callback_manager=callback_manager, **kwargs

220 )

File /usr/local/lib/python3.9/dist-packages/langchain/chains/question_answering/__init__.py:67, in _load_stuff_chain(llm, prompt, document_variable_name, verbose, callback_manager, **kwargs)

63 llm_chain = LLMChain(

64 llm=llm, prompt=_prompt, verbose=verbose, callback_manager=callback_manager

65 )

66 # TODO: document prompt

---> 67 return StuffDocumentsChain(

68 llm_chain=llm_chain,

69 document_variable_name=document_variable_name,

70 verbose=verbose,

71 callback_manager=callback_manager,

72 **kwargs,

73 )

File /usr/local/lib/python3.9/dist-packages/pydantic/main.py:339, in pydantic.main.BaseModel.__init__()

File /usr/local/lib/python3.9/dist-packages/pydantic/main.py:1038, in pydantic.main.validate_model()

File /usr/local/lib/python3.9/dist-packages/pydantic/fields.py:857, in pydantic.fields.ModelField.validate()

File /usr/local/lib/python3.9/dist-packages/pydantic/fields.py:1074, in pydantic.fields.ModelField._validate_singleton()

File /usr/local/lib/python3.9/dist-packages/pydantic/fields.py:1121, in pydantic.fields.ModelField._apply_validators()

File /usr/local/lib/python3.9/dist-packages/pydantic/class_validators.py:313, in pydantic.class_validators._generic_validator_basic.lambda12()

File /usr/local/lib/python3.9/dist-packages/pydantic/main.py:679, in pydantic.main.BaseModel.validate()

File /usr/local/lib/python3.9/dist-packages/pydantic/main.py:605, in pydantic.main.BaseModel._copy_and_set_values()

File /usr/lib/python3.9/copy.py:146, in deepcopy(x, memo, _nil)

144 copier = _deepcopy_dispatch.get(cls)

145 if copier is not None:

--> 146 y = copier(x, memo)

147 else:

148 if issubclass(cls, type):

File /usr/lib/python3.9/copy.py:230, in _deepcopy_dict(x, memo, deepcopy)

228 memo[id(x)] = y

229 for key, value in x.items():

--> 230 y[deepcopy(key, memo)] = deepcopy(value, memo)

231 return y

File /usr/lib/python3.9/copy.py:172, in deepcopy(x, memo, _nil)

170 y = x

171 else:

--> 172 y = _reconstruct(x, memo, *rv)

174 # If is its own copy, don't memoize.

175 if y is not x:

File /usr/lib/python3.9/copy.py:270, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

268 if state is not None:

269 if deep:

--> 270 state = deepcopy(state, memo)

271 if hasattr(y, '__setstate__'):

272 y.__setstate__(state)

File /usr/lib/python3.9/copy.py:146, in deepcopy(x, memo, _nil)

144 copier = _deepcopy_dispatch.get(cls)

145 if copier is not None:

--> 146 y = copier(x, memo)

147 else:

148 if issubclass(cls, type):

File /usr/lib/python3.9/copy.py:230, in _deepcopy_dict(x, memo, deepcopy)

228 memo[id(x)] = y

229 for key, value in x.items():

--> 230 y[deepcopy(key, memo)] = deepcopy(value, memo)

231 return y

[... skipping similar frames: _deepcopy_dict at line 230 (1 times), deepcopy at line 146 (1 times)]

File /usr/lib/python3.9/copy.py:172, in deepcopy(x, memo, _nil)

170 y = x

171 else:

--> 172 y = _reconstruct(x, memo, *rv)

174 # If is its own copy, don't memoize.

175 if y is not x:

File /usr/lib/python3.9/copy.py:270, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

268 if state is not None:

269 if deep:

--> 270 state = deepcopy(state, memo)

271 if hasattr(y, '__setstate__'):

272 y.__setstate__(state)

[... skipping similar frames: _deepcopy_dict at line 230 (2 times), deepcopy at line 146 (2 times), deepcopy at line 172 (2 times), _reconstruct at line 270 (1 times)]

File /usr/lib/python3.9/copy.py:296, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

294 for key, value in dictiter:

295 key = deepcopy(key, memo)

--> 296 value = deepcopy(value, memo)

297 y[key] = value

298 else:

[... skipping similar frames: deepcopy at line 172 (2 times), _deepcopy_dict at line 230 (1 times), _reconstruct at line 270 (1 times), deepcopy at line 146 (1 times)]

File /usr/lib/python3.9/copy.py:296, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

294 for key, value in dictiter:

295 key = deepcopy(key, memo)

--> 296 value = deepcopy(value, memo)

297 y[key] = value

298 else:

[... skipping similar frames: deepcopy at line 172 (11 times), _deepcopy_dict at line 230 (5 times), _reconstruct at line 270 (5 times), _reconstruct at line 296 (5 times), deepcopy at line 146 (5 times)]

File /usr/lib/python3.9/copy.py:270, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

268 if state is not None:

269 if deep:

--> 270 state = deepcopy(state, memo)

271 if hasattr(y, '__setstate__'):

272 y.__setstate__(state)

File /usr/lib/python3.9/copy.py:146, in deepcopy(x, memo, _nil)

144 copier = _deepcopy_dispatch.get(cls)

145 if copier is not None:

--> 146 y = copier(x, memo)

147 else:

148 if issubclass(cls, type):

File /usr/lib/python3.9/copy.py:230, in _deepcopy_dict(x, memo, deepcopy)

228 memo[id(x)] = y

229 for key, value in x.items():

--> 230 y[deepcopy(key, memo)] = deepcopy(value, memo)

231 return y

File /usr/lib/python3.9/copy.py:172, in deepcopy(x, memo, _nil)

170 y = x

171 else:

--> 172 y = _reconstruct(x, memo, *rv)

174 # If is its own copy, don't memoize.

175 if y is not x:

File /usr/lib/python3.9/copy.py:296, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)

294 for key, value in dictiter:

295 key = deepcopy(key, memo)

--> 296 value = deepcopy(value, memo)

297 y[key] = value

298 else:

File /usr/lib/python3.9/copy.py:153, in deepcopy(x, memo, _nil)

151 copier = getattr(x, "__deepcopy__", None)

152 if copier is not None:

--> 153 y = copier(memo)

154 else:

155 reductor = dispatch_table.get(cls)

File /usr/local/lib/python3.9/dist-packages/torch/nn/parameter.py:56, in Parameter.__deepcopy__(self, memo)

54 return memo[id(self)]

55 else:

---> 56 result = type(self)(self.data.clone(memory_format=torch.preserve_format), self.requires_grad)

57 memo[id(self)] = result

58 return result

RuntimeError: CUDA out of memory. Tried to allocate 40.00 MiB (GPU 0; 7.80 GiB total capacity; 6.82 GiB already allocated; 30.44 MiB free; 6.85 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

```

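It seems to me that LangChain is somehow trying to load a second copy of the (whole?) pipeline onto the GPU: the traceback ends in `copy.deepcopy` cloning a `torch.nn.Parameter` while pydantic validates the `StuffDocumentsChain`.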

Any help appreciated, thank you. | Issue with ChatVectorDBChain and Hugging Face Accelerate | https://api.github.com/repos/langchain-ai/langchain/issues/3642/comments | 1 | 2023-04-27T10:28:29Z | 2023-09-10T16:26:11Z | https://github.com/langchain-ai/langchain/issues/3642 | 1,686,567,730 | 3,642 |

[

"hwchase17",

"langchain"

]

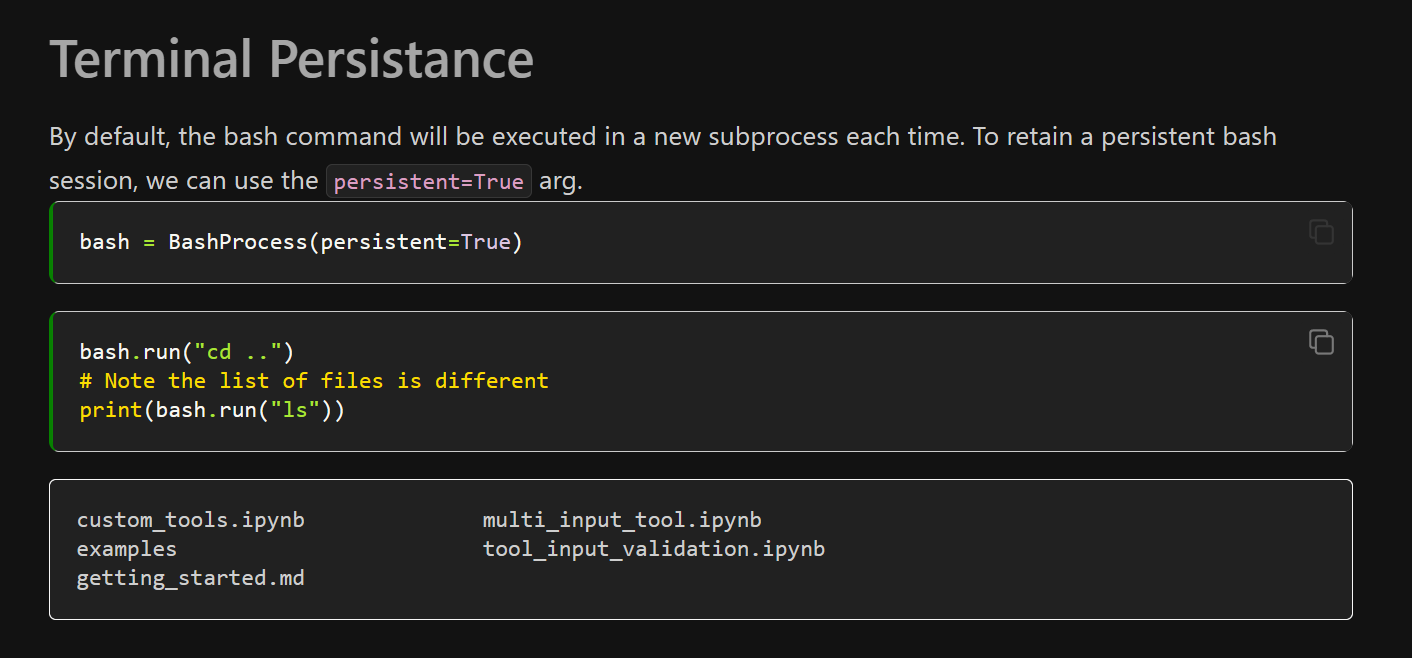

| I followed the instructions at https://python.langchain.com/en/latest/modules/agents/tools/examples/bash.html

But I get the error:

```

bash = BashProcess(persistent=True)

TypeError: BashProcess.__init__() got an unexpected keyword argument 'persistent'

```

The version of langchain is 0.0.150
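Documentation can describe a newer release than the one installed, so a quick check of whether the installed `BashProcess` accepts `persistent` may help. A minimal sketch using the standard library's `inspect.signature`; `accepts_keyword` is an illustrative helper, and a constructor that takes `**kwargs` is reported as accepting any keyword even if it rejects unknown names later.

```python
import inspect
from typing import Callable


def accepts_keyword(func: Callable, name: str) -> bool:
    """True if `func` (a function or class) can be called with keyword `name`."""
    try:
        signature = inspect.signature(func)
    except (TypeError, ValueError):
        return False  # signature cannot be introspected
    for param in signature.parameters.values():
        if param.kind is inspect.Parameter.VAR_KEYWORD:
            return True  # **kwargs accepts any keyword
        if param.name == name and param.kind in (
            inspect.Parameter.POSITIONAL_OR_KEYWORD,
            inspect.Parameter.KEYWORD_ONLY,
        ):
            return True
    return False


# Usage:
# from langchain.utilities import BashProcess
# print(accepts_keyword(BashProcess, "persistent"))
```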

| no 'persistent=True' tag | https://api.github.com/repos/langchain-ai/langchain/issues/3641/comments | 1 | 2023-04-27T09:42:23Z | 2023-04-27T19:08:03Z | https://github.com/langchain-ai/langchain/issues/3641 | 1,686,495,917 | 3,641 |

[

"hwchase17",

"langchain"

]

| I'm attempting to load some Documents and get a `TransformError` - could someone please point me in the right direction? Thanks!

I'm afraid the traceback doesn't mean much to me.

```python

db = DeepLake(dataset_path=deeplake_path, embedding_function=embeddings)

db.add_documents(texts)

```
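The traceback below shows `json.dumps` failing with `Circular reference detected` while DeepLake serializes the `metadata` tensor, which suggests that at least one document's metadata is not plain JSON. A minimal sketch for locating the offending documents with the standard library's `json` module; `find_unserializable_metadata` is an illustrative helper. DeepLake uses its own encoder (`HubJsonEncoder`), so plain `json.dumps` may also flag values DeepLake could handle.

```python
import json
from typing import Any, Dict, List, Tuple


def find_unserializable_metadata(
    metadatas: List[Dict[str, Any]],
) -> List[Tuple[int, str]]:
    """Return (index, error message) for each metadata dict json.dumps rejects."""
    problems: List[Tuple[int, str]] = []
    for index, metadata in enumerate(metadatas):
        try:
            json.dumps(metadata)
        except (TypeError, ValueError) as error:
            # ValueError: circular reference; TypeError: non-JSON type
            problems.append((index, str(error)))
    return problems


# Usage (assuming `texts` is the list of Documents passed to add_documents):
# print(find_unserializable_metadata([doc.metadata for doc in texts]))
```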

```

tensor htype shape dtype compression

------- ------- ------- ------- -------

embedding generic (0,) float32 None

ids text (0,) str None

metadata json (0,) str None

text text (0,) str None

Evaluating ingest: 0%| | 0/1 [00:10<?

Traceback (most recent call last):

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 1065, in extend

self._extend(samples, progressbar, pg_callback=pg_callback)

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 1001, in _extend

self._samples_to_chunks(

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 824, in _samples_to_chunks

num_samples_added = current_chunk.extend_if_has_space(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\chunk_compressed_chunk.py", line 50, in extend_if_has_space

return self.extend_if_has_space_byte_compression(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\chunk_compressed_chunk.py", line 233, in extend_if_has_space_byte_compression

serialized_sample, shape = self.serialize_sample(

^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\base_chunk.py", line 342, in serialize_sample

incoming_sample, shape = serialize_text(

^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\serialize.py", line 505, in serialize_text

incoming_sample, shape = text_to_bytes(incoming_sample, dtype, htype)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\serialize.py", line 458, in text_to_bytes

byts = json.dumps(sample, cls=HubJsonEncoder).encode()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\__init__.py", line 238, in dumps

**kw).encode(obj)

^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\encoder.py", line 200, in encode

chunks = self.iterencode(o, _one_shot=True)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\encoder.py", line 258, in iterencode

return _iterencode(o, 0)

^^^^^^^^^^^^^^^^^

ValueError: Circular reference detected

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\util\transform.py", line 220, in _transform_and_append_data_slice

transform_dataset.flush()

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\transform\transform_dataset.py", line 154, in flush

raise SampleAppendError(name) from e

deeplake.util.exceptions.SampleAppendError: Failed to append a sample to the tensor 'metadata'. See more details in the traceback.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 1065, in extend

self._extend(samples, progressbar, pg_callback=pg_callback)

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 1001, in _extend

self._samples_to_chunks(

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk_engine.py", line 824, in _samples_to_chunks

num_samples_added = current_chunk.extend_if_has_space(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\chunk_compressed_chunk.py", line 50, in extend_if_has_space

return self.extend_if_has_space_byte_compression(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\chunk_compressed_chunk.py", line 233, in extend_if_has_space_byte_compression

serialized_sample, shape = self.serialize_sample(

^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\chunk\base_chunk.py", line 342, in serialize_sample

incoming_sample, shape = serialize_text(

^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\serialize.py", line 505, in serialize_text

incoming_sample, shape = text_to_bytes(incoming_sample, dtype, htype)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\serialize.py", line 458, in text_to_bytes

byts = json.dumps(sample, cls=HubJsonEncoder).encode()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\__init__.py", line 238, in dumps

**kw).encode(obj)

^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\encoder.py", line 200, in encode

chunks = self.iterencode(o, _one_shot=True)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\charles\AppData\Local\Programs\Python\Python311\Lib\json\encoder.py", line 258, in iterencode

return _iterencode(o, 0)

^^^^^^^^^^^^^^^^^

ValueError: Circular reference detected

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\util\transform.py", line 177, in _handle_transform_error

transform_dataset.flush()

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\transform\transform_dataset.py", line 154, in flush

raise SampleAppendError(name) from e

deeplake.util.exceptions.SampleAppendError: Failed to append a sample to the tensor 'metadata'. See more details in the traceback.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\charles\Documents\GitHub\Chat-with-Github-Repo\venv\Lib\site-packages\deeplake\core\transform\transform.py", line 298, in eval

raise TransformError(

deeplake.util.exceptions.TransformError: Transform failed at index 0 of the input data. See traceback for more details.

``` | deeplake.util.exceptions.TransformError | https://api.github.com/repos/langchain-ai/langchain/issues/3640/comments | 7 | 2023-04-27T09:03:57Z | 2023-11-27T04:56:27Z | https://github.com/langchain-ai/langchain/issues/3640 | 1,686,435,981 | 3,640 |
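The traceback above bottoms out in `json.dumps` raising `ValueError: Circular reference detected` while serializing the `metadata` tensor, which suggests that at least one metadata value refers back to itself. A minimal, standard-library-only sketch, assuming the metadata is a plain dict: it reproduces the failure, then copies the metadata into JSON-safe primitives before ingestion. `sanitize_metadata` is a hypothetical helper, not part of Deep Lake or LangChain.

```python
import json
from typing import Any


def sanitize_metadata(value: Any, _seen: frozenset = frozenset()) -> Any:
    """Return a JSON-safe copy of `value`; self-references become a marker string."""
    if isinstance(value, (str, int, float, bool)) or value is None:
        return value
    if id(value) in _seen:
        # Break the cycle instead of recursing forever.
        return "<circular reference>"
    seen = _seen | {id(value)}
    if isinstance(value, dict):
        return {str(k): sanitize_metadata(v, seen) for k, v in value.items()}
    if isinstance(value, (list, tuple, set)):
        return [sanitize_metadata(v, seen) for v in value]
    # Fall back to the string form for anything json cannot encode natively.
    return str(value)


# Reproduce the failure: a metadata dict that contains itself.
metadata = {"source": "repo/README.md"}
metadata["self"] = metadata

try:
    json.dumps(metadata)
except ValueError as exc:
    print(exc)  # Circular reference detected

print(json.dumps(sanitize_metadata(metadata)))
```

Since the transform failed at index 0, inspecting the first document's metadata is probably the quickest way to find which value holds the self-reference.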

[

"hwchase17",

"langchain"

]

| Brief summary:

I need to solve multiple tasks in sequence (e.g., translate an input -> use it to answer a question -> translate the answer into a different language).

Previously, I was creating multiple LLMChain objects with different prompts and passing the output of one chain into the next.

Then I came across sequential chains and tried them.

I didn't find any significant reason to prefer one over the other. Moreover, sequential chains seem to be slower than just calling multiple LLMChains.

Am I missing anything? Could anyone elaborate on why sequential chains are needed?

Thanks!! | Sequential chains vs multiple LLMChains (Why prefer one over the other?) | https://api.github.com/repos/langchain-ai/langchain/issues/3638/comments | 5 | 2023-04-27T07:12:50Z | 2023-10-21T16:09:41Z | https://github.com/langchain-ai/langchain/issues/3638 | 1,686,256,733 | 3,638 |
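Conceptually, a sequential chain wraps the manual hand-off described in this issue into one object: each step declares which named inputs it reads and which output it writes, and the wrapper threads a shared dictionary through the steps. The plain-Python sketch below only illustrates that idea. `Step` and `run_sequence` are hypothetical names, the lambdas stand in for LLM calls, and this is not LangChain's `SequentialChain` implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """One stage: reads `input_keys` from the shared state and writes `output_key`."""
    input_keys: List[str]
    output_key: str
    fn: Callable[..., str]


def run_sequence(steps: List[Step], inputs: Dict[str, str]) -> Dict[str, str]:
    state = dict(inputs)
    for step in steps:
        missing = [k for k in step.input_keys if k not in state]
        if missing:
            raise KeyError(f"step {step.output_key!r} is missing inputs {missing}")
        state[step.output_key] = step.fn(**{k: state[k] for k in step.input_keys})
    return state


# Stand-ins for the three LLM calls: translate -> answer -> translate back.
steps = [
    Step(["text"], "english", lambda text: f"EN({text})"),
    Step(["english"], "answer", lambda english: f"ANSWER({english})"),
    Step(["answer", "target_lang"], "final", lambda answer, target_lang: f"{target_lang}({answer})"),
]

result = run_sequence(steps, {"text": "hola", "target_lang": "FR"})
print(result["final"])  # FR(ANSWER(EN(hola)))
```

In this sketch the wrapper makes exactly the same calls as chaining by hand; what it adds is named intermediate values, a missing-input check, and a single object to pass around.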

[

"hwchase17",

"langchain"

]

| The [docs](https://python.langchain.com/en/latest/modules/indexes/document_loaders/examples/googledrive.html) for GoogleDriveLoader say ``Currently, only Google Docs are supported``, but the [code](https://github.com/hwchase17/langchain/blob/8e10ac422e4e6b193fc35e1d64d7f0c5208faa8d/langchain/document_loaders/googledrive.py#L100) contains a function, ``_load_sheet_from_id``.

That function is only used for folder loading.

By accessing the _private_ method of the class directly, it is possible to load spreadsheets, and it works perfectly:

```

from langchain.document_loaders import GoogleDriveLoader

spreadsheet_id = "122tuu4r-yYng8Lj7XXXUgb-basdbk"

loader = GoogleDriveLoader(file_ids=[spreadsheet_id])

docs = loader._load_sheet_from_id(spreadsheet_id)

```

``_load_documents_from_ids`` probably needs a refactor to choose the loader based on the mimeType, as ``_load_documents_from_folder`` does. | Document Loaders: GoogleDriveLoader hidden option to load spread sheets | https://api.github.com/repos/langchain-ai/langchain/issues/3637/comments | 3 | 2023-04-27T06:07:09Z | 2024-02-07T16:30:28Z | https://github.com/langchain-ai/langchain/issues/3637 | 1,686,176,243 | 3,637 |
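A refactor along those lines could look up each file's mimeType and dispatch to the matching loader, as the folder path already does. The sketch below is hypothetical: `fetch_mime_type` and the loader callables are stand-ins rather than `GoogleDriveLoader` internals. Only the two MIME type strings are the standard Google Drive types for Docs and Sheets.

```python
from typing import Callable, Dict, List

GOOGLE_DOC = "application/vnd.google-apps.document"
GOOGLE_SHEET = "application/vnd.google-apps.spreadsheet"


def load_documents_from_ids(
    file_ids: List[str],
    fetch_mime_type: Callable[[str], str],
    loaders: Dict[str, Callable[[str], List[str]]],
) -> List[str]:
    """Dispatch each file id to the loader registered for its mimeType."""
    docs: List[str] = []
    for file_id in file_ids:
        mime_type = fetch_mime_type(file_id)
        loader = loaders.get(mime_type)
        if loader is None:
            raise ValueError(f"Unsupported mimeType {mime_type!r} for file {file_id}")
        # A sheet loader may return several documents (e.g. one per row),
        # so extend rather than append.
        docs.extend(loader(file_id))
    return docs


# Fake metadata and loaders so the sketch runs without Google credentials.
mime_types = {"doc-1": GOOGLE_DOC, "sheet-1": GOOGLE_SHEET}
loaders = {
    GOOGLE_DOC: lambda fid: [f"doc text of {fid}"],
    GOOGLE_SHEET: lambda fid: [f"{fid} row 1", f"{fid} row 2"],
}

print(load_documents_from_ids(["doc-1", "sheet-1"], mime_types.__getitem__, loaders))
# ['doc text of doc-1', 'sheet-1 row 1', 'sheet-1 row 2']
```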

[

"hwchase17",

"langchain"

]

| Hello all,

For the past few days I have been struggling to let an agent executor call reference a text file as a VectorStore, determine the best response, and then respond. When the agent eventually calls the VectorDBQAChain, it throws the error below, stating that the `__run` property cannot be redefined.

Any input here is much appreciated.

Even a basic setup throws an error stating:

```

.\projectPath\node_modules\langchain\dist\chains\base.cjs:64

Object.defineProperty(outputValues, index_js_1.RUN_KEY, {

^

TypeError: Cannot redefine property: __run

at Function.defineProperty (<anonymous>)

at VectorDBQAChain.call (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\chains\base.cjs:64:16)

at process.processTicksAndRejections (node:internal/process/task_queues:95:5)

at async VectorDBQAChain.run (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\chains\base.cjs:29:30)

at async ChainTool.call (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\tools\base.cjs:23:22)

at async C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\agents\executor.cjs:101:23

at async Promise.all (index 0)

at async AgentExecutor._call (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\agents\executor.cjs:97:30)

at async AgentExecutor.call (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\node_modules\langchain\dist\chains\base.cjs:53:28)

at async run (C:\Users\Tyler\Documents\RMMZ\GPTales-InteractiveNPC\Game\js\plugins\GPTales\example.js:79:19)

Node.js v19.7.0

```

Code:

```

const run = async () => {

console.log("Starting.");

console.log(process.env.OPENAI_API_KEY);

process.env.LANGCHAIN_HANDLER = "langchain";

const gameLorePath = path.join(__dirname, "yuri.txt");

const text = fs.readFileSync(gameLorePath, "utf8");

const textSplitter = new RecursiveCharacterTextSplitter({

chunkSize: 1000,

});

const docs = await textSplitter.createDocuments([text]);

const vectorStore = await HNSWLib.fromDocuments(docs, new OpenAIEmbeddings());

const model = new ChatOpenAI({

temperature: 0,

openAIApiKey: process.env.OPENAI_API_KEY, // ChatOpenAI's option is openAIApiKey, not api_key

});

const chain = VectorDBQAChain.fromLLM(model, vectorStore);

const characterContextTool = new ChainTool({

name: "character-contextTool-tool",

description:

"Context for the character - used for querying context of lore(bio, personality, appearance, etc), characters, events, environments, essentially all aspects of the character and their history.",

chain: chain,

});

const tools = [new Calculator(), characterContextTool];

// Passing "chat-conversational-react-description" as the agent type

// automatically creates and uses BufferMemory with the executor.

// If you would like to override this, you can pass in a custom

// memory option, but the memoryKey set on it must be "chat_history".

const executor = await initializeAgentExecutorWithOptions(tools, model, {

agentType: "chat-conversational-react-description",

verbose: true,

});

console.log("Loaded agent.");

const input0 =

"hi, i am bob. use the character context tool to best decide how to respond considering all facets of the character.";

const result0 = await executor.call({ input: input0 });

console.log(`Got output ${result0.output}`);

const input1 = "whats your name?";

const result1 = await executor.call({ input: input1 });

console.log(`Got output ${result1.output}`);

};

run();

``` | Unable to call VectorDBQAChain from Executor | https://api.github.com/repos/langchain-ai/langchain/issues/3633/comments | 2 | 2023-04-27T05:25:14Z | 2023-04-27T17:40:28Z | https://github.com/langchain-ai/langchain/issues/3633 | 1,686,139,134 | 3,633 |

[

"hwchase17",

"langchain"

]

| I am facing an issue when using the embeddings model offered by Azure OpenAI. Please help. Here's the code below; assume the Azure resource name is azure-resource. This issue only arises with the text-embeddings-ada-002 model, nothing else.

```

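import os

from langchain.document_loaders import PyPDFLoader
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import FAISS

os.environ["OPENAI_API_KEY"] = API_KEY

# Loading the document using PyPDFLoader
loader = PyPDFLoader('xxx')

# Splitting the document into chunks
pages = loader.load_and_split()

# Creating your embeddings instance
# (the `model` value is what gets mapped to a tiktoken tokeniser, hence the KeyError below)
embeddings = OpenAIEmbeddings(
    model="azure-resource",
)

# Creating your vector db
db = FAISS.from_documents(pages, embeddings)

query = "some-query"
docs = db.similarity_search(query)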

```

My error:

``KeyError: 'Could not automatically map azure-resource to a tokeniser. Please use `tiktok.get_encoding` to explicitly get the tokeniser you expect.'`` | KeyError: 'Could not automatically map azure-resource to a tokeniser. Arising when using the text-embeddings-ada-002 model. | https://api.github.com/repos/langchain-ai/langchain/issues/3632/comments | 0 | 2023-04-27T05:23:59Z | 2023-04-30T14:54:06Z | https://github.com/langchain-ai/langchain/issues/3632 | 1,686,138,122 | 3,632 |

[

"hwchase17",

"langchain"

]

| Using MMR with Chroma currently does not work because the `max_marginal_relevance_search_by_vector` method calls `self.__query_collection` with the parameter `include`, but `include` is not an accepted parameter of `__query_collection`. This appears to be a regression introduced in #3372.

Excerpt from the `max_marginal_relevance_search_by_vector` method:

```

results = self.__query_collection(
    query_embeddings=embedding,
    n_results=fetch_k,
    where=filter,
    include=["metadatas", "documents", "distances", "embeddings"],
)

```

`__query_collection` does not accept `include`:

```

def __query_collection(
    self,
    query_texts: Optional[List[str]] = None,
    query_embeddings: Optional[List[List[float]]] = None,
    n_results: int = 4,
    where: Optional[Dict[str, str]] = None,
) -> List[Document]:

```

This results in an unexpected keyword argument error (a `TypeError`).

The short-term fix is to call `self._collection.query` instead of `self.__query_collection` in `max_marginal_relevance_search_by_vector`, although that loses the protection against the user requesting more records than exist in the store.

```

results = self._collection.query(
    query_embeddings=embedding,
    n_results=fetch_k,
    where=filter,
    include=["metadatas", "documents", "distances", "embeddings"],
)

``` | Chroma.py max_marginal_relevance_search_by_vector method currently broken | https://api.github.com/repos/langchain-ai/langchain/issues/3628/comments | 4 | 2023-04-27T00:21:42Z | 2023-05-01T17:47:17Z | https://github.com/langchain-ai/langchain/issues/3628 | 1,685,907,595 | 3,628 |
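For reference, maximal marginal relevance (MMR) re-ranks the `fetch_k` candidates returned by the query: it repeatedly picks the candidate that maximizes `lambda_mult * sim(query, doc) - (1 - lambda_mult) * max sim(doc, already_selected)`, which is why the candidates' `embeddings` have to be requested via `include`. The standard-library sketch below shows that selection loop plus a clamp of `fetch_k` to the collection size (the protection mentioned in this issue). All function and parameter names here are illustrative, not Chroma's or LangChain's API.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def clamp_fetch_k(fetch_k: int, collection_count: int) -> int:
    """Never ask the store for more results than it holds."""
    return max(0, min(fetch_k, collection_count))


def mmr_select(
    query: Sequence[float],
    candidates: List[Sequence[float]],
    k: int,
    lambda_mult: float = 0.5,
) -> List[int]:
    """Return indices of up to k candidates, balancing relevance and diversity."""
    relevance = [cosine(query, c) for c in candidates]
    selected: List[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            # Penalize similarity to anything already picked.
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.5]
candidates = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # the first two are duplicates
print(clamp_fetch_k(20, len(candidates)))  # 3
print(mmr_select(query, candidates, k=2))  # [0, 2]
```

Plain top-2 by relevance would return the duplicate pair `[0, 1]`; MMR skips the duplicate in favour of the more diverse candidate.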

[

"hwchase17",

"langchain"

]

| Hi, I'm using Deep Lake with the ConversationalRetrievalChain (just like in the brand-new [code understanding](https://python.langchain.com/en/latest/use_cases/code/code-analysis-deeplake.html#prepare-data) guide) and I encounter the following error when calling:

`answer = chain({"question": user_input, "chat_history": chat_history['history']})`

error:

```

File "C:\Users\sbene\Projects\GitChat\src\chatbot.py", line 446, in generate_answer

answer = chain({"question": user_input, "chat_history": chat_history['history']})

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\chains\base.py", line 116, in __call__

raise e

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\chains\base.py", line 113, in __call__

outputs = self._call(inputs)

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\chains\conversational_retrieval\base.py", line 95, in _call

docs = self._get_docs(new_question, inputs)

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\chains\conversational_retrieval\base.py", line 162, in _get_docs

docs = self.retriever.get_relevant_documents(question)

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\vectorstores\base.py", line 279, in get_relevant_documents

docs = self.vectorstore.similarity_search(query, **self.search_kwargs)

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\vectorstores\deeplake.py", line 350, in similarity_search

return self.search(query=query, k=k, **kwargs)

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\vectorstores\deeplake.py", line 294, in search

indices, scores = vector_search(

File "C:\Users\sbene\miniconda3\envs\gitchat\lib\site-packages\langchain\vectorstores\deeplake.py", line 51, in vector_search

nearest_indices[::-1][:k] if distance_metric in ["cos"] else nearest_indices[:k]

``` | Bug: deeplake cosine distance search error | https://api.github.com/repos/langchain-ai/langchain/issues/3623/comments | 1 | 2023-04-26T23:27:06Z | 2023-09-10T16:26:16Z | https://github.com/langchain-ai/langchain/issues/3623 | 1,685,870,712 | 3,623 |
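The failing line encodes a sort-order convention: cosine is a similarity, where larger means closer, so the ascending ordering is reversed, while L2 is a distance, where smaller means closer. A standard-library sketch of that convention, assuming `distance_metric` takes the value `"cos"` as in the excerpt and `"L2"` for Euclidean distance (the `"L2"` spelling is an assumption). It does not reproduce Deep Lake's actual error, which is cut off in the traceback above.

```python
import math
from typing import List, Sequence, Tuple


def score(a: Sequence[float], b: Sequence[float], distance_metric: str) -> float:
    if distance_metric == "cos":
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.hypot(*a) * math.hypot(*b))  # similarity: higher is closer
    if distance_metric == "L2":
        return math.dist(a, b)  # distance: lower is closer
    raise ValueError(f"unknown distance_metric {distance_metric!r}")


def vector_search(
    query: Sequence[float], embeddings: List[Sequence[float]], k: int, distance_metric: str
) -> Tuple[List[int], List[float]]:
    scores = [score(query, e, distance_metric) for e in embeddings]
    ascending = sorted(range(len(scores)), key=scores.__getitem__)
    # Same idea as the excerpt: reverse for similarities, keep the order for distances.
    nearest = ascending[::-1][:k] if distance_metric == "cos" else ascending[:k]
    return nearest, [scores[i] for i in nearest]


embeddings = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(vector_search([1.0, 0.1], embeddings, k=2, distance_metric="cos")[0])  # [0, 2]
print(vector_search([1.0, 0.1], embeddings, k=2, distance_metric="L2")[0])   # [0, 2]
```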

[

"hwchase17",

"langchain"

]

| It would be good to get more documentation and examples for models other than OpenAI. Currently the docs are heavily skewed towards OpenAI, and some areas, such as conversation, only offer an OpenAI option.

Thanks | Non OpenAI models | https://api.github.com/repos/langchain-ai/langchain/issues/3622/comments | 2 | 2023-04-26T23:06:51Z | 2023-09-17T17:22:03Z | https://github.com/langchain-ai/langchain/issues/3622 | 1,685,858,023 | 3,622 |

[

"hwchase17",

"langchain"

]

| I am having issues using ConversationalRetrievalChain to chat with a CSV file: it only recognizes the first four rows of the file.

```

from langchain.chains import ConversationalRetrievalChain
from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import CSVLoader
from langchain.embeddings import OpenAIEmbeddings
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate
from langchain.vectorstores import FAISS

loader = CSVLoader(file_path=filepath, encoding="utf-8")
data = loader.load()

embeddings = OpenAIEmbeddings(openai_api_key=openai_api_key)
vectorstore = FAISS.from_documents(data, embeddings)

_template = """Given the following conversation and a follow-up question, rephrase the follow-up question to be a standalone question.
Chat History:
{chat_history}
Follow-up entry: {question}
Standalone question:"""
CONDENSE_QUESTION_PROMPT = PromptTemplate.from_template(_template)

qa_template = """You are an AI conversational assistant to answer questions based on a context.
You are given data from a csv file and a question, you must help the user find the information they need.
Your answers should be friendly, in the same language.
question: {question}
=========
context: {context}
=======
"""
QA_PROMPT = PromptTemplate(template=qa_template, input_variables=["question", "context"])

model_name = 'gpt-4'

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# Note: CONDENSE_QUESTION_PROMPT and QA_PROMPT above are not passed to the chain,
# so the chain runs with its default prompts.
chain = ConversationalRetrievalChain.from_llm(
    llm=ChatOpenAI(temperature=0.0,
                   model_name=model_name,
                   openai_api_key=openai_api_key,
                   request_timeout=120),
    retriever=vectorstore.as_retriever(),
    memory=memory)

query = """
How many headlines are in this data set
"""
result = chain({"question": query})
result['answer']

```

The response is `There are four rows in this data set.`

`data` has a length of 151, so I know that the loading step is working properly. Could this be an OpenAI token limitation? | ConversationalRetrievalChain with CSV file limited to first 4 rows of data | https://api.github.com/repos/langchain-ai/langchain/issues/3621/comments | 14 | 2023-04-26T22:38:48Z | 2023-09-01T07:29:44Z | https://github.com/langchain-ai/langchain/issues/3621 | 1,685,837,569 | 3,621 |
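One likely factor, rather than a token limit: CSVLoader turns each row into its own document, and the retriever passes only the top-k most similar documents to the LLM. LangChain's vector-store `similarity_search` defaults to `k=4`, so unless `k` is raised (for example through `as_retriever(search_kwargs={"k": ...})`), the model only ever sees four rows and answers accordingly. The standard-library sketch below imitates that flow with a toy word-overlap score standing in for embeddings; `retrieve` is a hypothetical helper.

```python
from typing import List


def overlap_score(query: str, doc: str) -> int:
    """Toy relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def retrieve(query: str, docs: List[str], k: int = 4) -> List[str]:
    """Return the k highest-scoring documents, like a top-k vector search."""
    ranked = sorted(docs, key=lambda d: overlap_score(query, d), reverse=True)
    return ranked[:k]


# 151 rows, one document per CSV row.
rows = [f"headline: story number {i}" for i in range(151)]
context = retrieve("How many headlines are in this data set", rows)
print(len(rows), len(context))  # 151 4
```

Raising `k` lets more rows through at the cost of more prompt tokens; a question that needs every row, such as counting them, may be a poor fit for top-k retrieval in general.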

[

"hwchase17",

"langchain"

]

| If the following line in `BaseConversationalRetrievalChain._call()` (in `chains/conversational_retrieval/base.py`):

```

docs = self._get_docs(new_question, inputs)

```

returns an empty list of docs, then a subsequent line in the same method:

```

answer, _ = self.combine_docs_chain.combine_docs(docs, **new_inputs)

```

will raise an error because of this line in `CombineDocsProtocol.combine_docs()`:

```

results = self.llm_chain.apply(
    # FYI - this is parallelized and so it is fast.
    [{**{self.document_variable_name: d.page_content}, **kwargs} for d in docs]
)

```

which passes an empty `input_list` argument to `LLMChain.apply()`, and `LLMChain.apply()` does not handle an empty `input_list`.

Should `docs` be non-empty in all cases? If the vector store is empty, it would match 0 docs; shouldn't that be handled more gracefully? | BaseConversationalRetrievalChain raising error when no Documents are matched | https://api.github.com/repos/langchain-ai/langchain/issues/3617/comments | 1 | 2023-04-26T20:15:11Z | 2023-09-10T16:26:25Z | https://github.com/langchain-ai/langchain/issues/3617 | 1,685,654,780 | 3,617 |
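One graceful option would be to short-circuit before the combine step when retrieval returns nothing. A minimal sketch of such a guard; `apply_prompt`, `answer_question` and the fallback message are hypothetical names, and `apply_prompt` merely mimics the empty-input failure reported in this issue rather than reproducing LangChain's code.

```python
from typing import Callable, List, Sequence

NO_CONTEXT_ANSWER = "I could not find any relevant documents to answer this question."


def apply_prompt(input_list: Sequence[dict]) -> List[str]:
    """Stand-in for the combine step; like the reported behaviour, it rejects empty input."""
    if not input_list:
        raise ValueError("input_list must not be empty")
    return [f"summary of {item['page_content']}" for item in input_list]


def answer_question(question: str, get_docs: Callable[[str], List[str]]) -> str:
    docs = get_docs(question)
    if not docs:
        # Guard: skip the combine step entirely instead of passing [] downstream.
        return NO_CONTEXT_ANSWER
    results = apply_prompt([{"page_content": d, "question": question} for d in docs])
    return " | ".join(results)


print(answer_question("anything?", lambda q: []))
print(answer_question("anything?", lambda q: ["doc A", "doc B"]))
```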

[

"hwchase17",

"langchain"

]

| When executing the Human-as-a-tool code taken directly from the documentation, I get the following error:

```

ImportError Traceback (most recent call last)

/Users/anonymous/Library/CloudStorage/OneDrive-UniversityCollegeLondon/Python_Projects/LangChain/delete.ipynb Cell 2 in 5
      3 from langchain.llms import OpenAI
      4 from langchain.agents import load_tools, initialize_agent
----> 5 from langchain.agents import AgentType
      7 llm = ChatOpenAI(temperature=0.0)
      8 math_llm = OpenAI(temperature=0.0)

ImportError: cannot import name 'AgentType' from 'langchain.agents' (/Library/Frameworks/Python.framework/Versions/3.11/lib/python3.11/site-packages/langchain/agents/__init__.py)

```

Even after commenting out `from langchain.agents import AgentType` and switching the agent to `agent="zero-shot-react-description"`, I still get the following error:

```

---------------------------------------------------------------------------
ValueError Traceback (most recent call last)

/Users/anonymous/Library/CloudStorage/OneDrive-UniversityCollegeLondon/Python_Projects/LangChain/AGI.ipynb Cell 4 in 7
      4 os.environ['WOLFRAM_ALPHA_APPID'] = creds.WOLFRAM_ALPHA_APPID
      6 llm = OpenAI(temperature=0.0, model_name = "gpt-3.5-turbo")
----> 7 tools = load_tools(["python_repl",
      8 "terminal",
      9 "wolfram-alpha",
     10 "human",
     11 # "serpapi",
     12 # "wikipedia",
     13 "requests",
     14 ],)
     16 agent = initialize_agent(tools,
     17 llm,
     18 agent="zero-shot-react-description",
     19 verbose=True)

File /Library/Frameworks/Python.framework/Versions/3.11/lib/python3.11/site-packages/langchain/agents/load_tools.py:236, in load_tools(tool_names, llm, callback_manager, **kwargs)
    234 tools.append(tool)
    235 else:
--> 236 raise ValueError(f"Got unknown tool {name}")
    237 return tools

ValueError: Got unknown tool human

```

| Human as a Tool Documentation Out of Date | https://api.github.com/repos/langchain-ai/langchain/issues/3615/comments | 6 | 2023-04-26T19:58:50Z | 2023-04-26T22:11:05Z | https://github.com/langchain-ai/langchain/issues/3615 | 1,685,632,646 | 3,615 |

[

"hwchase17",

"langchain"

]

| Hello all, I would like to clarify something regarding indexes, llama connectors, etc. I made a simple Q&A AI app using LangChain with a Pinecone vector DB; the vector DB is updated from local files whenever those files change. Everything works OK.

Now, what is the logic when adding other connectors? Do I just use the llama connector to scrape an endpoint such as the web or Discord, feed the result into the vector DB, and in the end query a single vector DB for answers?

I need to query over multiple sources. How should new data be handled? Currently, since the text files are small, the Pinecone index is dropped and recreated from scratch, which does not seem to be the right way to do it. If a website changes (something is added or modified), it does not make sense to recreate the whole DB. (Maybe I can delete entries by their source metadata?) | Multiple data sources logic ? | https://api.github.com/repos/langchain-ai/langchain/issues/3609/comments | 1 | 2023-04-26T18:23:02Z | 2023-09-17T17:22:08Z | https://github.com/langchain-ai/langchain/issues/3609 | 1,685,505,802 | 3,609 |
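The "delete by source metadata" idea can be made incremental: keep a content hash per source, and on each sync re-embed only sources whose hash changed, delete entries whose source disappeared, and leave everything else alone. A standard-library sketch with an in-memory dict standing in for the vector index; `SourceIndex` and its methods are hypothetical, and with a real store the upsert and delete steps would map onto that store's own operations.

```python
import hashlib
from typing import Dict, List, Tuple


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class SourceIndex:
    """Toy index: vector ids are '<source>#<chunk number>', tracked per source."""

    def __init__(self) -> None:
        self.vectors: Dict[str, str] = {}        # id -> chunk text (stand-in for an embedding)
        self.source_hashes: Dict[str, str] = {}  # source -> hash of its full content

    def _delete_source(self, source: str) -> None:
        # rsplit so that sources containing '#' (e.g. URLs) are still matched correctly.
        for vid in [v for v in self.vectors if v.rsplit("#", 1)[0] == source]:
            del self.vectors[vid]
        self.source_hashes.pop(source, None)

    def sync(self, sources: Dict[str, str], chunk_size: int = 20) -> Tuple[List[str], List[str]]:
        """Re-index new or changed sources, drop vanished ones; return (updated, removed)."""
        updated: List[str] = []
        removed: List[str] = []
        for source in list(self.source_hashes):
            if source not in sources:
                self._delete_source(source)
                removed.append(source)
        for source, text in sources.items():
            digest = content_hash(text)
            if self.source_hashes.get(source) == digest:
                continue  # unchanged: no re-embedding needed
            self._delete_source(source)
            chunks = [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
            for n, chunk in enumerate(chunks):
                self.vectors[f"{source}#{n}"] = chunk
            self.source_hashes[source] = digest
            updated.append(source)
        return updated, removed


index = SourceIndex()
print(index.sync({"docs/faq.txt": "old answer", "https://example.com": "home page"}))
# (['docs/faq.txt', 'https://example.com'], [])
print(index.sync({"docs/faq.txt": "new answer", "https://example.com": "home page"}))
# (['docs/faq.txt'], [])
print(index.sync({"https://example.com": "home page"}))
# ([], ['docs/faq.txt'])
```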

[

"hwchase17",

"langchain"

]

| Hello, I am deploying RetrievalQAWithSourcesChain with the ChatOpenAI model right now.

Unlike the OpenAI model, ChatOpenAI lets you provide a system message, which is a great complement.

But despite many attempts, it seems the prompt cannot be inserted into the chain.

Please suggest what I should change in my code:

```

#Prompt Construction

template="""You play as {user_name}'s assistant,your name is {name},personality is {personality},duty is {duty}"""

system_message_prompt = SystemMessagePromptTemplate.from_template(template)

human_template="""

Context: {context}

Question: {question}

please indicate if you are not sure about answer. Do NOT Makeup.

MUST answer in {language}."""

human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

ChatPromptTemplate.input_variables=["context", "question","name","personality","user_name","duty","language"]

#define the chain

chain = RetrievalQAWithSourcesChain.from_chain_type(llm=llm,

combine_documents_chain=qa_chain,

chain_type="stuff",

retriever=compression_retriever,

chain_type_kwargs = {"prompt": chat_prompt}

)

``` | How can I structure prompt temple for RetrievalQAWithSourcesChain with ChatOpenAI model | https://api.github.com/repos/langchain-ai/langchain/issues/3606/comments | 3 | 2023-04-26T18:02:39Z | 2023-09-17T17:22:13Z | https://github.com/langchain-ai/langchain/issues/3606 | 1,685,480,734 | 3,606 |
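Two things in the snippet above are worth checking. First, `ChatPromptTemplate.input_variables=[...]` assigns an attribute on the class rather than on the `chat_prompt` instance. Second, the prompt declares persona variables (`name`, `personality`, `user_name`, `duty`, `language`) that the retrieval chain presumably does not supply at query time, so they likely have to be bound before the prompt reaches the chain. The plain-Python sketch below shows the general idea only; `template_variables` and `partial_format` are hypothetical helpers, not LangChain's prompt API.

```python
import string
from typing import Set


def template_variables(template: str) -> Set[str]:
    """Names of the {placeholders} used in a str.format-style template."""
    return {field for _, field, _, _ in string.Formatter().parse(template) if field}


def partial_format(template: str, **fixed: str) -> str:
    """Fill only the given variables and keep the other placeholders intact."""
    keep = {name: "{" + name + "}" for name in template_variables(template) if name not in fixed}
    return template.format(**fixed, **keep)


system_template = ("You play as {user_name}'s assistant, your name is {name}, "
                   "personality is {personality}, duty is {duty}")
human_template = "Context: {context}\n\nQuestion: {question}\n\nMUST answer in {language}."

persona = {"user_name": "Ana", "name": "Max", "personality": "friendly",
           "duty": "support", "language": "English"}

print(partial_format(system_template, **persona))
print(sorted(template_variables(partial_format(human_template, **persona))))  # ['context', 'question']
```

After binding, only the per-request variables remain as placeholders. Note that a persona value containing braces would be read as a new placeholder, so values should be plain text.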

[

"hwchase17",

"langchain"

]

| I am new to using LangChain and am attempting to make it work with a locally running LLM (Alpaca) and embeddings model (Sentence Transformer). When configuring the sentence transformer model with `HuggingFaceEmbeddings`, no arguments can be passed to the model's encode method, specifically `normalize_embeddings=True`. Nor can I specify the distance metric I want to use in the `similarity_search` method, irrespective of which vector store I am using. So it seems to me that I can only create unnormalized embeddings with Hugging Face models and, by default, only use L2 distance as the similarity metric, whereas I want to use cosine similarity, or have normalized embeddings and then use the dot product/L2 distance.

If I am wrong here, can someone point me in the right direction? If not, are there any plans to implement this? | Embeddings normalization and similarity metric | https://api.github.com/repos/langchain-ai/langchain/issues/3605/comments | 0 | 2023-04-26T18:02:20Z | 2023-05-30T18:57:06Z | https://github.com/langchain-ai/langchain/issues/3605 | 1,685,480,283 | 3,605 |
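The relationship this question relies on can be checked directly. Once vectors are L2-normalized, their dot product equals their cosine similarity, and squared L2 distance becomes `||a - b||^2 = 2 - 2 * cos(a, b)`, a decreasing function of cosine. Ranking by L2 distance on normalized embeddings therefore gives the same order as ranking by cosine similarity, while L2 on unnormalized embeddings can rank differently. A standard-library sketch (no sentence-transformers or vector store involved):

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


a, b = [3.0, 4.0], [1.0, 2.0]
na, nb = normalize(a), normalize(b)
print(cosine(a, b), dot(na, nb))                    # equal up to rounding
print(math.dist(na, nb) ** 2, 2 - 2 * cosine(a, b))  # equal up to rounding

# Rankings for a query: cosine vs. L2 on normalized vs. L2 on raw vectors.
query = [1.0, 0.5]
docs = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0], [2.0, 1.0]]
idx = range(len(docs))
by_cosine = sorted(idx, key=lambda i: -cosine(query, docs[i]))
by_l2_normalized = sorted(idx, key=lambda i: math.dist(normalize(query), normalize(docs[i])))
by_raw_l2 = sorted(idx, key=lambda i: math.dist(query, docs[i]))
print(by_cosine, by_l2_normalized, by_raw_l2)
```

Document 3 points in exactly the query's direction (cosine 1), yet raw L2 ranks it among the farthest because of its length.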

[

"hwchase17",

"langchain"

]

| I am unsure whether FAISS is a vector database or a search algorithm. The `vectorstores.faiss` module describes it as a vector database, but isn't it a search algorithm? | The vectorstores says faiss as FAISS vector database | https://api.github.com/repos/langchain-ai/langchain/issues/3601/comments | 1 | 2023-04-26T16:28:37Z | 2023-09-10T16:26:41Z | https://github.com/langchain-ai/langchain/issues/3601 | 1,685,351,151 | 3,601 |

[

"hwchase17",

"langchain"

]

| Hi Team, I am using OpenSearch as my vector store and trying to create an index for document vectors, but I am unable to create the index.

I get this error:

`ERROR - The embeddings count, 501 is more than the [bulk_size], 500. Increase the value of [bulk_size]`

Can someone please advise?

Thanks | Unable to create opensearch index. | https://api.github.com/repos/langchain-ai/langchain/issues/3595/comments | 2 | 2023-04-26T14:04:56Z | 2023-09-10T16:26:46Z | https://github.com/langchain-ai/langchain/issues/3595 | 1,685,103,449 | 3,595 |
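The error message points at two options: raise `bulk_size` as it suggests, or send the documents in batches no larger than the limit. A standard-library sketch of the batching approach; `add_batch` is a hypothetical stand-in for whatever call adds texts to the index (for example, the vector store's `add_texts`).

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batched(items: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def index_in_batches(texts: List[str], add_batch: Callable[[List[str]], None], bulk_size: int = 500) -> int:
    """Send texts to `add_batch` so that no single call exceeds bulk_size; return the call count."""
    calls = 0
    for batch in batched(texts, bulk_size):
        add_batch(list(batch))
        calls += 1
    return calls


texts = [f"chunk {i}" for i in range(501)]  # one more than the 500 limit in the error
sizes: List[int] = []
print(index_in_batches(texts, lambda batch: sizes.append(len(batch))))  # 2
print(sizes)  # [500, 1]
```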

[

"hwchase17",

"langchain"

]

| null | load_qa_chain _ RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu! | https://api.github.com/repos/langchain-ai/langchain/issues/3593/comments | 3 | 2023-04-26T14:02:08Z | 2023-10-18T21:42:47Z | https://github.com/langchain-ai/langchain/issues/3593 | 1,685,098,021 | 3,593 |

[

"hwchase17",

"langchain"

]

| I am using RetrievalQAWithSourcesChain to get answers about documents that I previously embedded using Pinecone. I notice that sometimes the sources are not populated under the `sources` key when I run the chain.

I am using Pinecone to embed the PDF documents like so:

```python

documents = loader.load()

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=400,

chunk_overlap=20,

length_function=tiktoken_len,

separators=['\n\n', '\n', ' ', '']

)

split_documents = text_splitter.split_documents(documents=documents)

Pinecone.from_documents(

split_documents,

OpenAIEmbeddings(),

index_name='test_index',

namespace= 'test_namespace')

```

I am using RetrievalQAWithSourcesChain to ask queries like so:

```python

llm = OpenAIEmbeddings()

vectorstore: Pinecone = Pinecone.from_existing_index(

index_name='test_index',

embedding=OpenAIEmbeddings(),

namespace='test_namespace'

)

qa_chain = load_qa_with_sources_chain(llm=_llm, chain_type="stuff")

qa = RetrievalQAWithSourcesChain(

combine_documents_chain=qa_chain,

retriever=vectorstore.as_retriever(),

reduce_k_below_max_tokens=True,

)

answer_response = qa({"question": question}, return_only_outputs=True)

```

Expected response

`{'answer': 'some answer', 'sources': 'the_file_name.pdf'}`

Actual response

`{'answer': 'some answer', 'sources': ''}`

This behaviour is not consistent: sometimes I get the sources in the answer itself rather than under the `sources` key, and at other times I get them under the `sources` key rather than in the answer. I want the sources to ALWAYS come under the `sources` key and not in the answer text.

I'm using langchain==0.0.149.

Am I missing something in the way I'm embedding or retrieving my documents, or is this an issue with LangChain?

**Edit: Additional information on how to reproduce this issue**

While trying to reproduce the exact issue for @jpdus, I noticed that this happens consistently when I request the answer in table format. When the query asks for a table, the source seems to come back inside the answer text instead of under the `sources` key. I am attaching a test document and some examples here:

Source : [UN Doc.pdf](https://github.com/hwchase17/langchain/files/11339620/UN.Doc.pdf)

Query 1 (with table): what are the goals for sustainability 2030, provide your answer in a table format?

Response:

```python

{'answer': 'Goals for Sustainability 2030:\n\nGoal 1. End poverty in all its forms everywhere\nGoal 2. End hunger, achieve food security and improved nutrition and promote sustainable agriculture\nGoal 3. Ensure healthy lives and promote well-being for all at all ages\nGoal 4. Ensure inclusive and equitable quality education and promote lifelong learning opportunities for all\nGoal 5. Achieve gender equality and empower all women and girls\nGoal 6. Ensure availability and sustainable management of water and sanitation for all\nGoal 7. Ensure access to affordable, reliable, sustainable and modern energy for all\nGoal 8. Promote sustained, inclusive and sustainable economic growth, full and productive employment and decent work for all\nGoal 9. Build resilient infrastructure, promote inclusive and sustainable industrialization and foster innovation\nGoal 10. Reduce inequality within and among countries\nGoal 11. Make cities and human settlements inclusive, safe, resilient and sustainable\nGoal 12. Ensure sustainable consumption and production patterns\nGoal 13. Take urgent action to combat climate change and its impacts\nGoal 14. Conserve and sustainably use the oceans, seas and marine resources for sustainable development\nGoal 15. Protect, restore and promote sustainable use of terrestrial ecosystems, sustainably manage forests, combat desertification, and halt and reverse land degradation and halt biodiversity loss\nSource: docs/UN Doc.pdf', 'sources': ''}

```

Query 2 (without table): what are the goals for sustainability 2030?

Response:

```python

{'answer': "The goals for sustainability 2030 include expanding international cooperation and capacity-building support to developing countries in water and sanitation-related activities and programs, ensuring access to affordable, reliable, sustainable and modern energy for all, promoting sustained, inclusive and sustainable economic growth, full and productive employment and decent work for all, taking urgent action to combat climate change and its impacts, strengthening efforts to protect and safeguard the world's cultural and natural heritage, providing universal access to safe, inclusive and accessible green and public spaces, ensuring sustainable consumption and production patterns, significantly increasing access to information and communications technology and striving to provide universal and affordable access to the Internet in least developed countries by 2020, and reducing inequality within and among countries. \n", 'sources': 'docs/UN Doc.pdf'}

```
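The two responses above differ in how the citation is labelled: the table answer ends with `Source: docs/UN Doc.pdf` inside the answer text. A minimal stdlib sketch of why that could leave `sources` empty, assuming (not verified against langchain 0.0.149) that the chain only fills `sources` when the model output contains a literal `SOURCES: ` marker, as the `SOURCES:` format in its prompt suggests. `split_strict` models that assumed behaviour; `split_tolerant` is a hypothetical post-processing helper, not a langchain API.

```python
import re
from typing import Tuple


def split_strict(output: str) -> Tuple[str, str]:
    """Assumed chain behaviour: split only on the exact marker 'SOURCES: '."""
    if "SOURCES: " in output:
        answer, sources = output.split("SOURCES: ", 1)
        return answer, sources
    return output, ""


# Hypothetical helper: accepts "Source:", "SOURCES:", "sources:" etc. as a whole word.
_MARKER = re.compile(r"\bsources?:[ \t]*", re.IGNORECASE)


def split_tolerant(output: str) -> Tuple[str, str]:
    """Split on the last source marker, whatever its case or plurality."""
    matches = list(_MARKER.finditer(output))
    if not matches:
        return output, ""
    last = matches[-1]
    return output[: last.start()].rstrip(), output[last.end():].strip()


table_answer = (
    "Goals for Sustainability 2030:\n\n"
    "Goal 1. End poverty in all its forms everywhere\n"
    "Source: docs/UN Doc.pdf"
)
print(repr(split_strict(table_answer)[1]))  # ''
print(split_tolerant(table_answer)[1])      # docs/UN Doc.pdf
```

If the assumption holds, one option is to run `split_tolerant` on `answer_response["answer"]` whenever `answer_response["sources"]` comes back empty; another is to make the prompt insist on the exact `SOURCES:` label even for tables.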

| RetrievalQAWithSourcesChain sometimes does not return sources under sources key | https://api.github.com/repos/langchain-ai/langchain/issues/3592/comments | 7 | 2023-04-26T13:22:28Z | 2023-09-24T16:07:12Z | https://github.com/langchain-ai/langchain/issues/3592 | 1,685,024,756 | 3,592 |

[

"hwchase17",

"langchain"

]

| I am using `DirectoryLoader` with the relevant loader class defined:

```python
from langchain.document_loaders import DirectoryLoader, UnstructuredMarkdownLoader

loader = DirectoryLoader('.\\src', glob="**/*.md", loader_cls=UnstructuredMarkdownLoader)
docs = loader.load()
```

I couldn't understand why the following step didn't chunk the text into the relevant Markdown sections:

```python
from langchain.text_splitter import MarkdownTextSplitter

markdown_splitter = MarkdownTextSplitter(chunk_size=1000, chunk_overlap=0)
texts = markdown_splitter.split_documents(docs)
```
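`MarkdownTextSplitter` is a recursive character splitter whose first separators are Markdown constructs such as headings, so it can only cut at section boundaries if that syntax survives loading. A simplified stdlib sketch of recursive splitting (no overlap, separators dropped, not langchain's exact code), assuming `\n## ` as the heading separator and assuming the loader returns plain text with elements joined by blank lines:

```python
from typing import List

# Assumed separator order: Markdown headings first, then paragraphs, lines, words, characters.
SEPARATORS = ["\n## ", "\n\n", "\n", " ", ""]


def split_recursive(text: str, separators: List[str], chunk_size: int) -> List[str]:
    """Simplified recursive splitter (chunk_size >= 1): no overlap, separators are dropped."""
    # Use the first separator that actually occurs in the text ("" means per character).
    sep = next((s for s in separators if s == "" or s in text), "")
    pieces = text.split(sep) if sep else list(text)
    chunks: List[str] = []
    current = ""
    for piece in pieces:
        if len(piece) > chunk_size:
            # Flush the running chunk, then split the oversized piece with finer separators.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(split_recursive(piece, separators, chunk_size))
            continue
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # greedily merge small pieces
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks


raw = "Intro text.\n## Install\nRun pip install.\n## Usage\nCall run()."
stripped = "Intro text.\n\nInstall\n\nRun pip install.\n\nUsage\n\nCall run()."
print(split_recursive(raw, SEPARATORS, 30))
# ['Intro text.', 'Install\nRun pip install.', 'Usage\nCall run().']
print(split_recursive(stripped, SEPARATORS, 30))
# ['Intro text.\n\nInstall', 'Run pip install.\n\nUsage', 'Call run().']
```

With the heading markers present, each chunk is one section; without them, headings end up glued to the tail of the previous section. A workaround worth trying (not verified here) is loading the files with `TextLoader` instead, so the raw Markdown reaches the splitter.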

After digging into it a bit, I found that `UnstructuredMarkdownLoader` strips the Markdown formatting from the documents. This means the splitter has no Markdown syntax to guide it and ends up chunking the text into plain pieces of up to 1000 characters. | UnstructuredMarkdownLoader strips Markdown formatting from documents, rendering MarkdownTextSplitter non-functional | https://api.github.com/repos/langchain-ai/langchain/issues/3591/comments | 3 | 2023-04-26T13:02:27Z | 2023-11-02T16:15:34Z | https://github.com/langchain-ai/langchain/issues/3591 | 1,684,990,072 | 3,591 |

[

"hwchase17",

"langchain"

]

| I'm trying to write a custom agent using `LLMSingleActionAgent`, based on the example from the official docs, and I ran into this error:

```
  File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 118, in __call__
    return self.prep_outputs(inputs, outputs, return_only_outputs)
  File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 168, in prep_outputs
    self._validate_outputs(outputs)
  File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 79, in _validate_outputs
    raise ValueError(
ValueError: Did not get output keys that were expected. Got: {'survey_question'}. Expected: {'output'}
```
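The check behind this error compares the keys the agent returns with the keys the executor declares as its outputs. A minimal stdlib sketch of that comparison, assuming (from the error message, not from the langchain source) that the executor expects exactly the agent's return-value keys, here `['output']`; `validate_outputs` and `EXPECTED_KEYS` are hypothetical names:

```python
from typing import Dict, Iterable

# Assumed default: the executor expects the single key "output".
EXPECTED_KEYS = ["output"]


def validate_outputs(outputs: Dict[str, str], expected_keys: Iterable[str]) -> None:
    """Raise if the returned keys differ from the expected ones (hypothetical mirror of the check)."""
    got, expected = set(outputs), set(expected_keys)
    if got != expected:
        raise ValueError(
            f"Did not get output keys that were expected. Got: {got}. Expected: {expected}"
        )


validate_outputs({"output": "What is your age?"}, EXPECTED_KEYS)  # passes silently
try:
    validate_outputs({"survey_question": "What is your age?"}, EXPECTED_KEYS)
except ValueError as err:
    print(err)
```

Under that assumption, returning `{"output": ...}` from `AgentFinish`, as the comment in the parser below recommends, would satisfy the check.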

```python
import re
from typing import Union

from langchain.agents import AgentExecutor, AgentOutputParser, LLMSingleActionAgent
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.schema import AgentAction, AgentFinish

# `template`, `tools` and `CustomPromptTemplate` are defined elsewhere (not shown)


class CustomOutputParser(AgentOutputParser):

    def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:
        # Check if agent should finish
        if "Final Answer:" in llm_output:
            return AgentFinish(
                # Return values is generally always a dictionary with a single `output` key
                # It is not recommended to try anything else at the moment :)
                return_values={"survey_question": llm_output.split(
                    "Final Answer:")[-1].strip()},
                log=llm_output,
            )
        # Parse out the action and action input
        regex = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"
        match = re.search(regex, llm_output, re.DOTALL)
        if not match:
            raise ValueError(f"Could not parse LLM result: `{llm_output}`")
        action = match.group(1).strip()
        action_input = match.group(2)
        # Return the action and action input
        return AgentAction(tool=action, tool_input=action_input.strip(" ").strip('"'), log=llm_output)


class Chatbot:

    async def conversational_chat(self, query, dataset_path):
        prompt = CustomPromptTemplate(
            template=template,
            tools=tools,
            # This omits the `agent_scratchpad`, `tools`, and `tool_names` variables because those are generated dynamically
            # This includes the `intermediate_steps` variable because that is needed
            input_variables=["input", "intermediate_steps", "dataset_path"],
            output_parser=CustomOutputParser(),
        )
        output_parser = CustomOutputParser()
        llm = OpenAI(temperature=0)  # type: ignore
        llm_chain = LLMChain(llm=llm, prompt=prompt)
        tool_names = [tool.name for tool in tools]
        survey_agent = LLMSingleActionAgent(
            llm_chain=llm_chain,
            output_parser=output_parser,
            stop=["\nObservation:"],
            allowed_tools=tool_names  # type: ignore
        )
        survey_agent_executor = AgentExecutor.from_agent_and_tools(
            agent=survey_agent, tools=tools, verbose=True)
        return survey_agent_executor({"input": query, "dataset_path": dataset_path})
``` | Did not get output keys that were expected. | https://api.github.com/repos/langchain-ai/langchain/issues/3590/comments | 1 | 2023-04-26T12:55:45Z | 2023-09-10T16:26:51Z | https://github.com/langchain-ai/langchain/issues/3590 | 1,684,978,281 | 3,590 |

[

"hwchase17",

"langchain"

]

| I'm using OpenAPI agents to access my own APIs, and the LLM I'm using is OpenAI's GPT-4.

When I send a query, the LLM answers not only with `Action` and `Action Input`, but also with `Observation` and even `Final Answer`, filled with fake data, under `API_ORCHESTRATOR_PROMPT`.

So the agent never actually runs the `api_planner` and `api_controller` tools.
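ReAct-style agents normally pass a stop sequence such as `\nObservation:` so that generation halts before the model writes a tool result itself. A minimal stdlib sketch of why a missing or ignored stop sequence could produce the behaviour described here, assuming the output parser checks for `Final Answer:` before looking for an `Action:`; `apply_stop` and `parse` are hypothetical helpers, and the sample completion is made up for illustration:

```python
import re
from typing import List, Tuple

ACTION_RE = re.compile(
    r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)", re.DOTALL
)


def apply_stop(text: str, stop: List[str]) -> str:
    """Truncate a completion at the earliest stop sequence, as an LLM API would."""
    cut = len(text)
    for s in stop:
        idx = text.find(s)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


def parse(text: str) -> Tuple[str, str, str]:
    """Return ("finish", answer, "") or ("action", tool, tool_input)."""
    if "Final Answer:" in text:
        return "finish", text.split("Final Answer:")[-1].strip(), ""
    match = ACTION_RE.search(text)
    if not match:
        raise ValueError(f"Could not parse LLM result: `{text}`")
    return "action", match.group(1).strip(), match.group(2).strip()


# Illustrative completion in which the model invents its own Observation and answer.
completion = (
    "Thought: I should plan the API calls.\n"
    "Action: api_planner\n"
    "Action Input: list my orders\n"
    "Observation: [fake data]\n"
    "Final Answer: You have 3 orders."
)
print(parse(completion))                                  # ('finish', 'You have 3 orders.', '')
print(parse(apply_stop(completion, ["\nObservation:"])))  # ('action', 'api_planner', 'list my orders')
```

Without truncation the invented `Final Answer` wins and no tool runs; with it, the parser sees only the first action. If the real agent behaves like the first case, it may be worth checking that the stop sequence actually reaches the model call.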

I am wondering whether the `API_ORCHESTRATOR_PROMPT` or `FORMAT_INSTRUCTIONS` prompt is stable.

I also tested the [Agent Getting Started](https://python.langchain.com/en/latest/modules/agents/getting_started.html) example and sometimes got a bad answer directly from the LLM, without any tool being used.

Or am I missing something important?

Or should I rewrite the prompt?

Thanks!

| OpenAPI agents did not execute tools | https://api.github.com/repos/langchain-ai/langchain/issues/3588/comments | 3 | 2023-04-26T12:24:28Z | 2023-09-13T15:59:30Z | https://github.com/langchain-ai/langchain/issues/3588 | 1,684,928,287 | 3,588 |

[

"hwchase17",

"langchain"

]

| I am getting an error when calling the `OpenAIEmbeddings` model. This is my code:

```python
import os

os.environ["OPENAI_API_TYPE"] = "azure"
os.environ["OPENAI_API_BASE"] = "base-thing"
os.environ["OPENAI_API_KEY"] = "apikey"

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings(model="model-name")

text = "This is a test document."
query_result = embeddings.embed_query(text)
doc_result = embeddings.embed_documents([text])
```
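An error of the form `module 'X' has no attribute 'Y'` usually means the installed release of the package does not provide that name, or that the submodule was never imported. One plausible cause here (an assumption, not confirmed in this report) is an installed tiktoken release that predates its `model` module. A small stdlib diagnostic sketch; `check_attr` is a hypothetical helper:

```python
import importlib
from importlib import metadata


def check_attr(package: str, attr: str) -> str:
    """Report the installed version of `package` and whether it exposes `attr`."""
    try:
        module = importlib.import_module(package)
    except ImportError:
        return f"{package} is not installed"
    try:
        version = metadata.version(package)
    except metadata.PackageNotFoundError:
        version = "unknown version"
    # A submodule only counts if the package has already imported it.
    if hasattr(module, attr):
        return f"{package} ({version}) exposes '{attr}'"
    return f"{package} ({version}) has no attribute '{attr}'"


print(check_attr("tiktoken", "model"))
```

If the attribute is missing, upgrading tiktoken (`pip install -U tiktoken`) is a reasonable first thing to try.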

This is the error I am facing:

**AttributeError: module 'tiktoken' has no attribute 'model'** | AttributeError when calling OpenAIEmbeddings model | https://api.github.com/repos/langchain-ai/langchain/issues/3586/comments | 12 | 2023-04-26T11:07:24Z | 2023-04-27T05:26:43Z | https://github.com/langchain-ai/langchain/issues/3586 | 1,684,804,005 | 3,586 |

[

"hwchase17",

"langchain"

]

| Hi,

I have installed langchain-0.0.149 using pip. When trying to run the following code, I get an import error:

```python
from langchain.retrievers import ContextualCompressionRetriever
```

Traceback (most recent call last):

File ".../lib/python3.10/site-packages/IPython/core/interactiveshell.py", line 3460, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "", line 1, in

from langchain.retrievers import ContextualCompressionRetriever