
# Thinking Like Transformers


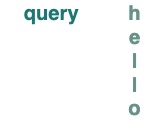

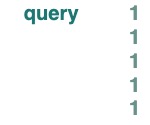

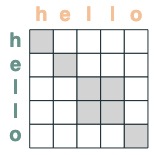

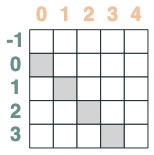


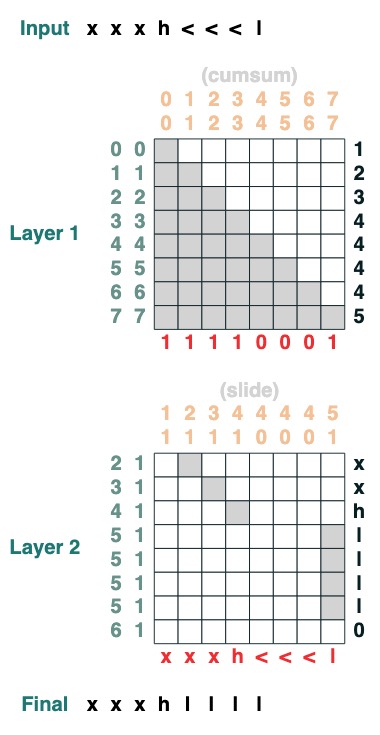



- [Paper](https://arxiv.org/pdf/2106.06981.pdf) by Gail Weiss, Yoav Goldberg, and Eran Yahav.


- Blog by [Sasha Rush](https://rush-nlp.com/) and [Gail Weiss](https://sgailw.cswp.cs.technion.ac.il/)


- Library and interactive notebook: [srush/raspy](https://github.com/srush/RASPy)


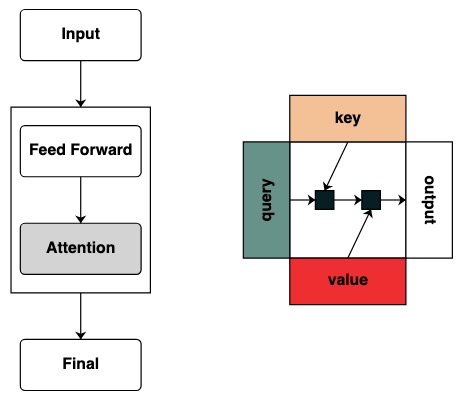

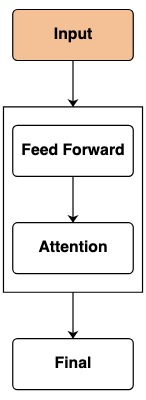

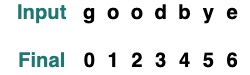

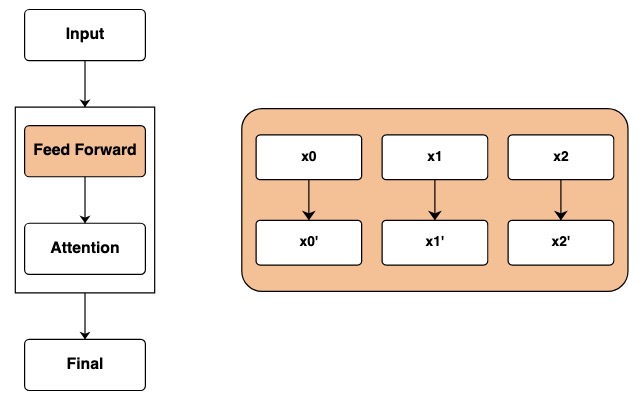

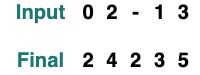

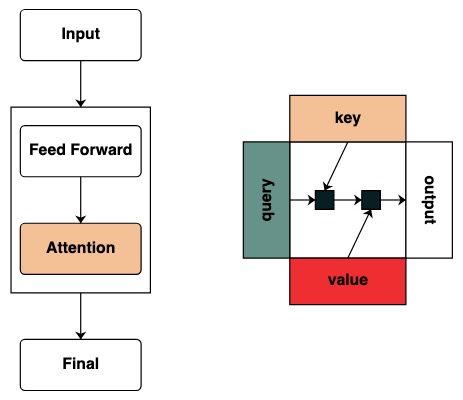

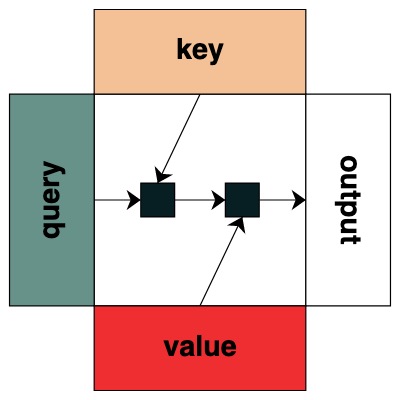

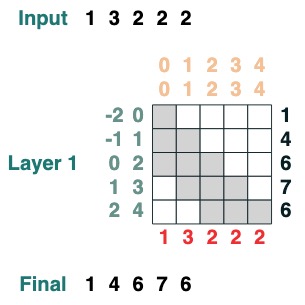

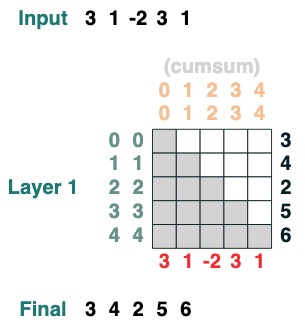

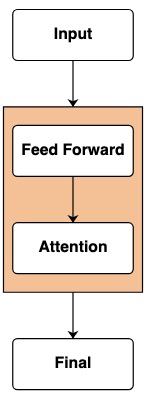

Transformer models are a foundation of modern AI systems. There are already countless diagrams of their core architecture explaining "how Transformers work".


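However, these diagrams offer little intuition about the computational model the architecture implements. As researchers become interested in how Transformers actually work, an intuitive grasp of the mechanisms they run becomes very useful.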


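[Thinking Like Transformers](https://arxiv.org/pdf/2106.06981.pdf) proposes a computational framework for Transformer-like computation that directly models and simulates what a Transformer computes. It introduces the [RASP](https://github.com/tech-srl/RASP) programming language, in which each program compiles into a specific Transformer.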
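RASP programs are built from two attention primitives: `select`, which decides which positions each position attends to, and `aggregate`, which averages the values at the selected positions. The sketch below emulates them in plain Python under that assumed semantics; it is not RASP or RASPy code, and the function names, the `(key, query)` predicate order, and returning 0.0 for an empty selection are illustrative choices.

```python
from typing import Callable, List, Sequence

# Selector[q][k] is True when query position q attends to key position k.
Selector = List[List[bool]]


def select(keys: Sequence, queries: Sequence,
           predicate: Callable[[object, object], bool]) -> Selector:
    """Build a hard attention pattern from a (key, query) predicate."""
    return [[predicate(k, q) for k in keys] for q in queries]


def aggregate(selector: Selector, values: Sequence[float]) -> List[float]:
    """Each query position averages the values it selects (0.0 if none),
    like attention with uniform weights over the selected keys."""
    out = []
    for row in selector:
        chosen = [v for v, on in zip(values, row) if on]
        out.append(sum(chosen) / len(chosen) if chosen else 0.0)
    return out


# Example: reverse a sequence by letting position i attend to position n-1-i.
tokens = [5, 3, 2, 7]
n = len(tokens)
indices = list(range(n))
flip_sel = select(indices, [n - 1 - i for i in indices], lambda k, q: k == q)
print(aggregate(flip_sel, tokens))  # [7.0, 2.0, 3.0, 5.0]
```

Averaging is what makes this "attention-like": a real attention head computes a weighted mean of values, and a hard 0/1 pattern with uniform weights is the special case RASP reasons about.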


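In this blog post, I reimplemented a variant of RASP in Python (RASPy). The language is roughly equivalent to the original, with a few changes that I found fun. Using this language, Gail Weiss, the author of the work, provided a set of challenging and fun exercises that help you understand how it works.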
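The RASPy syntax itself is not shown in this part of the post. As a hypothetical sketch of how a Python embedding can make RASP-style programs read naturally, the class below wraps a function from an input sequence to an output sequence and overloads `==` and `|` as elementwise maps; the `.input(...)` method mirrors the call style used later in this post. The names `SOp`, `where`, and `tokens` here are assumptions for illustration, not RASPy's actual implementation.

```python
from typing import Any, Callable, List


class SOp:
    """Hypothetical sequence operation: a function from an input sequence
    to an output sequence of the same length."""

    def __init__(self, fn: Callable[[List[Any]], List[Any]]):
        self.fn = fn

    def input(self, seq) -> List[Any]:
        # Run the program on a concrete input.
        return self.fn(list(seq))

    def map(self, f: Callable[[Any], Any]) -> "SOp":
        # Elementwise map (the role an MLP plays in a Transformer layer).
        return SOp(lambda seq: [f(x) for x in self.fn(seq)])

    def __eq__(self, other: Any) -> "SOp":  # type: ignore[override]
        # Overloaded so `tokens == "l"` builds a new SOp instead of a bool.
        return self.map(lambda x: x == other)

    def __or__(self, other: "SOp") -> "SOp":
        # Elementwise logical OR of two boolean SOps.
        return SOp(lambda seq: [a or b for a, b in
                                zip(self.fn(seq), other.fn(seq))])


def where(cond: SOp, a: SOp, b: Any) -> SOp:
    """Elementwise choice: a's value where cond holds, else the constant b."""
    return SOp(lambda seq: [x if c else b
                            for c, x in zip(cond.fn(seq), a.fn(seq))])


tokens = SOp(lambda seq: seq)  # identity SOp: the raw input tokens

print(where((tokens == "h") | (tokens == "l"), tokens, "q").input("hello"))
# ['h', 'q', 'l', 'l', 'q']
```

Nothing is computed until `.input(...)` is called, so the same expression can be reused as a program on any input sequence.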


```


## Table of Contents


```


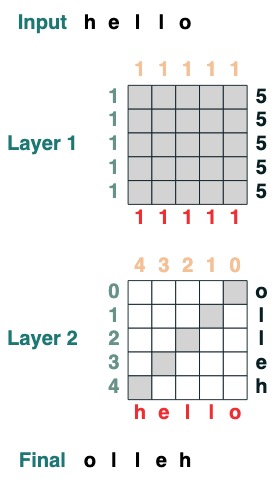

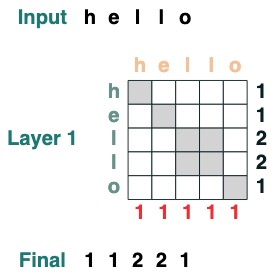

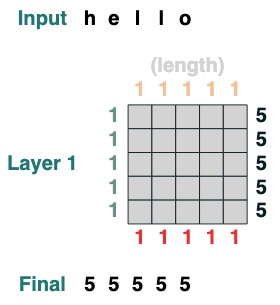

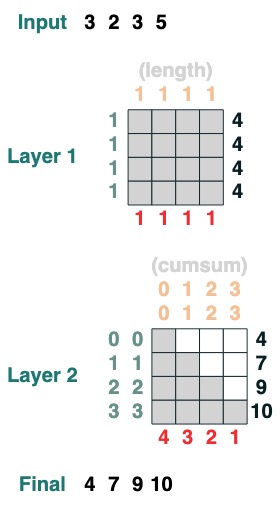

### Layers


The language supports building more complex transforms. It also keeps track of layers by tracking every operation that has been computed.
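One simple way to do this bookkeeping, sketched here as an assumption rather than RASPy's actual code: record each operation's inputs, let elementwise maps stay in the layer of their deepest input, and let each attention (aggregate) step add one layer on top of it. The `Op` class and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Op:
    """Hypothetical node in a RASP-style computation graph."""
    name: str
    is_attention: bool = False          # True for select/aggregate steps
    parents: List["Op"] = field(default_factory=list)

    def layer(self) -> int:
        # Deepest layer among the inputs (0 for the raw input).
        base = max((p.layer() for p in self.parents), default=0)
        # An attention step needs a new layer; elementwise maps do not.
        return base + 1 if self.is_attention else base


tokens = Op("tokens")
is_l = Op("tokens == 'l'", parents=[tokens])               # elementwise map
first = Op("aggregate #1", is_attention=True, parents=[is_l])
shifted = Op("map over #1", parents=[first])               # elementwise map
second = Op("aggregate #2", is_attention=True, parents=[shifted, tokens])

for op in (tokens, is_l, first, shifted, second):
    print(op.name, op.layer())
```

Under this rule the final aggregate lands in layer 2: one of its inputs already depends on an attention step, so it cannot run in the same layer.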


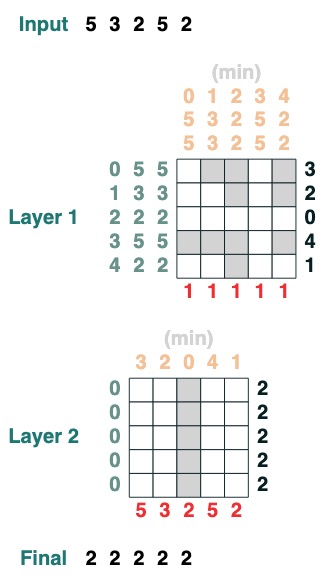

### Challenge 3: Minimum


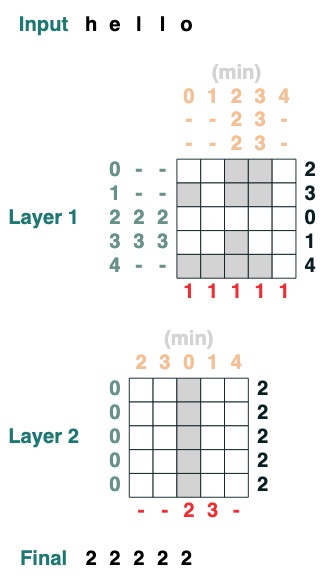


Compute the minimum of the sequence. (This starts to get tricky; our version uses 2 layers of attention.)
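As a separate plain-Python sketch (not the author's RASPy solution, and glossing over how counting is realized with attention in a real Transformer), here is one way two attention-like steps can produce the minimum: first each position counts how many values are strictly smaller than its own, then every position averages the values whose count is zero. Ties are harmless, because all tied minima have the same value. The function name `minimum_two_steps` is hypothetical.

```python
from typing import List


def minimum_two_steps(seq: List[float]) -> List[float]:
    """Plain-Python sketch: broadcast min(seq) to every position using two
    attention-like steps. Not RASPy code. Assumes a non-empty sequence."""
    n = len(seq)
    # Step 1: position q attends to every key k with seq[k] < seq[q] and
    # counts them (how many values are strictly smaller than mine).
    smaller = [sum(1 for k in range(n) if seq[k] < seq[q]) for q in range(n)]
    # Step 2: every position attends to the keys whose count is 0 (the
    # minimal values) and averages them; tied minima share one value.
    selected = [seq[k] for k in range(n) if smaller[k] == 0]
    value = sum(selected) / len(selected)
    return [value] * n


print(minimum_two_steps([5, 3, 2, 5, 2]))  # [2.0, 2.0, 2.0, 2.0, 2.0]
```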


```python


def minimum(seq=tokens):


<center>


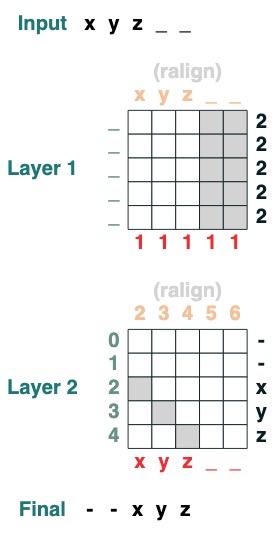


</center>


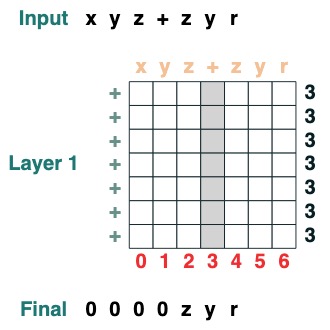

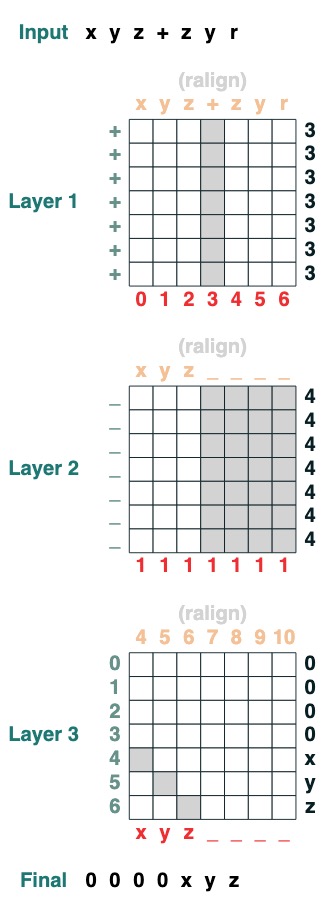


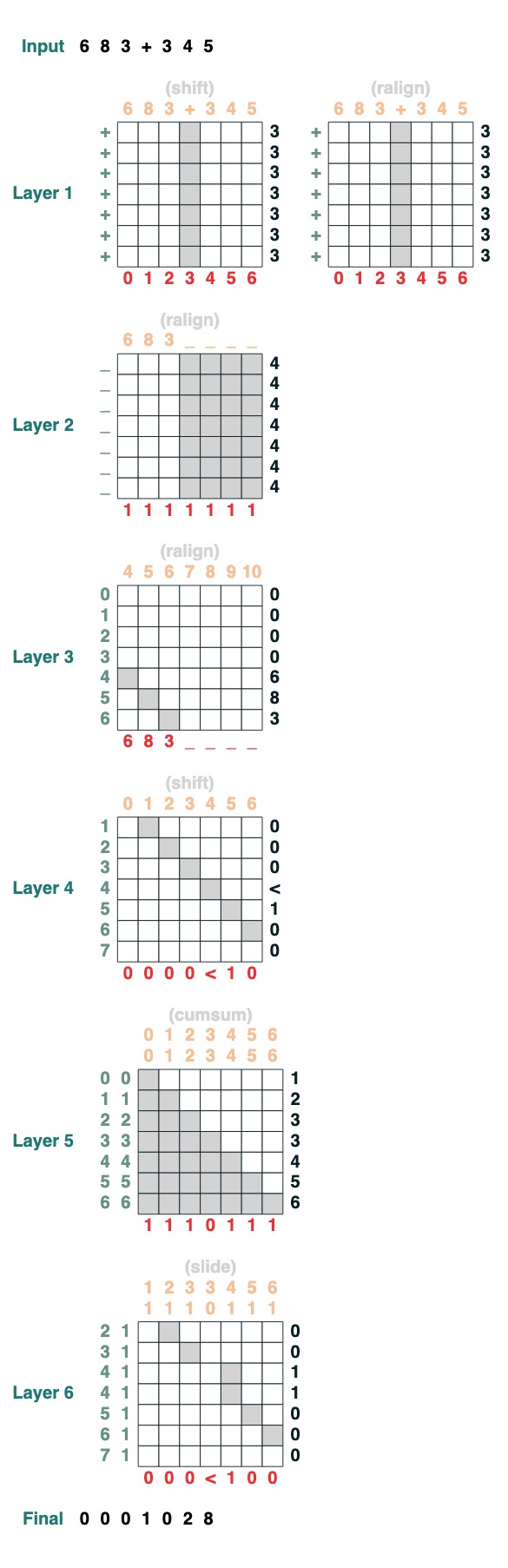

## Challenge 8: Addition


Perform the addition of two numbers. Here are the steps.


```python


add().input("683+345")


3. Finish the addition


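Each of these is one line of code. The full system uses 6 attention operations. (Although Gail says that, if you are careful enough, you can do it in 5!)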


```python


def add(sop=tokens):


```


```python


1028


```
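Outside RASPy, the arithmetic behind these steps can be checked with a plain-Python sketch. Only step 3 is visible in this excerpt, so splitting the work into "right-align and add each column" followed by "resolve carries" is an assumption, and `add_digits` is a hypothetical name. Carries are resolved per position rather than in a left-to-right loop: a column receives a carry if, scanning to its right, the first column whose sum is not exactly 9 sums to 10 or more. That way no digit has to wait for its neighbour's result, which is closer in spirit to what a fixed number of attention layers can do.

```python
from typing import List


def add_digits(expr: str) -> int:
    """Hypothetical sketch: add two non-negative integers given as "a+b"."""
    left, right = expr.split("+")
    width = max(len(left), len(right)) + 1  # one spare column for a carry-out

    # Split into parts, convert to digits, and right-align with leading zeros.
    a = [int(c) for c in left.rjust(width, "0")]
    b = [int(c) for c in right.rjust(width, "0")]

    # Add each column independently (values 0..18).
    col = [x + y for x, y in zip(a, b)]

    def carry_in(i: int) -> int:
        # Column i gets a carry if, looking right, the first column whose
        # sum is not exactly 9 produces a carry (sum >= 10); 9s pass it along.
        for j in range(i + 1, width):
            if col[j] != 9:
                return 1 if col[j] >= 10 else 0
        return 0

    # Finish the addition: each digit depends only on col, not on other digits.
    digits: List[int] = [(col[i] + carry_in(i)) % 10 for i in range(width)]
    return int("".join(str(d) for d in digits))


print(add_digits("683+345"))  # 1028
```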


Pretty neat stuff. If you are interested in learning more about this topic, be sure to check out the paper:


*Thinking Like Transformers* and the RASP language.


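If you are interested more generally in the connection between formal languages and neural networks (FLaNN), or know someone who is, there is an online community for it that has so far been very friendly and fairly active!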


Check it out at https://flann.super.site/.


<hr>


> Original English article: [Thinking Like Transformers](https://srush.github.io/raspy/)


> Translator: innovation64 (李洋)
