text (stringlengths 6 to 13.6M) | id (stringlengths 13 to 176) | metadata (dict) | __index_level_0__ (int64, 0 to 1.69k) |

|---|---|---|---|

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

// ignore_for_file: public_member_api_docs

part of flutter_gpu;

/// A handle to a graphics context. Used to create and manage GPU resources.

///

/// To obtain the default graphics context, use [getContext].

base class GpuContext extends NativeFieldWrapperClass1 {

/// Creates a new graphics context that corresponds to the default Impeller

/// context.

GpuContext._createDefault() {

final String? error = _initializeDefault();

if (error != null) {

throw Exception(error);

}

}

/// A supported [PixelFormat] for textures that store 4-channel colors

/// (red/green/blue/alpha).

PixelFormat get defaultColorFormat {

return PixelFormat.values[_getDefaultColorFormat()];

}

/// A supported [PixelFormat] for textures that store stencil information.

/// May include a depth channel if a stencil-only format is not available.

PixelFormat get defaultStencilFormat {

return PixelFormat.values[_getDefaultStencilFormat()];

}

/// A supported [PixelFormat] for textures that store both a stencil and depth

/// component. This will never return a depth-only or stencil-only format.

///

/// May be [PixelFormat.unknown] if no suitable depth+stencil format was

/// found.

PixelFormat get defaultDepthStencilFormat {

return PixelFormat.values[_getDefaultDepthStencilFormat()];

}

/// Allocates a new region of GPU-resident memory.

///

/// The [storageMode] must be either [StorageMode.hostVisible] or

/// [StorageMode.devicePrivate], otherwise an exception will be thrown.

///

/// Returns [null] if the [DeviceBuffer] creation failed.
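///
/// A minimal usage sketch, assuming the library's default [gpuContext] is
/// available; the 1024-byte size is an arbitrary illustrative value:
///
/// ```dart
/// final DeviceBuffer? buffer =
///     gpuContext.createDeviceBuffer(StorageMode.hostVisible, 1024);
/// if (buffer == null) {
///   // Creation failed; handle the error here.
/// }
/// ```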

DeviceBuffer? createDeviceBuffer(StorageMode storageMode, int sizeInBytes) {

if (storageMode == StorageMode.deviceTransient) {

throw Exception(

'DeviceBuffers cannot be set to StorageMode.deviceTransient');

}

DeviceBuffer result =

DeviceBuffer._initialize(this, storageMode, sizeInBytes);

return result.isValid ? result : null;

}

/// Allocates a new region of host-visible GPU-resident memory, initialized

/// with the given [data].

///

/// Given that the buffer will be immediately populated with [data] uploaded

/// from the host, the [StorageMode] of the new [DeviceBuffer] is

/// automatically set to [StorageMode.hostVisible].

///

/// Returns [null] if the [DeviceBuffer] creation failed.
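///
/// A minimal usage sketch, assuming the library's default [gpuContext] and
/// that `Float32List` from `dart:typed_data` is in scope; the values are
/// arbitrary illustrative data:
///
/// ```dart
/// final ByteData data = Float32List.fromList(
///     <double>[-0.5, -0.5, 0.5, -0.5, 0.0, 0.5]).buffer.asByteData();
/// final DeviceBuffer? buffer = gpuContext.createDeviceBufferWithCopy(data);
/// if (buffer == null) {
///   // Creation failed; handle the error here.
/// }
/// ```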

DeviceBuffer? createDeviceBufferWithCopy(ByteData data) {

DeviceBuffer result = DeviceBuffer._initializeWithHostData(this, data);

return result.isValid ? result : null;

}

/// Creates a new [HostBuffer] associated with this context.
HostBuffer createHostBuffer() {

return HostBuffer._initialize(this);

}

/// Allocates a new texture in GPU-resident memory.

///

/// Returns [null] if the [Texture] creation failed.
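///
/// A minimal usage sketch, assuming the library's default [gpuContext]; the
/// 256x256 size is an arbitrary illustrative value:
///
/// ```dart
/// final Texture? texture = gpuContext.createTexture(
///     StorageMode.devicePrivate, 256, 256,
///     format: gpuContext.defaultColorFormat);
/// if (texture == null) {
///   // Creation failed; handle the error here.
/// }
/// ```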

Texture? createTexture(StorageMode storageMode, int width, int height,

{PixelFormat format = PixelFormat.r8g8b8a8UNormInt,

int sampleCount = 1,

TextureCoordinateSystem coordinateSystem =

TextureCoordinateSystem.renderToTexture,

bool enableRenderTargetUsage = true,

bool enableShaderReadUsage = true,

bool enableShaderWriteUsage = false}) {

Texture result = Texture._initialize(

this,

storageMode,

format,

width,

height,

sampleCount,

coordinateSystem,

enableRenderTargetUsage,

enableShaderReadUsage,

enableShaderWriteUsage);

return result.isValid ? result : null;

}

/// Create a new command buffer that can be used to submit GPU commands.

CommandBuffer createCommandBuffer() {

return CommandBuffer._(this);

}

/// Creates a new [RenderPipeline] from the given vertex and fragment shaders.
RenderPipeline createRenderPipeline(

Shader vertexShader, Shader fragmentShader) {

return RenderPipeline._(this, vertexShader, fragmentShader);

}

/// Associates the default Impeller context with this Context.

@Native<Handle Function(Handle)>(

symbol: 'InternalFlutterGpu_Context_InitializeDefault')

external String? _initializeDefault();

@Native<Int Function(Pointer<Void>)>(

symbol: 'InternalFlutterGpu_Context_GetDefaultColorFormat')

external int _getDefaultColorFormat();

@Native<Int Function(Pointer<Void>)>(

symbol: 'InternalFlutterGpu_Context_GetDefaultStencilFormat')

external int _getDefaultStencilFormat();

@Native<Int Function(Pointer<Void>)>(

symbol: 'InternalFlutterGpu_Context_GetDefaultDepthStencilFormat')

external int _getDefaultDepthStencilFormat();

}

/// The default graphics context.

final GpuContext gpuContext = GpuContext._createDefault();

| engine/lib/gpu/lib/src/context.dart/0 | {

"file_path": "engine/lib/gpu/lib/src/context.dart",

"repo_id": "engine",

"token_count": 1429

} | 250 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_GPU_SHADER_LIBRARY_H_

#define FLUTTER_LIB_GPU_SHADER_LIBRARY_H_

#include <memory>

#include <string>

#include <unordered_map>

#include "flutter/lib/gpu/export.h"

#include "flutter/lib/gpu/shader.h"

#include "flutter/lib/ui/dart_wrapper.h"

#include "fml/memory/ref_ptr.h"

namespace flutter {

namespace gpu {

/// An immutable collection of shaders loaded from a shader bundle asset.

class ShaderLibrary : public RefCountedDartWrappable<ShaderLibrary> {

DEFINE_WRAPPERTYPEINFO();

FML_FRIEND_MAKE_REF_COUNTED(ShaderLibrary);

public:

using ShaderMap = std::unordered_map<std::string, fml::RefPtr<Shader>>;

static fml::RefPtr<ShaderLibrary> MakeFromAsset(

impeller::Context::BackendType backend_type,

const std::string& name,

std::string& out_error);

static fml::RefPtr<ShaderLibrary> MakeFromShaders(ShaderMap shaders);

static fml::RefPtr<ShaderLibrary> MakeFromFlatbuffer(

impeller::Context::BackendType backend_type,

std::shared_ptr<fml::Mapping> payload);

/// Sets a return override for `MakeFromAsset` for testing purposes.

static void SetOverride(fml::RefPtr<ShaderLibrary> override_shader_library);

fml::RefPtr<Shader> GetShader(const std::string& shader_name,

Dart_Handle shader_wrapper) const;

~ShaderLibrary() override;

private:

/// A global override used to inject a ShaderLibrary when running with the

/// Impeller playground. When set, `MakeFromAsset` will always just return

/// this library.

static fml::RefPtr<ShaderLibrary> override_shader_library_;

std::shared_ptr<fml::Mapping> payload_;

ShaderMap shaders_;

explicit ShaderLibrary(std::shared_ptr<fml::Mapping> payload,

ShaderMap shaders);

FML_DISALLOW_COPY_AND_ASSIGN(ShaderLibrary);

};

} // namespace gpu

} // namespace flutter

//----------------------------------------------------------------------------

/// Exports

///

extern "C" {

FLUTTER_GPU_EXPORT

extern Dart_Handle InternalFlutterGpu_ShaderLibrary_InitializeWithAsset(

Dart_Handle wrapper,

Dart_Handle asset_name);

FLUTTER_GPU_EXPORT

extern Dart_Handle InternalFlutterGpu_ShaderLibrary_GetShader(

flutter::gpu::ShaderLibrary* wrapper,

Dart_Handle shader_name,

Dart_Handle shader_wrapper);

} // extern "C"

#endif // FLUTTER_LIB_GPU_SHADER_LIBRARY_H_

| engine/lib/gpu/shader_library.h/0 | {

"file_path": "engine/lib/gpu/shader_library.h",

"repo_id": "engine",

"token_count": 893

} | 251 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

part of dart.ui;

/// Annotation to keep [Object.toString] overrides as-is instead of removing

/// them for size optimization purposes.

///

/// For certain URIs (currently `dart:ui` and `package:flutter`) the Dart

/// compiler will remove [Object.toString] overrides from classes in

/// profile/release mode to reduce code size.

///

/// Individual classes can opt out of this behavior via the following

/// annotations:

///

/// * `@pragma('flutter:keep-to-string')`

/// * `@pragma('flutter:keep-to-string-in-subtypes')`

///

/// See https://github.com/dart-lang/sdk/blob/main/runtime/docs/pragmas.md

///

/// For example, in the following class the `toString` method will remain as

/// `return _buffer.toString();`, even if the `--delete-tostring-package-uri`

/// option would otherwise apply and replace it with `return super.toString()`.

/// (By convention, `dart:ui` is usually imported `as ui`, hence the prefix.)

///

/// ```dart

/// class MyStringBuffer {

/// final StringBuffer _buffer = StringBuffer();

///

/// // ...

///

/// @ui.keepToString

/// @override

/// String toString() {

/// return _buffer.toString();

/// }

/// }

/// ```

const pragma keepToString = pragma('flutter:keep-to-string');

| engine/lib/ui/annotations.dart/0 | {

"file_path": "engine/lib/ui/annotations.dart",

"repo_id": "engine",

"token_count": 432

} | 252 |

#version 320 es

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

precision highp float;

layout(location = 0) out vec4 fragColor;

layout(location = 0) uniform float a;

void main() {

fragColor = vec4(

/* cross product of parallel vectors is a zero vector */

cross(vec3(a, 2.0, 3.0), vec3(2.0, 4.0, 6.0))[0], 1.0,

// cross product of parallel vectors is a zero vector

cross(vec3(a, 2.0, 3.0), vec3(2.0, 4.0, 6.0))[2], 1.0);

}

| engine/lib/ui/fixtures/shaders/supported_glsl_op_shaders/68_cross.frag/0 | {

"file_path": "engine/lib/ui/fixtures/shaders/supported_glsl_op_shaders/68_cross.frag",

"repo_id": "engine",

"token_count": 216

} | 253 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_UI_ISOLATE_NAME_SERVER_ISOLATE_NAME_SERVER_H_

#define FLUTTER_LIB_UI_ISOLATE_NAME_SERVER_ISOLATE_NAME_SERVER_H_

#include <map>

#include <mutex>

#include <string>

#include "flutter/fml/macros.h"

#include "third_party/dart/runtime/include/dart_api.h"

namespace flutter {

class IsolateNameServer {

public:

IsolateNameServer();

~IsolateNameServer();

// Looks up the Dart_Port associated with a given name. Returns ILLEGAL_PORT

// if the name does not exist.

Dart_Port LookupIsolatePortByName(const std::string& name);

// Registers a Dart_Port with a given name. Returns true if registration is

// successful, false if the name entry already exists.

bool RegisterIsolatePortWithName(Dart_Port port, const std::string& name);

// Removes the Dart_Port mapping for the given name. Returns true if the

// mapping was successfully removed, false if the mapping does not exist.

bool RemoveIsolateNameMapping(const std::string& name);

private:

// Same lookup as LookupIsolatePortByName, but expects the caller to already
// hold mutex_.
Dart_Port LookupIsolatePortByNameUnprotected(const std::string& name);

mutable std::mutex mutex_;

std::map<std::string, Dart_Port> port_mapping_;

FML_DISALLOW_COPY_AND_ASSIGN(IsolateNameServer);

};

} // namespace flutter

#endif // FLUTTER_LIB_UI_ISOLATE_NAME_SERVER_ISOLATE_NAME_SERVER_H_

| engine/lib/ui/isolate_name_server/isolate_name_server.h/0 | {

"file_path": "engine/lib/ui/isolate_name_server/isolate_name_server.h",

"repo_id": "engine",

"token_count": 479

} | 254 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#include "flutter/lib/ui/painting/display_list_deferred_image_gpu_skia.h"

#include "flutter/fml/make_copyable.h"

#include "third_party/skia/include/core/SkColorSpace.h"

#include "third_party/skia/include/core/SkImage.h"

#include "third_party/skia/include/gpu/ganesh/SkImageGanesh.h"

namespace flutter {

sk_sp<DlDeferredImageGPUSkia> DlDeferredImageGPUSkia::Make(

const SkImageInfo& image_info,

sk_sp<DisplayList> display_list,

fml::TaskRunnerAffineWeakPtr<SnapshotDelegate> snapshot_delegate,

const fml::RefPtr<fml::TaskRunner>& raster_task_runner,

fml::RefPtr<SkiaUnrefQueue> unref_queue) {

return sk_sp<DlDeferredImageGPUSkia>(new DlDeferredImageGPUSkia(

ImageWrapper::Make(image_info, std::move(display_list),

std::move(snapshot_delegate), raster_task_runner,

std::move(unref_queue)),

raster_task_runner));

}

sk_sp<DlDeferredImageGPUSkia> DlDeferredImageGPUSkia::MakeFromLayerTree(

const SkImageInfo& image_info,

std::unique_ptr<LayerTree> layer_tree,

fml::TaskRunnerAffineWeakPtr<SnapshotDelegate> snapshot_delegate,

const fml::RefPtr<fml::TaskRunner>& raster_task_runner,

fml::RefPtr<SkiaUnrefQueue> unref_queue) {

return sk_sp<DlDeferredImageGPUSkia>(new DlDeferredImageGPUSkia(

ImageWrapper::MakeFromLayerTree(

image_info, std::move(layer_tree), std::move(snapshot_delegate),

raster_task_runner, std::move(unref_queue)),

raster_task_runner));

}

DlDeferredImageGPUSkia::DlDeferredImageGPUSkia(

std::shared_ptr<ImageWrapper> image_wrapper,

fml::RefPtr<fml::TaskRunner> raster_task_runner)

: image_wrapper_(std::move(image_wrapper)),

raster_task_runner_(std::move(raster_task_runner)) {}

// |DlImage|

DlDeferredImageGPUSkia::~DlDeferredImageGPUSkia() {

fml::TaskRunner::RunNowOrPostTask(raster_task_runner_,

[image_wrapper = image_wrapper_]() {

if (!image_wrapper) {

return;

}

image_wrapper->Unregister();

image_wrapper->DeleteTexture();

});

}

// |DlImage|

sk_sp<SkImage> DlDeferredImageGPUSkia::skia_image() const {

return image_wrapper_ ? image_wrapper_->CreateSkiaImage() : nullptr;

}

// |DlImage|

std::shared_ptr<impeller::Texture> DlDeferredImageGPUSkia::impeller_texture()

const {

return nullptr;

}

// |DlImage|

bool DlDeferredImageGPUSkia::isOpaque() const {

return image_wrapper_ ? image_wrapper_->image_info().isOpaque() : false;

}

// |DlImage|

bool DlDeferredImageGPUSkia::isTextureBacked() const {

return image_wrapper_ ? image_wrapper_->isTextureBacked() : false;

}

// |DlImage|

bool DlDeferredImageGPUSkia::isUIThreadSafe() const {

return true;

}

// |DlImage|

SkISize DlDeferredImageGPUSkia::dimensions() const {

return image_wrapper_ ? image_wrapper_->image_info().dimensions()

: SkISize::MakeEmpty();

}

// |DlImage|

size_t DlDeferredImageGPUSkia::GetApproximateByteSize() const {

return sizeof(*this) +

(image_wrapper_ ? image_wrapper_->image_info().computeMinByteSize()

: 0);

}

std::optional<std::string> DlDeferredImageGPUSkia::get_error() const {

return image_wrapper_ ? image_wrapper_->get_error() : std::nullopt;

}

std::shared_ptr<DlDeferredImageGPUSkia::ImageWrapper>

DlDeferredImageGPUSkia::ImageWrapper::Make(

const SkImageInfo& image_info,

sk_sp<DisplayList> display_list,

fml::TaskRunnerAffineWeakPtr<SnapshotDelegate> snapshot_delegate,

fml::RefPtr<fml::TaskRunner> raster_task_runner,

fml::RefPtr<SkiaUnrefQueue> unref_queue) {

auto wrapper = std::shared_ptr<ImageWrapper>(new ImageWrapper(

image_info, std::move(display_list), std::move(snapshot_delegate),

std::move(raster_task_runner), std::move(unref_queue)));

wrapper->SnapshotDisplayList();

return wrapper;

}

std::shared_ptr<DlDeferredImageGPUSkia::ImageWrapper>

DlDeferredImageGPUSkia::ImageWrapper::MakeFromLayerTree(

const SkImageInfo& image_info,

std::unique_ptr<LayerTree> layer_tree,

fml::TaskRunnerAffineWeakPtr<SnapshotDelegate> snapshot_delegate,

fml::RefPtr<fml::TaskRunner> raster_task_runner,

fml::RefPtr<SkiaUnrefQueue> unref_queue) {

auto wrapper = std::shared_ptr<ImageWrapper>(

new ImageWrapper(image_info, nullptr, std::move(snapshot_delegate),

std::move(raster_task_runner), std::move(unref_queue)));

wrapper->SnapshotDisplayList(std::move(layer_tree));

return wrapper;

}

DlDeferredImageGPUSkia::ImageWrapper::ImageWrapper(

const SkImageInfo& image_info,

sk_sp<DisplayList> display_list,

fml::TaskRunnerAffineWeakPtr<SnapshotDelegate> snapshot_delegate,

fml::RefPtr<fml::TaskRunner> raster_task_runner,

fml::RefPtr<SkiaUnrefQueue> unref_queue)

: image_info_(image_info),

display_list_(std::move(display_list)),

snapshot_delegate_(std::move(snapshot_delegate)),

raster_task_runner_(std::move(raster_task_runner)),

unref_queue_(std::move(unref_queue)) {}

void DlDeferredImageGPUSkia::ImageWrapper::OnGrContextCreated() {

FML_DCHECK(raster_task_runner_->RunsTasksOnCurrentThread());

SnapshotDisplayList();

}

void DlDeferredImageGPUSkia::ImageWrapper::OnGrContextDestroyed() {

FML_DCHECK(raster_task_runner_->RunsTasksOnCurrentThread());

DeleteTexture();

}

sk_sp<SkImage> DlDeferredImageGPUSkia::ImageWrapper::CreateSkiaImage() const {

FML_DCHECK(raster_task_runner_->RunsTasksOnCurrentThread());

if (texture_.isValid() && context_) {

return SkImages::BorrowTextureFrom(

context_.get(), texture_, kTopLeft_GrSurfaceOrigin,

image_info_.colorType(), image_info_.alphaType(),

image_info_.refColorSpace());

}

return image_;

}

bool DlDeferredImageGPUSkia::ImageWrapper::isTextureBacked() const {

return texture_.isValid();

}

void DlDeferredImageGPUSkia::ImageWrapper::SnapshotDisplayList(

std::unique_ptr<LayerTree> layer_tree) {

fml::TaskRunner::RunNowOrPostTask(

raster_task_runner_,

fml::MakeCopyable([weak_this = weak_from_this(),

layer_tree = std::move(layer_tree)]() mutable {

auto wrapper = weak_this.lock();

if (!wrapper) {

return;

}

auto snapshot_delegate = wrapper->snapshot_delegate_;

if (!snapshot_delegate) {

return;

}

if (layer_tree) {

auto display_list =

layer_tree->Flatten(SkRect::MakeWH(wrapper->image_info_.width(),

wrapper->image_info_.height()),

snapshot_delegate->GetTextureRegistry(),

snapshot_delegate->GetGrContext());

wrapper->display_list_ = std::move(display_list);

}

auto result = snapshot_delegate->MakeSkiaGpuImage(

wrapper->display_list_, wrapper->image_info_);

if (result->texture.isValid()) {

wrapper->texture_ = result->texture;

wrapper->context_ = std::move(result->context);

wrapper->texture_registry_ =

wrapper->snapshot_delegate_->GetTextureRegistry();

wrapper->texture_registry_->RegisterContextListener(

reinterpret_cast<uintptr_t>(wrapper.get()), weak_this);

} else if (result->image) {

wrapper->image_ = std::move(result->image);

} else {

std::scoped_lock lock(wrapper->error_mutex_);

wrapper->error_ = result->error;

}

}));

}

std::optional<std::string> DlDeferredImageGPUSkia::ImageWrapper::get_error() {

std::scoped_lock lock(error_mutex_);

return error_;

}

void DlDeferredImageGPUSkia::ImageWrapper::Unregister() {

if (texture_registry_) {

texture_registry_->UnregisterContextListener(

reinterpret_cast<uintptr_t>(this));

}

}

void DlDeferredImageGPUSkia::ImageWrapper::DeleteTexture() {

if (texture_.isValid()) {

unref_queue_->DeleteTexture(texture_);

texture_ = GrBackendTexture();

}

image_.reset();

context_.reset();

}

} // namespace flutter

| engine/lib/ui/painting/display_list_deferred_image_gpu_skia.cc/0 | {

"file_path": "engine/lib/ui/painting/display_list_deferred_image_gpu_skia.cc",

"repo_id": "engine",

"token_count": 3623

} | 255 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#include "flutter/lib/ui/painting/image_decoder_impeller.h"

#include <cmath>    // fabsf
#include <cstdlib>  // malloc, free
#include <cstring>  // memcpy
#include <memory>

#include "flutter/fml/closure.h"

#include "flutter/fml/make_copyable.h"

#include "flutter/fml/trace_event.h"

#include "flutter/impeller/core/allocator.h"

#include "flutter/impeller/core/texture.h"

#include "flutter/impeller/display_list/dl_image_impeller.h"

#include "flutter/impeller/renderer/command_buffer.h"

#include "flutter/impeller/renderer/context.h"

#include "flutter/lib/ui/painting/image_decoder_skia.h"

#include "impeller/base/strings.h"

#include "impeller/display_list/skia_conversions.h"

#include "impeller/geometry/size.h"

#include "third_party/skia/include/core/SkAlphaType.h"

#include "third_party/skia/include/core/SkBitmap.h"

#include "third_party/skia/include/core/SkColorSpace.h"

#include "third_party/skia/include/core/SkColorType.h"

#include "third_party/skia/include/core/SkImageInfo.h"

#include "third_party/skia/include/core/SkMallocPixelRef.h"

#include "third_party/skia/include/core/SkPixelRef.h"

#include "third_party/skia/include/core/SkPixmap.h"

#include "third_party/skia/include/core/SkPoint.h"

#include "third_party/skia/include/core/SkSamplingOptions.h"

#include "third_party/skia/include/core/SkSize.h"

namespace flutter {

class MallocDeviceBuffer : public impeller::DeviceBuffer {

public:

explicit MallocDeviceBuffer(impeller::DeviceBufferDescriptor desc)

: impeller::DeviceBuffer(desc) {

data_ = static_cast<uint8_t*>(malloc(desc.size));

}

~MallocDeviceBuffer() override { free(data_); }

bool SetLabel(const std::string& label) override { return true; }

bool SetLabel(const std::string& label, impeller::Range range) override {

return true;

}

uint8_t* OnGetContents() const override { return data_; }

bool OnCopyHostBuffer(const uint8_t* source,

impeller::Range source_range,

size_t offset) override {

memcpy(data_ + offset, source + source_range.offset, source_range.length);

return true;

}

private:

uint8_t* data_;

FML_DISALLOW_COPY_AND_ASSIGN(MallocDeviceBuffer);

};

#ifdef FML_OS_ANDROID

static constexpr bool kShouldUseMallocDeviceBuffer = true;

#else

static constexpr bool kShouldUseMallocDeviceBuffer = false;

#endif // FML_OS_ANDROID

namespace {

/**

* Loads the gamut as a set of three points (triangle).

*/

void LoadGamut(SkPoint abc[3], const skcms_Matrix3x3& xyz) {

// rx = rX / (rX + rY + rZ)

// ry = rY / (rX + rY + rZ)

// gx, gy, bx, and by are calculated similarly.

for (int index = 0; index < 3; index++) {

float sum = xyz.vals[index][0] + xyz.vals[index][1] + xyz.vals[index][2];

abc[index].fX = xyz.vals[index][0] / sum;

abc[index].fY = xyz.vals[index][1] / sum;

}

}

/**

* Calculates the area of the triangular gamut.

*/

float CalculateArea(SkPoint abc[3]) {

const SkPoint& a = abc[0];

const SkPoint& b = abc[1];

const SkPoint& c = abc[2];

return 0.5f * fabsf(a.fX * b.fY + b.fX * c.fY - a.fX * c.fY - c.fX * b.fY -

b.fX * a.fY);

}

// Note: This was calculated from SkColorSpace::MakeSRGB().

static constexpr float kSrgbGamutArea = 0.0982f;

// Source:

// https://source.chromium.org/chromium/_/skia/skia.git/+/393fb1ec80f41d8ad7d104921b6920e69749fda1:src/codec/SkAndroidCodec.cpp;l=67;drc=46572b4d445f41943059d0e377afc6d6748cd5ca;bpv=1;bpt=0

bool IsWideGamut(const SkColorSpace* color_space) {

if (!color_space) {

return false;

}

skcms_Matrix3x3 xyzd50;

color_space->toXYZD50(&xyzd50);

SkPoint rgb[3];

LoadGamut(rgb, xyzd50);

float area = CalculateArea(rgb);

return area > kSrgbGamutArea;

}

} // namespace

ImageDecoderImpeller::ImageDecoderImpeller(

const TaskRunners& runners,

std::shared_ptr<fml::ConcurrentTaskRunner> concurrent_task_runner,

const fml::WeakPtr<IOManager>& io_manager,

bool supports_wide_gamut,

const std::shared_ptr<fml::SyncSwitch>& gpu_disabled_switch)

: ImageDecoder(runners, std::move(concurrent_task_runner), io_manager),

supports_wide_gamut_(supports_wide_gamut),

gpu_disabled_switch_(gpu_disabled_switch) {

std::promise<std::shared_ptr<impeller::Context>> context_promise;

context_ = context_promise.get_future();

runners_.GetIOTaskRunner()->PostTask(fml::MakeCopyable(

[promise = std::move(context_promise), io_manager]() mutable {

promise.set_value(io_manager ? io_manager->GetImpellerContext()

: nullptr);

}));

}

ImageDecoderImpeller::~ImageDecoderImpeller() = default;

static SkColorType ChooseCompatibleColorType(SkColorType type) {

switch (type) {

case kRGBA_F32_SkColorType:

return kRGBA_F16_SkColorType;

default:

return kRGBA_8888_SkColorType;

}

}

static SkAlphaType ChooseCompatibleAlphaType(SkAlphaType type) {

return type;

}

DecompressResult ImageDecoderImpeller::DecompressTexture(

ImageDescriptor* descriptor,

SkISize target_size,

impeller::ISize max_texture_size,

bool supports_wide_gamut,

const std::shared_ptr<impeller::Allocator>& allocator) {

TRACE_EVENT0("impeller", __FUNCTION__);

if (!descriptor) {

std::string decode_error("Invalid descriptor (should never happen)");

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

target_size.set(std::min(static_cast<int32_t>(max_texture_size.width),

target_size.width()),

std::min(static_cast<int32_t>(max_texture_size.height),

target_size.height()));

const SkISize source_size = descriptor->image_info().dimensions();

auto decode_size = source_size;

if (descriptor->is_compressed()) {

decode_size = descriptor->get_scaled_dimensions(std::max(

static_cast<float>(target_size.width()) / source_size.width(),

static_cast<float>(target_size.height()) / source_size.height()));

}

//----------------------------------------------------------------------------

/// 1. Decode the image.

///

const auto base_image_info = descriptor->image_info();

const bool is_wide_gamut =

supports_wide_gamut ? IsWideGamut(base_image_info.colorSpace()) : false;

SkAlphaType alpha_type =

ChooseCompatibleAlphaType(base_image_info.alphaType());

SkImageInfo image_info;

if (is_wide_gamut) {

SkColorType color_type = alpha_type == SkAlphaType::kOpaque_SkAlphaType

? kBGR_101010x_XR_SkColorType

: kRGBA_F16_SkColorType;

image_info =

base_image_info.makeWH(decode_size.width(), decode_size.height())

.makeColorType(color_type)

.makeAlphaType(alpha_type)

.makeColorSpace(SkColorSpace::MakeSRGB());

} else {

image_info =

base_image_info.makeWH(decode_size.width(), decode_size.height())

.makeColorType(

ChooseCompatibleColorType(base_image_info.colorType()))

.makeAlphaType(alpha_type);

}

const auto pixel_format =

impeller::skia_conversions::ToPixelFormat(image_info.colorType());

if (!pixel_format.has_value()) {

std::string decode_error(impeller::SPrintF(

"Codec pixel format is not supported (SkColorType=%d)",

image_info.colorType()));

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

auto bitmap = std::make_shared<SkBitmap>();

bitmap->setInfo(image_info);

auto bitmap_allocator = std::make_shared<ImpellerAllocator>(allocator);

if (descriptor->is_compressed()) {

if (!bitmap->tryAllocPixels(bitmap_allocator.get())) {

std::string decode_error(

"Could not allocate intermediate for image decompression.");

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

// Decode the image into the image generator's closest supported size.

if (!descriptor->get_pixels(bitmap->pixmap())) {

std::string decode_error("Could not decompress image.");

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

} else {

auto temp_bitmap = std::make_shared<SkBitmap>();

temp_bitmap->setInfo(base_image_info);

auto pixel_ref = SkMallocPixelRef::MakeWithData(

base_image_info, descriptor->row_bytes(), descriptor->data());

temp_bitmap->setPixelRef(pixel_ref, 0, 0);

if (!bitmap->tryAllocPixels(bitmap_allocator.get())) {

std::string decode_error(

"Could not allocate intermediate for pixel conversion.");

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

temp_bitmap->readPixels(bitmap->pixmap());

bitmap->setImmutable();

}

if (bitmap->dimensions() == target_size) {

auto buffer = bitmap_allocator->GetDeviceBuffer();

if (!buffer) {

return DecompressResult{.decode_error = "Unable to get device buffer"};

}

return DecompressResult{.device_buffer = buffer,

.sk_bitmap = bitmap,

.image_info = bitmap->info()};

}

//----------------------------------------------------------------------------

/// 2. If the decoded image isn't the requested target size, resize it.

///

TRACE_EVENT0("impeller", "DecodeScale");

const auto scaled_image_info = image_info.makeDimensions(target_size);

auto scaled_bitmap = std::make_shared<SkBitmap>();

auto scaled_allocator = std::make_shared<ImpellerAllocator>(allocator);

scaled_bitmap->setInfo(scaled_image_info);

if (!scaled_bitmap->tryAllocPixels(scaled_allocator.get())) {

std::string decode_error(

"Could not allocate scaled bitmap for image decompression.");

FML_DLOG(ERROR) << decode_error;

return DecompressResult{.decode_error = decode_error};

}

if (!bitmap->pixmap().scalePixels(

scaled_bitmap->pixmap(),

SkSamplingOptions(SkFilterMode::kLinear, SkMipmapMode::kNone))) {

FML_LOG(ERROR) << "Could not scale decoded bitmap data.";

}

scaled_bitmap->setImmutable();

auto buffer = scaled_allocator->GetDeviceBuffer();

if (!buffer) {

return DecompressResult{.decode_error = "Unable to get device buffer"};

}

return DecompressResult{.device_buffer = buffer,

.sk_bitmap = scaled_bitmap,

.image_info = scaled_bitmap->info()};

}

/// Only call this method if the GPU is available.

static std::pair<sk_sp<DlImage>, std::string> UnsafeUploadTextureToPrivate(

const std::shared_ptr<impeller::Context>& context,

const std::shared_ptr<impeller::DeviceBuffer>& buffer,

const SkImageInfo& image_info) {

const auto pixel_format =

impeller::skia_conversions::ToPixelFormat(image_info.colorType());

if (!pixel_format) {

std::string decode_error(impeller::SPrintF(

"Unsupported pixel format (SkColorType=%d)", image_info.colorType()));

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

impeller::TextureDescriptor texture_descriptor;

texture_descriptor.storage_mode = impeller::StorageMode::kDevicePrivate;

texture_descriptor.format = pixel_format.value();

texture_descriptor.size = {image_info.width(), image_info.height()};

texture_descriptor.mip_count = texture_descriptor.size.MipCount();

texture_descriptor.compression_type = impeller::CompressionType::kLossy;

auto dest_texture =

context->GetResourceAllocator()->CreateTexture(texture_descriptor);

if (!dest_texture) {

std::string decode_error("Could not create Impeller texture.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

dest_texture->SetLabel(

impeller::SPrintF("ui.Image(%p)", dest_texture.get()).c_str());

auto command_buffer = context->CreateCommandBuffer();

if (!command_buffer) {

std::string decode_error(

"Could not create command buffer for mipmap generation.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

command_buffer->SetLabel("Mipmap Command Buffer");

auto blit_pass = command_buffer->CreateBlitPass();

if (!blit_pass) {

std::string decode_error(

"Could not create blit pass for mipmap generation.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

blit_pass->SetLabel("Mipmap Blit Pass");

blit_pass->AddCopy(impeller::DeviceBuffer::AsBufferView(buffer),

dest_texture);

if (texture_descriptor.size.MipCount() > 1) {

blit_pass->GenerateMipmap(dest_texture);

}

blit_pass->EncodeCommands(context->GetResourceAllocator());

if (!context->GetCommandQueue()->Submit({command_buffer}).ok()) {

std::string decode_error("Failed to submit blit pass command buffer.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

return std::make_pair(

impeller::DlImageImpeller::Make(std::move(dest_texture)), std::string());

}

std::pair<sk_sp<DlImage>, std::string>

ImageDecoderImpeller::UploadTextureToPrivate(

const std::shared_ptr<impeller::Context>& context,

const std::shared_ptr<impeller::DeviceBuffer>& buffer,

const SkImageInfo& image_info,

const std::shared_ptr<SkBitmap>& bitmap,

const std::shared_ptr<fml::SyncSwitch>& gpu_disabled_switch) {

TRACE_EVENT0("impeller", __FUNCTION__);

if (!context) {

return std::make_pair(nullptr, "No Impeller context is available");

}

if (!buffer) {

return std::make_pair(nullptr, "No Impeller device buffer is available");

}

std::pair<sk_sp<DlImage>, std::string> result;

gpu_disabled_switch->Execute(

fml::SyncSwitch::Handlers()

.SetIfFalse([&result, context, buffer, image_info] {

result = UnsafeUploadTextureToPrivate(context, buffer, image_info);

})

.SetIfTrue([&result, context, bitmap, gpu_disabled_switch] {

// create_mips is false because we already know the GPU is disabled.

result =

UploadTextureToStorage(context, bitmap, gpu_disabled_switch,

impeller::StorageMode::kHostVisible,

/*create_mips=*/false);

}));

return result;

}

std::pair<sk_sp<DlImage>, std::string>

ImageDecoderImpeller::UploadTextureToStorage(

const std::shared_ptr<impeller::Context>& context,

std::shared_ptr<SkBitmap> bitmap,

const std::shared_ptr<fml::SyncSwitch>& gpu_disabled_switch,

impeller::StorageMode storage_mode,

bool create_mips) {

TRACE_EVENT0("impeller", __FUNCTION__);

if (!context) {

return std::make_pair(nullptr, "No Impeller context is available");

}

if (!bitmap) {

return std::make_pair(nullptr, "No texture bitmap is available");

}

const auto image_info = bitmap->info();

const auto pixel_format =

impeller::skia_conversions::ToPixelFormat(image_info.colorType());

if (!pixel_format) {

std::string decode_error(impeller::SPrintF(

"Unsupported pixel format (SkColorType=%d)", image_info.colorType()));

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

impeller::TextureDescriptor texture_descriptor;

texture_descriptor.storage_mode = storage_mode;

texture_descriptor.format = pixel_format.value();

texture_descriptor.size = {image_info.width(), image_info.height()};

texture_descriptor.mip_count =

create_mips ? texture_descriptor.size.MipCount() : 1;

auto texture =

context->GetResourceAllocator()->CreateTexture(texture_descriptor);

if (!texture) {

std::string decode_error("Could not create Impeller texture.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

auto mapping = std::make_shared<fml::NonOwnedMapping>(

reinterpret_cast<const uint8_t*>(bitmap->getAddr(0, 0)), // data

texture_descriptor.GetByteSizeOfBaseMipLevel(), // size

[bitmap](auto, auto) mutable { bitmap.reset(); } // proc

);

if (!texture->SetContents(mapping)) {

std::string decode_error("Could not copy contents into Impeller texture.");

FML_DLOG(ERROR) << decode_error;

return std::make_pair(nullptr, decode_error);

}

texture->SetLabel(impeller::SPrintF("ui.Image(%p)", texture.get()).c_str());

if (texture_descriptor.mip_count > 1u && create_mips) {

std::optional<std::string> decode_error;

// The only platform that needs mipmapping unconditionally is GL.

// GL based platforms never disable GPU access.

// The GPU-disabled check below is only really needed for iOS.

gpu_disabled_switch->Execute(fml::SyncSwitch::Handlers().SetIfFalse(

[context, &texture, &decode_error] {

auto command_buffer = context->CreateCommandBuffer();

if (!command_buffer) {

decode_error =

"Could not create command buffer for mipmap generation.";

return;

}

command_buffer->SetLabel("Mipmap Command Buffer");

auto blit_pass = command_buffer->CreateBlitPass();

if (!blit_pass) {

decode_error = "Could not create blit pass for mipmap generation.";

return;

}

blit_pass->SetLabel("Mipmap Blit Pass");

blit_pass->GenerateMipmap(texture);

blit_pass->EncodeCommands(context->GetResourceAllocator());

if (!context->GetCommandQueue()->Submit({command_buffer}).ok()) {

decode_error = "Failed to submit blit pass command buffer.";

return;

}

command_buffer->WaitUntilScheduled();

}));

if (decode_error.has_value()) {

FML_DLOG(ERROR) << decode_error.value();

return std::make_pair(nullptr, decode_error.value());

}

}

return std::make_pair(impeller::DlImageImpeller::Make(std::move(texture)),

std::string());

}

// |ImageDecoder|

void ImageDecoderImpeller::Decode(fml::RefPtr<ImageDescriptor> descriptor,

uint32_t target_width,

uint32_t target_height,

const ImageResult& p_result) {

FML_DCHECK(descriptor);

FML_DCHECK(p_result);

// Wrap the result callback so that it can be invoked from any thread.

auto raw_descriptor = descriptor.get();

raw_descriptor->AddRef();

ImageResult result = [p_result, //

raw_descriptor, //

ui_runner = runners_.GetUITaskRunner() //

](auto image, auto decode_error) {

ui_runner->PostTask([raw_descriptor, p_result, image, decode_error]() {

raw_descriptor->Release();

p_result(std::move(image), decode_error);

});

};

concurrent_task_runner_->PostTask(

[raw_descriptor, //

context = context_.get(), //

target_size = SkISize::Make(target_width, target_height), //

io_runner = runners_.GetIOTaskRunner(), //

result,

supports_wide_gamut = supports_wide_gamut_, //

gpu_disabled_switch = gpu_disabled_switch_]() {

if (!context) {

result(nullptr, "No Impeller context is available");

return;

}

auto max_size_supported =

context->GetResourceAllocator()->GetMaxTextureSizeSupported();

// Always decompress on the concurrent runner.

auto bitmap_result = DecompressTexture(

raw_descriptor, target_size, max_size_supported,

supports_wide_gamut, context->GetResourceAllocator());

if (!bitmap_result.device_buffer) {

result(nullptr, bitmap_result.decode_error);

return;

}

auto upload_texture_and_invoke_result = [result, context, bitmap_result,

gpu_disabled_switch]() {

sk_sp<DlImage> image;

std::string decode_error;

if (!kShouldUseMallocDeviceBuffer &&

context->GetCapabilities()->SupportsBufferToTextureBlits()) {

std::tie(image, decode_error) = UploadTextureToPrivate(

context, bitmap_result.device_buffer, bitmap_result.image_info,

bitmap_result.sk_bitmap, gpu_disabled_switch);

result(image, decode_error);

} else {

std::tie(image, decode_error) = UploadTextureToStorage(

context, bitmap_result.sk_bitmap, gpu_disabled_switch,

impeller::StorageMode::kDevicePrivate,

/*create_mips=*/true);

result(image, decode_error);

}

};

// TODO(jonahwilliams):

// https://github.com/flutter/flutter/issues/123058 Technically we

// don't need to post tasks to the io runner, but without this

// forced serialization we can end up overloading the GPU and/or

// competing with raster workloads.

io_runner->PostTask(upload_texture_and_invoke_result);

});

}

ImpellerAllocator::ImpellerAllocator(

std::shared_ptr<impeller::Allocator> allocator)

: allocator_(std::move(allocator)) {}

std::shared_ptr<impeller::DeviceBuffer> ImpellerAllocator::GetDeviceBuffer()

const {

return buffer_;

}

bool ImpellerAllocator::allocPixelRef(SkBitmap* bitmap) {

if (!bitmap) {

return false;

}

const SkImageInfo& info = bitmap->info();

if (kUnknown_SkColorType == info.colorType() || info.width() < 0 ||

info.height() < 0 || !info.validRowBytes(bitmap->rowBytes())) {

return false;

}

impeller::DeviceBufferDescriptor descriptor;

descriptor.storage_mode = impeller::StorageMode::kHostVisible;

descriptor.size = ((bitmap->height() - 1) * bitmap->rowBytes()) +

(bitmap->width() * bitmap->bytesPerPixel());

std::shared_ptr<impeller::DeviceBuffer> device_buffer =

kShouldUseMallocDeviceBuffer

? std::make_shared<MallocDeviceBuffer>(descriptor)

: allocator_->CreateBuffer(descriptor);

if (!device_buffer) {

return false;

}

struct ImpellerPixelRef final : public SkPixelRef {

ImpellerPixelRef(int w, int h, void* s, size_t r)

: SkPixelRef(w, h, s, r) {}

~ImpellerPixelRef() override {}

};

auto pixel_ref = sk_sp<SkPixelRef>(

new ImpellerPixelRef(info.width(), info.height(),

device_buffer->OnGetContents(), bitmap->rowBytes()));

bitmap->setPixelRef(std::move(pixel_ref), 0, 0);

buffer_ = std::move(device_buffer);

return true;

}

} // namespace flutter

| engine/lib/ui/painting/image_decoder_impeller.cc/0 | {

"file_path": "engine/lib/ui/painting/image_decoder_impeller.cc",

"repo_id": "engine",

"token_count": 9293

} | 256 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_UI_PAINTING_IMAGE_ENCODING_SKIA_H_

#define FLUTTER_LIB_UI_PAINTING_IMAGE_ENCODING_SKIA_H_

#include "flutter/common/task_runners.h"

#include "flutter/display_list/image/dl_image.h"

#include "flutter/fml/synchronization/sync_switch.h"

#include "flutter/lib/ui/snapshot_delegate.h"

namespace flutter {

void ConvertImageToRasterSkia(

const sk_sp<DlImage>& dl_image,

std::function<void(sk_sp<SkImage>)> encode_task,

const fml::RefPtr<fml::TaskRunner>& raster_task_runner,

const fml::RefPtr<fml::TaskRunner>& io_task_runner,

const fml::WeakPtr<GrDirectContext>& resource_context,

const fml::TaskRunnerAffineWeakPtr<SnapshotDelegate>& snapshot_delegate,

const std::shared_ptr<const fml::SyncSwitch>& is_gpu_disabled_sync_switch);

} // namespace flutter

#endif // FLUTTER_LIB_UI_PAINTING_IMAGE_ENCODING_SKIA_H_

| engine/lib/ui/painting/image_encoding_skia.h/0 | {

"file_path": "engine/lib/ui/painting/image_encoding_skia.h",

"repo_id": "engine",

"token_count": 390

} | 257 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_UI_PAINTING_MATRIX_H_

#define FLUTTER_LIB_UI_PAINTING_MATRIX_H_

#include "third_party/skia/include/core/SkM44.h"

#include "third_party/skia/include/core/SkMatrix.h"

#include "third_party/tonic/typed_data/typed_list.h"

namespace flutter {

SkM44 ToSkM44(const tonic::Float64List& matrix4);

SkMatrix ToSkMatrix(const tonic::Float64List& matrix4);

tonic::Float64List ToMatrix4(const SkMatrix& sk_matrix);

} // namespace flutter

#endif // FLUTTER_LIB_UI_PAINTING_MATRIX_H_

| engine/lib/ui/painting/matrix.h/0 | {

"file_path": "engine/lib/ui/painting/matrix.h",

"repo_id": "engine",

"token_count": 249

} | 258 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_UI_PAINTING_RRECT_H_

#define FLUTTER_LIB_UI_PAINTING_RRECT_H_

#include "third_party/dart/runtime/include/dart_api.h"

#include "third_party/skia/include/core/SkRRect.h"

#include "third_party/tonic/converter/dart_converter.h"

namespace flutter {

class RRect {

public:

SkRRect sk_rrect;

bool is_null;

};

} // namespace flutter

namespace tonic {

template <>

struct DartConverter<flutter::RRect> {

using NativeType = flutter::RRect;

using FfiType = Dart_Handle;

static constexpr const char* kFfiRepresentation = "Handle";

static constexpr const char* kDartRepresentation = "Object";

static constexpr bool kAllowedInLeafCall = false;

static NativeType FromDart(Dart_Handle handle);

static NativeType FromArguments(Dart_NativeArguments args,

int index,

Dart_Handle& exception);

static NativeType FromFfi(FfiType val) { return FromDart(val); }

static const char* GetFfiRepresentation() { return kFfiRepresentation; }

static const char* GetDartRepresentation() { return kDartRepresentation; }

static bool AllowedInLeafCall() { return kAllowedInLeafCall; }

};

} // namespace tonic

#endif // FLUTTER_LIB_UI_PAINTING_RRECT_H_

| engine/lib/ui/painting/rrect.h/0 | {

"file_path": "engine/lib/ui/painting/rrect.h",

"repo_id": "engine",

"token_count": 523

} | 259 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef FLUTTER_LIB_UI_PLUGINS_CALLBACK_CACHE_H_

#define FLUTTER_LIB_UI_PLUGINS_CALLBACK_CACHE_H_

#include <map>

#include <memory>

#include <mutex>

#include <string>

#include "flutter/fml/macros.h"

#include "third_party/dart/runtime/include/dart_api.h"

namespace flutter {

struct DartCallbackRepresentation {

std::string name;

std::string class_name;

std::string library_path;

};

class DartCallbackCache {

public:

static void SetCachePath(const std::string& path);

static std::string GetCachePath() { return cache_path_; }

static int64_t GetCallbackHandle(const std::string& name,

const std::string& class_name,

const std::string& library_path);

static Dart_Handle GetCallback(int64_t handle);

static std::unique_ptr<DartCallbackRepresentation> GetCallbackInformation(

int64_t handle);

static void LoadCacheFromDisk();

private:

static Dart_Handle LookupDartClosure(const std::string& name,

const std::string& class_name,

const std::string& library_path);

static void SaveCacheToDisk();

static std::mutex mutex_;

static std::string cache_path_;

static std::map<int64_t, DartCallbackRepresentation> cache_;

FML_DISALLOW_IMPLICIT_CONSTRUCTORS(DartCallbackCache);

};

} // namespace flutter

#endif // FLUTTER_LIB_UI_PLUGINS_CALLBACK_CACHE_H_

| engine/lib/ui/plugins/callback_cache.h/0 | {

"file_path": "engine/lib/ui/plugins/callback_cache.h",

"repo_id": "engine",

"token_count": 631

} | 260 |

// Copyright 2013 The Flutter Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

part of dart.ui;

/// Whether to use the italic type variation of glyphs in the font.

///

/// Some modern fonts allow this to be selected in a more fine-grained manner.

/// See [FontVariation.italic] for details.

///

/// Italic type is distinct from slanted glyphs. To control the slant of a

/// glyph, consider the [FontVariation.slant] font variation.

enum FontStyle {

/// Use the upright ("Roman") glyphs.

normal,

/// Use glyphs that have a more pronounced angle and typically a cursive style

/// ("italic type").

italic,

}

/// The thickness of the glyphs used to draw the text.

///

/// Fonts are typically weighted on a 9-point scale, which, for historical

/// reasons, uses the names 100 to 900. In Flutter, these are named `w100` to

/// `w900` and have the following conventional meanings:

///

/// * [w100]: Thin, the thinnest font weight.

///

/// * [w200]: Extra light.

///

/// * [w300]: Light.

///

/// * [w400]: Normal. The constant [FontWeight.normal] is an alias for this value.

///

/// * [w500]: Medium.

///

/// * [w600]: Semi-bold.

///

/// * [w700]: Bold. The constant [FontWeight.bold] is an alias for this value.

///

/// * [w800]: Extra-bold.

///

/// * [w900]: Black, the thickest font weight.

///

/// For example, the font named "Roboto Medium" is typically exposed as a font

/// with the name "Roboto" and the weight [FontWeight.w500].

///

/// Some modern fonts allow the weight to be adjusted in arbitrary increments.

/// See [FontVariation.weight] for details.

class FontWeight {

const FontWeight._(this.index, this.value);

/// The encoded integer value of this font weight.

final int index;

/// The thickness value of this font weight.

final int value;

/// Thin, the least thick.

static const FontWeight w100 = FontWeight._(0, 100);

/// Extra-light.

static const FontWeight w200 = FontWeight._(1, 200);

/// Light.

static const FontWeight w300 = FontWeight._(2, 300);

/// Normal / regular / plain.

static const FontWeight w400 = FontWeight._(3, 400);

/// Medium.

static const FontWeight w500 = FontWeight._(4, 500);

/// Semi-bold.

static const FontWeight w600 = FontWeight._(5, 600);

/// Bold.

static const FontWeight w700 = FontWeight._(6, 700);

/// Extra-bold.

static const FontWeight w800 = FontWeight._(7, 800);

/// Black, the most thick.

static const FontWeight w900 = FontWeight._(8, 900);

/// The default font weight.

static const FontWeight normal = w400;

/// A commonly used font weight that is heavier than normal.

static const FontWeight bold = w700;

/// A list of all the font weights.

static const List<FontWeight> values = <FontWeight>[

w100, w200, w300, w400, w500, w600, w700, w800, w900

];

/// Linearly interpolates between two font weights.

///

/// Rather than using fractional weights, the interpolation rounds to the

/// nearest weight.

///

/// For a smoother animation of font weight, consider using

/// [FontVariation.weight] if the font in question supports it.

///

/// If both `a` and `b` are null, then this method will return null. Otherwise,

/// any null values for `a` or `b` are interpreted as equivalent to [normal]

/// (also known as [w400]).

///

/// The `t` argument represents position on the timeline, with 0.0 meaning

/// that the interpolation has not started, returning `a` (or something

/// equivalent to `a`), 1.0 meaning that the interpolation has finished,

/// returning `b` (or something equivalent to `b`), and values in between

/// meaning that the interpolation is at the relevant point on the timeline

/// between `a` and `b`. The interpolation can be extrapolated beyond 0.0 and

/// 1.0, so negative values and values greater than 1.0 are valid (and can

/// easily be generated by curves such as [Curves.elasticInOut]). The result

/// is clamped to the range [w100]–[w900].

///

/// Values for `t` are usually obtained from an [Animation<double>], such as

/// an [AnimationController].
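///
/// As a rough illustration (a sketch, assuming the rounding and clamping
/// behavior described above), interpolating halfway between the two extremes
/// lands on the middle weight:
///
/// ```dart
/// // Halfway between index 0 (w100) and index 8 (w900) is index 4.
/// final FontWeight? mid =
///     FontWeight.lerp(FontWeight.w100, FontWeight.w900, 0.5);
/// assert(mid == FontWeight.w500);
///
/// // A null endpoint is treated as FontWeight.normal (w400).
/// final FontWeight? end = FontWeight.lerp(null, FontWeight.bold, 1.0);
/// assert(end == FontWeight.w700);
///
/// // Extrapolated values are clamped to the w100 to w900 range.
/// final FontWeight? over =
///     FontWeight.lerp(FontWeight.w100, FontWeight.w900, 2.0);
/// assert(over == FontWeight.w900);
/// ```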

static FontWeight? lerp(FontWeight? a, FontWeight? b, double t) {

if (a == null && b == null) {

return null;

}

return values[_lerpInt((a ?? normal).index, (b ?? normal).index, t).round().clamp(0, 8)];

}

@override

String toString() {

return const <int, String>{

0: 'FontWeight.w100',

1: 'FontWeight.w200',

2: 'FontWeight.w300',

3: 'FontWeight.w400',

4: 'FontWeight.w500',

5: 'FontWeight.w600',

6: 'FontWeight.w700',

7: 'FontWeight.w800',

8: 'FontWeight.w900',

}[index]!;

}

}

/// A feature tag and value that affect the selection of glyphs in a font.

///

/// Different fonts support different features. Consider using a tool

/// such as <https://wakamaifondue.com/> to examine your fonts to

/// determine what features are available.

///

/// {@tool sample}

/// This example shows usage of several OpenType font features,

/// including Small Caps (selected manually using the "smcp" code),

/// old-style figures, fractional ligatures, and stylistic sets.

///

/// ** See code in examples/api/lib/ui/text/font_feature.0.dart **

/// {@end-tool}

///

/// Some fonts also support continuous font variations; see the [FontVariation]

/// class.

///

/// See also:

///

/// * <https://en.wikipedia.org/wiki/List_of_typographic_features>,

/// Wikipedia's description of these typographic features.

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/featuretags>,

/// Microsoft's registry of these features.

class FontFeature {

/// Creates a [FontFeature] object, which can be added to a [TextStyle] to

/// change how the engine selects glyphs when rendering text.

///

/// `feature` is the four-character tag that identifies the feature.

/// These tags are specified by font formats such as OpenType.

///

/// `value` is the value that the feature will be set to. The behavior

/// of the value depends on the specific feature. Many features are

/// flags whose value can be 1 (when enabled) or 0 (when disabled).

///

/// See <https://docs.microsoft.com/en-us/typography/opentype/spec/featuretags>
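///
/// For instance (a minimal sketch; `smcp` and `liga` are tags from the
/// OpenType registry, and whether they have any visible effect depends on
/// the font in use):
///
/// ```dart
/// // The value defaults to 1, which enables a flag-style feature.
/// const FontFeature smallCaps = FontFeature('smcp');
///
/// // Passing 0 explicitly disables a flag-style feature.
/// const FontFeature noLigatures = FontFeature('liga', 0);
/// ```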

const FontFeature(

this.feature,

[ this.value = 1 ]

) : assert(feature.length == 4, 'Feature tag must be exactly four characters long.'),

assert(value >= 0, 'Feature value must be zero or a positive integer.');

/// Create a [FontFeature] object that enables the feature with the given tag.

const FontFeature.enable(String feature) : this(feature, 1);

/// Create a [FontFeature] object that disables the feature with the given tag.

const FontFeature.disable(String feature) : this(feature, 0);

// Features below should be alphabetic by feature tag. This makes it

// easier to determine when a feature is missing so that we avoid

// adding duplicates.

//

// The full list is extremely long, and many of the features are

// language-specific, or indeed force-enabled for particular locales

// by HarfBuzz, so we don't even attempt to be comprehensive here.

// Features listed below are those we deemed "interesting enough" to

// have their own constructor, mostly on the basis of whether we

// could find a font where the feature had a useful effect that

// could be demonstrated.

// Start of feature tag list.

// ------------------------------------------------------------------------

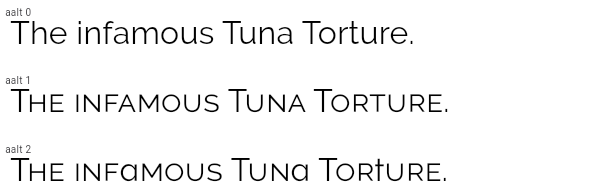

/// Access alternative glyphs. (`aalt`)

///

/// This feature selects the given glyph variant for glyphs in the span.

///

/// {@tool sample}

/// The Raleway font supports several alternate glyphs. The code

/// below shows how specific glyphs can be selected. With `aalt` set

/// to zero, the default, the normal glyphs are used. With a

/// non-zero value, Raleway substitutes small caps for lower case

/// letters. With value 2, the lowercase "a" changes to a stemless

/// "a", whereas the lowercase "t" changes to a vertical bar instead

/// of having a curve. By targeting specific letters in the text

/// (using [widgets.Text.rich]), the desired rendering for each glyph can be

/// achieved.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_alternative.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#aalt>

const FontFeature.alternative(this.value) : feature = 'aalt';

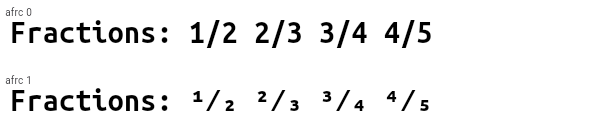

/// Use alternative ligatures to represent fractions. (`afrc`)

///

/// When this feature is enabled (and the font supports it),

/// sequences of digits separated by U+002F SOLIDUS character (/) or

/// U+2044 FRACTION SLASH (⁄) are replaced by ligatures that

/// represent the corresponding fraction. These ligatures may differ

/// from those used by the [FontFeature.fractions] feature.

///

/// This feature overrides all other features.

///

/// {@tool sample}

/// The Ubuntu Mono font supports the `afrc` feature. It causes digits

/// before slashes to become superscripted and digits after slashes to become

/// subscripted. This contrasts to the effect seen with [FontFeature.fractions].

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_alternative_fractions.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * [FontFeature.fractions], which has a similar (but different) effect.

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#afrc>

const FontFeature.alternativeFractions() : feature = 'afrc', value = 1;

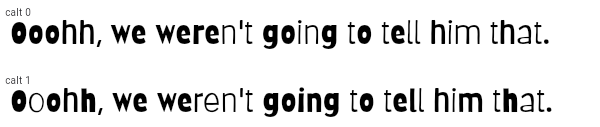

/// Enable contextual alternates. (`calt`)

///

/// With this feature enabled, specific glyphs may be replaced by

/// alternatives based on nearby text.

///

/// {@tool sample}

/// The Barriecito font supports the `calt` feature. It causes some

/// letters in close proximity to other instances of themselves to

/// use different glyphs, to give the appearance of more variation

/// in the glyphs, rather than having each letter always use a

/// particular glyph.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_contextual_alternates.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * [FontFeature.randomize], which is a more rarely supported but more

/// powerful way to get a similar effect.

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#calt>

const FontFeature.contextualAlternates() : feature = 'calt', value = 1;

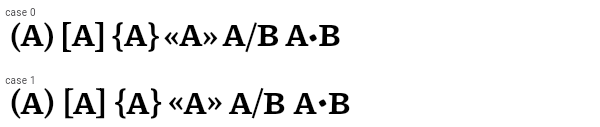

/// Enable case-sensitive forms. (`case`)

///

/// Some glyphs, for example parentheses or operators, are typically

/// designed to fit nicely with mixed case, or even predominantly

/// lowercase, text. When these glyphs are placed near strings of

/// capital letters, they appear a little off-center.

///

/// This feature, when supported by the font, causes these glyphs to

/// be shifted slightly, or otherwise adjusted, so as to form a more

/// aesthetically pleasing combination with capital letters.

///

/// {@tool sample}

/// The Piazzolla font supports the `case` feature. It causes

/// parentheses, brackets, braces, guillemets, slashes, bullets, and

/// some other glyphs (not shown below) to be shifted up slightly so

/// that capital letters appear centered in comparison. When the

/// feature is disabled, those glyphs are optimized for use with

/// lowercase letters, and so capital letters appear to ride higher

/// relative to the punctuation marks.

///

/// The difference is very subtle. It may be most obvious when

/// examining the square brackets compared to the capital A.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_case_sensitive_forms.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#case>

const FontFeature.caseSensitiveForms() : feature = 'case', value = 1;

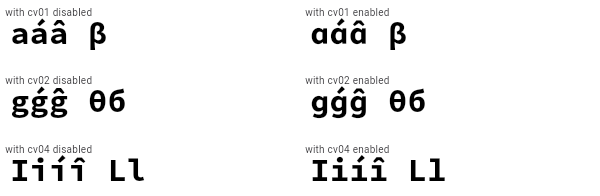

/// Select a character variant. (`cv01` through `cv99`)

///

/// Fonts may have up to 99 character variant sets, numbered 1

/// through 99, each of which can be independently enabled or

/// disabled.

///

/// Related character variants are typically grouped into stylistic

/// sets, controlled by the [FontFeature.stylisticSet] feature

/// (`ssXX`).

///

/// {@tool sample}

/// The Source Code Pro font supports the `cvXX` feature for several

/// characters. In the example below, variants 1 (`cv01`), 2

/// (`cv02`), and 4 (`cv04`) are selected. Variant 1 changes the

/// rendering of the "a" character, variant 2 changes the lowercase

/// "g" character, and variant 4 changes the lowercase "i" and "l"

/// characters. There are also variants (not shown here) that

/// control the rendering of various Greek characters such as beta

/// and theta.

///

/// Notably, this can be contrasted with the stylistic sets, where

/// the set which affects the "a" character also affects beta, and

/// the set which affects the "g" character also affects theta and

/// delta.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_character_variant.0.dart **

/// {@end-tool}
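///
/// As a sketch of the tag this factory builds (based on the zero-padding in
/// the implementation below), selecting variant 4 yields the `cv04` tag with
/// the default value of 1:
///
/// ```dart
/// final FontFeature variant = FontFeature.characterVariant(4);
/// assert(variant.feature == 'cv04');
/// assert(variant.value == 1);
/// ```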

///

/// See also:

///

/// * [FontFeature.stylisticSet], which allows for groups of character

/// variants to be selected at once, as opposed to individual character variants.

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#cv01-cv99>

factory FontFeature.characterVariant(int value) {

assert(value >= 1);

assert(value <= 99);

return FontFeature('cv${value.toString().padLeft(2, "0")}');

}

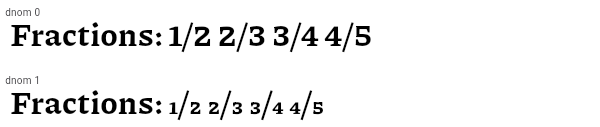

/// Display digits as denominators. (`dnom`)

///

/// This is typically used automatically by the font rendering

/// system as part of the implementation of `frac` for the denominator

/// part of fractions (see [FontFeature.fractions]).

///

/// {@tool sample}

/// The Piazzolla font supports the `dnom` feature. It causes

/// the digits to be rendered smaller and near the bottom of the EM box.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_denominator.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ae#dnom>

const FontFeature.denominator() : feature = 'dnom', value = 1;

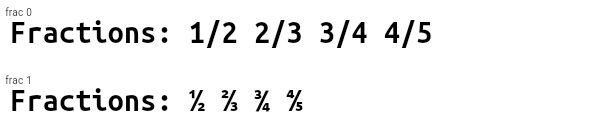

/// Use ligatures to represent fractions. (`afrc`)

///

/// When this feature is enabled (and the font supports it),

/// sequences of digits separated by U+002F SOLIDUS character (/) or

/// U+2044 FRACTION SLASH (⁄) are replaced by ligatures that

/// represent the corresponding fraction.

///

/// This feature may imply the [FontFeature.numerators] and

/// [FontFeature.denominator] features.

///

/// {@tool sample}

/// The Ubuntu Mono font supports the `frac` feature. It causes

/// digits around slashes to be turned into dedicated fraction

/// glyphs. This contrasts to the effect seen with

/// [FontFeature.alternativeFractions].

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_fractions.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * [FontFeature.alternativeFractions], which has a similar (but different) effect.

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_fj#frac>

const FontFeature.fractions() : feature = 'frac', value = 1;

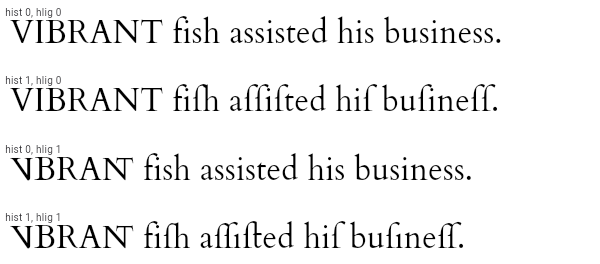

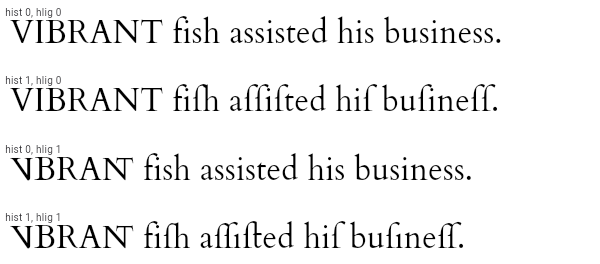

/// Use historical forms. (`hist`)

///

/// Some fonts have alternatives for letters whose forms have changed

/// through the ages. In the Latin alphabet, this is common for

/// example with the long-form "s" or the Fraktur "k". This feature enables

/// those alternative glyphs.

///

/// This does not enable legacy ligatures, only single-character alternatives.

/// To enable historical ligatures, use [FontFeature.historicalLigatures].

///

/// This feature may override other glyph-substitution features.

///

/// {@tool sample}

/// The Cardo font supports the `hist` feature specifically for the

/// letter "s": it changes occurrences of that letter for the glyph

/// used by U+017F LATIN SMALL LETTER LONG S.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_historical_forms.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_fj#hist>

const FontFeature.historicalForms() : feature = 'hist', value = 1;

/// Use historical ligatures. (`hlig`)

///

/// Some fonts support ligatures that have fallen out of favor today,

/// but were historically in common use. This feature enables those

/// ligatures.

///

/// For example, the "long s" glyph was historically typeset with

/// characters such as "t" and "h" as a single ligature.

///

/// This does not enable the legacy forms, only ligatures. See

/// [FontFeature.historicalForms] to enable single characters to be

/// replaced with their historical alternatives. Combining both is

/// usually desired since the ligatures typically apply specifically

/// to characters that have historical forms as well. For example,

/// the historical forms feature might replace the "s" character

/// with the "long s" (ſ) character, while the historical ligatures

/// feature might specifically apply to cases where "long s" is

/// followed by other characters such as "t". In such cases, without

/// the historical forms being enabled, the ligatures would only

/// apply when the "long s" is used explicitly.

///

/// This feature may override other glyph-substitution features.

///

/// {@tool sample}

/// The Cardo font supports the `hlig` feature. It has legacy

/// ligatures for "VI" and "NT", and various ligatures involving the

/// "long s". In the example below, both historical forms (`hist 1`)

/// and historical ligatures (`hlig 1`) are enabled, so, for

/// instance, "fish" becomes "fiſh" which is then rendered using a

/// ligature for the last two characters.

///

/// Similarly, the word "business" is turned into "buſineſſ" by

/// `hist`, and the `ſi` and `ſſ` pairs are ligated by `hlig`.

/// Observe in particular the position of the dot of the "i" in

/// "business" in the various combinations of these features.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_historical_ligatures.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_fj#hlig>

const FontFeature.historicalLigatures() : feature = 'hlig', value = 1;

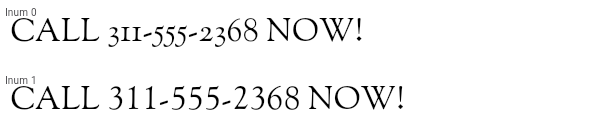

/// Use lining figures. (`lnum`)

///

/// Some fonts have digits that, like lowercase Latin letters, have

/// both descenders and ascenders. In some situations, especially in

/// conjunction with capital letters, this leads to an aesthetically

/// questionable irregularity. Lining figures, on the other hand,

/// have a uniform height, and align with the baseline and the

/// height of capital letters. Conceptually, they can be thought of

/// as "capital digits".

///

/// This feature may conflict with [FontFeature.oldstyleFigures].

///

/// {@tool sample}

/// The Sorts Mill Goudy font supports the `lnum` feature. It causes

/// digits to fit more seamlessly with capital letters.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_lining_figures.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#lnum>

const FontFeature.liningFigures() : feature = 'lnum', value = 1;

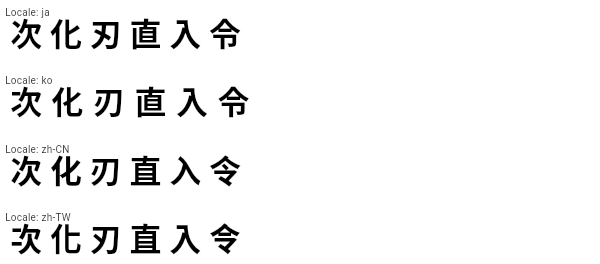

/// Use locale-specific glyphs. (`locl`)

///

/// Some characters, most notably those in the Unicode Han

/// Unification blocks, vary in presentation based on the locale in

/// use. For example, the ideograph for "grass" (U+8349, 草) has a

/// broken top line in Traditional Chinese, but a solid top line in

/// Simplified Chinese, Japanese, Korean, and Vietnamese. This kind

/// of variation also exists with other alphabets, for example

/// Cyrillic characters as used in the Bulgarian and Serbian

/// alphabets vary from their Russian counterparts.

///

/// A particular font may default to the forms for the locale for

/// which it was constructed, but still support alternative forms

/// for other locales. When this feature is enabled, the locale (as

/// specified using [painting.TextStyle.locale], for instance) is

/// used to determine which glyphs to use when locale-specific

/// alternatives exist. Disabling this feature causes the font

/// rendering to ignore locale information and only use the default

/// glyphs.

///

/// This feature is enabled by default. Using

/// `FontFeature.localeAware(enable: false)` disables

/// locale-awareness. (Omitting the locale in the first place has

/// the same effect.)
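///
/// As a minimal sketch of the behavior described above (reading the
/// `feature` and `value` fields directly is purely for illustration),
/// the `enable` argument maps to a `locl` value of 1 or 0:
///
/// ```dart
/// import 'dart:ui';
///
/// void main() {
///   // Enabled by default: `locl` is set to 1.
///   const FontFeature enabled = FontFeature.localeAware();
///   // Explicitly disabled: `locl` is set to 0.
///   const FontFeature disabled = FontFeature.localeAware(enable: false);
///   print('${enabled.feature}=${enabled.value}'); // locl=1
///   print('${disabled.feature}=${disabled.value}'); // locl=0
/// }
/// ```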

///

/// {@tool sample}

/// The Noto Sans CJK font supports the `locl` feature for CJK characters.

/// In this example, the `localeAware` feature is not explicitly used, as it is

/// enabled by default. This example instead shows how to set the locale,

/// thus demonstrating how Noto Sans adapts the glyph shapes to the locale.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_locale_aware.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#locl>

/// * <https://en.wikipedia.org/wiki/Han_unification>

/// * <https://en.wikipedia.org/wiki/Cyrillic_script>

const FontFeature.localeAware({ bool enable = true }) : feature = 'locl', value = enable ? 1 : 0;

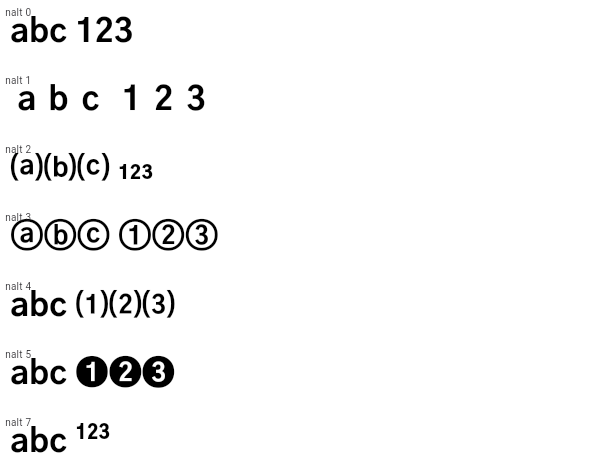

/// Display alternative glyphs for numerals (alternate annotation forms). (`nalt`)

///

/// Replaces glyphs used in numbering lists (e.g. 1, 2, 3...; or a, b, c...) with notational

/// variants that might be more typographically interesting.

///

/// Fonts sometimes support multiple alternatives, and the argument

/// selects the set to use (a positive integer, or 0 to disable the

/// feature). The default set if none is specified is 1.
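///
/// A minimal sketch of how the argument selects a set. The set numbers
/// used here are illustrative only; which sets exist depends on the font:
///
/// ```dart
/// import 'dart:ui';
///
/// void main() {
///   // No argument: the default set, 1.
///   const FontFeature defaultSet = FontFeature.notationalForms();
///   // A positive integer selects that set, if the font provides it.
///   const FontFeature thirdSet = FontFeature.notationalForms(3);
///   // Zero disables the feature.
///   const FontFeature off = FontFeature.notationalForms(0);
///   print('${defaultSet.feature}=${defaultSet.value}'); // nalt=1
///   print('${thirdSet.feature}=${thirdSet.value}'); // nalt=3
///   print('${off.feature}=${off.value}'); // nalt=0
/// }
/// ```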

///

/// {@tool sample}

/// The Gothic A1 font supports several notational variant sets via

/// the `nalt` feature.

///

/// Set 1 changes the spacing of the glyphs. Set 2 parenthesizes the

/// Latin letters and reduces the numerals to subscripts. Set 3

/// circles the glyphs. Set 4 parenthesizes the digits. Set 5 uses

/// reverse-video circles for the digits. Set 7 superscripts the

/// digits.

///

/// The code below shows how to select set 3.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_notational_forms.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#nalt>

const FontFeature.notationalForms([this.value = 1]) : feature = 'nalt', assert(value >= 0);

/// Display digits as numerators. (`numr`)

///

/// This is typically used automatically by the font rendering

/// system as part of the implementation of `frac` for the numerator

/// part of fractions (see [FontFeature.fractions]).

///

/// {@tool sample}

/// The Piazzolla font supports the `numr` feature. It causes

/// the digits to be rendered smaller and near the top of the EM box.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_numerators.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#numr>

const FontFeature.numerators() : feature = 'numr', value = 1;

/// Use old style figures. (`onum`)

///

/// Some fonts have variants of the figures (e.g. the digit 9) that,

/// when this feature is enabled, render with descenders under the

/// baseline instead of being entirely above the baseline. If the

/// default digits are lining figures, this allows the selection of

/// digits that fit better with mixed case (uppercase and lowercase)

/// text.

///

/// This overrides [FontFeature.slashedZero] and may conflict with

/// [FontFeature.liningFigures].

///

/// {@tool sample}

/// The Piazzolla font supports the `onum` feature. It causes

/// digits to extend below the baseline.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_oldstyle_figures.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#onum>

/// * <https://en.wikipedia.org/wiki/Text_figures>

const FontFeature.oldstyleFigures() : feature = 'onum', value = 1;

/// Use ordinal forms for alphabetic glyphs. (`ordn`)

///

/// Some fonts have variants of the alphabetic glyphs intended for

/// use after numbers when expressing ordinals, as in "1st", "2nd",

/// "3rd". This feature enables those alternative glyphs.

///

/// This may override other features that substitute glyphs.

///

/// {@tool sample}

/// The Piazzolla font supports the `ordn` feature. It causes

/// alphabetic glyphs to become smaller and superscripted.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_ordinal_forms.0.dart **

/// {@end-tool}

///

/// See also:

///

/// * <https://docs.microsoft.com/en-us/typography/opentype/spec/features_ko#ordn>

const FontFeature.ordinalForms() : feature = 'ordn', value = 1;

/// Use proportional (varying width) figures. (`pnum`)

///

/// For fonts that have both proportional and tabular (monospace) figures,

/// this enables the proportional figures.

///

/// This is mutually exclusive with [FontFeature.tabularFigures].

///

/// The default behavior varies from font to font.

///

/// {@tool sample}

/// The Kufam font supports the `pnum` feature. It causes the digits

/// to become proportionally sized, rather than all being the same

/// width. In this font this is especially noticeable with the digit

/// "1": normally, the 1 has very noticeable serifs in this

/// sans-serif font, but with the proportional figures enabled,

/// the digit becomes much narrower.

///

///

///

/// ** See code in examples/api/lib/ui/text/font_feature.font_feature_proportional_figures.0.dart **

/// {@end-tool}

///

/// See also:

///