modelId

stringlengths 4

112

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 21

values | files

list | publishedBy

stringlengths 2

37

| downloads_last_month

int32 0

9.44M

| library

stringclasses 15

values | modelCard

large_stringlengths 0

100k

|

|---|---|---|---|---|---|---|---|---|

TransQuest/siamesetransquest-da-ru_en-reddit_wikiquotes | 2021-06-04T11:16:08.000Z | [

"pytorch",

"xlm-roberta",

"ru-en",

"transformers",

"Quality Estimation",

"siamesetransquest",

"da",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"model_args.json",

"modules.json",

"pooling_config.json",

"pytorch_model.bin",

"sentence_bert_config.json",

"sentencepiece.bpe.model",

"special_tokens_map.json",

"tokenizer_config.json"

]

| TransQuest | 28 | transformers | ---

language: ru-en

tags:

- Quality Estimation

- siamesetransquest

- da

license: apache-2.0

---

# TransQuest: Translation Quality Estimation with Cross-lingual Transformers

The goal of quality estimation (QE) is to evaluate the quality of a translation without having access to a reference translation. High-accuracy QE that can be easily deployed for a number of language pairs is the missing piece in many commercial translation workflows as they have numerous potential uses. They can be employed to select the best translation when several translation engines are available or can inform the end user about the reliability of automatically translated content. In addition, QE systems can be used to decide whether a translation can be published as it is in a given context, or whether it requires human post-editing before publishing or translation from scratch by a human. The quality estimation can be done at different levels: document level, sentence level and word level.

With TransQuest, we have opensourced our research in translation quality estimation which also won the sentence-level direct assessment quality estimation shared task in [WMT 2020](http://www.statmt.org/wmt20/quality-estimation-task.html). TransQuest outperforms current open-source quality estimation frameworks such as [OpenKiwi](https://github.com/Unbabel/OpenKiwi) and [DeepQuest](https://github.com/sheffieldnlp/deepQuest).

## Features

- Sentence-level translation quality estimation on both aspects: predicting post editing efforts and direct assessment.

- Word-level translation quality estimation capable of predicting quality of source words, target words and target gaps.

- Outperform current state-of-the-art quality estimation methods like DeepQuest and OpenKiwi in all the languages experimented.

- Pre-trained quality estimation models for fifteen language pairs are available in [HuggingFace.](https://huggingface.co/TransQuest)

## Installation

### From pip

```bash

pip install transquest

```

### From Source

```bash

git clone https://github.com/TharinduDR/TransQuest.git

cd TransQuest

pip install -r requirements.txt

```

## Using Pre-trained Models

```python

import torch

from transquest.algo.sentence_level.siamesetransquest.run_model import SiameseTransQuestModel

model = SiameseTransQuestModel("TransQuest/siamesetransquest-da-ru_en-reddit_wikiquotes")

predictions = model.predict([["Reducerea acestor conflicte este importantă pentru conservare.", "Reducing these conflicts is not important for preservation."]])

print(predictions)

```

## Documentation

For more details follow the documentation.

1. **[Installation](https://tharindudr.github.io/TransQuest/install/)** - Install TransQuest locally using pip.

2. **Architectures** - Checkout the architectures implemented in TransQuest

1. [Sentence-level Architectures](https://tharindudr.github.io/TransQuest/architectures/sentence_level_architectures/) - We have released two architectures; MonoTransQuest and SiameseTransQuest to perform sentence level quality estimation.

2. [Word-level Architecture](https://tharindudr.github.io/TransQuest/architectures/word_level_architecture/) - We have released MicroTransQuest to perform word level quality estimation.

3. **Examples** - We have provided several examples on how to use TransQuest in recent WMT quality estimation shared tasks.

1. [Sentence-level Examples](https://tharindudr.github.io/TransQuest/examples/sentence_level_examples/)

2. [Word-level Examples](https://tharindudr.github.io/TransQuest/examples/word_level_examples/)

4. **Pre-trained Models** - We have provided pretrained quality estimation models for fifteen language pairs covering both sentence-level and word-level

1. [Sentence-level Models](https://tharindudr.github.io/TransQuest/models/sentence_level_pretrained/)

2. [Word-level Models](https://tharindudr.github.io/TransQuest/models/word_level_pretrained/)

5. **[Contact](https://tharindudr.github.io/TransQuest/contact/)** - Contact us for any issues with TransQuest

## Citations

If you are using the word-level architecture, please consider citing this paper which is accepted to [ACL 2021](https://2021.aclweb.org/).

```bash

@InProceedings{ranasinghe2021,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {An Exploratory Analysis of Multilingual Word Level Quality Estimation with Cross-Lingual Transformers},

booktitle = {Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics},

year = {2021}

}

```

If you are using the sentence-level architectures, please consider citing these papers which were presented in [COLING 2020](https://coling2020.org/) and in [WMT 2020](http://www.statmt.org/wmt20/) at EMNLP 2020.

```bash

@InProceedings{transquest:2020a,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest: Translation Quality Estimation with Cross-lingual Transformers},

booktitle = {Proceedings of the 28th International Conference on Computational Linguistics},

year = {2020}

}

```

```bash

@InProceedings{transquest:2020b,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest at WMT2020: Sentence-Level Direct Assessment},

booktitle = {Proceedings of the Fifth Conference on Machine Translation},

year = {2020}

}

```

|

|

TransQuest/siamesetransquest-da-si_en-wiki | 2021-06-04T11:15:08.000Z | [

"pytorch",

"xlm-roberta",

"si-en",

"transformers",

"Quality Estimation",

"siamesetransquest",

"da",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"model_args.json",

"modules.json",

"pooling_config.json",

"pytorch_model.bin",

"sentence_bert_config.json",

"sentencepiece.bpe.model",

"special_tokens_map.json",

"tokenizer_config.json"

]

| TransQuest | 18 | transformers | ---

language: si-en

tags:

- Quality Estimation

- siamesetransquest

- da

license: apache-2.0

---

# TransQuest: Translation Quality Estimation with Cross-lingual Transformers

The goal of quality estimation (QE) is to evaluate the quality of a translation without having access to a reference translation. High-accuracy QE that can be easily deployed for a number of language pairs is the missing piece in many commercial translation workflows as they have numerous potential uses. They can be employed to select the best translation when several translation engines are available or can inform the end user about the reliability of automatically translated content. In addition, QE systems can be used to decide whether a translation can be published as it is in a given context, or whether it requires human post-editing before publishing or translation from scratch by a human. The quality estimation can be done at different levels: document level, sentence level and word level.

With TransQuest, we have opensourced our research in translation quality estimation which also won the sentence-level direct assessment quality estimation shared task in [WMT 2020](http://www.statmt.org/wmt20/quality-estimation-task.html). TransQuest outperforms current open-source quality estimation frameworks such as [OpenKiwi](https://github.com/Unbabel/OpenKiwi) and [DeepQuest](https://github.com/sheffieldnlp/deepQuest).

## Features

- Sentence-level translation quality estimation on both aspects: predicting post editing efforts and direct assessment.

- Word-level translation quality estimation capable of predicting quality of source words, target words and target gaps.

- Outperform current state-of-the-art quality estimation methods like DeepQuest and OpenKiwi in all the languages experimented.

- Pre-trained quality estimation models for fifteen language pairs are available in [HuggingFace.](https://huggingface.co/TransQuest)

## Installation

### From pip

```bash

pip install transquest

```

### From Source

```bash

git clone https://github.com/TharinduDR/TransQuest.git

cd TransQuest

pip install -r requirements.txt

```

## Using Pre-trained Models

```python

import torch

from transquest.algo.sentence_level.siamesetransquest.run_model import SiameseTransQuestModel

model = SiameseTransQuestModel("TransQuest/siamesetransquest-da-si_en-wiki")

predictions = model.predict([["Reducerea acestor conflicte este importantă pentru conservare.", "Reducing these conflicts is not important for preservation."]])

print(predictions)

```

## Documentation

For more details follow the documentation.

1. **[Installation](https://tharindudr.github.io/TransQuest/install/)** - Install TransQuest locally using pip.

2. **Architectures** - Checkout the architectures implemented in TransQuest

1. [Sentence-level Architectures](https://tharindudr.github.io/TransQuest/architectures/sentence_level_architectures/) - We have released two architectures; MonoTransQuest and SiameseTransQuest to perform sentence level quality estimation.

2. [Word-level Architecture](https://tharindudr.github.io/TransQuest/architectures/word_level_architecture/) - We have released MicroTransQuest to perform word level quality estimation.

3. **Examples** - We have provided several examples on how to use TransQuest in recent WMT quality estimation shared tasks.

1. [Sentence-level Examples](https://tharindudr.github.io/TransQuest/examples/sentence_level_examples/)

2. [Word-level Examples](https://tharindudr.github.io/TransQuest/examples/word_level_examples/)

4. **Pre-trained Models** - We have provided pretrained quality estimation models for fifteen language pairs covering both sentence-level and word-level

1. [Sentence-level Models](https://tharindudr.github.io/TransQuest/models/sentence_level_pretrained/)

2. [Word-level Models](https://tharindudr.github.io/TransQuest/models/word_level_pretrained/)

5. **[Contact](https://tharindudr.github.io/TransQuest/contact/)** - Contact us for any issues with TransQuest

## Citations

If you are using the word-level architecture, please consider citing this paper which is accepted to [ACL 2021](https://2021.aclweb.org/).

```bash

@InProceedings{ranasinghe2021,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {An Exploratory Analysis of Multilingual Word Level Quality Estimation with Cross-Lingual Transformers},

booktitle = {Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics},

year = {2021}

}

```

If you are using the sentence-level architectures, please consider citing these papers which were presented in [COLING 2020](https://coling2020.org/) and in [WMT 2020](http://www.statmt.org/wmt20/) at EMNLP 2020.

```bash

@InProceedings{transquest:2020a,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest: Translation Quality Estimation with Cross-lingual Transformers},

booktitle = {Proceedings of the 28th International Conference on Computational Linguistics},

year = {2020}

}

```

```bash

@InProceedings{transquest:2020b,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest at WMT2020: Sentence-Level Direct Assessment},

booktitle = {Proceedings of the Fifth Conference on Machine Translation},

year = {2020}

}

```

|

|

TristaNi/MAPC | 2021-02-08T20:10:44.000Z | []

| [

".gitattributes"

]

| TristaNi | 0 | |||

TsinghuaAI/CPM-Generate | 2021-05-21T11:34:56.000Z | [

"pytorch",

"tf",

"gpt2",

"lm-head",

"causal-lm",

"zh",

"dataset:100GB Chinese corpus",

"arxiv:2012.00413",

"transformers",

"cpm",

"license:mit",

"text-generation"

]

| text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"spiece.model",

"tf_model.h5"

]

| TsinghuaAI | 288 | transformers | ---

language:

- zh

tags:

- cpm

license: mit

datasets:

- 100GB Chinese corpus

---

# CPM-Generate

## Model description

CPM (Chinese Pre-trained Language Model) is a Transformer-based autoregressive language model, with 2.6 billion parameters and 100GB Chinese training data. To the best of our knowledge, CPM is the largest Chinese pre-trained language model, which could facilitate downstream Chinese NLP tasks, such as conversation, essay generation, cloze test, and language understanding. [[Project](https://cpm.baai.ac.cn)] [[Model](https://cpm.baai.ac.cn/download.html)] [[Paper](https://arxiv.org/abs/2012.00413)]

## Intended uses & limitations

#### How to use

```python

from transformers import TextGenerationPipeline, AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("TsinghuaAI/CPM-Generate")

model = AutoModelWithLMHead.from_pretrained("TsinghuaAI/CPM-Generate")

text_generator = TextGenerationPipeline(model, tokenizer)

text_generator('清华大学', max_length=50, do_sample=True, top_p=0.9)

```

#### Limitations and bias

The text generated by CPM is automatically generated by a neural network model trained on a large number of texts, which does not represent the authors' or their institutes' official attitudes and preferences. The text generated by CPM is only used for technical and scientific purposes. If it infringes on your rights and interests or violates social morality, please do not propagate it, but contact the authors and the authors will deal with it promptly.

## Training data

We collect different kinds of texts in our pre-training, including encyclopedia, news, novels, and Q\&A. The details of our training data are shown as follows.

| Data Source | Encyclopedia | Webpage | Story | News | Dialog |

| ----------- | ------------ | ------- | ----- | ----- | ------ |

| **Size** | ~40GB | ~39GB | ~10GB | ~10GB | ~1GB |

## Training procedure

Based on the hyper-parameter searching on the learning rate and batch size, we set the learning rate as \\(1.5\times10^{-4}\\) and the batch size as \\(3,072\\), which makes the model training more stable. In the first version, we still adopt the dense attention and the max sequence length is \\(1,024\\). We will implement sparse attention in the future. We pre-train our model for \\(20,000\\) steps, and the first \\(5,000\\) steps are for warm-up. The optimizer is Adam. It takes two weeks to train our largest model using \\(64\\) NVIDIA V100.

## Eval results

| | n_param | n_layers | d_model | n_heads | d_head |

|------------|-------------------:|--------------------:|-------------------:|-------------------:|------------------:|

| CPM-Small | 109M | 12 | 768 | 12 | 64 |

| CPM-Medium | 334M | 24 | 1,024 | 16 | 64 |

| CPM-Large | 2.6B | 32 | 2,560 | 32 | 80 |

We evaluate CPM with different numbers of parameters (the details are shown above) on various Chinese NLP tasks in the few-shot (even zero-shot) settings. With the increase of parameters, CPM performs better on most datasets, indicating that larger models are more proficient at language generation and language understanding. We provide results of text classification, chinese idiom cloze test, and short text conversation generation as follows. Please refer to our [paper](https://arxiv.org/abs/2012.00413) for more detailed results.

### Zero-shot performance on text classification tasks

| | TNEWS | IFLYTEK | OCNLI |

| ---------- | :------------: | :------------: | :------------: |

| CPM-Small | 0.626 | 0.584 | 0.378 |

| CPM-Medium | 0.618 | 0.635 | 0.379 |

| CPM-Large | **0.703** | **0.708** | **0.442** |

### Performance on Chinese Idiom Cloze (ChID) dataset

| | Supervised | Unsupervised |

|------------|:--------------:|:--------------:|

| CPM-Small | 0.657 | 0.433 |

| CPM-Medium | 0.695 | 0.524 |

| CPM-Large | **0.804** | **0.685** |

### Performance on Short Text Conversation Generation (STC) dataset

| | Average | Extrema | Greedy | Dist-1 | Dist-2 |

|----------------------------------|:--------------:|:--------------:|:--------------:|:-------------------------------:|:--------------------------------:|

| *Few-shot (Unsupervised)* | | | | | |

| CDial-GPT | 0.899 | 0.797 | 0.810 | 1,963 / **0.011** | 20,814 / 0.126 |

| CPM-Large | **0.928** | **0.805** | **0.815** | **3,229** / 0.007 | **68,008** / **0.154** |

| *Supervised* | | | | | |

| CDial-GPT | 0.933 | **0.814** | **0.826** | 2,468 / 0.008 | 35,634 / 0.127 |

| CPM-Large | **0.934** | 0.810 | 0.819 | **3,352** / **0.011** | **67,310** / **0.233** |

### BibTeX entry and citation info

```bibtex

@article{cpm-v1,

title={CPM: A Large-scale Generative Chinese Pre-trained Language Model},

author={Zhang, Zhengyan and Han, Xu, and Zhou, Hao, and Ke, Pei, and Gu, Yuxian and Ye, Deming and Qin, Yujia and Su, Yusheng and Ji, Haozhe and Guan, Jian and Qi, Fanchao and Wang, Xiaozhi and Zheng, Yanan and Zeng, Guoyang and Cao, Huanqi and Chen, Shengqi and Li, Daixuan and Sun, Zhenbo and Liu, Zhiyuan and Huang, Minlie and Han, Wentao and Tang, Jie and Li, Juanzi and Sun, Maosong},

year={2020}

}

``` |

TurkuNLP/bert-base-finnish-cased-v1 | 2021-05-18T22:45:33.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"masked-lm",

"fi",

"arxiv:1912.07076",

"arxiv:1908.04212",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"README.md",

"config.json",

"flax_model.msgpack",

"pytorch_model.bin",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| TurkuNLP | 21,786 | transformers | ---

language: fi

---

## Quickstart

**Release 1.0** (November 25, 2019)

Download the models here:

* Cased Finnish BERT Base: [bert-base-finnish-cased-v1.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-cased-v1.zip)

* Uncased Finnish BERT Base: [bert-base-finnish-uncased-v1.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-uncased-v1.zip)

We generally recommend the use of the cased model.

Paper presenting Finnish BERT: [arXiv:1912.07076](https://arxiv.org/abs/1912.07076)

## What's this?

A version of Google's [BERT](https://github.com/google-research/bert) deep transfer learning model for Finnish. The model can be fine-tuned to achieve state-of-the-art results for various Finnish natural language processing tasks.

FinBERT features a custom 50,000 wordpiece vocabulary that has much better coverage of Finnish words than e.g. the previously released [multilingual BERT](https://github.com/google-research/bert/blob/master/multilingual.md) models from Google:

| Vocabulary | Example |

|------------|---------|

| FinBERT | Suomessa vaihtuu kesän aikana sekä pääministeri että valtiovarain ##ministeri . |

| Multilingual BERT | Suomessa vai ##htuu kes ##än aikana sekä p ##ää ##minister ##i että valt ##io ##vara ##in ##minister ##i . |

FinBERT has been pre-trained for 1 million steps on over 3 billion tokens (24B characters) of Finnish text drawn from news, online discussion, and internet crawls. By contrast, Multilingual BERT was trained on Wikipedia texts, where the Finnish Wikipedia text is approximately 3% of the amount used to train FinBERT.

These features allow FinBERT to outperform not only Multilingual BERT but also all previously proposed models when fine-tuned for Finnish natural language processing tasks.

## Results

### Document classification

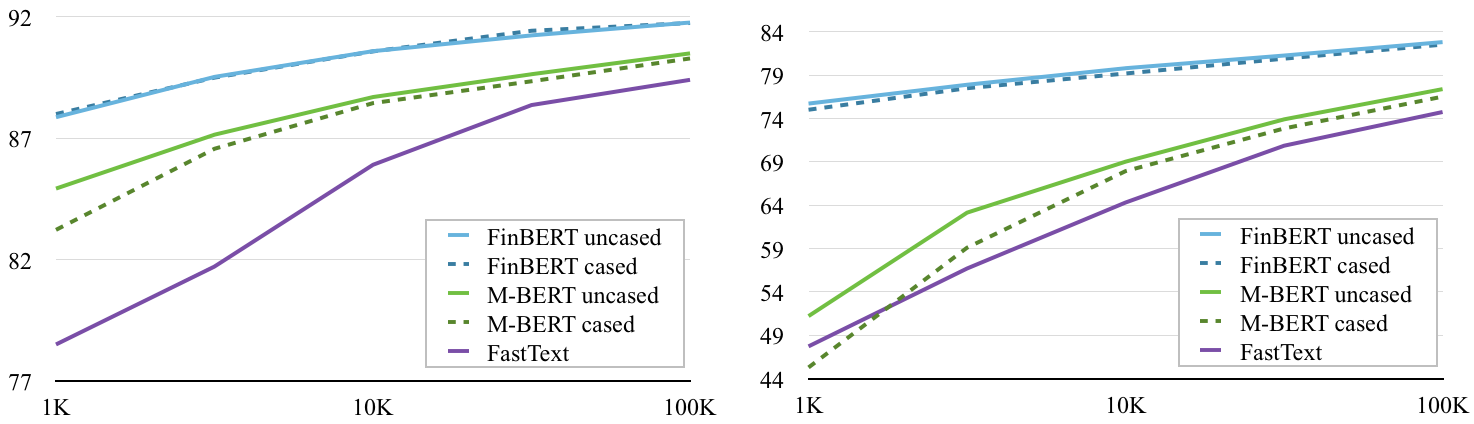

FinBERT outperforms multilingual BERT (M-BERT) on document classification over a range of training set sizes on the Yle news (left) and Ylilauta online discussion (right) corpora. (Baseline classification performance with [FastText](https://fasttext.cc/) included for reference.)

[[code](https://github.com/spyysalo/finbert-text-classification)][[Yle data](https://github.com/spyysalo/yle-corpus)] [[Ylilauta data](https://github.com/spyysalo/ylilauta-corpus)]

### Named Entity Recognition

Evaluation on FiNER corpus ([Ruokolainen et al 2019](https://arxiv.org/abs/1908.04212))

| Model | Accuracy |

|--------------------|----------|

| **FinBERT** | **92.40%** |

| Multilingual BERT | 90.29% |

| [FiNER-tagger](https://github.com/Traubert/FiNer-rules) (rule-based) | 86.82% |

(FiNER tagger results from [Ruokolainen et al. 2019](https://arxiv.org/pdf/1908.04212.pdf))

[[code](https://github.com/jouniluoma/keras-bert-ner)][[data](https://github.com/mpsilfve/finer-data)]

### Part of speech tagging

Evaluation on three Finnish corpora annotated with [Universal Dependencies](https://universaldependencies.org/) part-of-speech tags: the Turku Dependency Treebank (TDT), FinnTreeBank (FTB), and Parallel UD treebank (PUD)

| Model | TDT | FTB | PUD |

|-------------------|-------------|-------------|-------------|

| **FinBERT** | **98.23%** | **98.39%** | **98.08%** |

| Multilingual BERT | 96.97% | 95.87% | 97.58% |

[[code](https://github.com/spyysalo/bert-pos)][[data](http://hdl.handle.net/11234/1-2837)]

## Use with PyTorch

If you want to use the model with the huggingface/transformers library, follow the steps in [huggingface_transformers.md](https://github.com/TurkuNLP/FinBERT/blob/master/huggingface_transformers.md)

## Previous releases

### Release 0.2

**October 24, 2019** Beta version of the BERT base uncased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-uncased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-uncased.zip)

### Release 0.1

**September 30, 2019** We release a beta version of the BERT base cased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-cased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-cased.zip)

|

TurkuNLP/bert-base-finnish-uncased-v1 | 2021-05-18T22:46:38.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"masked-lm",

"fi",

"arxiv:1912.07076",

"arxiv:1908.04212",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"README.md",

"config.json",

"flax_model.msgpack",

"pytorch_model.bin",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| TurkuNLP | 793 | transformers | ---

language: fi

---

## Quickstart

**Release 1.0** (November 25, 2019)

Download the models here:

* Cased Finnish BERT Base: [bert-base-finnish-cased-v1.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-cased-v1.zip)

* Uncased Finnish BERT Base: [bert-base-finnish-uncased-v1.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-uncased-v1.zip)

We generally recommend the use of the cased model.

Paper presenting Finnish BERT: [arXiv:1912.07076](https://arxiv.org/abs/1912.07076)

## What's this?

A version of Google's [BERT](https://github.com/google-research/bert) deep transfer learning model for Finnish. The model can be fine-tuned to achieve state-of-the-art results for various Finnish natural language processing tasks.

FinBERT features a custom 50,000 wordpiece vocabulary that has much better coverage of Finnish words than e.g. the previously released [multilingual BERT](https://github.com/google-research/bert/blob/master/multilingual.md) models from Google:

| Vocabulary | Example |

|------------|---------|

| FinBERT | Suomessa vaihtuu kesän aikana sekä pääministeri että valtiovarain ##ministeri . |

| Multilingual BERT | Suomessa vai ##htuu kes ##än aikana sekä p ##ää ##minister ##i että valt ##io ##vara ##in ##minister ##i . |

FinBERT has been pre-trained for 1 million steps on over 3 billion tokens (24B characters) of Finnish text drawn from news, online discussion, and internet crawls. By contrast, Multilingual BERT was trained on Wikipedia texts, where the Finnish Wikipedia text is approximately 3% of the amount used to train FinBERT.

These features allow FinBERT to outperform not only Multilingual BERT but also all previously proposed models when fine-tuned for Finnish natural language processing tasks.

## Results

### Document classification

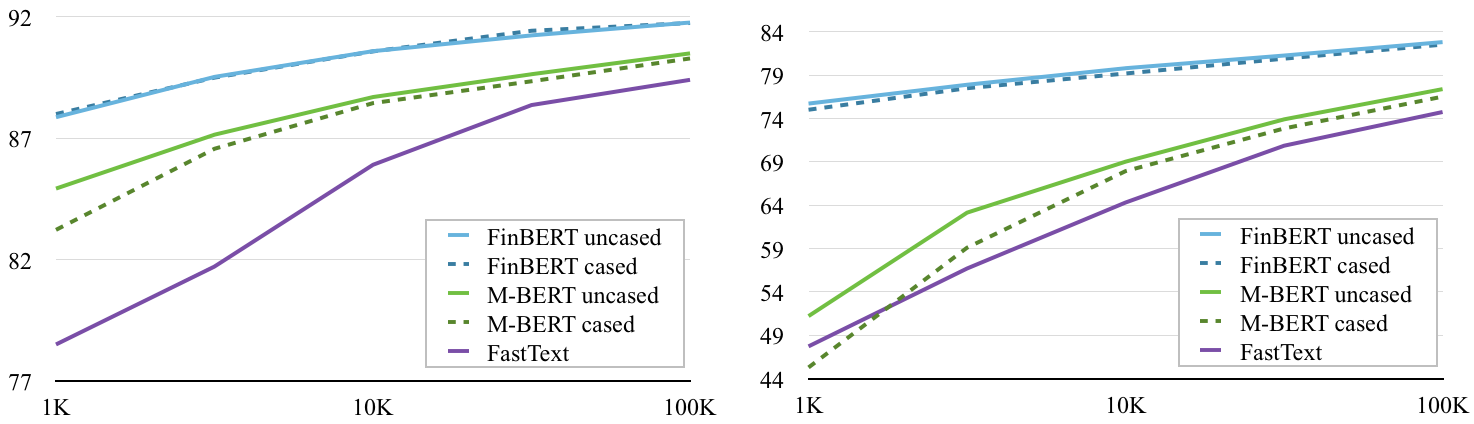

FinBERT outperforms multilingual BERT (M-BERT) on document classification over a range of training set sizes on the Yle news (left) and Ylilauta online discussion (right) corpora. (Baseline classification performance with [FastText](https://fasttext.cc/) included for reference.)

[[code](https://github.com/spyysalo/finbert-text-classification)][[Yle data](https://github.com/spyysalo/yle-corpus)] [[Ylilauta data](https://github.com/spyysalo/ylilauta-corpus)]

### Named Entity Recognition

Evaluation on FiNER corpus ([Ruokolainen et al 2019](https://arxiv.org/abs/1908.04212))

| Model | Accuracy |

|--------------------|----------|

| **FinBERT** | **92.40%** |

| Multilingual BERT | 90.29% |

| [FiNER-tagger](https://github.com/Traubert/FiNer-rules) (rule-based) | 86.82% |

(FiNER tagger results from [Ruokolainen et al. 2019](https://arxiv.org/pdf/1908.04212.pdf))

[[code](https://github.com/jouniluoma/keras-bert-ner)][[data](https://github.com/mpsilfve/finer-data)]

### Part of speech tagging

Evaluation on three Finnish corpora annotated with [Universal Dependencies](https://universaldependencies.org/) part-of-speech tags: the Turku Dependency Treebank (TDT), FinnTreeBank (FTB), and Parallel UD treebank (PUD)

| Model | TDT | FTB | PUD |

|-------------------|-------------|-------------|-------------|

| **FinBERT** | **98.23%** | **98.39%** | **98.08%** |

| Multilingual BERT | 96.97% | 95.87% | 97.58% |

[[code](https://github.com/spyysalo/bert-pos)][[data](http://hdl.handle.net/11234/1-2837)]

## Use with PyTorch

If you want to use the model with the huggingface/transformers library, follow the steps in [huggingface_transformers.md](https://github.com/TurkuNLP/FinBERT/blob/master/huggingface_transformers.md)

## Previous releases

### Release 0.2

**October 24, 2019** Beta version of the BERT base uncased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-uncased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-uncased.zip)

### Release 0.1

**September 30, 2019** We release a beta version of the BERT base cased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-cased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-cased.zip)

|

TurkuNLP/wikibert-base-af-cased | 2020-05-24T19:58:31.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-ar-cased | 2020-05-24T19:58:38.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-be-cased | 2020-05-24T19:58:44.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-bg-cased | 2020-05-24T19:58:50.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 8 | transformers | ||

TurkuNLP/wikibert-base-ca-cased | 2020-05-24T19:58:56.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-cs-cased | 2020-05-24T19:59:01.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 12 | transformers | ||

TurkuNLP/wikibert-base-da-cased | 2020-05-24T19:59:06.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 32 | transformers | ||

TurkuNLP/wikibert-base-de-cased | 2020-05-24T19:59:14.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-el-cased | 2020-05-24T19:59:19.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-en-cased | 2020-05-24T19:59:24.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-es-cased | 2020-05-24T19:59:29.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-et-cased | 2020-05-24T19:59:34.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-eu-cased | 2020-05-24T19:59:42.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-fa-cased | 2020-05-24T19:59:47.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-fi-cased | 2020-05-24T19:59:52.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-fr-cased | 2020-05-24T19:59:57.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-ga-cased | 2020-05-24T20:00:02.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 16 | transformers | ||

TurkuNLP/wikibert-base-gl-cased | 2020-05-24T20:00:08.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-he-cased | 2020-05-24T20:00:13.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 60 | transformers | ||

TurkuNLP/wikibert-base-hi-cased | 2020-05-24T20:00:18.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-hr-cased | 2020-05-24T20:00:23.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-hu-cased | 2020-05-24T20:00:28.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-hy-cased | 2020-05-24T20:00:33.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-id-cased | 2020-05-24T20:00:42.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 12 | transformers | ||

TurkuNLP/wikibert-base-it-cased | 2020-05-24T20:00:47.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 12 | transformers | ||

TurkuNLP/wikibert-base-ja-cased | 2020-05-24T20:00:52.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-ko-cased | 2020-11-09T13:08:15.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 12 | transformers | ||

TurkuNLP/wikibert-base-lt-cased | 2020-05-24T20:00:57.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-lv-cased | 2020-05-24T20:01:02.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-nl-cased | 2020-05-24T20:01:07.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-no-cased | 2020-05-24T20:01:12.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 35 | transformers | ||

TurkuNLP/wikibert-base-pl-cased | 2020-05-24T20:01:17.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-pt-cased | 2020-05-24T20:01:22.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 8 | transformers | ||

TurkuNLP/wikibert-base-ro-cased | 2020-05-24T20:01:27.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 11 | transformers | ||

TurkuNLP/wikibert-base-ru-cased | 2020-05-24T20:01:32.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 16 | transformers | ||

TurkuNLP/wikibert-base-sk-cased | 2020-05-24T20:01:37.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-sl-cased | 2020-05-24T20:01:43.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-sr-cased | 2020-05-24T20:01:48.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 13 | transformers | ||

TurkuNLP/wikibert-base-sv-cased | 2020-05-24T20:01:54.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 61 | transformers | ||

TurkuNLP/wikibert-base-ta-cased | 2020-05-24T20:02:00.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-tr-cased | 2020-05-24T20:02:06.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 9 | transformers | ||

TurkuNLP/wikibert-base-uk-cased | 2020-05-24T20:02:13.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 75 | transformers | ||

TurkuNLP/wikibert-base-ur-cased | 2020-05-24T20:02:19.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TurkuNLP/wikibert-base-vi-cased | 2020-05-24T20:02:25.000Z | [

"pytorch",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"vocab.txt"

]

| TurkuNLP | 10 | transformers | ||

TypicaAI/magbert-lm | 2020-10-01T23:18:10.000Z | [

"pytorch",

"camembert",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"config.json",

"log_history.json",

"pytorch_model.bin",

"sentencepiece.bpe.model",

"special_tokens_map.json",

"tokenizer_config.json",

"training_args.bin"

]

| TypicaAI | 21 | transformers | |

TypicaAI/magbert-ner | 2020-12-11T21:30:45.000Z | [

"pytorch",

"camembert",

"token-classification",

"fr",

"transformers"

]

| token-classification | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"sentencepiece.bpe.model",

"special_tokens_map.json",

"tokenizer_config.json"

]

| TypicaAI | 2,525 | transformers | ---

language: fr

widget:

- text: "Je m'appelle Hicham et je vis a Fès"

---

# MagBERT-NER: a state-of-the-art NER model for Moroccan French language (Maghreb)

## Introduction

[MagBERT-NER] is a state-of-the-art NER model for Moroccan French language (Maghreb). The MagBERT-NER model was fine-tuned for NER Task based the language model for French Camembert (based on the RoBERTa architecture).

For further information or requests, please visite our website at [typica.ai Website](https://typica.ai/) or send us an email at [email protected]

## How to use MagBERT-NER with HuggingFace

##### Load MagBERT-NER and its sub-word tokenizer :

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("TypicaAI/magbert-ner")

model = AutoModelForTokenClassification.from_pretrained("TypicaAI/magbert-ner")

##### Process text sample (from wikipedia about the current Prime Minister of Morocco) Using NER pipeline

from transformers import pipeline

nlp = pipeline('ner', model=model, tokenizer=tokenizer, grouped_entities=True)

nlp("Saad Dine El Otmani, né le 16 janvier 1956 à Inezgane, est un homme d'État marocain, chef du gouvernement du Maroc depuis le 5 avril 2017")

#[{'entity_group': 'I-PERSON',

# 'score': 0.8941445276141167,

# 'word': 'Saad Dine El Otmani'},

# {'entity_group': 'B-DATE',

# 'score': 0.5967703461647034,

# 'word': '16 janvier 1956'},

# {'entity_group': 'B-GPE', 'score': 0.7160899192094803, 'word': 'Inezgane'},

# {'entity_group': 'B-NORP', 'score': 0.7971733212471008, 'word': 'marocain'},

# {'entity_group': 'B-GPE', 'score': 0.8921478390693665, 'word': 'Maroc'},

# {'entity_group': 'B-DATE',

# 'score': 0.5760444005330404,

# 'word': '5 avril 2017'}]

```

## Authors

MagBert-NER Model was trained by Hicham Assoudi, Ph.D.

For any questions, comments you can contact me at [email protected]

## Citation

If you use our work, please cite:

Hicham Assoudi, Ph.D., MagBERT-NER: a state-of-the-art NER model for Moroccan French language (Maghreb), (2020)

|

UBC-NLP/ARBERT | 2021-05-18T22:48:25.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"ARBERT_pytorch_verison.tar.gz",

"ARBERT_tensorflow_version.tar.gz",

"README.md",

"config.json",

"flax_model.msgpack",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer_config.json",

"vocab.txt"

]

| UBC-NLP | 615 | transformers |

<img src="https://raw.githubusercontent.com/UBC-NLP/marbert/main/ARBERT_MARBERT.jpg" alt="drawing" width="30%" height="30%" align="right"/>

ARBERT is one of two models described in the paper ["ARBERT & MARBERT: Deep Bidirectional Transformers for Arabic"](https://mageed.arts.ubc.ca/files/2020/12/marbert_arxiv_2020.pdf). ARBERT is a large-scale pre-trained masked language model focused on Modern Standard Arabic (MSA). To train ARBERT, we use the same architecture as BERT-base: 12 attention layers, each has 12 attention heads and 768 hidden dimensions, a vocabulary of 100K WordPieces, making ∼163M parameters. We train ARBERT on a collection of Arabic datasets comprising 61GB of text (6.2B tokens). For more information, please visit our own GitHub [repo](https://github.com/UBC-NLP/marbert).

|

UBC-NLP/MARBERT | 2021-05-18T22:50:36.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"MARBERT_pytorch_verison.tar.gz",

"MARBERT_tensorflow_version.tar.gz",

"README.md",

"config.json",

"flax_model.msgpack",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer_config.json",

"vocab.txt"

]

| UBC-NLP | 15,402 | transformers | <img src="https://raw.githubusercontent.com/UBC-NLP/marbert/main/ARBERT_MARBERT.jpg" alt="drawing" width="30%" height="30%" align="right"/>

MARBERT is one of two models described in the paper ["ARBERT & MARBERT: Deep Bidirectional Transformers for Arabic"](https://mageed.arts.ubc.ca/files/2020/12/marbert_arxiv_2020.pdf). MARBERT is a large-scale pre-trained masked language model focused on both Dialectal Arabic (DA) and MSA. Arabic has multiple varieties. To train MARBERT, we randomly sample 1B Arabic tweets from a large in-house dataset of about 6B tweets. We only include tweets with at least 3 Arabic words, based on character string matching, regardless whether the tweet has non-Arabic string or not. That is, we do not remove non-Arabic so long as the tweet meets the 3 Arabic word criterion. The dataset makes up 128GB of text (15.6B tokens). We use the same network architecture as ARBERT (BERT-base), but without the next sentence prediction (NSP) objective since tweets are short. See our [repo](https://github.com/UBC-NLP/LMBERT) for modifying BERT code to remove NSP. For more information about MARBERT, please visit our own GitHub [repo](https://github.com/UBC-NLP/marbert).

|

UWB-AIR/Czert-A-base-uncased | 2021-06-09T08:13:59.000Z | [

"tf",

"albert",

"arxiv:2103.13031",

"transformers",

"cs"

]

| [

".gitattributes",

"README.md",

"config.json",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer_config.json",

"vocab.txt"

]

| UWB-AIR | 8 | transformers | ---

tags:

- cs

---

# CZERT

This repository keeps Czert-A model for the paper [Czert – Czech BERT-like Model for Language Representation

](https://arxiv.org/abs/2103.13031)

For more information, see the paper

## Available Models

You can download **MLM & NSP only** pretrained models

~~[CZERT-A-v1](https://air.kiv.zcu.cz/public/CZERT-A-czert-albert-base-uncased.zip)

[CZERT-B-v1](https://air.kiv.zcu.cz/public/CZERT-B-czert-bert-base-cased.zip)~~

After some additional experiments, we found out that the tokenizers config was exported wrongly. In Czert-B-v1, the tokenizer parameter "do_lower_case" was wrongly set to true. In Czert-A-v1 the parameter "strip_accents" was incorrectly set to true.

Both mistakes are repaired in v2.

[CZERT-A-v2](https://air.kiv.zcu.cz/public/CZERT-A-v2-czert-albert-base-uncased.zip)

[CZERT-B-v2](https://air.kiv.zcu.cz/public/CZERT-B-v2-czert-bert-base-cased.zip)

or choose from one of **Finetuned Models**

| | Models |

| - | - |

| Sentiment Classification<br> (Facebook or CSFD) | [CZERT-A-sentiment-FB](https://air.kiv.zcu.cz/public/CZERT-A_fb.zip) <br> [CZERT-B-sentiment-FB](https://air.kiv.zcu.cz/public/CZERT-B_fb.zip) <br> [CZERT-A-sentiment-CSFD](https://air.kiv.zcu.cz/public/CZERT-A_csfd.zip) <br> [CZERT-B-sentiment-CSFD](https://air.kiv.zcu.cz/public/CZERT-B_csfd.zip) | Semantic Text Similarity <br> (Czech News Agency) | [CZERT-A-sts-CNA](https://air.kiv.zcu.cz/public/CZERT-A-sts-CNA.zip) <br> [CZERT-B-sts-CNA](https://air.kiv.zcu.cz/public/CZERT-B-sts-CNA.zip)

| Named Entity Recognition | [CZERT-A-ner-CNEC](https://air.kiv.zcu.cz/public/CZERT-A-ner-CNEC-cased.zip) <br> [CZERT-B-ner-CNEC](https://air.kiv.zcu.cz/public/CZERT-B-ner-CNEC-cased.zip) <br>[PAV-ner-CNEC](https://air.kiv.zcu.cz/public/PAV-ner-CNEC-cased.zip) <br> [CZERT-A-ner-BSNLP](https://air.kiv.zcu.cz/public/CZERT-A-ner-BSNLP-cased.zip)<br>[CZERT-B-ner-BSNLP](https://air.kiv.zcu.cz/public/CZERT-B-ner-BSNLP-cased.zip) <br>[PAV-ner-BSNLP](https://air.kiv.zcu.cz/public/PAV-ner-BSNLP-cased.zip) |

| Morphological Tagging<br> | [CZERT-A-morphtag-126k](https://air.kiv.zcu.cz/public/CZERT-A-morphtag-126k-cased.zip)<br>[CZERT-B-morphtag-126k](https://air.kiv.zcu.cz/public/CZERT-B-morphtag-126k-cased.zip) |

| Semantic Role Labelling |[CZERT-A-srl](https://air.kiv.zcu.cz/public/CZERT-A-srl-cased.zip)<br> [CZERT-B-srl](https://air.kiv.zcu.cz/public/CZERT-B-srl-cased.zip) |

## How to Use CZERT?

### Sentence Level Tasks

We evaluate our model on two sentence level tasks:

* Sentiment Classification,

* Semantic Text Similarity.

<!-- tokenizer = BertTokenizerFast.from_pretrained(CZERT_MODEL_PATH, strip_accents=False)

\tmodel = TFAlbertForSequenceClassification.from_pretrained(CZERT_MODEL_PATH, num_labels=1)

or

self.tokenizer = BertTokenizerFast.from_pretrained(CZERT_MODEL_PATH, strip_accents=False)

self.model_encoder = AutoModelForSequenceClassification.from_pretrained(CZERT_MODEL_PATH, from_tf=True)

-->

\t

### Document Level Tasks

We evaluate our model on one document level task

* Multi-label Document Classification.

### Token Level Tasks

We evaluate our model on three token level tasks:

* Named Entity Recognition,

* Morphological Tagging,

* Semantic Role Labelling.

## Downstream Tasks Fine-tuning Results

### Sentiment Classification

| | mBERT | SlavicBERT | ALBERT-r | Czert-A | Czert-B |

|:----:|:------------------------:|:------------------------:|:------------------------:|:-----------------------:|:--------------------------------:|

| FB | 71.72 ± 0.91 | 73.87 ± 0.50 | 59.50 ± 0.47 | 72.47 ± 0.72 | **76.55** ± **0.14** |

| CSFD | 82.80 ± 0.14 | 82.51 ± 0.14 | 75.40 ± 0.18 | 79.58 ± 0.46 | **84.79** ± **0.26** |

Average F1 results for the Sentiment Classification task. For more information, see [the paper](https://arxiv.org/abs/2103.13031).

### Semantic Text Similarity

| | **mBERT** | **Pavlov** | **Albert-random** | **Czert-A** | **Czert-B** |

|:-------------|:--------------:|:--------------:|:-----------------:|:--------------:|:----------------------:|

| STA-CNA | 83.335 ± 0.063 | 83.593 ± 0.050 | 43.184 ± 0.125 | 82.942 ± 0.106 | **84.345** ± **0.028** |

| STS-SVOB-img | 79.367 ± 0.486 | 79.900 ± 0.810 | 15.739 ± 2.992 | 79.444 ± 0.338 | **83.744** ± **0.395** |

| STS-SVOB-hl | 78.833 ± 0.296 | 76.996 ± 0.305 | 33.949 ± 1.807 | 75.089 ± 0.806 | **79.827 ± 0.469** |

Comparison of Pearson correlation achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on semantic text similarity. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Multi-label Document Classification

| | mBERT | SlavicBERT | ALBERT-r | Czert-A | Czert-B |

|:-----:|:------------:|:------------:|:------------:|:------------:|:-------------------:|

| AUROC | 97.62 ± 0.08 | 97.80 ± 0.06 | 94.35 ± 0.13 | 97.49 ± 0.07 | **98.00** ± **0.04** |

| F1 | 83.04 ± 0.16 | 84.08 ± 0.14 | 72.44 ± 0.22 | 82.27 ± 0.17 | **85.06** ± **0.11** |

Comparison of F1 and AUROC score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on multi-label document classification. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Morphological Tagging

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B |

|:-----------------------|:---------------|:---------------|:---------------|:---------------|:---------------|

| Universal Dependencies | 99.176 ± 0.006 | 99.211 ± 0.008 | 96.590 ± 0.096 | 98.713 ± 0.008 | **99.300 ± 0.009** |

Comparison of F1 score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on morphological tagging task. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Semantic Role Labelling

<div id="tab:SRL">

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B | dep-based | gold-dep |

|:------:|:----------:|:----------:|:-------------:|:----------:|:----------:|:---------:|:--------:|

| span | 78.547 ± 0.110 | 79.333 ± 0.080 | 51.365 ± 0.423 | 72.254 ± 0.172 | **81.861 ± 0.102** | \\- | \\- |

| syntax | 90.226 ± 0.224 | 90.492 ± 0.040 | 80.747 ± 0.131 | 80.319 ± 0.054 | **91.462 ± 0.062** | 85.19 | 89.52 |

SRL results – dep columns are evaluate with labelled F1 from CoNLL 2009 evaluation script, other columns are evaluated with span F1 score same as it was used for NER evaluation. For more information see [the paper](https://arxiv.org/abs/2103.13031).

</div>

### Named Entity Recognition

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B |

|:-----------|:---------------|:---------------|:---------------|:---------------|:---------------|

| CNEC | **86.225 ± 0.208** | **86.565 ± 0.198** | 34.635 ± 0.343 | 72.945 ± 0.227 | 86.274 ± 0.116 |

| BSNLP 2019 | 84.006 ± 1.248 | **86.699 ± 0.370** | 19.773 ± 0.938 | 48.859 ± 0.605 | **86.729 ± 0.344** |

Comparison of f1 score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on named entity recognition task. For more information see [the paper](https://arxiv.org/abs/2103.13031).

## Licence

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. http://creativecommons.org/licenses/by-nc-sa/4.0/

## How should I cite CZERT?

For now, please cite [the Arxiv paper](https://arxiv.org/abs/2103.13031):

```

@article{sido2021czert,

title={Czert -- Czech BERT-like Model for Language Representation},

author={Jakub Sido and Ondřej Pražák and Pavel Přibáň and Jan Pašek and Michal Seják and Miloslav Konopík},

year={2021},

eprint={2103.13031},

archivePrefix={arXiv},

primaryClass={cs.CL},

journal={arXiv preprint arXiv:2103.13031},

}

```

|

|

UWB-AIR/Czert-B-base-cased | 2021-06-09T08:13:11.000Z | [

"tf",

"bert",

"arxiv:2103.13031",

"transformers",

"cs"

]

| [

".gitattributes",

"README.md",

"config.json",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer_config.json",

"vocab.txt"

]

| UWB-AIR | 554 | transformers | ---

tags:

- cs

---

# CZERT

This repository keeps trained Czert-B model for the paper [Czert – Czech BERT-like Model for Language Representation

](https://arxiv.org/abs/2103.13031)

For more information, see the paper

## Available Models

You can download **MLM & NSP only** pretrained models

~~[CZERT-A-v1](https://air.kiv.zcu.cz/public/CZERT-A-czert-albert-base-uncased.zip)

[CZERT-B-v1](https://air.kiv.zcu.cz/public/CZERT-B-czert-bert-base-cased.zip)~~

After some additional experiments, we found out that the tokenizers config was exported wrongly. In Czert-B-v1, the tokenizer parameter "do_lower_case" was wrongly set to true. In Czert-A-v1 the parameter "strip_accents" was incorrectly set to true.

Both mistakes are repaired in v2.

[CZERT-A-v2](https://air.kiv.zcu.cz/public/CZERT-A-v2-czert-albert-base-uncased.zip)

[CZERT-B-v2](https://air.kiv.zcu.cz/public/CZERT-B-v2-czert-bert-base-cased.zip)

or choose from one of **Finetuned Models**

| | Models |

| - | - |

| Sentiment Classification<br> (Facebook or CSFD) | [CZERT-A-sentiment-FB](https://air.kiv.zcu.cz/public/CZERT-A_fb.zip) <br> [CZERT-B-sentiment-FB](https://air.kiv.zcu.cz/public/CZERT-B_fb.zip) <br> [CZERT-A-sentiment-CSFD](https://air.kiv.zcu.cz/public/CZERT-A_csfd.zip) <br> [CZERT-B-sentiment-CSFD](https://air.kiv.zcu.cz/public/CZERT-B_csfd.zip) | Semantic Text Similarity <br> (Czech News Agency) | [CZERT-A-sts-CNA](https://air.kiv.zcu.cz/public/CZERT-A-sts-CNA.zip) <br> [CZERT-B-sts-CNA](https://air.kiv.zcu.cz/public/CZERT-B-sts-CNA.zip)

| Named Entity Recognition | [CZERT-A-ner-CNEC](https://air.kiv.zcu.cz/public/CZERT-A-ner-CNEC-cased.zip) <br> [CZERT-B-ner-CNEC](https://air.kiv.zcu.cz/public/CZERT-B-ner-CNEC-cased.zip) <br>[PAV-ner-CNEC](https://air.kiv.zcu.cz/public/PAV-ner-CNEC-cased.zip) <br> [CZERT-A-ner-BSNLP](https://air.kiv.zcu.cz/public/CZERT-A-ner-BSNLP-cased.zip)<br>[CZERT-B-ner-BSNLP](https://air.kiv.zcu.cz/public/CZERT-B-ner-BSNLP-cased.zip) <br>[PAV-ner-BSNLP](https://air.kiv.zcu.cz/public/PAV-ner-BSNLP-cased.zip) |

| Morphological Tagging<br> | [CZERT-A-morphtag-126k](https://air.kiv.zcu.cz/public/CZERT-A-morphtag-126k-cased.zip)<br>[CZERT-B-morphtag-126k](https://air.kiv.zcu.cz/public/CZERT-B-morphtag-126k-cased.zip) |

| Semantic Role Labelling |[CZERT-A-srl](https://air.kiv.zcu.cz/public/CZERT-A-srl-cased.zip)<br> [CZERT-B-srl](https://air.kiv.zcu.cz/public/CZERT-B-srl-cased.zip) |

## How to Use CZERT?

### Sentence Level Tasks

We evaluate our model on two sentence level tasks:

* Sentiment Classification,

* Semantic Text Similarity.

<!-- tokenizer = BertTokenizerFast.from_pretrained(CZERT_MODEL_PATH, strip_accents=False)

\\tmodel = TFAlbertForSequenceClassification.from_pretrained(CZERT_MODEL_PATH, num_labels=1)

or

self.tokenizer = BertTokenizerFast.from_pretrained(CZERT_MODEL_PATH, strip_accents=False)

self.model_encoder = AutoModelForSequenceClassification.from_pretrained(CZERT_MODEL_PATH, from_tf=True)

-->

\\t

### Document Level Tasks

We evaluate our model on one document level task

* Multi-label Document Classification.

### Token Level Tasks

We evaluate our model on three token level tasks:

* Named Entity Recognition,

* Morphological Tagging,

* Semantic Role Labelling.

## Downstream Tasks Fine-tuning Results

### Sentiment Classification

| | mBERT | SlavicBERT | ALBERT-r | Czert-A | Czert-B |

|:----:|:------------------------:|:------------------------:|:------------------------:|:-----------------------:|:--------------------------------:|

| FB | 71.72 ± 0.91 | 73.87 ± 0.50 | 59.50 ± 0.47 | 72.47 ± 0.72 | **76.55** ± **0.14** |

| CSFD | 82.80 ± 0.14 | 82.51 ± 0.14 | 75.40 ± 0.18 | 79.58 ± 0.46 | **84.79** ± **0.26** |

Average F1 results for the Sentiment Classification task. For more information, see [the paper](https://arxiv.org/abs/2103.13031).

### Semantic Text Similarity

| | **mBERT** | **Pavlov** | **Albert-random** | **Czert-A** | **Czert-B** |

|:-------------|:--------------:|:--------------:|:-----------------:|:--------------:|:----------------------:|

| STA-CNA | 83.335 ± 0.063 | 83.593 ± 0.050 | 43.184 ± 0.125 | 82.942 ± 0.106 | **84.345** ± **0.028** |

| STS-SVOB-img | 79.367 ± 0.486 | 79.900 ± 0.810 | 15.739 ± 2.992 | 79.444 ± 0.338 | **83.744** ± **0.395** |

| STS-SVOB-hl | 78.833 ± 0.296 | 76.996 ± 0.305 | 33.949 ± 1.807 | 75.089 ± 0.806 | **79.827 ± 0.469** |

Comparison of Pearson correlation achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on semantic text similarity. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Multi-label Document Classification

| | mBERT | SlavicBERT | ALBERT-r | Czert-A | Czert-B |

|:-----:|:------------:|:------------:|:------------:|:------------:|:-------------------:|

| AUROC | 97.62 ± 0.08 | 97.80 ± 0.06 | 94.35 ± 0.13 | 97.49 ± 0.07 | **98.00** ± **0.04** |

| F1 | 83.04 ± 0.16 | 84.08 ± 0.14 | 72.44 ± 0.22 | 82.27 ± 0.17 | **85.06** ± **0.11** |

Comparison of F1 and AUROC score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on multi-label document classification. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Morphological Tagging

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B |

|:-----------------------|:---------------|:---------------|:---------------|:---------------|:---------------|

| Universal Dependencies | 99.176 ± 0.006 | 99.211 ± 0.008 | 96.590 ± 0.096 | 98.713 ± 0.008 | **99.300 ± 0.009** |

Comparison of F1 score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on morphological tagging task. For more information see [the paper](https://arxiv.org/abs/2103.13031).

### Semantic Role Labelling

<div id="tab:SRL">

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B | dep-based | gold-dep |

|:------:|:----------:|:----------:|:-------------:|:----------:|:----------:|:---------:|:--------:|

| span | 78.547 ± 0.110 | 79.333 ± 0.080 | 51.365 ± 0.423 | 72.254 ± 0.172 | **81.861 ± 0.102** | \\\\- | \\\\- |

| syntax | 90.226 ± 0.224 | 90.492 ± 0.040 | 80.747 ± 0.131 | 80.319 ± 0.054 | **91.462 ± 0.062** | 85.19 | 89.52 |

SRL results – dep columns are evaluate with labelled F1 from CoNLL 2009 evaluation script, other columns are evaluated with span F1 score same as it was used for NER evaluation. For more information see [the paper](https://arxiv.org/abs/2103.13031).

</div>

### Named Entity Recognition

| | mBERT | Pavlov | Albert-random | Czert-A | Czert-B |

|:-----------|:---------------|:---------------|:---------------|:---------------|:---------------|

| CNEC | **86.225 ± 0.208** | **86.565 ± 0.198** | 34.635 ± 0.343 | 72.945 ± 0.227 | 86.274 ± 0.116 |

| BSNLP 2019 | 84.006 ± 1.248 | **86.699 ± 0.370** | 19.773 ± 0.938 | 48.859 ± 0.605 | **86.729 ± 0.344** |

Comparison of f1 score achieved using pre-trained CZERT-A, CZERT-B, mBERT, Pavlov and randomly initialised Albert on named entity recognition task. For more information see [the paper](https://arxiv.org/abs/2103.13031).

## Licence

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. http://creativecommons.org/licenses/by-nc-sa/4.0/

## How should I cite CZERT?

For now, please cite [the Arxiv paper](https://arxiv.org/abs/2103.13031):

```

@article{sido2021czert,

title={Czert -- Czech BERT-like Model for Language Representation},

author={Jakub Sido and Ondřej Pražák and Pavel Přibáň and Jan Pašek and Michal Seják and Miloslav Konopík},

year={2021},

eprint={2103.13031},

archivePrefix={arXiv},

primaryClass={cs.CL},

journal={arXiv preprint arXiv:2103.13031},

}

```

|

|

UgobOby/model_name | 2021-03-09T09:40:50.000Z | []

| [

".gitattributes"

]

| UgobOby | 0 | |||

Vaibhavbrkn/mbart-english-hindi | 2021-06-12T03:41:52.000Z | [

"pytorch",

"mbart",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"config.json",

"pytorch_model.bin"

]

| Vaibhavbrkn | 11 | transformers | |

Vaibhavbrkn/question-gen | 2021-06-07T05:18:15.000Z | [

"pytorch",

"t5",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"config.json",

"pytorch_model.bin"

]

| Vaibhavbrkn | 100 | transformers | |

Vaibhavbrkn/t5-summarization | 2021-06-10T17:45:06.000Z | [

"pytorch",

"t5",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"config.json",

"pytorch_model.bin"

]

| Vaibhavbrkn | 7 | transformers | |

Vamsi/T5_Paraphrase_Paws | 2020-12-11T21:30:48.000Z | [

"pytorch",

"tf",

"t5",

"seq2seq",

"en",

"transformers",

"paraphrase-generation",

"text-generation",

"Conditional Generation",

"text2text-generation"

]

| text-generation | [

".gitattributes",

"README.md",

"checkpointepoch=0.ckpt",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"spiece.model",

"tf_model.h5",

"tokenizer_config.json"

]

| Vamsi | 21,130 | transformers | ---

language: "en"

tags:

- paraphrase-generation

- text-generation

- Conditional Generation

inference: false

---

# Paraphrase-Generation

## Model description

T5 Model for generating paraphrases of english sentences. Trained on the [Google PAWS](https://github.com/google-research-datasets/paws) dataset.

## How to use

PyTorch and TF models available

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("Vamsi/T5_Paraphrase_Paws")

model = AutoModelForSeq2SeqLM.from_pretrained("Vamsi/T5_Paraphrase_Paws")

sentence = "This is something which i cannot understand at all"

text = "paraphrase: " + sentence + " </s>"

encoding = tokenizer.encode_plus(text,pad_to_max_length=True, return_tensors="pt")

input_ids, attention_masks = encoding["input_ids"].to("cuda"), encoding["attention_mask"].to("cuda")

outputs = model.generate(

input_ids=input_ids, attention_mask=attention_masks,

max_length=256,

do_sample=True,

top_k=120,

top_p=0.95,

early_stopping=True,

num_return_sequences=5

)

for output in outputs:

line = tokenizer.decode(output, skip_special_tokens=True,clean_up_tokenization_spaces=True)

print(line)

```

For more reference on training your own T5 model or using this model, do check out [Paraphrase Generation](https://github.com/Vamsi995/Paraphrase-Generator).

|

Vasudev/discharge_albert | 2021-05-17T10:37:47.000Z | [

"pytorch",

"albert",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"spiece.model",

"tokenizer.json",

"tokenizer_config.json",

"train_results.json",

"trainer_state.json",

"training_args.bin"

]

| Vasudev | 11 | transformers | |

Venkatakrishnan/Huggingface_STS_BERT | 2021-04-17T03:22:46.000Z | []

| [

".gitattributes"

]

| Venkatakrishnan | 0 | |||

Vicent/fasttext_model | 2021-04-24T02:45:29.000Z | []

| [

".gitattributes"

]

| Vicent | 0 | |||

Vicent/model_text | 2021-04-25T04:09:28.000Z | []

| [

".gitattributes"

]

| Vicent | 0 | |||

VictorSanh/bart-base-finetuned-xsum | 2020-08-17T15:02:57.000Z | [

"pytorch",

"bart",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"config.json",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"test_results.txt",

"tokenizer_config.json",

"vocab.json"

]

| VictorSanh | 111 | transformers | |

VictorSanh/roberta-base-finetuned-yelp-polarity | 2021-05-20T12:30:20.000Z | [

"pytorch",

"jax",

"roberta",

"text-classification",

"en",

"dataset:yelp_polarity",

"transformers"

]

| text-classification | [

".gitattributes",

"README.md",

"config.json",

"eval_results_yelp.txt",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"training_args.bin",

"vocab.json"

]

| VictorSanh | 786 | transformers | ---

language: en

datasets:

- yelp_polarity

---

# RoBERTa-base-finetuned-yelp-polarity

This is a [RoBERTa-base](https://huggingface.co/roberta-base) checkpoint fine-tuned on binary sentiment classifcation from [Yelp polarity](https://huggingface.co/nlp/viewer/?dataset=yelp_polarity).

It gets **98.08%** accuracy on the test set.

## Hyper-parameters

We used the following hyper-parameters to train the model on one GPU:

```python

num_train_epochs = 2.0

learning_rate = 1e-05

weight_decay = 0.0

adam_epsilon = 1e-08

max_grad_norm = 1.0

per_device_train_batch_size = 32

gradient_accumulation_steps = 1

warmup_steps = 3500

seed = 42

```

|

Vikram/test_transformers | 2021-03-18T11:22:30.000Z | []

| [

".gitattributes"

]

| Vikram | 0 | |||

ViktorAlm/electra-base-norwegian-uncased-discriminator | 2020-12-11T21:30:55.000Z | [

"pytorch",

"tf",

"electra",

"pretraining",

"no",

"transformers"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer_config.json",

"vocab.txt"

]

| ViktorAlm | 18,337 | transformers | ---

language: no

thumbnail: https://i.imgur.com/QqSEC5I.png

---

# Norwegian Electra

Trained on Oscar + wikipedia + opensubtitles + some other data I had with the awesome power of TPUs(V3-8)

Use with caution. I have no downstream tasks in Norwegian to test on so I have no idea of its performance yet.

# Model

## Electra: Pre-training Text Encoders as Discriminators Rather Than Generators

Kevin Clark and Minh-Thang Luong and Quoc V. Le and Christopher D. Manning

- https://openreview.net/pdf?id=r1xMH1BtvB

- https://github.com/google-research/electra

# Acknowledgments

### TensorFlow Research Cloud

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC). Thanks for providing access to the TFRC ❤️

- https://www.tensorflow.org/tfrc

#### OSCAR corpus

- https://oscar-corpus.com/

#### OPUS

- http://opus.nlpl.eu/

- http://www.opensubtitles.org/

|

|

Violeta/ArmBERTa | 2021-05-07T14:31:35.000Z | []

| [

".gitattributes"

]

| Violeta | 0 | |||

Violeta/ArmBERTa_Model | 2021-05-20T12:31:26.000Z | [

"pytorch",

"jax",

"roberta",

"transformers"

]

| [

".gitattributes",

"config.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer.json",

"tokenizer_config.json",

"vocab.json"

]

| Violeta | 6 | transformers | ||

Vishva/UNIBOT | 2021-03-07T04:44:28.000Z | []

| [

".gitattributes"

]

| Vishva | 0 | |||

Vishva/UNIFAQ-T5 | 2021-03-07T04:42:24.000Z | []

| [

".gitattributes",

"README.md"

]

| Vishva | 0 | |||

Vishva/unibot-faq | 2021-03-07T06:27:36.000Z | []

| [

".gitattributes"

]

| Vishva | 0 | |||

VoVanPhuc/sup-SimCSE-VietNamese-phobert-base | 2021-05-28T05:42:03.000Z | [

"pytorch",

"roberta",

"arxiv:2104.08821",

"transformers"

]

| [

".gitattributes",

"README.md",

"added_tokens.json",

"bpe.codes",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"vocab.txt"

]

| VoVanPhuc | 5,300 | transformers |

#### Table of contents

1. [Introduction](#introduction)

2. [Pretrain model](#models)

3. [Using SimeCSE_Vietnamese with `sentences-transformers`](#sentences-transformers)

- [Installation](#install1)

- [Example usage](#usage1)

4. [Using SimeCSE_Vietnamese with `transformers`](#transformers)

- [Installation](#install2)

- [Example usage](#usage2)

# <a name="introduction"></a> SimeCSE_Vietnamese: Simple Contrastive Learning of Sentence Embeddings with Vietnamese

Pre-trained SimeCSE_Vietnamese models are the state-of-the-art of Sentence Embeddings with Vietnamese :

- SimeCSE_Vietnamese pre-training approach is based on [SimCSE](https://arxiv.org/abs/2104.08821) which optimizes the SimeCSE_Vietnamese pre-training procedure for more robust performance.

- SimeCSE_Vietnamese encode input sentences using a pre-trained language model such as [PhoBert](https://www.aclweb.org/anthology/2020.findings-emnlp.92/)

- SimeCSE_Vietnamese works with both unlabeled and labeled data.

## Pre-trained models <a name="models"></a>

Model | #params | Arch.

---|---|---

[`VoVanPhuc/sup-SimCSE-VietNamese-phobert-base`](https://huggingface.co/VoVanPhuc/sup-SimCSE-VietNamese-phobert-base) | 135M | base

[`VoVanPhuc/unsup-SimCSE-VietNamese-phobert-base`](https://huggingface.co/VoVanPhuc/unsup-SimCSE-VietNamese-phobert-base) | 135M | base

## <a name="sentences-transformers"></a> Using SimeCSE_Vietnamese with `sentences-transformers`

### Installation <a name="install1"></a>

- Install `sentence-transformers`:

- `pip install -U sentence-transformers`

- Install `pyvi` to word segment:

- `pip install pyvi`

### Example usage <a name="usage1"></a>

```python

from sentence_transformers import SentenceTransformer

from pyvi.ViTokenizer import tokenize

model = SentenceTransformer('VoVanPhuc/sup-SimCSE-VietNamese-phobert-base')

sentences = ['Kẻ đánh bom đinh tồi tệ nhất nước Anh.',

'Nghệ sĩ làm thiện nguyện - minh bạch là việc cấp thiết.',

'Bắc Giang tăng khả năng điều trị và xét nghiệm.',

'HLV futsal Việt Nam tiết lộ lý do hạ Lebanon.',

'việc quan trọng khi kêu gọi quyên góp từ thiện là phải minh bạch, giải ngân kịp thời.',

'20% bệnh nhân Covid-19 có thể nhanh chóng trở nặng.',

'Thái Lan thua giao hữu trước vòng loại World Cup.',

'Cựu tuyển thủ Nguyễn Bảo Quân: May mắn ủng hộ futsal Việt Nam',

'Chủ ki-ốt bị đâm chết trong chợ đầu mối lớn nhất Thanh Hoá.',

'Bắn chết người trong cuộc rượt đuổi trên sông.'

]

sentences = [tokenize(sentence) for sentence in sentences]

embeddings = model.encode(sentences)

```

## <a name="sentences-transformers"></a> Using SimeCSE_Vietnamese with `transformers`

### Installation <a name="install2"></a>

- Install `transformers`:

- `pip install -U transformers`

- Install `pyvi` to word segment:

- `pip install pyvi`

### Example usage <a name="usage2"></a>

```python

import torch

from transformers import AutoModel, AutoTokenizer

from pyvi.ViTokenizer import tokenize

PhobertTokenizer = AutoTokenizer.from_pretrained("VoVanPhuc/sup-SimCSE-VietNamese-phobert-base")

model = AutoModel.from_pretrained("VoVanPhuc/sup-SimCSE-VietNamese-phobert-base")

sentences = ['Kẻ đánh bom đinh tồi tệ nhất nước Anh.',

'Nghệ sĩ làm thiện nguyện - minh bạch là việc cấp thiết.',

'Bắc Giang tăng khả năng điều trị và xét nghiệm.',

'HLV futsal Việt Nam tiết lộ lý do hạ Lebanon.',

'việc quan trọng khi kêu gọi quyên góp từ thiện là phải minh bạch, giải ngân kịp thời.',

'20% bệnh nhân Covid-19 có thể nhanh chóng trở nặng.',

'Thái Lan thua giao hữu trước vòng loại World Cup.',

'Cựu tuyển thủ Nguyễn Bảo Quân: May mắn ủng hộ futsal Việt Nam',

'Chủ ki-ốt bị đâm chết trong chợ đầu mối lớn nhất Thanh Hoá.',

'Bắn chết người trong cuộc rượt đuổi trên sông.'

]

sentences = [tokenize(sentence) for sentence in sentences]

inputs = PhobertTokenizer(sentences, padding=True, truncation=True, return_tensors="pt")

with torch.no_grad():

embeddings = model(**inputs, output_hidden_states=True, return_dict=True).pooler_output

```

## Quick Start

[Open In Colab](https://colab.research.google.com/drive/12__EXJoQYHe9nhi4aXLTf9idtXT8yr7H?usp=sharing)

## Citation

@article{gao2021simcse,

title={{SimCSE}: Simple Contrastive Learning of Sentence Embeddings},

author={Gao, Tianyu and Yao, Xingcheng and Chen, Danqi},

journal={arXiv preprint arXiv:2104.08821},

year={2021}

}

@inproceedings{phobert,

title = {{PhoBERT: Pre-trained language models for Vietnamese}},

author = {Dat Quoc Nguyen and Anh Tuan Nguyen},

booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2020},

year = {2020},

pages = {1037--1042}

}

|

|

VoVanPhuc/unsup-SimCSE-VietNamese-phobert-base | 2021-05-28T05:45:41.000Z | [

"pytorch",

"roberta",

"arxiv:2104.08821",

"transformers"

]

| [

".gitattributes",

"README.md",

"added_tokens.json",

"bpe.codes",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"vocab.txt"

]

| VoVanPhuc | 40 | transformers |

#### Table of contents

1. [Introduction](#introduction)

2. [Pretrain model](#models)

3. [Using SimeCSE_Vietnamese with `sentences-transformers`](#sentences-transformers)

- [Installation](#install1)

- [Example usage](#usage1)

4. [Using SimeCSE_Vietnamese with `transformers`](#transformers)

- [Installation](#install2)

- [Example usage](#usage2)

# <a name="introduction"></a> SimeCSE_Vietnamese: Simple Contrastive Learning of Sentence Embeddings with Vietnamese

Pre-trained SimeCSE_Vietnamese models are the state-of-the-art of Sentence Embeddings with Vietnamese :