# A foundation model utilizing chest CT volumes and radiology reports for supervised-level zero-shot detection of abnormalities

Welcome to the official page of CT-RATE, a pioneering 3D medical imaging dataset focused on chest CT volumes that uniquely pairs images with textual data. Here you will find the CT-RATE dataset, which comprises chest CT volumes paired with the corresponding radiology text reports, multi-abnormality labels, and metadata, all freely accessible to researchers. CT-RATE is released as part of the paper "A foundation model utilizing chest CT volumes and radiology reports for supervised-level zero-shot detection of abnormalities".

## CT-RATE: A novel dataset of chest CT volumes with corresponding radiology text reports

A major challenge for computational research in 3D medical imaging is the lack of comprehensive datasets. To address this issue, we present CT-RATE, the first 3D medical imaging dataset that pairs images with textual reports. CT-RATE consists of 25,692 non-contrast chest CT volumes from 21,304 unique patients, expanded to 50,188 volumes through various reconstructions, along with the corresponding radiology text reports, multi-abnormality labels, and metadata.

We divided the cohort into two groups: 20,000 patients were allocated to the training set and 1,304 to the validation set. Folders and files are named using the pattern `split_patientID_scanID_reconstructionID`. For instance, `valid_53_a_1` denotes reconstruction 1 of scan "a" from patient 53 in the validation set. This naming convention applies to all files.
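Because every name encodes split, patient, scan, and reconstruction, it can be parsed directly. The following is a minimal, hypothetical sketch (not an official CT-RATE utility) that assumes the split prefix is `train` or `valid`, patient and reconstruction IDs are integers, scan IDs are lowercase letters, and any extension such as `.nii.gz` can be discarded. The helper names `parse_volume_name` and `group_by_patient` are illustrative only.

```python
import os
import re
from collections import defaultdict
from dataclasses import dataclass

# Assumed pattern: split is "train" or "valid", patient ID is numeric, scan ID is
# lowercase letters, reconstruction ID is numeric. Not an official CT-RATE utility.
_NAME_RE = re.compile(r"^(train|valid)_(\d+)_([a-z]+)_(\d+)$")


@dataclass(frozen=True)
class VolumeID:
    split: str
    patient_id: int
    scan_id: str
    reconstruction_id: int


def parse_volume_name(path: str) -> VolumeID:
    """Parse a name such as 'valid_53_a_1' or '.../valid_53_a_1.nii.gz'."""
    stem = os.path.basename(path).split(".", 1)[0]  # drop directories and extensions
    match = _NAME_RE.match(stem)
    if match is None:
        raise ValueError(f"unrecognized volume name: {path!r}")
    split, patient, scan, recon = match.groups()
    return VolumeID(split, int(patient), scan, int(recon))


def group_by_patient(paths):
    """Group volumes by (split, patient) so that all scans and reconstructions
    of one patient stay together, e.g. when building data loaders."""
    groups = defaultdict(list)
    for p in paths:
        v = parse_volume_name(p)
        groups[(v.split, v.patient_id)].append(v)
    return dict(groups)
```

For example, `parse_volume_name("valid_53_a_1")` returns `VolumeID(split='valid', patient_id=53, scan_id='a', reconstruction_id=1)`. Grouping by patient rather than by file keeps multiple scans and reconstructions of the same patient in a single unit, mirroring the patient-level division into training and validation sets.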

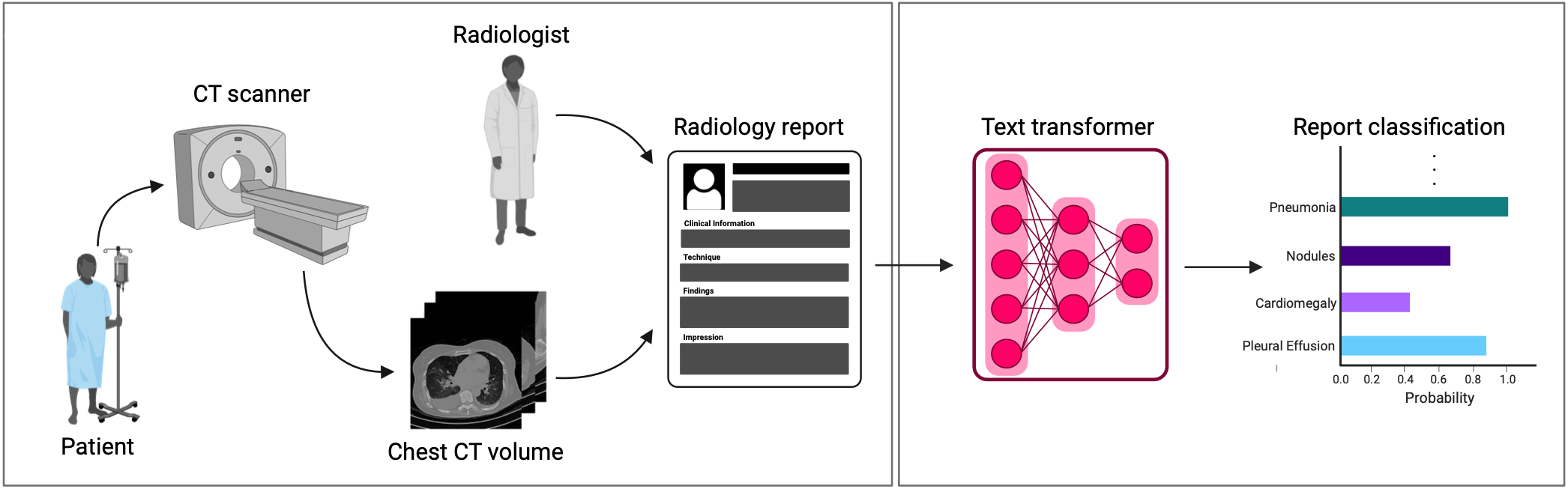

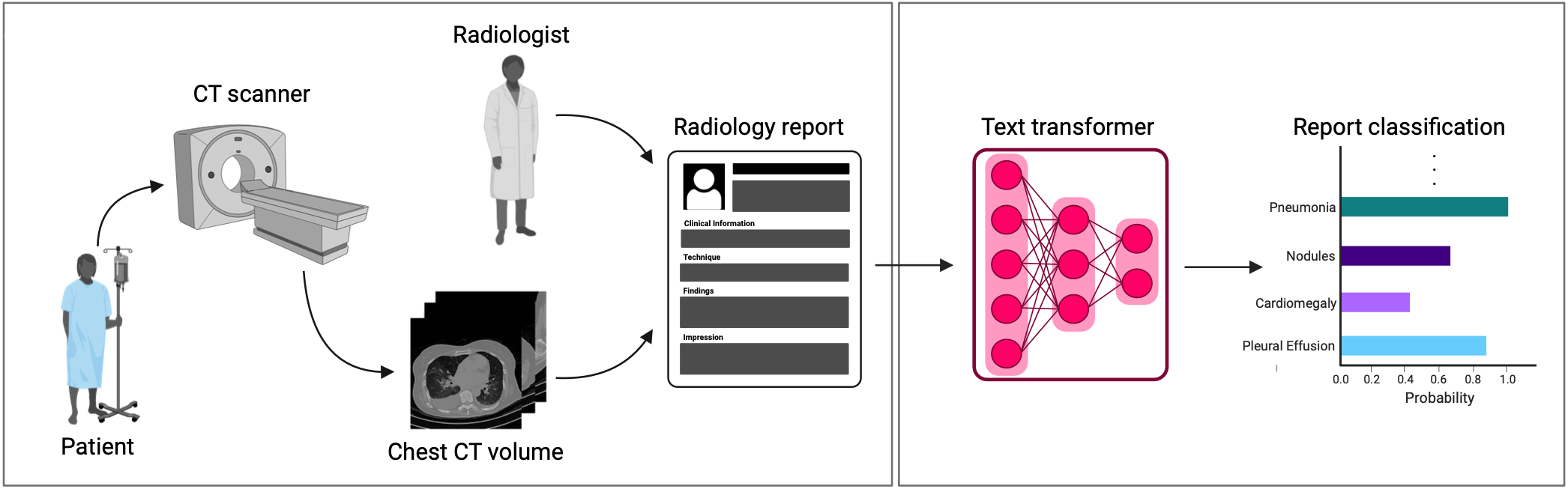

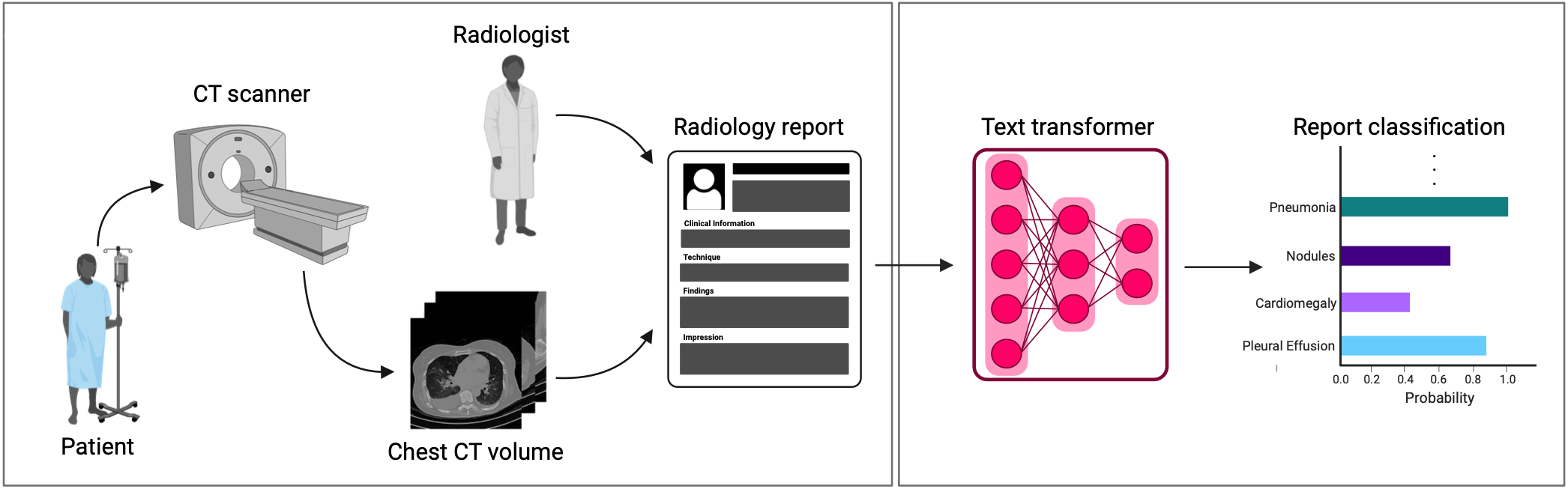

## CT-CLIP: CT-focused contrastive language-image pre-training framework

Leveraging CT-RATE, we developed CT-CLIP, a CT-focused contrastive language-image pre-training framework. As a versatile, self-supervised model, CT-CLIP is designed for broad application and does not require task-specific training. Remarkably, CT-CLIP outperforms state-of-the-art, fully supervised methods in multi-abnormality detection across all key metrics, removing the need for manual annotation. We also demonstrate its utility in case retrieval using either image or text queries, which supports knowledge dissemination.
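The exact CT-CLIP encoders, prompts, and hyperparameters are defined in the GitHub repository. The pure-Python sketch below illustrates only the generic CLIP-style ideas the paragraph refers to, under stated assumptions: a symmetric contrastive (InfoNCE) loss over paired volume/report embeddings, zero-shot abnormality scoring by comparing a volume against a "present" and an "absent" text prompt, and retrieval by cosine similarity. The embeddings are plain lists standing in for encoder outputs, and the temperature of 0.07 and the present/absent prompt pairing are assumptions for illustration, not values taken from CT-CLIP.

```python
import math


def l2_normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def clip_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss over a batch of paired (volume, report) embeddings.
    Pair i is the positive for row/column i; every other item in the batch is a negative.
    The temperature value is an assumption for illustration."""
    imgs = [l2_normalize(v) for v in image_emb]
    txts = [l2_normalize(t) for t in text_emb]
    n = len(imgs)
    logits = [[dot(imgs[i], txts[j]) / temperature for j in range(n)] for i in range(n)]
    # Image -> text: each row should assign the highest probability to its own report.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image: each column should assign the highest probability to its own volume.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


def zero_shot_probability(image_vec, present_vec, absent_vec, temperature=0.07):
    """Assumed scoring scheme: two-way softmax over the similarities between a volume
    and a 'present' prompt vs. an 'absent' prompt for one abnormality."""
    img = l2_normalize(image_vec)
    s_present = dot(img, l2_normalize(present_vec)) / temperature
    s_absent = dot(img, l2_normalize(absent_vec)) / temperature
    return 1.0 / (1.0 + math.exp(s_absent - s_present))


def retrieve(query_vec, gallery, k=5):
    """Return indices of the k gallery embeddings most similar (cosine) to the query.
    The query may be an image or a text embedding in the shared space."""
    q = l2_normalize(query_vec)
    sims = [dot(q, l2_normalize(v)) for v in gallery]
    return sorted(range(len(gallery)), key=lambda i: sims[i], reverse=True)[:k]
```

Because image and text embeddings live in one shared space after contrastive training, the same cosine similarity supports zero-shot detection (compare a volume against prompt pairs, one pair per abnormality) and case retrieval (compare a query against a gallery), with no task-specific training head.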

Our complete codebase is openly available on [our official GitHub repository](https://github.com/ibrahimethemhamamci/CT-CLIP).

## Citing Us

If you use CT-RATE or CT-CLIP, please cite our paper.

## License

We are committed to fostering innovation and collaboration in the research community. To this end, all elements of the CT-RATE dataset are released under a [Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) license](https://creativecommons.org/licenses/by-nc-sa/4.0/). This license allows the dataset to be freely used, shared, and adapted for non-commercial research purposes, provided that the original work is properly cited and any derivative works are distributed under the same terms.