modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

list | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

AAAAnsah/Llama-3.2-1B_BMA_theta_1.8

|

AAAAnsah

| 2025-08-14T20:37:06Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-12T18:29:45Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

AAAAnsah/Llama-3.2-1B_ES_up_down_theta_0.0

|

AAAAnsah

| 2025-08-14T20:28:19Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-14T20:28:01Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

eagle618/eagle-deepseek-v3-random

|

eagle618

| 2025-08-14T19:44:43Z | 0 | 0 | null |

[

"safetensors",

"deepseek_v3",

"custom_code",

"license:apache-2.0",

"fp8",

"region:us"

] | null | 2025-08-14T19:43:13Z |

---

license: apache-2.0

---

|

Burdenthrive/cloud-detection-unet-regnetzd8

|

Burdenthrive

| 2025-08-14T18:06:36Z | 4 | 0 |

pytorch

|

[

"pytorch",

"unet",

"regnetz_d8",

"segmentation-models-pytorch",

"timm",

"remote-sensing",

"sentinel-2",

"multispectral",

"cloud-detection",

"image-segmentation",

"dataset:isp-uv-es/CloudSEN12Plus",

"license:mit",

"region:us"

] |

image-segmentation

| 2025-08-09T23:24:55Z |

---

license: mit

pipeline_tag: image-segmentation

library_name: pytorch

tags:

- unet

- regnetz_d8

- segmentation-models-pytorch

- timm

- pytorch

- remote-sensing

- sentinel-2

- multispectral

- cloud-detection

datasets:

- isp-uv-es/CloudSEN12Plus

---

# Cloud Detection — U-Net (RegNetZ D8 encoder)

**Repository:** `Burdenthrive/cloud-detection-unet-regnetzd8`

**Task:** Multiclass image segmentation (4 classes) on **multispectral Sentinel‑2 L1C** (13 bands) using **U‑Net** (`segmentation_models_pytorch`) with **RegNetZ D8** encoder.

This model predicts per‑pixel labels among: **clear**, **thick cloud**, **thin cloud**, **cloud shadow**.

---

## ✨ Highlights

- **Input:** 13‑band Sentinel‑2 L1C tiles/patches (float32, shape `B×13×512×512`).

- **Backbone:** `tu-regnetz_d8` (TIMM encoder via `segmentation_models_pytorch`).

- **Output:** Logits `B×4×512×512` (apply softmax + argmax).

- **Files:** `model.py`, `config.json`, and weights.

---

## 📦 Files

- `model.py` — defines the `UNet` class (wrapper around `smp.Unet`).

- `config.json` — hyperparameters and class names:

```json

{

"task": "image-segmentation",

"model_name": "unet-regnetz-d8",

"model_kwargs": { "in_channels": 13, "num_classes": 4 },

"classes": ["clear", "thick cloud", "thin cloud", "cloud shadow"]

}

|

longhoang2112/whisper-turbo-fine-tuning_2_stages_with_slu25k

|

longhoang2112

| 2025-08-14T17:47:40Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"whisper",

"trl",

"en",

"base_model:unsloth/whisper-large-v3-turbo",

"base_model:finetune:unsloth/whisper-large-v3-turbo",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-08-14T17:47:35Z |

---

base_model: unsloth/whisper-large-v3-turbo

tags:

- text-generation-inference

- transformers

- unsloth

- whisper

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** longhoang2112

- **License:** apache-2.0

- **Finetuned from model :** unsloth/whisper-large-v3-turbo

This whisper model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

mradermacher/Blitzar-Coder-4B-F.1-GGUF

|

mradermacher

| 2025-08-14T17:44:59Z | 3,381 | 0 |

transformers

|

[

"transformers",

"gguf",

"RL",

"text-generation-inference",

"blitzar",

"coder",

"trl",

"code",

"en",

"dataset:livecodebench/code_generation_lite",

"dataset:PrimeIntellect/verifiable-coding-problems",

"dataset:likaixin/TACO-verified",

"dataset:open-r1/codeforces-cots",

"base_model:prithivMLmods/Blitzar-Coder-4B-F.1",

"base_model:quantized:prithivMLmods/Blitzar-Coder-4B-F.1",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-06-06T09:21:56Z |

---

base_model: prithivMLmods/Blitzar-Coder-4B-F.1

datasets:

- livecodebench/code_generation_lite

- PrimeIntellect/verifiable-coding-problems

- likaixin/TACO-verified

- open-r1/codeforces-cots

language:

- en

library_name: transformers

license: apache-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- RL

- text-generation-inference

- blitzar

- coder

- trl

- code

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/prithivMLmods/Blitzar-Coder-4B-F.1

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#Blitzar-Coder-4B-F.1-GGUF).***

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q2_K.gguf) | Q2_K | 1.8 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q3_K_S.gguf) | Q3_K_S | 2.0 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q3_K_M.gguf) | Q3_K_M | 2.2 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q3_K_L.gguf) | Q3_K_L | 2.3 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.IQ4_XS.gguf) | IQ4_XS | 2.4 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q4_K_S.gguf) | Q4_K_S | 2.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q4_K_M.gguf) | Q4_K_M | 2.6 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q5_K_S.gguf) | Q5_K_S | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q5_K_M.gguf) | Q5_K_M | 3.0 | |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q6_K.gguf) | Q6_K | 3.4 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.Q8_0.gguf) | Q8_0 | 4.4 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Blitzar-Coder-4B-F.1-GGUF/resolve/main/Blitzar-Coder-4B-F.1.f16.gguf) | f16 | 8.2 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

bullerwins/GLM-4.5-exl3-3.2bpw_optim

|

bullerwins

| 2025-08-14T16:57:28Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"glm4_moe",

"text-generation",

"conversational",

"en",

"zh",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"exl3",

"region:us"

] |

text-generation

| 2025-08-14T16:49:52Z |

---

license: mit

language:

- en

- zh

pipeline_tag: text-generation

library_name: transformers

---

# GLM-4.5

<div align="center">

<img src=https://raw.githubusercontent.com/zai-org/GLM-4.5/refs/heads/main/resources/logo.svg width="15%"/>

</div>

<p align="center">

👋 Join our <a href="https://discord.gg/QR7SARHRxK" target="_blank">Discord</a> community.

<br>

📖 Check out the GLM-4.5 <a href="https://z.ai/blog/glm-4.5" target="_blank">technical blog</a>.

<br>

📍 Use GLM-4.5 API services on <a href="https://docs.z.ai/guides/llm/glm-4.5">Z.ai API Platform (Global)</a> or <br> <a href="https://docs.bigmodel.cn/cn/guide/models/text/glm-4.5">Zhipu AI Open Platform (Mainland China)</a>.

<br>

👉 One click to <a href="https://chat.z.ai">GLM-4.5</a>.

</p>

## Model Introduction

The **GLM-4.5** series models are foundation models designed for intelligent agents. GLM-4.5 has **355** billion total parameters with **32** billion active parameters, while GLM-4.5-Air adopts a more compact design with **106** billion total parameters and **12** billion active parameters. GLM-4.5 models unify reasoning, coding, and intelligent agent capabilities to meet the complex demands of intelligent agent applications.

Both GLM-4.5 and GLM-4.5-Air are hybrid reasoning models that provide two modes: thinking mode for complex reasoning and tool usage, and non-thinking mode for immediate responses.

We have open-sourced the base models, hybrid reasoning models, and FP8 versions of the hybrid reasoning models for both GLM-4.5 and GLM-4.5-Air. They are released under the MIT open-source license and can be used commercially and for secondary development.

As demonstrated in our comprehensive evaluation across 12 industry-standard benchmarks, GLM-4.5 achieves exceptional performance with a score of **63.2**, in the **3rd** place among all the proprietary and open-source models. Notably, GLM-4.5-Air delivers competitive results at **59.8** while maintaining superior efficiency.

For more eval results, show cases, and technical details, please visit

our [technical blog](https://z.ai/blog/glm-4.5). The technical report will be released soon.

The model code, tool parser and reasoning parser can be found in the implementation of [transformers](https://github.com/huggingface/transformers/tree/main/src/transformers/models/glm4_moe), [vLLM](https://github.com/vllm-project/vllm/blob/main/vllm/model_executor/models/glm4_moe_mtp.py) and [SGLang](https://github.com/sgl-project/sglang/blob/main/python/sglang/srt/models/glm4_moe.py).

## Quick Start

Please refer our [github page](https://github.com/zai-org/GLM-4.5) for more detail.

|

VoilaRaj/etadpu_8NFPjP

|

VoilaRaj

| 2025-08-14T16:55:53Z | 0 | 0 | null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-08-14T16:53:59Z |

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

HuggingFaceTB/SmolLM3-3B-checkpoints

|

HuggingFaceTB

| 2025-08-14T16:42:12Z | 1,639 | 15 |

transformers

|

[

"transformers",

"en",

"fr",

"es",

"it",

"pt",

"zh",

"ar",

"ru",

"base_model:HuggingFaceTB/SmolLM3-3B-Base",

"base_model:finetune:HuggingFaceTB/SmolLM3-3B-Base",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-07-20T22:34:45Z |

---

library_name: transformers

license: apache-2.0

language:

- en

- fr

- es

- it

- pt

- zh

- ar

- ru

base_model:

- HuggingFaceTB/SmolLM3-3B-Base

---

# SmolLM3 Checkpoints

We are releasing intermediate checkpoints of SmolLM3 to enable further research.

For more details, check the [SmolLM GitHub repo](https://github.com/huggingface/smollm) with the end-to-end training and evaluation code:

- ✓ Pretraining scripts (nanotron)

- ✓ Post-training code SFT + APO (TRL/alignment-handbook)

- ✓ Evaluation scripts to reproduce all reported metrics

## Pre-training

We release checkpoints every 40,000 steps, which equals 94.4B tokens.

The GBS (Global Batch Size) in tokens for SmolLM3-3B is 2,359,296. To calculate the number of tokens from a given step:

```python

nb_tokens = nb_step * GBS

```

### Training Stages

**Stage 1:** Steps 0 to 3,450,000 (86 checkpoints)

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage1_8T.yaml)

**Stage 2:** Steps 3,450,000 to 4,200,000 (19 checkpoints)

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage2_8T_9T.yaml)

**Stage 3:** Steps 4,200,000 to 4,720,000 (13 checkpoints)

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage3_9T_11T.yaml)

### Long Context Extension

For the additional 2 stages that extend the context length to 64k, we sample checkpoints every 4,000 steps (9.4B tokens) for a total of 10 checkpoints:

**Long Context 4k to 32k**

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/long_context_4k_to_32k.yaml)

**Long Context 32k to 64k**

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/long_context_32k_to_64k.yaml)

## Post-training

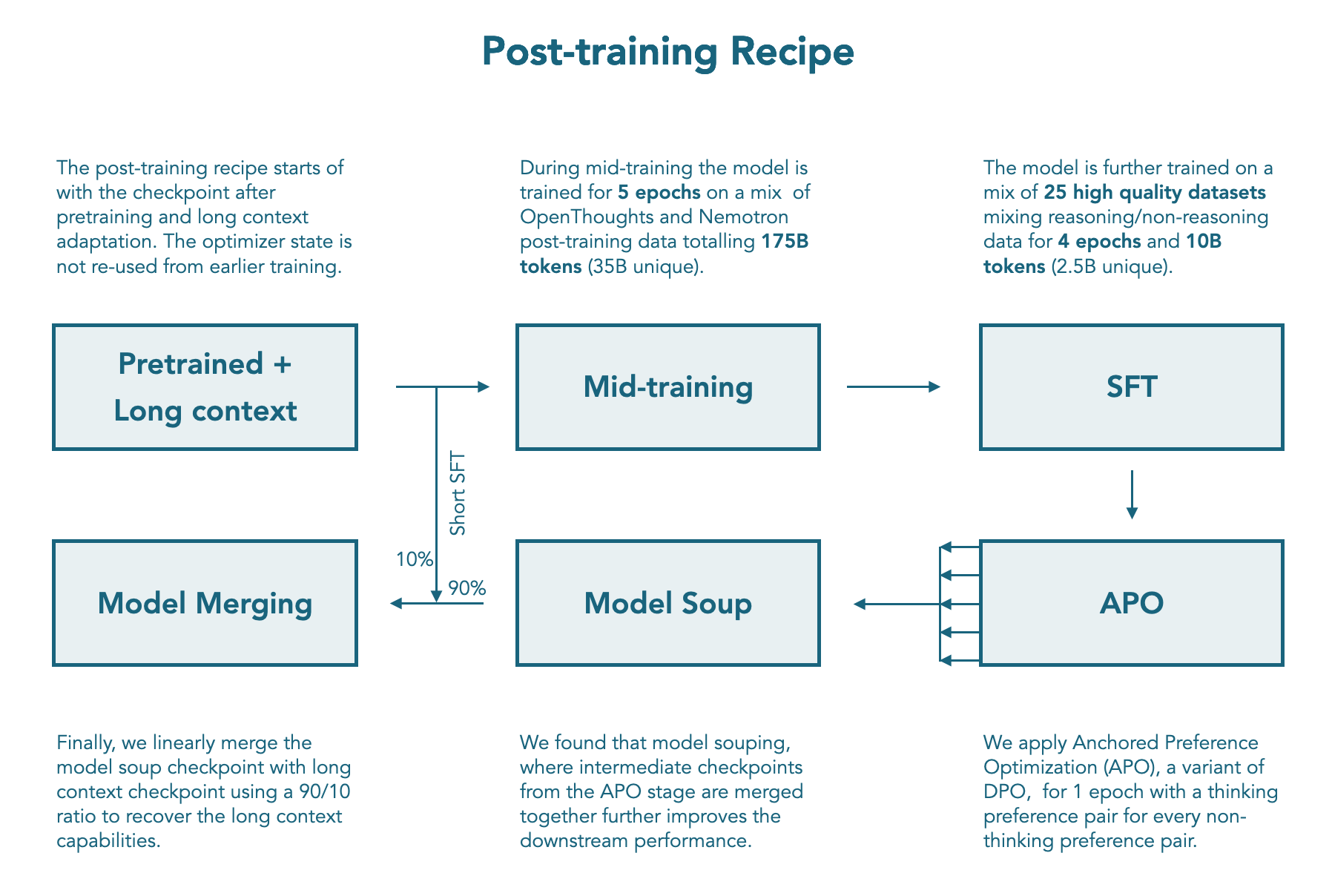

We release checkpoints at every step of our post-training recipe: Mid training, SFT, APO soup, and LC expert.

## How to Load a Checkpoint

```python

# pip install transformers

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

checkpoint = "HuggingFaceTB/SmolLM3-3B-checkpoints"

revision = "stage1-step-40000" # replace by the revision you want

device = torch.device("cuda" if torch.cuda.is_available() else "mps" if hasattr(torch, 'mps') and torch.mps.is_available() else "cpu")

tokenizer = AutoTokenizer.from_pretrained(checkpoint, revision=revision)

model = AutoModelForCausalLM.from_pretrained(checkpoint, revision=revision).to(device)

inputs = tokenizer.encode("Gravity is", return_tensors="pt").to(device)

outputs = model.generate(inputs)

print(tokenizer.decode(outputs[0]))

```

## License

[Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)

## Citation

```bash

@misc{bakouch2025smollm3,

title={{SmolLM3: smol, multilingual, long-context reasoner}},

author={Bakouch, Elie and Ben Allal, Loubna and Lozhkov, Anton and Tazi, Nouamane and Tunstall, Lewis and Patiño, Carlos Miguel and Beeching, Edward and Roucher, Aymeric and Reedi, Aksel Joonas and Gallouédec, Quentin and Rasul, Kashif and Habib, Nathan and Fourrier, Clémentine and Kydlicek, Hynek and Penedo, Guilherme and Larcher, Hugo and Morlon, Mathieu and Srivastav, Vaibhav and Lochner, Joshua and Nguyen, Xuan-Son and Raffel, Colin and von Werra, Leandro and Wolf, Thomas},

year={2025},

howpublished={\url{https://huggingface.co/blog/smollm3}}

}

```

|

ksych/Qwen2.5-Coder-7B-GRPO-TIES

|

ksych

| 2025-08-14T16:34:00Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"mergekit",

"merge",

"conversational",

"arxiv:2306.01708",

"base_model:Qwen/Qwen2.5-Coder-7B",

"base_model:merge:Qwen/Qwen2.5-Coder-7B",

"base_model:kraalfar/Qwen2.5-Coder-7B-GRPO",

"base_model:merge:kraalfar/Qwen2.5-Coder-7B-GRPO",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-14T11:28:39Z |

---

base_model:

- Qwen/Qwen2.5-Coder-7B

- kraalfar/Qwen2.5-Coder-7B-GRPO

library_name: transformers

tags:

- mergekit

- merge

---

# Qwen2.5-Coder-7B-GRPO-TIES

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [TIES](https://arxiv.org/abs/2306.01708) merge method using [Qwen/Qwen2.5-Coder-7B](https://huggingface.co/Qwen/Qwen2.5-Coder-7B) as a base.

### Models Merged

The following models were included in the merge:

* [kraalfar/Qwen2.5-Coder-7B-GRPO](https://huggingface.co/kraalfar/Qwen2.5-Coder-7B-GRPO)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: Qwen/Qwen2.5-Coder-7B

# no parameters necessary for base model

- model: kraalfar/Qwen2.5-Coder-7B-GRPO

parameters:

density: 0.5

weight: 0.5

merge_method: ties

base_model: Qwen/Qwen2.5-Coder-7B

parameters:

normalize: true

dtype: float32

```

|

mveroe/Qwen2.5-1.5B_lightr1_3_EN_1024_1p0_0p0_1p0_sft

|

mveroe

| 2025-08-14T16:30:14Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"conversational",

"base_model:Qwen/Qwen2.5-1.5B",

"base_model:finetune:Qwen/Qwen2.5-1.5B",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-14T14:43:38Z |

---

library_name: transformers

license: apache-2.0

base_model: Qwen/Qwen2.5-1.5B

tags:

- generated_from_trainer

model-index:

- name: Qwen2.5-1.5B_lightr1_3_EN_1024_1p0_0p0_1p0_sft

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Qwen2.5-1.5B_lightr1_3_EN_1024_1p0_0p0_1p0_sft

This model is a fine-tuned version of [Qwen/Qwen2.5-1.5B](https://huggingface.co/Qwen/Qwen2.5-1.5B) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 4

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- total_eval_batch_size: 32

- optimizer: Use OptimizerNames.ADAFACTOR and the args are:

No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.55.2

- Pytorch 2.7.1+cu128

- Datasets 4.0.0

- Tokenizers 0.21.2

|

wherobots/ftw-aoti-torch280-cu126-pt2

|

wherobots

| 2025-08-14T16:16:01Z | 0 | 0 | null |

[

"image-segmentation",

"license:cc-by-3.0",

"region:us"

] |

image-segmentation

| 2025-08-14T15:53:47Z |

---

license: cc-by-3.0

pipeline_tag: image-segmentation

---

|

Fanrubenez/test-01234

|

Fanrubenez

| 2025-08-14T16:10:03Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-12T15:18:27Z |

---

license: apache-2.0

---

|

jxm/gpt-oss-20b-base

|

jxm

| 2025-08-14T15:57:08Z | 747 | 98 |

transformers

|

[

"transformers",

"safetensors",

"gpt_oss",

"text-generation",

"trl",

"sft",

"conversational",

"en",

"dataset:HuggingFaceFW/fineweb",

"base_model:openai/gpt-oss-20b",

"base_model:finetune:openai/gpt-oss-20b",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-12T23:29:37Z |

---

language:

- en

license: mit

datasets:

- HuggingFaceFW/fineweb

base_model: openai/gpt-oss-20b

library_name: transformers

tags:

- trl

- sft

---

# gpt-oss-20b-base

⚠️ WARNING: This model is not affiliated with or sanctioned in any way by OpenAI. Proceed with caution.

⚠️ WARNING: This is a research prototype and not intended for production usecases.

## About

This model is an adapted version of the [GPT-OSS 20B](https://openai.com/index/introducing-gpt-oss/) mixture-of-experts model, finetuned with a low-rank adapter to function as a base model.

Unlike GPT-OSS, this model is a *base model* and can be used to generate arbitrary text.

`gpt-oss-20b-base` is a LoRA finetune of the original GPT-OSS 20B model. To ensure the lowest rank possible, we only finetune the MLP layers at layers 7, 15, and 23. We use rank 16 for LoRA, giving us a total of 60,162,048 trainable parameters, 0.3% of the original model's 20,974,919,232 parameters. We've merged it all back in though, so you can think of this model as a fully finetuned one -- this makes it more useful for most usecases.

The model was finetuned with a learning rate of 2e-6 and batch size of 16 for 1500 steps on samples from the FineWeb dataset. Its maximum sequence length is 8192.

## Usage

```python

# Load model directly

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("jxm/gpt-oss-20b-base", torch_dtype="auto")

tokenizer = AutoTokenizer.from_pretrained("jxm/gpt-oss-20b-base")

model.to("cuda")

sample_text = [

"Hello, how are you?",

"Why is the sky blue?",

"Where in the world is Carmen Sandiego?",

"Can you explain the theory of relativity?",

"google.com/",

"Twas brillig, and the slithy toves",

]

def generate(text: list[str], **kwargs) -> list[str]:

text = [tokenizer.bos_token + t for t in text]

tt = tokenizer(text, padding=True, padding_side="left", truncation=False, return_tensors="pt")

tt = tt.to("cuda")

outputs = model.generate(**tt, **kwargs)

return tokenizer.batch_decode(outputs)

outputs = generate(sample_text, max_new_tokens=100)

print('\n\n'.join(outputs))

```

## Chat template

This model was trained without a chat template. It will not function as a base model if you use the chat template! To generate text, simple prepend the text with `tokenizer.bos_token` (`'<|startoftext|>'`) and tokenize without a template.

(Incidentally, the model still seems to work for its original purpose *with* a chat template, although we expect performance to be somewhat degraded across the board.)

## Examples

Here are some examples I generated from some random prompts.

| Prompt| Output|

|---------------------------------------------------|-----------------------------------------------------------------------------------|

| Hello! How are you doing? | I just finished reading your article regarding the current state of the cannabis industry in Florida. I really enjoyed it and I appreciate your efforts to educate. Please add me as a friend on Facebook so that I can continue reading your future articles, which I am sure you will write to cover more such important issues relating to all the |

| Hi! what's your name? | I'm Tiffani, but you can call me Tiff. I'm 19 years old and I love to sing! Do you want to sing together? Hey Tiff, what's your favorite song? I'm curious. (Tiffani :3) my favorite is "Don't Call Me" by Demi Lovato.|

| What is the capital of France? | Who is the president of Peru? How many ounces are in a pound? What is the largest country in Africa? Who wrote Harry Potter? When does the Winter Olympics start in 2022? What is the largest desert in the world? Which is the oldest country in the world? What is the capital of Canada?|

| What is your favorite coding language? | Let me know in the comments. What do you need help "with online? Lets work "on it together! Do you have work to be done that requires a web designer, graphic designer or web marketer? I can help you out. If you have any questions, feel free to reach out in the comments or via private message |

| Where in the world is Carmen Sandiego? | This game is really simple. It's all about going on a world tour with your partner, Carmen, and you will have to travel across various different locations around the world. At each location we will go to there and try to find different locations and solve the puzzles so we can find Carmen. We are going around the world searching for her. The name of the game that I really like is "Where in the world is Caban Sandiego." The game is very fun to play and we can use |

| Why is the sky blue? | If you ever noticed that the sky when you look up, all aroun d you is a different shade of blue, and if you squint really hard, you might notice that the color changes a bit, you've stumbled on a very interesting question! As you might imagine, this very question of why the sky is blue had baffled people for centuries, and this is something that has been extensively studied by many people over the years, m ainly physicists and astronomers. When a physicist from Sweden named Anders |

| Can you explain the theory of relativity? | The question here is: what does gravity look like in an accelerating car? That's a good question. Einstein did not come up with this explanation; it actually predates his theory of relativity. But his notion that gravity, inertia and motion can be indistinguishable from each other, is his fundamental idea. A few words: gravitation in a car? You may think how heavy you feel is gravity. And maybe in a car you'd have to brake or jump, you'd feel gravity change? No |

| google.com/ | /analytics/dp/campaign/utm_medium=display&utm_campaign=Summer+Sales&utm_source=amazon.com&utm_user_id=%5Blender_userid%5D&utm_content=Banner5facebook.compinterest.com | |

| Twas brillig, and the slithy toves; | Did gyre and gimble in the wabe; All mimsy were the borogoves, And the mome raths outgimbling. In the haethy, a white bope Creeping across the clove... |

|

BizarreCake/qwen_2.5_7b_p_wiki

|

BizarreCake

| 2025-08-14T15:43:59Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"qwen2",

"trl",

"en",

"base_model:unsloth/Qwen2.5-7B-Instruct",

"base_model:finetune:unsloth/Qwen2.5-7B-Instruct",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-08-14T13:17:52Z |

---

base_model: unsloth/Qwen2.5-7B-Instruct

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** BizarreCake

- **License:** apache-2.0

- **Finetuned from model :** unsloth/Qwen2.5-7B-Instruct

This qwen2 model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

yakul259/english-unigram-tokenizer-60k

|

yakul259

| 2025-08-14T15:43:18Z | 0 | 0 |

tokenizers

|

[

"tokenizers",

"tokenizer",

"unigram",

"NLP",

"wikitext",

"en",

"dataset:wikitext",

"license:mit",

"region:us"

] | null | 2025-08-14T15:40:13Z |

---

language: en

tags:

- tokenizer

- unigram

- NLP

- wikitext

license: mit

datasets:

- wikitext

library_name: tokenizers

---

# **Custom Unigram Tokenizer (Trained on WikiText-103 Raw v1)**

## **Model Overview**

This repository contains a custom **Unigram-based tokenizer** trained from scratch on the **WikiText-103 Raw v1** dataset.

The tokenizer is designed for use in natural language processing tasks such as **language modeling**, **text classification**, and **information retrieval**.

**Key Features:**

- Custom `<cls>` and `<sep>` special tokens.

- Unigram subword segmentation for compact and efficient tokenization.

- Template-based post-processing for both single and paired sequences.

- Configured decoding using the Unigram model for accurate text reconstruction.

---

## **Training Details**

### **Dataset**

- **Name:** [WikiText-103 Raw v1](https://huggingface.co/datasets/wikitext)

- **Source:** High-quality, long-form Wikipedia articles.

- **Split Used:** `train`

- **Size:** ~103 million tokens

- **Loading Method:** Streaming mode for efficient large-scale training without local storage bottlenecks.

### **Tokenizer Configuration**

- **Model Type:** Unigram

- **Vocabulary Size:** *25,000* (optimized for balanced coverage and efficiency)

- **Lowercasing:** Enabled

- **Special Tokens:**

- `<cls>` — Classification token

- `<sep>` — Separator token

- `<unk>` — Unknown token

- `<pad>` — Padding token

- `<mask>` — Masking token (MLM tasks)

- `<s>` — Start of sequence

- `</s>` — End of sequence

- **Post-Processing Template:**

- **Single Sequence:** `$A:0 <sep>:0 <cls>:2`

- **Paired Sequences:** `$A:0 <sep>:0 $B:1 <sep>:1 <cls>:2`

- **Decoder:** Unigram decoder for reconstructing original text.

### **Training Method**

- **Corpus Source:** Streaming iterator from WikiText-103 Raw v1 (train split)

- **Batch Size:** 1000 lines per batch

- **Trainer:** `UnigramTrainer` from Hugging Face `tokenizers` library

- **Special Tokens Added:** `<cls>`, `<sep>`, `<unk>`, `<pad>`, `<mask>`, `<s>`, `</s>`

---

## **Intended Uses & Limitations**

### Intended Uses

- Pre-tokenization for training Transformer-based LLMs.

- Downstream NLP tasks:

- Language modeling

- Text classification

- Question answering

- Summarization

### Limitations

- Trained exclusively on English Wikipedia text — performance may degrade in informal, domain-specific, or multilingual contexts.

- May inherit biases present in Wikipedia data.

---

## **License**

This tokenizer is released under the **MIT License**.

---

## **Citation**

If you use this tokenizer, please cite:

title = Custom Unigram Tokenizer Trained on WikiText-103 Raw v1

author = yakul259

year = 2025

publisher = Hugging Face

|

lethanhanh-dev/fresh_meat

|

lethanhanh-dev

| 2025-08-14T15:14:38Z | 0 | 0 | null |

[

"license:cc-by-nc-2.0",

"region:us"

] | null | 2025-08-14T15:14:37Z |

---

license: cc-by-nc-2.0

---

|

RobbedoesHF/mt5-xl-dutch-definitions-qlora

|

RobbedoesHF

| 2025-08-14T15:09:33Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-14T15:09:33Z |

---

license: apache-2.0

---

|

afdeting/blockassist-bc-invisible_amphibious_otter_1755183269

|

afdeting

| 2025-08-14T14:56:14Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"invisible amphibious otter",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T14:56:06Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- invisible amphibious otter

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF

|

bartowski

| 2025-08-14T14:49:17Z | 13,331 | 4 | null |

[

"gguf",

"text-generation",

"base_model:huizimao/gpt-oss-120b-uncensored-bf16",

"base_model:quantized:huizimao/gpt-oss-120b-uncensored-bf16",

"region:us"

] |

text-generation

| 2025-08-11T13:08:23Z |

---

quantized_by: bartowski

pipeline_tag: text-generation

base_model_relation: quantized

base_model: huizimao/gpt-oss-120b-uncensored-bf16

---

## Llamacpp imatrix Quantizations of gpt-oss-120b-uncensored-bf16 by huizimao

Using <a href="https://github.com/ggerganov/llama.cpp/">llama.cpp</a> release <a href="https://github.com/ggerganov/llama.cpp/releases/tag/b6115">b6115</a> for quantization.

Original model: https://huggingface.co/huizimao/gpt-oss-120b-uncensored-bf16

All quants made using imatrix option with dataset from [here](https://gist.github.com/bartowski1182/eb213dccb3571f863da82e99418f81e8) combined with Ed Addario's dataset from [here](https://huggingface.co/datasets/eaddario/imatrix-calibration/blob/main/combined_all_tiny.parquet)

Run them in [LM Studio](https://lmstudio.ai/)

Run them directly with [llama.cpp](https://github.com/ggerganov/llama.cpp), or any other llama.cpp based project

## Prompt format

No prompt format found, check original model page

## Download a file (not the whole branch) from below:

Use this one:

| Filename | Quant type | File Size | Split | Description |

| -------- | ---------- | --------- | ----- | ----------- |

| [gpt-oss-120b-uncensored-bf16-MXFP4_MOE.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-MXFP4_MOE) | MXFP4_MOE | 63.39GB | true | Special format for OpenAI's gpt-oss models, see: https://github.com/ggml-org/llama.cpp/pull/15091 *recommended* |

The reason is, the FFN (feed forward networks) of gpt-oss do not behave nicely when quantized to anything other than MXFP4, so they are kept at that level for everything.

The rest of these are provided for your own interest in case you feel like experimenting, but the size savings is basically non-existent so I would not recommend running them, they are provided simply for show:

| Filename | Quant type | File Size | Split | Description |

| -------- | ---------- | --------- | ----- | ----------- |

| [gpt-oss-120b-uncensored-bf16-Q6_K.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q6_K) | Q6_K | 63.28GB | true | Q6_K with all FFN kept at MXFP4_MOE |

| [gpt-oss-120b-uncensored-bf16-Q4_K_L.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q4_K_L) | Q4_K_L | 63.06GB | true | Uses Q8_0 for embed and output weights, Q4_K_M with all FFN kept at MXFP4_MOE |

| [gpt-oss-120b-uncensored-bf16-Q2_K_L.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q2_K_L) | Q2_K_L | 63.00GB | true | Uses Q8_0 for embed and output weights, Q2_K with all FFN kept at MXFP4_MOE |

| [gpt-oss-120b-uncensored-bf16-Q3_K_XL.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q3_K_XL) | Q3_K_XL | 62.89GB | true | Uses Q8_0 for embed and output weights. Q3_K_L with all FFN kept at MXFP4_MOE |

| [gpt-oss-120b-uncensored-bf16-Q4_K_M.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q4_K_M) | Q4_K_M | 62.84GB | true | Q4_K_M with all FFN kept at MXFP4_MOE |

| [gpt-oss-120b-uncensored-bf16-IQ4_NL.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-IQ4_NL) | IQ4_NL | 62.71GB | true | IQ4_NL with all FFN kept at MXFP4_MOE. |

| [gpt-oss-120b-uncensored-bf16-IQ3_M.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-IQ3_M) | IQ3_M | 62.71GB | true | IQ3_M with all FFN kept at MXFP4_MOE. |

| [gpt-oss-120b-uncensored-bf16-Q2_K.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q2_K) | Q2_K | 62.71GB | true | Q2_K with all FFN kept at MXFP4_MOE. |

| [gpt-oss-120b-uncensored-bf16-IQ2_M.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-IQ2_M) | IQ2_M | 62.69GB | true | IQ2_M with all FFN kept at MXFP4_MOE. |

| [gpt-oss-120b-uncensored-bf16-Q3_K_L.gguf](https://huggingface.co/bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF/tree/main/huizimao_gpt-oss-120b-uncensored-bf16-Q3_K_L) | Q3_K_L | 62.60GB | true | Q3_K_L with all FFN kept at MXFP4_MOE. |

## Embed/output weights

Some of these quants (Q3_K_XL, Q4_K_L etc) are the standard quantization method with the embeddings and output weights quantized to Q8_0 instead of what they would normally default to.

## Downloading using huggingface-cli

<details>

<summary>Click to view download instructions</summary>

First, make sure you have hugginface-cli installed:

```

pip install -U "huggingface_hub[cli]"

```

Then, you can target the specific file you want:

```

huggingface-cli download bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF --include "huizimao_gpt-oss-120b-uncensored-bf16-Q4_K_M.gguf" --local-dir ./

```

If the model is bigger than 50GB, it will have been split into multiple files. In order to download them all to a local folder, run:

```

huggingface-cli download bartowski/huizimao_gpt-oss-120b-uncensored-bf16-GGUF --include "huizimao_gpt-oss-120b-uncensored-bf16-Q8_0/*" --local-dir ./

```

You can either specify a new local-dir (huizimao_gpt-oss-120b-uncensored-bf16-Q8_0) or download them all in place (./)

</details>

## ARM/AVX information

Previously, you would download Q4_0_4_4/4_8/8_8, and these would have their weights interleaved in memory in order to improve performance on ARM and AVX machines by loading up more data in one pass.

Now, however, there is something called "online repacking" for weights. details in [this PR](https://github.com/ggerganov/llama.cpp/pull/9921). If you use Q4_0 and your hardware would benefit from repacking weights, it will do it automatically on the fly.

As of llama.cpp build [b4282](https://github.com/ggerganov/llama.cpp/releases/tag/b4282) you will not be able to run the Q4_0_X_X files and will instead need to use Q4_0.

Additionally, if you want to get slightly better quality for , you can use IQ4_NL thanks to [this PR](https://github.com/ggerganov/llama.cpp/pull/10541) which will also repack the weights for ARM, though only the 4_4 for now. The loading time may be slower but it will result in an overall speed incrase.

<details>

<summary>Click to view Q4_0_X_X information (deprecated</summary>

I'm keeping this section to show the potential theoretical uplift in performance from using the Q4_0 with online repacking.

<details>

<summary>Click to view benchmarks on an AVX2 system (EPYC7702)</summary>

| model | size | params | backend | threads | test | t/s | % (vs Q4_0) |

| ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |-------------: |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | pp512 | 204.03 ± 1.03 | 100% |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | pp1024 | 282.92 ± 0.19 | 100% |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | pp2048 | 259.49 ± 0.44 | 100% |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | tg128 | 39.12 ± 0.27 | 100% |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | tg256 | 39.31 ± 0.69 | 100% |

| qwen2 3B Q4_0 | 1.70 GiB | 3.09 B | CPU | 64 | tg512 | 40.52 ± 0.03 | 100% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | pp512 | 301.02 ± 1.74 | 147% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | pp1024 | 287.23 ± 0.20 | 101% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | pp2048 | 262.77 ± 1.81 | 101% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | tg128 | 18.80 ± 0.99 | 48% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | tg256 | 24.46 ± 3.04 | 83% |

| qwen2 3B Q4_K_M | 1.79 GiB | 3.09 B | CPU | 64 | tg512 | 36.32 ± 3.59 | 90% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | pp512 | 271.71 ± 3.53 | 133% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | pp1024 | 279.86 ± 45.63 | 100% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | pp2048 | 320.77 ± 5.00 | 124% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | tg128 | 43.51 ± 0.05 | 111% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | tg256 | 43.35 ± 0.09 | 110% |

| qwen2 3B Q4_0_8_8 | 1.69 GiB | 3.09 B | CPU | 64 | tg512 | 42.60 ± 0.31 | 105% |

Q4_0_8_8 offers a nice bump to prompt processing and a small bump to text generation

</details>

</details>

## Which file should I choose?

<details>

<summary>Click here for details</summary>

A great write up with charts showing various performances is provided by Artefact2 [here](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9)

The first thing to figure out is how big a model you can run. To do this, you'll need to figure out how much RAM and/or VRAM you have.

If you want your model running as FAST as possible, you'll want to fit the whole thing on your GPU's VRAM. Aim for a quant with a file size 1-2GB smaller than your GPU's total VRAM.

If you want the absolute maximum quality, add both your system RAM and your GPU's VRAM together, then similarly grab a quant with a file size 1-2GB Smaller than that total.

Next, you'll need to decide if you want to use an 'I-quant' or a 'K-quant'.

If you don't want to think too much, grab one of the K-quants. These are in format 'QX_K_X', like Q5_K_M.

If you want to get more into the weeds, you can check out this extremely useful feature chart:

[llama.cpp feature matrix](https://github.com/ggerganov/llama.cpp/wiki/Feature-matrix)

But basically, if you're aiming for below Q4, and you're running cuBLAS (Nvidia) or rocBLAS (AMD), you should look towards the I-quants. These are in format IQX_X, like IQ3_M. These are newer and offer better performance for their size.

These I-quants can also be used on CPU, but will be slower than their K-quant equivalent, so speed vs performance is a tradeoff you'll have to decide.

</details>

## Credits

Thank you kalomaze and Dampf for assistance in creating the imatrix calibration dataset.

Thank you ZeroWw for the inspiration to experiment with embed/output.

Thank you to LM Studio for sponsoring my work.

Want to support my work? Visit my ko-fi page here: https://ko-fi.com/bartowski

|

EYEDOL/FROM_C3_5

|

EYEDOL

| 2025-08-14T14:45:24Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"sw",

"dataset:mozilla-foundation/common_voice_13_0",

"base_model:EYEDOL/FROM_C3_4",

"base_model:finetune:EYEDOL/FROM_C3_4",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2025-08-14T06:58:35Z |

---

library_name: transformers

language:

- sw

base_model: EYEDOL/FROM_C3_4

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_13_0

metrics:

- wer

model-index:

- name: ASR_FROM_C3

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 13.0

type: mozilla-foundation/common_voice_13_0

config: sw

split: None

args: 'config: sw, split: test'

metrics:

- name: Wer

type: wer

value: 17.669860078154546

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ASR_FROM_C3

This model is a fine-tuned version of [EYEDOL/FROM_C3_4](https://huggingface.co/EYEDOL/FROM_C3_4) on the Common Voice 13.0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2687

- Wer: 17.6699

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:-------:|

| 0.0239 | 0.6918 | 2000 | 0.2687 | 17.6699 |

### Framework versions

- Transformers 4.52.4

- Pytorch 2.6.0+cu124

- Datasets 3.6.0

- Tokenizers 0.21.2

|

chainway9/blockassist-bc-untamed_quick_eel_1755180681

|

chainway9

| 2025-08-14T14:40:58Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed quick eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T14:40:54Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed quick eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

KolyaGudenkauf/JUNE

|

KolyaGudenkauf

| 2025-08-14T14:39:31Z | 0 | 0 | null |

[

"license:other",

"region:us"

] | null | 2025-08-14T13:46:46Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

---

|

runchat/lora-test-kohya

|

runchat

| 2025-08-14T14:28:43Z | 0 | 0 | null |

[

"flux",

"lora",

"kohya",

"text-to-image",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:mit",

"region:us"

] |

text-to-image

| 2025-08-14T14:05:42Z |

---

license: mit

base_model: black-forest-labs/FLUX.1-dev

tags:

- flux

- lora

- kohya

- text-to-image

widget:

- text: 'CasaRunchat style'

---

# Flux LoRA: CasaRunchat (Kohya Format)

This is a LoRA trained with the [Kohya_ss training scripts](https://github.com/bmaltais/kohya_ss) in Kohya format.

## Usage

Use the trigger word `CasaRunchat` in your prompts in ComfyUI, AUTOMATIC1111, etc.

## Training Details

- Base model: `black-forest-labs/FLUX.1-dev`

- Total Steps: ~`10`

- Learning rate: `0.0008`

- LoRA rank: `32`

- Trigger word: `CasaRunchat`

- Format: Kohya LoRA (.safetensors)

|

koloni/blockassist-bc-deadly_graceful_stingray_1755179837

|

koloni

| 2025-08-14T14:23:50Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"deadly graceful stingray",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T14:23:46Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- deadly graceful stingray

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

milliarderdol/blockassist-bc-roaring_rough_scorpion_1755178804

|

milliarderdol

| 2025-08-14T14:20:58Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"roaring rough scorpion",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T14:14:50Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- roaring rough scorpion

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/retro-neon-style-flux-sd-xl-illustrious-xl-pony

|

Muapi

| 2025-08-14T14:20:04Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-14T14:19:49Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Retro neon style [FLUX+SD+XL+Illustrious-XL+Pony]

**Base model**: Flux.1 D

**Trained words**: retro_neon

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:569937@747123", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

TAUR-dev/M-sft1e-5_ppo_countdown3arg_format0.1_transition0.1-rl

|

TAUR-dev

| 2025-08-14T14:16:41Z | 0 | 0 | null |

[

"safetensors",

"qwen2",

"en",

"license:mit",

"region:us"

] | null | 2025-08-14T14:15:29Z |

---

language: en

license: mit

---

# M-sft1e-5_ppo_countdown3arg_format0.1_transition0.1-rl

## Model Details

- **Training Method**: VeRL Reinforcement Learning (RL)

- **Stage Name**: rl

- **Experiment**: sft1e-5_ppo_countdown3arg_format0.1_transition0.1

- **RL Framework**: VeRL (Versatile Reinforcement Learning)

## Training Configuration

## Experiment Tracking

🔗 **View complete experiment details**: https://huggingface.co/datasets/TAUR-dev/D-ExpTracker__sft1e-5_ppo_countdown3arg_format0.1_transition0.1__v1

## Usage

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("TAUR-dev/M-sft1e-5_ppo_countdown3arg_format0.1_transition0.1-rl")

model = AutoModelForCausalLM.from_pretrained("TAUR-dev/M-sft1e-5_ppo_countdown3arg_format0.1_transition0.1-rl")

```

|

ench100/bodyandface

|

ench100

| 2025-08-14T13:55:19Z | 7 | 0 |

diffusers

|

[

"diffusers",

"text-to-image",

"lora",

"template:diffusion-lora",

"base_model:lodestones/Chroma",

"base_model:adapter:lodestones/Chroma",

"region:us"

] |

text-to-image

| 2025-08-12T08:58:41Z |

---

tags:

- text-to-image

- lora

- diffusers

- template:diffusion-lora

widget:

- output:

url: images/2.png

text: '-'

base_model: lodestones/Chroma

instance_prompt: null

---

# forME

<Gallery />

## Download model

[Download](/ench100/bodyandface/tree/main) them in the Files & versions tab.

|

BootesVoid/cmebe0izl0bggrts8htc1l7bh_cmebeiffk0bhtrts8cf3sg6l2

|

BootesVoid

| 2025-08-14T13:54:58Z | 0 | 0 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-08-14T13:54:57Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: YYY111

---

# Cmebe0Izl0Bggrts8Htc1L7Bh_Cmebeiffk0Bhtrts8Cf3Sg6L2

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using AI toolkit: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `YYY111` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py

import replicate

input = {

"prompt": "YYY111",

"lora_weights": "https://huggingface.co/BootesVoid/cmebe0izl0bggrts8htc1l7bh_cmebeiffk0bhtrts8cf3sg6l2/resolve/main/lora.safetensors"

}

output = replicate.run(

"black-forest-labs/flux-dev-lora",

input=input

)

for index, item in enumerate(output):

with open(f"output_{index}.webp", "wb") as file:

file.write(item.read())

```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('BootesVoid/cmebe0izl0bggrts8htc1l7bh_cmebeiffk0bhtrts8cf3sg6l2', weight_name='lora.safetensors')

image = pipeline('YYY111').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Training details

- Steps: 2000

- Learning rate: 0.0004

- LoRA rank: 16

## Contribute your own examples

You can use the [community tab](https://huggingface.co/BootesVoid/cmebe0izl0bggrts8htc1l7bh_cmebeiffk0bhtrts8cf3sg6l2/discussions) to add images that show off what you’ve made with this LoRA.

|

indoempatnol/blockassist-bc-fishy_wary_swan_1755178051

|

indoempatnol

| 2025-08-14T13:54:44Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"fishy wary swan",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T13:54:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- fishy wary swan

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

david-cleon/Meta-Llama-3.1-8B-q4_k_m-paul-graham-guide-GGUF

|

david-cleon

| 2025-08-14T13:48:30Z | 0 | 0 |

transformers

|

[

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-08-14T13:47:15Z |

---

base_model: unsloth/meta-llama-3.1-8b-instruct-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** david-cleon

- **License:** apache-2.0

- **Finetuned from model :** unsloth/meta-llama-3.1-8b-instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

bruhzair/prototype-0.4x318

|

bruhzair

| 2025-08-14T13:47:11Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-14T13:30:10Z |

---

base_model: []

library_name: transformers

tags:

- mergekit

- merge

---

# prototype-0.4x318

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [Multi-SLERP](https://goddard.blog/posts/multislerp-wow-what-a-cool-idea) merge method using /workspace/cache/models--deepcogito--cogito-v2-preview-llama-70B/snapshots/1e1d12e8eaebd6084a8dcf45ecdeaa2f4b8879ce as a base.

### Models Merged

The following models were included in the merge:

* /workspace/cache/models--BruhzWater--Apocrypha-L3.3-70b-0.4a/snapshots/64723af7b548b0f19e8b4b3867117393282c7839

* /workspace/prototype-0.4x310

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: /workspace/prototype-0.4x310

parameters:

weight: [0.5]

- model: /workspace/cache/models--BruhzWater--Apocrypha-L3.3-70b-0.4a/snapshots/64723af7b548b0f19e8b4b3867117393282c7839

parameters:

weight: [0.5]

base_model: /workspace/cache/models--deepcogito--cogito-v2-preview-llama-70B/snapshots/1e1d12e8eaebd6084a8dcf45ecdeaa2f4b8879ce

merge_method: multislerp

tokenizer:

source: base

chat_template: llama3

parameters:

normalize_weights: false

eps: 1e-8

pad_to_multiple_of: 8

int8_mask: true

dtype: bfloat16

```

|

elmenbillion/blockassist-bc-beaked_sharp_otter_1755177307

|

elmenbillion

| 2025-08-14T13:41:52Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"beaked sharp otter",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-14T13:41:48Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- beaked sharp otter

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

AnthonyPa57/HF-torch-demo-R

|

AnthonyPa57

| 2025-08-14T13:41:52Z | 0 | 0 | null |

[

"safetensors",

"pytorch",

"text-generation",

"moe",

"custom_code",

"en",

"license:mit",

"model-index",

"region:us"

] |

text-generation

| 2025-08-14T13:30:26Z |

---

tags:

- pytorch

- text-generation

- moe

- custom_code

library: pytorch

license: mit

language:

- en

model-index:

- name: AnthonyPa57/HF-torch-demo-R

results:

- task:

type: text-generation

name: text-generation

dataset:

name: pretraining

type: pretraining

metrics:

- type: CEL

value: '10.438'

name: Cross Entropy Loss

verified: false

---

# Random Pytorch model used as a demo to show how to push custom models to HF hub

| parameters | precision |

| :--------: | :-------: |

|907.63 M|BF16|

|

Suu/Klear-Reasoner-8B

|

Suu

| 2025-08-14T13:38:22Z | 37 | 5 | null |

[

"safetensors",

"qwen3",

"en",

"dataset:Suu/KlearReasoner-MathSub-30K",

"dataset:Suu/KlearReasoner-CodeSub-15K",

"arxiv:2508.07629",

"base_model:Suu/Klear-Reasoner-8B-SFT",

"base_model:finetune:Suu/Klear-Reasoner-8B-SFT",

"license:apache-2.0",

"region:us"

] | null | 2025-08-11T08:45:35Z |

---

license: apache-2.0

language:

- en

base_model:

- Suu/Klear-Reasoner-8B-SFT

datasets:

- Suu/KlearReasoner-MathSub-30K

- Suu/KlearReasoner-CodeSub-15K

metrics:

- accuracy

---

# ✨ Klear-Reasoner-8B

We present Klear-Reasoner, a model with long reasoning capabilities that demonstrates careful deliberation during problem solving, achieving outstanding performance across multiple benchmarks. We investigate two key issues with current clipping mechanisms in RL: Clipping suppresses critical exploration signals and ignores suboptimal trajectories. To address these challenges, we propose **G**radient-**P**reserving clipping **P**olicy **O**ptimization (**GPPO**) that gently backpropagates gradients from clipped tokens.

| Resource | Link |

|---|---|

| 📝 Preprints | [Paper](https://arxiv.org/pdf/2508.07629) |

| 🤗 Daily Paper | [Paper](https://huggingface.co/papers/2508.07629) |

| 🤗 Model Hub | [Klear-Reasoner-8B](https://huggingface.co/Suu/Klear-Reasoner-8B) |

| 🤗 Dataset Hub | [Math RL](https://huggingface.co/datasets/Suu/KlearReasoner-MathSub-30K) |

| 🤗 Dataset Hub | [Code RL](https://huggingface.co/datasets/Suu/KlearReasoner-CodeSub-15K) |

| 🐛 Issues & Discussions | [GitHub Issues](https://github.com/suu990901/KlearReasoner/issues) |

| 📧 Contact | [email protected] |

## 📌 Overview

<div align="center">

<img src="main_result.png" width="100%"/>

<sub>Benchmark accuracy of Klear-Reasoner-8B on AIME 2024/2025 (avg@64), LiveCodeBench V5 (2024/08/01-2025/02/01, avg@8), and v6 (2025/02/01-2025/05/01, avg@8).</sub>

</div>

Klear-Reasoner is an 8-billion-parameter reasoning model that achieves **SOTA** performance on challenging **math and coding benchmarks**:

| Benchmark | AIME 2024 | AIME 2025 | LiveCodeBench V5 | LiveCodeBench V6 |

|---|---|---|---|---|

| **Score** | **90.5 %** | **83.2 %** | **66.0 %** | **58.1 %** |

The model combines:

1. **Quality-centric long CoT SFT** – distilled from DeepSeek-R1-0528.

2. **Gradient-Preserving Clipping Policy Optimization (GPPO)** – a novel RL method that **keeps gradients from clipped tokens** to boost exploration & convergence.

---

### Evaluation

When we expand the inference budget to 64K and adopt the YaRN method with a scaling factor of 2.5. **Evaluation is coming soon, stay tuned.**

## 📊 Benchmark Results (Pass@1)

| Model | AIME2024<br>avg@64 | AIME2025<br>avg@64 | HMMT2025<br>avg@64 | LCB V5<br>avg@8 | LCB V6<br>avg@8 |

|-------|--------------------|--------------------|--------------------|-----------------|-----------------|

| AReal-boba-RL-7B | 61.9 | 48.3 | 29.4 | 34.3 | 31.0† |

| MiMo-7B-RL | 68.2 | 55.4 | 35.7 | 57.8 | 49.3 |

| Skywork-OR1-7B | 70.2 | 54.6 | 35.7 | 47.6 | 42.7 |

| AceReason-Nemotron-1.1-7B | 72.6 | 64.8 | 42.9 | 57.2 | 52.1 |

| POLARIS-4B-Preview | 81.2 | _79.4_ | 58.7 | 58.5† | 53.0† |

| Qwen3-8B | 76.0 | 67.3 | 44.7† | 57.5 | 48.4† |

| Deepseek-R1-0528-Distill-8B | _86.0_ | 76.3 | 61.5 | 61.0† | 51.6† |