Upload non-data

Browse files- .gitattributes +2 -55

- LICENSE +202 -0

- README.md +135 -0

- doc/documentation.pdf +0 -0

- img/label-studio-task-overview.png +0 -0

- scripts/adjust_annotation_end.py +226 -0

- scripts/create_poner_dataset_conll.py +112 -0

- scripts/poner-1_0.py +132 -0

- scripts/remove_start_whitespace.py +72 -0

- scripts/requirements.txt +10 -0

- scripts/split_poner_dataset_conll.py +82 -0

.gitattributes

CHANGED

|

@@ -1,55 +1,2 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

-

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

-

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

-

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

-

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.lz4 filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 25 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 27 |

-

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

-

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 29 |

-

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 35 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 36 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 37 |

-

# Audio files - uncompressed

|

| 38 |

-

*.pcm filter=lfs diff=lfs merge=lfs -text

|

| 39 |

-

*.sam filter=lfs diff=lfs merge=lfs -text

|

| 40 |

-

*.raw filter=lfs diff=lfs merge=lfs -text

|

| 41 |

-

# Audio files - compressed

|

| 42 |

-

*.aac filter=lfs diff=lfs merge=lfs -text

|

| 43 |

-

*.flac filter=lfs diff=lfs merge=lfs -text

|

| 44 |

-

*.mp3 filter=lfs diff=lfs merge=lfs -text

|

| 45 |

-

*.ogg filter=lfs diff=lfs merge=lfs -text

|

| 46 |

-

*.wav filter=lfs diff=lfs merge=lfs -text

|

| 47 |

-

# Image files - uncompressed

|

| 48 |

-

*.bmp filter=lfs diff=lfs merge=lfs -text

|

| 49 |

-

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 50 |

-

*.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

-

*.tiff filter=lfs diff=lfs merge=lfs -text

|

| 52 |

-

# Image files - compressed

|

| 53 |

-

*.jpg filter=lfs diff=lfs merge=lfs -text

|

| 54 |

-

*.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 55 |

-

*.webp filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 1 |

+

# Auto detect text files and perform LF normalization

|

| 2 |

+

* text=auto

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

LICENSE

ADDED

|

@@ -0,0 +1,202 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

Apache License

|

| 3 |

+

Version 2.0, January 2004

|

| 4 |

+

http://www.apache.org/licenses/

|

| 5 |

+

|

| 6 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 7 |

+

|

| 8 |

+

1. Definitions.

|

| 9 |

+

|

| 10 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 11 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 12 |

+

|

| 13 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 14 |

+

the copyright owner that is granting the License.

|

| 15 |

+

|

| 16 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 17 |

+

other entities that control, are controlled by, or are under common

|

| 18 |

+

control with that entity. For the purposes of this definition,

|

| 19 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 20 |

+

direction or management of such entity, whether by contract or

|

| 21 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 22 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 23 |

+

|

| 24 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 25 |

+

exercising permissions granted by this License.

|

| 26 |

+

|

| 27 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 28 |

+

including but not limited to software source code, documentation

|

| 29 |

+

source, and configuration files.

|

| 30 |

+

|

| 31 |

+

"Object" form shall mean any form resulting from mechanical

|

| 32 |

+

transformation or translation of a Source form, including but

|

| 33 |

+

not limited to compiled object code, generated documentation,

|

| 34 |

+

and conversions to other media types.

|

| 35 |

+

|

| 36 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 37 |

+

Object form, made available under the License, as indicated by a

|

| 38 |

+

copyright notice that is included in or attached to the work

|

| 39 |

+

(an example is provided in the Appendix below).

|

| 40 |

+

|

| 41 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 42 |

+

form, that is based on (or derived from) the Work and for which the

|

| 43 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 44 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 45 |

+

of this License, Derivative Works shall not include works that remain

|

| 46 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 47 |

+

the Work and Derivative Works thereof.

|

| 48 |

+

|

| 49 |

+

"Contribution" shall mean any work of authorship, including

|

| 50 |

+

the original version of the Work and any modifications or additions

|

| 51 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 52 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 53 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 54 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 55 |

+

means any form of electronic, verbal, or written communication sent

|

| 56 |

+

to the Licensor or its representatives, including but not limited to

|

| 57 |

+

communication on electronic mailing lists, source code control systems,

|

| 58 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 59 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 60 |

+

excluding communication that is conspicuously marked or otherwise

|

| 61 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 62 |

+

|

| 63 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 64 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 65 |

+

subsequently incorporated within the Work.

|

| 66 |

+

|

| 67 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 68 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 69 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 70 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 71 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 72 |

+

Work and such Derivative Works in Source or Object form.

|

| 73 |

+

|

| 74 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 75 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 76 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 77 |

+

(except as stated in this section) patent license to make, have made,

|

| 78 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 79 |

+

where such license applies only to those patent claims licensable

|

| 80 |

+

by such Contributor that are necessarily infringed by their

|

| 81 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 82 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 83 |

+

institute patent litigation against any entity (including a

|

| 84 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 85 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 86 |

+

or contributory patent infringement, then any patent licenses

|

| 87 |

+

granted to You under this License for that Work shall terminate

|

| 88 |

+

as of the date such litigation is filed.

|

| 89 |

+

|

| 90 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 91 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 92 |

+

modifications, and in Source or Object form, provided that You

|

| 93 |

+

meet the following conditions:

|

| 94 |

+

|

| 95 |

+

(a) You must give any other recipients of the Work or

|

| 96 |

+

Derivative Works a copy of this License; and

|

| 97 |

+

|

| 98 |

+

(b) You must cause any modified files to carry prominent notices

|

| 99 |

+

stating that You changed the files; and

|

| 100 |

+

|

| 101 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 102 |

+

that You distribute, all copyright, patent, trademark, and

|

| 103 |

+

attribution notices from the Source form of the Work,

|

| 104 |

+

excluding those notices that do not pertain to any part of

|

| 105 |

+

the Derivative Works; and

|

| 106 |

+

|

| 107 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 108 |

+

distribution, then any Derivative Works that You distribute must

|

| 109 |

+

include a readable copy of the attribution notices contained

|

| 110 |

+

within such NOTICE file, excluding those notices that do not

|

| 111 |

+

pertain to any part of the Derivative Works, in at least one

|

| 112 |

+

of the following places: within a NOTICE text file distributed

|

| 113 |

+

as part of the Derivative Works; within the Source form or

|

| 114 |

+

documentation, if provided along with the Derivative Works; or,

|

| 115 |

+

within a display generated by the Derivative Works, if and

|

| 116 |

+

wherever such third-party notices normally appear. The contents

|

| 117 |

+

of the NOTICE file are for informational purposes only and

|

| 118 |

+

do not modify the License. You may add Your own attribution

|

| 119 |

+

notices within Derivative Works that You distribute, alongside

|

| 120 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 121 |

+

that such additional attribution notices cannot be construed

|

| 122 |

+

as modifying the License.

|

| 123 |

+

|

| 124 |

+

You may add Your own copyright statement to Your modifications and

|

| 125 |

+

may provide additional or different license terms and conditions

|

| 126 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 127 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 128 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 129 |

+

the conditions stated in this License.

|

| 130 |

+

|

| 131 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 132 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 133 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 134 |

+

this License, without any additional terms or conditions.

|

| 135 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 136 |

+

the terms of any separate license agreement you may have executed

|

| 137 |

+

with Licensor regarding such Contributions.

|

| 138 |

+

|

| 139 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 140 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 141 |

+

except as required for reasonable and customary use in describing the

|

| 142 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 143 |

+

|

| 144 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 145 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 146 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 147 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 148 |

+

implied, including, without limitation, any warranties or conditions

|

| 149 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 150 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 151 |

+

appropriateness of using or redistributing the Work and assume any

|

| 152 |

+

risks associated with Your exercise of permissions under this License.

|

| 153 |

+

|

| 154 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 155 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 156 |

+

unless required by applicable law (such as deliberate and grossly

|

| 157 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 158 |

+

liable to You for damages, including any direct, indirect, special,

|

| 159 |

+

incidental, or consequential damages of any character arising as a

|

| 160 |

+

result of this License or out of the use or inability to use the

|

| 161 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 162 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 163 |

+

other commercial damages or losses), even if such Contributor

|

| 164 |

+

has been advised of the possibility of such damages.

|

| 165 |

+

|

| 166 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 167 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 168 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 169 |

+

or other liability obligations and/or rights consistent with this

|

| 170 |

+

License. However, in accepting such obligations, You may act only

|

| 171 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 172 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 173 |

+

defend, and hold each Contributor harmless for any liability

|

| 174 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 175 |

+

of your accepting any such warranty or additional liability.

|

| 176 |

+

|

| 177 |

+

END OF TERMS AND CONDITIONS

|

| 178 |

+

|

| 179 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 180 |

+

|

| 181 |

+

To apply the Apache License to your work, attach the following

|

| 182 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 183 |

+

replaced with your own identifying information. (Don't include

|

| 184 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 185 |

+

comment syntax for the file format. We also recommend that a

|

| 186 |

+

file or class name and description of purpose be included on the

|

| 187 |

+

same "printed page" as the copyright notice for easier

|

| 188 |

+

identification within third-party archives.

|

| 189 |

+

|

| 190 |

+

Copyright [yyyy] [name of copyright owner]

|

| 191 |

+

|

| 192 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 193 |

+

you may not use this file except in compliance with the License.

|

| 194 |

+

You may obtain a copy of the License at

|

| 195 |

+

|

| 196 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 197 |

+

|

| 198 |

+

Unless required by applicable law or agreed to in writing, software

|

| 199 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 200 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 201 |

+

See the License for the specific language governing permissions and

|

| 202 |

+

limitations under the License.

|

README.md

ADDED

|

@@ -0,0 +1,135 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# PERO OCR NER 1.0

|

| 2 |

+

|

| 3 |

+

Dataset

|

| 4 |

+

|

| 5 |

+

This is a dataset created for master thesis "Document Information Extraction".

|

| 6 |

+

Author: Roman Janík (xjanik20), 2023

|

| 7 |

+

Faculty of Information Technology, Brno University of Technology

|

| 8 |

+

|

| 9 |

+

## Description

|

| 10 |

+

|

| 11 |

+

This is a **P**ERO **O**CR **NER** 1.0 dataset for Named Entity Recognition. The dataset consists of 9,310 Czech sentences with 14,639 named entities.

|

| 12 |

+

Source data are Czech historical chronicles mostly from the first half of the 20th century. The chronicles scanned images were processed by PERO OCR [1].

|

| 13 |

+

Text data were then annotated in the Label Studio tool. The process was semi-automated, first a NER model was used to pre-annotate the data and then

|

| 14 |

+

the pre-annotations were manually refined. Named entity types are: *Personal names*, *Institutions*, *Geographical names*, *Time expressions*, and *Artifact names/Objects*; the same as in Czech Historical Named Entity Corpus (CHNEC)[2].

|

| 15 |

+

|

| 16 |

+

The CoNLL files are formatted as follows:

|

| 17 |

+

|

| 18 |

+

Each line in

|

| 19 |

+

the corpus contains information about one word/token. The first column is the actual

|

| 20 |

+

word, and the second column is a Named Entity class in a BIO format. An empty line is a sentence separator.

|

| 21 |

+

|

| 22 |

+

For detailed documentation, please see [doc/documentation.pdf](https://github.com/roman-janik/PONER/blob/main/doc/documentation.pdf). In case of any question, please use GitHub Issues.

|

| 23 |

+

|

| 24 |

+

## Results

|

| 25 |

+

|

| 26 |

+

This dataset was used for training several NER models.

|

| 27 |

+

|

| 28 |

+

### RobeCzech

|

| 29 |

+

|

| 30 |

+

RobeCzech [3], a Czech version of RoBERTa [4] model was finetuned using PONER, CHNEC [2], and Czech Named Entity Corpus (CNEC)[5]. All datasets train and test splits were concatenated and used together during training and the model was then evaluated separately on each dataset.

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

| Model | CNEC 2.0 test | CHNEC 1.0 test | PONER 1.0 test |

|

| 34 |

+

| --------- | --------- | --------- | --------- |

|

| 35 |

+

| RobeCzech | 0.886 | 0.876 | **0.871** |

|

| 36 |

+

|

| 37 |

+

### Czech RoBERTa models

|

| 38 |

+

|

| 39 |

+

Smaller versions of RoBERTa [4] model were trained on an own text dataset and then finetuned using PONER, CHNEC [2] and Czech Named Entity Corpus (CNEC)[5]. All datasets train and test splits were concatenated and used together during training and the model was then evaluated separately on each dataset. Two configurations were used: CNEC + CHNEC + PONER and PONER.

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

| Model | Configuration | CNEC 2.0 test | CHNEC 1.0 test | PONER 1.0 test |

|

| 43 |

+

| --------- | --------- | --------- | --------- | --------- |

|

| 44 |

+

| Czech RoBERTa 8L_512H| CNEC + CHNEC + PONER | 0.800 | 0.867 | **0.841** |

|

| 45 |

+

| Czech RoBERTa 8L_512H | PONER | - | - | **0.832** |

|

| 46 |

+

|

| 47 |

+

## Data

|

| 48 |

+

|

| 49 |

+

Data are organized as follows: `data/conll` contains dataset CoNLL files, with whole data in `poner.conll` and splits used

|

| 50 |

+

for training in the original thesis. These splits are 0.45/0.50/0.05 for train/test/dev. You can create your own splits with `scripts/split_poner_dataset_conll.py`. `data/hugging_face` contains original splits in the Hugging Face format. `data/label_studio_annotations`

|

| 51 |

+

contains the final Label Studio JSON export file. `data/source_data` contains original text and image files of annotated pages.

|

| 52 |

+

|

| 53 |

+

#### Examples

|

| 54 |

+

|

| 55 |

+

CoNLL:

|

| 56 |

+

|

| 57 |

+

```

|

| 58 |

+

Od O

|

| 59 |

+

9. B-t

|

| 60 |

+

listopadu I-t

|

| 61 |

+

1895 I-t

|

| 62 |

+

zastupoval O

|

| 63 |

+

starostu O

|

| 64 |

+

Fr B-p

|

| 65 |

+

. I-p

|

| 66 |

+

Štěpka I-p

|

| 67 |

+

zemřel O

|

| 68 |

+

2. B-t

|

| 69 |

+

února I-t

|

| 70 |

+

1896 I-t

|

| 71 |

+

) O

|

| 72 |

+

pan O

|

| 73 |

+

Jindřich B-p

|

| 74 |

+

Matzenauer I-p

|

| 75 |

+

. O

|

| 76 |

+

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

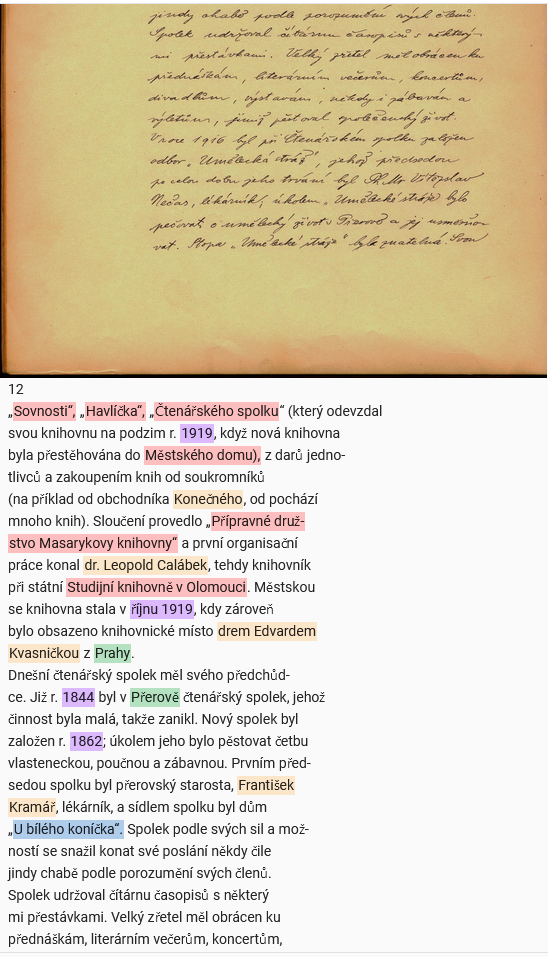

Label Studio page:

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

## Scripts

|

| 84 |

+

|

| 85 |

+

Directory `scripts` contain Python scripts used for the creation of the dataset. There are two scripts for

|

| 86 |

+

editing Label Studio JSON annotation file, one for creating CoNLL version out of an annotation file and text files,

|

| 87 |

+

one for creating splits and one for loading CoNNL files and transforming them to the Hugging Face dataset format. Scripts are written in Python 10.0.

|

| 88 |

+

To be able to run all scripts, in the scripts directory run the:

|

| 89 |

+

|

| 90 |

+

```shellscript

|

| 91 |

+

pip install -r requirements.txt

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

## License

|

| 95 |

+

|

| 96 |

+

PONER is licensed under the Apache License Version 2.0.

|

| 97 |

+

|

| 98 |

+

## Citation

|

| 99 |

+

|

| 100 |

+

If you use PONER in your work, please cite the

|

| 101 |

+

[Document Information Extraction](https://dspace.vutbr.cz/handle/11012/213801?locale-attribute=en).

|

| 102 |

+

|

| 103 |

+

```

|

| 104 |

+

@mastersthesis{janik-2023-document-information-extraction,

|

| 105 |

+

title = "Document Information Extraction",

|

| 106 |

+

author = "Janík, Roman",

|

| 107 |

+

language = "eng",

|

| 108 |

+

year = "2023",

|

| 109 |

+

school = "Brno University of Technology, Faculty of Information Technology",

|

| 110 |

+

url = "https://dspace.vutbr.cz/handle/11012/213801?locale-attribute=en",

|

| 111 |

+

type = "Master’s thesis",

|

| 112 |

+

note = "Supervisor Ing. Michal Hradiš, Ph.D."

|

| 113 |

+

}

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

+

## References

|

| 117 |

+

[1] - **O Kodym, M Hradiš**: *Page Layout Analysis System for Unconstrained Historic Documents.* ICDAR, 2021, [PERO OCR](https://pero-ocr.fit.vutbr.cz/).

|

| 118 |

+

|

| 119 |

+

[2] - **Hubková, H., Kral, P. and Pettersson, E.** Czech Historical Named Entity

|

| 120 |

+

Corpus v 1.0. In: *Proceedings of the 12th Language Resources and Evaluation Conference.* Marseille, France: European Language Resources Association, May 2020, p. 4458–4465. ISBN 979-10-95546-34-4. Available at:

|

| 121 |

+

https://aclanthology.org/2020.lrec-1.549.

|

| 122 |

+

|

| 123 |

+

[3] - **Straka, M., Náplava, J., Straková, J. and Samuel, D.** RobeCzech: Czech

|

| 124 |

+

RoBERTa, a Monolingual Contextualized Language Representation Model. In: *24th

|

| 125 |

+

International Conference on Text, Speech and Dialogue.* Cham, Switzerland:

|

| 126 |

+

Springer, 2021, p. 197–209. ISBN 978-3-030-83526-2.

|

| 127 |

+

|

| 128 |

+

[4] - **Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M. et al.** RoBERTa: A Robustly

|

| 129 |

+

Optimized BERT Pretraining Approach. 2019. Available at:

|

| 130 |

+

http://arxiv.org/abs/1907.11692.

|

| 131 |

+

|

| 132 |

+

[5] - **Ševčíková, M., Žabokrtský, Z., Straková, J. and Straka, M.** Czech Named

|

| 133 |

+

Entity Corpus 2.0. 2014. LINDAT/CLARIAH-CZ digital library at the Institute of Formal

|

| 134 |

+

and Applied Linguistics (ÚFAL), Faculty of Mathematics and Physics, Charles University.

|

| 135 |

+

Available at: http://hdl.handle.net/11858/00-097C-0000-0023-1B22-8.

|

doc/documentation.pdf

ADDED

|

Binary file (915 kB). View file

|

|

|

img/label-studio-task-overview.png

ADDED

|

scripts/adjust_annotation_end.py

ADDED

|

@@ -0,0 +1,226 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Author: Roman Janík

|

| 2 |

+

# Script for adjusting the end of entity annotation span in text. Label Studio marks additional characters

|

| 3 |

+

# at the end of entity, which do not belong to it (usually dot or comma).

|

| 4 |

+

#

|

| 5 |

+

|

| 6 |

+

import argparse

|

| 7 |

+

import json

|

| 8 |

+

import re

|

| 9 |

+

|

| 10 |

+

from pynput import keyboard

|

| 11 |

+

from pynput.keyboard import Key

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

def get_args():

|

| 15 |

+

parser = argparse.ArgumentParser(description="Script for adjusting the end of entity annotation span in text. Label\

|

| 16 |

+

Studio marks additional characters at the end of entity, which do not belong to it\

|

| 17 |

+

(usually dot or comma).")

|

| 18 |

+

parser.add_argument("-s", "--source_file", required=True, help="Path to source Label Studio json annotations file.")

|

| 19 |

+

args = parser.parse_args()

|

| 20 |

+

return args

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def save(annotations_file, annotations):

|

| 24 |

+

with open(annotations_file, "w", encoding='utf8') as f:

|

| 25 |

+

json.dump(annotations, f, indent=2, ensure_ascii=False)

|

| 26 |

+

print("Annotations were saved!")

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

def auto_correct(n_entity_text, n_entity_type):

|

| 30 |

+

if len(n_entity_text) >= 2 and n_entity_text[-2].isalnum() and n_entity_text[-1] == "\n":

|

| 31 |

+

return 1

|

| 32 |

+

|

| 33 |

+

if n_entity_type == "Artifact names/Objects":

|

| 34 |

+

monetary_u = ["zl.", "kr.", "zl. r. m.", "kr. r. m.", "tol.", "zl. r. č.", "h.", "hal.", "fl.", "gr."]

|

| 35 |

+

if n_entity_text in monetary_u:

|

| 36 |

+

return 0

|

| 37 |

+

to_be_determined = ["Uh.", "př.", "č.", "R.", "lt.", "r.", "J. K. K.", "sv. Karla\nBor.",

|

| 38 |

+

"čís. 80. Sov. zák. a nař.", "čís. 80\nSb. z. a n."]

|

| 39 |

+

if n_entity_text in to_be_determined:

|

| 40 |

+

return -1

|

| 41 |

+

if n_entity_text.endswith("Sb."):

|

| 42 |

+

return 0

|

| 43 |

+

if len(n_entity_text) >= 2 and n_entity_text[-2].isalnum():

|

| 44 |

+

return 1

|

| 45 |

+

|

| 46 |

+

if n_entity_type == "Time expressions":

|

| 47 |

+

# year

|

| 48 |

+

if re.search("^[0-9]{4}$", n_entity_text[:-1]):

|

| 49 |

+

return 1

|

| 50 |

+

# single day

|

| 51 |

+

if re.search("^[0-9][.]$|^[0-9]{2}[.]$", n_entity_text):

|

| 52 |

+

return 0

|

| 53 |

+

# single day with comma

|

| 54 |

+

if re.search("^[0-9][,]$|^[0-9]{2}[,]$", n_entity_text):

|

| 55 |

+

return 1

|

| 56 |

+

# day and month

|

| 57 |

+

if re.search("^[0-9][.][ ]?[0-9][.]$|^[0-9][.][ ]?[0-9]{2}[.]$|^[0-9]{2}[.][ ]?[0-9][.]$|^[0-9]{2}[.][ ]?["

|

| 58 |

+

"0-9]{2}[.]$", n_entity_text):

|

| 59 |

+

return 0

|

| 60 |

+

# year span

|

| 61 |

+

if re.search("^[0-9]{4}[ ]?[-][ ]?[0-9]{4}$|^[0-9]{4}[ ]?[-][ ]?[0-9]{2}$", n_entity_text[:-1]):

|

| 62 |

+

return 1

|

| 63 |

+

# date with "hod." or "t.r." at the end

|

| 64 |

+

if n_entity_text.endswith(("hod.", "t.r.", "t. r.", "t.m.", "t. m.")):

|

| 65 |

+

return 0

|

| 66 |

+

|

| 67 |

+

if n_entity_type == "Geographical names":

|

| 68 |

+

# street name correct

|

| 69 |

+

if n_entity_text.endswith(("ul.", "tř.", "nám.")):

|

| 70 |

+

return 0

|

| 71 |

+

# street name incorrect

|

| 72 |

+

if n_entity_text.endswith(("ul.,", "tř.,", "nám.,")):

|

| 73 |

+

return 1

|

| 74 |

+

|

| 75 |

+

if n_entity_type == "Personal names":

|

| 76 |

+

# names ending with "ml." or "st." correct

|

| 77 |

+

if n_entity_text.endswith(("ml.", "st.")):

|

| 78 |

+

return 0

|

| 79 |

+

# names ending with "ml." or "st." incorrect

|

| 80 |

+

if n_entity_text.endswith(("ml.,", "st.,")):

|

| 81 |

+

return 1

|

| 82 |

+

|

| 83 |

+

if n_entity_type == "Institutions":

|

| 84 |

+

# M.N.V., O.N.V., K.N.V., N.J., N.F., J.Z.D.

|

| 85 |

+

if re.search("^[M][.][ ]?[N][.][ ]?[V][.]$|^[O][.][ ]?[N][.][ ]?[V][.]$|^[K][.][ ]?[N][.][ ]?[V][.]$|^[N][.][ "

|

| 86 |

+

"]?[J][.]$|^[N][.][ ]?[F][.]|^[J][.][ ]?[Z][.][ ]?[D][.]$", n_entity_text):

|

| 87 |

+

return 0

|

| 88 |

+

# M.N.V., O.N.V., K.N.V., N.J., N.F., J.Z.D. with additional char

|

| 89 |

+

if re.search(

|

| 90 |

+

"^[M][.][ ]?[N][.][ ]?[V][.]$|^[O][.][ ]?[N][.][ ]?[V][.]$|^[K][.][ ]?[N][.][ ]?[V][.]$|^[N][.][ "

|

| 91 |

+

"]?[J][.]$|^[N][.][ ]?[F][.]|^[J][.][ ]?[Z][.][ ]?[D][.]$", n_entity_text[:-1]):

|

| 92 |

+

return 1

|

| 93 |

+

if n_entity_text[:-1] in ["JZD", "KSČ", "Ksč", "ksč", "Kčs", "ksč", "NF", "MNV", "ONV", "KNV"]:

|

| 94 |

+

return 1

|

| 95 |

+

|

| 96 |

+

if n_entity_type in ["Geographical names", "Personal names", "Institutions"]:

|

| 97 |

+

# shorten entities with non-dot char at the end

|

| 98 |

+

if n_entity_text[-1] != ".":

|

| 99 |

+

words = n_entity_text[:-1].split()

|

| 100 |

+

if all([word.isalnum() for word in words]):

|

| 101 |

+

return 1

|

| 102 |

+

|

| 103 |

+

return -1

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

def main():

|

| 107 |

+

args = get_args()

|

| 108 |

+

|

| 109 |

+

print("Script for adjusting the end of entity annotation span in text. "

|

| 110 |

+

"Script goes through page text files and their annotations json record. "

|

| 111 |

+

"End of entity is adjusted manually or automatically is possible and annotations are saved to the same file."

|

| 112 |

+

"Adjusting starts at last adjusted entity, controls: right arrow - next entity, left arrow - previous entity,"

|

| 113 |

+

"up arrow - +1 length, down arrow - -1 length, s - save"

|

| 114 |

+

"automatic save after 10 entities\n")

|

| 115 |

+

|

| 116 |

+

def on_key_release(key):

|

| 117 |

+

nonlocal annotations, n_entity_idx, page_idx, page_text, stop_edit, adjusted_n_entities

|

| 118 |

+

|

| 119 |

+

# next entity

|

| 120 |

+

if key == Key.right:

|

| 121 |

+

annotations[page_idx]["ner"][n_entity_idx]["adjusted"] = True

|

| 122 |

+

n_entity_idx += 1

|

| 123 |

+

adjusted_n_entities += 1

|

| 124 |

+

exit()

|

| 125 |

+

# previous adjusted entity

|

| 126 |

+

elif key == Key.left:

|

| 127 |

+

while n_entity_idx > 0:

|

| 128 |

+

n_entity_idx -= 1

|

| 129 |

+

if "adjusted" in annotations[page_idx]["ner"][n_entity_idx].keys():

|

| 130 |

+

annotations[page_idx]["ner"][n_entity_idx]["adjusted"] = False

|

| 131 |

+

adjusted_n_entities -= 1

|

| 132 |

+

break

|

| 133 |

+

exit()

|

| 134 |

+

# +1 length

|

| 135 |

+

elif key == Key.up:

|

| 136 |

+

if annotations[page_idx]["ner"][n_entity_idx]["end"] + 1 == len(page_text):

|

| 137 |

+

print("Entity span cannot be prolonged, end of page text is reached!")

|

| 138 |

+

else:

|

| 139 |

+

annotations[page_idx]["ner"][n_entity_idx]["end"] += 1

|

| 140 |

+

print("Entity length + 1, end: {}".format(annotations[page_idx]["ner"][n_entity_idx]["end"]))

|

| 141 |

+

# -1 length

|

| 142 |

+

elif key == Key.down:

|

| 143 |

+

if annotations[page_idx]["ner"][n_entity_idx]["end"] - 1 == 0:

|

| 144 |

+

print("Entity span cannot be shortened, start of page text is reached!")

|

| 145 |

+

else:

|

| 146 |

+

annotations[page_idx]["ner"][n_entity_idx]["end"] -= 1

|

| 147 |

+

print("Entity length - 1, end: {}".format(annotations[page_idx]["ner"][n_entity_idx]["end"]))

|

| 148 |

+

# save

|

| 149 |

+

elif key == Key.esc:

|

| 150 |

+

save(args.source_file, annotations)

|

| 151 |

+

print("Editing stopped!")

|

| 152 |

+

stop_edit = True

|

| 153 |

+

exit()

|

| 154 |

+

|

| 155 |

+

with open(args.source_file, encoding="utf-8") as f:

|

| 156 |

+

annotations = json.load(f)

|

| 157 |

+

|

| 158 |

+

page_idx = 0

|

| 159 |

+

n_entity_idx = 0

|

| 160 |

+

for i, page in enumerate(annotations):

|

| 161 |

+

if "adjusted" not in page.keys() or not page["adjusted"]:

|

| 162 |

+

page_idx = i

|

| 163 |

+

for j, n_entity in enumerate(page["ner"]):

|

| 164 |

+

if "adjusted" not in n_entity.keys():

|

| 165 |

+

n_entity_idx = j

|

| 166 |

+

break

|

| 167 |

+

break

|

| 168 |

+

|

| 169 |

+

adjusted_pages = 0

|

| 170 |

+

adjusted_n_entities = 1

|

| 171 |

+

stop_edit = False

|

| 172 |

+

while True:

|

| 173 |

+

page_text_path = annotations[page_idx]["text"].replace(

|

| 174 |

+

"http://localhost:8081", "../../../datasets/poner1.0/data")

|

| 175 |

+

with open(page_text_path, encoding="utf-8") as p_f:

|

| 176 |

+

page_text = p_f.read()

|

| 177 |

+

page_name = annotations[page_idx]["page_name"]

|

| 178 |

+

print(f"Page: {adjusted_pages}\n{page_name}\n{page_text}\n\nEntities without alphanum end:\n")

|

| 179 |

+

|

| 180 |

+

while True:

|

| 181 |

+

n_entity = annotations[page_idx]["ner"][n_entity_idx]

|

| 182 |

+

n_entity_text = page_text[n_entity["start"]:n_entity["end"]]

|

| 183 |

+

n_entity_type = n_entity["labels"][0]

|

| 184 |

+

if not n_entity_text[-1].isalnum() or ("adjusted" in n_entity.keys() and not n_entity["adjusted"]):

|

| 185 |

+

# try auto correct function, if auto correction is not possible, manual correction is applied

|

| 186 |

+

end_shift = auto_correct(n_entity_text, n_entity_type)

|

| 187 |

+

if end_shift == -1 or ("adjusted" in n_entity.keys() and not n_entity["adjusted"]):

|

| 188 |

+

context_start = 0 if n_entity["start"] - 100 < 0 else n_entity["start"] - 100

|

| 189 |

+

context_end = len(page_text) - 1 if n_entity["start"] + 100 >= len(page_text) \

|

| 190 |

+

else n_entity["start"] + 100

|

| 191 |

+

print(

|

| 192 |

+

f"\n{n_entity_text}\n------------------------------\n{n_entity_type}\n"

|

| 193 |

+

f"------------------------------\n{page_text[context_start:context_end]}\n")

|

| 194 |

+

with keyboard.Listener(on_release=on_key_release) as listener:

|

| 195 |

+

listener.join()

|

| 196 |

+

if stop_edit:

|

| 197 |

+

return

|

| 198 |

+

else:

|

| 199 |

+

# auto correction application

|

| 200 |

+

annotations[page_idx]["ner"][n_entity_idx]["end"] -= end_shift

|

| 201 |

+

annotations[page_idx]["ner"][n_entity_idx]["auto_adjusted"] = True

|

| 202 |

+

n_entity_idx += 1

|

| 203 |

+

adjusted_n_entities += 1

|

| 204 |

+

|

| 205 |

+

# auto save

|

| 206 |

+

if adjusted_n_entities % 10 == 0:

|

| 207 |

+

save(args.source_file, annotations)

|

| 208 |

+

else:

|

| 209 |

+

n_entity_idx += 1

|

| 210 |

+

if n_entity_idx == len(annotations[page_idx]["ner"]):

|

| 211 |

+

n_entity_idx = 0

|

| 212 |

+

break

|

| 213 |

+

|

| 214 |

+

adjusted_pages += 1

|

| 215 |

+

|

| 216 |

+

annotations[page_idx]["adjusted"] = True

|

| 217 |

+

page_idx += 1

|

| 218 |

+

if page_idx == len(annotations):

|

| 219 |

+

save(args.source_file, annotations)

|

| 220 |

+

break

|

| 221 |

+

|

| 222 |

+

print("All pages were adjusted!")

|

| 223 |

+

|

| 224 |

+

|

| 225 |

+

if __name__ == '__main__':

|

| 226 |

+

main()

|

scripts/create_poner_dataset_conll.py

ADDED

|

@@ -0,0 +1,112 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Author: Roman Janík

|

| 2 |

+

# Script for creating a CoNLL format of my dataset from text files and Label Studio annotations.

|

| 3 |

+

#

|

| 4 |

+

|

| 5 |

+

import argparse

|

| 6 |

+

import json

|

| 7 |

+

import os

|

| 8 |

+

|

| 9 |

+

from nltk.tokenize import word_tokenize, sent_tokenize

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

def get_args():

|

| 13 |

+

parser = argparse.ArgumentParser(description="Script for creating a CoNLL format of my dataset from text files and Label Studio annotations.")

|

| 14 |

+

parser.add_argument("-s", "--source_file", required=True, help="Path to source Label Studio json annotations file.")

|

| 15 |

+

parser.add_argument("-o", "--output_dir", required=True, help="Output dir for CoNLL dataset splits.")

|

| 16 |

+

args = parser.parse_args()

|

| 17 |

+

return args

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

def fix_quotes(sentence):

|

| 21 |

+

return map(lambda word: word.replace('``', '"').replace("''", '"'), sentence)

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

def process_text_annotations(text, annotations):

|

| 25 |

+

entity_types_map = {

|

| 26 |

+

"Personal names": "p",

|

| 27 |

+

"Institutions": "i",

|

| 28 |

+

"Geographical names": "g",

|

| 29 |

+

"Time expressions": "t",

|

| 30 |

+

"Artifact names/Objects": "o"

|

| 31 |

+

}

|

| 32 |

+

|

| 33 |

+

sentences = sent_tokenize(text, language="czech")

|

| 34 |

+

sentences_t = [fix_quotes(word_tokenize(x, language="czech")) for x in sentences]

|

| 35 |

+

|

| 36 |

+

sentences_idx = []

|

| 37 |

+

start = 0

|

| 38 |

+

for i, c in enumerate(text):

|

| 39 |

+

if not c.isspace():

|

| 40 |

+

start = i

|

| 41 |

+

break

|

| 42 |

+

|

| 43 |

+

for sentence in sentences_t:

|

| 44 |

+

sentence_idx = []

|

| 45 |

+

for word in sentence:

|

| 46 |

+

end = start + len(word)

|

| 47 |

+

sentence_idx.append({"word": word, "start": start, "end": end, "entity_type": "O"})

|

| 48 |

+

for i, _ in enumerate(text):

|

| 49 |

+

if end + i < len(text) and not text[end + i].isspace():

|

| 50 |

+

start = end + i

|

| 51 |

+

break

|

| 52 |

+

sentences_idx.append(sentence_idx)

|

| 53 |

+

|

| 54 |

+

for n_entity in annotations:

|

| 55 |

+

begin = True

|

| 56 |

+

done = False

|

| 57 |

+

for sentence_idx in sentences_idx:

|

| 58 |

+

for word_idx in sentence_idx:

|

| 59 |

+

if word_idx["start"] >= n_entity["start"]\

|

| 60 |

+

and (word_idx["end"] <= n_entity["end"]

|

| 61 |

+

or (not text[word_idx["end"]-1].isalnum()) and len(word_idx["word"]) > 1) and begin:

|

| 62 |

+

word_idx["entity_type"] = "B-" + entity_types_map[n_entity["labels"][0]]

|

| 63 |

+

begin = False

|

| 64 |

+

if word_idx["end"] >= n_entity["end"]:

|

| 65 |

+

done = True

|

| 66 |

+

break

|

| 67 |

+

elif word_idx["start"] > n_entity["start"] and (word_idx["end"] <= n_entity["end"]

|

| 68 |

+

or (not text[word_idx["end"]-1].isalnum() and text[word_idx["start"]].isalnum())):

|

| 69 |

+

word_idx["entity_type"] = "I-" + entity_types_map[n_entity["labels"][0]]

|

| 70 |

+

if word_idx["end"] >= n_entity["end"]:

|

| 71 |

+

done = True

|

| 72 |

+

break

|

| 73 |

+

elif word_idx["end"] > n_entity["end"]:

|

| 74 |

+

done = True

|

| 75 |

+

break

|

| 76 |

+

if done:

|

| 77 |

+

break

|

| 78 |

+

|

| 79 |

+

conll_sentences = []

|

| 80 |

+

for sentence_idx in sentences_idx:

|

| 81 |

+

conll_sentence = map(lambda w: w["word"] + " " + w["entity_type"], sentence_idx)

|

| 82 |

+

conll_sentences.append("\n".join(conll_sentence))

|

| 83 |

+

conll_sentences = "\n\n".join(conll_sentences)

|

| 84 |

+

|

| 85 |

+

return conll_sentences

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

def main():

|

| 89 |

+

args = get_args()

|

| 90 |

+

|

| 91 |

+

print("Script for creating a CoNLL format of my dataset from text files and Label Studio annotations."

|

| 92 |

+

"Script goes through page text files and their annotations json record. "

|

| 93 |

+

"Output CoNLL dataset file is saved to output directory.")

|

| 94 |

+

|

| 95 |

+

with open(args.source_file, encoding="utf-8") as f:

|

| 96 |

+

annotations = json.load(f)

|

| 97 |

+

|

| 98 |

+

print("Starting documents processing...")

|

| 99 |

+

|

| 100 |

+

with open(os.path.join(args.output_dir, "poner.conll"), "w", encoding="utf-8") as f:

|

| 101 |

+

for page in annotations:

|

| 102 |

+

page_text_path = page["text"].replace("http://localhost:8081", "../../../datasets/poner1.0/data")

|

| 103 |

+

with open(page_text_path, encoding="utf-8") as p_f:

|

| 104 |

+

page_text = p_f.read()

|

| 105 |

+

processed_page = process_text_annotations(page_text, page["ner"])

|

| 106 |

+

f.write(processed_page + "\n\n")

|

| 107 |

+

|

| 108 |

+

print("Annotations are processed.")

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

if __name__ == '__main__':

|

| 112 |

+

main()

|

scripts/poner-1_0.py

ADDED

|

@@ -0,0 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|