Commit ccbf277 · 1 Parent(s): 03d5016

Update parquet files

- README.md +0 -706

- dataset_infos.json +0 -103

- example.png +0 -0

- output_14_0.png +0 -0

- output_16_0.png +0 -0

- output_17_0.png +0 -0

- output_18_0.png +0 -0

- output_9_1.png +0 -0

- data/train-00000-of-00001.parquet → rubrix--sst2_with_predictions/train/0000.parquet +0 -0

- data/validation-00000-of-00001.parquet → rubrix--sst2_with_predictions/validation/0000.parquet +0 -0

- sst2_example.ipynb +0 -0

README.md

DELETED

|

@@ -1,706 +0,0 @@

|

|

| 1 |

-

# Comparing model predictions and ground truth labels with Rubrix and Hugging Face

|

| 2 |

-

|

| 3 |

-

## Build dataset

|

| 4 |

-

|

| 5 |

-

You can skip this step if you run:

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

```python

|

| 10 |

-

from datasets import load_dataset

|

| 11 |

-

import rubrix as rb

|

| 12 |

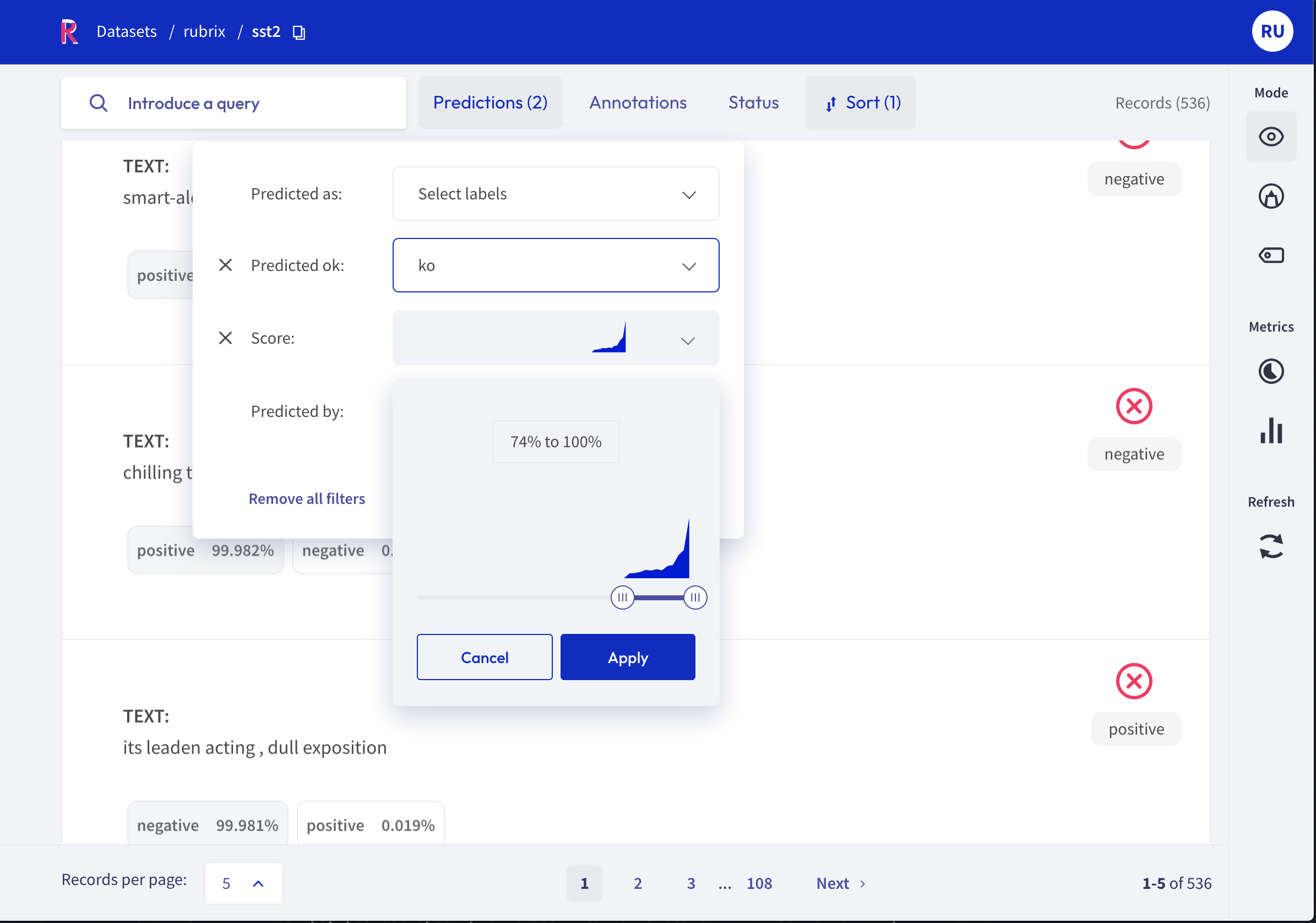

-

|

| 13 |

-

ds = rb.DatasetForTextClassification.from_datasets(load_dataset("rubrix/sst2_with_predictions", split="train"))

|

| 14 |

-

```

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

Otherwise, the following cell will run the pipeline over the training set and store labels and predictions.

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

```python

|

| 21 |

-

from datasets import load_dataset

|

| 22 |

-

from transformers import pipeline, AutoModelForSequenceClassification

|

| 23 |

-

|

| 24 |

-

import rubrix as rb

|

| 25 |

-

|

| 26 |

-

name = "distilbert-base-uncased-finetuned-sst-2-english"

|

| 27 |

-

|

| 28 |

-

# Need to define id2label because surprisingly the pipeline has uppercase label names

|

| 29 |

-

model = AutoModelForSequenceClassification.from_pretrained(name, id2label={0: 'negative', 1: 'positive'})

|

| 30 |

-

nlp = pipeline("sentiment-analysis", model=model, tokenizer=name, return_all_scores=True)

|

| 31 |

-

|

| 32 |

-

dataset = load_dataset("glue", "sst2", split="train")

|

| 33 |

-

|

| 34 |

-

# batch predict

|

| 35 |

-

def predict(example):

|

| 36 |

-

    return {"prediction": nlp(example["sentence"])}

|

| 37 |

-

|

| 38 |

-

# add predictions to the dataset

|

| 39 |

-

dataset = dataset.map(predict, batched=True).rename_column("sentence", "text")

|

| 40 |

-

|

| 41 |

-

# build rubrix dataset from hf dataset

|

| 42 |

-

ds = rb.DatasetForTextClassification.from_datasets(dataset, annotation="label")

|

| 43 |

-

```
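As a rough illustration of what ends up in the `prediction` column: with `return_all_scores=True`, the pipeline is expected to return, for each input text, one `{"label": ..., "score": ...}` dict per class. The sketch below does not call the model. It uses hard-coded, made-up scores and a hypothetical helper, `to_label_score_pairs`, to show how such output could be turned into sorted `(label, score)` pairs.

```python
# Hypothetical pipeline output for two sentences (scores are illustrative only).
raw_output = [
    [{"label": "negative", "score": 0.94}, {"label": "positive", "score": 0.06}],
    [{"label": "negative", "score": 0.25}, {"label": "positive", "score": 0.75}],
]


def to_label_score_pairs(scores):
    """Sort one text's class scores from most to least likely."""
    pairs = [(item["label"], item["score"]) for item in scores]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


predictions = [to_label_score_pairs(scores) for scores in raw_output]
# The first pair of each prediction is the label the model considers most likely.
top_labels = [pairs[0][0] for pairs in predictions]
print(top_labels)
```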

|

| 44 |

-

|

| 45 |

-

|

| 46 |

-

```python

|

| 47 |

-

# Install Rubrix and start exploring and sharing URLs with interesting subsets, etc.

|

| 48 |

-

rb.log(ds, "sst2")

|

| 49 |

-

```

|

| 50 |

-

|

| 51 |

-

|

| 52 |

-

```python

|

| 53 |

-

ds.to_datasets().push_to_hub("rubrix/sst2_with_predictions")

|

| 54 |

-

```

|

| 55 |

-

|

| 56 |

-

|

| 57 |

-

Pushing dataset shards to the dataset hub: 0%| | 0/1 [00:00<?, ?it/s]

|

| 58 |

-

|

| 59 |

-

|

| 60 |

-

## Analyze mispredictions and ambiguous labels

|

| 61 |

-

|

| 62 |

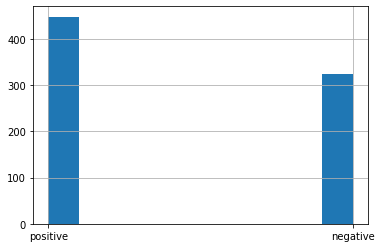

-

### With the UI

|

| 63 |

-

|

| 64 |

-

With Rubrix's UI you can:

|

| 65 |

-

|

| 66 |

-

- Combine filters and full-text/DSL queries to quickly find important samples

|

| 67 |

-

- All URLs contain the state, so you can share specific dataset regions with collaborators and annotators to work on.

|

| 68 |

-

- Sort examples by score, as well as custom metadata fields.

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

### Programmatically

|

| 76 |

-

|

| 77 |

-

Let's find all the wrong predictions from Python. This is useful for bulk operations (relabelling, discarding, etc.).

|

| 78 |

-

|

| 79 |

-

|

| 80 |

-

```python

|

| 81 |

-

import pandas as pd

|

| 82 |

-

|

| 83 |

-

# Get dataset slice with wrong predictions

|

| 84 |

-

df = rb.load("sst2", query="predicted:ko").to_pandas()

|

| 85 |

-

|

| 86 |

-

# display first 20 examples

|

| 87 |

-

with pd.option_context('display.max_colwidth', None):

|

| 88 |

-

    display(df[["text", "prediction", "annotation"]].head(20))

|

| 89 |

-

```

|

| 90 |

-

|

| 91 |

-

|

| 92 |

-

<div>

|

| 93 |

-

<style scoped>

|

| 94 |

-

.dataframe tbody tr th:only-of-type {

|

| 95 |

-

vertical-align: middle;

|

| 96 |

-

}

|

| 97 |

-

|

| 98 |

-

.dataframe tbody tr th {

|

| 99 |

-

vertical-align: top;

|

| 100 |

-

}

|

| 101 |

-

|

| 102 |

-

.dataframe thead th {

|

| 103 |

-

text-align: right;

|

| 104 |

-

}

|

| 105 |

-

</style>

|

| 106 |

-

<table border="1" class="dataframe">

|

| 107 |

-

<thead>

|

| 108 |

-

<tr style="text-align: right;">

|

| 109 |

-

<th></th>

|

| 110 |

-

<th>text</th>

|

| 111 |

-

<th>prediction</th>

|

| 112 |

-

<th>annotation</th>

|

| 113 |

-

</tr>

|

| 114 |

-

</thead>

|

| 115 |

-

<tbody>

|

| 116 |

-

<tr>

|

| 117 |

-

<th>0</th>

|

| 118 |

-

<td>this particular , anciently demanding métier</td>

|

| 119 |

-

<td>[(negative, 0.9386059045791626), (positive, 0.06139408051967621)]</td>

|

| 120 |

-

<td>positive</td>

|

| 121 |

-

</tr>

|

| 122 |

-

<tr>

|

| 123 |

-

<th>1</th>

|

| 124 |

-

<td>under our skin</td>

|

| 125 |

-

<td>[(positive, 0.7508484721183777), (negative, 0.24915160238742828)]</td>

|

| 126 |

-

<td>negative</td>

|

| 127 |

-

</tr>

|

| 128 |

-

<tr>

|

| 129 |

-

<th>2</th>

|

| 130 |

-

<td>evokes a palpable sense of disconnection , made all the more poignant by the incessant use of cell phones .</td>

|

| 131 |

-

<td>[(negative, 0.6634528636932373), (positive, 0.3365470767021179)]</td>

|

| 132 |

-

<td>positive</td>

|

| 133 |

-

</tr>

|

| 134 |

-

<tr>

|

| 135 |

-

<th>3</th>

|

| 136 |

-

<td>plays like a living-room war of the worlds , gaining most of its unsettling force from the suggested and the unknown .</td>

|

| 137 |

-

<td>[(positive, 0.9968075752258301), (negative, 0.003192420583218336)]</td>

|

| 138 |

-

<td>negative</td>

|

| 139 |

-

</tr>

|

| 140 |

-

<tr>

|

| 141 |

-

<th>4</th>

|

| 142 |

-

<td>into a pulpy concept that , in many other hands would be completely forgettable</td>

|

| 143 |

-

<td>[(positive, 0.6178210377693176), (negative, 0.3821789622306824)]</td>

|

| 144 |

-

<td>negative</td>

|

| 145 |

-

</tr>

|

| 146 |

-

<tr>

|

| 147 |

-

<th>5</th>

|

| 148 |

-

<td>transcends ethnic lines .</td>

|

| 149 |

-

<td>[(positive, 0.9758220314979553), (negative, 0.024177948012948036)]</td>

|

| 150 |

-

<td>negative</td>

|

| 151 |

-

</tr>

|

| 152 |

-

<tr>

|

| 153 |

-

<th>6</th>

|

| 154 |

-

<td>is barely</td>

|

| 155 |

-

<td>[(negative, 0.9922297596931458), (positive, 0.00777028314769268)]</td>

|

| 156 |

-

<td>positive</td>

|

| 157 |

-

</tr>

|

| 158 |

-

<tr>

|

| 159 |

-

<th>7</th>

|

| 160 |

-

<td>a pulpy concept that , in many other hands would be completely forgettable</td>

|

| 161 |

-

<td>[(negative, 0.9738760590553284), (positive, 0.026123959571123123)]</td>

|

| 162 |

-

<td>positive</td>

|

| 163 |

-

</tr>

|

| 164 |

-

<tr>

|

| 165 |

-

<th>8</th>

|

| 166 |

-

<td>of hollywood heart-string plucking</td>

|

| 167 |

-

<td>[(positive, 0.9889695644378662), (negative, 0.011030420660972595)]</td>

|

| 168 |

-

<td>negative</td>

|

| 169 |

-

</tr>

|

| 170 |

-

<tr>

|

| 171 |

-

<th>9</th>

|

| 172 |

-

<td>a minimalist beauty and the beast</td>

|

| 173 |

-

<td>[(positive, 0.9100378751754761), (negative, 0.08996208757162094)]</td>

|

| 174 |

-

<td>negative</td>

|

| 175 |

-

</tr>

|

| 176 |

-

<tr>

|

| 177 |

-

<th>10</th>

|

| 178 |

-

<td>the intimate , unguarded moments of folks who live in unusual homes --</td>

|

| 179 |

-

<td>[(positive, 0.9967381358146667), (negative, 0.0032618637196719646)]</td>

|

| 180 |

-

<td>negative</td>

|

| 181 |

-

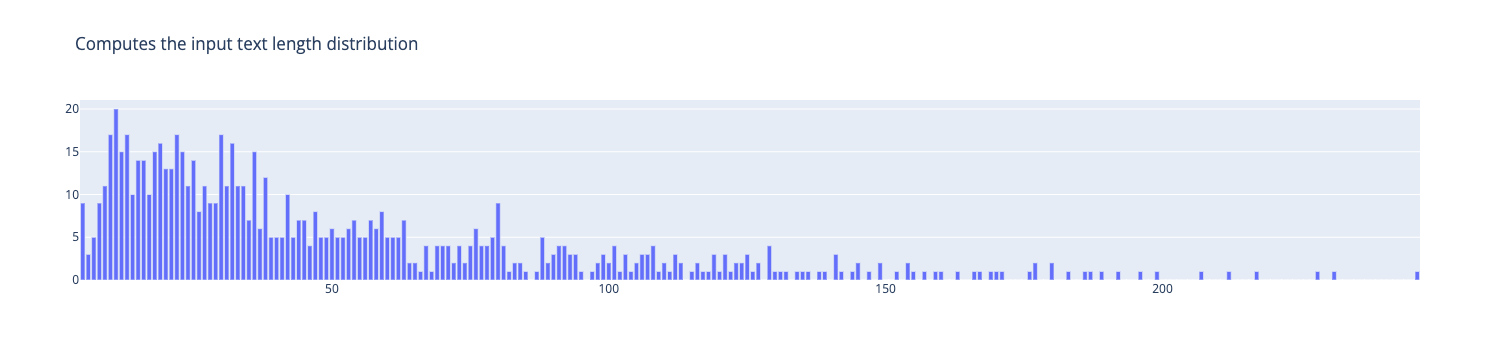

</tr>

|

| 182 |

-

<tr>

|

| 183 |

-

<th>11</th>

|

| 184 |

-

<td>steals the show</td>

|

| 185 |

-

<td>[(negative, 0.8031412363052368), (positive, 0.1968587338924408)]</td>

|

| 186 |

-

<td>positive</td>

|

| 187 |

-

</tr>

|

| 188 |

-

<tr>

|

| 189 |

-

<th>12</th>

|

| 190 |

-

<td>enough</td>

|

| 191 |

-

<td>[(positive, 0.7941301465034485), (negative, 0.2058698982000351)]</td>

|

| 192 |

-

<td>negative</td>

|

| 193 |

-

</tr>

|

| 194 |

-

<tr>

|

| 195 |

-

<th>13</th>

|

| 196 |

-

<td>accept it as life and</td>

|

| 197 |

-

<td>[(positive, 0.9987508058547974), (negative, 0.0012492131209000945)]</td>

|

| 198 |

-

<td>negative</td>

|

| 199 |

-

</tr>

|

| 200 |

-

<tr>

|

| 201 |

-

<th>14</th>

|

| 202 |

-

<td>this is the kind of movie that you only need to watch for about thirty seconds before you say to yourself , ` ah , yes ,</td>

|

| 203 |

-

<td>[(negative, 0.7889454960823059), (positive, 0.21105451881885529)]</td>

|

| 204 |

-

<td>positive</td>

|

| 205 |

-

</tr>

|

| 206 |

-

<tr>

|

| 207 |

-

<th>15</th>

|

| 208 |

-

<td>plunges you into a reality that is , more often then not , difficult and sad ,</td>

|

| 209 |

-

<td>[(positive, 0.967541515827179), (negative, 0.03245845437049866)]</td>

|

| 210 |

-

<td>negative</td>

|

| 211 |

-

</tr>

|

| 212 |

-

<tr>

|

| 213 |

-

<th>16</th>

|

| 214 |

-

<td>overcomes the script 's flaws and envelops the audience in his character 's anguish , anger and frustration .</td>

|

| 215 |

-

<td>[(positive, 0.9953157901763916), (negative, 0.004684178624302149)]</td>

|

| 216 |

-

<td>negative</td>

|

| 217 |

-

</tr>

|

| 218 |

-

<tr>

|

| 219 |

-

<th>17</th>

|

| 220 |

-

<td>troubled and determined homicide cop</td>

|

| 221 |

-

<td>[(negative, 0.6632784008979797), (positive, 0.33672159910202026)]</td>

|

| 222 |

-

<td>positive</td>

|

| 223 |

-

</tr>

|

| 224 |

-

<tr>

|

| 225 |

-

<th>18</th>

|

| 226 |

-

<td>human nature is a goofball movie , in the way that malkovich was , but it tries too hard</td>

|

| 227 |

-

<td>[(positive, 0.5959018468856812), (negative, 0.40409812331199646)]</td>

|

| 228 |

-

<td>negative</td>

|

| 229 |

-

</tr>

|

| 230 |

-

<tr>

|

| 231 |

-

<th>19</th>

|

| 232 |

-

<td>to watch too many barney videos</td>

|

| 233 |

-

<td>[(negative, 0.9909896850585938), (positive, 0.00901023019105196)]</td>

|

| 234 |

-

<td>positive</td>

|

| 235 |

-

</tr>

|

| 236 |

-

</tbody>

|

| 237 |

-

</table>

|

| 238 |

-

</div>
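The `predicted:ko` query appears to select records whose predicted label disagrees with the annotation. A minimal pure-Python sketch of that idea follows, assuming the predicted label is the highest-scoring one. The helper `is_wrong` is hypothetical, the scores are rounded, and the record "a charming film" is invented for contrast.

```python
# Hypothetical records mimicking the columns of the table above.
records = [
    {"text": "under our skin",
     "prediction": [("positive", 0.75), ("negative", 0.25)],
     "annotation": "negative"},
    {"text": "a charming film",  # invented example of a correct prediction
     "prediction": [("positive", 0.98), ("negative", 0.02)],
     "annotation": "positive"},
    {"text": "is barely",
     "prediction": [("negative", 0.99), ("positive", 0.01)],
     "annotation": "positive"},
]


def is_wrong(record):
    """True when the highest-scoring predicted label differs from the annotation."""
    top_label, _ = max(record["prediction"], key=lambda pair: pair[1])
    return top_label != record["annotation"]


wrong = [record["text"] for record in records if is_wrong(record)]
print(wrong)
```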

|

| 239 |

-

|

| 240 |

-

|

| 241 |

-

|

| 242 |

-

```python

|

| 243 |

-

df.annotation.hist()

|

| 244 |

-

```

|

| 245 |

-

|

| 246 |

-

|

| 247 |

-

|

| 248 |

-

|

| 249 |

-

<AxesSubplot:>
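The histogram shows how the gold labels are distributed among the misclassified records. The same count can be sketched without plotting, here with `collections.Counter` over the annotations of the first five rows of the table above (a tiny sample chosen for illustration, not the full result):

```python
from collections import Counter

# Annotations of rows 0-4 in the table of wrong predictions above.
annotations = ["positive", "negative", "positive", "negative", "negative"]

counts = Counter(annotations)

# Print a small text histogram, most frequent label first.
for label, n in counts.most_common():
    print(f"{label:<9} {'#' * n}")
```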

|

| 250 |

-

|

| 251 |

-

|

| 252 |

-

|

| 253 |

-

|

| 254 |

-

|

| 255 |

-

|

| 256 |

-

|

| 257 |

-

|

| 258 |

-

|

| 259 |

-

|

| 260 |

-

```python

|

| 261 |

-

# Get wrong predictions whose annotation is negative

|

| 262 |

-

df = rb.load("sst2", query="predicted:ko and annotated_as:negative").to_pandas()

|

| 263 |

-

|

| 264 |

-

# display first 20 examples

|

| 265 |

-

with pd.option_context('display.max_colwidth', None):

|

| 266 |

-

    display(df[["text", "prediction", "annotation"]].head(20))

|

| 267 |

-

```

|

| 268 |

-

|

| 269 |

-

|

| 270 |

-

<div>

|

| 271 |

-

<style scoped>

|

| 272 |

-

.dataframe tbody tr th:only-of-type {

|

| 273 |

-

vertical-align: middle;

|

| 274 |

-

}

|

| 275 |

-

|

| 276 |

-

.dataframe tbody tr th {

|

| 277 |

-

vertical-align: top;

|

| 278 |

-

}

|

| 279 |

-

|

| 280 |

-

.dataframe thead th {

|

| 281 |

-

text-align: right;

|

| 282 |

-

}

|

| 283 |

-

</style>

|

| 284 |

-

<table border="1" class="dataframe">

|

| 285 |

-

<thead>

|

| 286 |

-

<tr style="text-align: right;">

|

| 287 |

-

<th></th>

|

| 288 |

-

<th>text</th>

|

| 289 |

-

<th>prediction</th>

|

| 290 |

-

<th>annotation</th>

|

| 291 |

-

</tr>

|

| 292 |

-

</thead>

|

| 293 |

-

<tbody>

|

| 294 |

-

<tr>

|

| 295 |

-

<th>0</th>

|

| 296 |

-

<td>plays like a living-room war of the worlds , gaining most of its unsettling force from the suggested and the unknown .</td>

|

| 297 |

-

<td>[(positive, 0.9968075752258301), (negative, 0.003192420583218336)]</td>

|

| 298 |

-

<td>negative</td>

|

| 299 |

-

</tr>

|

| 300 |

-

<tr>

|

| 301 |

-

<th>1</th>

|

| 302 |

-

<td>a minimalist beauty and the beast</td>

|

| 303 |

-

<td>[(positive, 0.9100378751754761), (negative, 0.08996208757162094)]</td>

|

| 304 |

-

<td>negative</td>

|

| 305 |

-

</tr>

|

| 306 |

-

<tr>

|

| 307 |

-

<th>2</th>

|

| 308 |

-

<td>accept it as life and</td>

|

| 309 |

-

<td>[(positive, 0.9987508058547974), (negative, 0.0012492131209000945)]</td>

|

| 310 |

-

<td>negative</td>

|

| 311 |

-

</tr>

|

| 312 |

-

<tr>

|

| 313 |

-

<th>3</th>

|

| 314 |

-

<td>plunges you into a reality that is , more often then not , difficult and sad ,</td>

|

| 315 |

-

<td>[(positive, 0.967541515827179), (negative, 0.03245845437049866)]</td>

|

| 316 |

-

<td>negative</td>

|

| 317 |

-

</tr>

|

| 318 |

-

<tr>

|

| 319 |

-

<th>4</th>

|

| 320 |

-

<td>overcomes the script 's flaws and envelops the audience in his character 's anguish , anger and frustration .</td>

|

| 321 |

-

<td>[(positive, 0.9953157901763916), (negative, 0.004684178624302149)]</td>

|

| 322 |

-

<td>negative</td>

|

| 323 |

-

</tr>

|

| 324 |

-

<tr>

|

| 325 |

-

<th>5</th>

|

| 326 |

-

<td>and social commentary</td>

|

| 327 |

-

<td>[(positive, 0.7863275408744812), (negative, 0.2136724889278412)]</td>

|

| 328 |

-

<td>negative</td>

|

| 329 |

-

</tr>

|

| 330 |

-

<tr>

|

| 331 |

-

<th>6</th>

|

| 332 |

-

<td>we do n't get williams ' usual tear and a smile , just sneers and bile , and the spectacle is nothing short of refreshing .</td>

|

| 333 |

-

<td>[(positive, 0.9982783794403076), (negative, 0.0017216014675796032)]</td>

|

| 334 |

-

<td>negative</td>

|

| 335 |

-

</tr>

|

| 336 |

-

<tr>

|

| 337 |

-

<th>7</th>

|

| 338 |

-

<td>before pulling the plug on the conspirators and averting an american-russian armageddon</td>

|

| 339 |

-

<td>[(positive, 0.6992855072021484), (negative, 0.30071452260017395)]</td>

|

| 340 |

-

<td>negative</td>

|

| 341 |

-

</tr>

|

| 342 |

-

<tr>

|

| 343 |

-

<th>8</th>

|

| 344 |

-

<td>in tight pants and big tits</td>

|

| 345 |

-

<td>[(positive, 0.7850217819213867), (negative, 0.2149781733751297)]</td>

|

| 346 |

-

<td>negative</td>

|

| 347 |

-

</tr>

|

| 348 |

-

<tr>

|

| 349 |

-

<th>9</th>

|

| 350 |

-

<td>that it certainly does n't feel like a film that strays past the two and a half mark</td>

|

| 351 |

-

<td>[(positive, 0.6591460108757019), (negative, 0.3408539891242981)]</td>

|

| 352 |

-

<td>negative</td>

|

| 353 |

-

</tr>

|

| 354 |

-

<tr>

|

| 355 |

-

<th>10</th>

|

| 356 |

-

<td>actress-producer and writer</td>

|

| 357 |

-

<td>[(positive, 0.8167378306388855), (negative, 0.1832621842622757)]</td>

|

| 358 |

-

<td>negative</td>

|

| 359 |

-

</tr>

|

| 360 |

-

<tr>

|

| 361 |

-

<th>11</th>

|

| 362 |

-

<td>gives devastating testimony to both people 's capacity for evil and their heroic capacity for good .</td>

|

| 363 |

-

<td>[(positive, 0.8960123062133789), (negative, 0.10398765653371811)]</td>

|

| 364 |

-

<td>negative</td>

|

| 365 |

-

</tr>

|

| 366 |

-

<tr>

|

| 367 |

-

<th>12</th>

|

| 368 |

-

<td>deep into the girls ' confusion and pain as they struggle tragically to comprehend the chasm of knowledge that 's opened between them</td>

|

| 369 |

-

<td>[(positive, 0.9729612469673157), (negative, 0.027038726955652237)]</td>

|

| 370 |

-

<td>negative</td>

|

| 371 |

-

</tr>

|

| 372 |

-

<tr>

|

| 373 |

-

<th>13</th>

|

| 374 |

-

<td>a younger lad in zen and the art of getting laid in this prickly indie comedy of manners and misanthropy</td>

|

| 375 |

-

<td>[(positive, 0.9875985980033875), (negative, 0.012401451356709003)]</td>

|

| 376 |

-

<td>negative</td>

|

| 377 |

-

</tr>

|

| 378 |

-

<tr>

|

| 379 |

-

<th>14</th>

|

| 380 |

-

<td>get on a board and , uh , shred ,</td>

|

| 381 |

-

<td>[(positive, 0.5352609753608704), (negative, 0.46473899483680725)]</td>

|

| 382 |

-

<td>negative</td>

|

| 383 |

-

</tr>

|

| 384 |

-

<tr>

|

| 385 |

-

<th>15</th>

|

| 386 |

-

<td>so preachy-keen and</td>

|

| 387 |

-

<td>[(positive, 0.9644021391868591), (negative, 0.035597823560237885)]</td>

|

| 388 |

-

<td>negative</td>

|

| 389 |

-

</tr>

|

| 390 |

-

<tr>

|

| 391 |

-

<th>16</th>

|

| 392 |

-

<td>there 's an admirable rigor to jimmy 's relentless anger , and to the script 's refusal of a happy ending ,</td>

|

| 393 |

-

<td>[(positive, 0.9928517937660217), (negative, 0.007148175034672022)]</td>

|

| 394 |

-

<td>negative</td>

|

| 395 |

-

</tr>

|

| 396 |

-

<tr>

|

| 397 |

-

<th>17</th>

|

| 398 |

-

<td>` christian bale 's quinn ( is ) a leather clad grunge-pirate with a hairdo like gandalf in a wind-tunnel and a simply astounding cor-blimey-luv-a-duck cockney accent . '</td>

|

| 399 |

-

<td>[(positive, 0.9713286757469177), (negative, 0.028671346604824066)]</td>

|

| 400 |

-

<td>negative</td>

|

| 401 |

-

</tr>

|

| 402 |

-

<tr>

|

| 403 |

-

<th>18</th>

|

| 404 |

-

<td>passion , grief and fear</td>

|

| 405 |

-

<td>[(positive, 0.9849751591682434), (negative, 0.015024829655885696)]</td>

|

| 406 |

-

<td>negative</td>

|

| 407 |

-

</tr>

|

| 408 |

-

<tr>

|

| 409 |

-

<th>19</th>

|

| 410 |

-

<td>to keep the extremes of screwball farce and blood-curdling family intensity on one continuum</td>

|

| 411 |

-

<td>[(positive, 0.8838250637054443), (negative, 0.11617499589920044)]</td>

|

| 412 |

-

<td>negative</td>

|

| 413 |

-

</tr>

|

| 414 |

-

</tbody>

|

| 415 |

-

</table>

|

| 416 |

-

</div>

|

| 417 |

-

|

| 418 |

-

|

| 419 |

-

|

| 420 |

-

```python

|

| 421 |

-

# Get wrong predictions made with a score above 0.99

|

| 422 |

-

df = rb.load("sst2", query="predicted:ko and score:{0.99 TO *}").to_pandas()

|

| 423 |

-

|

| 424 |

-

# display first 20 examples

|

| 425 |

-

with pd.option_context('display.max_colwidth', None):

|

| 426 |

-

    display(df[["text", "prediction", "annotation"]].head(20))

|

| 427 |

-

```

|

| 428 |

-

|

| 429 |

-

|

| 430 |

-

<div>

|

| 431 |

-

<style scoped>

|

| 432 |

-

.dataframe tbody tr th:only-of-type {

|

| 433 |

-

vertical-align: middle;

|

| 434 |

-

}

|

| 435 |

-

|

| 436 |

-

.dataframe tbody tr th {

|

| 437 |

-

vertical-align: top;

|

| 438 |

-

}

|

| 439 |

-

|

| 440 |

-

.dataframe thead th {

|

| 441 |

-

text-align: right;

|

| 442 |

-

}

|

| 443 |

-

</style>

|

| 444 |

-

<table border="1" class="dataframe">

|

| 445 |

-

<thead>

|

| 446 |

-

<tr style="text-align: right;">

|

| 447 |

-

<th></th>

|

| 448 |

-

<th>text</th>

|

| 449 |

-

<th>prediction</th>

|

| 450 |

-

<th>annotation</th>

|

| 451 |

-

</tr>

|

| 452 |

-

</thead>

|

| 453 |

-

<tbody>

|

| 454 |

-

<tr>

|

| 455 |

-

<th>0</th>

|

| 456 |

-

<td>plays like a living-room war of the worlds , gaining most of its unsettling force from the suggested and the unknown .</td>

|

| 457 |

-

<td>[(positive, 0.9968075752258301), (negative, 0.003192420583218336)]</td>

|

| 458 |

-

<td>negative</td>

|

| 459 |

-

</tr>

|

| 460 |

-

<tr>

|

| 461 |

-

<th>1</th>

|

| 462 |

-

<td>accept it as life and</td>

|

| 463 |

-

<td>[(positive, 0.9987508058547974), (negative, 0.0012492131209000945)]</td>

|

| 464 |

-

<td>negative</td>

|

| 465 |

-

</tr>

|

| 466 |

-

<tr>

|

| 467 |

-

<th>2</th>

|

| 468 |

-

<td>overcomes the script 's flaws and envelops the audience in his character 's anguish , anger and frustration .</td>

|

| 469 |

-

<td>[(positive, 0.9953157901763916), (negative, 0.004684178624302149)]</td>

|

| 470 |

-

<td>negative</td>

|

| 471 |

-

</tr>

|

| 472 |

-

<tr>

|

| 473 |

-

<th>3</th>

|

| 474 |

-

<td>will no doubt rally to its cause , trotting out threadbare standbys like ` masterpiece ' and ` triumph ' and all that malarkey ,</td>

|

| 475 |

-

<td>[(negative, 0.9936562180519104), (positive, 0.006343740504235029)]</td>

|

| 476 |

-

<td>positive</td>

|

| 477 |

-

</tr>

|

| 478 |

-

<tr>

|

| 479 |

-

<th>4</th>

|

| 480 |

-

<td>we do n't get williams ' usual tear and a smile , just sneers and bile , and the spectacle is nothing short of refreshing .</td>

|

| 481 |

-

<td>[(positive, 0.9982783794403076), (negative, 0.0017216014675796032)]</td>

|

| 482 |

-

<td>negative</td>

|

| 483 |

-

</tr>

|

| 484 |

-

<tr>

|

| 485 |

-

<th>5</th>

|

| 486 |

-

<td>somehow manages to bring together kevin pollak , former wrestler chyna and dolly parton</td>

|

| 487 |

-

<td>[(negative, 0.9979034662246704), (positive, 0.002096540294587612)]</td>

|

| 488 |

-

<td>positive</td>

|

| 489 |

-

</tr>

|

| 490 |

-

<tr>

|

| 491 |

-

<th>6</th>

|

| 492 |

-

<td>there 's an admirable rigor to jimmy 's relentless anger , and to the script 's refusal of a happy ending ,</td>

|

| 493 |

-

<td>[(positive, 0.9928517937660217), (negative, 0.007148175034672022)]</td>

|

| 494 |

-

<td>negative</td>

|

| 495 |

-

</tr>

|

| 496 |

-

<tr>

|

| 497 |

-

<th>7</th>

|

| 498 |

-

<td>the bottom line with nemesis is the same as it has been with all the films in the series : fans will undoubtedly enjoy it , and the uncommitted need n't waste their time on it</td>

|

| 499 |

-

<td>[(positive, 0.995850682258606), (negative, 0.004149340093135834)]</td>

|

| 500 |

-

<td>negative</td>

|

| 501 |

-

</tr>

|

| 502 |

-

<tr>

|

| 503 |

-

<th>8</th>

|

| 504 |

-

<td>is genial but never inspired , and little</td>

|

| 505 |

-

<td>[(negative, 0.9921030402183533), (positive, 0.007896988652646542)]</td>

|

| 506 |

-

<td>positive</td>

|

| 507 |

-

</tr>

|

| 508 |

-

<tr>

|

| 509 |

-

<th>9</th>

|

| 510 |

-

<td>heaped upon a project of such vast proportions need to reap more rewards than spiffy bluescreen technique and stylish weaponry .</td>

|

| 511 |

-

<td>[(negative, 0.9958089590072632), (positive, 0.004191054962575436)]</td>

|

| 512 |

-

<td>positive</td>

|

| 513 |

-

</tr>

|

| 514 |

-

<tr>

|

| 515 |

-

<th>10</th>

|

| 516 |

-

<td>than recommended -- as visually bland as a dentist 's waiting room , complete with soothing muzak and a cushion of predictable narrative rhythms</td>

|

| 517 |

-

<td>[(negative, 0.9988711476325989), (positive, 0.0011287889210507274)]</td>

|

| 518 |

-

<td>positive</td>

|

| 519 |

-

</tr>

|

| 520 |

-

<tr>

|

| 521 |

-

<th>11</th>

|

| 522 |

-

<td>spectacle and</td>

|

| 523 |

-

<td>[(positive, 0.9941601753234863), (negative, 0.005839805118739605)]</td>

|

| 524 |

-

<td>negative</td>

|

| 525 |

-

</tr>

|

| 526 |

-

<tr>

|

| 527 |

-

<th>12</th>

|

| 528 |

-

<td>groan and</td>

|

| 529 |

-

<td>[(negative, 0.9987359642982483), (positive, 0.0012639997294172645)]</td>

|

| 530 |

-

<td>positive</td>

|

| 531 |

-

</tr>

|

| 532 |

-

<tr>

|

| 533 |

-

<th>13</th>

|

| 534 |

-

<td>'re not likely to have seen before , but beneath the exotic surface ( and exotic dancing ) it 's surprisingly old-fashioned .</td>

|

| 535 |

-

<td>[(positive, 0.9908103942871094), (negative, 0.009189637377858162)]</td>

|

| 536 |

-

<td>negative</td>

|

| 537 |

-

</tr>

|

| 538 |

-

<tr>

|

| 539 |

-

<th>14</th>

|

| 540 |

-

<td>its metaphors are opaque enough to avoid didacticism , and</td>

|

| 541 |

-

<td>[(negative, 0.990602970123291), (positive, 0.00939704105257988)]</td>

|

| 542 |

-

<td>positive</td>

|

| 543 |

-

</tr>

|

| 544 |

-

<tr>

|

| 545 |

-

<th>15</th>

|

| 546 |

-

<td>by kevin bray , whose crisp framing , edgy camera work , and wholesale ineptitude with acting , tone and pace very obviously mark him as a video helmer making his feature debut</td>

|

| 547 |

-

<td>[(positive, 0.9973387122154236), (negative, 0.0026612314395606518)]</td>

|

| 548 |

-

<td>negative</td>

|

| 549 |

-

</tr>

|

| 550 |

-

<tr>

|

| 551 |

-

<th>16</th>

|

| 552 |

-

<td>evokes the frustration , the awkwardness and the euphoria of growing up , without relying on the usual tropes .</td>

|

| 553 |

-

<td>[(positive, 0.9989104270935059), (negative, 0.0010896018939092755)]</td>

|

| 554 |

-

<td>negative</td>

|

| 555 |

-

</tr>

|

| 556 |

-

<tr>

|

| 557 |

-

<th>17</th>

|

| 558 |

-

<td>, incoherence and sub-sophomoric</td>

|

| 559 |

-

<td>[(negative, 0.9962475895881653), (positive, 0.003752368036657572)]</td>

|

| 560 |

-

<td>positive</td>

|

| 561 |

-

</tr>

|

| 562 |

-

<tr>

|

| 563 |

-

<th>18</th>

|

| 564 |

-

<td>seems intimidated by both her subject matter and the period trappings of this debut venture into the heritage business .</td>

|

| 565 |

-

<td>[(negative, 0.9923072457313538), (positive, 0.007692818529903889)]</td>

|

| 566 |

-

<td>positive</td>

|

| 567 |

-

</tr>

|

| 568 |

-

<tr>

|

| 569 |

-

<th>19</th>

|

| 570 |

-

<td>despite downplaying her good looks , carries a little too much ai n't - she-cute baggage into her lead role as a troubled and determined homicide cop to quite pull off the heavy stuff .</td>

|

| 571 |

-

<td>[(negative, 0.9948075413703918), (positive, 0.005192441400140524)]</td>

|

| 572 |

-

<td>positive</td>

|

| 573 |

-

</tr>

|

| 574 |

-

</tbody>

|

| 575 |

-

</table>

|

| 576 |

-

</div>
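The `query` strings here appear to follow Lucene-style syntax, in which curly braces denote a range with both bounds excluded and `*` leaves one side open. Under that assumption, `score:{0.99 TO *}` keeps scores strictly above 0.99. The helper below, `in_exclusive_range`, is a hypothetical stand-in written to illustrate the semantics and is not part of Rubrix.

```python
def in_exclusive_range(value, low=None, high=None):
    """Mimic `{low TO high}`: both bounds are excluded; None stands for `*`."""
    if low is not None and value <= low:
        return False
    if high is not None and value >= high:
        return False
    return True


# Illustrative top scores, including two values sitting exactly on a bound.
scores = [0.9968, 0.99, 0.5353, 0.6]

high_confidence = [s for s in scores if in_exclusive_range(s, low=0.99)]
low_confidence = [s for s in scores if in_exclusive_range(s, high=0.6)]
print(high_confidence, low_confidence)
```

The same helper covers an upper-bounded range such as `{* TO 0.6}`; values equal to a bound are dropped in both cases.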

|

| 577 |

-

|

| 578 |

-

|

| 579 |

-

|

| 580 |

-

```python

|

| 581 |

-

# Get wrong predictions made with a score below 0.6

|

| 582 |

-

df = rb.load("sst2", query="predicted:ko and score:{* TO 0.6}").to_pandas()

|

| 583 |

-

|

| 584 |

-

# display first 20 examples

|

| 585 |

-

with pd.option_context('display.max_colwidth', None):

|

| 586 |

-

    display(df[["text", "prediction", "annotation"]].head(20))

|

| 587 |

-

```

|

| 588 |

-

|

| 589 |

-

|

| 590 |

-

<div>

|

| 591 |

-

<style scoped>

|

| 592 |

-

.dataframe tbody tr th:only-of-type {

|

| 593 |

-

vertical-align: middle;

|

| 594 |

-

}

|

| 595 |

-

|

| 596 |

-

.dataframe tbody tr th {

|

| 597 |

-

vertical-align: top;

|

| 598 |

-

}

|

| 599 |

-

|

| 600 |

-

.dataframe thead th {

|

| 601 |

-

text-align: right;

|

| 602 |

-

}

|

| 603 |

-

</style>

|

| 604 |

-

<table border="1" class="dataframe">

|

| 605 |

-

<thead>

|

| 606 |

-

<tr style="text-align: right;">

|

| 607 |

-

<th></th>

|

| 608 |

-

<th>text</th>

|

| 609 |

-

<th>prediction</th>

|

| 610 |

-

<th>annotation</th>

|

| 611 |

-

</tr>

|

| 612 |

-

</thead>

|

| 613 |

-

<tbody>

|

| 614 |

-

<tr>

|

| 615 |

-

<th>0</th>

|

| 616 |

-

<td>get on a board and , uh , shred ,</td>

|

| 617 |

-

<td>[(positive, 0.5352609753608704), (negative, 0.46473899483680725)]</td>

|

| 618 |

-

<td>negative</td>

|

| 619 |

-

</tr>

|

| 620 |

-

<tr>

|

| 621 |

-

<th>1</th>

|

| 622 |

-

<td>is , truly and thankfully , a one-of-a-kind work</td>

|

| 623 |

-

<td>[(positive, 0.5819814801216125), (negative, 0.41801854968070984)]</td>

|

| 624 |

-

<td>negative</td>

|

| 625 |

-

</tr>

|

| 626 |

-

<tr>

|

| 627 |

-

<th>2</th>

|

| 628 |

-

<td>starts as a tart little lemon drop of a movie and</td>

|

| 629 |

-

<td>[(negative, 0.5641832947731018), (positive, 0.4358167052268982)]</td>

|

| 630 |

-

<td>positive</td>

|

| 631 |

-

</tr>

|

| 632 |

-

<tr>

|

| 633 |

-

<th>3</th>

|

| 634 |

-

<td>between flaccid satire and what</td>

|

| 635 |

-

<td>[(negative, 0.5532692074775696), (positive, 0.44673076272010803)]</td>

|

| 636 |

-

<td>positive</td>

|

| 637 |

-

</tr>

|

| 638 |

-

<tr>

|

| 639 |

-

<th>4</th>

|

| 640 |

-

<td>it certainly does n't feel like a film that strays past the two and a half mark</td>

|

| 641 |

-

<td>[(negative, 0.5386656522750854), (positive, 0.46133431792259216)]</td>

|

| 642 |

-

<td>positive</td>

|

| 643 |

-

</tr>

|

| 644 |

-

<tr>

|

| 645 |

-

<th>5</th>

|

| 646 |

-

<td>who liked there 's something about mary and both american pie movies</td>

|

| 647 |

-

<td>[(negative, 0.5086333751678467), (positive, 0.4913666248321533)]</td>

|

| 648 |

-

<td>positive</td>

|

| 649 |

-

</tr>

|

| 650 |

-

<tr>

|

| 651 |

-

<th>6</th>

|

| 652 |

-

<td>many good ideas as bad is the cold comfort that chin 's film serves up with style and empathy</td>

|

| 653 |

-

<td>[(positive, 0.557632327079773), (negative, 0.44236767292022705)]</td>

|

| 654 |

-

<td>negative</td>

|

| 655 |

-

</tr>

|

| 656 |

-

<tr>

|

| 657 |

-

<th>7</th>

|

| 658 |

-

<td>about its ideas and</td>

|

| 659 |

-

<td>[(positive, 0.518638551235199), (negative, 0.48136141896247864)]</td>

|

| 660 |

-

<td>negative</td>

|

| 661 |

-

</tr>

|

| 662 |

-

<tr>

|

| 663 |

-

<th>8</th>

|

| 664 |

-

<td>of a sick and evil woman</td>

|

| 665 |

-

<td>[(negative, 0.5554516315460205), (positive, 0.4445483684539795)]</td>

|

| 666 |

-

<td>positive</td>

|

| 667 |

-

</tr>

|

| 668 |

-

<tr>

|

| 669 |

-

<th>9</th>

|

| 670 |

-

<td>though this rude and crude film does deliver a few gut-busting laughs</td>

|

| 671 |

-

<td>[(positive, 0.5045541524887085), (negative, 0.4954459071159363)]</td>

|

| 672 |

-

<td>negative</td>

|

| 673 |

-

</tr>

|

| 674 |

-

<tr>

|

| 675 |

-

<th>10</th>

|

| 676 |

-

<td>to squeeze the action and our emotions into the all-too-familiar dramatic arc of the holocaust escape story</td>

|

| 677 |

-

<td>[(negative, 0.5050069093704224), (positive, 0.49499306082725525)]</td>

|

| 678 |

-

<td>positive</td>

|

| 679 |

-

</tr>

|

| 680 |

-

<tr>

|

| 681 |

-

<th>11</th>

|

| 682 |

-

<td>that throws a bunch of hot-button items in the viewer 's face and asks to be seen as hip , winking social commentary</td>

|

| 683 |

-

<td>[(negative, 0.5873904228210449), (positive, 0.41260960698127747)]</td>

|

| 684 |

-

<td>positive</td>

|

| 685 |

-

</tr>

|

| 686 |

-

<tr>

|

| 687 |

-

<th>12</th>

|

| 688 |

-

<td>'s soulful and unslick</td>

|

| 689 |

-

<td>[(positive, 0.5931627750396729), (negative, 0.40683719515800476)]</td>

|

| 690 |

-

<td>negative</td>

|

| 691 |

-

</tr>

|

| 692 |

-

</tbody>

|

| 693 |

-

</table>

|

| 694 |

-

</div>
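Low-score errors like these are candidates for ambiguous labels. One possible way to rank them, sketched here as an assumption rather than something the notebook does, is by the margin between the two class scores: the smaller the margin, the less decided the model. Texts and scores are taken from the table above, with scores rounded to four decimals.

```python
# (text, [(label, score), ...]) pairs from the low-score table above.
rows = [
    ("get on a board and , uh , shred ,", [("positive", 0.5353), ("negative", 0.4647)]),
    ("about its ideas and", [("positive", 0.5186), ("negative", 0.4814)]),
    ("'s soulful and unslick", [("positive", 0.5932), ("negative", 0.4068)]),
]


def margin(prediction):
    """Difference between the two highest class scores."""
    top, second = sorted((score for _, score in prediction), reverse=True)[:2]
    return top - second


# Smallest margin first, i.e. most ambiguous first.
ranked = sorted(rows, key=lambda row: margin(row[1]))
most_ambiguous = ranked[0][0]
print(most_ambiguous)
```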

|

| 695 |

-

|

| 696 |

-

|

| 697 |

-

|

| 698 |

-

```python

|

| 699 |

-

from rubrix.metrics.commons import *

|

| 700 |

-

```

|

| 701 |

-

|

| 702 |

-

|

| 703 |

-

```python

|

| 704 |

-

text_length("sst2", query="predicted:ko").visualize()

|

| 705 |

-

```
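The `text_length` metric summarizes how long the misclassified inputs are. The sketch below shows the underlying idea in plain Python, assuming length is measured in characters (the source does not state the unit). The helper `length_histogram` and the bin size are hypothetical; the texts come from the tables above.

```python
from collections import Counter

# A few misclassified texts from the tables above.
texts = ["enough", "is barely", "steals the show", "under our skin"]


def length_histogram(texts, bin_size=5):
    """Count texts per character-length bin; keys are the lower bin edges."""
    return Counter((len(text) // bin_size) * bin_size for text in texts)


hist = length_histogram(texts)
for lower_edge in sorted(hist):
    print(f"{lower_edge:>3}-{lower_edge + 4:<3} {'#' * hist[lower_edge]}")
```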

|

| 706 |

-


dataset_infos.json

DELETED

|

@@ -1,103 +0,0 @@

|

|

| 1 |

-

{"rubrix--sst2_with_predictions": {

|

| 2 |

-

"description": "",

|

| 3 |

-

"citation": "",

|

| 4 |

-

"homepage": "",

|

| 5 |

-

"license": "",

|

| 6 |

-

"features": {

|

| 7 |

-

"text": {

|

| 8 |

-

"dtype": "string",

|

| 9 |

-

"id": null,

|

| 10 |

-

"_type": "Value"

|

| 11 |

-

},

|

| 12 |

-

"inputs": {

|

| 13 |

-

"text": {

|

| 14 |

-

"dtype": "string",

|

| 15 |

-

"id": null,

|

| 16 |

-

"_type": "Value"

|

| 17 |

-

}

|

| 18 |

-

},

|

| 19 |

-

"prediction": [

|

| 20 |

-

{

|

| 21 |

-

"label": {

|

| 22 |

-

"dtype": "string",

|

| 23 |

-

"id": null,

|

| 24 |

-

"_type": "Value"

|

| 25 |

-

},

|

| 26 |

-

"score": {

|

| 27 |

-

"dtype": "float64",

|

| 28 |

-

"id": null,

|

| 29 |

-

"_type": "Value"

|

| 30 |

-

}

|

| 31 |

-

}

|

| 32 |

-

],

|

| 33 |

-

"prediction_agent": {

|

| 34 |

-

"dtype": "null",

|

| 35 |

-

"id": null,

|

| 36 |

-

"_type": "Value"

|

| 37 |

-

},

|

| 38 |

-

"annotation": {

|

| 39 |

-

"dtype": "string",

|

| 40 |

-

"id": null,

|

| 41 |

-

"_type": "Value"

|

| 42 |

-

},

|

| 43 |

-

"annotation_agent": {

|

| 44 |

-

"dtype": "null",

|

| 45 |

-

"id": null,

|

| 46 |

-

"_type": "Value"

|

| 47 |

-

},

|

| 48 |

-

"multi_label": {

|

| 49 |

-

"dtype": "bool",

|

| 50 |

-

"id": null,

|

| 51 |

-

"_type": "Value"

|

| 52 |

-

},

|

| 53 |

-

"explanation": {

|

| 54 |

-

"dtype": "null",

|

| 55 |

-

"id": null,

|

| 56 |

-

"_type": "Value"

|

| 57 |

-

},

|

| 58 |

-

"id": {

|

| 59 |

-

"dtype": "null",

|

| 60 |

-

"id": null,

|

| 61 |

-

"_type": "Value"

|

| 62 |

-

},

|

| 63 |

-

"metadata": {

|

| 64 |

-

"dtype": "null",

|

| 65 |

-

"id": null,

|

| 66 |

-

"_type": "Value"

|

| 67 |

-

},

|

| 68 |

-

"status": {

|

| 69 |

-

"dtype": "string",

|

| 70 |

-

"id": null,

|

| 71 |

-

"_type": "Value"

|

| 72 |

-

},

|

| 73 |

-

"event_timestamp": {

|

| 74 |

-

"dtype": "null",

|

| 75 |

-

"id": null,

|

| 76 |

-

"_type": "Value"

|

| 77 |

-

},

|

| 78 |

-

"metrics": {

|

| 79 |

-

"dtype": "null",

|

| 80 |

-

"id": null,

|

| 81 |

-

"_type": "Value"

|

| 82 |

-

}

|

| 83 |

-

},

|

| 84 |

-

"post_processed": null,

|

| 85 |

-

"supervised_keys": null,

|

| 86 |

-

"task_templates": null,

|

| 87 |

-

"builder_name": null,

|

| 88 |

-

"config_name": null,

|

| 89 |

-

"version": null,

|

| 90 |

-

"splits": {

|

| 91 |

-

"train": {

|

| 92 |

-

"name": "train",

|

| 93 |

-

"num_bytes": 12402330,

|

| 94 |

-

"num_examples": 67349,

|

| 95 |

-

"dataset_name": "sst2_with_predictions"

|

| 96 |

-

}

|

| 97 |

-

},

|

| 98 |

-

"download_checksums": null,

|

| 99 |

-

"download_size": 6242768,

|

| 100 |

-

"post_processing_size": null,

|

| 101 |

-

"dataset_size": 12402330,

|

| 102 |

-

"size_in_bytes": 18645098

|

| 103 |

-

}}


example.png

DELETED

|

Binary file (426 kB)

|

|

|

output_14_0.png

DELETED

|

Binary file (36.9 kB)

|

|

|

output_16_0.png

DELETED

|

Binary file (38.1 kB)

|

|

|

output_17_0.png

DELETED

|

Binary file (28.8 kB)

|

|

|

output_18_0.png

DELETED

|

Binary file (25.7 kB)

|

|

|

output_9_1.png

DELETED

|

Binary file (3.94 kB)

|

|

|

data/train-00000-of-00001.parquet → rubrix--sst2_with_predictions/train/0000.parquet

RENAMED

|

File without changes

|

data/validation-00000-of-00001.parquet → rubrix--sst2_with_predictions/validation/0000.parquet

RENAMED

|

File without changes

|

sst2_example.ipynb

DELETED

|

The diff for this file is too large to render.

See raw diff

|

|

|