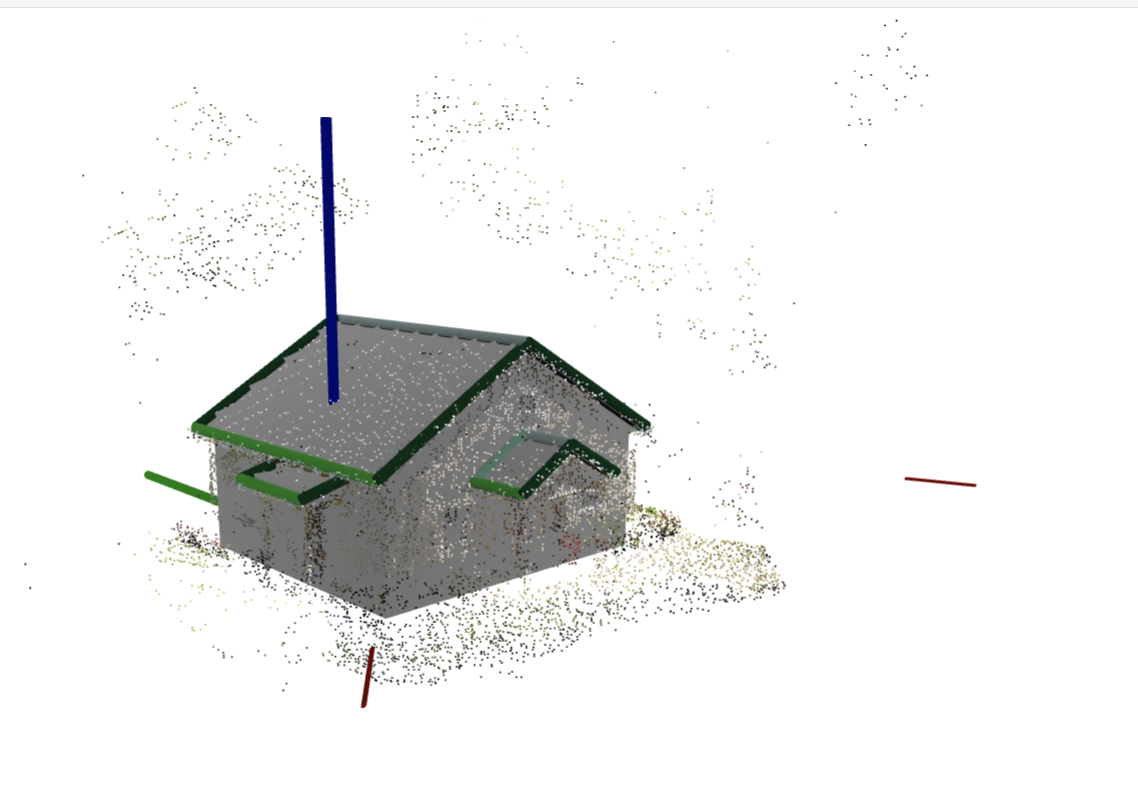

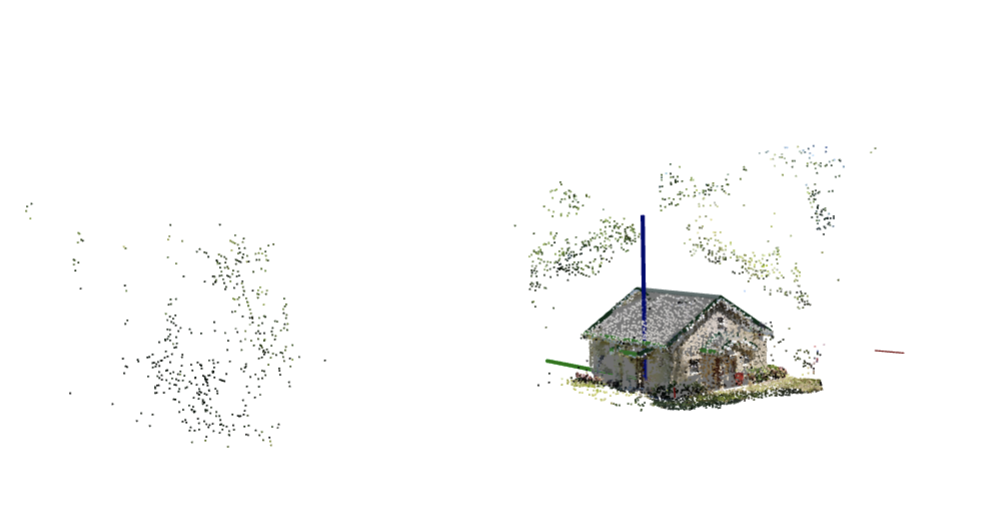

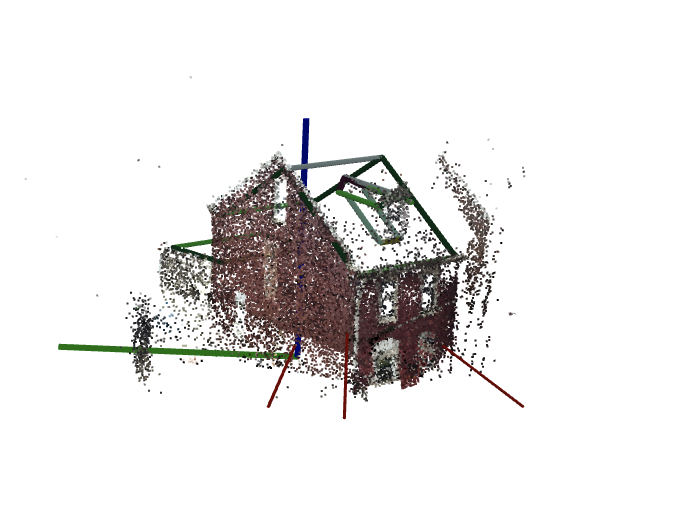

The roofs below are interactive as well!

### Additional notes on data

#### Depth

The `depthcm` field is the output of a monocular depth model and is by no means ground truth. If you need ground-truth (GT) depth, you can render the GT mesh in the training set using `mesh_faces` and `mesh_vertices`.

The semi-sparse depth from the COLMAP reconstructions with dense features, available in `points3d`, is much more accurate than `depthcm`.

At inference time, `mesh_faces` is not available, so you can use only `depthcm` and the COLMAP point cloud from `points3d`.
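One way to combine the two depth sources is to project the COLMAP points into an image and use the resulting sparse depth to rescale the monocular depth. The sketch below is illustrative only: it assumes a pinhole camera with intrinsics `K` and a world-to-camera pose `R`, `t`, and that the points are already loaded as an `(N, 3)` array. The function names `project_points_to_depth` and `rescale_mono_depth` are hypothetical and not part of the dataset tooling, and the actual field layout and units in the dataset may differ.

```python
import numpy as np

def project_points_to_depth(points_xyz, K, R, t, height, width):
    """Rasterize world-space 3D points into a sparse depth map (0 = no data).

    Assumes a pinhole camera: x_cam = R @ x_world + t, pixel = K @ x_cam.
    """
    cam = points_xyz @ R.T + t
    front = cam[:, 2] > 1e-6          # keep only points in front of the camera
    cam = cam[front]
    z = cam[:, 2]
    uvw = cam @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]
    # Keep the nearest point when several points land on the same pixel.
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)
    depth[np.isinf(depth)] = 0.0
    return depth

def rescale_mono_depth(mono_depth, sparse_depth):
    """Scale monocular depth so its median ratio to the sparse depth is 1."""
    valid = (sparse_depth > 0) & (mono_depth > 0)
    if not valid.any():
        return mono_depth             # nothing to align against
    scale = np.median(sparse_depth[valid] / mono_depth[valid])
    return mono_depth * scale

# Toy example with made-up camera parameters and points.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
pts = np.array([[0.0, 0.0, 2.0], [0.0, 0.0, 5.0], [0.1, 0.0, 2.0], [0.0, 0.0, -1.0]])
sparse = project_points_to_depth(pts, K, R, t, height=100, width=100)
aligned = rescale_mono_depth(np.full((100, 100), 4.0), sparse)
```

A single global scale is the simplest possible alignment; it also absorbs any unit mismatch between the two sources. A per-image scale-and-shift fit may work better if the monocular depth is biased.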

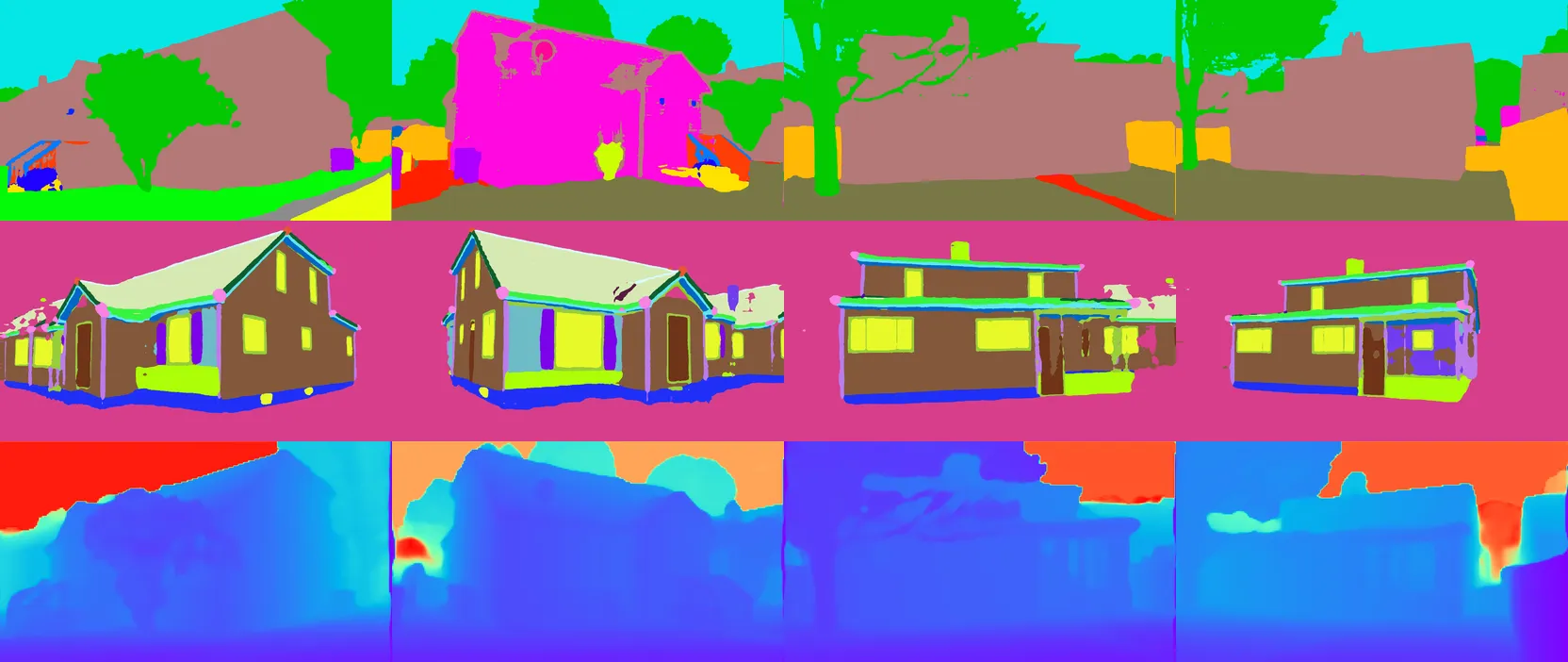

#### Segmentation

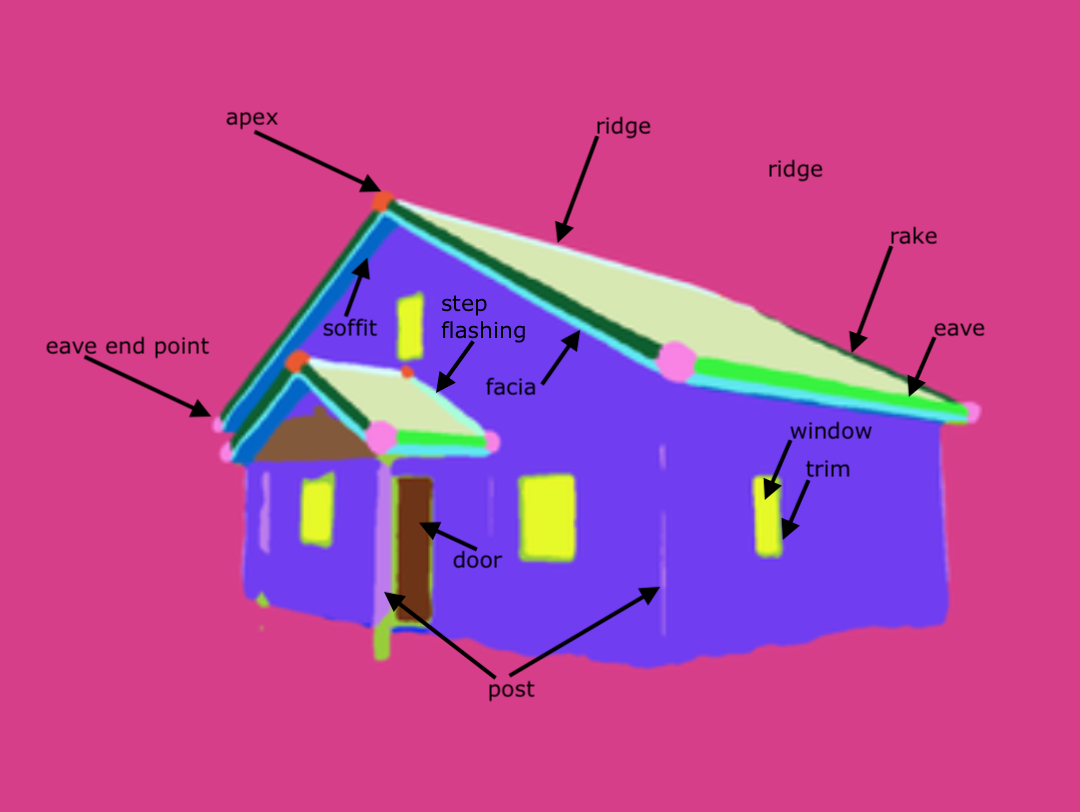

Two segmentations are available. `gestalt` is a domain-specific model that "sees through occlusions" and provides detailed information about house parts. See the list of classes in the "Dataset" section in the navigation bar.

`ade20k` comes from a standard ADE20K segmentation model (specifically, [shi-labs/oneformer_ade20k_swin_large](https://huggingface.co/shi-labs/oneformer_ade20k_swin_large)).
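If the segmentations are loaded as color-coded RGB images (an assumption here, as is the example color), a per-class binary mask can be extracted by comparing each pixel against the class color. The helper `class_mask` below is a hypothetical sketch, not part of the dataset tooling; look up the real class colors in the class list referenced above.

```python
import numpy as np

def class_mask(seg_rgb, color, tol=0):
    """Return a boolean HxW mask of pixels within `tol` of `color` per channel."""
    seg = np.asarray(seg_rgb)[..., :3].astype(np.int32)   # drop alpha if present
    diff = np.abs(seg - np.asarray(color, dtype=np.int32))
    return (diff <= tol).all(axis=-1)

# Toy 2x2 segmentation; the color (215, 62, 138) is a made-up placeholder.
seg = np.array(
    [[[215, 62, 138], [0, 0, 0]],
     [[214, 62, 138], [215, 62, 138]]],
    dtype=np.uint8,
)
exact = class_mask(seg, (215, 62, 138))
loose = class_mask(seg, (215, 62, 138), tol=2)
```

A small tolerance can help when the images went through lossy compression or resizing, which may shift colors slightly.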

## Organizers

Jack Langerman (Hover), Dmytro Mishkin (CTU in Prague / Hover), Ilke Demir (Intel), Hanzhi Chen (TUM), Daoyi Gao (TUM), Caner Korkmaz (ICL), Tolga Birdal (ICL)

## Sponsors

The organizers would like to thank Hover Inc. for their sponsorship of this challenge and dataset.

## Timeline

- Competition Released: March 14, 2024

- Entry Deadline: May 28, 2024

- Team Merging: May 28, 2024

- Final Solution Submission: June 4, 2024

- Writeup Deadline: June 11, 2024

## Prizes

- 1st Place: **$10,000**

- 2nd Place: **$7,000**

- 3rd Place: **$5,000**

- Additional Prizes: **$3,000**

Please see the [Competition Rules](https://usm3d.github.io/S23DR/s23dr_rules.html) for additional information.

### Cite

```
@misc{Langerman_Korkmaz_Chen_Gao_Demir_Mishkin_Birdal2024,
  title={S23DR Competition at 1st Workshop on Urban Scene Modeling @ CVPR 2024},
  url={usm3d.github.io},
  howpublished={\url{https://huggingface.co/usm3d}},
  year={2024},
  author={Langerman, Jack and Korkmaz, Caner and Chen, Hanzhi and Gao, Daoyi and Demir, Ilke and Mishkin, Dmytro and Birdal, Tolga}
}
```
