Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- .ipynb_checkpoints/lecture_intro_cn-checkpoint.ipynb +14 -1

- 01-data_env/.ipynb_checkpoints/3-dataset-use-checkpoint.ipynb +4 -1

- 01-data_env/1-env-intro.ipynb +42 -1

- 01-data_env/2-data-intro.ipynb +3 -3

- 01-data_env/3-dataset-use.ipynb +7 -2

- 01-data_env/img/.ipynb_checkpoints/zhushi-checkpoint.png +0 -0

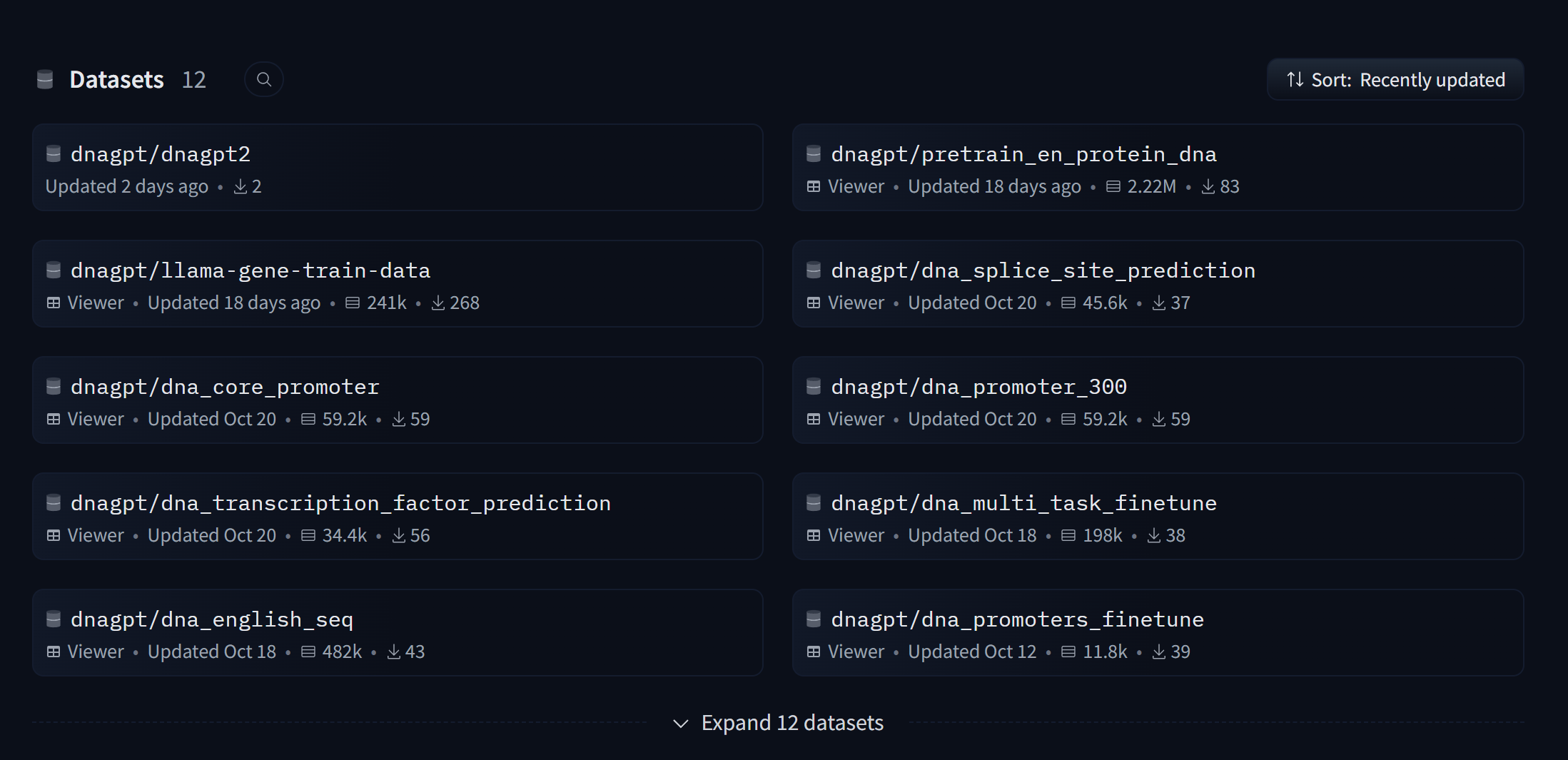

- 01-data_env/img/datasets_dnagpt.png +0 -0

- 02-gpt2_bert/.ipynb_checkpoints/1-dna-bpe-checkpoint.ipynb +528 -0

- 02-gpt2_bert/.ipynb_checkpoints/2-dna-gpt-checkpoint.ipynb +0 -0

- 02-gpt2_bert/.ipynb_checkpoints/3-dna-bert-checkpoint.ipynb +253 -0

- 02-gpt2_bert/.ipynb_checkpoints/4-gene-feature-checkpoint.ipynb +489 -0

- 02-gpt2_bert/.ipynb_checkpoints/5-multi-seq-gpt-checkpoint.ipynb +261 -0

- 02-gpt2_bert/.ipynb_checkpoints/dna_wordpiece_dict-checkpoint.json +0 -0

- 02-gpt2_bert/1-dna-bpe.ipynb +528 -0

- 02-gpt2_bert/2-dna-gpt.ipynb +0 -0

- 02-gpt2_bert/3-dna-bert.ipynb +0 -0

- 02-gpt2_bert/4-gene-feature.ipynb +489 -0

- 02-gpt2_bert/5-multi-seq-gpt.ipynb +261 -0

- 02-gpt2_bert/dna_bert_v0/config.json +28 -0

- 02-gpt2_bert/dna_bert_v0/generation_config.json +7 -0

- 02-gpt2_bert/dna_bert_v0/model.safetensors +3 -0

- 02-gpt2_bert/dna_bert_v0/training_args.bin +3 -0

- 02-gpt2_bert/dna_bpe_dict.json +0 -0

- 02-gpt2_bert/dna_bpe_dict/.ipynb_checkpoints/merges-checkpoint.txt +3 -0

- 02-gpt2_bert/dna_bpe_dict/.ipynb_checkpoints/special_tokens_map-checkpoint.json +5 -0

- 02-gpt2_bert/dna_bpe_dict/.ipynb_checkpoints/tokenizer-checkpoint.json +0 -0

- 02-gpt2_bert/dna_bpe_dict/.ipynb_checkpoints/tokenizer_config-checkpoint.json +20 -0

- 02-gpt2_bert/dna_bpe_dict/merges.txt +3 -0

- 02-gpt2_bert/dna_bpe_dict/special_tokens_map.json +5 -0

- 02-gpt2_bert/dna_bpe_dict/tokenizer.json +0 -0

- 02-gpt2_bert/dna_bpe_dict/tokenizer_config.json +20 -0

- 02-gpt2_bert/dna_bpe_dict/vocab.json +0 -0

- 02-gpt2_bert/dna_gpt2_v0/config.json +39 -0

- 02-gpt2_bert/dna_gpt2_v0/generation_config.json +6 -0

- 02-gpt2_bert/dna_gpt2_v0/merges.txt +3 -0

- 02-gpt2_bert/dna_gpt2_v0/model.safetensors +3 -0

- 02-gpt2_bert/dna_gpt2_v0/special_tokens_map.json +23 -0

- 02-gpt2_bert/dna_gpt2_v0/tokenizer.json +0 -0

- 02-gpt2_bert/dna_gpt2_v0/tokenizer_config.json +20 -0

- 02-gpt2_bert/dna_gpt2_v0/training_args.bin +3 -0

- 02-gpt2_bert/dna_gpt2_v0/vocab.json +0 -0

- 02-gpt2_bert/dna_wordpiece_dict.json +0 -0

- 02-gpt2_bert/dna_wordpiece_dict/special_tokens_map.json +7 -0

- 02-gpt2_bert/dna_wordpiece_dict/tokenizer.json +0 -0

- 02-gpt2_bert/dna_wordpiece_dict/tokenizer_config.json +53 -0

- 02-gpt2_bert/img/.ipynb_checkpoints/gpt2-stru-checkpoint.png +0 -0

- 02-gpt2_bert/img/gpt2-netron.png +0 -0

- 02-gpt2_bert/img/gpt2-stru.png +0 -0

- 02-gpt2_bert/img/llm-visual.png +0 -0

.gitattributes

CHANGED

|

@@ -35,3 +35,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

*.psd filter=lfs diff=lfs merge=lfs -text

|

| 37 |

*.txt filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

*.psd filter=lfs diff=lfs merge=lfs -text

|

| 37 |

*.txt filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

img/gpt2_bridge.png filter=lfs diff=lfs merge=lfs -text

|

.ipynb_checkpoints/lecture_intro_cn-checkpoint.ipynb

CHANGED

|

@@ -5,7 +5,7 @@

|

|

| 5 |

"id": "2365faf7-39fb-4e53-a810-2e28c4f6b4c1",

|

| 6 |

"metadata": {},

|

| 7 |

"source": [

|

| 8 |

-

"# DNAGTP2

|

| 9 |

"\n",

|

| 10 |

"## 1 概要\n",

|

| 11 |

"自然语言大模型早已超出NLP研究领域,正在成为AI for science的基石。生物信息学中的基因序列,则是和自然语言最类似的,把大模型应用于生物序列研究,就成了最近一两年的热门研究方向,特别是2024年预测蛋白质结构的alphaFold获得诺贝尔化学奖,更是为生物学的研究指明了未来的方向。\n",

|

|

@@ -18,6 +18,9 @@

|

|

| 18 |

"\n",

|

| 19 |

"DNAGTP2就是这样的梯子,仅望能抛砖引玉,让更多的生物学工作者能够越过大模型的门槛,戴上大模型的翅膀,卷过同行。\n",

|

| 20 |

"\n",

|

|

|

|

|

|

|

|

|

|

| 21 |

"## 2 教程特色\n",

|

| 22 |

"本教程主要有以下特色:\n",

|

| 23 |

"\n",

|

|

@@ -39,7 +42,17 @@

|

|

| 39 |

"\n",

|

| 40 |

"2 大模型学习入门。不仅是生物学领域的,都可以看看,和一般大模型入门没啥差别,只是数据不同。\n",

|

| 41 |

"\n",

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 42 |

"## 3 教程大纲\n",

|

|

|

|

|

|

|

|

|

|

|

|

|

| 43 |

"1 数据和环境\n",

|

| 44 |

"\n",

|

| 45 |

"1.1 大模型运行环境简介\n",

|

|

|

|

| 5 |

"id": "2365faf7-39fb-4e53-a810-2e28c4f6b4c1",

|

| 6 |

"metadata": {},

|

| 7 |

"source": [

|

| 8 |

+

"# DNAGTP2-基因序列大模型最佳入门\n",

|

| 9 |

"\n",

|

| 10 |

"## 1 概要\n",

|

| 11 |

"自然语言大模型早已超出NLP研究领域,正在成为AI for science的基石。生物信息学中的基因序列,则是和自然语言最类似的,把大模型应用于生物序列研究,就成了最近一两年的热门研究方向,特别是2024年预测蛋白质结构的alphaFold获得诺贝尔化学奖,更是为生物学的研究指明了未来的方向。\n",

|

|

|

|

| 18 |

"\n",

|

| 19 |

"DNAGTP2就是这样的梯子,仅望能抛砖引玉,让更多的生物学工作者能够越过大模型的门槛,戴上大模型的翅膀,卷过同行。\n",

|

| 20 |

"\n",

|

| 21 |

+

"\n",

|

| 22 |

+

"<<img src='img/gpt2_bridge.png' width=\"600px\" />\n",

|

| 23 |

+

"\n",

|

| 24 |

"## 2 教程特色\n",

|

| 25 |

"本教程主要有以下特色:\n",

|

| 26 |

"\n",

|

|

|

|

| 42 |

"\n",

|

| 43 |

"2 大模型学习入门。不仅是生物学领域的,都可以看看,和一般大模型入门没啥差别,只是数据不同。\n",

|

| 44 |

"\n",

|

| 45 |

+

"\n",

|

| 46 |

+

"huggingface: https://huggingface.co/dnagpt/dnagpt2\n",

|

| 47 |

+

"\n",

|

| 48 |

+

"github: https://github.com/maris205/dnagpt2\n",

|

| 49 |

+

"\n",

|

| 50 |

+

"\n",

|

| 51 |

"## 3 教程大纲\n",

|

| 52 |

+

"\n",

|

| 53 |

+

"<img src='img/DNAGPT2.png' width=\"600px\" />\n",

|

| 54 |

+

"\n",

|

| 55 |

+

"\n",

|

| 56 |

"1 数据和环境\n",

|

| 57 |

"\n",

|

| 58 |

"1.1 大模型运行环境简介\n",

|

01-data_env/.ipynb_checkpoints/3-dataset-use-checkpoint.ipynb

CHANGED

|

@@ -134,6 +134,7 @@

|

|

| 134 |

]

|

| 135 |

},

|

| 136 |

{

|

|

|

|

| 137 |

"cell_type": "markdown",

|

| 138 |

"id": "17a1fa7c-ff4b-419f-8a82-e58cc5777cd4",

|

| 139 |

"metadata": {},

|

|

@@ -142,7 +143,9 @@

|

|

| 142 |

"\n",

|

| 143 |

"当然,数据也可以直接从huggingface的线上仓库读取,这时候需要注意科学上网问题。\n",

|

| 144 |

"\n",

|

| 145 |

-

"具体使用函数也是load_dataset"

|

|

|

|

|

|

|

| 146 |

]

|

| 147 |

},

|

| 148 |

{

|

|

|

|

| 134 |

]

|

| 135 |

},

|

| 136 |

{

|

| 137 |

+

"attachments": {},

|

| 138 |

"cell_type": "markdown",

|

| 139 |

"id": "17a1fa7c-ff4b-419f-8a82-e58cc5777cd4",

|

| 140 |

"metadata": {},

|

|

|

|

| 143 |

"\n",

|

| 144 |

"当然,数据也可以直接从huggingface的线上仓库读取,这时候需要注意科学上网问题。\n",

|

| 145 |

"\n",

|

| 146 |

+

"具体使用函数也是load_dataset\n",

|

| 147 |

+

"\n",

|

| 148 |

+

"<img src='img/datasets_dnagpt.png' width='800px' />"

|

| 149 |

]

|

| 150 |

},

|

| 151 |

{

|

01-data_env/1-env-intro.ipynb

CHANGED

|

@@ -62,7 +62,48 @@

|

|

| 62 |

"id": "444adc87-78c8-4209-8260-0c5c4a668ea0",

|

| 63 |

"metadata": {},

|

| 64 |

"outputs": [],

|

| 65 |

-

"source": [

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 66 |

}

|

| 67 |

],

|

| 68 |

"metadata": {

|

|

|

|

| 62 |

"id": "444adc87-78c8-4209-8260-0c5c4a668ea0",

|

| 63 |

"metadata": {},

|

| 64 |

"outputs": [],

|

| 65 |

+

"source": [

|

| 66 |

+

"import os\n",

|

| 67 |

+

"\n",

|

| 68 |

+

"# 设置环境变量, autodl专区 其他idc\n",

|

| 69 |

+

"os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'\n",

|

| 70 |

+

"\n",

|

| 71 |

+

"# 打印环境变量以确认设置成功\n",

|

| 72 |

+

"print(os.environ.get('HF_ENDPOINT'))"

|

| 73 |

+

]

|

| 74 |

+

},

|

| 75 |

+

{

|

| 76 |

+

"cell_type": "code",

|

| 77 |

+

"execution_count": null,

|

| 78 |

+

"id": "06d9dc67-dbd4-4d37-bbdb-ccf59c8fdbf9",

|

| 79 |

+

"metadata": {},

|

| 80 |

+

"outputs": [],

|

| 81 |

+

"source": [

|

| 82 |

+

"import subprocess\n",

|

| 83 |

+

"import os\n",

|

| 84 |

+

"# 设置环境变量, autodl一般区域\n",

|

| 85 |

+

"result = subprocess.run('bash -c \"source /etc/network_turbo && env | grep proxy\"', shell=True, capture_output=True, text=True)\n",

|

| 86 |

+

"output = result.stdout\n",

|

| 87 |

+

"for line in output.splitlines():\n",

|

| 88 |

+

" if '=' in line:\n",

|

| 89 |

+

" var, value = line.split('=', 1)\n",

|

| 90 |

+

" os.environ[var] = value"

|

| 91 |

+

]

|

| 92 |

+

},

|

| 93 |

+

{

|

| 94 |

+

"cell_type": "code",

|

| 95 |

+

"execution_count": null,

|

| 96 |

+

"id": "2168e365-8254-4063-98bd-27afdbdb2f32",

|

| 97 |

+

"metadata": {},

|

| 98 |

+

"outputs": [],

|

| 99 |

+

"source": [

|

| 100 |

+

"#lfs 支持\n",

|

| 101 |

+

"!apt-get update\n",

|

| 102 |

+

"\n",

|

| 103 |

+

"!apt-get install git-lfs\n",

|

| 104 |

+

"\n",

|

| 105 |

+

"!git lfs install"

|

| 106 |

+

]

|

| 107 |

}

|

| 108 |

],

|

| 109 |

"metadata": {

|

01-data_env/2-data-intro.ipynb

CHANGED

|

@@ -15,9 +15,9 @@

|

|

| 15 |

"source": [

|

| 16 |

"本教程主要关注基因相关的生物序列数据,包括主要的DNA和蛋白质序列,data目录下数据如下:\n",

|

| 17 |

"\n",

|

| 18 |

-

"* dna_1g.txt DNA序列数据,大小1G,从

|

| 19 |

-

"* potein_1g.txt 蛋白质序列数据,大小1G,从pdb

|

| 20 |

-

"* english_500m.txt 英文数据,大小500M,就是英文百科"

|

| 21 |

]

|

| 22 |

},

|

| 23 |

{

|

|

|

|

| 15 |

"source": [

|

| 16 |

"本教程主要关注基因相关的生物序列数据,包括主要的DNA和蛋白质序列,data目录下数据如下:\n",

|

| 17 |

"\n",

|

| 18 |

+

"* dna_1g.txt DNA序列数据,大小1G,从GUE数据集中抽取,具体可参考dnabert2的论文,包括多个模式生物的数据(https://github.com/MAGICS-LAB/DNABERT_2)\n",

|

| 19 |

+

"* potein_1g.txt 蛋白质序列数据,大小1G,从pdb/uniprot数据库中抽取(https://www.uniprot.org/help/downloads)\n",

|

| 20 |

+

"* english_500m.txt 英文数据,大小500M,就是英文百科(https://huggingface.co/datasets/Salesforce/wikitext, https://huggingface.co/datasets/iohadrubin/wikitext-103-raw-v1)"

|

| 21 |

]

|

| 22 |

},

|

| 23 |

{

|

01-data_env/3-dataset-use.ipynb

CHANGED

|

@@ -117,7 +117,9 @@

|

|

| 117 |

"dna_dataset_sample = DatasetDict(\n",

|

| 118 |

" {\n",

|

| 119 |

" \"train\": dna_dataset[\"train\"].shuffle().select(range(50000)), \n",

|

| 120 |

-

" \"valid\": dna_dataset[\"test\"].shuffle().select(range(500))

|

|

|

|

|

|

|

| 121 |

" }\n",

|

| 122 |

")\n",

|

| 123 |

"dna_dataset_sample"

|

|

@@ -134,6 +136,7 @@

|

|

| 134 |

]

|

| 135 |

},

|

| 136 |

{

|

|

|

|

| 137 |

"cell_type": "markdown",

|

| 138 |

"id": "17a1fa7c-ff4b-419f-8a82-e58cc5777cd4",

|

| 139 |

"metadata": {},

|

|

@@ -142,7 +145,9 @@

|

|

| 142 |

"\n",

|

| 143 |

"当然,数据也可以直接从huggingface的线上仓库读取,这时候需要注意科学上网问题。\n",

|

| 144 |

"\n",

|

| 145 |

-

"具体使用函数也是load_dataset"

|

|

|

|

|

|

|

| 146 |

]

|

| 147 |

},

|

| 148 |

{

|

|

|

|

| 117 |

"dna_dataset_sample = DatasetDict(\n",

|

| 118 |

" {\n",

|

| 119 |

" \"train\": dna_dataset[\"train\"].shuffle().select(range(50000)), \n",

|

| 120 |

+

" \"valid\": dna_dataset[\"test\"].shuffle().select(range(500)),\n",

|

| 121 |

+

" \"evla\": dna_dataset[\"test\"].shuffle().select(range(500))\n",

|

| 122 |

+

"\n",

|

| 123 |

" }\n",

|

| 124 |

")\n",

|

| 125 |

"dna_dataset_sample"

|

|

|

|

| 136 |

]

|

| 137 |

},

|

| 138 |

{

|

| 139 |

+

"attachments": {},

|

| 140 |

"cell_type": "markdown",

|

| 141 |

"id": "17a1fa7c-ff4b-419f-8a82-e58cc5777cd4",

|

| 142 |

"metadata": {},

|

|

|

|

| 145 |

"\n",

|

| 146 |

"当然,数据也可以直接从huggingface的线上仓库读取,这时候需要注意科学上网问题。\n",

|

| 147 |

"\n",

|

| 148 |

+

"具体使用函数也是load_dataset\n",

|

| 149 |

+

"\n",

|

| 150 |

+

"<img src='img/datasets_dnagpt.png' width='800px' />"

|

| 151 |

]

|

| 152 |

},

|

| 153 |

{

|

01-data_env/img/.ipynb_checkpoints/zhushi-checkpoint.png

ADDED

|

01-data_env/img/datasets_dnagpt.png

ADDED

|

02-gpt2_bert/.ipynb_checkpoints/1-dna-bpe-checkpoint.ipynb

ADDED

|

@@ -0,0 +1,528 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "a9fffce5-83e3-4838-8335-acb2e3b50c35",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# 2.1 DNA分词器构建"

|

| 9 |

+

]

|

| 10 |

+

},

|

| 11 |

+

{

|

| 12 |

+

"cell_type": "markdown",

|

| 13 |

+

"id": "f28b0950-37dc-4f78-ae6c-9fca33d513fc",

|

| 14 |

+

"metadata": {},

|

| 15 |

+

"source": [

|

| 16 |

+

"## **分词算法**\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"### **什么是分词**\n",

|

| 19 |

+

"分词就是把一个文本序列,分成一个一个的token/词,对于英文这种天生带空格的语言,一般使用空格和标点分词就行了,而对于中文等语言,并没有特殊的符号来分词,因此,一般需要设计专门的分词算法,对于大模型而言,一般需要处理多种语言,因此,也需要专门的分词算法。\n",

|

| 20 |

+

"\n",

|

| 21 |

+

"在大模型(如 BERT、GPT 系列、T5 等)中,分词器(tokenizer)扮演着至关重要的角色。它负责将原始文本转换为模型可以处理的格式,即将文本分解成 token 序列,并将这些 token 映射到模型词汇表中的唯一 ID。分词器的选择和配置直接影响模型的性能和效果。以下是几种常见的分词器及其特点,特别关注它们在大型语言模型中的应用。\n",

|

| 22 |

+

"\n",

|

| 23 |

+

"### 1. **WordPiece 分词器**\n",

|

| 24 |

+

"\n",

|

| 25 |

+

"- **使用场景**:广泛应用于 BERT 及其变体。\n",

|

| 26 |

+

"- **工作原理**:基于频率统计,从语料库中学习最有效的词汇表。它根据子词(subword)在文本中的出现频率来决定如何分割单词。例如,“playing” 可能被分为 “play” 和 “##ing”,其中“##”表示该部分是前一个 token 的延续。\n",

|

| 27 |

+

"- **优点**:\n",

|

| 28 |

+

" - 处理未知词汇能力强,能够将未见过的词汇分解为已知的子词。\n",

|

| 29 |

+

" - 兼容性好,适合多种语言任务。\n",

|

| 30 |

+

"- **缺点**:\n",

|

| 31 |

+

" - 需要额外的标记(如 `##`)来指示子词,可能影响某些应用场景下的可读性。\n",

|

| 32 |

+

"\n",

|

| 33 |

+

"### 2. **Byte Pair Encoding (BPE)**\n",

|

| 34 |

+

"\n",

|

| 35 |

+

"- **使用场景**:广泛应用于 GPT 系列、RoBERTa、XLM-R 等模型。\n",

|

| 36 |

+

"- **工作原理**:通过迭代地合并最常见的字符对来构建词汇表。BPE 是一种无监督的学习方法,能够在不依赖于预先定义的词汇表的情况下进行分词。\n",

|

| 37 |

+

"- **优点**:\n",

|

| 38 |

+

" - 灵活性高,适应性强,尤其适用于多语言模型。\n",

|

| 39 |

+

" - 不需要特殊标记,生成的词汇表更简洁。\n",

|

| 40 |

+

"- **缺点**:\n",

|

| 41 |

+

" - 对于某些语言或领域特定的词汇,可能会产生较短的子词,导致信息丢失。\n",

|

| 42 |

+

"\n",

|

| 43 |

+

"### 3. **SentencePiece**\n",

|

| 44 |

+

"\n",

|

| 45 |

+

"- **使用场景**:常见于 T5、mBART 等多语言模型。\n",

|

| 46 |

+

"- **工作原理**:结合了 BPE 和 WordPiece 的优点,同时支持字符级和词汇级分词。它可以在没有空格的语言(如中文、日文)中表现良好。\n",

|

| 47 |

+

"- **优点**:\n",

|

| 48 |

+

" - 支持无空格语言,适合多语言处理。\n",

|

| 49 |

+

" - 学习速度快,适应性强。\n",

|

| 50 |

+

"- **缺点**:\n",

|

| 51 |

+

" - 对于某些特定领域的专业术语,可能需要额外的预处理步骤。\n",

|

| 52 |

+

"\n",

|

| 53 |

+

"### 4. **Character-Level Tokenizer**\n",

|

| 54 |

+

"\n",

|

| 55 |

+

"- **使用场景**:较少用于大型语言模型,但在某些特定任务(如拼写检查、手写识别)中有应用。\n",

|

| 56 |

+

"- **工作原理**:直接将每个字符视为一个 token。这种方式简单直接,但通常会导致较大的词汇表。\n",

|

| 57 |

+

"- **优点**:\n",

|

| 58 |

+

" - 简单易实现,不需要复杂的训练过程。\n",

|

| 59 |

+

" - 对于字符级别的任务非常有效。\n",

|

| 60 |

+

"- **缺点**:\n",

|

| 61 |

+

" - 词汇表较大,计算资源消耗较多。\n",

|

| 62 |

+

" - 捕捉上下文信息的能力较弱。\n",

|

| 63 |

+

"\n",

|

| 64 |

+

"### 5. **Unigram Language Model**\n",

|

| 65 |

+

"\n",

|

| 66 |

+

"- **使用场景**:主要用于 SentencePiece 中。\n",

|

| 67 |

+

"- **工作原理**:基于概率分布,选择最优的分词方案以最大化似然函数。这种方法类似于 BPE,但在构建词汇表时考虑了更多的统计信息。\n",

|

| 68 |

+

"- **优点**:\n",

|

| 69 |

+

" - 统计基础强,优化效果好。\n",

|

| 70 |

+

" - 适应性强,适用于多种语言和任务。\n",

|

| 71 |

+

"- **缺点**:\n",

|

| 72 |

+

" - 计算复杂度较高,训练时间较长。\n",

|

| 73 |

+

"\n",

|

| 74 |

+

"### 分词器的关键特性\n",

|

| 75 |

+

"\n",

|

| 76 |

+

"无论选择哪种分词器,以下几个关键特性都是设计和应用中需要考虑的:\n",

|

| 77 |

+

"\n",

|

| 78 |

+

"- **词汇表大小**:决定了模型所能识别的词汇量。较大的词汇表可以捕捉更多细节,但也增加了内存和计算需求。\n",

|

| 79 |

+

"- **处理未知词汇的能力**:好的分词器应该能够有效地处理未登录词(OOV, Out-Of-Vocabulary),将其分解为已知的子词。\n",

|

| 80 |

+

"- **多语言支持**:对于多语言模型,分词器应能处理不同语言的文本,尤其是那些没有明显分隔符的语言。\n",

|

| 81 |

+

"- **效率和速度**:分词器的执行速度直接影响整个数据处理管道的效率,尤其是在大规模数据集上。\n",

|

| 82 |

+

"- **兼容性和灵活性**:分词器应与目标模型架构兼容,并且能够灵活适应不同的任务需求。"

|

| 83 |

+

]

|

| 84 |

+

},

|

| 85 |

+

{

|

| 86 |

+

"cell_type": "markdown",

|

| 87 |

+

"id": "165e2594-277d-44d0-b582-77859a0bc0b2",

|

| 88 |

+

"metadata": {},

|

| 89 |

+

"source": [

|

| 90 |

+

"## DNA等生物序列分词\n",

|

| 91 |

+

"在生物信息学中,DNA 和蛋白质序列的处理与自然语言处理(NLP)有相似之处,但也有其独特性。为了提取这些生物序列的特征并用于机器学习或深度学习模型,通常需要将长序列分解成更小的片段(类似于 NLP 中的“分词”),以便更好地捕捉局部和全局特征。以下是几种常见的方法,用于对 DNA 和蛋白质序列进行“分词”,以提取有用的特征。\n",

|

| 92 |

+

"\n",

|

| 93 |

+

"### 1. **K-mer 分解**\n",

|

| 94 |

+

"\n",

|

| 95 |

+

"**定义**:K-mer 是指长度为 k 的连续子序列。例如,在 DNA 序列中,一个 3-mer 可能是 \"ATG\" 或 \"CGA\"。\n",

|

| 96 |

+

"\n",

|

| 97 |

+

"**应用**:\n",

|

| 98 |

+

"- **DNA 序列**:常用的 k 值范围从 3 到 6。较小的 k 值可以捕捉到更细粒度的信息,而较大的 k 值则有助于识别更长的模式。\n",

|

| 99 |

+

"- **蛋白质序列**:k 值通常较大,因为氨基酸的数量较多(20 种),较长的 k-mer 可以捕捉到重要的结构域或功能区域。\n",

|

| 100 |

+

"\n",

|

| 101 |

+

"**优点**:\n",

|

| 102 |

+

"- 简单且直观,易于实现。\n",

|

| 103 |

+

"- 可以捕捉到短序列中的局部特征。\n",

|

| 104 |

+

"\n",

|

| 105 |

+

"**缺点**:\n",

|

| 106 |

+

"- 对于非常长的序列,生成的 k-mer 数量会非常大,导致维度爆炸问题。\n",

|

| 107 |

+

"- 不同位置的 k-mer 之间缺乏上下文关系。"

|

| 108 |

+

]

|

| 109 |

+

},

|

| 110 |

+

{

|

| 111 |

+

"cell_type": "code",

|

| 112 |

+

"execution_count": 2,

|

| 113 |

+

"id": "29c390ef-2e9d-493e-9991-69ecb835b52b",

|

| 114 |

+

"metadata": {},

|

| 115 |

+

"outputs": [

|

| 116 |

+

{

|

| 117 |

+

"name": "stdout",

|

| 118 |

+

"output_type": "stream",

|

| 119 |

+

"text": [

|

| 120 |

+

"DNA 3-mers: ['ATG', 'TGC', 'GCG', 'CGT', 'GTA', 'TAC', 'ACG', 'CGT', 'GTA']\n",

|

| 121 |

+

"Protein 4-mers: ['MKQH', 'KQHK', 'QHKA', 'HKAM', 'KAMI', 'AMIV', 'MIVA', 'IVAL', 'VALI', 'ALIV', 'LIVL', 'IVLI', 'VLIT', 'LITA', 'ITAY']\n"

|

| 122 |

+

]

|

| 123 |

+

}

|

| 124 |

+

],

|

| 125 |

+

"source": [

|

| 126 |

+

"#示例代码(Python)\n",

|

| 127 |

+

"\n",

|

| 128 |

+

"def k_mer(seq, k):\n",

|

| 129 |

+

" return [seq[i:i+k] for i in range(len(seq) - k + 1)]\n",

|

| 130 |

+

"\n",

|

| 131 |

+

"dna_sequence = \"ATGCGTACGTA\"\n",

|

| 132 |

+

"protein_sequence = \"MKQHKAMIVALIVLITAY\"\n",

|

| 133 |

+

"\n",

|

| 134 |

+

"print(\"DNA 3-mers:\", k_mer(dna_sequence, 3))\n",

|

| 135 |

+

"print(\"Protein 4-mers:\", k_mer(protein_sequence, 4))"

|

| 136 |

+

]

|

| 137 |

+

},

|

| 138 |

+

{

|

| 139 |

+

"cell_type": "markdown",

|

| 140 |

+

"id": "7ced2bfb-bd42-425a-a3ad-54c9573609c5",

|

| 141 |

+

"metadata": {},

|

| 142 |

+

"source": [

|

| 143 |

+

"### 2. **滑动窗口**\n",

|

| 144 |

+

"\n",

|

| 145 |

+

"**定义**:滑动窗口方法通过设定一个固定大小的窗口沿着序列移动,并在每个位置提取窗口内的子序列。这与 K-mer 类似,但允许重叠。\n",

|

| 146 |

+

"\n",

|

| 147 |

+

"**应用**:\n",

|

| 148 |

+

"- **DNA 和蛋白质序列**:窗口大小可以根据具体任务调整,如基因预测、蛋白质结构预测等。\n",

|

| 149 |

+

"\n",

|

| 150 |

+

"**优点**:\n",

|

| 151 |

+

"- 提供了更多的灵活性,可以控制窗口的步长和大小。\n",

|

| 152 |

+

"- 有助于捕捉局部和全局特征。\n",

|

| 153 |

+

"\n",

|

| 154 |

+

"**缺点**:\n",

|

| 155 |

+

"- 计算复杂度较高,尤其是当窗口大小较大时。"

|

| 156 |

+

]

|

| 157 |

+

},

|

| 158 |

+

{

|

| 159 |

+

"cell_type": "code",

|

| 160 |

+

"execution_count": 4,

|

| 161 |

+

"id": "82cecf91-0076-4c12-b11c-b35120581ef9",

|

| 162 |

+

"metadata": {},

|

| 163 |

+

"outputs": [

|

| 164 |

+

{

|

| 165 |

+

"name": "stdout",

|

| 166 |

+

"output_type": "stream",

|

| 167 |

+

"text": [

|

| 168 |

+

"Sliding window (DNA, size=3, step=1): ['ATG', 'TGC', 'GCG', 'CGT', 'GTA', 'TAC', 'ACG', 'CGT', 'GTA']\n",

|

| 169 |

+

"Sliding window (Protein, size=4, step=2): ['MKQH', 'QHKA', 'KAMI', 'MIVA', 'VALI', 'LIVL', 'VLIT', 'ITAY']\n"

|

| 170 |

+

]

|

| 171 |

+

}

|

| 172 |

+

],

|

| 173 |

+

"source": [

|

| 174 |

+

"def sliding_window(seq, window_size, step=1):\n",

|

| 175 |

+

" return [seq[i:i+window_size] for i in range(0, len(seq) - window_size + 1, step)]\n",

|

| 176 |

+

"\n",

|

| 177 |

+

"dna_sequence = \"ATGCGTACGTA\"\n",

|

| 178 |

+

"protein_sequence = \"MKQHKAMIVALIVLITAY\"\n",

|

| 179 |

+

"\n",

|

| 180 |

+

"print(\"Sliding window (DNA, size=3, step=1):\", sliding_window(dna_sequence, 3))\n",

|

| 181 |

+

"print(\"Sliding window (Protein, size=4, step=2):\", sliding_window(protein_sequence, 4, step=2))"

|

| 182 |

+

]

|

| 183 |

+

},

|

| 184 |

+

{

|

| 185 |

+

"cell_type": "markdown",

|

| 186 |

+

"id": "c33ab920-b451-4846-93d4-20da5a4e1001",

|

| 187 |

+

"metadata": {},

|

| 188 |

+

"source": [

|

| 189 |

+

"### 3. **词表分词和嵌入式表示**\n",

|

| 190 |

+

"\n",

|

| 191 |

+

"**定义**:使用预训练的嵌入模型(如 Word2Vec、BERT 等)来将每个 token 映射到高维向量空间中。对于生物序列,可以使用专门设计的嵌入模型,如 ProtTrans、ESM 等。\n",

|

| 192 |

+

"\n",

|

| 193 |

+

"**应用**:\n",

|

| 194 |

+

"- **DNA 和蛋白质序列**:嵌入模型可以捕捉到序列中的语义信息和上下文依赖关系。\n",

|

| 195 |

+

"\n",

|

| 196 |

+

"**优点**:\n",

|

| 197 |

+

"- 捕捉到丰富的语义信息,适合复杂的下游任务。\n",

|

| 198 |

+

"- 可以利用大规模预训练模型的优势。\n",

|

| 199 |

+

"\n",

|

| 200 |

+

"**缺点**:\n",

|

| 201 |

+

"- 需要大量的计算资源来进行预训练。\n",

|

| 202 |

+

"- 模型复杂度较高,解释性较差。"

|

| 203 |

+

]

|

| 204 |

+

},

|

| 205 |

+

{

|

| 206 |

+

"cell_type": "code",

|

| 207 |

+

"execution_count": 5,

|

| 208 |

+

"id": "02bf2af0-6077-4b27-8822-f1c3f22914fa",

|

| 209 |

+

"metadata": {},

|

| 210 |

+

"outputs": [],

|

| 211 |

+

"source": [

|

| 212 |

+

"import subprocess\n",

|

| 213 |

+

"import os\n",

|

| 214 |

+

"# 设置环境变量, autodl一般区域\n",

|

| 215 |

+

"result = subprocess.run('bash -c \"source /etc/network_turbo && env | grep proxy\"', shell=True, capture_output=True, text=True)\n",

|

| 216 |

+

"output = result.stdout\n",

|

| 217 |

+

"for line in output.splitlines():\n",

|

| 218 |

+

" if '=' in line:\n",

|

| 219 |

+

" var, value = line.split('=', 1)\n",

|

| 220 |

+

" os.environ[var] = value\n",

|

| 221 |

+

"\n",

|

| 222 |

+

"\"\"\"\n",

|

| 223 |

+

"import os\n",

|

| 224 |

+

"\n",

|

| 225 |

+

"# 设置环境变量, autodl专区 其他idc\n",

|

| 226 |

+

"os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'\n",

|

| 227 |

+

"\n",

|

| 228 |

+

"# 打印环境变量以确认设置成功\n",

|

| 229 |

+

"print(os.environ.get('HF_ENDPOINT'))\n",

|

| 230 |

+

"\"\"\""

|

| 231 |

+

]

|

| 232 |

+

},

|

| 233 |

+

{

|

| 234 |

+

"cell_type": "code",

|

| 235 |

+

"execution_count": 15,

|

| 236 |

+

"id": "d43b60ee-67f2-4d06-95ea-966c01084fc4",

|

| 237 |

+

"metadata": {

|

| 238 |

+

"scrolled": true

|

| 239 |

+

},

|

| 240 |

+

"outputs": [

|

| 241 |

+

{

|

| 242 |

+

"name": "stderr",

|

| 243 |

+

"output_type": "stream",

|

| 244 |

+

"text": [

|

| 245 |

+

"huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...\n",

|

| 246 |

+

"To disable this warning, you can either:\n",

|

| 247 |

+

"\t- Avoid using `tokenizers` before the fork if possible\n",

|

| 248 |

+

"\t- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)\n"

|

| 249 |

+

]

|

| 250 |

+

},

|

| 251 |

+

{

|

| 252 |

+

"name": "stdout",

|

| 253 |

+

"output_type": "stream",

|

| 254 |

+

"text": [

|

| 255 |

+

"['ATGCG', 'TACG', 'T', 'A']\n",

|

| 256 |

+

"Embeddings shape: torch.Size([1, 4, 768])\n"

|

| 257 |

+

]

|

| 258 |

+

}

|

| 259 |

+

],

|

| 260 |

+

"source": [

|

| 261 |

+

"from transformers import AutoTokenizer, AutoModel\n",

|

| 262 |

+

"import torch\n",

|

| 263 |

+

"\n",

|

| 264 |

+

"# 加载预训练的蛋白质嵌入模型\n",

|

| 265 |

+

"tokenizer = AutoTokenizer.from_pretrained(\"dnagpt/gpt_dna_v0\")\n",

|

| 266 |

+

"model = AutoModel.from_pretrained(\"dnagpt/gpt_dna_v0\")\n",

|

| 267 |

+

"\n",

|

| 268 |

+

"dna_sequence = \"ATGCGTACGTA\"\n",

|

| 269 |

+

"print(tokenizer.tokenize(dna_sequence))\n",

|

| 270 |

+

"\n",

|

| 271 |

+

"# 编码序列\n",

|

| 272 |

+

"inputs = tokenizer(dna_sequence, return_tensors=\"pt\")\n",

|

| 273 |

+

"\n",

|

| 274 |

+

"# 获取嵌入\n",

|

| 275 |

+

"with torch.no_grad():\n",

|

| 276 |

+

" outputs = model(**inputs)\n",

|

| 277 |

+

" embeddings = outputs.last_hidden_state\n",

|

| 278 |

+

"\n",

|

| 279 |

+

"print(\"Embeddings shape:\", embeddings.shape)"

|

| 280 |

+

]

|

| 281 |

+

},

|

| 282 |

+

{

|

| 283 |

+

"cell_type": "markdown",

|

| 284 |

+

"id": "c24f10dc-1117-4493-9333-5ed6d898f44a",

|

| 285 |

+

"metadata": {},

|

| 286 |

+

"source": [

|

| 287 |

+

"### 训练DNA BPE分词器\n",

|

| 288 |

+

"\n",

|

| 289 |

+

"以上方法展示了如何对 DNA 和蛋白质序列进行“分词”,以提取有用的特征。选择哪种方法取决于具体的任务需求和数据特性。对于简单的分类或回归任务,K-mer 分解或滑动窗口可能是足够的;而对于更复杂的任务,如序列标注或结构预测,基于词汇表的方法或嵌入表示可能会提供更好的性能。\n",

|

| 290 |

+

"\n",

|

| 291 |

+

"目前大部分生物序列大模型的论文中,使用最多的依然是传统的K-mer,但一些SOTA的论文则以BEP为主。而BEP分词也是目前GPT、llama等主流自然语言大模型使用的基础分词器。\n",

|

| 292 |

+

"\n",

|

| 293 |

+

"因此,我们也演示下从头训练一个DNA BPE分词器的方法。\n",

|

| 294 |

+

"\n",

|

| 295 |

+

"我们首先看下GPT2模型,默认的分词器,对DNA序列分词的结果:"

|

| 296 |

+

]

|

| 297 |

+

},

|

| 298 |

+

{

|

| 299 |

+

"cell_type": "code",

|

| 300 |

+

"execution_count": 10,

|

| 301 |

+

"id": "43f1eb8b-1cc2-4ab5-aa8e-2a63132be98c",

|

| 302 |

+

"metadata": {},

|

| 303 |

+

"outputs": [],

|

| 304 |

+

"source": [

|

| 305 |

+

"from tokenizers import (\n",

|

| 306 |

+

" decoders,\n",

|

| 307 |

+

" models,\n",

|

| 308 |

+

" normalizers,\n",

|

| 309 |

+

" pre_tokenizers,\n",

|

| 310 |

+

" processors,\n",

|

| 311 |

+

" trainers,\n",

|

| 312 |

+

" Tokenizer,\n",

|

| 313 |

+

")\n",

|

| 314 |

+

"from transformers import AutoTokenizer"

|

| 315 |

+

]

|

| 316 |

+

},

|

| 317 |

+

{

|

| 318 |

+

"cell_type": "code",

|

| 319 |

+

"execution_count": 15,

|

| 320 |

+

"id": "27e88f7b-1399-418b-9b91-f970762fac0c",

|

| 321 |

+

"metadata": {},

|

| 322 |

+

"outputs": [],

|

| 323 |

+

"source": [

|

| 324 |

+

"gpt2_tokenizer = AutoTokenizer.from_pretrained('gpt2')\n",

|

| 325 |

+

"gpt2_tokenizer.pad_token = gpt2_tokenizer.eos_token"

|

| 326 |

+

]

|

| 327 |

+

},

|

| 328 |

+

{

|

| 329 |

+

"cell_type": "code",

|

| 330 |

+

"execution_count": 16,

|

| 331 |

+

"id": "4b015db7-63ba-4909-b02f-07634b3d5584",

|

| 332 |

+

"metadata": {},

|

| 333 |

+

"outputs": [

|

| 334 |

+

{

|

| 335 |

+

"data": {

|

| 336 |

+

"text/plain": [

|

| 337 |

+

"['T', 'GG', 'C', 'GT', 'GA', 'AC', 'CC', 'GG', 'G', 'AT', 'C', 'GG', 'G']"

|

| 338 |

+

]

|

| 339 |

+

},

|

| 340 |

+

"execution_count": 16,

|

| 341 |

+

"metadata": {},

|

| 342 |

+

"output_type": "execute_result"

|

| 343 |

+

}

|

| 344 |

+

],

|

| 345 |

+

"source": [

|

| 346 |

+

"gpt2_tokenizer.tokenize(\"TGGCGTGAACCCGGGATCGGG\")"

|

| 347 |

+

]

|

| 348 |

+

},

|

| 349 |

+

{

|

| 350 |

+

"cell_type": "markdown",

|

| 351 |

+

"id": "a246fbc9-9e29-4b63-bdf7-f80635d06d1e",

|

| 352 |

+

"metadata": {},

|

| 353 |

+

"source": [

|

| 354 |

+

"可以看到,gpt2模型因为是以英文为主的BPE分词模型,分解的都是1到2个字母的结果,这样显然很难充分表达生物语义,因此,我们使用DNA序列来训练1个BPE分词器,代码也非常简单:"

|

| 355 |

+

]

|

| 356 |

+

},

|

| 357 |

+

{

|

| 358 |

+

"cell_type": "code",

|

| 359 |

+

"execution_count": 2,

|

| 360 |

+

"id": "8357a695-1c29-4b5c-8099-d2e337189410",

|

| 361 |

+

"metadata": {},

|

| 362 |

+

"outputs": [],

|

| 363 |

+

"source": [

|

| 364 |

+

"tokenizer = Tokenizer(models.BPE())\n",

|

| 365 |

+

"tokenizer.pre_tokenizer = pre_tokenizers.ByteLevel(add_prefix_space=False, use_regex=False) #use_regex=False,空格当成一般字符串\n",

|

| 366 |

+

"trainer = trainers.BpeTrainer(vocab_size=30000, special_tokens=[\"<|endoftext|>\"]) #3w words"

|

| 367 |

+

]

|

| 368 |

+

},

|

| 369 |

+

{

|

| 370 |

+

"cell_type": "code",

|

| 371 |

+

"execution_count": 3,

|

| 372 |

+

"id": "32c95888-1498-45cf-8453-421219cc7d45",

|

| 373 |

+

"metadata": {},

|

| 374 |

+

"outputs": [

|

| 375 |

+

{

|

| 376 |

+

"name": "stdout",

|

| 377 |

+

"output_type": "stream",

|

| 378 |

+

"text": [

|

| 379 |

+

"\n",

|

| 380 |

+

"\n",

|

| 381 |

+

"\n"

|

| 382 |

+

]

|

| 383 |

+

}

|

| 384 |

+

],

|

| 385 |

+

"source": [

|

| 386 |

+

"tokenizer.train([\"../01-data_env/data/dna_1g.txt\"], trainer=trainer) #all file list, take 10-20 min"

|

| 387 |

+

]

|

| 388 |

+

},

|

| 389 |

+

{

|

| 390 |

+

"cell_type": "code",

|

| 391 |

+

"execution_count": 4,

|

| 392 |

+

"id": "5ffdd717-72ed-4a37-bafc-b4a0f61f8ff1",

|

| 393 |

+

"metadata": {},

|

| 394 |

+

"outputs": [

|

| 395 |

+

{

|

| 396 |

+

"name": "stdout",

|

| 397 |

+

"output_type": "stream",

|

| 398 |

+

"text": [

|

| 399 |

+

"['TG', 'GCGTGAA', 'CCCGG', 'GATCGG', 'G']\n"

|

| 400 |

+

]

|

| 401 |

+

}

|

| 402 |

+

],

|

| 403 |

+

"source": [

|

| 404 |

+

"encoding = tokenizer.encode(\"TGGCGTGAACCCGGGATCGGG\")\n",

|

| 405 |

+

"print(encoding.tokens)"

|

| 406 |

+

]

|

| 407 |

+

},

|

| 408 |

+

{

|

| 409 |

+

"cell_type": "markdown",

|

| 410 |

+

"id": "a96e7838-6c23-4446-bf86-b098cd93214a",

|

| 411 |

+

"metadata": {},

|

| 412 |

+

"source": [

|

| 413 |

+

"可以看到,以DNA数据训练的分词器,分词效果明显要好的多,各种长度的词都有。"

|

| 414 |

+

]

|

| 415 |

+

},

|

| 416 |

+

{

|

| 417 |

+

"cell_type": "code",

|

| 418 |

+

"execution_count": 5,

|

| 419 |

+

"id": "f1d757c1-702b-4147-9207-471f422f67b2",

|

| 420 |

+

"metadata": {},

|

| 421 |

+

"outputs": [],

|

| 422 |

+

"source": [

|

| 423 |

+

"tokenizer.save(\"dna_bpe_dict.json\")"

|

| 424 |

+

]

|

| 425 |

+

},

|

| 426 |

+

{

|

| 427 |

+

"cell_type": "code",

|

| 428 |

+

"execution_count": 6,

|

| 429 |

+

"id": "caf8ecea-359e-487b-b456-fab546b9da0d",

|

| 430 |

+

"metadata": {},

|

| 431 |

+

"outputs": [],

|

| 432 |

+

"source": [

|

| 433 |

+

"#然后我们可以使用from_file() 方法从该文件里重新加载 Tokenizer 对象:\n",

|

| 434 |

+

"new_tokenizer = Tokenizer.from_file(\"dna_bpe_dict.json\")"

|

| 435 |

+

]

|

| 436 |

+

},

|

| 437 |

+

{

|

| 438 |

+

"cell_type": "code",

|

| 439 |

+

"execution_count": 7,

|

| 440 |

+

"id": "8ec6f045-bc30-4012-8027-a879df8def3a",

|

| 441 |

+

"metadata": {},

|

| 442 |

+

"outputs": [

|

| 443 |

+

{

|

| 444 |

+

"data": {

|

| 445 |

+

"text/plain": [

|

| 446 |

+

"('dna_bpe_dict/tokenizer_config.json',\n",

|

| 447 |

+

" 'dna_bpe_dict/special_tokens_map.json',\n",

|

| 448 |

+

" 'dna_bpe_dict/vocab.json',\n",

|

| 449 |

+

" 'dna_bpe_dict/merges.txt',\n",

|

| 450 |

+

" 'dna_bpe_dict/added_tokens.json',\n",

|

| 451 |

+

" 'dna_bpe_dict/tokenizer.json')"

|

| 452 |

+

]

|

| 453 |

+

},

|

| 454 |

+

"execution_count": 7,

|

| 455 |

+

"metadata": {},

|

| 456 |

+

"output_type": "execute_result"

|

| 457 |

+

}

|

| 458 |

+

],

|

| 459 |

+

"source": [

|

| 460 |

+

"#要在 🤗 Transformers 中使用这个标记器,我们必须将它包裹在一个 PreTrainedTokenizerFast 类中\n",

|

| 461 |

+

"from transformers import GPT2TokenizerFast\n",

|

| 462 |

+

"dna_tokenizer = GPT2TokenizerFast(tokenizer_object=new_tokenizer)\n",

|

| 463 |

+

"dna_tokenizer.save_pretrained(\"dna_bpe_dict\")\n",

|

| 464 |

+

"#dna_tokenizer.push_to_hub(\"dna_bpe_dict_1g\", organization=\"dnagpt\", use_auth_token=\"hf_*****\") # push to huggingface"

|

| 465 |

+

]

|

| 466 |

+

},

|

| 467 |

+

{

|

| 468 |

+

"cell_type": "code",

|

| 469 |

+

"execution_count": 11,

|

| 470 |

+

"id": "f84506d8-6208-4027-aad7-2b68a1bc16d6",

|

| 471 |

+

"metadata": {},

|

| 472 |

+

"outputs": [],

|

| 473 |

+

"source": [

|

| 474 |

+

"tokenizer_new = AutoTokenizer.from_pretrained('dna_bpe_dict')"

|

| 475 |

+

]

|

| 476 |

+

},

|

| 477 |

+

{

|

| 478 |

+

"cell_type": "code",

|

| 479 |

+

"execution_count": 12,

|

| 480 |

+

"id": "d40d4d53-6fed-445c-afb5-c0346ab854c8",

|

| 481 |

+

"metadata": {},

|

| 482 |

+

"outputs": [

|

| 483 |

+

{

|

| 484 |

+

"data": {

|

| 485 |

+

"text/plain": [

|

| 486 |

+

"['TG', 'GCGTGAA', 'CCCGG', 'GATCGG', 'G']"

|

| 487 |

+

]

|

| 488 |

+

},

|

| 489 |

+

"execution_count": 12,

|

| 490 |

+

"metadata": {},

|

| 491 |

+

"output_type": "execute_result"

|

| 492 |

+

}

|

| 493 |

+

],

|

| 494 |

+

"source": [

|

| 495 |

+

"tokenizer_new.tokenize(\"TGGCGTGAACCCGGGATCGGG\")"

|

| 496 |

+

]

|

| 497 |

+

},

|

| 498 |

+

{

|

| 499 |

+

"cell_type": "code",

|

| 500 |

+

"execution_count": null,

|

| 501 |

+

"id": "640302f6-f740-41a4-ae92-ca4c43d97493",

|

| 502 |

+

"metadata": {},

|

| 503 |

+

"outputs": [],

|

| 504 |

+

"source": []

|

| 505 |

+

}

|

| 506 |

+

],

|

| 507 |

+

"metadata": {

|

| 508 |

+

"kernelspec": {

|

| 509 |

+

"display_name": "Python 3 (ipykernel)",

|

| 510 |

+

"language": "python",

|

| 511 |

+

"name": "python3"

|

| 512 |

+

},

|

| 513 |

+

"language_info": {

|

| 514 |

+

"codemirror_mode": {

|

| 515 |

+

"name": "ipython",

|

| 516 |

+

"version": 3

|

| 517 |

+

},

|

| 518 |

+

"file_extension": ".py",

|

| 519 |

+

"mimetype": "text/x-python",

|

| 520 |

+

"name": "python",

|

| 521 |

+

"nbconvert_exporter": "python",

|

| 522 |

+

"pygments_lexer": "ipython3",

|

| 523 |

+

"version": "3.12.3"

|

| 524 |

+

}

|

| 525 |

+

},

|

| 526 |

+

"nbformat": 4,

|

| 527 |

+

"nbformat_minor": 5

|

| 528 |

+

}

|

02-gpt2_bert/.ipynb_checkpoints/2-dna-gpt-checkpoint.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

02-gpt2_bert/.ipynb_checkpoints/3-dna-bert-checkpoint.ipynb

ADDED

|

@@ -0,0 +1,253 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"id": "a3ec4b86-2029-4d50-9bbf-64b208249165",

|

| 7 |

+

"metadata": {},

|

| 8 |

+

"outputs": [],

|

| 9 |

+

"source": [

|

| 10 |

+

"from tokenizers import Tokenizer\n",

|

| 11 |

+

"from tokenizers.models import WordPiece\n",

|

| 12 |

+

"from tokenizers.trainers import WordPieceTrainer\n",

|

| 13 |

+

"from tokenizers.pre_tokenizers import Whitespace"

|

| 14 |

+

]

|

| 15 |

+

},

|

| 16 |

+

{

|

| 17 |

+

"cell_type": "code",

|

| 18 |

+

"execution_count": null,

|

| 19 |

+

"id": "47b3fc92-df22-4e4b-bdf9-671bda924c49",

|

| 20 |

+

"metadata": {},

|

| 21 |

+

"outputs": [],

|

| 22 |

+

"source": [

|

| 23 |

+

"# 初始化一个空的 WordPiece 模型\n",

|

| 24 |

+

"tokenizer = Tokenizer(WordPiece(unk_token=\"[UNK]\"))"

|

| 25 |

+

]

|

| 26 |

+

},

|

| 27 |

+

{

|

| 28 |

+

"cell_type": "code",

|

| 29 |

+

"execution_count": null,

|

| 30 |

+

"id": "73f59aa6-8cce-4124-a3ee-7a5617b91ea7",

|

| 31 |

+

"metadata": {},

|

| 32 |

+

"outputs": [],

|

| 33 |

+

"source": [

|

| 34 |

+

"# 设置训练参数\n",

|

| 35 |

+

"trainer = WordPieceTrainer(\n",

|

| 36 |

+

" vocab_size=90000, # 词汇表大小\n",

|

| 37 |

+

" min_frequency=2, # 最小词频\n",

|

| 38 |

+

" special_tokens=[\n",

|

| 39 |

+

" \"[PAD]\", \"[UNK]\", \"[CLS]\", \"[SEP]\", \"[MASK]\"\n",

|

| 40 |

+

" ]\n",

|

| 41 |

+

")\n",

|

| 42 |

+

"\n",

|

| 43 |

+

"tokenizer.train(files=[\"../01-data_env/data/dna_1g.txt\"], trainer=trainer)"

|

| 44 |

+

]

|

| 45 |

+

},

|

| 46 |

+

{

|

| 47 |

+

"cell_type": "code",

|

| 48 |

+

"execution_count": null,

|

| 49 |

+

"id": "7a0ccd64-5172-4f40-9868-cdf02687ae10",

|

| 50 |

+

"metadata": {},

|

| 51 |

+

"outputs": [],

|

| 52 |

+

"source": [

|

| 53 |

+

"tokenizer.save(\"dna_wordpiece_dict.json\")"

|

| 54 |

+

]

|

| 55 |

+

},

|

| 56 |

+

{

|

| 57 |

+

"cell_type": "markdown",

|

| 58 |

+

"id": "eea3c48a-2245-478e-a2ce-f5d1af399d83",

|

| 59 |

+

"metadata": {},

|

| 60 |

+

"source": [

|

| 61 |

+

"## GPT2和bert配置的关键区别\n",

|

| 62 |

+

"* 最大长度:\n",

|

| 63 |

+

"在 GPT-2 中,n_ctx 参数指定了模型的最大上下文窗口大小。\n",

|

| 64 |

+

"在 BERT 中,你应该设置 max_position_embeddings 来指定最大位置嵌入数,这限制了输入序列的最大长度。\n",

|

| 65 |

+

"* 特殊 token ID:\n",

|

| 66 |

+

"GPT-2 使用 bos_token_id 和 eos_token_id 分别表示句子的开始和结束。\n",

|

| 67 |

+

"BERT 使用 [CLS] (cls_token_id) 表示句子的开始,用 [SEP] (sep_token_id) 表示句子的结束。BERT 还有专门的填充 token [PAD] (pad_token_id)。\n",

|

| 68 |

+

"* 模型类选择:\n",

|

| 69 |

+

"对于 GPT-2,你使用了 GPT2LMHeadModel,它适合生成任务或语言建模。\n",

|

| 70 |

+

"对于 BERT,如果你打算进行预训练(例如 Masked Language Modeling),应该使用 BertForMaskedLM。\n",

|

| 71 |

+

"* 预训练权重:\n",

|

| 72 |

+

"如果你想从头开始训练,像上面的例子中那样直接从配置创建模型即可。\n",

|

| 73 |

+

"如果你希望基于现有预训练模型微调,则可以使用 from_pretrained 方法加载预训练权重。"

|

| 74 |

+

]

|

| 75 |

+

},

|

| 76 |

+

{

|

| 77 |

+

"cell_type": "code",

|

| 78 |

+

"execution_count": null,

|

| 79 |

+

"id": "48e1f20b-cd1a-49fa-be2b-aba30a24e706",

|

| 80 |

+

"metadata": {},

|

| 81 |

+

"outputs": [],

|

| 82 |

+

"source": [

|

| 83 |

+

"new_tokenizer = Tokenizer.from_file(\"dna_wordpiece_dict.json\")\n",

|

| 84 |

+

"\n",

|

| 85 |

+

"wrapped_tokenizer = PreTrainedTokenizerFast(\n",

|

| 86 |

+

" tokenizer_object=new_tokenizer,\n",

|

| 87 |

+

" unk_token=\"[UNK]\",\n",

|

| 88 |

+

" pad_token=\"[PAD]\",\n",

|

| 89 |

+

" cls_token=\"[CLS]\",\n",

|

| 90 |

+

" sep_token=\"[SEP]\",\n",

|

| 91 |

+

" mask_token=\"[MASK]\",\n",

|

| 92 |

+

")\n",

|

| 93 |

+

"wrapped_tokenizer.save_pretrained(\"dna_wordpiece_dict\")"

|

| 94 |

+

]

|

| 95 |

+

},

|

| 96 |

+

{

|

| 97 |

+

"cell_type": "code",

|

| 98 |

+

"execution_count": null,

|

| 99 |

+

"id": "c94dc601-86ec-421c-8638-c8d8b5078682",

|

| 100 |

+

"metadata": {},

|

| 101 |

+

"outputs": [],

|

| 102 |

+

"source": [

|

| 103 |

+

"from transformers import AutoTokenizer, GPT2LMHeadModel, AutoConfig,GPT2Tokenizer\n",

|

| 104 |

+

"from transformers import GPT2Tokenizer,GPT2Model,AutoModel\n",

|

| 105 |

+

"from transformers import DataCollatorForLanguageModeling\n",

|

| 106 |

+

"from transformers import Trainer, TrainingArguments\n",

|

| 107 |

+

"from transformers import LineByLineTextDataset\n",

|

| 108 |

+

"from tokenizers import Tokenizer\n",

|

| 109 |

+

"from datasets import load_dataset\n",

|

| 110 |

+

"from transformers import BertConfig, BertModel"

|

| 111 |

+

]

|

| 112 |

+

},

|

| 113 |

+

{

|

| 114 |

+

"cell_type": "code",

|

| 115 |

+

"execution_count": null,

|

| 116 |

+

"id": "b2658cd2-0ac5-483e-b04d-2716993770e3",

|

| 117 |

+

"metadata": {},

|

| 118 |

+

"outputs": [],