NOTICE:

This model may be used for commercial purposes. If you wish to use it commercially, please get in touch through Contact us. After a brief consultation, we will approve your commercial use.

Try DNA-powered Mnemos Assistant! Beta Open →

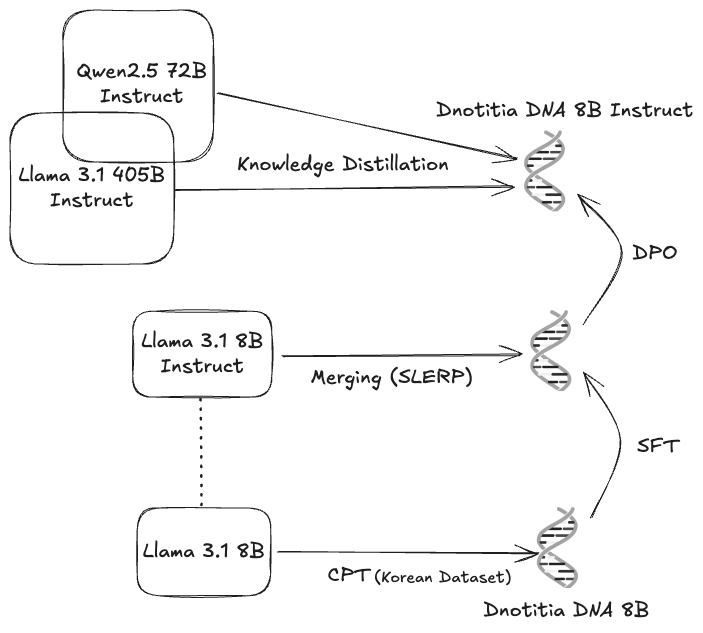

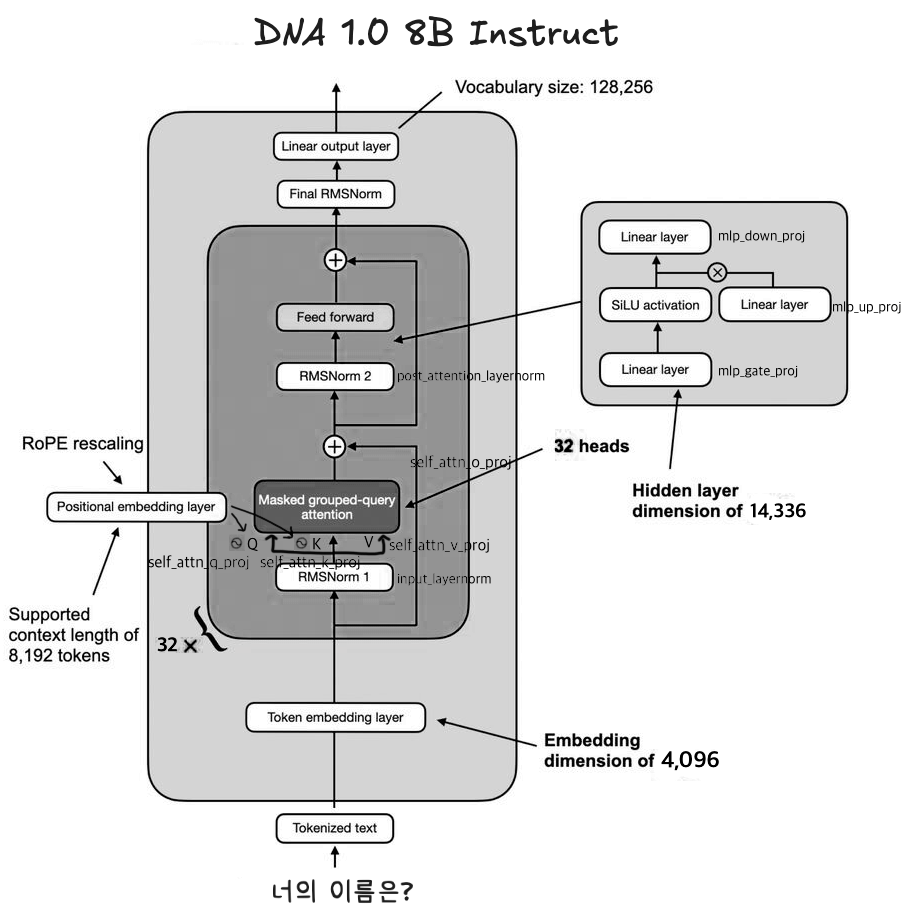

- DNA 1.0 8B Instruct model architecture [1]:

[1]:

## Citation

If you use or discuss this model in your academic research, please cite the project to help spread awareness:

```
@misc{lee2025dna10technicalreport,
      title={DNA 1.0 Technical Report},
      author={Jungyup Lee and Jemin Kim and Sang Park and SeungJae Lee},
      year={2025},
      eprint={2501.10648},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2501.10648},
}
```
