Commit b331cd3

Parent(s): 00adddb

added more details to model

- README.md +42 -180

- assets/arabic-nano-gpt-v0-eval-loss.png +0 -0

- assets/arabic-nano-gpt-v0-train-loss.png +0 -0

README.md

CHANGED

|

@@ -3,41 +3,61 @@ library_name: transformers

|

|

| 3 |

license: mit

|

| 4 |

base_model: openai-community/gpt2

|

| 5 |

tags:

|

| 6 |

-

- generated_from_trainer

|

| 7 |

model-index:

|

| 8 |

-

- name: arabic-nano-gpt

|

| 9 |

-

|

| 10 |

datasets:

|

| 11 |

-

- wikimedia/wikipedia

|

| 12 |

language:

|

| 13 |

-

- ar

|

| 14 |

---

|

| 15 |

|

| 16 |

-

|

| 17 |

# arabic-nano-gpt

|

| 18 |

|

| 19 |

-

This model is a fine-tuned version of [openai-community/gpt2](https://huggingface.co/openai-community/gpt2) on

|

| 20 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 21 |

- Loss: 3.28796

|

| 22 |

|

|

|

|

| 23 |

|

| 24 |

-

|

|

|

|

|

|

|

| 25 |

|

| 26 |

-

|

|

|

|

| 27 |

|

| 28 |

-

## Intended uses & limitations

|

| 29 |

|

| 30 |

-

|

| 31 |

|

| 32 |

-

|

|

|

|

|

|

|

| 33 |

|

| 34 |

-

|

| 35 |

|

| 36 |

-

|

|

|

|

|

|

|

|

|

|

| 37 |

|

| 38 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 39 |

|

| 40 |

The following hyperparameters were used during training:

|

|

|

|

| 41 |

- learning_rate: 0.001

|

| 42 |

- train_batch_size: 64

|

| 43 |

- eval_batch_size: 64

|

|

@@ -49,175 +69,17 @@ The following hyperparameters were used during training:

|

|

| 49 |

- lr_scheduler_warmup_ratio: 0.01

|

| 50 |

- num_epochs: 24

|

| 51 |

|

| 52 |

-

|

| 53 |

-

|

| 54 |

-

<!-- | Training Loss | Epoch | Step | Validation Loss |

|

| 55 |

-

|:-------------:|:------:|:------:|:---------------:|

|

| 56 |

-

| 5.62 | 0.0585 | 1000 | 5.3754 |

|

| 57 |

-

| 4.6527 | 0.1170 | 2000 | 4.4918 |

|

| 58 |

-

| 4.2818 | 0.1755 | 3000 | 4.1137 |

|

| 59 |

-

| 4.1289 | 0.2340 | 4000 | 3.9388 |

|

| 60 |

-

| 4.0021 | 0.2924 | 5000 | 3.8274 |

|

| 61 |

-

| 3.9301 | 0.3509 | 6000 | 3.7534 |

|

| 62 |

-

| 3.8822 | 0.4094 | 7000 | 3.6986 |

|

| 63 |

-

| 3.8375 | 0.4679 | 8000 | 3.6557 |

|

| 64 |

-

| 3.7918 | 0.5264 | 9000 | 3.6266 |

|

| 65 |

-

| 3.7723 | 0.5849 | 10000 | 3.5994 |

|

| 66 |

-

| 3.7549 | 0.6434 | 11000 | 3.5787 |

|

| 67 |

-

| 3.7324 | 0.7019 | 12000 | 3.5612 |

|

| 68 |

-

| 3.7249 | 0.7604 | 13000 | 3.5436 |

|

| 69 |

-

| 3.6989 | 0.8188 | 14000 | 3.5323 |

|

| 70 |

-

| 3.7003 | 0.8773 | 15000 | 3.5169 |

|

| 71 |

-

| 3.6919 | 0.9358 | 16000 | 3.5055 |

|

| 72 |

-

| 3.6717 | 0.9943 | 17000 | 3.4966 |

|

| 73 |

-

| 3.6612 | 1.0528 | 18000 | 3.4868 |

|

| 74 |

-

| 3.6467 | 1.1113 | 19000 | 3.4787 |

|

| 75 |

-

| 3.6497 | 1.1698 | 20000 | 3.4707 |

|

| 76 |

-

| 3.6193 | 1.2283 | 21000 | 3.4639 |

|

| 77 |

-

| 3.6302 | 1.2868 | 22000 | 3.4572 |

|

| 78 |

-

| 3.6225 | 1.3452 | 23000 | 3.4516 |

|

| 79 |

-

| 3.635 | 1.4037 | 24000 | 3.4458 |

|

| 80 |

-

| 3.6115 | 1.4622 | 25000 | 3.4416 |

|

| 81 |

-

| 3.6162 | 1.5207 | 26000 | 3.4348 |

|

| 82 |

-

| 3.6142 | 1.5792 | 27000 | 3.4329 |

|

| 83 |

-

| 3.5956 | 1.6377 | 28000 | 3.4293 |

|

| 84 |

-

| 3.5885 | 1.6962 | 29000 | 3.4226 |

|

| 85 |

-

| 3.603 | 1.7547 | 30000 | 3.4195 |

|

| 86 |

-

| 3.5947 | 1.8132 | 31000 | 3.4142 |

|

| 87 |

-

| 3.588 | 1.8716 | 32000 | 3.4113 |

|

| 88 |

-

| 3.5803 | 1.9301 | 33000 | 3.4065 |

|

| 89 |

-

| 3.5891 | 1.9886 | 34000 | 3.4044 |

|

| 90 |

-

| 3.5801 | 2.0471 | 35000 | 3.4032 |

|

| 91 |

-

| 3.5739 | 2.1056 | 36000 | 3.3988 |

|

| 92 |

-

| 3.5661 | 2.1641 | 37000 | 3.3981 |

|

| 93 |

-

| 3.5657 | 2.2226 | 38000 | 3.3934 |

|

| 94 |

-

| 3.5727 | 2.2811 | 39000 | 3.3907 |

|

| 95 |

-

| 3.5617 | 2.3396 | 40000 | 3.3885 |

|

| 96 |

-

| 3.5579 | 2.3980 | 41000 | 3.3855 |

|

| 97 |

-

| 3.5553 | 2.4565 | 42000 | 3.3816 |

|

| 98 |

-

| 3.5647 | 2.5150 | 43000 | 3.3803 |

|

| 99 |

-

| 3.5531 | 2.5735 | 44000 | 3.3799 |

|

| 100 |

-

| 3.5494 | 2.6320 | 45000 | 3.3777 |

|

| 101 |

-

| 3.5525 | 2.6905 | 46000 | 3.3759 |

|

| 102 |

-

| 3.5487 | 2.7490 | 47000 | 3.3725 |

|

| 103 |

-

| 3.5551 | 2.8075 | 48000 | 3.3711 |

|

| 104 |

-

| 3.5511 | 2.8660 | 49000 | 3.3681 |

|

| 105 |

-

| 3.5463 | 2.9244 | 50000 | 3.3695 |

|

| 106 |

-

| 3.5419 | 2.9829 | 51000 | 3.3660 |

|

| 107 |

-

| 3.5414 | 3.0414 | 52000 | 3.3648 |

|

| 108 |

-

| 3.5388 | 3.0999 | 53000 | 3.3605 |

|

| 109 |

-

| 3.5333 | 3.1584 | 54000 | 3.3619 |

|

| 110 |

-

| 3.525 | 3.2169 | 55000 | 3.3588 |

|

| 111 |

-

| 3.5361 | 3.2754 | 56000 | 3.3572 |

|

| 112 |

-

| 3.5302 | 3.3339 | 57000 | 3.3540 |

|

| 113 |

-

| 3.5355 | 3.3924 | 58000 | 3.3553 |

|

| 114 |

-

| 3.5391 | 3.4508 | 59000 | 3.3504 |

|

| 115 |

-

| 3.531 | 3.5093 | 60000 | 3.3495 |

|

| 116 |

-

| 3.5293 | 3.5678 | 61000 | 3.3483 |

|

| 117 |

-

| 3.5269 | 3.6263 | 62000 | 3.3489 |

|

| 118 |

-

| 3.5181 | 3.6848 | 63000 | 3.3494 |

|

| 119 |

-

| 3.5205 | 3.7433 | 64000 | 3.3480 |

|

| 120 |

-

| 3.5237 | 3.8018 | 65000 | 3.3440 |

|

| 121 |

-

| 3.5316 | 3.8603 | 66000 | 3.3417 |

|

| 122 |

-

| 3.5222 | 3.9188 | 67000 | 3.3433 |

|

| 123 |

-

| 3.5174 | 3.9772 | 68000 | 3.3418 |

|

| 124 |

-

| 3.518 | 4.0357 | 69000 | 3.3414 |

|

| 125 |

-

| 3.5036 | 4.0942 | 70000 | 3.3365 |

|

| 126 |

-

| 3.5101 | 4.1527 | 71000 | 3.3367 |

|

| 127 |

-

| 3.5145 | 4.2112 | 72000 | 3.3361 |

|

| 128 |

-

| 3.5053 | 4.2697 | 73000 | 3.3355 |

|

| 129 |

-

| 3.5153 | 4.3282 | 74000 | 3.3334 |

|

| 130 |

-

| 3.5003 | 4.3867 | 75000 | 3.3334 |

|

| 131 |

-

| 3.5001 | 4.4452 | 76000 | 3.3326 |

|

| 132 |

-

| 3.5114 | 4.5036 | 77000 | 3.3298 |

|

| 133 |

-

| 3.5108 | 4.5621 | 78000 | 3.3292 |

|

| 134 |

-

| 3.4985 | 4.6206 | 79000 | 3.3288 |

|

| 135 |

-

| 3.497 | 4.6791 | 80000 | 3.3303 |

|

| 136 |

-

| 3.4982 | 4.7376 | 81000 | 3.3291 |

|

| 137 |

-

| 3.5068 | 4.7961 | 82000 | 3.3272 |

|

| 138 |

-

| 3.4915 | 4.8546 | 83000 | 3.3244 |

|

| 139 |

-

| 3.5036 | 4.9131 | 84000 | 3.3214 |

|

| 140 |

-

| 3.5027 | 4.9716 | 85000 | 3.3214 |

|

| 141 |

-

| 3.5078 | 5.0300 | 86000 | 3.3225 |

|

| 142 |

-

| 3.5112 | 5.0885 | 87000 | 3.3243 |

|

| 143 |

-

| 3.5049 | 5.1470 | 88000 | 3.3216 |

|

| 144 |

-

| 3.4917 | 5.2055 | 89000 | 3.3192 |

|

| 145 |

-

| 3.4802 | 5.2640 | 90000 | 3.3188 |

|

| 146 |

-

| 3.4971 | 5.3225 | 91000 | 3.3201 |

|

| 147 |

-

| 3.4941 | 5.3810 | 92000 | 3.3175 |

|

| 148 |

-

| 3.4998 | 5.4395 | 93000 | 3.3179 |

|

| 149 |

-

| 3.5011 | 5.4980 | 94000 | 3.3164 |

|

| 150 |

-

| 3.4912 | 5.5564 | 95000 | 3.3180 |

|

| 151 |

-

| 3.4961 | 5.6149 | 96000 | 3.3168 |

|

| 152 |

-

| 3.4833 | 5.6734 | 97000 | 3.3148 |

|

| 153 |

-

| 3.498 | 5.7319 | 98000 | 3.3133 |

|

| 154 |

-

| 3.4892 | 5.7904 | 99000 | 3.3142 |

|

| 155 |

-

| 3.4967 | 5.8489 | 100000 | 3.3142 |

|

| 156 |

-

| 3.4847 | 5.9074 | 101000 | 3.3094 |

|

| 157 |

-

| 3.4899 | 5.9659 | 102000 | 3.3102 |

|

| 158 |

-

| 3.4774 | 6.0244 | 103000 | 3.3110 |

|

| 159 |

-

| 3.4854 | 6.0828 | 104000 | 3.3106 |

|

| 160 |

-

| 3.4873 | 6.1413 | 105000 | 3.3087 |

|

| 161 |

-

| 3.4869 | 6.1998 | 106000 | 3.3102 |

|

| 162 |

-

| 3.4833 | 6.2583 | 107000 | 3.3063 |

|

| 163 |

-

| 3.491 | 6.3168 | 108000 | 3.3082 |

|

| 164 |

-

| 3.4776 | 6.3753 | 109000 | 3.3075 |

|

| 165 |

-

| 3.4924 | 6.4338 | 110000 | 3.3068 |

|

| 166 |

-

| 3.4804 | 6.4923 | 111000 | 3.3050 |

|

| 167 |

-

| 3.4805 | 6.5508 | 112000 | 3.3041 |

|

| 168 |

-

| 3.4892 | 6.6093 | 113000 | 3.3031 |

|

| 169 |

-

| 3.4775 | 6.6677 | 114000 | 3.3032 |

|

| 170 |

-

| 3.481 | 6.7262 | 115000 | 3.3036 |

|

| 171 |

-

| 3.4782 | 6.7847 | 116000 | 3.3025 |

|

| 172 |

-

| 3.4804 | 6.8432 | 117000 | 3.3017 |

|

| 173 |

-

| 3.4841 | 6.9017 | 118000 | 3.2999 |

|

| 174 |

-

| 3.4784 | 6.9602 | 119000 | 3.3008 |

|

| 175 |

-

| 3.4821 | 7.0187 | 120000 | 3.3001 |

|

| 176 |

-

| 3.4671 | 7.0772 | 121000 | 3.3008 |

|

| 177 |

-

| 3.485 | 7.1357 | 122000 | 3.2976 |

|

| 178 |

-

| 3.4737 | 7.1941 | 123000 | 3.2985 |

|

| 179 |

-

| 3.4793 | 7.2526 | 124000 | 3.2979 |

|

| 180 |

-

| 3.4651 | 7.3111 | 125000 | 3.2968 |

|

| 181 |

-

| 3.4847 | 7.3696 | 126000 | 3.2974 |

|

| 182 |

-

| 3.474 | 7.4281 | 127000 | 3.2973 |

|

| 183 |

-

| 3.4769 | 7.4866 | 128000 | 3.2955 |

|

| 184 |

-

| 3.486 | 7.5451 | 129000 | 3.2953 |

|

| 185 |

-

| 3.4684 | 7.6036 | 130000 | 3.2944 |

|

| 186 |

-

| 3.4826 | 7.6621 | 131000 | 3.2949 |

|

| 187 |

-

| 3.4685 | 7.7205 | 132000 | 3.2944 |

|

| 188 |

-

| 3.4608 | 7.7790 | 133000 | 3.2931 |

|

| 189 |

-

| 3.4655 | 7.8375 | 134000 | 3.2953 |

|

| 190 |

-

| 3.4648 | 7.8960 | 135000 | 3.2928 |

|

| 191 |

-

| 3.4632 | 7.9545 | 136000 | 3.2936 |

|

| 192 |

-

| 3.4666 | 8.0130 | 137000 | 3.2902 |

|

| 193 |

-

| 3.4663 | 8.0715 | 138000 | 3.2939 |

|

| 194 |

-

| 3.4713 | 8.1300 | 139000 | 3.2904 |

|

| 195 |

-

| 3.4654 | 8.1885 | 140000 | 3.2917 |

|

| 196 |

-

| 3.466 | 8.2469 | 141000 | 3.2913 |

|

| 197 |

-

| 3.4724 | 8.3054 | 142000 | 3.2889 |

|

| 198 |

-

| 3.4695 | 8.3639 | 143000 | 3.2890 |

|

| 199 |

-

| 3.4729 | 8.4224 | 144000 | 3.2876 |

|

| 200 |

-

| 3.4551 | 8.4809 | 145000 | 3.2898 |

|

| 201 |

-

| 3.4652 | 8.5394 | 146000 | 3.2885 |

|

| 202 |

-

| 3.4689 | 8.5979 | 147000 | 3.2854 |

|

| 203 |

-

| 3.4647 | 8.6564 | 148000 | 3.2857 |

|

| 204 |

-

| 3.4653 | 8.7149 | 149000 | 3.2857 |

|

| 205 |

-

| 3.4552 | 8.7733 | 150000 | 3.2861 |

|

| 206 |

-

| 3.47 | 8.8318 | 151000 | 3.2868 |

|

| 207 |

-

| 3.4627 | 8.8903 | 152000 | 3.2854 | -->

|

| 208 |

-

|

| 209 |

-

### Training Loss

|

| 210 |

-

|

| 211 |

-

|

| 212 |

|

| 213 |

-

|

| 214 |

|

| 215 |

-

|

| 216 |

|

|

|

|

| 217 |

|

| 218 |

-

|

| 219 |

|

| 220 |

- Transformers 4.45.2

|

| 221 |

- Pytorch 2.5.0

|

| 222 |

- Datasets 3.0.1

|

| 223 |

-

- Tokenizers 0.20.1

|

|

|

|

| 3 |

license: mit

|

| 4 |

base_model: openai-community/gpt2

|

| 5 |

tags:

|

| 6 |

+

- generated_from_trainer

|

| 7 |

model-index:

|

| 8 |

+

- name: arabic-nano-gpt

|

| 9 |

+

results: []

|

| 10 |

datasets:

|

| 11 |

+

- wikimedia/wikipedia

|

| 12 |

language:

|

| 13 |

+

- ar

|

| 14 |

---

|

| 15 |

|

|

|

|

| 16 |

# arabic-nano-gpt

|

| 17 |

|

| 18 |

+

This model is a fine-tuned version of [openai-community/gpt2](https://huggingface.co/openai-community/gpt2) on the Arabic [wikimedia/wikipedia](https://huggingface.co/datasets/wikimedia/wikipedia) dataset.

|

| 19 |

+

|

| 20 |

+

Repository on GitHub: [e-hossam96/arabic-nano-gpt](https://github.com/e-hossam96/arabic-nano-gpt.git)

|

| 21 |

+

|

| 22 |

+

The model achieves the following results on the held-out test set:

|

| 23 |

+

|

| 24 |

- Loss: 3.28796
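For intuition, a cross-entropy loss can be converted to perplexity by exponentiating it. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which is the usual convention for causal language modeling but is not stated in this card.

```python
import math

# Test loss reported in the card
# (assumed: mean per-token cross-entropy, measured in nats).
test_loss = 3.28796

# Perplexity is the exponential of the mean cross-entropy.
perplexity = math.exp(test_loss)

print(f"perplexity ~ {perplexity:.2f}")
```

Under that assumption the perplexity is roughly 26.8 per token of the model's own tokenizer, so it is not directly comparable with models that use a different tokenizer.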

|

| 25 |

|

| 26 |

+

## How to Use

|

| 27 |

|

| 28 |

+

```python

|

| 29 |

+

import torch

|

| 30 |

+

from transformers import pipeline

|

| 31 |

|

| 32 |

+

model_ckpt = "e-hossam96/arabic-nano-gpt-v0"

|

| 33 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 34 |

|

|

|

|

| 35 |

|

| 36 |

+

lm = pipeline(task="text-generation", model=model_ckpt, device=device)

|

| 37 |

|

| 38 |

+

prompt = """المحرك النفاث هو محرك ينفث الموائع (الماء أو الهواء) بسرعة فائقة \

|

| 39 |

+

لينتج قوة دافعة اعتمادا على مبدأ قانون نيوتن الثالث للحركة. \

|

| 40 |

+

هذا التعريف الواسع للمحركات النفاثة يتضمن أيضا"""

|

| 41 |

|

| 42 |

+

output = lm(prompt, max_new_tokens=128)

|

| 43 |

|

| 44 |

+

print(output[0]["generated_text"])

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

## Model description

|

| 48 |

|

| 49 |

+

- Embedding Size: 256

|

| 50 |

+

- Attention Heads: 4

|

| 51 |

+

- Attention Layers: 4
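To give a sense of scale, the sizes above can be turned into a rough parameter estimate. This is a minimal sketch that assumes "Attention Layers" means GPT-2 style transformer blocks with biases and a 4x MLP expansion (the GPT-2 default); the helper `block_params` is hypothetical, and embeddings are left out because the card does not give the vocabulary size or context length.

```python
# Values taken from the model description.
n_embd = 256
n_head = 4
n_layer = 4

# Each attention head works on an equal slice of the embedding.
head_dim = n_embd // n_head


def block_params(d: int, mlp_ratio: int = 4) -> int:
    """Parameter count of one GPT-2 style block (assumed layout, with biases)."""
    layer_norms = 2 * (2 * d)                       # ln_1 and ln_2: weight + bias each
    attn_qkv = d * 3 * d + 3 * d                    # fused query/key/value projection
    attn_out = d * d + d                            # attention output projection
    mlp_in = d * mlp_ratio * d + mlp_ratio * d      # MLP expansion layer
    mlp_out = mlp_ratio * d * d + d                 # MLP contraction layer
    return layer_norms + attn_qkv + attn_out + mlp_in + mlp_out


# Transformer blocks plus the final layer norm; embeddings are excluded.
non_embedding_params = n_layer * block_params(n_embd) + 2 * n_embd

print(head_dim, non_embedding_params)
```

With these assumptions each head has 64 dimensions and the blocks hold about 3.16M parameters, so the total size is likely dominated by the embedding matrix.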

|

| 52 |

+

|

| 53 |

+

## Training and evaluation data

|

| 54 |

+

|

| 55 |

+

The entire Wikipedia dataset was divided into three splits using a 90-5-5 ratio.
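A 90-5-5 split can be sketched in plain Python as below. The function `split_indices`, the seed, the shuffling step, and the mapping of the three parts to train, validation, and test are assumptions for illustration; the card does not say how the split was actually produced.

```python
import random


def split_indices(n_items, percents=(90, 5, 5), seed=0):
    """Shuffle item indices and cut them into three disjoint splits."""
    assert sum(percents) == 100
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)        # seeded for reproducibility
    n_first = n_items * percents[0] // 100
    n_second = n_items * percents[1] // 100
    first = indices[:n_first]
    second = indices[n_first:n_first + n_second]
    third = indices[n_first + n_second:]        # remainder goes to the last split
    return first, second, third


# Toy example with 1000 articles.
train_idx, valid_idx, test_idx = split_indices(1000)
print(len(train_idx), len(valid_idx), len(test_idx))
```

Integer arithmetic is used for the cut points so that the split sizes do not depend on floating-point rounding.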

|

| 56 |

+

|

| 57 |

+

## Training hyperparameters

|

| 58 |

|

| 59 |

The following hyperparameters were used during training:

|

| 60 |

+

|

| 61 |

- learning_rate: 0.001

|

| 62 |

- train_batch_size: 64

|

| 63 |

- eval_batch_size: 64

|

|

|

|

| 69 |

- lr_scheduler_warmup_ratio: 0.01

|

| 70 |

- num_epochs: 24
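As a rough worked example, the warmup ratio can be translated into a number of optimizer steps. The sketch takes the last row of the training log that this commit removes from the README (step 152000 at epoch 8.8903) to infer the steps per epoch, and assumes the run covers all 24 epochs at that rate; the scheduler type is not visible in this hunk, so only the warmup length is estimated.

```python
import math

# Last row of the training log removed from the README in this commit.
logged_step = 152_000
logged_epoch = 8.8903

# Values from the hyperparameter list.
num_epochs = 24
warmup_ratio = 0.01

# Optimizer steps per epoch, inferred from the log row (the epoch value is rounded).
steps_per_epoch = round(logged_step / logged_epoch)

# Assumption: training runs for all 24 epochs at the same steps-per-epoch rate.
total_steps = steps_per_epoch * num_epochs

# Warmup length as a fraction of the total, rounded up to a whole step.
warmup_steps = math.ceil(warmup_ratio * total_steps)

print(steps_per_epoch, total_steps, warmup_steps)
```

Under these assumptions the learning rate would reach its peak of 0.001 after roughly 4,100 of about 410,000 steps.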

|

| 71 |

|

| 72 |

+

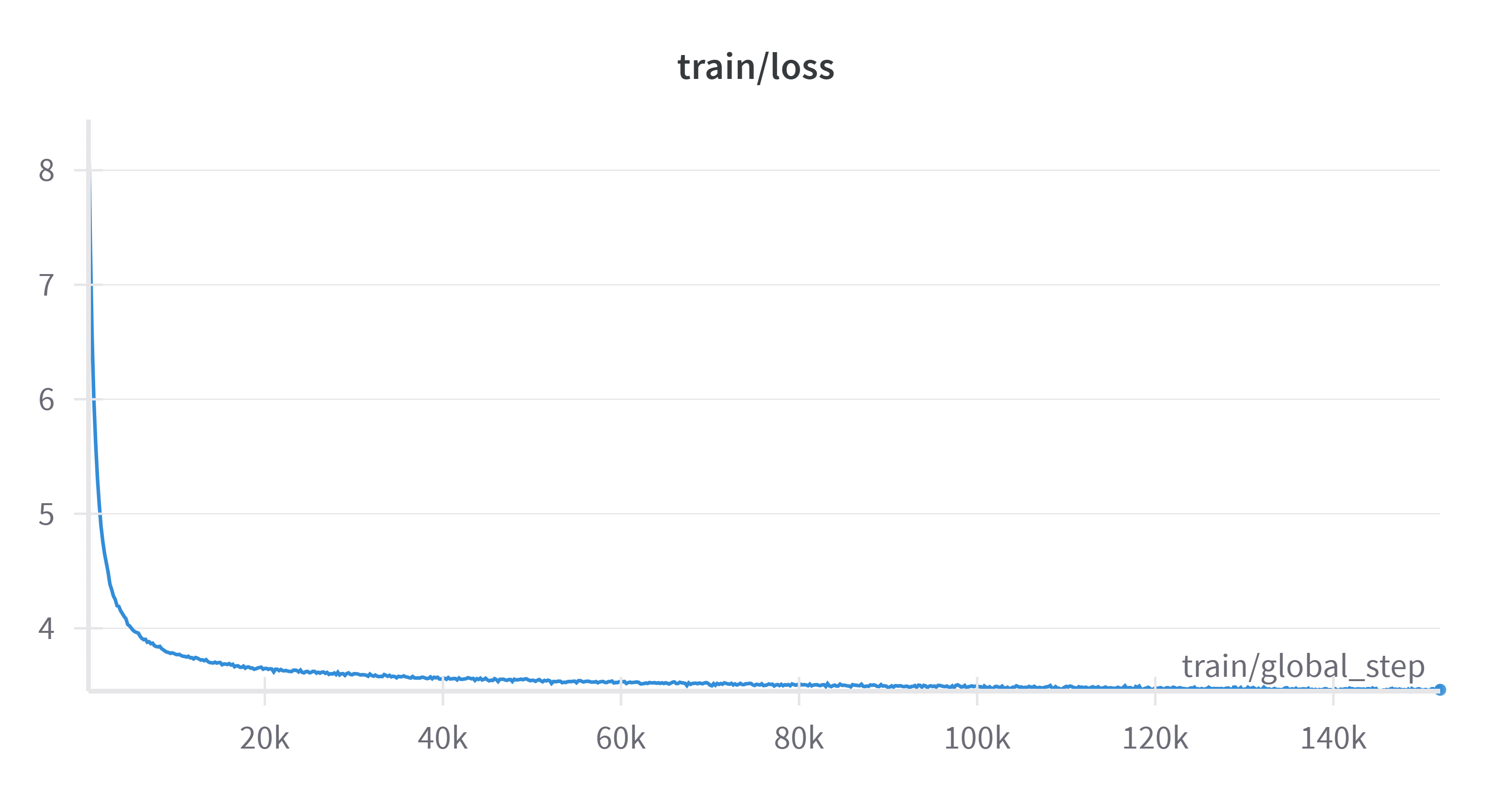

## Training Loss

| 73 |

|

| 74 |

+

|

| 75 |

|

| 76 |

+

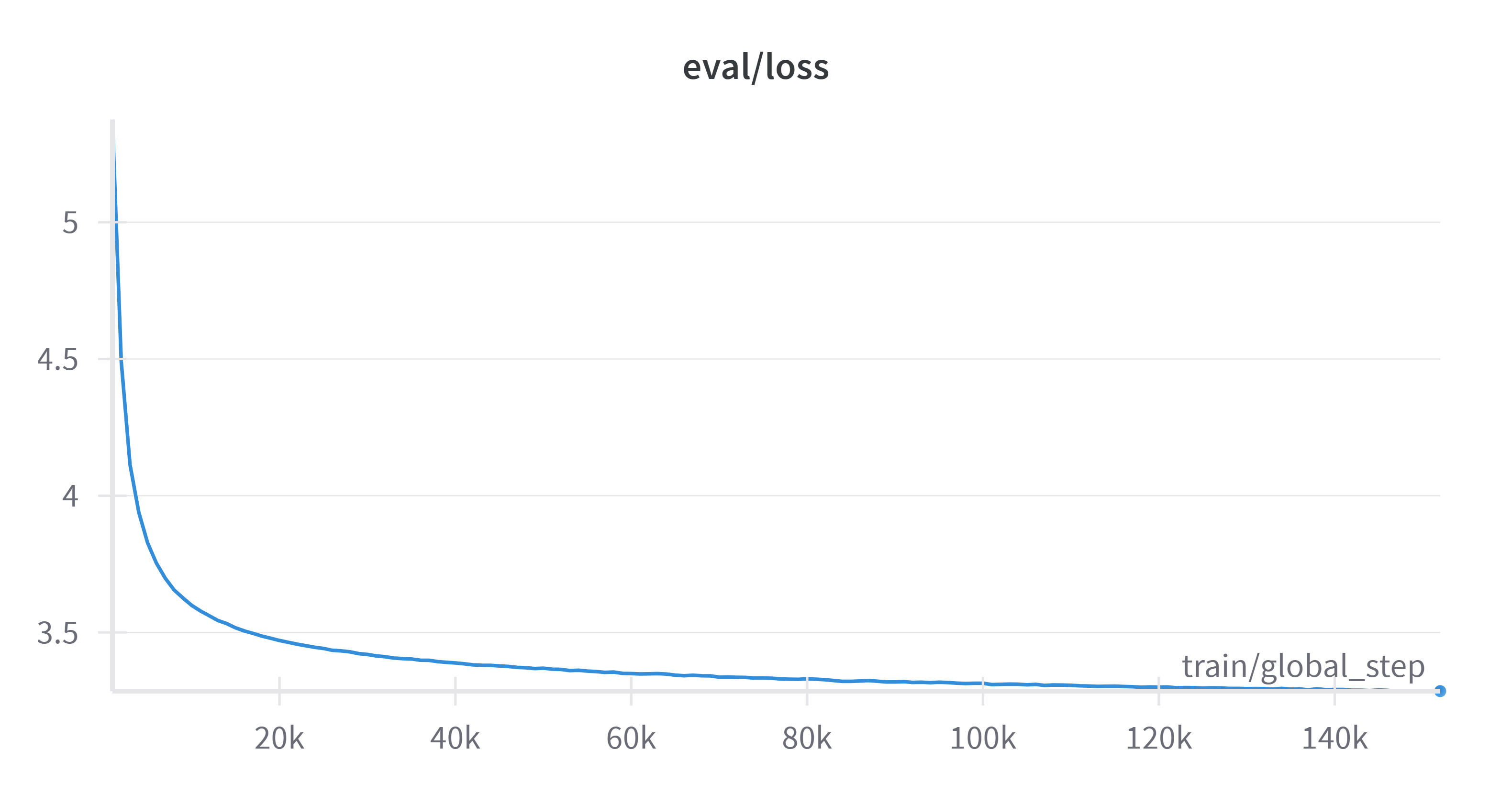

## Validation Loss

|

| 77 |

|

| 78 |

+

|

| 79 |

|

| 80 |

+

## Framework versions

|

| 81 |

|

| 82 |

- Transformers 4.45.2

|

| 83 |

- Pytorch 2.5.0

|

| 84 |

- Datasets 3.0.1

|

| 85 |

+

- Tokenizers 0.20.1

|

assets/arabic-nano-gpt-v0-eval-loss.png

ADDED

|

assets/arabic-nano-gpt-v0-train-loss.png

ADDED

|