Init Commits

- README.md +73 -3

- control_v1_sd15_layout_fp16/config.json +51 -0

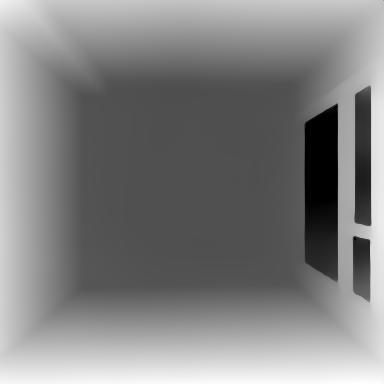

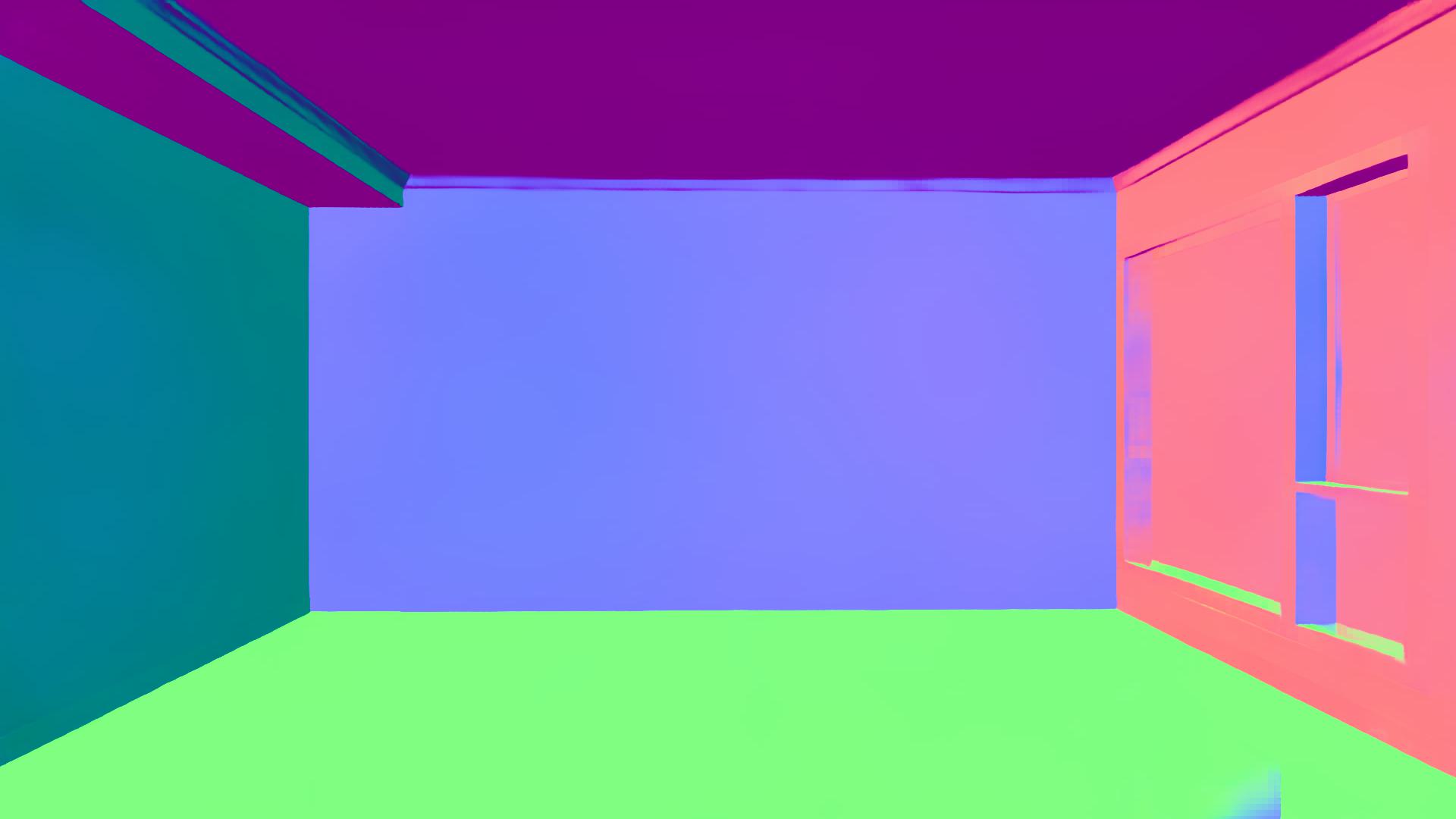

- examples/layout_depth.jpg +0 -0

- examples/layout_input.jpg +0 -0

- examples/layout_normal.jpg +0 -0

- examples/layout_output.jpg +0 -0

- examples/layout_segm.jpg +0 -0

README.md

CHANGED

@@ -1,3 +1,73 @@

Removed lines 1-3 (`---`, then two lines shown empty).

---
language:
- en
license: creativeml-openrail-m
library_name: diffusers
tags:
- art
- diffusion
- Interior
---

# KuJiaLe Layout ControlNet

The models are not permitted for commercial use. For business inquiries, commercial licensing, custom models, and consultation, please contact [[email protected]](mailto:[email protected]).

The model is trained on [runwayml/stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) for interior design.

### Layout ControlNet Example

Keep the room layout consistent while re-furnishing the room.

<table style="table-layout: fixed;">
<tr>
<td style="text-align: center; vertical-align: middle; width: 50%"> <img src="https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_input.jpg" alt="Input" width="100%" style="display: block; margin-left: auto; margin-right: auto;"></td>
<td style="text-align: center; vertical-align: middle; width: 50%"> <img src="https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_output.jpg" alt="Output" width="100%" style="display: block; margin-left: auto; margin-right: auto;"></td>
</tr>
<tr>
<td style="text-align: center; vertical-align: middle; width: 50%">Input</td>
<td style="text-align: center; vertical-align: middle; width: 50%">Output</td>
</tr>
</table>

## News🔥🔥🔥

* May 30, 2024. Our Layout-ControlNet checkpoint is publicly available in the [HuggingFace Repo](https://huggingface.co/kujiale-ai/controlnet-layout).

<!-- ## Try our Hugging Face demos: -->

## Checkpoints

* `control_v1_sd15_layout_fp16`: Layout ControlNet checkpoint, for SD15 models.

## Using in 🧨 diffusers

### Layout ControlNet

```python
import torch
from diffusers.utils import load_image
import numpy as np
from diffusers import ControlNetModel, StableDiffusionControlNetPipeline, UniPCMultistepScheduler

controlnet_checkpoint = "kujiale-ai/controlnet-layout"
# Load original image
image = load_image("https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_input.jpg")
# Load the three condition maps; depth is reduced to a single grayscale channel
depth_image = load_image("https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_depth.jpg").convert("L")
normal_image = load_image("https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_normal.jpg")
segm_image = load_image("https://huggingface.co/kujiale-ai/controlnet-layout/resolve/main/examples/layout_segm.jpg")
# Resize every condition map to the input image resolution
W, H = image.size
depth_image = depth_image.resize((W, H))
normal_image = normal_image.resize((W, H))
segm_image = segm_image.resize((W, H))
# Prepare Layout Control Image
# Depth: scaled to [0, 1], shape (1, 1, H, W)
depth_image = np.array(depth_image, dtype=np.float32) / 255.0
depth_image = torch.from_numpy(depth_image[:, :, None])[None].permute(0, 3, 1, 2)
# Normal: scaled to [-1, 1], shape (1, 3, H, W)
normal_image = np.array(normal_image, dtype=np.float32)
normal_image = normal_image / 127.5 - 1.0
normal_image = torch.from_numpy(normal_image)[None].permute(0, 3, 1, 2)
# Segmentation: scaled to [0, 1], shape (1, 3, H, W)
segm_image = np.array(segm_image, dtype=np.float32) / 255.0
segm_image = torch.from_numpy(segm_image)[None].permute(0, 3, 1, 2)
# Concatenate along the channel axis: 1 + 3 + 3 = 7 channels
control_image = torch.cat([depth_image, normal_image, segm_image], dim=1)
# Initialize pipeline
controlnet = ControlNetModel.from_pretrained(controlnet_checkpoint, subfolder="control_v1_sd15_layout_fp16", torch_dtype=torch.float16)
pipe = StableDiffusionControlNetPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16).to("cuda")
pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
# Generate the re-furnished image conditioned on the 7-channel control tensor
image = pipe("A modern bedroom,best quality", num_inference_steps=30, image=control_image, guidance_scale=7).images[0]
image.save('layout_output.jpg')
```
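The control-image preparation in the example above can be checked without downloading anything. The following is a minimal NumPy-only sketch, not part of the repository: `build_layout_control` is a hypothetical helper name, and the inputs are random stand-ins rather than real depth, normal, or segmentation maps. It applies the same scaling as the example (depth and segmentation to [0, 1], normals to [-1, 1]) and confirms the stacked result has 7 channels.

```python
import numpy as np


def build_layout_control(depth, normal, segm):
    """Hypothetical helper: stack uint8 depth (H, W), normal (H, W, 3) and
    segmentation (H, W, 3) maps into a float32 array of shape (1, 7, H, W)."""
    depth = depth.astype(np.float32) / 255.0          # scaled to [0, 1]
    normal = normal.astype(np.float32) / 127.5 - 1.0  # scaled to [-1, 1]
    segm = segm.astype(np.float32) / 255.0            # scaled to [0, 1]
    # Channel order follows the README example: depth, normal, segmentation.
    stacked = np.concatenate([depth[:, :, None], normal, segm], axis=2)  # (H, W, 7)
    return stacked.transpose(2, 0, 1)[None]           # (1, 7, H, W)


# Synthetic stand-ins for the example images (random data, for shape checks only).
rng = np.random.default_rng(0)
H, W = 64, 96
depth = rng.integers(0, 256, size=(H, W), dtype=np.uint8)
normal = rng.integers(0, 256, size=(H, W, 3), dtype=np.uint8)
segm = rng.integers(0, 256, size=(H, W, 3), dtype=np.uint8)

control = build_layout_control(depth, normal, segm)
print(control.shape)  # (1, 7, 64, 96)
```

Wrapping the result with `torch.from_numpy(control)` would give a tensor with the same layout as `control_image` in the example.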

control_v1_sd15_layout_fp16/config.json

ADDED

@@ -0,0 +1,51 @@

{
  "_class_name": "ControlNetModel",
  "_diffusers_version": "0.27.2",
  "act_fn": "silu",
  "addition_embed_type": null,
  "addition_embed_type_num_heads": 64,
  "addition_time_embed_dim": null,
  "attention_head_dim": 8,
  "block_out_channels": [
    320,
    640,
    1280,
    1280
  ],
  "class_embed_type": null,
  "conditioning_channels": 7,
  "conditioning_embedding_out_channels": [
    16,
    32,
    96,
    256
  ],
  "controlnet_conditioning_channel_order": "rgb",
  "cross_attention_dim": 768,
  "down_block_types": [
    "CrossAttnDownBlock2D",
    "CrossAttnDownBlock2D",
    "CrossAttnDownBlock2D",
    "DownBlock2D"
  ],
  "downsample_padding": 1,
  "encoder_hid_dim": null,
  "encoder_hid_dim_type": null,
  "flip_sin_to_cos": true,
  "freq_shift": 0,
  "global_pool_conditions": false,
  "in_channels": 4,
  "layers_per_block": 2,
  "mid_block_scale_factor": 1,
  "mid_block_type": "UNetMidBlock2DCrossAttn",
  "norm_eps": 1e-05,
  "norm_num_groups": 32,
  "num_attention_heads": null,
  "num_class_embeds": null,
  "only_cross_attention": false,
  "projection_class_embeddings_input_dim": null,
  "resnet_time_scale_shift": "default",
  "transformer_layers_per_block": 1,
  "upcast_attention": false,
  "use_linear_projection": false
}
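The `conditioning_channels` value in this config lines up with the control tensor built in the README example. The snippet below is a small sketch of that arithmetic, assuming the channel split used there (1 depth, 3 normal, 3 segmentation). `config_fragment` is a hand-copied subset of the fields above, and the variable names are illustrative only.

```python
import json

# Hand-copied subset of the config.json fields shown above.
config_fragment = """
{
  "conditioning_channels": 7,
  "in_channels": 4,
  "cross_attention_dim": 768
}
"""
config = json.loads(config_fragment)

# Channel counts assumed from the README usage example.
control_channels = {"depth": 1, "normal": 3, "segm": 3}
total = sum(control_channels.values())

# True when the stacked control image matches what the ControlNet expects.
matches = total == config["conditioning_channels"]
print(total, matches)  # 7 True
```

A control tensor with any other channel count would not match the first conditioning layer of this checkpoint.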

examples/layout_depth.jpg

ADDED

examples/layout_input.jpg

ADDED

examples/layout_normal.jpg

ADDED

examples/layout_output.jpg

ADDED

examples/layout_segm.jpg

ADDED