Update README.md


README.md

CHANGED

|

@@ -20,17 +20,6 @@ roleplaying chat model intended to replicate the experience of 1-on-1 roleplay o

|

|

| 20 |

IRC/Discord-style RP (aka "Markdown format") is not supported yet. The model does not include instruction tuning,

|

| 21 |

only manually picked and slightly edited RP conversations with persona and scenario data.

|

| 22 |

|

| 23 |

-

## Important notes on generation settings

|

| 24 |

-

It's recommended not to go overboard with low tail-free-sampling (TFS) values. From previous testing with Llama-2,

|

| 25 |

-

decreasing it too much appeared to easily yield rather repetitive responses. Extensive testing with Mistral has not

|

| 26 |

-

been performed yet, but suggested starting generation settings are:

|

| 27 |

-

|

| 28 |

-

- TFS = 0.92~0.95

|

| 29 |

-

- Temperature = 0.70~0.85

|

| 30 |

-

- Repetition penalty = 1.05~1.10

|

| 31 |

-

- top-k = 0 (disabled)

|

| 32 |

-

- top-p = 1 (disabled)

|

| 33 |

-

|

| 34 |

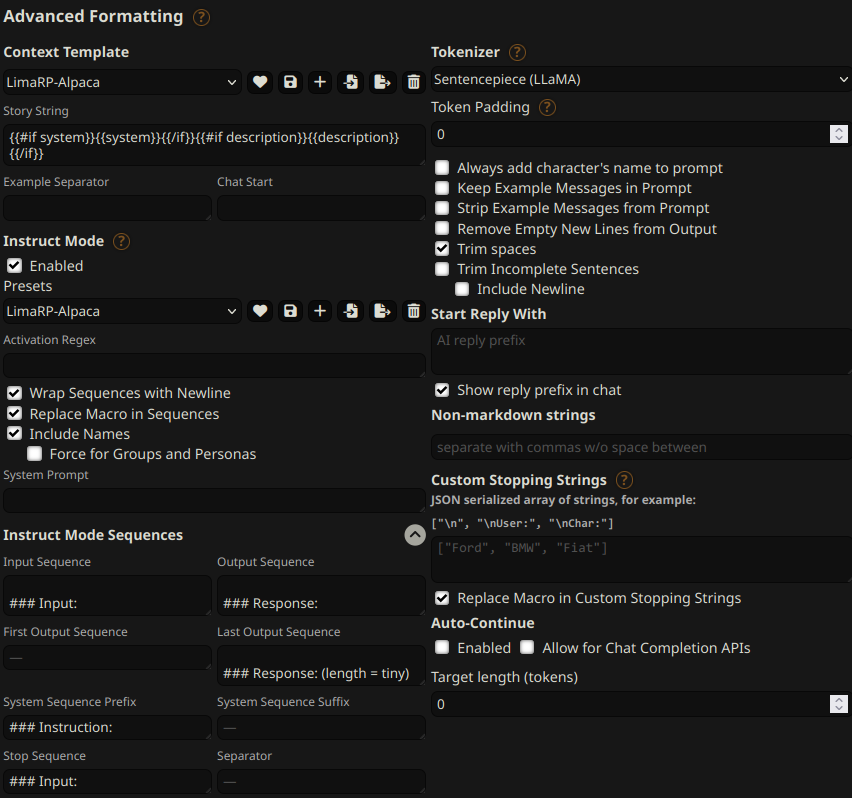

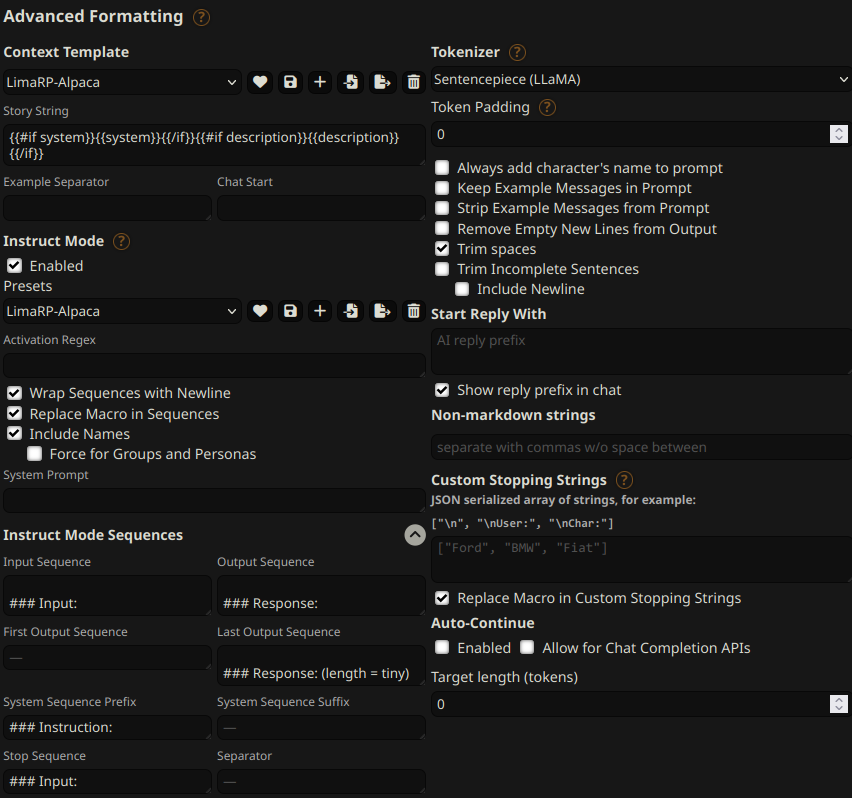

## Prompt format

|

| 35 |

Same as before. It uses the [extended Alpaca format](https://github.com/tatsu-lab/stanford_alpaca),

|

| 36 |

with `### Input:` immediately preceding user inputs and `### Response:` immediately preceding

|

|

@@ -99,6 +88,16 @@ your desired response length:

|

|

| 99 |

|

| 100 |

|

| 101 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 102 |

## Training procedure

|

| 103 |

[Axolotl](https://github.com/OpenAccess-AI-Collective/axolotl) was used for training

|

| 104 |

on a 2x NVidia A40 GPU cluster.

|

|

|

|

| 20 |

IRC/Discord-style RP (aka "Markdown format") is not supported yet. The model does not include instruction tuning,

|

| 21 |

only manually picked and slightly edited RP conversations with persona and scenario data.

|

| 22 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 23 |

## Prompt format

|

| 24 |

Same as before. It uses the [extended Alpaca format](https://github.com/tatsu-lab/stanford_alpaca),

|

| 25 |

with `### Input:` immediately preceding user inputs and `### Response:` immediately preceding

|

|

|

|

| 88 |

|

| 89 |

|

| 90 |

|

| 91 |

+

## Text generation settings

|

| 92 |

+

Extensive testing with Mistral has not been performed yet, but suggested starting text

|

| 93 |

+

generation settings may be:

|

| 94 |

+

|

| 95 |

+

- TFS = 0.92~0.95

|

| 96 |

+

- Temperature = 0.70~0.85

|

| 97 |

+

- Repetition penalty = 1.05~1.10

|

| 98 |

+

- top-k = 0 (disabled)

|

| 99 |

+

- top-p = 1 (disabled)

|

| 100 |

+

|

| 101 |

## Training procedure

|

| 102 |

[Axolotl](https://github.com/OpenAccess-AI-Collective/axolotl) was used for training

|

| 103 |

on a 2x NVidia A40 GPU cluster.

|
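The suggested starting values in the "Text generation settings" section can be read as a single sampling step. Below is a minimal, stdlib-only sketch of one way to combine them. It makes several assumptions. It uses a CTRL-style repetition penalty, as in Hugging Face transformers' `RepetitionPenaltyLogitsProcessor`. It uses tail-free sampling in the formulation llama.cpp used: sort the probabilities, normalize the absolute second differences, and cut where their running sum exceeds z. The function names and the sampler order (penalty, temperature, top-k, TFS, top-p) are illustrative choices, and real frontends order and implement these samplers differently. The defaults are picked from inside the suggested ranges. Top-k = 0 and top-p = 1 switch those filters off.

```python
import math
import random
from typing import Dict, List, Optional, Sequence


def apply_repetition_penalty(logits: Sequence[float], history: Sequence[int],
                             penalty: float) -> List[float]:
    """CTRL-style penalty (assumed): for every token already in the context,
    divide a positive logit by `penalty` and multiply a negative one by it."""
    out = list(logits)
    for tok in set(history):
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def renormalize(probs: Dict[int, float]) -> Dict[int, float]:
    total = sum(probs.values())
    return {t: p / total for t, p in probs.items()}


def top_k_filter(probs: Dict[int, float], k: int) -> Dict[int, float]:
    if k <= 0:  # top-k = 0 means "disabled"
        return probs
    kept = sorted(probs, key=probs.get, reverse=True)[:k]
    return renormalize({t: probs[t] for t in kept})


def tail_free_filter(probs: Dict[int, float], z: float,
                     min_keep: int = 1) -> Dict[int, float]:
    """Tail-free sampling: sort probabilities, take the absolute second
    difference, normalize it, and cut where its running sum exceeds z."""
    order = sorted(probs, key=probs.get, reverse=True)
    if z >= 1.0 or len(order) <= 2:  # z = 1 disables TFS
        return probs
    p = [probs[t] for t in order]
    d1 = [p[i] - p[i + 1] for i in range(len(p) - 1)]
    d2 = [abs(d1[i] - d1[i + 1]) for i in range(len(d1) - 1)]
    total = sum(d2)
    weights = [w / total for w in d2] if total > 1e-6 else [1.0 / len(d2)] * len(d2)
    last, cum = len(order), 0.0
    for i, w in enumerate(weights):
        cum += w
        if cum > z and i >= min_keep:
            last = i  # keep only the tokens before the detected "tail"
            break
    return renormalize({t: probs[t] for t in order[:last]})


def top_p_filter(probs: Dict[int, float], p_max: float) -> Dict[int, float]:
    if p_max >= 1.0:  # top-p = 1 means "disabled"
        return probs
    kept, cum = [], 0.0
    for t in sorted(probs, key=probs.get, reverse=True):
        kept.append(t)
        cum += probs[t]
        if cum >= p_max:
            break
    return renormalize({t: probs[t] for t in kept})


def sample_next_token(logits: Sequence[float], history: Sequence[int], *,
                      temperature: float = 0.8, repetition_penalty: float = 1.07,
                      tfs: float = 0.93, top_k: int = 0, top_p: float = 1.0,
                      rng: Optional[random.Random] = None) -> int:
    """One sampling step; defaults sit inside the README's suggested ranges."""
    if rng is None:
        rng = random.Random()
    scores = apply_repetition_penalty(logits, history, repetition_penalty)
    scores = [s / temperature for s in scores]
    probs = dict(enumerate(softmax(scores)))
    probs = top_k_filter(probs, top_k)
    probs = tail_free_filter(probs, tfs)
    probs = top_p_filter(probs, top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    toy = {0: 0.5, 1: 0.3, 2: 0.1, 3: 0.05, 4: 0.03, 5: 0.02}
    print(sorted(tail_free_filter(toy, 0.93)))  # [0, 1]
    print(sorted(tail_free_filter(toy, 0.95)))  # [0, 1, 2]
```

On the toy distribution, z = 0.93 keeps two candidates while z = 0.95 keeps three. A lower TFS value therefore narrows the pool the sampler can draw from. That fits the earlier note that pushing TFS too low tended to produce repetitive responses.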
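The "Prompt format" lines only name the Alpaca section headers, and the sentence describing `### Response:` is truncated in this diff. The following is a minimal sketch of how such a prompt might be assembled, under several assumptions. It assumes `### Response:` precedes the character's replies. The `### Instruction:` header for the persona/scenario block and the blank-line separators are borrowed from the standard Stanford Alpaca template rather than confirmed here. The helper name `build_alpaca_prompt` is hypothetical.

```python
from typing import List, Tuple


def build_alpaca_prompt(instruction: str, history: List[Tuple[str, str]],
                        user_input: str) -> str:
    """Hypothetical helper: assemble an Alpaca-style RP prompt.

    instruction: persona/scenario text under an assumed "### Instruction:" header
    history:     earlier (user input, character response) pairs
    user_input:  the new user message
    """
    parts = [f"### Instruction:\n{instruction}"]
    for past_input, past_response in history:
        parts.append(f"### Input:\n{past_input}")        # user turn
        parts.append(f"### Response:\n{past_response}")  # model turn (assumed)
    parts.append(f"### Input:\n{user_input}")
    # End on an open "### Response:" so the model writes the next reply.
    parts.append("### Response:\n")
    return "\n\n".join(parts)


prompt = build_alpaca_prompt(
    "Persona and scenario text goes here.",
    [("Hi!", "Hello there.")],
    "How are you?",
)
print(prompt)
```

Ending the string on an open `### Response:` header lets the model continue with the next in-character reply. Any further markup inside each turn is outside what this diff specifies.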