Upload 1162 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +4 -0

- s3prl_s3prl_main/Dockerfile +42 -0

- s3prl_s3prl_main/LICENSE +201 -0

- s3prl_s3prl_main/README.md +357 -0

- s3prl_s3prl_main/__pycache__/hubconf.cpython-310.pyc +0 -0

- s3prl_s3prl_main/__pycache__/hubconf.cpython-39.pyc +0 -0

- s3prl_s3prl_main/ci/format.py +84 -0

- s3prl_s3prl_main/docs/Makefile +20 -0

- s3prl_s3prl_main/docs/README.md +27 -0

- s3prl_s3prl_main/docs/from_scratch_tutorial.md +146 -0

- s3prl_s3prl_main/docs/make.bat +35 -0

- s3prl_s3prl_main/docs/rebuild_docs.sh +9 -0

- s3prl_s3prl_main/docs/source/_static/css/custom.css +3 -0

- s3prl_s3prl_main/docs/source/_static/js/custom.js +7 -0

- s3prl_s3prl_main/docs/source/_templates/custom-module-template.rst +81 -0

- s3prl_s3prl_main/docs/source/conf.py +120 -0

- s3prl_s3prl_main/docs/source/contribute/general.rst +167 -0

- s3prl_s3prl_main/docs/source/contribute/private.rst +104 -0

- s3prl_s3prl_main/docs/source/contribute/public.rst +29 -0

- s3prl_s3prl_main/docs/source/contribute/upstream.rst +100 -0

- s3prl_s3prl_main/docs/source/index.rst +75 -0

- s3prl_s3prl_main/docs/source/tutorial/installation.rst +55 -0

- s3prl_s3prl_main/docs/source/tutorial/problem.rst +122 -0

- s3prl_s3prl_main/docs/source/tutorial/upstream_collection.rst +1457 -0

- s3prl_s3prl_main/docs/util/is_valid.py +21 -0

- s3prl_s3prl_main/example/customize.py +43 -0

- s3prl_s3prl_main/example/run_asr.sh +3 -0

- s3prl_s3prl_main/example/run_sid.sh +4 -0

- s3prl_s3prl_main/example/ssl/pretrain.py +285 -0

- s3prl_s3prl_main/example/superb/train.py +291 -0

- s3prl_s3prl_main/example/superb_asr/inference.py +40 -0

- s3prl_s3prl_main/example/superb_asr/train.py +241 -0

- s3prl_s3prl_main/example/superb_asr/train_with_lightning.py +127 -0

- s3prl_s3prl_main/example/superb_sid/inference.py +40 -0

- s3prl_s3prl_main/example/superb_sid/train.py +235 -0

- s3prl_s3prl_main/example/superb_sid/train_with_lightning.py +127 -0

- s3prl_s3prl_main/example/superb_sv/inference.py +47 -0

- s3prl_s3prl_main/example/superb_sv/train.py +266 -0

- s3prl_s3prl_main/example/superb_sv/train_with_lightning.py +184 -0

- s3prl_s3prl_main/external_tools/install_espnet.sh +20 -0

- s3prl_s3prl_main/file/S3PRL-integration.png +0 -0

- s3prl_s3prl_main/file/S3PRL-interface.png +0 -0

- s3prl_s3prl_main/file/S3PRL-logo.png +0 -0

- s3prl_s3prl_main/file/license.svg +1 -0

- s3prl_s3prl_main/find_content.sh +8 -0

- s3prl_s3prl_main/hubconf.py +4 -0

- s3prl_s3prl_main/pyrightconfig.json +5 -0

- s3prl_s3prl_main/pytest.ini +5 -0

- s3prl_s3prl_main/requirements/all.txt +33 -0

- s3prl_s3prl_main/requirements/dev.txt +11 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

s3prl_s3prl_main/s3prl/downstream/phone_linear/data/converted_aligned_phones.txt filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

s3prl_s3prl_main/s3prl/downstream/voxceleb2_amsoftmax_segment_eval/cache_wav_paths/cache_test_segment.p filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

s3prl_s3prl_main/s3prl/downstream/voxceleb2_amsoftmax_segment_eval/cache_wav_paths/cache_Voxceleb1.p filter=lfs diff=lfs merge=lfs -text

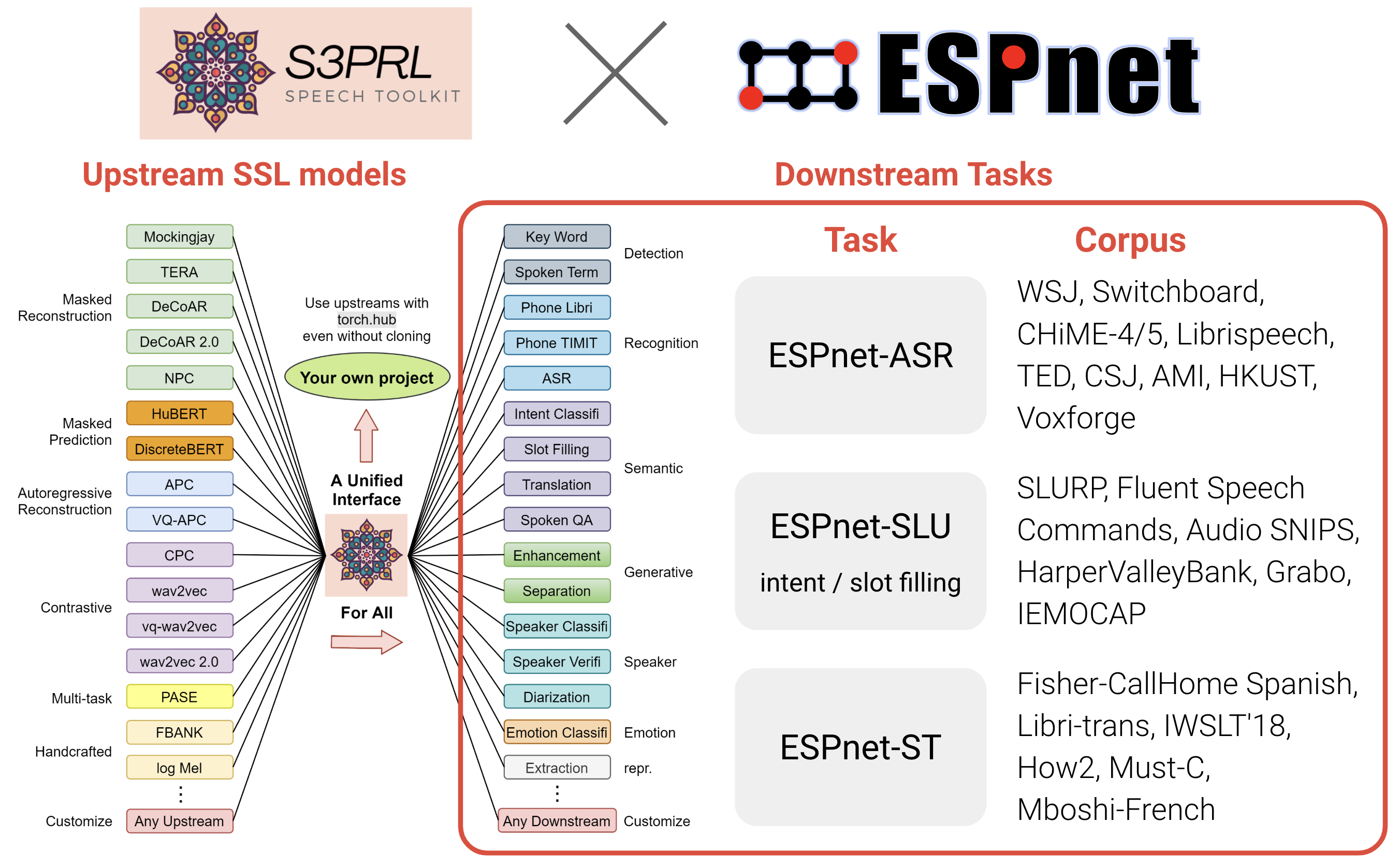

|

| 39 |

+

s3prl_s3prl_main/s3prl/downstream/voxceleb2_amsoftmax_segment_eval/cache_wav_paths/cache_Voxceleb2.p filter=lfs diff=lfs merge=lfs -text

|

s3prl_s3prl_main/Dockerfile

ADDED

|

@@ -0,0 +1,42 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# We need this to use GPUs inside the container

|

| 2 |

+

FROM nvidia/cuda:11.2.2-base

|

| 3 |

+

# Using a multi-stage build simplifies the s3prl installation

|

| 4 |

+

# TODO: Find a slimmer base image that also "just works"

|

| 5 |

+

FROM tiangolo/uvicorn-gunicorn:python3.8

|

| 6 |

+

|

| 7 |

+

RUN apt-get update --fix-missing && apt-get install -y wget \

|

| 8 |

+

libsndfile1 \

|

| 9 |

+

sox \

|

| 10 |

+

git \

|

| 11 |

+

git-lfs

|

| 12 |

+

|

| 13 |

+

RUN python -m pip install --upgrade pip

|

| 14 |

+

RUN python -m pip --no-cache-dir install fairseq@git+https://github.com//pytorch/fairseq.git@f2146bdc7abf293186de9449bfa2272775e39e1d#egg=fairseq

|

| 15 |

+

RUN python -m pip --no-cache-dir install git+https://github.com/s3prl/s3prl.git#egg=s3prl

|

| 16 |

+

|

| 17 |

+

COPY s3prl/ /app/s3prl

|

| 18 |

+

COPY src/ /app/src

|

| 19 |

+

|

| 20 |

+

# Setup filesystem

|

| 21 |

+

RUN mkdir /app/data

|

| 22 |

+

|

| 23 |

+

# Configure Git

|

| 24 |

+

# TODO: Create a dedicated SUPERB account for the project?

|

| 25 |

+

RUN git config --global user.email "[email protected]"

|

| 26 |

+

RUN git config --global user.name "SUPERB Admin"

|

| 27 |

+

|

| 28 |

+

# Default args for fine-tuning

|

| 29 |

+

ENV upstream_model osanseviero/hubert_base

|

| 30 |

+

ENV downstream_task asr

|

| 31 |

+

ENV hub huggingface

|

| 32 |

+

ENV hf_hub_org None

|

| 33 |

+

ENV push_to_hf_hub True

|

| 34 |

+

ENV override None

|

| 35 |

+

|

| 36 |

+

WORKDIR /app/s3prl

|

| 37 |

+

# Each task's config.yaml is used to set all the training parameters, but can be overridden with the `override` argument

|

| 38 |

+

# The results of each training run are stored in /app/s3prl/result/downstream/{downstream_task}

|

| 39 |

+

# and pushed to the Hugging Face Hub with name:

|

| 40 |

+

# Default behaviour - {hf_hub_username}/superb-s3prl-{upstream_model}-{downstream_task}-uuid

|

| 41 |

+

# With hf_hub_org set - {hf_hub_org}/superb-s3prl-{upstream_model}-{downstream_task}-uuid

|

| 42 |

+

CMD python run_downstream.py -n ${downstream_task} -m train -u ${upstream_model} -d ${downstream_task} --hub ${hub} --hf_hub_org ${hf_hub_org} --push_to_hf_hub ${push_to_hf_hub} --override ${override}

|

s3prl_s3prl_main/LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "{}"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright 2022 Andy T. Liu (Ting-Wei Liu) and Shu-wen (Leo) Yang

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

s3prl_s3prl_main/README.md

ADDED

|

@@ -0,0 +1,357 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center">

|

| 2 |

+

<img src="https://raw.githubusercontent.com/s3prl/s3prl/main/file/S3PRL-logo.png" width="900"/>

|

| 3 |

+

<br>

|

| 4 |

+

<br>

|

| 5 |

+

<a href="./LICENSE.txt"><img alt="Apache License 2.0" src="https://raw.githubusercontent.com/s3prl/s3prl/main/file/license.svg" /></a>

|

| 6 |

+

<a href="https://creativecommons.org/licenses/by-nc/4.0/"><img alt="CC_BY_NC License" src="https://img.shields.io/badge/License-CC%20BY--NC%204.0-lightgrey.svg" /></a>

|

| 7 |

+

<a href="https://github.com/s3prl/s3prl/actions/workflows/ci.yml"><img alt="CI" src="https://github.com/s3prl/s3prl/actions/workflows/ci.yml/badge.svg?branch=main&event=push"></a>

|

| 8 |

+

<a href="#development-pattern-for-contributors"><img alt="Codecov" src="https://img.shields.io/badge/contributions-welcome-brightgreen.svg"></a>

|

| 9 |

+

<a href="https://github.com/s3prl/s3prl/issues"><img alt="Bitbucket open issues" src="https://img.shields.io/github/issues/s3prl/s3prl"></a>

|

| 10 |

+

</p>

|

| 11 |

+

|

| 12 |

+

## Contact

|

| 13 |

+

|

| 14 |

+

We prefer to have discussions directly on Github issue page, so that all the information is transparent to all the contributors and is auto-archived on the Github.

|

| 15 |

+

If you wish to use email, please contact:

|

| 16 |

+

|

| 17 |

+

- [Shu-wen (Leo) Yang](https://leo19941227.github.io/) ([email protected])

|

| 18 |

+

- [Andy T. Liu](https://andi611.github.io/) ([email protected])

|

| 19 |

+

|

| 20 |

+

Please refer to the [legacy citation](https://scholar.google.com/citations?view_op=view_citation&hl=en&user=R1mNI8QAAAAJ&citation_for_view=R1mNI8QAAAAJ:LkGwnXOMwfcC) of S3PRL and the timeline below, which justify our initiative on this project. This information is used to protect us from half-truths. We encourage to cite the individual papers most related to the function you are using to give fair credit to the developer of the function. You can find the names in the [Change Log](#change-log). Finally, we would like to thank our advisor, [Prof. Hung-yi Lee](https://speech.ee.ntu.edu.tw/~hylee/index.php), for his advice. The project would be impossible without his support.

|

| 21 |

+

|

| 22 |

+

If you have any question (e.g., about who came up with / developed which ideas / functions or how the project started), feel free to engage in an open and responsible conversation on the GitHub issue page, and we'll be happy to help!

|

| 23 |

+

|

| 24 |

+

## Contribution (pull request)

|

| 25 |

+

|

| 26 |

+

**Guideline**

|

| 27 |

+

|

| 28 |

+

- Starting in 2024, we will only accept new contributions in the form of new upstream models, so we can save bandwidth for developing new techniques (which will not be in S3PRL.)

|

| 29 |

+

- S3PRL has transitioned into pure maintenance mode, ensuring the long-term maintenance of all existing functions.

|

| 30 |

+

- Reporting bugs or the PR fixing the bugs is always welcome! Thanks!

|

| 31 |

+

|

| 32 |

+

**Tutorials**

|

| 33 |

+

|

| 34 |

+

- [General tutorial](https://s3prl.github.io/s3prl/contribute/general.html)

|

| 35 |

+

- [Tutorial for adding new upstream models](https://s3prl.github.io/s3prl/contribute/upstream.html)

|

| 36 |

+

|

| 37 |

+

## Environment compatibilities [](https://github.com/s3prl/s3prl/actions/workflows/ci.yml)

|

| 38 |

+

|

| 39 |

+

We support the following environments. The test cases are ran with **[tox](./tox.ini)** locally and on **[github action](.github/workflows/ci.yml)**:

|

| 40 |

+

|

| 41 |

+

| Env | versions |

|

| 42 |

+

| --- | --- |

|

| 43 |

+

| os | `ubuntu-18.04`, `ubuntu-20.04` |

|

| 44 |

+

| python | `3.7`, `3.8`, `3.9`, `3.10` |

|

| 45 |

+

| pytorch | `1.8.1`, `1.9.1`, `1.10.2`, `1.11.0`, `1.12.1` , `1.13.1` , `2.0.1` , `2.1.0` |

|

| 46 |

+

|

| 47 |

+

## Star History

|

| 48 |

+

|

| 49 |

+

[](https://star-history.com/#s3prl/s3prl&Date)

|

| 50 |

+

|

| 51 |

+

## Change Log

|

| 52 |

+

|

| 53 |

+

> We only list the major contributors here for conciseness. However, we are deeply grateful for all the contributions. Please see the [Contributors](https://github.com/s3prl/s3prl/graphs/contributors) page for the full list.

|

| 54 |

+

|

| 55 |

+

* *Sep 2024*: Support MS-HuBERT (see [MS-HuBERT](https://arxiv.org/pdf/2406.05661))

|

| 56 |

+

* *Dec 2023*: Support Multi-resolution HuBERT (MR-HuBERT, see [Multiresolution HuBERT](https://arxiv.org/pdf/2310.02720.pdf))

|

| 57 |

+

* *Oct 2023*: Support ESPnet pre-trained upstream models (see [ESPnet HuBERT](https://arxiv.org/abs/2306.06672) and [WavLabLM](https://arxiv.org/abs/2309.15317))

|

| 58 |

+

* *Sep 2022*: In [JSALT 2022](https://jsalt-2022-ssl.github.io/member), We upgrade the codebase to support testing, documentation and a new [S3PRL PyPI package](https://pypi.org/project/s3prl/) for easy installation and usage for upstream models. See our [online doc](https://s3prl.github.io/s3prl/) for more information. The package is now used by many [open-source projects](https://github.com/s3prl/s3prl/network/dependents), including [ESPNet](https://github.com/espnet/espnet/blob/master/espnet2/asr/frontend/s3prl.py). Contributors: [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)***, [Andy T. Liu](https://andi611.github.io/) ***(NTU)***, [Heng-Jui Chang](https://people.csail.mit.edu/hengjui/) ***(MIT)***, [Haibin Wu](https://hbwu-ntu.github.io/) ***(NTU)*** and [Xuankai Chang](https://www.xuankaic.com/) ***(CMU)***.

|

| 59 |

+

* *Mar 2022*: Introduce [**SUPERB-SG**](https://arxiv.org/abs/2203.06849), see [Speech Translation](./s3prl/downstream/speech_translation) by [Hsiang-Sheng Tsai](https://github.com/bearhsiang) ***(NTU)***, [Out-of-domain ASR](./s3prl/downstream/ctc/) by [Heng-Jui Chang](https://people.csail.mit.edu/hengjui/) ***(NTU)***, [Voice Conversion](./s3prl/downstream/a2o-vc-vcc2020/) by [Wen-Chin Huang](https://unilight.github.io/) ***(Nagoya)***, [Speech Separation](./s3prl/downstream/separation_stft/) and [Speech Enhancement](./s3prl/downstream/enhancement_stft/) by [Zili Huang](https://scholar.google.com/citations?user=iQ-S0fQAAAAJ&hl=en) ***(JHU)*** for more info.

|

| 60 |

+

* *Mar 2022*: Introduce [**SSL for SE/SS**](https://arxiv.org/abs/2203.07960) by [Zili Huang](https://scholar.google.com/citations?user=iQ-S0fQAAAAJ&hl=en) ***(JHU)***. See [SE1](https://github.com/s3prl/s3prl/tree/main/s3prl/downstream/enhancement_stft) and [SS1](https://github.com/s3prl/s3prl/tree/main/s3prl/downstream/separation_stft) folders for more details. Note that the improved performances can be achieved by the later introduced [SE2](https://github.com/s3prl/s3prl/tree/main/s3prl/downstream/enhancement_stft2) and [SS2](https://github.com/s3prl/s3prl/tree/main/s3prl/downstream/separation_stft2). However, for aligning with [SUPERB-SG](https://arxiv.org/abs/2203.06849) benchmarking, please use the version 1.

|

| 61 |

+

* *Nov 2021*: Introduce [**S3PRL-VC**](https://arxiv.org/abs/2110.06280) by [Wen-Chin Huang](https://unilight.github.io/) ***(Nagoya)***, see [Any-to-one](https://github.com/s3prl/s3prl/tree/master/s3prl/downstream/a2o-vc-vcc2020) for more info. We highly recommend to consider the [newly released official repo of S3PRL-VC](https://github.com/unilight/s3prl-vc) which is developed and actively maintained by [Wen-Chin Huang](https://unilight.github.io/). The standalone repo contains much more recepies for the VC experiments. In S3PRL we only include the Any-to-one recipe for reproducing the SUPERB results.

|

| 62 |

+

* *Oct 2021*: Support [**DistilHuBERT**](https://arxiv.org/abs/2110.01900) by [Heng-Jui Chang](https://people.csail.mit.edu/hengjui/) ***(NTU)***, see [docs](./s3prl/upstream/distiller/README.md) for more info.

|

| 63 |

+

* *Sep 2021:* We host a *challenge* in [*AAAI workshop: The 2nd Self-supervised Learning for Audio and Speech Processing*](https://aaai-sas-2022.github.io/)! See [**SUPERB official site**](https://superbbenchmark.org/) for the challenge details and the [**SUPERB documentation**](./s3prl/downstream/docs/superb.md) in this toolkit!

|

| 64 |

+

* *Aug 2021:* [Andy T. Liu](https://andi611.github.io/) ***(NTU)*** and [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)*** introduces the S3PRL toolkit in [MLSS 2021](https://ai.ntu.edu.tw/%e7%b7%9a%e4%b8%8a%e5%ad%b8%e7%bf%92-2/mlss-2021/), you can also **[watch it on Youtube](https://youtu.be/PkMFnS6cjAc)**!

|

| 65 |

+

* *Aug 2021:* [**TERA**](https://ieeexplore.ieee.org/document/9478264) by [Andy T. Liu](https://andi611.github.io/) ***(NTU)*** is accepted to TASLP!

|

| 66 |

+

* *July 2021:* We are now working on packaging s3prl and reorganizing the file structure in **v0.3**. Please consider using the stable **v0.2.0** for now. We will test and release **v0.3** before August.

|

| 67 |

+

* *June 2021:* Support [**SUPERB:** **S**peech processing **U**niversal **PER**formance **B**enchmark](https://arxiv.org/abs/2105.01051), submitted to Interspeech 2021. Use the tag **superb-interspeech2021** or **v0.2.0**. Contributors: [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)***, [Pohan Chi](https://scholar.google.com/citations?user=SiyicoEAAAAJ&hl=zh-TW) ***(NTU)***, [Yist Lin](https://scholar.google.com/citations?user=0lrZq9MAAAAJ&hl=en) ***(NTU)***, [Yung-Sung Chuang](https://scholar.google.com/citations?user=3ar1DOwAAAAJ&hl=zh-TW) ***(NTU)***, [Jiatong Shi](https://scholar.google.com/citations?user=FEDNbgkAAAAJ&hl=en) ***(CMU)***, [Xuankai Chang](https://www.xuankaic.com/) ***(CMU)***, [Wei-Cheng Tseng](https://scholar.google.com.tw/citations?user=-d6aNP0AAAAJ&hl=zh-TW) ***(NTU)***, Tzu-Hsien Huang ***(NTU)*** and [Kushal Lakhotia](https://scholar.google.com/citations?user=w9W6zXUAAAAJ&hl=en) ***(Meta)***.

|

| 68 |

+

* *June 2021:* Support extracting multiple hidden states for all the SSL pretrained models by [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)***.

|

| 69 |

+

* *Jan 2021:* Readme updated with detailed instructions on how to use our latest version!

|

| 70 |

+

* *Dec 2020:* We are migrating to a newer version for a more general, flexible, and scalable code. See the introduction below for more information! The legacy version can be accessed the tag **v0.1.0**.

|

| 71 |

+

* *Oct 2020:* [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)*** and [Andy T. Liu](https://andi611.github.io/) ***(NTU)*** added varioius classic upstream models, including PASE+, APC, VQ-APC, NPC, wav2vec, vq-wav2vec ...etc.

|

| 72 |

+

* *Oct 2019:* The birth of S3PRL! The repository was created for the [**Mockingjay**](https://arxiv.org/abs/1910.12638) development. [Andy T. Liu](https://andi611.github.io/) ***(NTU)***, [Shu-wen Yang](https://leo19941227.github.io/) ***(NTU)*** and [Pohan Chi](https://scholar.google.com/citations?user=SiyicoEAAAAJ&hl=zh-TW) ***(NTU)*** implemented the pre-training scripts and several simple downstream evaluation tasks. This work was the very start of the S3PRL project which established lots of foundamental modules and coding styles. Feel free to checkout to the old commits to explore our [legacy codebase](https://github.com/s3prl/s3prl/tree/6a53ee92bffeaa75fc2fb56071050bcf71e93785)!

|

| 73 |

+

|

| 74 |

+

****

|

| 75 |

+

|

| 76 |

+

## Introduction and Usages

|

| 77 |

+

|

| 78 |

+

This is an open source toolkit called **s3prl**, which stands for **S**elf-**S**upervised **S**peech **P**re-training and **R**epresentation **L**earning.

|

| 79 |

+

Self-supervised speech pre-trained models are called **upstream** in this toolkit, and are utilized in various **downstream** tasks.

|

| 80 |

+

|

| 81 |

+

The toolkit has **three major usages**:

|

| 82 |

+

|

| 83 |

+

### Pretrain

|

| 84 |

+

|

| 85 |

+

- Pretrain upstream models, including Mockingjay, Audio ALBERT and TERA.

|

| 86 |

+

- Document: [**pretrain/README.md**](./s3prl/pretrain/README.md)

|

| 87 |

+

|

| 88 |

+

### Upstream

|

| 89 |

+

|

| 90 |

+

- Easily load most of the existing upstream models with pretrained weights in a unified I/O interface.

|

| 91 |

+

- Pretrained models are registered through **torch.hub**, which means you can use these models in your own project by one-line plug-and-play without depending on this toolkit's coding style.

|

| 92 |

+

- Document: [**upstream/README.md**](./s3prl/upstream/README.md)

|

| 93 |

+

|

| 94 |

+

### Downstream

|

| 95 |

+

|

| 96 |

+

- Utilize upstream models in lots of downstream tasks

|

| 97 |

+

- Benchmark upstream models with [**SUPERB Benchmark**](./s3prl/downstream/docs/superb.md)

|

| 98 |

+

- Document: [**downstream/README.md**](./s3prl/downstream/README.md)

|

| 99 |

+

|

| 100 |

+

---

|

| 101 |

+

|

| 102 |

+

Here is a high-level illustration of how S3PRL might help you. We support to leverage numerous SSL representations on numerous speech processing tasks in our [GitHub codebase](https://github.com/s3prl/s3prl):

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

---

|

| 107 |

+

|

| 108 |

+

We also modularize all the SSL models into a standalone [PyPi package](https://pypi.org/project/s3prl/) so that you can easily install it and use it without depending on our entire codebase. The following shows a simple example and you can find more details in our [documentation](https://s3prl.github.io/s3prl/).

|

| 109 |

+

|

| 110 |

+

1. Install the S3PRL package:

|

| 111 |

+

|

| 112 |

+

```sh

|

| 113 |

+

pip install s3prl

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

+

2. Use it to extract representations for your own audio:

|

| 117 |

+

|

| 118 |

+

```python

|

| 119 |

+

import torch

|

| 120 |

+

from s3prl.nn import S3PRLUpstream

|

| 121 |

+

|

| 122 |

+

model = S3PRLUpstream("hubert")

|

| 123 |

+

model.eval()

|

| 124 |

+

|

| 125 |

+

with torch.no_grad():

|

| 126 |

+

wavs = torch.randn(2, 16000 * 2)

|

| 127 |

+

wavs_len = torch.LongTensor([16000 * 1, 16000 * 2])

|

| 128 |

+

all_hs, all_hs_len = model(wavs, wavs_len)

|

| 129 |

+

|

| 130 |

+

for hs, hs_len in zip(all_hs, all_hs_len):

|

| 131 |

+

assert isinstance(hs, torch.FloatTensor)

|

| 132 |

+

assert isinstance(hs_len, torch.LongTensor)

|

| 133 |

+

|

| 134 |

+

batch_size, max_seq_len, hidden_size = hs.shape

|

| 135 |

+

assert hs_len.dim() == 1

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

---

|

| 139 |

+

|

| 140 |

+

With this modularization, we have achieved close integration with the general speech processing toolkit [ESPNet](https://github.com/espnet/espnet), enabling the use of SSL models for a broader range of speech processing tasks and corpora to achieve state-of-the-art (SOTA) results (kudos to the [ESPNet Team](https://www.wavlab.org/open_source)):

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

You can start the journey of SSL with the following entry points:

|

| 145 |

+

|

| 146 |

+

- S3PRL: [A simple SUPERB downstream task](https://github.com/s3prl/s3prl/blob/main/s3prl/downstream/docs/superb.md#pr-phoneme-recognition)

|

| 147 |

+

- ESPNet: [Levearging S3PRL for ASR](https://github.com/espnet/espnet/tree/master/egs2/librispeech/asr1#self-supervised-learning-features-hubert_large_ll60k-conformer-utt_mvn-with-transformer-lm)

|

| 148 |

+

|

| 149 |

+

---

|

| 150 |

+

|

| 151 |

+

Feel free to use or modify our toolkit in your research. Here is a [list of papers using our toolkit](#used-by). Any question, bug report or improvement suggestion is welcome through [opening up a new issue](https://github.com/s3prl/s3prl/issues).

|

| 152 |

+

|

| 153 |

+

If you find this toolkit helpful to your research, please do consider citing [our papers](#citation), thanks!

|

| 154 |

+

|

| 155 |

+

## Installation

|

| 156 |

+

|

| 157 |

+

1. **Python** >= 3.6

|

| 158 |

+

2. Install **sox** on your OS

|

| 159 |

+

3. Install s3prl: [Read doc](https://s3prl.github.io/s3prl/tutorial/installation.html#) or `pip install -e ".[all]"`

|

| 160 |

+

4. (Optional) Some upstream models require special dependencies. If you encounter error with a specific upstream model, you can look into the `README.md` under each `upstream` folder. E.g., `upstream/pase/README.md`=

|

| 161 |

+

|

| 162 |

+

## Reference Repositories

|

| 163 |

+

|

| 164 |

+

* [Pytorch](https://github.com/pytorch/pytorch), Pytorch.

|

| 165 |

+

* [Audio](https://github.com/pytorch/audio), Pytorch.

|

| 166 |

+

* [Kaldi](https://github.com/kaldi-asr/kaldi), Kaldi-ASR.

|

| 167 |

+

* [Transformers](https://github.com/huggingface/transformers), Hugging Face.

|

| 168 |

+

* [PyTorch-Kaldi](https://github.com/mravanelli/pytorch-kaldi), Mirco Ravanelli.

|

| 169 |

+

* [fairseq](https://github.com/pytorch/fairseq), Facebook AI Research.

|

| 170 |

+

* [CPC](https://github.com/facebookresearch/CPC_audio), Facebook AI Research.

|

| 171 |

+

* [APC](https://github.com/iamyuanchung/Autoregressive-Predictive-Coding), Yu-An Chung.

|

| 172 |

+

* [VQ-APC](https://github.com/s3prl/VQ-APC), Yu-An Chung.

|

| 173 |

+

* [NPC](https://github.com/Alexander-H-Liu/NPC), Alexander-H-Liu.

|

| 174 |

+

* [End-to-end-ASR-Pytorch](https://github.com/Alexander-H-Liu/End-to-end-ASR-Pytorch), Alexander-H-Liu

|

| 175 |

+

* [Mockingjay](https://github.com/andi611/Mockingjay-Speech-Representation), Andy T. Liu.

|

| 176 |

+

* [ESPnet](https://github.com/espnet/espnet), Shinji Watanabe

|

| 177 |

+

* [speech-representations](https://github.com/awslabs/speech-representations), aws lab

|

| 178 |

+

* [PASE](https://github.com/santi-pdp/pase), Santiago Pascual and Mirco Ravanelli

|

| 179 |

+

* [LibriMix](https://github.com/JorisCos/LibriMix), Joris Cosentino and Manuel Pariente

|

| 180 |

+

|

| 181 |

+

## License

|

| 182 |

+

|

| 183 |

+

The majority of S3PRL Toolkit is licensed under the Apache License version 2.0, however all the files authored by Facebook, Inc. (which have explicit copyright statement on the top) are licensed under CC-BY-NC.

|

| 184 |

+

|

| 185 |

+

## Used by

|

| 186 |

+

<details><summary>List of papers that used our toolkit (Feel free to add your own paper by making a pull request)</summary><p>

|

| 187 |

+

|

| 188 |

+

### Self-Supervised Pretraining

|

| 189 |

+

|

| 190 |

+

+ [Mockingjay: Unsupervised Speech Representation Learning with Deep Bidirectional Transformer Encoders (Liu et al., 2020)](https://arxiv.org/abs/1910.12638)

|

| 191 |

+

```

|

| 192 |

+

@article{mockingjay,

|

| 193 |

+

title={Mockingjay: Unsupervised Speech Representation Learning with Deep Bidirectional Transformer Encoders},

|

| 194 |

+

ISBN={9781509066315},

|

| 195 |

+

url={http://dx.doi.org/10.1109/ICASSP40776.2020.9054458},

|

| 196 |

+

DOI={10.1109/icassp40776.2020.9054458},

|

| 197 |

+

journal={ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

|

| 198 |

+

publisher={IEEE},

|

| 199 |

+

author={Liu, Andy T. and Yang, Shu-wen and Chi, Po-Han and Hsu, Po-chun and Lee, Hung-yi},

|

| 200 |

+

year={2020},

|

| 201 |

+

month={May}

|

| 202 |

+

}

|

| 203 |

+

```

|

| 204 |

+

+ [TERA: Self-Supervised Learning of Transformer Encoder Representation for Speech (Liu et al., 2020)](https://arxiv.org/abs/2007.06028)

|

| 205 |

+

```

|

| 206 |

+

@misc{tera,

|

| 207 |

+

title={TERA: Self-Supervised Learning of Transformer Encoder Representation for Speech},

|

| 208 |

+

author={Andy T. Liu and Shang-Wen Li and Hung-yi Lee},

|

| 209 |

+

year={2020},

|

| 210 |

+

eprint={2007.06028},

|

| 211 |

+

archivePrefix={arXiv},

|

| 212 |

+

primaryClass={eess.AS}

|

| 213 |

+

}

|

| 214 |

+

```

|

| 215 |

+

+ [Audio ALBERT: A Lite BERT for Self-supervised Learning of Audio Representation (Chi et al., 2020)](https://arxiv.org/abs/2005.08575)

|

| 216 |

+

```

|

| 217 |

+

@inproceedings{audio_albert,

|

| 218 |

+

title={Audio ALBERT: A Lite BERT for Self-supervised Learning of Audio Representation},

|

| 219 |

+

author={Po-Han Chi and Pei-Hung Chung and Tsung-Han Wu and Chun-Cheng Hsieh and Shang-Wen Li and Hung-yi Lee},

|

| 220 |

+

year={2020},

|

| 221 |

+

booktitle={SLT 2020},

|

| 222 |

+

}

|

| 223 |

+

```

|

| 224 |

+

|

| 225 |

+

### Explanability

|

| 226 |

+

|

| 227 |

+

+ [Understanding Self-Attention of Self-Supervised Audio Transformers (Yang et al., 2020)](https://arxiv.org/abs/2006.03265)

|

| 228 |

+

```

|

| 229 |

+

@inproceedings{understanding_sat,

|

| 230 |

+

author={Shu-wen Yang and Andy T. Liu and Hung-yi Lee},

|

| 231 |

+

title={{Understanding Self-Attention of Self-Supervised Audio Transformers}},

|

| 232 |

+

year=2020,

|

| 233 |

+

booktitle={Proc. Interspeech 2020},

|

| 234 |

+

pages={3785--3789},

|

| 235 |

+

doi={10.21437/Interspeech.2020-2231},

|

| 236 |

+

url={http://dx.doi.org/10.21437/Interspeech.2020-2231}

|

| 237 |

+

}

|

| 238 |

+

```

|

| 239 |

+

|

| 240 |

+

### Adversarial Attack

|

| 241 |

+

|

| 242 |

+

+ [Defense for Black-box Attacks on Anti-spoofing Models by Self-Supervised Learning (Wu et al., 2020)](https://arxiv.org/abs/2006.03214), code for computing LNSR: [utility/observe_lnsr.py](https://github.com/s3prl/s3prl/blob/master/utility/observe_lnsr.py)

|

| 243 |

+

```

|

| 244 |

+

@inproceedings{mockingjay_defense,

|

| 245 |

+

author={Haibin Wu and Andy T. Liu and Hung-yi Lee},

|

| 246 |

+

title={{Defense for Black-Box Attacks on Anti-Spoofing Models by Self-Supervised Learning}},

|

| 247 |

+

year=2020,

|

| 248 |

+

booktitle={Proc. Interspeech 2020},

|

| 249 |

+

pages={3780--3784},

|

| 250 |

+

doi={10.21437/Interspeech.2020-2026},

|

| 251 |

+

url={http://dx.doi.org/10.21437/Interspeech.2020-2026}

|

| 252 |

+

}

|

| 253 |

+

```

|

| 254 |

+

|

| 255 |

+

+ [Adversarial Defense for Automatic Speaker Verification by Cascaded Self-Supervised Learning Models (Wu et al., 2021)](https://arxiv.org/abs/2102.07047)

|

| 256 |

+

```

|

| 257 |

+

@misc{asv_ssl,

|

| 258 |

+

title={Adversarial defense for automatic speaker verification by cascaded self-supervised learning models},

|

| 259 |

+

author={Haibin Wu and Xu Li and Andy T. Liu and Zhiyong Wu and Helen Meng and Hung-yi Lee},

|

| 260 |

+

year={2021},

|

| 261 |

+

eprint={2102.07047},

|

| 262 |

+

archivePrefix={arXiv},

|

| 263 |

+

primaryClass={eess.AS}

|

| 264 |

+

```

|

| 265 |

+

|

| 266 |

+

### Voice Conversion

|

| 267 |

+

|

| 268 |

+

+ [S2VC: A Framework for Any-to-Any Voice Conversion with Self-Supervised Pretrained Representations (Lin et al., 2021)](https://arxiv.org/abs/2104.02901)

|

| 269 |

+

```

|

| 270 |

+

@misc{s2vc,

|

| 271 |

+

title={S2VC: A Framework for Any-to-Any Voice Conversion with Self-Supervised Pretrained Representations},

|

| 272 |

+

author={Jheng-hao Lin and Yist Y. Lin and Chung-Ming Chien and Hung-yi Lee},

|

| 273 |

+

year={2021},

|

| 274 |

+

eprint={2104.02901},

|

| 275 |

+

archivePrefix={arXiv},

|

| 276 |

+

primaryClass={eess.AS}

|

| 277 |

+

}

|

| 278 |

+

```

|

| 279 |

+

|

| 280 |

+

### Benchmark and Evaluation

|

| 281 |

+

|

| 282 |

+

+ [SUPERB: Speech processing Universal PERformance Benchmark (Yang et al., 2021)](https://arxiv.org/abs/2105.01051)

|

| 283 |

+

```

|

| 284 |

+

@misc{superb,

|

| 285 |

+

title={SUPERB: Speech processing Universal PERformance Benchmark},

|

| 286 |

+

author={Shu-wen Yang and Po-Han Chi and Yung-Sung Chuang and Cheng-I Jeff Lai and Kushal Lakhotia and Yist Y. Lin and Andy T. Liu and Jiatong Shi and Xuankai Chang and Guan-Ting Lin and Tzu-Hsien Huang and Wei-Cheng Tseng and Ko-tik Lee and Da-Rong Liu and Zili Huang and Shuyan Dong and Shang-Wen Li and Shinji Watanabe and Abdelrahman Mohamed and Hung-yi Lee},

|

| 287 |

+

year={2021},

|

| 288 |

+

eprint={2105.01051},

|

| 289 |

+

archivePrefix={arXiv},

|

| 290 |

+

primaryClass={cs.CL}

|

| 291 |

+

}

|

| 292 |

+

```

|

| 293 |

+

|

| 294 |

+

+ [Utilizing Self-supervised Representations for MOS Prediction (Tseng et al., 2021)](https://arxiv.org/abs/2104.03017)

|

| 295 |

+

```

|

| 296 |

+

@misc{ssr_mos,

|

| 297 |

+

title={Utilizing Self-supervised Representations for MOS Prediction},

|

| 298 |

+

author={Wei-Cheng Tseng and Chien-yu Huang and Wei-Tsung Kao and Yist Y. Lin and Hung-yi Lee},

|

| 299 |

+

year={2021},

|

| 300 |

+

eprint={2104.03017},

|

| 301 |

+

archivePrefix={arXiv},

|

| 302 |

+

primaryClass={eess.AS}

|

| 303 |

+

}

|

| 304 |

+

```

|

| 305 |

+

}

|

| 306 |

+

|

| 307 |

+

</p></details>

|

| 308 |

+

|

| 309 |

+

## Citation

|

| 310 |

+

|

| 311 |

+

If you find this toolkit useful, please consider citing following papers.

|

| 312 |

+

|

| 313 |

+

- If you use our pre-training scripts, or the downstream tasks considered in *TERA* and *Mockingjay*, please consider citing the following:

|

| 314 |

+

```

|

| 315 |

+

@misc{tera,

|

| 316 |

+

title={TERA: Self-Supervised Learning of Transformer Encoder Representation for Speech},

|

| 317 |

+

author={Andy T. Liu and Shang-Wen Li and Hung-yi Lee},

|

| 318 |

+

year={2020},

|

| 319 |

+

eprint={2007.06028},

|

| 320 |

+

archivePrefix={arXiv},

|

| 321 |

+

primaryClass={eess.AS}

|

| 322 |

+

}

|

| 323 |

+

```

|

| 324 |

+

```

|

| 325 |

+

@article{mockingjay,

|

| 326 |

+

title={Mockingjay: Unsupervised Speech Representation Learning with Deep Bidirectional Transformer Encoders},

|

| 327 |

+

ISBN={9781509066315},

|

| 328 |

+

url={http://dx.doi.org/10.1109/ICASSP40776.2020.9054458},

|

| 329 |

+

DOI={10.1109/icassp40776.2020.9054458},

|

| 330 |

+

journal={ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

|

| 331 |

+

publisher={IEEE},

|

| 332 |

+

author={Liu, Andy T. and Yang, Shu-wen and Chi, Po-Han and Hsu, Po-chun and Lee, Hung-yi},

|

| 333 |

+

year={2020},

|

| 334 |

+

month={May}

|

| 335 |

+

}

|

| 336 |

+

```

|

| 337 |

+

|

| 338 |

+

- If you use our organized upstream interface and features, or the *SUPERB* downstream benchmark, please consider citing the following:

|

| 339 |

+

```

|

| 340 |

+

@article{yang2024large,

|

| 341 |

+

title={A Large-Scale Evaluation of Speech Foundation Models},

|

| 342 |

+

author={Yang, Shu-wen and Chang, Heng-Jui and Huang, Zili and Liu, Andy T and Lai, Cheng-I and Wu, Haibin and Shi, Jiatong and Chang, Xuankai and Tsai, Hsiang-Sheng and Huang, Wen-Chin and others},

|

| 343 |

+

journal={IEEE/ACM Transactions on Audio, Speech, and Language Processing},

|

| 344 |

+

year={2024},

|

| 345 |

+

publisher={IEEE}

|

| 346 |

+

}

|

| 347 |

+

```

|

| 348 |

+

```

|

| 349 |

+

@inproceedings{yang21c_interspeech,

|

| 350 |

+

author={Shu-wen Yang and Po-Han Chi and Yung-Sung Chuang and Cheng-I Jeff Lai and Kushal Lakhotia and Yist Y. Lin and Andy T. Liu and Jiatong Shi and Xuankai Chang and Guan-Ting Lin and Tzu-Hsien Huang and Wei-Cheng Tseng and Ko-tik Lee and Da-Rong Liu and Zili Huang and Shuyan Dong and Shang-Wen Li and Shinji Watanabe and Abdelrahman Mohamed and Hung-yi Lee},

|

| 351 |

+

title={{SUPERB: Speech Processing Universal PERformance Benchmark}},

|

| 352 |

+

year=2021,

|

| 353 |

+

booktitle={Proc. Interspeech 2021},

|

| 354 |

+

pages={1194--1198},

|

| 355 |

+

doi={10.21437/Interspeech.2021-1775}

|

| 356 |

+

}

|

| 357 |

+

```

|

s3prl_s3prl_main/__pycache__/hubconf.cpython-310.pyc

ADDED

|

Binary file (248 Bytes). View file

|

|

|

s3prl_s3prl_main/__pycache__/hubconf.cpython-39.pyc

ADDED

|

Binary file (244 Bytes). View file

|

|

|

s3prl_s3prl_main/ci/format.py

ADDED

|

@@ -0,0 +1,84 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

|

| 3 |

+

import argparse

|

| 4 |

+

from pathlib import Path

|

| 5 |

+