Added model files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- CODE_OF_CONDUCT.md +9 -0

- LICENSE +21 -0

- README.md +567 -3

- SECURITY.md +41 -0

- SUPPORT.md +25 -0

- added_tokens.json +12 -0

- config.json +221 -0

- configuration_phi4mm.py +235 -0

- figures/audio_understand.png +0 -0

- figures/multi_image.png +0 -0

- figures/speech_qa.png +0 -0

- figures/speech_recog_by_lang.png +0 -0

- figures/speech_recognition.png +0 -0

- figures/speech_summarization.png +0 -0

- figures/speech_translate.png +0 -0

- figures/speech_translate_2.png +0 -0

- figures/vision_radar.png +0 -0

- generation_config.json +11 -0

- merges.txt +0 -0

- model-00001-of-00003.safetensors +3 -0

- model-00002-of-00003.safetensors +3 -0

- model-00003-of-00003.safetensors +3 -0

- model.safetensors.index.json +0 -0

- modeling_phi4mm.py +0 -0

- preprocessor_config.json +14 -0

- processing_phi4mm.py +733 -0

- processor_config.json +6 -0

- sample_finetune_speech.py +478 -0

- sample_finetune_vision.py +556 -0

- sample_inference_phi4mm.py +243 -0

- special_tokens_map.json +24 -0

- speech-lora/adapter_config.json +23 -0

- speech-lora/adapter_model.safetensors +3 -0

- speech-lora/added_tokens.json +12 -0

- speech-lora/special_tokens_map.json +24 -0

- speech-lora/tokenizer.json +3 -0

- speech-lora/tokenizer_config.json +125 -0

- speech-lora/vocab.json +0 -0

- speech_conformer_encoder.py +0 -0

- tokenizer.json +3 -0

- tokenizer_config.json +125 -0

- vision-lora/adapter_config.json +23 -0

- vision-lora/adapter_model.safetensors +3 -0

- vision-lora/added_tokens.json +12 -0

- vision-lora/special_tokens_map.json +24 -0

- vision-lora/tokenizer.json +3 -0

- vision-lora/tokenizer_config.json +125 -0

- vision-lora/vocab.json +0 -0

- vision_siglip_navit.py +1717 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

CODE_OF_CONDUCT.md

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

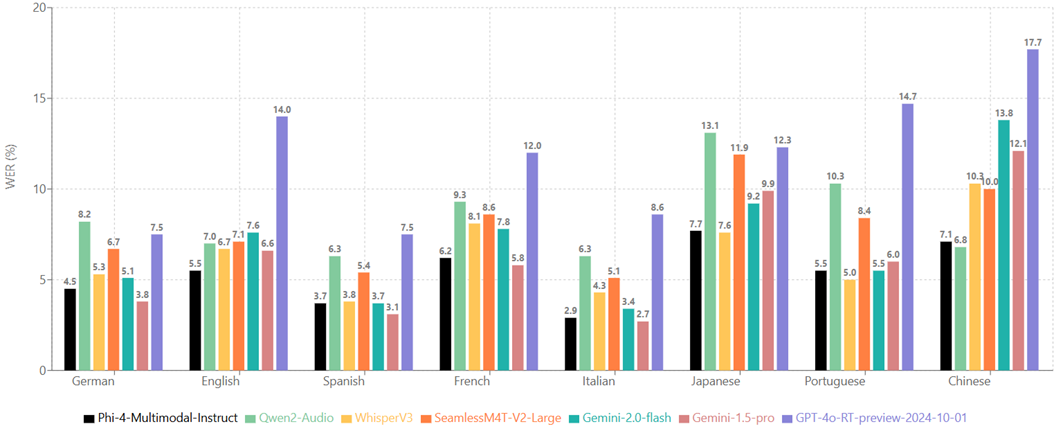

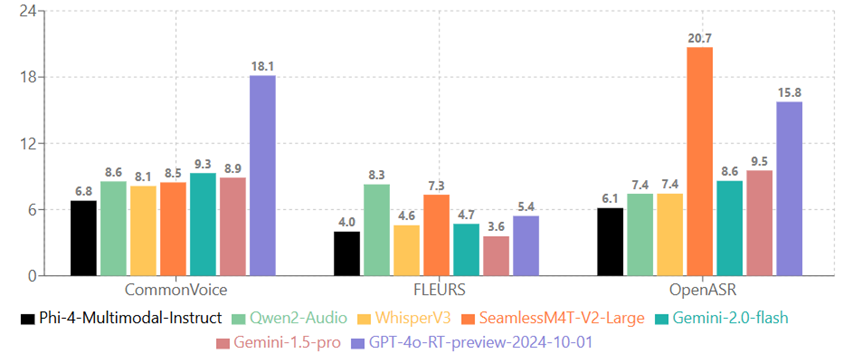

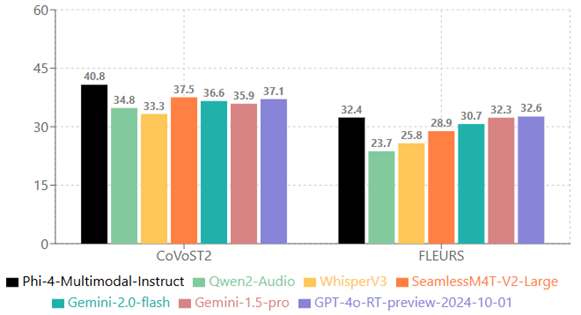

|

|

|

|

|

|

|

|

|

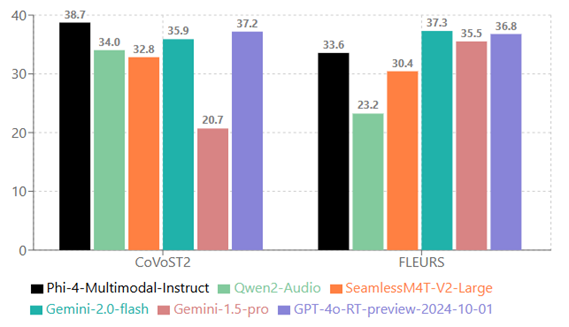

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Microsoft Open Source Code of Conduct

|

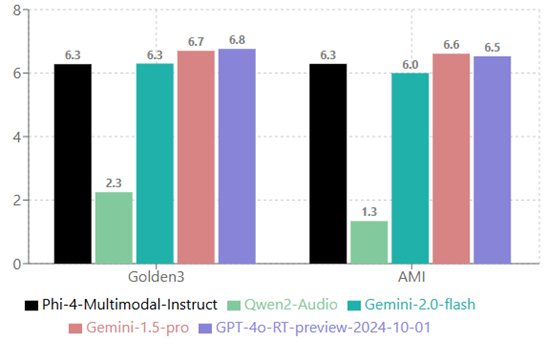

| 2 |

+

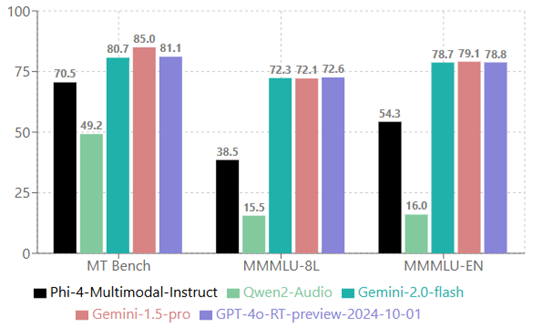

|

| 3 |

+

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

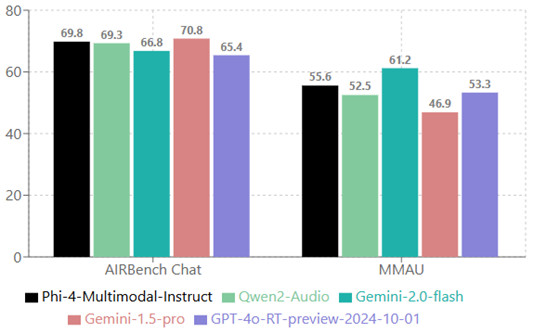

|

| 4 |

+

|

| 5 |

+

Resources:

|

| 6 |

+

|

| 7 |

+

- [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)

|

| 8 |

+

- [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)

|

| 9 |

+

- Contact [[email protected]](mailto:[email protected]) with questions or concerns

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Microsoft Corporation.

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE

|

README.md

CHANGED

|

@@ -1,3 +1,567 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: mit

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: mit

|

| 3 |

+

license_link: https://huggingface.co/microsoft/Phi-4-multimodal-instruct/resolve/main/LICENSE

|

| 4 |

+

language:

|

| 5 |

+

- multilingual

|

| 6 |

+

tags:

|

| 7 |

+

- nlp

|

| 8 |

+

- code

|

| 9 |

+

- audio

|

| 10 |

+

- automatic-speech-recognition

|

| 11 |

+

- speech-summarization

|

| 12 |

+

- speech-translation

|

| 13 |

+

- visual-question-answering

|

| 14 |

+

- phi-4-multimodal

|

| 15 |

+

- phi

|

| 16 |

+

- phi-4-mini

|

| 17 |

+

widget:

|

| 18 |

+

- example_title: Librispeech sample 1

|

| 19 |

+

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

|

| 20 |

+

- example_title: Librispeech sample 2

|

| 21 |

+

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

|

| 22 |

+

- messages:

|

| 23 |

+

- role: user

|

| 24 |

+

content: Can you provide ways to eat combinations of bananas and dragonfruits?

|

| 25 |

+

library_name: transformers

|

| 26 |

+

---

|

| 27 |

+

|

| 28 |

+

## Model Summary

|

| 29 |

+

|

| 30 |

+

Phi-4-multimodal-instruct is a lightweight open multimodal foundation

|

| 31 |

+

model that leverages the language, vision, and speech research

|

| 32 |

+

and datasets used for Phi-3.5 and 4.0 models. The model processes text,

|

| 33 |

+

image, and audio inputs, generating text outputs, and comes with

|

| 34 |

+

128K token context length. The model underwent an enhancement process,

|

| 35 |

+

incorporating both supervised fine-tuning, direct preference

|

| 36 |

+

optimization and RLHF (Reinforcement Learning from Human Feedback)

|

| 37 |

+

to support precise instruction adherence and safety measures.

|

| 38 |

+

The languages that each modal supports are the following:

|

| 39 |

+

- Text: Arabic, Chinese, Czech, Danish, Dutch, English, Finnish,

|

| 40 |

+

French, German, Hebrew, Hungarian, Italian, Japanese, Korean, Norwegian,

|

| 41 |

+

Polish, Portuguese, Russian, Spanish, Swedish, Thai, Turkish, Ukrainian

|

| 42 |

+

- Vision: English

|

| 43 |

+

- Audio: English, Chinese, German, French, Italian, Japanese, Spanish, Portuguese

|

| 44 |

+

|

| 45 |

+

🏡 [Phi-4-multimodal Portal]() <br>

|

| 46 |

+

📰 [Phi-4-multimodal Microsoft Blog]() <br>

|

| 47 |

+

📖 [Phi-4-multimodal Technical Report]() <br>

|

| 48 |

+

👩🍳 [Phi-4-multimodal Cookbook]() <br>

|

| 49 |

+

🖥️ [Try It](https://aka.ms/try-phi4mm) <br>

|

| 50 |

+

|

| 51 |

+

**Phi-4**: [[multimodal-instruct](https://huggingface.co/microsoft/Phi-3.5-mini-instruct) | [onnx]()]; [[mini-instruct]]();

|

| 52 |

+

|

| 53 |

+

## Intended Uses

|

| 54 |

+

|

| 55 |

+

### Primary Use Cases

|

| 56 |

+

|

| 57 |

+

The model is intended for broad multilingual and multimodal commercial and research use . The model provides uses for general purpose AI systems and applications which require

|

| 58 |

+

|

| 59 |

+

1) Memory/compute constrained environments

|

| 60 |

+

2) Latency bound scenarios

|

| 61 |

+

3) Strong reasoning (especially math and logic)

|

| 62 |

+

4) Function and tool calling

|

| 63 |

+

5) General image understanding

|

| 64 |

+

6) Optical character recognition

|

| 65 |

+

7) Chart and table understanding

|

| 66 |

+

8) Multiple image comparison

|

| 67 |

+

9) Multi-image or video clip summarization

|

| 68 |

+

10) Speech recognition

|

| 69 |

+

11) Speech translation

|

| 70 |

+

12) Speech QA

|

| 71 |

+

13) Speech summarization

|

| 72 |

+

14) Audio understanding

|

| 73 |

+

|

| 74 |

+

The model is designed to accelerate research on language and multimodal models, for use as a building block for generative AI powered features.

|

| 75 |

+

|

| 76 |

+

### Use Case Considerations

|

| 77 |

+

|

| 78 |

+

The model is not specifically designed or evaluated for all downstream purposes. Developers should consider common limitations of language models and multimodal models, as well as performance difference across languages, as they select use cases, and evaluate and mitigate for accuracy, safety, and fairness before using within a specific downstream use case, particularly for high-risk scenarios.

|

| 79 |

+

Developers should be aware of and adhere to applicable laws or regulations (including but not limited to privacy, trade compliance laws, etc.) that are relevant to their use case.

|

| 80 |

+

|

| 81 |

+

***Nothing contained in this Model Card should be interpreted as or deemed a restriction or modification to the license the model is released under.***

|

| 82 |

+

|

| 83 |

+

## Release Notes

|

| 84 |

+

|

| 85 |

+

This release of Phi-4-multimodal-instruct is based on valuable user feedback from the Phi-3 series. Previously, users could use a speech recognition model to talk to the Mini and Vision models. To achieve this, users needed to use a pipeline of two models: one model to transcribe the audio to text, and another model for the language or vision tasks. This pipeline means that the core model was not provided the full breadth of input information – e.g. cannot directly observe multiple speakers, background noises, jointly align speech, vision, language information at the same time on the same representation space.

|

| 86 |

+

With Phi-4-multimodal-instruct, a single new open model has been trained across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. The model employed new architecture, larger vocabulary for efficiency, multilingual, and multimodal support, and better post-training techniques were used for instruction following and function calling, as well as additional data leading to substantial gains on key multimodal capabilities.

|

| 87 |

+

It is anticipated that Phi-4-multimodal-instruct will greatly benefit app developers and various use cases. The enthusiastic support for the Phi-4 series is greatly appreciated. Feedback on Phi-4 is welcomed and crucial to the model's evolution and improvement. Thank you for being part of this journey!

|

| 88 |

+

|

| 89 |

+

## Model Quality

|

| 90 |

+

|

| 91 |

+

To understand the capabilities, Phi-4-multimodal-instruct was compared with a set of models over a variety of benchmarks using an internal benchmark platform (See Appendix A for benchmark methodology). Users can refer to the Phi-4-Mini-Instruct model card for details of language benchmarks. At the high-level overview of the model quality on representative speech and vision benchmarks:

|

| 92 |

+

|

| 93 |

+

### Speech

|

| 94 |

+

|

| 95 |

+

The Phi-4-multimodal-instruct was observed as

|

| 96 |

+

- Having strong automatic speech recognition (ASR) and speech translation (ST) performance, surpassing expert ASR model WhisperV3 and ST models SeamlessM4T-v2-Large.

|

| 97 |

+

- Ranking number 1 on the Huggingface OpenASR leaderboard with word error rate 6.14% in comparison with the current best model 6.5% as of Jan 17, 2025.

|

| 98 |

+

- Being the first open-sourced model that can perform speech summarization, and the performance is close to GPT4o.

|

| 99 |

+

- Having a gap with close models, e.g. Gemini-1.5-Flash and GPT-4o-realtime-preview, on speech QA task. Work is being undertaken to improve this capability in the next iterations.

|

| 100 |

+

|

| 101 |

+

#### Speech Recognition (lower is better)

|

| 102 |

+

|

| 103 |

+

The performance of Phi-4-multimodal-instruct on the aggregated benchmark datasets:

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

The performance of Phi-4-multimodal-instruct on different languages, averaging the WERs of CommonVoice and FLEURS:

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

#### Speech Translation (higher is better)

|

| 111 |

+

|

| 112 |

+

Translating from German, Spanish, French, Italian, Japanese, Portugues, Chinese to English:

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

Translating from English to German, Spanish, French, Italian, Japanese, Portugues, Chinese. Noted that WhiperV3 does not support this capability:

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

#### Speech Summarization (higher is better)

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

#### Speech QA

|

| 126 |

+

|

| 127 |

+

MT bench scores are scaled by 10x to match the score range of MMMLU:

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

#### Audio Uniderstanding

|

| 132 |

+

|

| 133 |

+

AIR bench scores are scaled by 10x to match the score range of MMAU:

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

### Vision

|

| 138 |

+

|

| 139 |

+

#### Vision-Speech tasks

|

| 140 |

+

|

| 141 |

+

Phi-4-multimodal-instruct is capable of processing both image and audio together, the following table shows the model quality when the input query for vision content is synthetic speech on chart/table understanding and document reasoning tasks. Compared to other existing state-of-the-art omni models that can enable audio and visual signal as input, Phi-4-multimodal-instruct achieves much stronger performance on multiple benchmarks.

|

| 142 |

+

|

| 143 |

+

| Benchmarks | Phi-4-multimodal-instruct | InternOmni-7B | Gemini-2.0-Flash-Lite-prv-02-05 | Gemini-2.0-Flash | Gemini-1.5-Pro |

|

| 144 |

+

|-----------------------|--------------------------|---------------|--------------------------------|-----------------|----------------|

|

| 145 |

+

| s_AI2D | **68.9** | 53.9 | 62.0 | **69.4** | 67.7 |

|

| 146 |

+

| s_ChartQA | **69.0** | 56.1 | 35.5 | 51.3 | 46.9 |

|

| 147 |

+

| s_DocVQA | **87.3** | 79.9 | 76.0 | 80.3 | 78.2 |

|

| 148 |

+

| s_InfoVQA | **63.7** | 60.3 | 59.4 | 63.6 | **66.1** |

|

| 149 |

+

| **Average** | **72.2** | **62.6** | **58.2** | **66.2** | **64.7** |

|

| 150 |

+

|

| 151 |

+

### Vision tasks

|

| 152 |

+

To understand the vision capabilities, Phi-4-multimodal-instruct was compared with a set of models over a variety of zero-shot benchmarks using an internal benchmark platform. At the high-level overview of the model quality on representative benchmarks:

|

| 153 |

+

|

| 154 |

+

| Dataset | Phi-4-multimodal-ins | Phi-3.5-vision-ins | Qwen 2.5-VL-3B-ins | Intern VL 2.5-4B | Qwen 2.5-VL-7B-ins | Intern VL 2.5-8B | Gemini 2.0-Flash Lite-preview-0205 | Gemini2.0-Flash | Claude-3.5-Sonnet-2024-10-22 | Gpt-4o-2024-11-20 |

|

| 155 |

+

|----------------------------------|---------------------|-------------------|-------------------|-----------------|-------------------|-----------------|--------------------------------|-----------------|----------------------------|------------------|

|

| 156 |

+

| **Popular aggregated benchmark** | | | | | | | | | | |

|

| 157 |

+

| MMMU | **55.1** | 43.0 | 47.0 | 48.3 | 51.8 | 50.6 | 54.1 | **64.7** | 55.8 | 61.7 |

|

| 158 |

+

| MMBench (dev-en) | **86.7** | 81.9 | 84.3 | 86.8 | 87.8 | 88.2 | 85.0 | **90.0** | 86.7 | 89.0 |

|

| 159 |

+

| MMMU-Pro (std/vision) | **38.5** | 21.8 | 29.9 | 32.4 | 36.9 | 34.4 | 45.1 | **54.4** | 54.3 | 53.0 |

|

| 160 |

+

| **Visual science reasoning** | | | | | | | | | | |

|

| 161 |

+

| ScienceQA Visual (img-test) | **97.5** | 91.3 | 79.4 | 96.2 | 87.7 | **97.3** | 85.0 | 88.3 | 81.2 | 88.2 |

|

| 162 |

+

| **Visual math reasoning** | | | | | | | | | | |

|

| 163 |

+

| MathVista (testmini) | **62.4** | 43.9 | 60.8 | 51.2 | **67.8** | 56.7 | 57.6 | 47.2 | 56.9 | 56.1 |

|

| 164 |

+

| InterGPS | **48.6** | 36.3 | 48.3 | 53.7 | 52.7 | 54.1 | 57.9 | **65.4** | 47.1 | 49.1 |

|

| 165 |

+

| **Chart & table reasoning** | | | | | | | | | | |

|

| 166 |

+

| AI2D | **82.3** | 78.1 | 78.4 | 80.0 | 82.6 | 83.0 | 77.6 | 82.1 | 70.6 | **83.8** |

|

| 167 |

+

| ChartQA | **81.4** | 81.8 | 80.0 | 79.1 | **85.0** | 81.0 | 73.0 | 79.0 | 78.4 | 75.1 |

|

| 168 |

+

| DocVQA | **93.2** | 69.3 | 93.9 | 91.6 | **95.7** | 93.0 | 91.2 | 92.1 | 95.2 | 90.9 |

|

| 169 |

+

| InfoVQA | **72.7** | 36.6 | 77.1 | 72.1 | **82.6** | 77.6 | 73.0 | 77.8 | 74.3 | 71.9 |

|

| 170 |

+

| **Document Intelligence** | | | | | | | | | | |

|

| 171 |

+

| TextVQA (val) | **75.6** | 72.0 | 76.8 | 70.9 | **77.7** | 74.8 | 72.9 | 74.4 | 58.6 | 73.1 |

|

| 172 |

+

| OCR Bench | **84.4** | 63.8 | 82.2 | 71.6 | **87.7** | 74.8 | 75.7 | 81.0 | 77.0 | 77.7 |

|

| 173 |

+

| **Object visual presence verification** | | | | | | | | | | |

|

| 174 |

+

| POPE | **85.6** | 86.1 | 87.9 | 89.4 | 87.5 | **89.1** | 87.5 | 88.0 | 82.6 | 86.5 |

|

| 175 |

+

| **Multi-image perception** | | | | | | | | | | |

|

| 176 |

+

| BLINK | **61.3** | 57.0 | 48.1 | 51.2 | 55.3 | 52.5 | 59.3 | **64.0** | 56.9 | 62.4 |

|

| 177 |

+

| Video MME 16 frames | **55.0** | 50.8 | 56.5 | 57.3 | 58.2 | 58.7 | 58.8 | 65.5 | 60.2 | **68.2** |

|

| 178 |

+

| **Average** | **72.0** | **60.9** | **68.7** | **68.8** | **73.1** | **71.1** | **70.2** | **74.3** | **69.1** | **72.4** |

|

| 179 |

+

|

| 180 |

+

|

| 181 |

+

|

| 182 |

+

#### Visual Perception

|

| 183 |

+

|

| 184 |

+

Below are the comparison results on existing multi-image tasks. On average, Phi-4-multimodal-instruct outperforms competitor models of the same size and competitive with much bigger models on multi-frame capabilities.

|

| 185 |

+

BLINK is an aggregated benchmark with 14 visual tasks that humans can solve very quickly but are still hard for current multimodal LLMs.

|

| 186 |

+

|

| 187 |

+

| Dataset | Phi-4-multimodal-instruct | Qwen2.5-VL-3B-Instruct | InternVL 2.5-4B | Qwen2.5-VL-7B-Instruct | InternVL 2.5-8B | Gemini-2.0-Flash-Lite-prv-02-05 | Gemini-2.0-Flash | Claude-3.5-Sonnet-2024-10-22 | Gpt-4o-2024-11-20 |

|

| 188 |

+

|----------------------------|--------------------------|----------------------|-----------------|----------------------|-----------------|--------------------------------|-----------------|----------------------------|------------------|

|

| 189 |

+

| Art Style | **86.3** | 58.1 | 59.8 | 65.0 | 65.0 | 76.9 | 76.9 | 68.4 | 73.5 |

|

| 190 |

+

| Counting | **60.0** | 67.5 | 60.0 | 66.7 | **71.7** | 45.8 | 69.2 | 60.8 | 65.0 |

|

| 191 |

+

| Forensic Detection | **90.2** | 34.8 | 22.0 | 43.9 | 37.9 | 31.8 | 74.2 | 63.6 | 71.2 |

|

| 192 |

+

| Functional Correspondence | **30.0** | 20.0 | 26.9 | 22.3 | 27.7 | 48.5 | **53.1** | 34.6 | 42.3 |

|

| 193 |

+

| IQ Test | **22.7** | 25.3 | 28.7 | 28.7 | 28.7 | 28.0 | **30.7** | 20.7 | 25.3 |

|

| 194 |

+

| Jigsaw | **68.7** | 52.0 | **71.3** | 69.3 | 53.3 | 62.7 | 69.3 | 61.3 | 68.7 |

|

| 195 |

+

| Multi-View Reasoning | **76.7** | 44.4 | 44.4 | 54.1 | 45.1 | 55.6 | 41.4 | 54.9 | 54.1 |

|

| 196 |

+

| Object Localization | **52.5** | 55.7 | 53.3 | 55.7 | 58.2 | 63.9 | **67.2** | 58.2 | 65.6 |

|

| 197 |

+

| Relative Depth | **69.4** | 68.5 | 68.5 | 80.6 | 76.6 | **81.5** | 72.6 | 66.1 | 73.4 |

|

| 198 |

+

| Relative Reflectance | **26.9** | **38.8** | **38.8** | 32.8 | **38.8** | 33.6 | 34.3 | 38.1 | 38.1 |

|

| 199 |

+

| Semantic Correspondence | **52.5** | 32.4 | 33.8 | 28.8 | 24.5 | **56.1** | 55.4 | 43.9 | 47.5 |

|

| 200 |

+

| Spatial Relation | **72.7** | 80.4 | 86.0 | **88.8** | 86.7 | 74.1 | 79.0 | 74.8 | 83.2 |

|

| 201 |

+

| Visual Correspondence | **67.4** | 28.5 | 39.5 | 50.0 | 44.2 | 84.9 | **91.3** | 72.7 | 82.6 |

|

| 202 |

+

| Visual Similarity | **86.7** | 67.4 | 88.1 | 87.4 | 85.2 | **87.4** | 80.7 | 79.3 | 83.0 |

|

| 203 |

+

| **Overall** | **61.6** | **48.1** | **51.2** | **55.3** | **52.5** | **59.3** | **64.0** | **56.9** | **62.4** |

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

|

| 207 |

+

|

| 208 |

+

## Usage

|

| 209 |

+

|

| 210 |

+

### Requirements

|

| 211 |

+

|

| 212 |

+

Phi-4 family has been integrated in the `4.48.2` version of `transformers`. The current `transformers` version can be verified with: `pip list | grep transformers`.

|

| 213 |

+

|

| 214 |

+

Examples of required packages:

|

| 215 |

+

```

|

| 216 |

+

flash_attn==2.7.4.post1

|

| 217 |

+

torch==2.6.0

|

| 218 |

+

transformers==4.48.2

|

| 219 |

+

accelerate==1.3.0

|

| 220 |

+

soundfile==0.13.1

|

| 221 |

+

pillow==10.3.0

|

| 222 |

+

```

|

| 223 |

+

|

| 224 |

+

Phi-4-multimodal-instruct is also available in [Azure AI Studio]()

|

| 225 |

+

|

| 226 |

+

### Tokenizer

|

| 227 |

+

|

| 228 |

+

Phi-4-multimodal-instruct supports a vocabulary size of up to `200064` tokens. The [tokenizer files](https://huggingface.co/microsoft/Phi-4-multimodal-instruct/blob/main/added_tokens.json) already provide placeholder tokens that can be used for downstream fine-tuning, but they can also be extended up to the model's vocabulary size.

|

| 229 |

+

|

| 230 |

+

### Input Formats

|

| 231 |

+

|

| 232 |

+

Given the nature of the training data, the Phi-4-multimodal-instruct model is best suited for prompts using the chat format as follows:

|

| 233 |

+

|

| 234 |

+

#### Text chat format

|

| 235 |

+

|

| 236 |

+

This format is used for general conversation and instructions:

|

| 237 |

+

|

| 238 |

+

`

|

| 239 |

+

<|system|>You are a helpful assistant.<|end|><|user|>How to explain Internet for a medieval knight?<|end|><|assistant|>

|

| 240 |

+

`

|

| 241 |

+

|

| 242 |

+

#### Tool-enabled function-calling format

|

| 243 |

+

|

| 244 |

+

This format is used when the user wants the model to provide function calls based on

|

| 245 |

+

the given tools. The user should provide the available tools in the system prompt,

|

| 246 |

+

wrapped by <|tool|> and <|/tool|> tokens. The tools should be specified in JSON format,

|

| 247 |

+

using a JSON dump structure. Example:

|

| 248 |

+

|

| 249 |

+

`

|

| 250 |

+

<|system|>You are a helpful assistant with some tools.<|tool|>[{"name": "get_weather_updates", "description": "Fetches weather updates for a given city using the RapidAPI Weather API.", "parameters": {"city": {"description": "The name of the city for which to retrieve weather information.", "type": "str", "default": "London"}}}]<|/tool|><|end|><|user|>What is the weather like in Paris today?<|end|><|assistant|>

|

| 251 |

+

`

|

| 252 |

+

|

| 253 |

+

#### Vision-Language Format

|

| 254 |

+

|

| 255 |

+

This format is used for conversation with image:

|

| 256 |

+

|

| 257 |

+

`

|

| 258 |

+

<|user|><|image_1|>Describe the image in detail.<|end|><|assistant|>

|

| 259 |

+

`

|

| 260 |

+

|

| 261 |

+

For multiple images, the user needs to insert multiple image placeholders in the prompt as below:

|

| 262 |

+

|

| 263 |

+

`

|

| 264 |

+

<|user|><|image_1|><|image_2|><|image_3|>Summarize the content of the images.<|end|><|assistant|>

|

| 265 |

+

`

|

| 266 |

+

|

| 267 |

+

#### Speech-Language Format

|

| 268 |

+

|

| 269 |

+

This format is used for various speech and audio tasks:

|

| 270 |

+

|

| 271 |

+

`

|

| 272 |

+

<|user|><|audio_1|>{task prompt}<|end|><|assistant|>

|

| 273 |

+

`

|

| 274 |

+

|

| 275 |

+

The task prompt can vary for different task.

|

| 276 |

+

Automatic Speech Recognition:

|

| 277 |

+

|

| 278 |

+

`

|

| 279 |

+

<|user|><|audio_1|>Transcribe the audio clip into text.<|end|><|assistant|>

|

| 280 |

+

`

|

| 281 |

+

|

| 282 |

+

Automatic Speech Translation:

|

| 283 |

+

|

| 284 |

+

`

|

| 285 |

+

<|user|><|audio_1|>Translate the audio to {lang}.<|end|><|assistant|>

|

| 286 |

+

`

|

| 287 |

+

|

| 288 |

+

Automatic Speech Translation with chain-of-thoughts:

|

| 289 |

+

|

| 290 |

+

`

|

| 291 |

+

<|user|><|audio_1|>Transcribe the audio to text, and then translate the audio to {lang}. Use <sep> as a separator between the original transcript and the translation.<|end|><|assistant|>

|

| 292 |

+

`

|

| 293 |

+

|

| 294 |

+

Spoken-query Question Answering:

|

| 295 |

+

|

| 296 |

+

`

|

| 297 |

+

<|user|><|audio_1|><|end|><|assistant|>

|

| 298 |

+

`

|

| 299 |

+

|

| 300 |

+

#### Vision-Speech Format

|

| 301 |

+

|

| 302 |

+

This format is used for conversation with image and audio.

|

| 303 |

+

The audio may contain query related to the image:

|

| 304 |

+

|

| 305 |

+

`

|

| 306 |

+

<|user|><|image_1|><|audio_1|><|end|><|assistant|>

|

| 307 |

+

`

|

| 308 |

+

|

| 309 |

+

For multiple images, the user needs to insert multiple image placeholders in the prompt as below:

|

| 310 |

+

|

| 311 |

+

`

|

| 312 |

+

<|user|><|image_1|><|image_2|><|image_3|><|audio_1|><|end|><|assistant|>

|

| 313 |

+

`

|

| 314 |

+

|

| 315 |

+

**Vision**

|

| 316 |

+

- Any common RGB/gray image format (e.g., (".jpg", ".jpeg", ".png", ".ppm", ".bmp", ".pgm", ".tif", ".tiff", ".webp")) can be supported.

|

| 317 |

+

- Resolution depends on the GPU memory size. Higher resolution and more images will produce more tokens, thus using more GPU memory. During training, 64 crops can be supported.

|

| 318 |

+

If it is a square image, the resolution would be around (8*448 by 8*448). For multiple-images, at most 64 frames can be supported, but with more frames as input, the resolution of each frame needs to be reduced to fit in the memory.

|

| 319 |

+

|

| 320 |

+

**Audio**

|

| 321 |

+

- Any audio format that can be loaded by soundfile package should be supported.

|

| 322 |

+

- To keep the satisfactory performance, maximum audio length is suggested to be 40s. For summarization tasks, the maximum audio length is suggested to 30 mins.

|

| 323 |

+

|

| 324 |

+

|

| 325 |

+

### Loading the model locally

|

| 326 |

+

|

| 327 |

+

After obtaining the Phi-4-Mini-MM-Instruct model checkpoints, users can use this sample code for inference.

|

| 328 |

+

|

| 329 |

+

```python

|

| 330 |

+

import requests

|

| 331 |

+

import torch

|

| 332 |

+

import os

|

| 333 |

+

from PIL import Image

|

| 334 |

+

import soundfile

|

| 335 |

+

from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig,pipeline,AutoTokenizer

|

| 336 |

+

|

| 337 |

+

processor = AutoProcessor.from_pretrained(model_path, trust_remote_code=True)

|

| 338 |

+

|

| 339 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 340 |

+

"microsoft/Phi-4-multimodal-instruct",

|

| 341 |

+

device_map="cuda",

|

| 342 |

+

torch_dtype="auto",

|

| 343 |

+

trust_remote_code=True,

|

| 344 |

+

_attn_implementation='flash_attention_2',

|

| 345 |

+

).cuda()

|

| 346 |

+

|

| 347 |

+

generation_config = GenerationConfig.from_pretrained(model_path, 'generation_config.json')

|

| 348 |

+

|

| 349 |

+

user_prompt = '<|user|>'

|

| 350 |

+

assistant_prompt = '<|assistant|>'

|

| 351 |

+

prompt_suffix = '<|end|>'

|

| 352 |

+

|

| 353 |

+

prompt = f'{user_prompt}<|image_1|>What is shown in this image?{prompt_suffix}{assistant_prompt}'

|

| 354 |

+

url = 'https://www.ilankelman.org/stopsigns/australia.jpg'

|

| 355 |

+

print(f'>>> Prompt\n{prompt}')

|

| 356 |

+

image = Image.open(requests.get(url, stream=True).raw)

|

| 357 |

+

inputs = processor(text=prompt, images=image, return_tensors='pt').to('cuda:0')

|

| 358 |

+

generate_ids = model.generate(

|

| 359 |

+

**inputs,

|

| 360 |

+

max_new_tokens=1000,

|

| 361 |

+

generation_config=generation_config,

|

| 362 |

+

)

|

| 363 |

+

generate_ids = generate_ids[:, inputs['input_ids'].shape[1] :]

|

| 364 |

+

response = processor.batch_decode(

|

| 365 |

+

generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 366 |

+

)[0]

|

| 367 |

+

print(f'>>> Response\n{response}')

|

| 368 |

+

|

| 369 |

+

|

| 370 |

+

speech_prompt = "Transcribe the audio to text, and then translate the audio to French. Use <sep> as a separator between the original transcript and the translation."

|

| 371 |

+

prompt = f'{user_prompt}<|audio_1|>{speech_prompt}{prompt_suffix}{assistant_prompt}'

|

| 372 |

+

|

| 373 |

+

print(f'>>> Prompt\n{prompt}')

|

| 374 |

+

audio = soundfile.read('https://voiceage.com/wbsamples/in_mono/Trailer.wav')

|

| 375 |

+

inputs = processor(text=prompt, audios=[audio], return_tensors='pt').to('cuda:0')

|

| 376 |

+

generate_ids = model.generate(

|

| 377 |

+

**inputs,

|

| 378 |

+

max_new_tokens=1000,

|

| 379 |

+

generation_config=generation_config,

|

| 380 |

+

)

|

| 381 |

+

generate_ids = generate_ids[:, inputs['input_ids'].shape[1] :]

|

| 382 |

+

response = processor.batch_decode(

|

| 383 |

+

generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 384 |

+

)[0]

|

| 385 |

+

print(f'>>> Response\n{response}')

|

| 386 |

+

```

|

| 387 |

+

|

| 388 |

+

## Responsible AI Considerations

|

| 389 |

+

|

| 390 |

+

Like other language models, the Phi family of models can potentially behave in ways that are unfair, unreliable, or offensive. Some of the limiting behaviors to be aware of include:

|

| 391 |

+

+ Quality of Service: The Phi models are trained primarily on English language content across text, speech, and visual inputs, with some additional multilingual coverage. Performance may vary significantly across different modalities and languages:

|

| 392 |

+

+ Text: Languages other than English will experience reduced performance, with varying levels of degradation across different non-English languages. English language varieties with less representation in the training data may perform worse than standard American English.

|

| 393 |

+

+ Speech: Speech recognition and processing shows similar language-based performance patterns, with optimal performance for standard American English accents and pronunciations. Other English accents, dialects, and non-English languages may experience lower recognition accuracy and response quality. Background noise, audio quality, and speaking speed can further impact performance.

|

| 394 |

+

+ Vision: Visual processing capabilities may be influenced by cultural and geographical biases in the training data. The model may show reduced performance when analyzing images containing text in non-English languages or visual elements more commonly found in non-Western contexts. Image quality, lighting conditions, and composition can also affect processing accuracy.

|

| 395 |

+

+ Multilingual performance and safety gaps: We believe it is important to make language models more widely available across different languages, but the Phi 4 models still exhibit challenges common across multilingual releases. As with any deployment of LLMs, developers will be better positioned to test for performance or safety gaps for their linguistic and cultural context and customize the model with additional fine-tuning and appropriate safeguards.

|

| 396 |

+

+ Representation of Harms & Perpetuation of Stereotypes: These models can over- or under-represent groups of people, erase representation of some groups, or reinforce demeaning or negative stereotypes. Despite safety post-training, these limitations may still be present due to differing levels of representation of different groups, cultural contexts, or prevalence of examples of negative stereotypes in training data that reflect real-world patterns and societal biases.

|

| 397 |

+

+ Inappropriate or Offensive Content: These models may produce other types of inappropriate or offensive content, which may make it inappropriate to deploy for sensitive contexts without additional mitigations that are specific to the case.

|

| 398 |

+

+ Information Reliability: Language models can generate nonsensical content or fabricate content that might sound reasonable but is inaccurate or outdated.

|

| 399 |

+

+ Limited Scope for Code: The majority of Phi 4 training data is based in Python and uses common packages such as "typing, math, random, collections, datetime, itertools". If the model generates Python scripts that utilize other packages or scripts in other languages, it is strongly recommended that users manually verify all API uses.

|

| 400 |

+

+ Long Conversation: Phi 4 models, like other models, can in some cases generate responses that are repetitive, unhelpful, or inconsistent in very long chat sessions in both English and non-English languages. Developers are encouraged to place appropriate mitigations, like limiting conversation turns to account for the possible conversational drift.

|

| 401 |

+

+ Inference of Sensitive Attributes: The Phi 4 models can sometimes attempt to infer sensitive attributes (such as personality characteristics, country of origin, gender, etc...) from the users’ voices when specifically asked to do so. Phi 4-multimodal-instruct is not designed or intended to be used as a biometric categorization system to categorize individuals based on their biometric data to deduce or infer their race, political opinions, trade union membership, religious or philosophical beliefs, sex life, or sexual orientation. This behavior can be easily and efficiently mitigated at the application level by a system message.

|

| 402 |

+

|

| 403 |

+

Developers should apply responsible AI best practices, including mapping, measuring, and mitigating risks associated with their specific use case and cultural, linguistic context. Phi 4 family of models are general purpose models. As developers plan to deploy these models for specific use cases, they are encouraged to fine-tune the models for their use case and leverage the models as part of broader AI systems with language-specific safeguards in place. Important areas for consideration include:

|

| 404 |

+

|

| 405 |

+

+ Allocation: Models may not be suitable for scenarios that could have consequential impact on legal status or the allocation of resources or life opportunities (ex: housing, employment, credit, etc.) without further assessments and additional debiasing techniques.

|

| 406 |

+

+ High-Risk Scenarios: Developers should assess the suitability of using models in high-risk scenarios where unfair, unreliable or offensive outputs might be extremely costly or lead to harm. This includes providing advice in sensitive or expert domains where accuracy and reliability are critical (ex: legal or health advice). Additional safeguards should be implemented at the application level according to the deployment context.

|

| 407 |

+

+ Misinformation: Models may produce inaccurate information. Developers should follow transparency best practices and inform end-users they are interacting with an AI system. At the application level, developers can build feedback mechanisms and pipelines to ground responses in use-case specific, contextual information, a technique known as Retrieval Augmented Generation (RAG).

|

| 408 |

+

+ Generation of Harmful Content: Developers should assess outputs for their context and use available safety classifiers or custom solutions appropriate for their use case.

|

| 409 |

+

+ Misuse: Other forms of misuse such as fraud, spam, or malware production may be possible, and developers should ensure that their applications do not violate applicable laws and regulations.

|

| 410 |

+

|

| 411 |

+

|

| 412 |

+

## Training

|

| 413 |

+

|

| 414 |

+

### Model

|

| 415 |

+

|

| 416 |

+

+ **Architecture:** Phi-4-multimodal-instruct has 5.6B parameters and is a multimodal transformer model. The model has the pretrained Phi-4-Mini-Instruct as the backbone language model, and the advanced encoders and adapters of vision and speech.<br>

|

| 417 |

+

+ **Inputs:** Text, image, and audio. It is best suited for prompts using the chat format.<br>

|

| 418 |

+

+ **Context length:** 128K tokens<br>

|

| 419 |

+

+ **GPUs:** 512 A100-80G<br>

|

| 420 |

+

+ **Training time:** 28 days<br>

|

| 421 |

+

+ **Training data:** 5T tokens, 2.3M speech hours, and 1.1T image-text tokens<br>

|

| 422 |

+

+ **Outputs:** Generated text in response to the input<br>

|

| 423 |

+

+ **Dates:** Trained between December 2024 and January 2025<br>

|

| 424 |

+

+ **Status:** This is a static model trained on offline datasets with the cutoff date of June 2024 for publicly available data.<br>

|

| 425 |

+

+ **Supported languages:**

|

| 426 |

+

+ Text: Arabic, Chinese, Czech, Danish, Dutch, English, Finnish, French, German, Hebrew, Hungarian, Italian, Japanese, Korean, Norwegian, Polish, Portuguese, Russian, Spanish, Swedish, Thai, Turkish, Ukrainian<br>

|

| 427 |

+

+ Vision: English<br>

|

| 428 |

+

+ Audio: English, Chinese, German, French, Italian, Japanese, Spanish, Portuguese<br>

|

| 429 |

+

+ **Release date:** February 2025<br>

|

| 430 |

+

|

| 431 |

+

### Training Datasets

|

| 432 |

+

|

| 433 |

+

Phi-4-multimodal-instruct's training data includes a wide variety of sources, totaling 5 trillion text tokens, and is a combination of

|

| 434 |

+

1) publicly available documents filtered for quality, selected high-quality educational data, and code

|

| 435 |

+

2) newly created synthetic, “textbook-like” data for the purpose of teaching math, coding, common sense reasoning, general knowledge of the world (e.g., science, daily activities, theory of mind, etc.)

|

| 436 |

+

3) high quality human labeled data in chat format

|

| 437 |

+

4) selected high-quality image-text interleave data

|

| 438 |

+

5) synthetic and publicly available image, multi-image, and video data

|

| 439 |

+

6) anonymized in-house speech-text pair data with strong/weak transcriptions

|

| 440 |

+

7) selected high-quality publicly available and anonymized in-house speech data with task-specific supervisions

|

| 441 |

+

8) selected synthetic speech data

|

| 442 |

+

9) synthetic vision-speech data.

|

| 443 |

+

|

| 444 |

+

Focus was placed on the quality of data that could potentially improve the reasoning ability for the model, and the publicly available documents were filtered to contain a preferred level of knowledge. As an example, the result of a game in premier league on a particular day might be good training data for large foundation models, but such information was removed for the Phi-4-multimodal-instruct to leave more model capacity for reasoning for the model's small size. The data collection process involved sourcing information from publicly available documents, with a focus on filtering out undesirable documents and images. To safeguard privacy, image and text data sources were filtered to remove or scrub potentially personal data from the training data.

|

| 445 |

+

The decontamination process involved normalizing and tokenizing the dataset, then generating and comparing n-grams between the target dataset and benchmark datasets. Samples with matching n-grams above a threshold were flagged as contaminated and removed from the dataset. A detailed contamination report was generated, summarizing the matched text, matching ratio, and filtered results for further analysis.

|

| 446 |

+

|

| 447 |

+

### Fine-tuning

|

| 448 |

+

|

| 449 |

+

A basic example of supervised fine-tuning (SFT) for [speech](https://huggingface.co/microsoft/Phi-4-multimodal-instruct/resolve/main/sample_finetune_speech.py) and [vision](https://huggingface.co/microsoft/Phi-4-multimodal-instruct/resolve/main/sample_finetune_vision.py) is provided respectively.

|

| 450 |

+

|

| 451 |

+

## Safety

|

| 452 |

+

|

| 453 |

+

The Phi-4 family of models has adopted a robust safety post-training approach. This approach leverages a variety of both open-source and in-house generated datasets. The overall technique employed for safety alignment is a combination of SFT (Supervised Fine-Tuning), DPO (Direct Preference Optimization), and RLHF (Reinforcement Learning from Human Feedback) approaches by utilizing human-labeled and synthetic English-language datasets, including publicly available datasets focusing on helpfulness and harmlessness, as well as various questions and answers targeted to multiple safety categories. For non-English languages, existing datasets were extended via machine translation. Speech Safety datasets were generated by running Text Safety datasets through Azure TTS (Text-To-Speech) Service, for both English and non-English languages. Vision (text & images) Safety datasets were created to cover harm categories identified both in public and internal multi-modal RAI datasets.

|

| 454 |

+

|

| 455 |

+

### Safety Evaluation and Red-Teaming

|

| 456 |

+

|

| 457 |

+

Various evaluation techniques including red teaming, adversarial conversation simulations, and multilingual safety evaluation benchmark datasets were leveraged to evaluate Phi-4 models' propensity to produce undesirable outputs across multiple languages and risk categories. Several approaches were used to compensate for the limitations of one approach alone. Findings across the various evaluation methods indicate that safety post-training that was done as detailed in the [Phi 3 Safety Post-Training paper](https://arxiv.org/abs/2407.13833) had a positive impact across multiple languages and risk categories as observed by refusal rates (refusal to output undesirable outputs) and robustness to jailbreak techniques. Details on prior red team evaluations across Phi models can be found in the [Phi 3 Safety Post-Training paper](https://arxiv.org/abs/2407.13833). For this release, the red teaming effort focused on the newest Audio input modality and on the following safety areas: harmful content, self-injury risks, and exploits. The model was found to be more susceptible to providing undesirable outputs when attacked with context manipulation or persuasive techniques. These findings applied to all languages, with the persuasive techniques mostly affecting French and Italian. This highlights the need for industry-wide investment in the development of high-quality safety evaluation datasets across multiple languages, including low resource languages, and risk areas that account for cultural nuances where those languages are spoken.

|

| 458 |

+

|

| 459 |

+

### Vision Safety Evaluation

|

| 460 |

+

|

| 461 |

+

To assess model safety in scenarios involving both text and images, Microsoft's Azure AI Evaluation SDK was utilized. This tool facilitates the simulation of single-turn conversations with the target model by providing prompt text and images designed to incite harmful responses. The target model's responses are subsequently evaluated by a capable model across multiple harm categories, including violence, sexual content, self-harm, hateful and unfair content, with each response scored based on the severity of the harm identified. The evaluation results were compared with those of Phi-3.5-Vision and open-source models of comparable size. In addition, we ran both an internal and the public RTVLM and VLGuard multi-modal (text & vision) RAI benchmarks, once again comparing scores with Phi-3.5-Vision and open-source models of comparable size. However, the model may be susceptible to language-specific attack prompts and cultural context.

|

| 462 |

+

|

| 463 |

+

### Audio Safety Evaluation

|

| 464 |

+

|

| 465 |

+

In addition to extensive red teaming, the Safety of the model was assessed through three distinct evaluations. First, as performed with Text and Vision inputs, Microsoft's Azure AI Evaluation SDK was leveraged to detect the presence of harmful content in the model's responses to Speech prompts. Second, [Microsoft's Speech Fairness evaluation](https://speech.microsoft.com/portal/responsibleai/assess) was run to verify that Speech-To-Text transcription worked well across a variety of demographics. Third, we proposed and evaluated a mitigation approach via a system message to help prevent the model from inferring sensitive attributes (such as gender, sexual orientation, profession, medical condition, etc...) from the voice of a user.

|

| 466 |

+

|

| 467 |

+

|

| 468 |

+

## Software

|

| 469 |

+

* [PyTorch](https://github.com/pytorch/pytorch)

|

| 470 |

+

* [Transformers](https://github.com/huggingface/transformers)

|

| 471 |

+

* [Flash-Attention](https://github.com/HazyResearch/flash-attention)

|

| 472 |

+

* [Accelerate](https://huggingface.co/docs/transformers/main/en/accelerate)

|

| 473 |

+

* [soundfile](https://github.com/bastibe/python-soundfile)

|

| 474 |

+

* [pillow](https://github.com/python-pillow/Pillow)

|

| 475 |

+

|

| 476 |

+

## Hardware

|

| 477 |

+

Note that by default, the Phi-4-multimodal-instruct model uses flash attention, which requires certain types of GPU hardware to run. We have tested on the following GPU types:

|

| 478 |

+

* NVIDIA A100

|

| 479 |

+

* NVIDIA A6000

|

| 480 |

+

* NVIDIA H100

|

| 481 |

+

|

| 482 |

+

If you want to run the model on:

|

| 483 |

+

* NVIDIA V100 or earlier generation GPUs: call AutoModelForCausalLM.from_pretrained() with attn_implementation="eager"

|

| 484 |

+

|

| 485 |

+

## License

|

| 486 |

+

The model is licensed under the [MIT license](./LICENSE).

|

| 487 |

+

|

| 488 |

+

## Trademarks

|

| 489 |

+

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow [Microsoft's Trademark & Brand Guidelines](https://www.microsoft.com/en-us/legal/intellectualproperty/trademarks). Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

|

| 490 |

+

|

| 491 |

+

## Appendix A: Benchmark Methodology

|

| 492 |

+

|

| 493 |

+

We include a brief word on methodology here - and in particular, how we think about optimizing prompts.

|

| 494 |

+

In an ideal world, we would never change any prompts in our benchmarks to ensure it is always an apples-to-apples comparison when comparing different models. Indeed, this is our default approach, and is the case in the vast majority of models we have run to date.

|

| 495 |

+

There are, however, some exceptions to this. In some cases, we see a model that performs worse than expected on a given eval due to a failure to respect the output format. For example:

|

| 496 |

+

|

| 497 |

+

+ A model may refuse to answer questions (for no apparent reason), or in coding tasks models may prefix their response with “Sure, I can help with that. …” which may break the parser. In such cases, we have opted to try different system messages (e.g. “You must always respond to a question” or “Get to the point!”).

|

| 498 |

+

+ Some models, we observed that few shots actually hurt model performance. In this case we did allow running the benchmarks with 0-shots for all cases.

|

| 499 |

+

+ We have tools to convert between chat and completions APIs. When converting a chat prompt to a completion prompt, some models have different keywords e.g. Human vs User. In these cases, we do allow for model-specific mappings for chat to completion prompts.

|

| 500 |

+

|

| 501 |

+

However, we do not:

|

| 502 |

+

|

| 503 |

+

+ Pick different few-shot examples. Few shots will always be the same when comparing different models.

|

| 504 |

+

+ Change prompt format: e.g. if it is an A/B/C/D multiple choice, we do not tweak this to 1/2/3/4 multiple choice.

|

| 505 |

+

|

| 506 |

+

### Vision Benchmark Settings

|

| 507 |

+

|

| 508 |

+

The goal of the benchmark setup is to measure the performance of the LMM when a regular user utilizes these models for a task involving visual input. To this end, we selected 9 popular and publicly available single-frame datasets and 3 multi-frame benchmarks that cover a wide range of challenging topics and tasks (e.g., mathematics, OCR tasks, charts-and-plots understanding, etc.) as well as a set of high-quality models.

|

| 509 |

+

Our benchmarking setup utilizes zero-shot prompts and all the prompt content are the same for every model. We only formatted the prompt content to satisfy the model's prompt API. This ensures that our evaluation is fair across the set of models we tested. Many benchmarks necessitate models to choose their responses from a presented list of options. Therefore, we've included a directive in the prompt's conclusion, guiding all models to pick the option letter that corresponds to the answer they deem correct.

|

| 510 |

+

In terms of the visual input, we use the images from the benchmarks as they come from the original datasets. We converted these images to base-64 using a JPEG encoding for models that require this format (e.g., GPTV, Claude Sonnet 3.5, Gemini 1.5 Pro/Flash). For other models (e.g., Llava Interleave, and InternVL2 4B and 8B), we used their Huggingface interface and passed in PIL images or a JPEG image stored locally. We did not scale or pre-process images in any other way.

|

| 511 |

+

Lastly, we used the same code to extract answers and evaluate them using the same code for every considered model. This ensures that we are fair in assessing the quality of their answers.

|

| 512 |

+

|

| 513 |

+

### Speech Benchmark Settings

|

| 514 |

+

|

| 515 |

+

The objective of this benchmarking setup is to assess the performance of models in speech and audio understanding tasks as utilized by regular users. To accomplish this, we selected several state-of-the-art open-sourced and closed-sourced models and performed evaluations across a variety of public and in-house benchmarks. These benchmarks encompass diverse and challenging topics, including Automatic Speech Recognition (ASR), Automatic Speech Translation (AST), Spoken Query Question Answering (SQQA), Audio Understanding (AU), and Speech Summarization.

|

| 516 |

+

The results are derived from evaluations conducted on identical test data without any further clarifications. All results were obtained without sampling during inference. For an accurate comparison, we employed consistent prompts for models across different tasks, except for certain model APIs (e.g., GPT-4o), which may refuse to respond to specific prompts for some tasks.

|

| 517 |

+

In conclusion, we used uniform code to extract answers and evaluate them for all considered models. This approach ensured fairness by assessing the quality of their responses.

|

| 518 |

+

|

| 519 |

+

### Benchmark datasets

|

| 520 |

+

|

| 521 |

+

The model was evaluated across a breadth of public and internal benchmarks to understand it's capabilities under multiple tasks and conditions. While most evaluations use English, multilingual benchmark was incorporated to cover performance in select languages. More specifically,

|

| 522 |

+

+ Vision:

|

| 523 |

+

+ Popular aggregated benchmark:

|

| 524 |

+

+ MMMU and MMMU-Pro: massive multi-discipline tasks at college-level subject knowledge and deliberate reasoning.

|

| 525 |

+

+ MMBench: large-scale benchmark to evaluate perception and reasoning capabilities.

|

| 526 |

+

+ Visual reasoning:

|

| 527 |

+