Migel Tissera

commited on

Commit

•

65d56b3

1

Parent(s):

11fc037

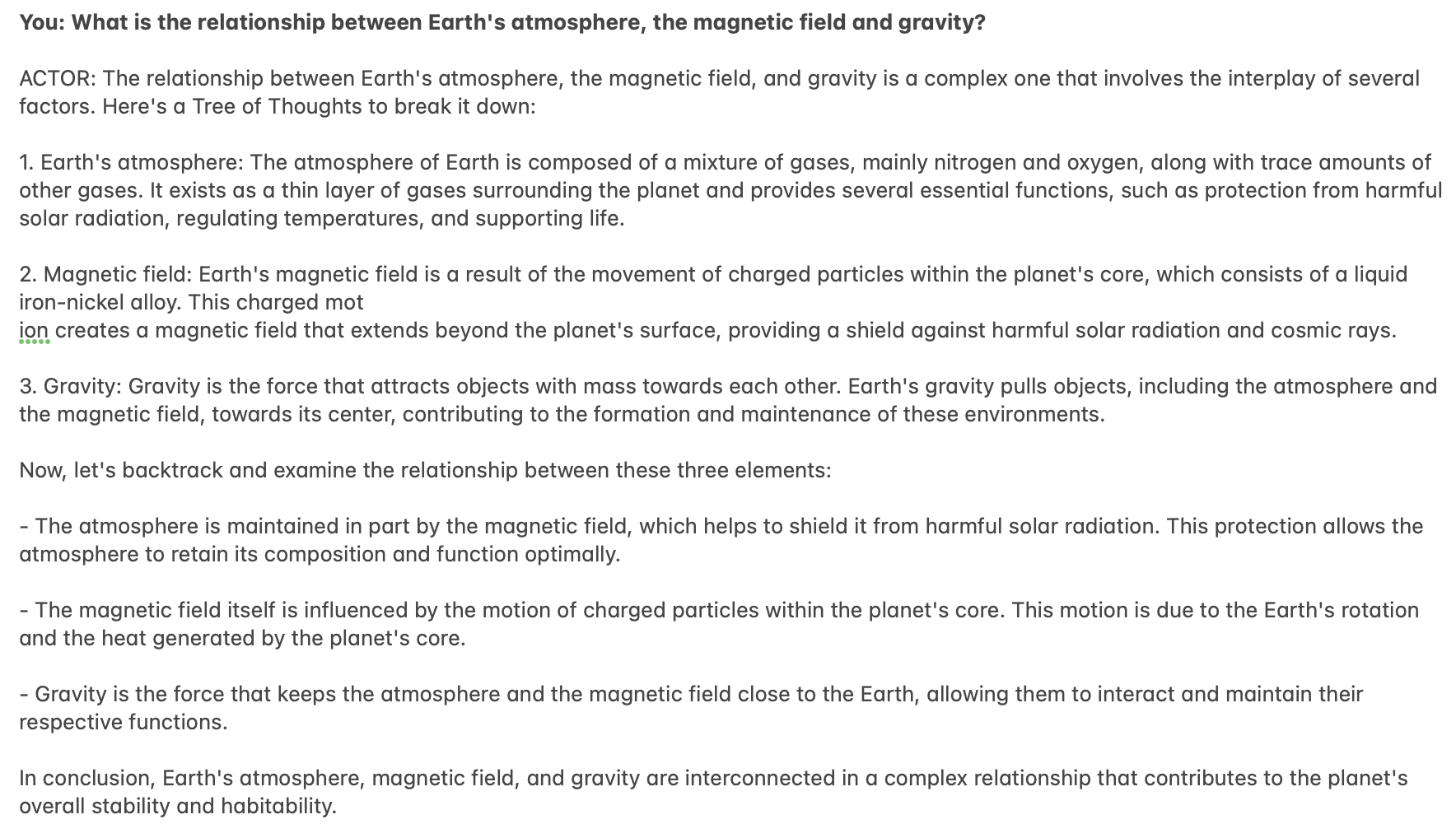

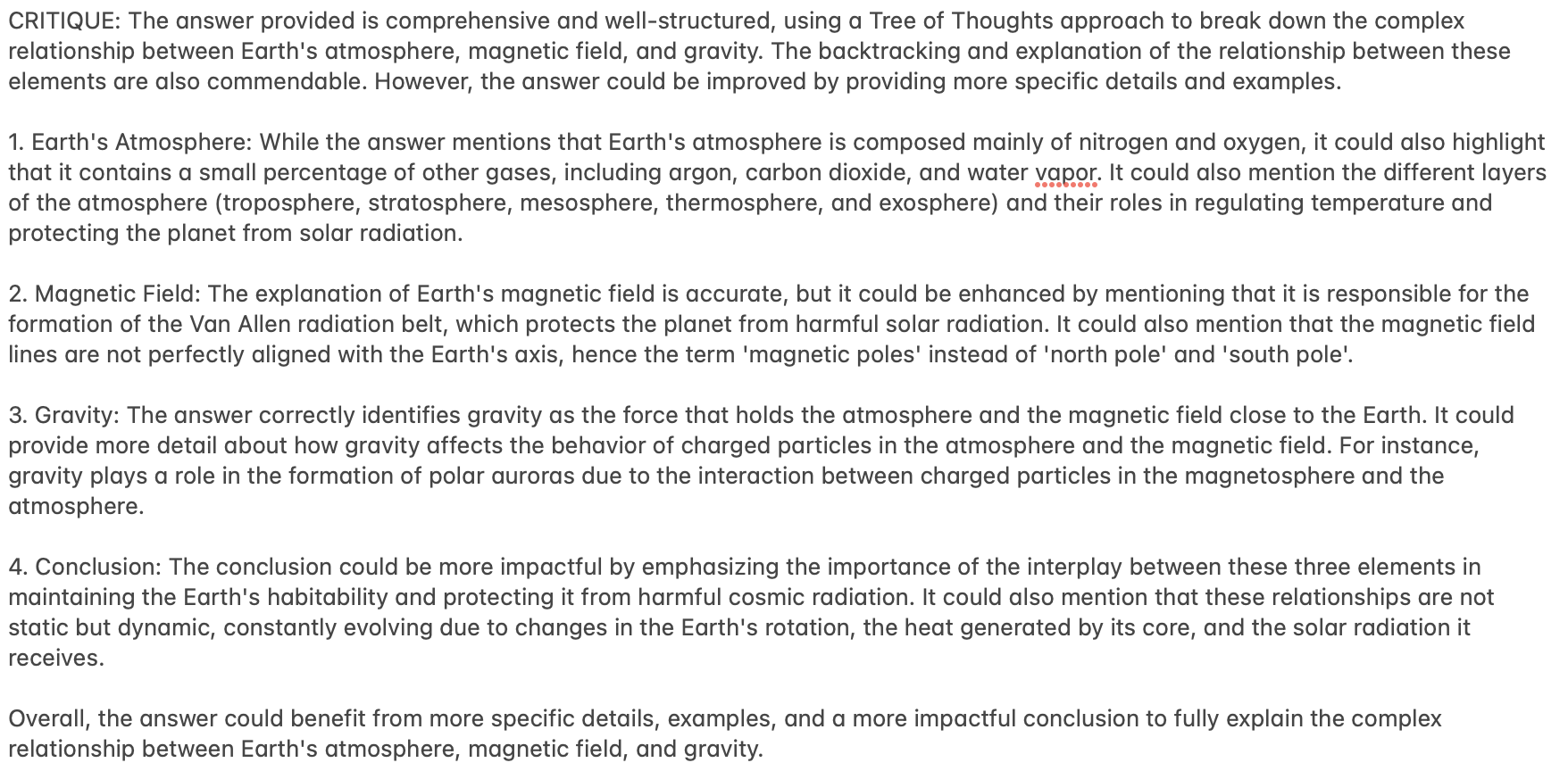

sample code for conversations added

Browse files

README.md

CHANGED

|

@@ -48,6 +48,15 @@ A thrid LLM was fine-tuned using the above data.

|

|

| 48 |

|

| 49 |

The `critic` and the `regenerator` was tested not only on the accopanying actor model, but 13B and 70B SynthIA models as well. They seem to be readily transferrable, as the function that it has learnt is to provide an intelligent critique and then a regeneration of the original response. Please feel free to try out other models as the `actor`. However, the architecture works best with all three as presented here in HelixNet.

|

| 50 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 51 |

# Prompt format:

|

| 52 |

|

| 53 |

```

|

|

@@ -58,7 +67,7 @@ ASSISTANT:

|

|

| 58 |

|

| 59 |

# Example Usage

|

| 60 |

|

| 61 |

-

## Code example:

|

| 62 |

|

| 63 |

The following is a code example on how to use HelixNet. No special system-context messages are needed for the `critic` and the `regenerator`.

|

| 64 |

|

|

@@ -141,8 +150,90 @@ while True:

|

|

| 141 |

|

| 142 |

```

|

| 143 |

|

| 144 |

-

|

| 145 |

|

| 146 |

-

|

| 147 |

|

| 148 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 48 |

|

| 49 |

The `critic` and the `regenerator` was tested not only on the accopanying actor model, but 13B and 70B SynthIA models as well. They seem to be readily transferrable, as the function that it has learnt is to provide an intelligent critique and then a regeneration of the original response. Please feel free to try out other models as the `actor`. However, the architecture works best with all three as presented here in HelixNet.

|

| 50 |

|

| 51 |

+

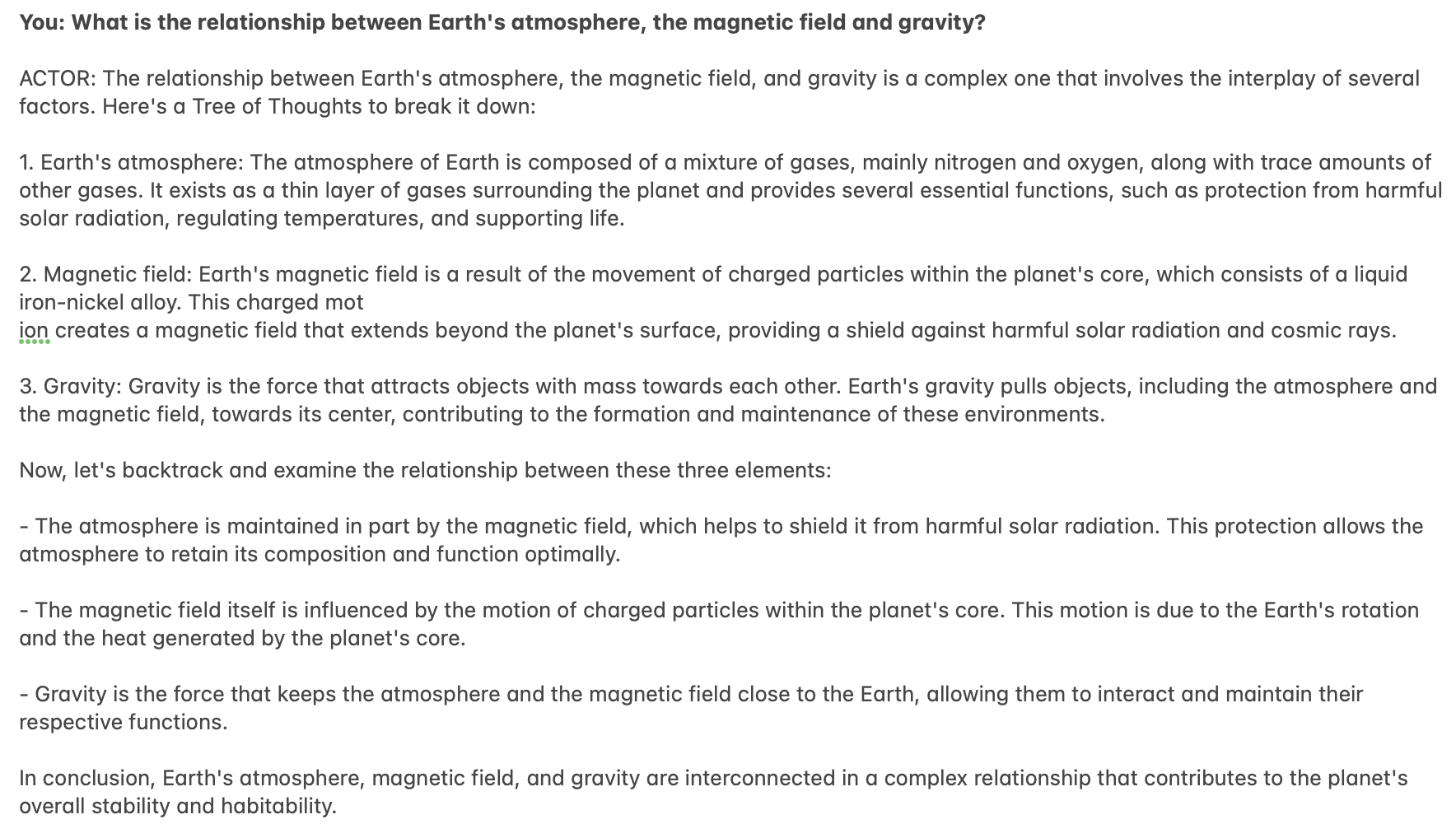

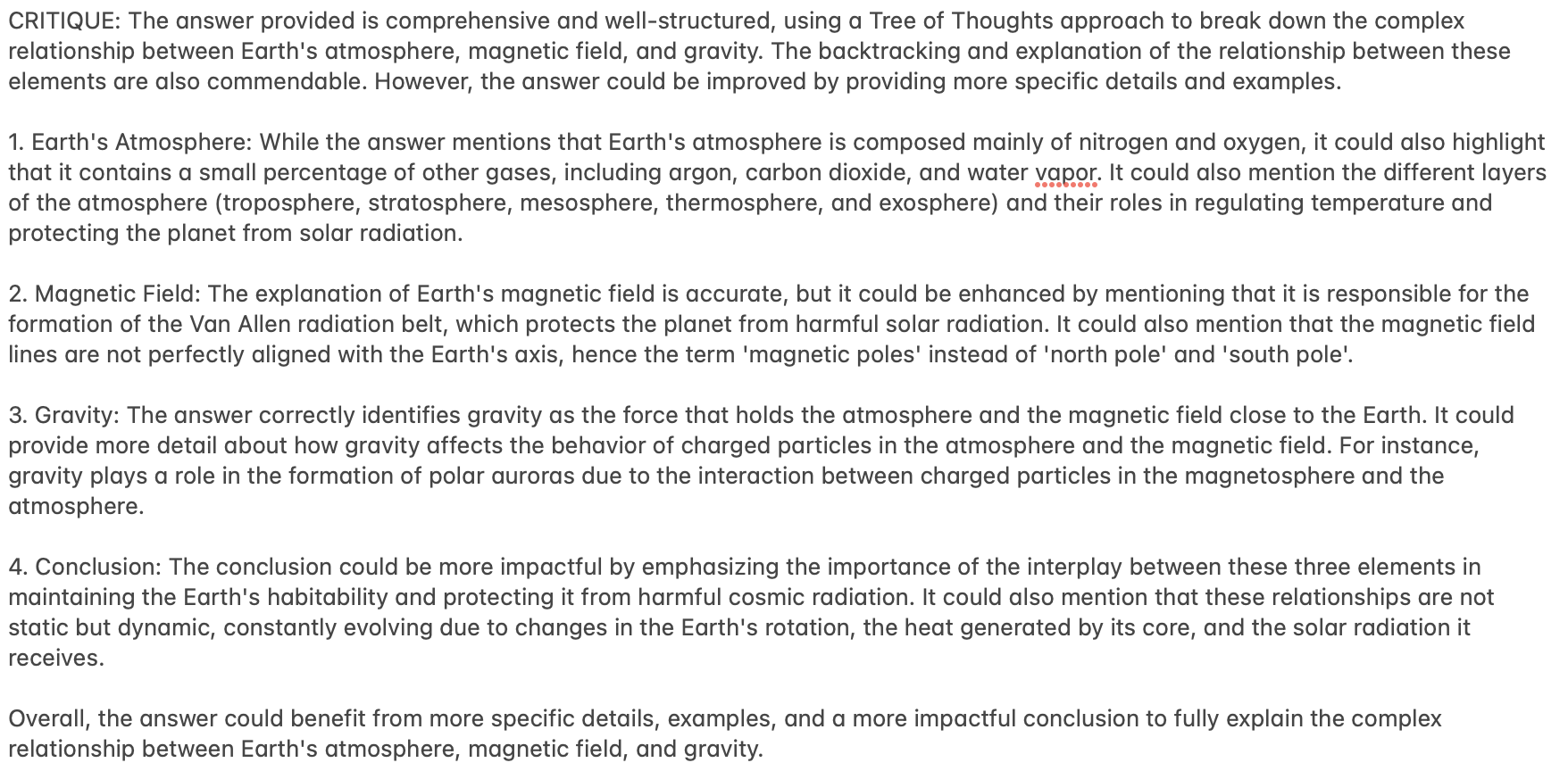

# Sample Generations

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|

| 60 |

# Prompt format:

|

| 61 |

|

| 62 |

```

|

|

|

|

| 67 |

|

| 68 |

# Example Usage

|

| 69 |

|

| 70 |

+

## Code example (Verbose):

|

| 71 |

|

| 72 |

The following is a code example on how to use HelixNet. No special system-context messages are needed for the `critic` and the `regenerator`.

|

| 73 |

|

|

|

|

| 150 |

|

| 151 |

```

|

| 152 |

|

|

|

|

| 153 |

|

|

|

|

| 154 |

|

| 155 |

+

## Code Example (Continuing a conversation)

|

| 156 |

+

|

| 157 |

+

To have a back-and-forth conversation, only carry forward the system-context, questions and regenerations as shown below.

|

| 158 |

+

|

| 159 |

+

```python

|

| 160 |

+

import torch, json

|

| 161 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 162 |

+

|

| 163 |

+

model_path_actor = "/home/ubuntu/llm/HelixNet/actor"

|

| 164 |

+

model_path_critic = "/home/ubuntu/llm/HelixNet/critic"

|

| 165 |

+

model_path_regenerator = "/home/ubuntu/llm/HelixNet/regenerator"

|

| 166 |

+

|

| 167 |

+

def load_model(model_path):

|

| 168 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 169 |

+

model_path,

|

| 170 |

+

torch_dtype=torch.float16,

|

| 171 |

+

device_map="cuda",

|

| 172 |

+

load_in_4bit=False,

|

| 173 |

+

trust_remote_code=True,

|

| 174 |

+

)

|

| 175 |

+

return model

|

| 176 |

+

|

| 177 |

+

def load_tokenizer(model_path):

|

| 178 |

+

tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

|

| 179 |

+

return tokenizer

|

| 180 |

+

|

| 181 |

+

model_actor = load_model(model_path_actor)

|

| 182 |

+

model_critic = load_model(model_path_critic)

|

| 183 |

+

model_regenerator = load_model(model_path_regenerator)

|

| 184 |

+

|

| 185 |

+

tokenizer_actor = load_tokenizer(model_path_actor)

|

| 186 |

+

tokenizer_critic = load_tokenizer(model_path_critic)

|

| 187 |

+

tokenizer_regenerator = load_tokenizer(model_path_regenerator)

|

| 188 |

+

|

| 189 |

+

def generate_text(instruction, model, tokenizer):

|

| 190 |

+

tokens = tokenizer.encode(instruction)

|

| 191 |

+

tokens = torch.LongTensor(tokens).unsqueeze(0)

|

| 192 |

+

tokens = tokens.to("cuda")

|

| 193 |

+

|

| 194 |

+

instance = {

|

| 195 |

+

"input_ids": tokens,

|

| 196 |

+

"top_p": 1.0,

|

| 197 |

+

"temperature": 0.75,

|

| 198 |

+

"generate_len": 1024,

|

| 199 |

+

"top_k": 50,

|

| 200 |

+

}

|

| 201 |

+

|

| 202 |

+

length = len(tokens[0])

|

| 203 |

+

with torch.no_grad():

|

| 204 |

+

rest = model.generate(

|

| 205 |

+

input_ids=tokens,

|

| 206 |

+

max_length=length + instance["generate_len"],

|

| 207 |

+

use_cache=True,

|

| 208 |

+

do_sample=True,

|

| 209 |

+

top_p=instance["top_p"],

|

| 210 |

+

temperature=instance["temperature"],

|

| 211 |

+

top_k=instance["top_k"],

|

| 212 |

+

num_return_sequences=1,

|

| 213 |

+

)

|

| 214 |

+

output = rest[0][length:]

|

| 215 |

+

string = tokenizer.decode(output, skip_special_tokens=True)

|

| 216 |

+

return f"{string}"

|

| 217 |

+

|

| 218 |

+

system_prompt = "You are HelixNet. Elaborate on the topic using a Tree of Thoughts and backtrack when necessary to construct a clear, cohesive Chain of Thought reasoning. Always answer without hesitation."

|

| 219 |

+

|

| 220 |

+

conversation = f"SYSTEM:{system_prompt}"

|

| 221 |

+

|

| 222 |

+

while True:

|

| 223 |

+

user_input = input("You: ")

|

| 224 |

+

|

| 225 |

+

prompt_actor = f"{conversation} \nUSER: {user_input} \nASSISTANT: "

|

| 226 |

+

actor_response = generate_text(prompt_actor, model_actor, tokenizer_actor)

|

| 227 |

+

print("Generated ACTOR RESPONSE")

|

| 228 |

+

|

| 229 |

+

prompt_critic = f"SYSTEM: {system_prompt} \nUSER: {user_input} \nRESPONSE: {actor_response} \nCRITIQUE:"

|

| 230 |

+

critic_response = generate_text(prompt_critic, model_critic, tokenizer_critic)

|

| 231 |

+

print("Generated CRITIQUE")

|

| 232 |

+

|

| 233 |

+

prompt_regenerator = f"SYSTEM: {system_prompt} \nUSER: {user_input} \nRESPONSE: {actor_response} \nCRITIQUE: {critic_response} \nREGENERATOR:"

|

| 234 |

+

regenerator_response = generate_text(prompt_regenerator, model_regenerator, tokenizer_regenerator)

|

| 235 |

+

print("Generated REGENERATION")

|

| 236 |

+

|

| 237 |

+

conversation = f"{conversation} \nUSER: {user_input} \nASSISTANT: {regenerator_response}"

|

| 238 |

+

print(conversation)

|

| 239 |

+

```

|