add the ONNX-TensorRT way of model conversion

Browse files- README.md +42 -0

- configs/inference_trt.json +10 -0

- configs/metadata.json +2 -1

- docs/README.md +42 -0

README.md

CHANGED

|

@@ -74,6 +74,33 @@ Accuracy was used for evaluating the performance of the model. This model achiev

|

|

| 74 |

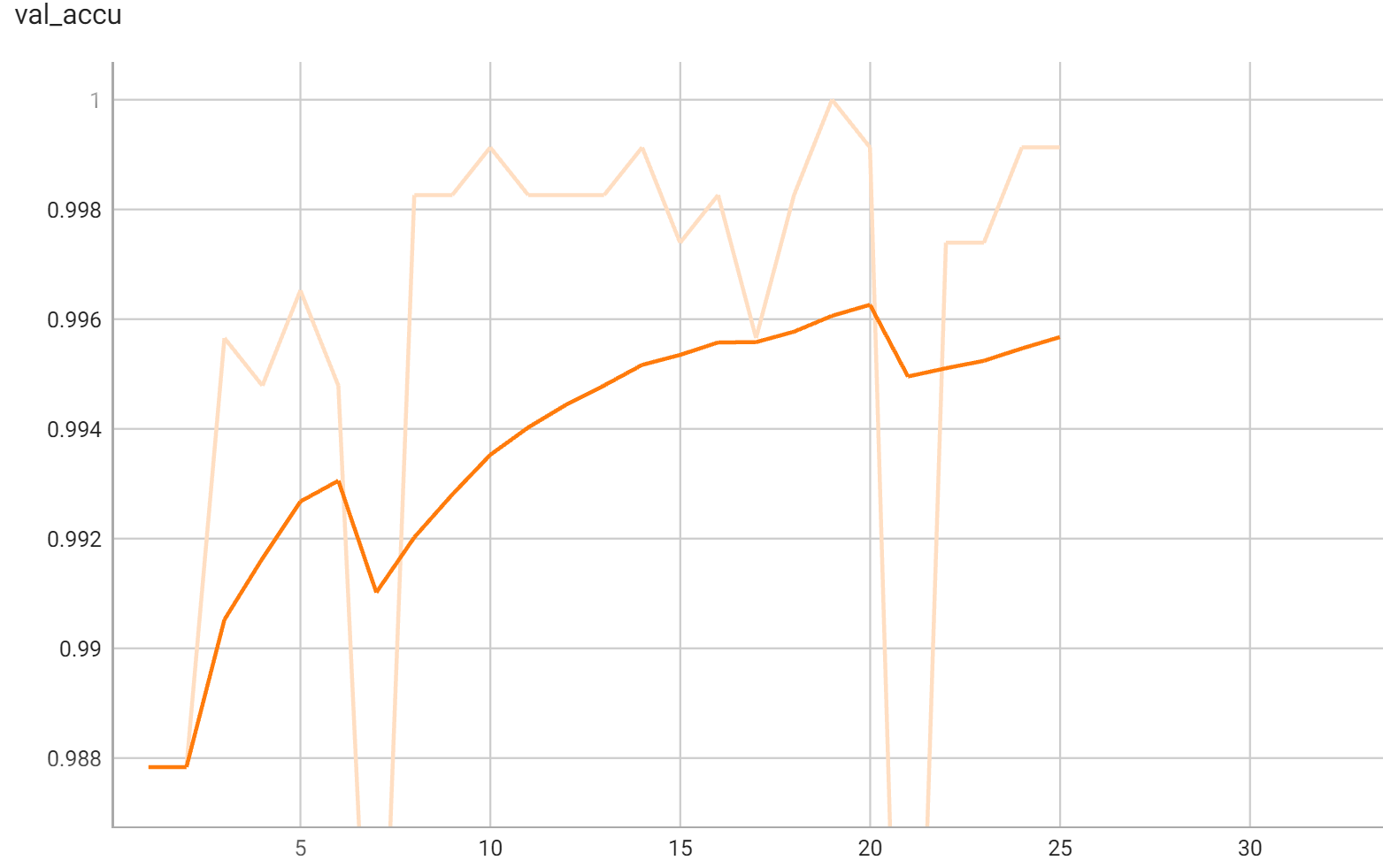

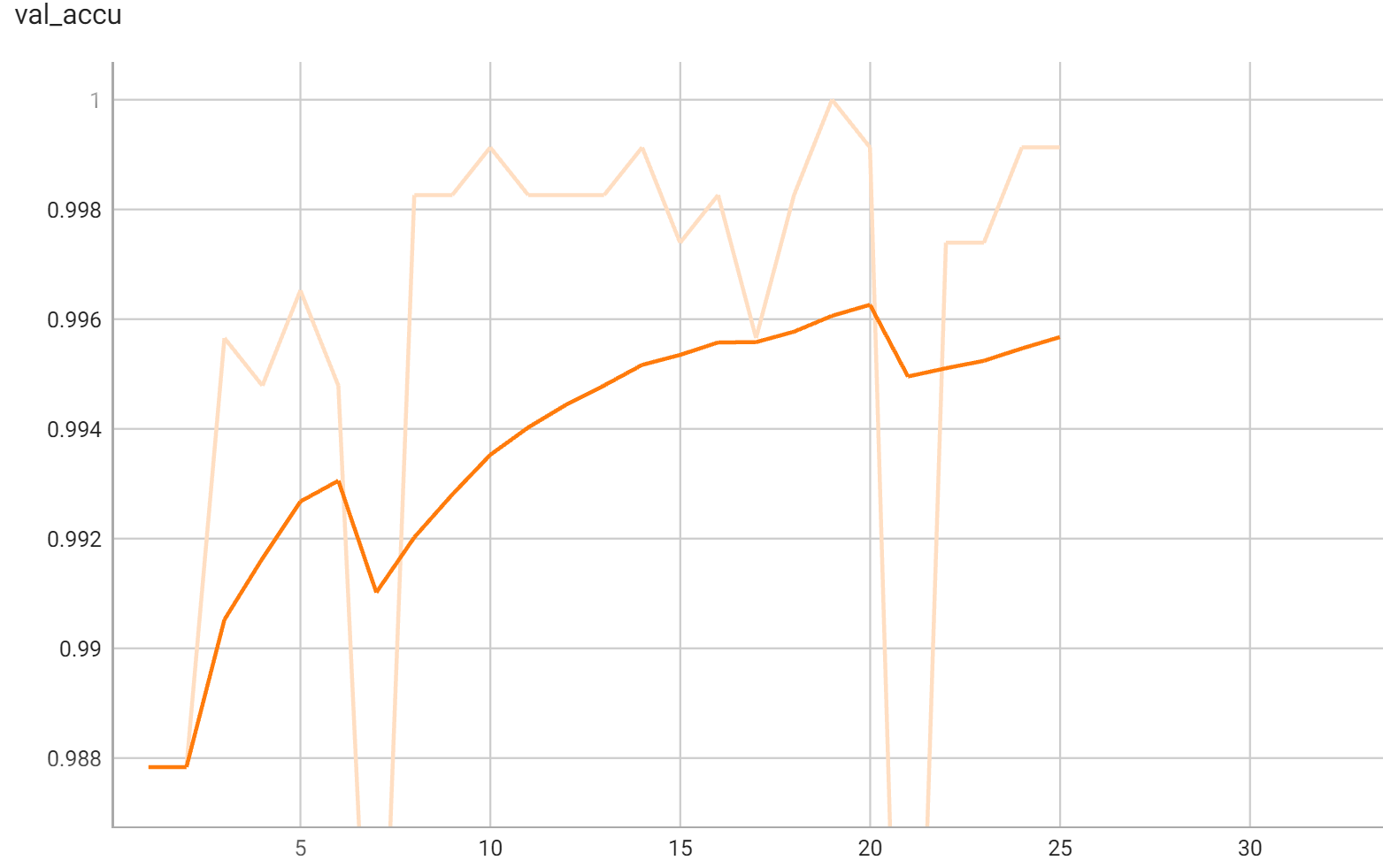

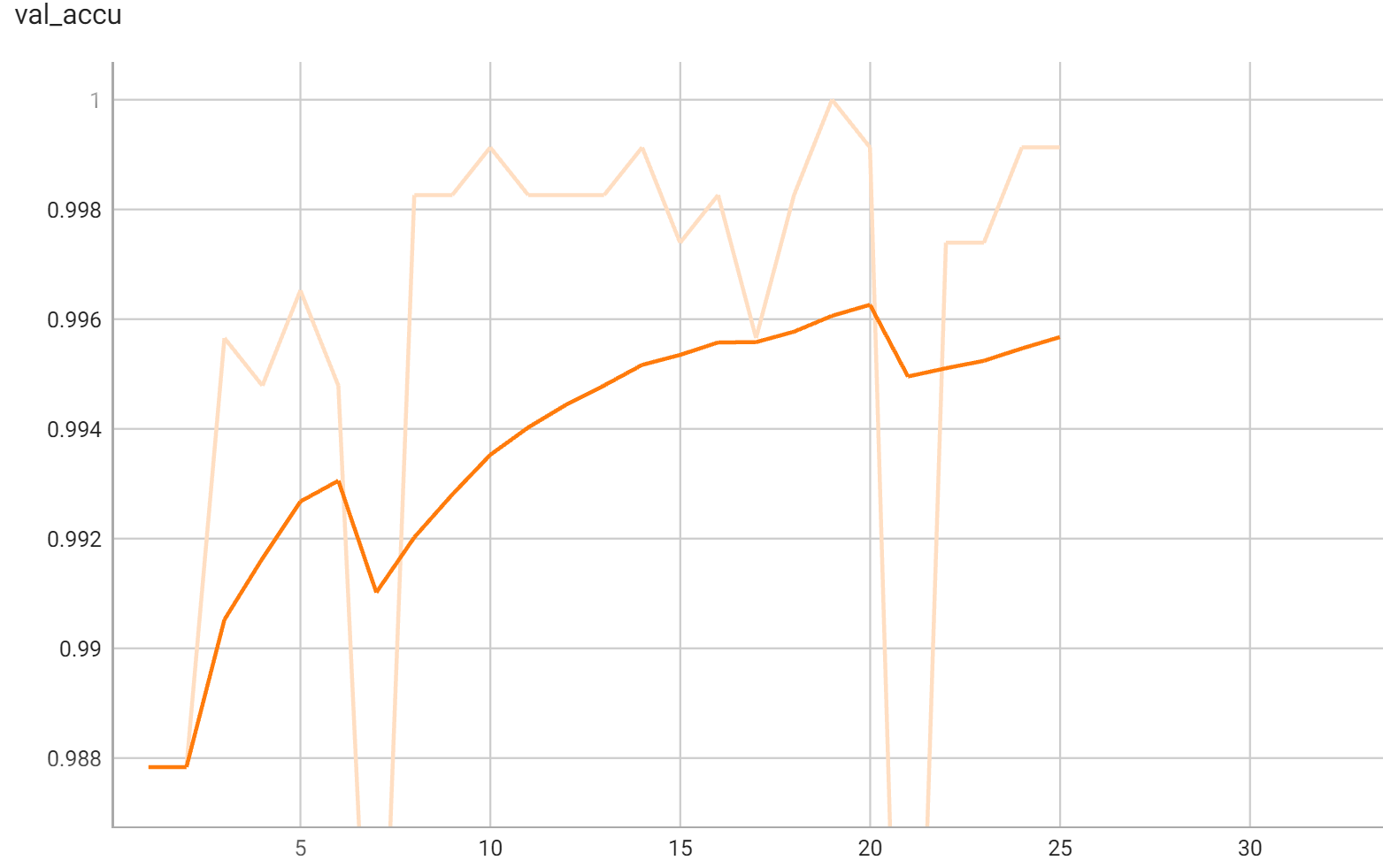

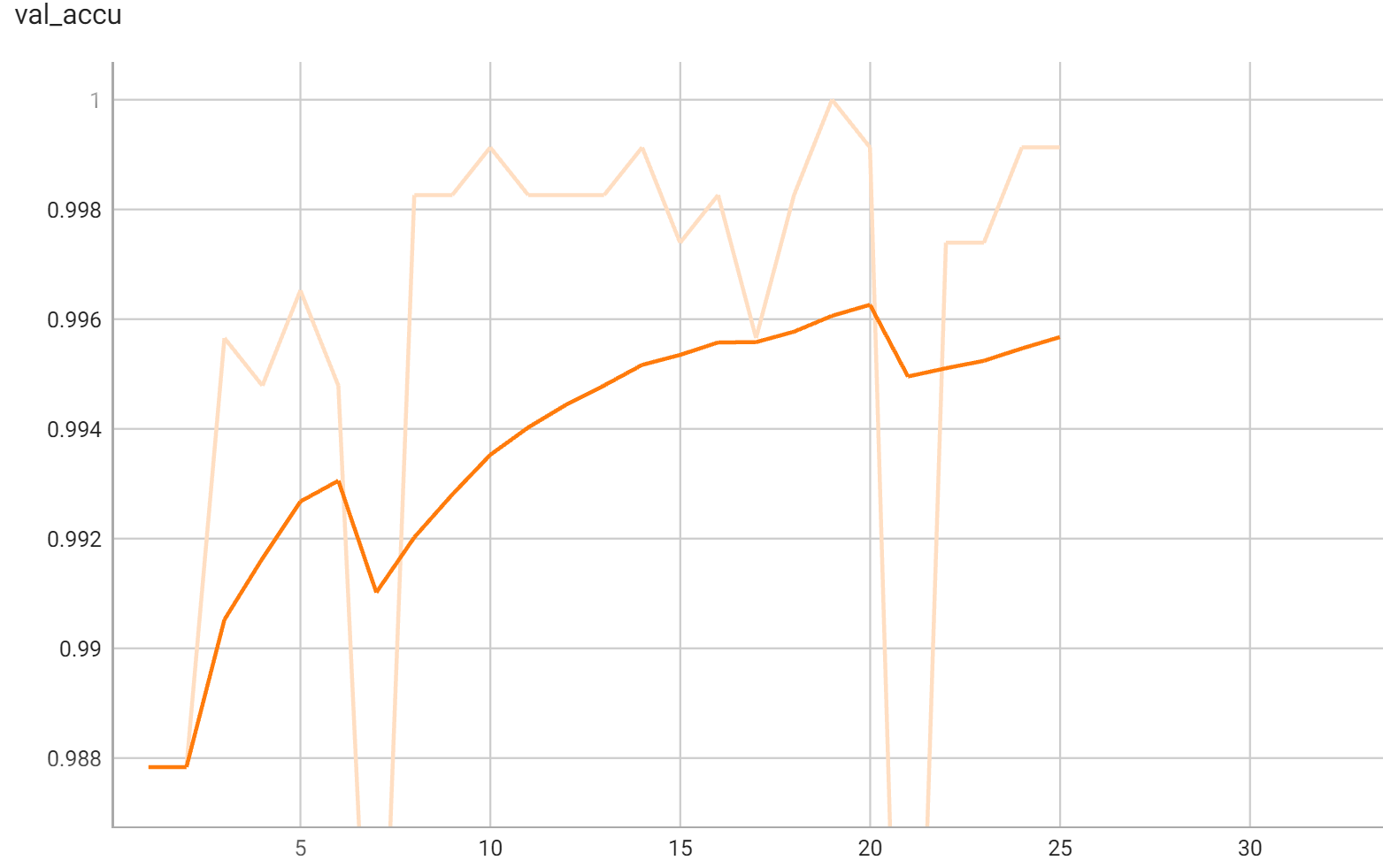

#### Validation Accuracy

|

| 75 |

|

| 76 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 77 |

## MONAI Bundle Commands

|

| 78 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 79 |

|

|

@@ -115,6 +142,21 @@ The classification result of every images in `test.json` will be printed to the

|

|

| 115 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 116 |

```

|

| 117 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 118 |

# References

|

| 119 |

[1] J. Hu, L. Shen and G. Sun, Squeeze-and-Excitation Networks, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132-7141. https://arxiv.org/pdf/1709.01507.pdf

|

| 120 |

|

|

|

|

| 74 |

#### Validation Accuracy

|

| 75 |

|

| 76 |

|

| 77 |

+

#### TensorRT speedup

|

| 78 |

+

The `endoscopic_inbody_classification` bundle supports the TensorRT acceleration through the ONNX-TensorRT way. The table below shows the speedup ratios benchmarked on an A100 80G GPU.

|

| 79 |

+

|

| 80 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16|

|

| 81 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 82 |

+

| model computation | 6.50 | 9.23 | 2.78 | 2.31 | 0.70 | 2.34 | 2.81 | 4.00 |

|

| 83 |

+

| end2end | 23.54 | 23.78 | 7.37 | 7.14 | 0.99 | 3.19 | 3.30 | 3.33 |

|

| 84 |

+

|

| 85 |

+

Where:

|

| 86 |

+

- `model computation` means the speedup ratio of model's inference with a random input without preprocessing and postprocessing

|

| 87 |

+

- `end2end` means run the bundle end-to-end with the TensorRT based model.

|

| 88 |

+

- `torch_fp32` and `torch_amp` are for the PyTorch models with or without `amp` mode.

|

| 89 |

+

- `trt_fp32` and `trt_fp16` are for the TensorRT based models converted in corresponding precision.

|

| 90 |

+

- `speedup amp`, `speedup fp32` and `speedup fp16` are the speedup ratios of corresponding models versus the PyTorch float32 model

|

| 91 |

+

- `amp vs fp16` is the speedup ratio between the PyTorch amp model and the TensorRT float16 based model.

|

| 92 |

+

|

| 93 |

+

Currently, this model can only be accelerated through the ONNX-TensorRT way and the Torch-TensorRT way will come soon.

|

| 94 |

+

|

| 95 |

+

This result is benchmarked under:

|

| 96 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 97 |

+

- Torch-TensorRT Version: 1.4.0

|

| 98 |

+

- CPU Architecture: x86-64

|

| 99 |

+

- OS: ubuntu 20.04

|

| 100 |

+

- Python version:3.8.10

|

| 101 |

+

- CUDA version: 12.0

|

| 102 |

+

- GPU models and configuration: A100 80G

|

| 103 |

+

|

| 104 |

## MONAI Bundle Commands

|

| 105 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 106 |

|

|

|

|

| 142 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 143 |

```

|

| 144 |

|

| 145 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision:

|

| 146 |

+

|

| 147 |

+

```bash

|

| 148 |

+

python -m monai.bundle trt_export --net_id network_def \

|

| 149 |

+

--filepath models/model_trt.ts --ckpt_file models/model.pt \

|

| 150 |

+

--meta_file configs/metadata.json --config_file configs/inference.json \

|

| 151 |

+

--precision <fp32/fp16> --use_onnx "True" --use_trace "True"

|

| 152 |

+

```

|

| 153 |

+

|

| 154 |

+

#### Execute inference with the TensorRT model:

|

| 155 |

+

|

| 156 |

+

```

|

| 157 |

+

python -m monai.bundle run --config_file "['configs/inference.json', 'configs/inference_trt.json']"

|

| 158 |

+

```

|

| 159 |

+

|

| 160 |

# References

|

| 161 |

[1] J. Hu, L. Shen and G. Sun, Squeeze-and-Excitation Networks, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132-7141. https://arxiv.org/pdf/1709.01507.pdf

|

| 162 |

|

configs/inference_trt.json

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"imports": [

|

| 3 |

+

"$import os",

|

| 4 |

+

"$import json",

|

| 5 |

+

"$import torch_tensorrt"

|

| 6 |

+

],

|

| 7 |

+

"handlers#0#_disabled_": true,

|

| 8 |

+

"network_def": "$torch.jit.load(@bundle_root + '/models/model_trt.ts')",

|

| 9 |

+

"evaluator#amp": false

|

| 10 |

+

}

|

configs/metadata.json

CHANGED

|

@@ -1,7 +1,8 @@

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

-

"version": "0.

|

| 4 |

"changelog": {

|

|

|

|

| 5 |

"0.3.9": "fix mgpu finalize issue",

|

| 6 |

"0.3.8": "enable deterministic training",

|

| 7 |

"0.3.7": "adapt to BundleWorkflow interface",

|

|

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.4.0",

|

| 4 |

"changelog": {

|

| 5 |

+

"0.4.0": "add the ONNX-TensorRT way of model conversion",

|

| 6 |

"0.3.9": "fix mgpu finalize issue",

|

| 7 |

"0.3.8": "enable deterministic training",

|

| 8 |

"0.3.7": "adapt to BundleWorkflow interface",

|

docs/README.md

CHANGED

|

@@ -67,6 +67,33 @@ Accuracy was used for evaluating the performance of the model. This model achiev

|

|

| 67 |

#### Validation Accuracy

|

| 68 |

|

| 69 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 70 |

## MONAI Bundle Commands

|

| 71 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 72 |

|

|

@@ -108,6 +135,21 @@ The classification result of every images in `test.json` will be printed to the

|

|

| 108 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 109 |

```

|

| 110 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 111 |

# References

|

| 112 |

[1] J. Hu, L. Shen and G. Sun, Squeeze-and-Excitation Networks, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132-7141. https://arxiv.org/pdf/1709.01507.pdf

|

| 113 |

|

|

|

|

| 67 |

#### Validation Accuracy

|

| 68 |

|

| 69 |

|

| 70 |

+

#### TensorRT speedup

|

| 71 |

+

The `endoscopic_inbody_classification` bundle supports the TensorRT acceleration through the ONNX-TensorRT way. The table below shows the speedup ratios benchmarked on an A100 80G GPU.

|

| 72 |

+

|

| 73 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16|

|

| 74 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 75 |

+

| model computation | 6.50 | 9.23 | 2.78 | 2.31 | 0.70 | 2.34 | 2.81 | 4.00 |

|

| 76 |

+

| end2end | 23.54 | 23.78 | 7.37 | 7.14 | 0.99 | 3.19 | 3.30 | 3.33 |

|

| 77 |

+

|

| 78 |

+

Where:

|

| 79 |

+

- `model computation` means the speedup ratio of model's inference with a random input without preprocessing and postprocessing

|

| 80 |

+

- `end2end` means run the bundle end-to-end with the TensorRT based model.

|

| 81 |

+

- `torch_fp32` and `torch_amp` are for the PyTorch models with or without `amp` mode.

|

| 82 |

+

- `trt_fp32` and `trt_fp16` are for the TensorRT based models converted in corresponding precision.

|

| 83 |

+

- `speedup amp`, `speedup fp32` and `speedup fp16` are the speedup ratios of corresponding models versus the PyTorch float32 model

|

| 84 |

+

- `amp vs fp16` is the speedup ratio between the PyTorch amp model and the TensorRT float16 based model.

|

| 85 |

+

|

| 86 |

+

Currently, this model can only be accelerated through the ONNX-TensorRT way and the Torch-TensorRT way will come soon.

|

| 87 |

+

|

| 88 |

+

This result is benchmarked under:

|

| 89 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 90 |

+

- Torch-TensorRT Version: 1.4.0

|

| 91 |

+

- CPU Architecture: x86-64

|

| 92 |

+

- OS: ubuntu 20.04

|

| 93 |

+

- Python version:3.8.10

|

| 94 |

+

- CUDA version: 12.0

|

| 95 |

+

- GPU models and configuration: A100 80G

|

| 96 |

+

|

| 97 |

## MONAI Bundle Commands

|

| 98 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 99 |

|

|

|

|

| 135 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 136 |

```

|

| 137 |

|

| 138 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision:

|

| 139 |

+

|

| 140 |

+

```bash

|

| 141 |

+

python -m monai.bundle trt_export --net_id network_def \

|

| 142 |

+

--filepath models/model_trt.ts --ckpt_file models/model.pt \

|

| 143 |

+

--meta_file configs/metadata.json --config_file configs/inference.json \

|

| 144 |

+

--precision <fp32/fp16> --use_onnx "True" --use_trace "True"

|

| 145 |

+

```

|

| 146 |

+

|

| 147 |

+

#### Execute inference with the TensorRT model:

|

| 148 |

+

|

| 149 |

+

```

|

| 150 |

+

python -m monai.bundle run --config_file "['configs/inference.json', 'configs/inference_trt.json']"

|

| 151 |

+

```

|

| 152 |

+

|

| 153 |

# References

|

| 154 |

[1] J. Hu, L. Shen and G. Sun, Squeeze-and-Excitation Networks, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132-7141. https://arxiv.org/pdf/1709.01507.pdf

|

| 155 |

|