Commit 48e115e · Parent(s): 76badcb

Update README.md

README.md CHANGED

@@ -10,24 +10,52 @@ model-index:

|

|

| 10 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 11 |

should probably proofread and complete it, then remove this comment. -->

|

| 12 |

|

| 13 |

-

# ArtPrompter

|

| 14 |

|

| 15 |

-

|

| 16 |

|

| 17 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 18 |

|

| 19 |

-

More information needed

|

| 20 |

|

| 21 |

## Intended uses & limitations

|

| 22 |

|

| 23 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 24 |

|

| 25 |

-

##

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

|

| 27 |

-

More information needed

|

| 28 |

|

| 29 |

## Training procedure

|

| 30 |

|

|

|

|

|

|

|

| 31 |

### Training hyperparameters

|

| 32 |

|

| 33 |

The following hyperparameters were used during training:

|

|

@@ -39,12 +67,8 @@ The following hyperparameters were used during training:

|

|

| 39 |

- lr_scheduler_type: linear

|

| 40 |

- num_epochs: 50

|

| 41 |

|

| 42 |

-

### Training results

|

| 43 |

-

|

| 44 |

-

|

| 45 |

-

|

| 46 |

### Framework versions

|

| 47 |

|

| 48 |

- Transformers 4.25.1

|

| 49 |

- Pytorch 1.13.1

|

| 50 |

-

- Tokenizers 0.13.2

|

|

|

|

| 10 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 11 |

should probably proofread and complete it, then remove this comment. -->

|

| 12 |

|

| 13 |

+

# [ArtPrompter](https://pearsonkyle.github.io/Art-Prompter/)

|

| 14 |

|

| 15 |

+

A [gpt2](https://huggingface.co/gpt2) powered predictive keyboard for making descriptive text prompts for A.I. image generators (e.g. MidJourney, Stable Diffusion, ArtBot, etc). The model was trained on a custom dataset containing 521K MidJourney images corresponding to 212K unique prompts.

|

| 16 |

|

| 17 |

+

```python

|

| 18 |

+

from transformers import pipeline

|

| 19 |

+

|

| 20 |

+

ai = pipeline('text-generation',model='pearsonkyle/ArtPrompter', tokenizer='gpt2')

|

| 21 |

+

|

| 22 |

+

texts = ai('The', max_length=30, num_return_sequences=5)

|

| 23 |

+

|

| 24 |

+

for i in range(5):

|

| 25 |

+

print(texts[i]['generated_text']+'\n')

|

| 26 |

+

```

|

| 27 |

+

|

| 28 |

+

[](https://colab.research.google.com/drive/1HQOtD2LENTeXEaxHUfIhDKUaPIGd6oTR?usp=sharing)

|

| 29 |

|

|

|

|

| 30 |

|

| 31 |

## Intended uses & limitations

|

| 32 |

|

| 33 |

+

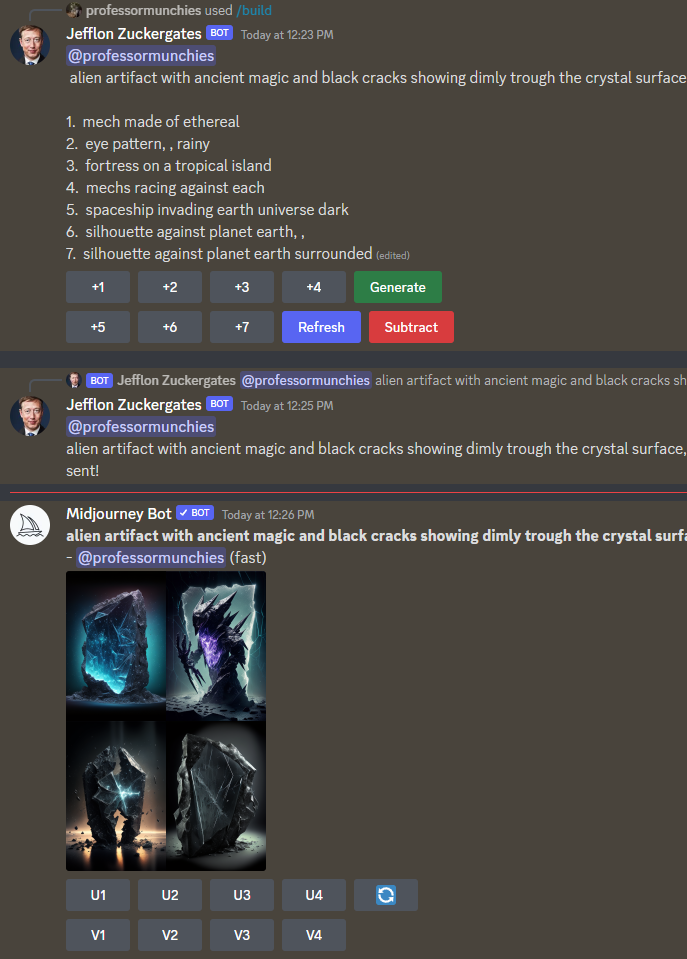

Build prompts and generate images on Discord!

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

[](https://discord.gg/3S8Taqa2Xy)

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

[](https://discord.gg/3S8Taqa2Xy)

|

| 40 |

+

|

| 41 |

|

| 42 |

+

## Examples

|

| 43 |

+

|

| 44 |

+

All text prompts below are generated with our language model

|

| 45 |

+

|

| 46 |

+

- *The entire universe is a simulation,a confessional with a smiling guy fawkes mask, symmetrical, inviting,hyper realistic*

|

| 47 |

+

|

| 48 |

+

- *a pug disguised as a teacher. Setting is a class room*

|

| 49 |

+

|

| 50 |

+

- *I wish I had an angel For one moment of love I wish I had your angel Your Virgin Mary undone Im in love with my desire Burning angelwings to dust*

|

| 51 |

+

|

| 52 |

+

- *The heart of a galaxy, surrounded by stars, magnetic fields, big bang, cinestill 800T,black background, hyper detail, 8k, black*

|

| 53 |

|

|

|

|

| 54 |

|

| 55 |

## Training procedure

|

| 56 |

|

| 57 |

+

~30 hour of finetune on RTX2080 with 212K unique prompts

|

| 58 |

+

|

| 59 |

### Training hyperparameters

|

| 60 |

|

| 61 |

The following hyperparameters were used during training:

|

|

|

|

| 67 |

- lr_scheduler_type: linear

|

| 68 |

- num_epochs: 50

|

| 69 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 70 |

### Framework versions

|

| 71 |

|

| 72 |

- Transformers 4.25.1

|

| 73 |

- Pytorch 1.13.1

|

| 74 |

+

- Tokenizers 0.13.2
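The usage snippet added to the README prints the raw pipeline output. Below is a minimal sketch of tidying that output before passing it to an image generator. It assumes the list-of-dicts shape that the `text-generation` pipeline returns for a single input string (`[{'generated_text': ...}, ...]`). The helper name `clean_prompts` is hypothetical and is not part of the model card or of `transformers`, and the sample strings are made up for illustration.

```python
from typing import Dict, List


def clean_prompts(outputs: List[Dict[str, str]]) -> List[str]:
    """Collapse whitespace and drop empty or duplicate prompts, keeping order."""
    seen = set()
    prompts = []
    for item in outputs:
        # Generated text may contain newlines or runs of spaces; normalize them.
        text = " ".join(item["generated_text"].split())
        if text and text not in seen:
            seen.add(text)
            prompts.append(text)
    return prompts


# Stand-in for `ai('The', max_length=30, num_return_sequences=5)`.
# These strings are invented for the example, not real model output.
sample = [
    {"generated_text": "The heart of a galaxy,\nsurrounded by stars"},
    {"generated_text": "The  heart of a galaxy, surrounded by stars"},
    {"generated_text": "   "},
]
print(clean_prompts(sample))  # ['The heart of a galaxy, surrounded by stars']
```

With the real pipeline, the call would be `clean_prompts(ai('The', max_length=30, num_return_sequences=5))`; sampled generations can repeat, so the de-duplication step may return fewer than five prompts.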

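The hyperparameters visible in this diff include `lr_scheduler_type: linear` and `num_epochs: 50`. As a rough illustration of the shape of a linear schedule, the sketch below computes the learning rate at a given step: an optional linear warmup followed by linear decay to zero. The function name, the base learning rate, and the step counts are assumptions chosen for the example; the diff does not show the values used in training, and this is not presented as the Trainer's own implementation.

```python
def linear_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate at `step` under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp from 0 up to base_lr over the warmup period.
        return base_lr * step / max(1, warmup_steps)
    # Decay from base_lr at the end of warmup down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical values: 5e-5 and 1000 steps are assumed, not taken from the diff.
base_lr = 5e-5
total_steps = 1000
for step in (0, 250, 500, 1000):
    print(step, linear_lr(step, total_steps, base_lr))
```

With no warmup, the rate starts at the base value, reaches half of it at the midpoint, and hits zero at the final step.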