quarterturn committed on

Commit ee26be4 · Parent(s): 837bad6

first commit

Files changed:
- .gitattributes +1 -0
- README.md +5 -0
- example.png +0 -0

.gitattributes CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*.png filter=lfs diff=lfs merge=lfs -text
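The added `*.png` rule is what sends `example.png` through Git LFS; it has the same form that `git lfs track "*.png"` writes. Git matches a `.gitattributes` pattern that contains no slash against the file name at any directory depth. The sketch below approximates that with Python's `fnmatchcase` for illustration only: it ignores `**`, directory-anchored patterns, and the rule that later matching lines override earlier ones. `LFS_PATTERNS` and `is_lfs_tracked` are hypothetical names, not part of this repository.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Hypothetical subset of the LFS rules from the diff above;
# "*.png" is the line added by this commit.
LFS_PATTERNS = ("*.zip", "*.zst", "*tfevents*", "*.png")


def is_lfs_tracked(path: str, patterns: tuple[str, ...] = LFS_PATTERNS) -> bool:
    """Rough approximation of .gitattributes matching for slash-free patterns.

    A slash-free pattern is compared against the basename only, so it applies
    at any directory depth. Matching is case-sensitive, as in Git by default.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in patterns)


print(is_lfs_tracked("example.png"))                   # matched by the new *.png rule
print(is_lfs_tracked("README.md"))                     # not an LFS pattern
print(is_lfs_tracked("logs/events.out.tfevents.123"))  # matched by *tfevents*
```

For authoritative answers on a real checkout, `git check-attr filter -- example.png` reports the attribute Git itself resolves.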

README.md CHANGED

@@ -24,3 +24,8 @@ Install:
 ``` python3 caption.py ```
 1. make sure your images are in the "images" directory
 2. captions will be placed in the "images" directory
+
+Note:
+- The scripts are configured to load the model at bf16 precision, for max precision and lower memory utilization. This should fit in a single 24GB GPU.
+- You can edit the scripts to use a lower quant of the model, such as fp8, though accuracy may be lower.
+- If torch sees your first GPU supports flash attention and the others do not, it will assume all the cards do and it will throw an exception. A workaround is to use, for example, "CUDA_VISIBLE_DEVICES=0 python3 main.py (or caption.py)", to force torch to ignore the card supporting flash attention, so that it will use your other cards without it. Or, use it to exclude non-flash-attention-supporting GPUs.
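The precision notes come down to bytes per parameter: fp32 stores each weight in 4 bytes, bf16 in 2, and fp8 in 1, so weight memory is roughly parameter count times bytes per parameter. The model's size is not stated on this page, so the 8-billion parameter count below is a hypothetical placeholder. The estimate covers weights only; activations, the KV cache, and framework overhead all add to the real footprint on a 24GB card.

```python
# Bytes used to store one weight at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gib(num_params: int, precision: str) -> float:
    """Approximate memory for the model weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


# Hypothetical 8-billion-parameter model; substitute the real parameter count.
params = 8_000_000_000
for precision in ("fp32", "bf16", "fp8"):
    print(f"{precision}: {weight_memory_gib(params, precision):.1f} GiB")
```

Halving bytes per parameter halves weight memory, which is why dropping from bf16 to fp8 frees room on a single card at some cost in accuracy.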

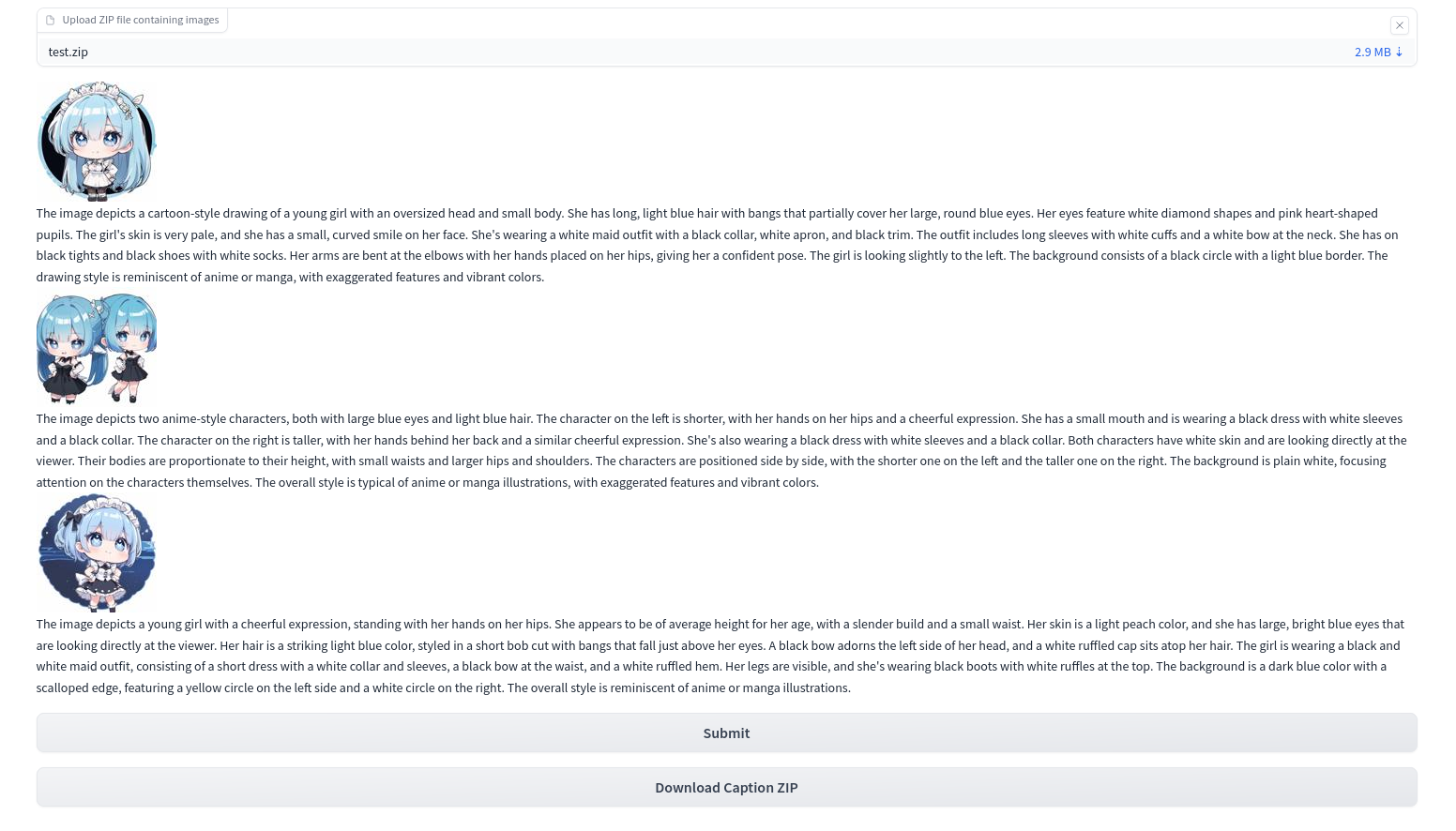
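The GPU workaround in the README note relies on the `CUDA_VISIBLE_DEVICES` environment variable: CUDA enumerates only the listed device indices, renumbers them from 0 inside the process, and reads the variable when CUDA is initialized, so it must be set before torch touches the GPU. Note that `CUDA_VISIBLE_DEVICES=0` exposes only the first card; to hide one card and keep the rest, list every other index instead. By default CUDA orders devices fastest-first, which can differ from `nvidia-smi`; setting `CUDA_DEVICE_ORDER=PCI_BUS_ID` makes the indices match. The sketch below builds that value and can launch `caption.py` with it. The GPU count, the excluded index, and the helpers `visible_devices_excluding` and `run_with_gpus` are all assumptions for illustration.

```python
import os
import subprocess
import sys


def visible_devices_excluding(num_gpus: int, excluded: set[int]) -> str:
    """Return a CUDA_VISIBLE_DEVICES value listing every GPU index except `excluded`."""
    return ",".join(str(i) for i in range(num_gpus) if i not in excluded)


def run_with_gpus(script: str, devices: str) -> int:
    """Run `script` in a child Python process that can see only `devices`."""
    # Copy the current environment and override the visible devices; the child
    # reads this before importing torch and sees its GPUs as 0..n-1.
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=devices, CUDA_DEVICE_ORDER="PCI_BUS_ID")
    return subprocess.run([sys.executable, script], env=env).returncode


# Assumption: 3 GPUs, and GPU 0 is the card whose flash-attention support differs.
devices = visible_devices_excluding(3, {0})
print(f"CUDA_VISIBLE_DEVICES={devices} python3 caption.py")
# To actually launch it: run_with_gpus("caption.py", devices)
```

The shell equivalent is `CUDA_VISIBLE_DEVICES=1,2 python3 caption.py`; running the script in a child process guarantees the variable is set before torch is imported.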

example.png CHANGED

Git LFS Details