Update README.md


README.md

CHANGED

|

@@ -11,9 +11,11 @@ licenses:

|

|

| 11 |

|

| 12 |

## Model overview

|

| 13 |

|

| 14 |

-

Mutual Implication Score

|

| 15 |

based on a RoBERTa model pretrained for natural language inference

|

| 16 |

-

and fine-tuned

|

| 17 |

|

| 18 |

## How to use

|

| 19 |

The following snippet illustrates code usage:

|

|

@@ -33,7 +35,7 @@ print(scores)

|

|

| 33 |

|

| 34 |

## Model details

|

| 35 |

|

| 36 |

-

We slightly modify [RoBERTa-Large NLI](https://huggingface.co/ynie/roberta-large-snli_mnli_fever_anli_R1_R2_R3-nli) model

|

| 37 |

|

| 38 |

|

| 39 |

|

|

@@ -41,7 +43,13 @@ We slightly modify [RoBERTa-Large NLI](https://huggingface.co/ynie/roberta-large

|

|

| 41 |

|

| 42 |

This measure was developed as part of a large-scale comparison of different measures on text style transfer and paraphrase datasets.

|

| 43 |

|

| 44 |

|

|

|

|

| 45 |

|

| 46 |

## Citations

|

| 47 |

If you find this repository helpful, feel free to cite our publication:

|

|

|

|

| 11 |

|

| 12 |

## Model overview

|

| 13 |

|

| 14 |

+

Mutual Implication Score (MIS) is a symmetric measure of text semantic similarity

|

| 15 |

based on a RoBERTa model pretrained for natural language inference

|

| 16 |

+

and fine-tuned on a paraphrase dataset.

|

| 17 |

+

|

| 18 |

+

It is **particularly useful for paraphrase detection**, but can also be applied to other semantic similarity tasks, such as text style transfer.

|
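To make the word "symmetric" concrete, here is a toy sketch in Python. It is not the MIS model and does not claim to reproduce how MIS combines the two directions: `toy_directional_score` is a hypothetical stand-in for a one-directional (premise to hypothesis) scorer, and averaging the two directions is simply one assumed way to obtain a score that does not depend on argument order.

```python
def toy_directional_score(premise: str, hypothesis: str) -> float:
    """Hypothetical one-directional scorer (NOT an NLI model):
    the fraction of hypothesis tokens that also occur in the premise."""
    premise_tokens = set(premise.lower().split())
    hypothesis_tokens = hypothesis.lower().split()
    if not hypothesis_tokens:
        return 0.0
    covered = sum(token in premise_tokens for token in hypothesis_tokens)
    return covered / len(hypothesis_tokens)


def symmetric_score(text_a: str, text_b: str, scorer=toy_directional_score) -> float:
    """Average the scorer over both argument orders (an assumed combination),
    so that swapping the two texts cannot change the result."""
    forward = scorer(text_a, text_b)   # text_a as premise, text_b as hypothesis
    backward = scorer(text_b, text_a)  # text_b as premise, text_a as hypothesis
    return (forward + backward) / 2


long_text = "the cat sat on the mat"
short_text = "the cat sat"

# The directional scores differ depending on which text is the premise...
print(toy_directional_score(long_text, short_text))
print(toy_directional_score(short_text, long_text))
# ...while the symmetric score is the same in either order.
print(symmetric_score(long_text, short_text) == symmetric_score(short_text, long_text))
```

A one-directional score can rate a short text as fully covered by a longer one while the reverse direction scores lower; a symmetric measure only rates a pair highly when both directions agree, which is the property a paraphrase check needs.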

| 19 |

|

| 20 |

## How to use

|

| 21 |

The following snippet illustrates code usage:

|

|

|

|

| 35 |

|

| 36 |

## Model details

|

| 37 |

|

| 38 |

+

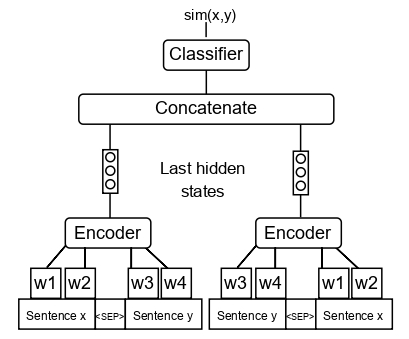

We slightly modify the [RoBERTa-Large NLI](https://huggingface.co/ynie/roberta-large-snli_mnli_fever_anli_R1_R2_R3-nli) model architecture (see the scheme below) and fine-tune it on the [QQP](https://www.kaggle.com/c/quora-question-pairs) paraphrase dataset.

|

| 39 |

|

| 40 |

|

| 41 |

|

|

|

|

| 43 |

|

| 44 |

This measure was developed as part of a large-scale comparison of different measures on text style transfer and paraphrase datasets.

|

| 45 |

|

| 46 |

+

<img src="https://huggingface.co/SkolkovoInstitute/Mutual_Implication_Score/raw/main/corr_main.jpg" alt="drawing" width="1000"/>

|

| 47 |

+

|

| 48 |

+

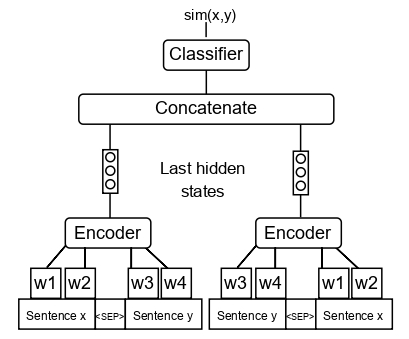

The scheme above shows the correlations of measures of different classes with human judgments on paraphrase and text style transfer datasets. The text above each dataset indicates the best-performing measure. The rightmost columns show the mean performance of measures across the datasets.

|

| 49 |

+

|

| 50 |

+

MIS outperforms all other measures on the paraphrase detection task and performs on par with the top measures on the text style transfer task.

|

| 51 |

|

| 52 |

+

To learn more, refer to our article: [A large-scale computational study of content preservation measures for text style transfer and paraphrase generation](https://aclanthology.org/2022.acl-srw.23/)

|

| 53 |

|

| 54 |

## Citations

|

| 55 |

If you find this repository helpful, feel free to cite our publication:

|