Update README.md

README.md

CHANGED

|

@@ -24,7 +24,7 @@ metrics:

|

|

| 24 |

|

| 25 |

Lamarck 14B v0.7: A generalist merge with emphasis on multi-step reasoning, prose, and multi-language ability. The 14B parameter model class has a lot of strong performers, and Lamarck strives to be well-rounded and solid:

|

| 26 |

|

| 27 |

-

Lamarck is produced by a custom toolchain to automate a

|

| 28 |

|

| 29 |

- **Extracted LoRA adapters from special-purpose merges**

|

| 30 |

- **Custom base models and model_stocks, with LoRAs from from [huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2](https://huggingface.co/huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2) to minimize IFEVAL loss often seen in model_stock merges**

|

|

|

|

| 24 |

|

| 25 |

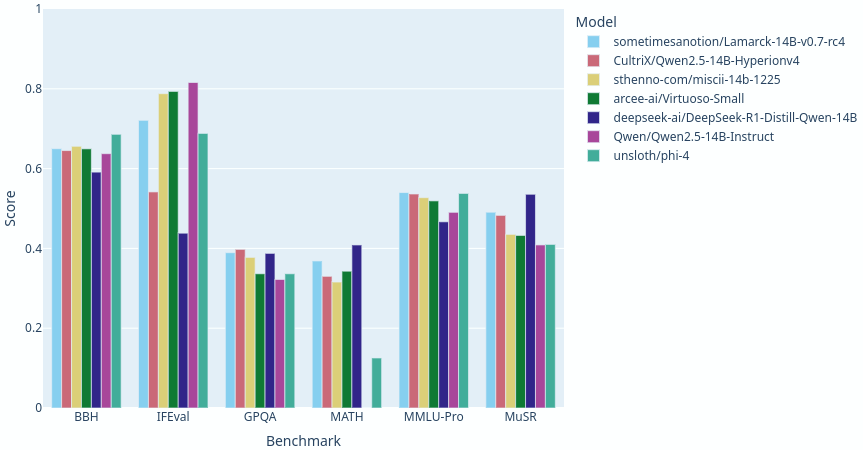

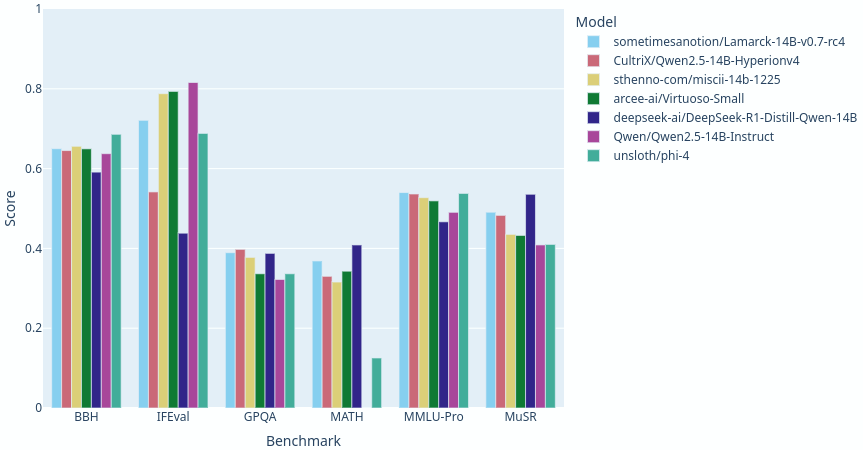

Lamarck 14B v0.7: A generalist merge with emphasis on multi-step reasoning, prose, and multi-language ability. The 14B parameter model class has a lot of strong performers, and Lamarck strives to be well-rounded and solid:

|

| 26 |

|

| 27 |

+

Lamarck is produced by a custom toolchain to automate a process of LoRAs and layer-targeting merges:

|

| 28 |

|

| 29 |

- **Extracted LoRA adapters from special-purpose merges**

|

| 30 |

- **Custom base models and model_stocks, with LoRAs from from [huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2](https://huggingface.co/huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2) to minimize IFEVAL loss often seen in model_stock merges**

|