Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- .gitignore +4 -0

- LICENSE +21 -0

- README.md +330 -13

- cog.yaml +25 -0

- configs/__init__.py +0 -0

- configs/__pycache__/__init__.cpython-310.pyc +0 -0

- configs/__pycache__/paths_config.cpython-310.pyc +0 -0

- configs/data_configs.py +13 -0

- configs/paths_config.py +12 -0

- configs/transforms_config.py +37 -0

- criteria/__init__.py +0 -0

- criteria/aging_loss.py +59 -0

- criteria/id_loss.py +55 -0

- criteria/lpips/__init__.py +0 -0

- criteria/lpips/lpips.py +35 -0

- criteria/lpips/networks.py +96 -0

- criteria/lpips/utils.py +30 -0

- criteria/w_norm.py +14 -0

- datasets/__init__.py +0 -0

- datasets/__pycache__/__init__.cpython-310.pyc +0 -0

- datasets/__pycache__/augmentations.cpython-310.pyc +0 -0

- datasets/augmentations.py +24 -0

- datasets/images_dataset.py +33 -0

- datasets/inference_dataset.py +29 -0

- docs/1005_style_mixing.jpg +0 -0

- docs/1936.jpg +0 -0

- docs/2195.jpg +0 -0

- docs/866.jpg +0 -0

- docs/teaser.jpeg +3 -0

- environment/sam_env.yaml +36 -0

- licenses/LICENSE_InterDigitalInc +150 -0

- licenses/LICENSE_S-aiueo32 +25 -0

- licenses/LICENSE_TreB1eN +21 -0

- licenses/LICENSE_eladrich +21 -0

- licenses/LICENSE_lessw2020 +201 -0

- licenses/LICENSE_rosinality +21 -0

- models/__init__.py +0 -0

- models/__pycache__/__init__.cpython-310.pyc +0 -0

- models/__pycache__/psp.cpython-310.pyc +0 -0

- models/dex_vgg.py +65 -0

- models/encoders/__init__.py +0 -0

- models/encoders/__pycache__/__init__.cpython-310.pyc +0 -0

- models/encoders/__pycache__/helpers.cpython-310.pyc +0 -0

- models/encoders/__pycache__/psp_encoders.cpython-310.pyc +0 -0

- models/encoders/helpers.py +119 -0

- models/encoders/model_irse.py +48 -0

- models/encoders/psp_encoders.py +114 -0

- models/psp.py +131 -0

- models/stylegan2/__init__.py +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+docs/teaser.jpeg filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,4 @@
+.idea
+.DS_Store
+pretrained_models/
+shape_predictor_68_face_landmarks.dat

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2021 Yuval Alaluf
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

CHANGED

|

@@ -1,13 +1,330 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|


| 1 |

+

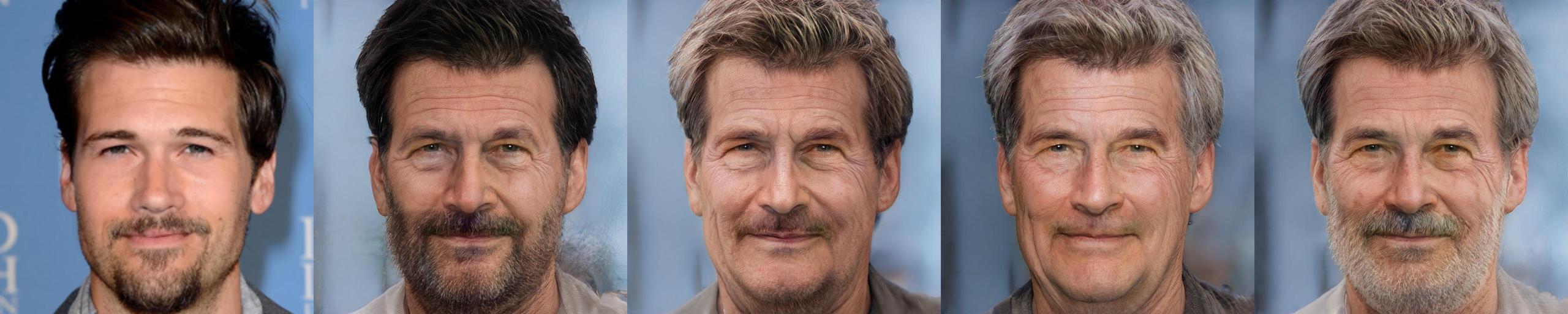

# Only a Matter of Style: Age Transformation Using a Style-Based Regression Model (SIGGRAPH 2021)

|

| 2 |

+

|

| 3 |

+

> The task of age transformation illustrates the change of an individual's appearance over time. Accurately modeling this complex transformation over an input facial image is extremely challenging as it requires making convincing and possibly large changes to facial features and head shape, while still preserving the input identity. In this work, we present an image-to-image translation method that learns to directly encode real facial images into the latent space of a pre-trained unconditional GAN (e.g., StyleGAN) subject to a given aging shift. We employ a pre-trained age regression network used to explicitly guide the encoder to generate the latent codes corresponding to the desired age. In this formulation, our method approaches the continuous aging process as a regression task between the input age and desired target age, providing fine-grained control on the generated image. Moreover, unlike other approaches that operate solely in the latent space using a prior on the path controlling age, our method learns a more disentangled, non-linear path. We demonstrate that the end-to-end nature of our approach, coupled with the rich semantic latent space of StyleGAN, allows for further editing of the generated images. Qualitative and quantitative evaluations show the advantages of our method compared to state-of-the-art approaches.

|

| 4 |

+

|

| 5 |

+

<a href="https://arxiv.org/abs/2102.02754"><img src="https://img.shields.io/badge/arXiv-2102.02754-b31b1b.svg" height=22.5></a>

|

| 6 |

+

<a href="https://opensource.org/licenses/MIT"><img src="https://img.shields.io/badge/License-MIT-yellow.svg" height=22.5></a>

|

| 7 |

+

|

| 8 |

+

<a href="https://www.youtube.com/watch?v=zDTUbtmUbG8"><img src="https://img.shields.io/static/v1?label=Two Minute Papers&message=SAM Video&color=red" height=22.5></a>

|

| 9 |

+

<a href="https://youtu.be/X_pYC_LtBFw"><img src="https://img.shields.io/static/v1?label=SIGGRAPH 2021 &message=5 Minute Video&color=red" height=22.5></a>

|

| 10 |

+

<a href="https://replicate.ai/yuval-alaluf/sam"><img src="https://img.shields.io/static/v1?label=Replicate&message=Demo and Docker Image&color=darkgreen" height=22.5></a>

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

Inference Notebook: <a href="http://colab.research.google.com/github/yuval-alaluf/SAM/blob/master/notebooks/inference_playground.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" height=22.5></a>

|

| 14 |

+

Animation Notebook: <a href="http://colab.research.google.com/github/yuval-alaluf/SAM/blob/master/notebooks/animation_inference_playground.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" height=22.5></a>

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

<p align="center">

|

| 18 |

+

<img src="docs/teaser.jpeg" width="800px"/>

|

| 19 |

+

</p>

|

| 20 |

+

|

| 21 |

+

## Description

|

| 22 |

+

Official Implementation of our Style-based Age Manipulation (SAM) paper for both training and evaluation. SAM

|

| 23 |

+

allows modeling fine-grained age transformation using a single input facial image.

|

| 24 |

+

|

| 25 |

+

<p align="center">

|

| 26 |

+

<img src="docs/2195.jpg" width="800px"/>

|

| 27 |

+

<img src="docs/1936.jpg" width="800px"/>

|

| 28 |

+

</p>

|

| 29 |

+

|

| 30 |

+

## Table of Contents

|

| 31 |

+

* [Getting Started](#getting-started)

|

| 32 |

+

+ [Prerequisites](#prerequisites)

|

| 33 |

+

+ [Installation](#installation)

|

| 34 |

+

* [Pretrained Models](#pretrained-models)

|

| 35 |

+

* [Training](#training)

|

| 36 |

+

+ [Preparing your Data](#preparing-your-data)

|

| 37 |

+

+ [Training SAM](#training-sam)

|

| 38 |

+

+ [Additional Notes](#additional-notes)

|

| 39 |

+

* [Notebooks](#notebooks)

|

| 40 |

+

+ [Inference Notebook](#inference-notebook)

|

| 41 |

+

+ [MP4 Notebook](#mp4-notebook)

|

| 42 |

+

* [Testing](#testing)

|

| 43 |

+

+ [Inference](#inference)

|

| 44 |

+

+ [Side-by-Side Inference](#side-by-side-inference)

|

| 45 |

+

+ [Reference-Guided Inference](#reference-guided-inference)

|

| 46 |

+

+ [Style Mixing](#style-mixing)

|

| 47 |

+

* [Repository structure](#repository-structure)

|

| 48 |

+

* [Credits](#credits)

|

| 49 |

+

* [Acknowledgments](#acknowledgments)

|

| 50 |

+

* [Citation](#citation)

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

## Getting Started

|

| 54 |

+

### Prerequisites

|

| 55 |

+

- Linux or macOS

|

| 56 |

+

- NVIDIA GPU + CUDA CuDNN (CPU may be possible with some modifications, but is not inherently supported)

|

| 57 |

+

- Python 3

|

| 58 |

+

|

| 59 |

+

### Installation

|

| 60 |

+

- Dependencies:

|

| 61 |

+

We recommend running this repository using [Anaconda](https://docs.anaconda.com/anaconda/install/).

|

| 62 |

+

All dependencies for defining the environment are provided in `environment/sam_env.yaml`.

|

| 63 |

+

|

| 64 |

+

## Pretrained Models

|

| 65 |

+

Please download the pretrained aging model from the following links.

|

| 66 |

+

|

| 67 |

+

| Path | Description

|

| 68 |

+

| :--- | :----------

|

| 69 |

+

|[SAM](https://drive.google.com/file/d/1XyumF6_fdAxFmxpFcmPf-q84LU_22EMC/view?usp=sharing) | SAM trained on the FFHQ dataset for age transformation.

|

| 70 |

+

|

| 71 |

+

You can run this to download it to the right place:

|

| 72 |

+

|

| 73 |

+

```

|

| 74 |

+

mkdir pretrained_models

|

| 75 |

+

pip install gdown

|

| 76 |

+

gdown "https://drive.google.com/u/0/uc?id=1XyumF6_fdAxFmxpFcmPf-q84LU_22EMC&export=download" -O pretrained_models/sam_ffhq_aging.pt

|

| 77 |

+

wget "https://github.com/italojs/facial-landmarks-recognition/raw/master/shape_predictor_68_face_landmarks.dat"

|

| 78 |

+

```

|

| 79 |

+

|

| 80 |

+

In addition, we provide various auxiliary models needed for training your own SAM model from scratch.

|

| 81 |

+

This includes the pretrained pSp encoder model for generating the encodings of the input image and the aging classifier

|

| 82 |

+

used to compute the aging loss during training.

|

| 83 |

+

|

| 84 |

+

| Path | Description

|

| 85 |

+

| :--- | :----------

|

| 86 |

+

|[pSp Encoder](https://drive.google.com/file/d/1bMTNWkh5LArlaWSc_wa8VKyq2V42T2z0/view?usp=sharing) | pSp taken from [pixel2style2pixel](https://github.com/eladrich/pixel2style2pixel) trained on the FFHQ dataset for StyleGAN inversion.

|

| 87 |

+

|[FFHQ StyleGAN](https://drive.google.com/file/d/1EM87UquaoQmk17Q8d5kYIAHqu0dkYqdT/view?usp=sharing) | StyleGAN model pretrained on FFHQ taken from [rosinality](https://github.com/rosinality/stylegan2-pytorch) with 1024x1024 output resolution.

|

| 88 |

+

|[IR-SE50 Model](https://drive.google.com/file/d/1KW7bjndL3QG3sxBbZxreGHigcCCpsDgn/view?usp=sharing) | Pretrained IR-SE50 model taken from [TreB1eN](https://github.com/TreB1eN/InsightFace_Pytorch) for use in our ID loss during training.

|

| 89 |

+

|[VGG Age Classifier](https://drive.google.com/file/d/1atzjZm_dJrCmFWCqWlyspSpr3nI6Evsh/view?usp=sharing) | VGG age classifier from DEX and fine-tuned on the FFHQ-Aging dataset for use in our aging loss

|

| 90 |

+

|

| 91 |

+

By default, we assume that all auxiliary models are downloaded and saved to the directory `pretrained_models`.

|

| 92 |

+

However, you may use your own paths by changing the necessary values in `configs/paths_config.py`.

|
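Before training, it can help to confirm that the auxiliary models are where the config expects them. The following is a minimal sketch: the keys and file names are copied from `configs/paths_config.py` in this commit, while `find_missing_models` is a hypothetical helper that is not part of the repository.

```python
from pathlib import Path

# Keys and relative paths mirror configs/paths_config.py in this commit.
model_paths = {
    'pretrained_psp_encoder': 'pretrained_models/psp_ffhq_encode.pt',
    'ir_se50': 'pretrained_models/model_ir_se50.pth',
    'stylegan_ffhq': 'pretrained_models/stylegan2-ffhq-config-f.pt',
    'shape_predictor': 'shape_predictor_68_face_landmarks.dat',
    'age_predictor': 'pretrained_models/dex_age_classifier.pth',
}


def find_missing_models(paths, root='.'):
    """Hypothetical helper: return the sorted keys whose files are absent under `root`."""
    root = Path(root)
    return sorted(key for key, rel in paths.items() if not (root / rel).is_file())


# Report which auxiliary models still need to be downloaded.
for key in find_missing_models(model_paths):
    print(f"missing: {key} -> {model_paths[key]}")
```

If you keep the models elsewhere, edit the values in `configs/paths_config.py` and pass the matching `root` to the check.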

| 93 |

+

|

| 94 |

+

## Training

|

| 95 |

+

### Preparing your Data

|

| 96 |

+

Please refer to `configs/paths_config.py` to define the necessary data paths and model paths for training and inference.

|

| 97 |

+

Then, refer to `configs/data_configs.py` to define the source/target data paths for the train and test sets as well as the

|

| 98 |

+

transforms to be used for training and inference.

|

| 99 |

+

|

| 100 |

+

As an example, we can first go to `configs/paths_config.py` and define:

|

| 101 |

+

```

|

| 102 |

+

dataset_paths = {

|

| 103 |

+

'ffhq': '/path/to/ffhq/images256x256',

|

| 104 |

+

'celeba_test': '/path/to/CelebAMask-HQ/test_img',

|

| 105 |

+

}

|

| 106 |

+

```

|

| 107 |

+

Then, in `configs/data_configs.py`, we define:

|

| 108 |

+

```

|

| 109 |

+

DATASETS = {

|

| 110 |

+

'ffhq_aging': {

|

| 111 |

+

'transforms': transforms_config.AgingTransforms,

|

| 112 |

+

'train_source_root': dataset_paths['ffhq'],

|

| 113 |

+

'train_target_root': dataset_paths['ffhq'],

|

| 114 |

+

'test_source_root': dataset_paths['celeba_test'],

|

| 115 |

+

'test_target_root': dataset_paths['celeba_test'],

|

| 116 |

+

}

|

| 117 |

+

}

|

| 118 |

+

```

|

| 119 |

+

When defining the datasets for training and inference, we will use the values defined in the above dictionary.

|
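As a rough illustration of how a `--dataset_type` value maps onto these dictionaries, here is a dependency-free sketch. The `resolve_dataset` helper is hypothetical, and the `'transforms'` entry is replaced by a placeholder string so the example runs without torchvision. The actual lookup in the training code may differ.

```python
# Paths as in the README example above.
dataset_paths = {
    'ffhq': '/path/to/ffhq/images256x256',
    'celeba_test': '/path/to/CelebAMask-HQ/test_img',
}

DATASETS = {
    'ffhq_aging': {
        # Placeholder for transforms_config.AgingTransforms (assumption: kept as a
        # string here only to avoid importing torchvision in this sketch).
        'transforms': 'AgingTransforms',
        'train_source_root': dataset_paths['ffhq'],
        'train_target_root': dataset_paths['ffhq'],
        'test_source_root': dataset_paths['celeba_test'],
        'test_target_root': dataset_paths['celeba_test'],
    }
}


def resolve_dataset(dataset_type):
    """Hypothetical helper: look up the dataset entry for a --dataset_type value."""
    if dataset_type not in DATASETS:
        raise ValueError(f"{dataset_type!r} is not a valid dataset_type; choose from {sorted(DATASETS)}")
    return DATASETS[dataset_type]


dataset_args = resolve_dataset('ffhq_aging')
print(dataset_args['train_source_root'])
```

Source and target roots are identical in this configuration.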

| 120 |

+

|

| 121 |

+

|

| 122 |

+

### Training SAM

|

| 123 |

+

The main training script can be found in `scripts/train.py`.

|

| 124 |

+

Intermediate training results are saved to `opts.exp_dir`. This includes checkpoints, train outputs, and test outputs.

|

| 125 |

+

Additionally, if you have tensorboard installed, you can visualize tensorboard logs in `opts.exp_dir/logs`.

|

| 126 |

+

|

| 127 |

+

Training SAM with the settings used in the paper can be done by running the following command:

|

| 128 |

+

```

|

| 129 |

+

python scripts/train.py \

|

| 130 |

+

--dataset_type=ffhq_aging \

|

| 131 |

+

--exp_dir=/path/to/experiment \

|

| 132 |

+

--workers=6 \

|

| 133 |

+

--batch_size=6 \

|

| 134 |

+

--test_batch_size=6 \

|

| 135 |

+

--test_workers=6 \

|

| 136 |

+

--val_interval=2500 \

|

| 137 |

+

--save_interval=10000 \

|

| 138 |

+

--start_from_encoded_w_plus \

|

| 139 |

+

--id_lambda=0.1 \

|

| 140 |

+

--lpips_lambda=0.1 \

|

| 141 |

+

--lpips_lambda_aging=0.1 \

|

| 142 |

+

--lpips_lambda_crop=0.6 \

|

| 143 |

+

--l2_lambda=0.25 \

|

| 144 |

+

--l2_lambda_aging=0.25 \

|

| 145 |

+

--l2_lambda_crop=1 \

|

| 146 |

+

--w_norm_lambda=0.005 \

|

| 147 |

+

--aging_lambda=5 \

|

| 148 |

+

--cycle_lambda=1 \

|

| 149 |

+

--input_nc=4 \

|

| 150 |

+

--target_age=uniform_random \

|

| 151 |

+

--use_weighted_id_loss

|

| 152 |

+

```

|

| 153 |

+

|

| 154 |

+

### Additional Notes

|

| 155 |

+

- See `options/train_options.py` for all training-specific flags.

|

| 156 |

+

- Note that using the flag `--start_from_encoded_w_plus` requires you to specify the path to the pretrained pSp encoder.

|

| 157 |

+

By default, this path is taken from `configs.paths_config.model_paths['pretrained_psp_encoder']`.

|

| 158 |

+

- If you wish to resume from a specific checkpoint (e.g. a pretrained SAM model), you may do so using `--checkpoint_path`.

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

## Notebooks

|

| 162 |

+

### Inference Notebook

|

| 163 |

+

To help visualize the results of SAM we provide a Jupyter notebook found in `notebooks/inference_playground.ipynb`.

|

| 164 |

+

The notebook will download the pretrained aging model and run inference on the images found in `notebooks/images`.

|

| 165 |

+

|

| 166 |

+

In addition, [Replicate](https://replicate.ai/) have created a demo for SAM where you can easily upload an image and run SAM on a desired set of ages! Check

|

| 167 |

+

out the demo [here](https://replicate.ai/yuval-alaluf/sam).

|

| 168 |

+

|

| 169 |

+

### MP4 Notebook

|

| 170 |

+

To show full lifespan results using SAM we provide an additional notebook `notebooks/animation_inference_playground.ipynb` that will

|

| 171 |

+

run aging on multiple ages between 0 and 100 and interpolate between the results to display full aging.

|

| 172 |

+

The results will be saved as MP4 files in `notebooks/animations` showing the aging and de-aging results.

|
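The interpolation step can be pictured with a small sketch. This assumes plain linear interpolation between consecutive results, each represented as a flat list of floats. Both `lerp` and `interpolate_sequence` are hypothetical names, and the notebook may interpolate differently.

```python
def lerp(a, b, t):
    """Linearly interpolate between two equal-length vectors for t in [0, 1]."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolate_sequence(keyframes, steps_between):
    """Expand a list of keyframes into a longer list with evenly spaced in-between frames."""
    frames = []
    for start, end in zip(keyframes, keyframes[1:]):
        for i in range(steps_between):
            frames.append(lerp(start, end, i / steps_between))
    frames.append(list(keyframes[-1]))  # include the final keyframe exactly once
    return frames


# Three toy "results" (e.g. one per target age), two frames per segment.
frames = interpolate_sequence([[0.0], [10.0], [20.0]], steps_between=2)
print(len(frames))
```

Each keyframe here stands in for whatever is being blended (an output image or a latent code); the sketch does not assume which one the notebook uses.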

| 173 |

+

|

| 174 |

+

## Testing

|

| 175 |

+

### Inference

|

| 176 |

+

Having trained your model or if you're using a pretrained SAM model, you can use `scripts/inference.py` to run inference

|

| 177 |

+

on a set of images.

|

| 178 |

+

For example,

|

| 179 |

+

```

|

| 180 |

+

python scripts/inference.py \

|

| 181 |

+

--exp_dir=/path/to/experiment \

|

| 182 |

+

--checkpoint_path=experiment/checkpoints/best_model.pt \

|

| 183 |

+

--data_path=/path/to/test_data \

|

| 184 |

+

--test_batch_size=4 \

|

| 185 |

+

--test_workers=4 \

|

| 186 |

+

--couple_outputs \

|

| 187 |

+

--target_age=0,10,20,30,40,50,60,70,80

|

| 188 |

+

```

|

| 189 |

+

Additional notes to consider:

|

| 190 |

+

- During inference, the options used during training are loaded from the saved checkpoint and are then updated using the

|

| 191 |

+

test options passed to the inference script.

|

| 192 |

+

- Adding the flag `--couple_outputs` will save an additional image containing the input and output images side-by-side in the sub-directory

|

| 193 |

+

`inference_coupled`. Otherwise, only the output image is saved to the sub-directory `inference_results`.

|

| 194 |

+

- In the above example, we will run age transformation with target ages 0,10,...,80.

|

| 195 |

+

- The results of each target age are saved to the sub-directories `inference_results/TARGET_AGE` and `inference_coupled/TARGET_AGE`.

|

| 196 |

+

- By default, the images will be saved at a resolution of 1024x1024, the original output size of StyleGAN.

|

| 197 |

+

- If you wish to save outputs resized to a resolution of 256x256, you can do so by adding the flag `--resize_outputs`.

|
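To make the output layout described in the notes above concrete, here is a minimal sketch of how a comma-separated `--target_age` value could map to the per-age sub-directories. The helper names `parse_target_ages` and `output_dirs` are hypothetical, and the script's own parsing may differ.

```python
from pathlib import PurePosixPath


def parse_target_ages(value):
    """Parse an inference-style value such as '0,10,20' into a list of ints."""
    return [int(age) for age in value.split(',')]


def output_dirs(exp_dir, target_ages, couple_outputs=False):
    """List the sub-directories that would hold results for each target age."""
    root = PurePosixPath(exp_dir)
    dirs = [str(root / 'inference_results' / str(age)) for age in target_ages]
    if couple_outputs:
        # Side-by-side input/output images go to a parallel directory tree.
        dirs += [str(root / 'inference_coupled' / str(age)) for age in target_ages]
    return dirs


ages = parse_target_ages('0,10,20,30,40,50,60,70,80')
for path in output_dirs('/path/to/experiment', ages, couple_outputs=True):
    print(path)
```

This parser only covers the list form used at inference time, not the `uniform_random` value used during training.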

| 198 |

+

|

| 199 |

+

### Side-by-Side Inference

|

| 200 |

+

The above inference script will save each aging result in a different sub-directory for each target age. Sometimes,

|

| 201 |

+

however, it is more convenient to save all aging results of a given input side-by-side like the following:

|

| 202 |

+

|

| 203 |

+

<p align="center">

|

| 204 |

+

<img src="docs/866.jpg" width="800px"/>

|

| 205 |

+

</p>

|

| 206 |

+

|

| 207 |

+

To do so, we provide a script `inference_side_by_side.py` that works in a similar manner as the regular inference script:

|

| 208 |

+

```

|

| 209 |

+

python scripts/inference_side_by_side.py \

|

| 210 |

+

--exp_dir=/path/to/experiment \

|

| 211 |

+

--checkpoint_path=experiment/checkpoints/best_model.pt \

|

| 212 |

+

--data_path=/path/to/test_data \

|

| 213 |

+

--test_batch_size=4 \

|

| 214 |

+

--test_workers=4 \

|

| 215 |

+

--target_age=0,10,20,30,40,50,60,70,80

|

| 216 |

+

```

|

| 217 |

+

Here, all aging results 0,10,...,80 will be saved side-by-side with the original input image.

|

| 218 |

+

|

| 219 |

+

### Reference-Guided Inference

|

| 220 |

+

In the paper, we demonstrated how one can perform style-mixing on the fine-level style inputs with a reference image

|

| 221 |

+

to control global features such as hair color. For example,

|

| 222 |

+

|

| 223 |

+

<p align="center">

|

| 224 |

+

<img src="docs/1005_style_mixing.jpg" width="800px"/>

|

| 225 |

+

</p>

|

| 226 |

+

|

| 227 |

+

To perform style mixing using reference images, we provide the script `reference_guided_inference.py`. Here,

|

| 228 |

+

we first perform aging using the specified target age(s). Then, style mixing is performed using the specified

|

| 229 |

+

reference images and the specified layers. For example, one can run:

|

| 230 |

+

```

|

| 231 |

+

python scripts/reference_guided_inference.py \

|

| 232 |

+

--exp_dir=/path/to/experiment \

|

| 233 |

+

--checkpoint_path=experiment/checkpoints/best_model.pt \

|

| 234 |

+

--data_path=/path/to/test_data \

|

| 235 |

+

--test_batch_size=4 \

|

| 236 |

+

--test_workers=4 \

|

| 237 |

+

--ref_images_paths_file=/path/to/ref_list.txt \

|

| 238 |

+

--latent_mask=8,9 \

|

| 239 |

+

--target_age=50,60,70,80

|

| 240 |

+

```

|

| 241 |

+

Here, the reference images should be specified in the file defined by `--ref_images_paths_file` and should have the

|

| 242 |

+

following format:

|

| 243 |

+

```

|

| 244 |

+

/path/to/reference/1.jpg

|

| 245 |

+

/path/to/reference/2.jpg

|

| 246 |

+

/path/to/reference/3.jpg

|

| 247 |

+

/path/to/reference/4.jpg

|

| 248 |

+

/path/to/reference/5.jpg

|

| 249 |

+

```

|

| 250 |

+

In the above example, we will perform aging using 4 different target ages. For each target age, we first transform the

|

| 251 |

+

test samples defined by `--data_path` and then perform style mixing on layers 8,9 defined by `--latent_mask`.

|

| 252 |

+

The results of each target age are saved in its own sub-directory.

|
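The mixing step can be sketched on toy data. This assumes a W+ code is a list of 18 per-layer style vectors (the layer count of a 1024x1024 StyleGAN generator) and that mixing overwrites the layers named in `--latent_mask` with the reference's layers. The `alpha` blend and both helper names are hypothetical and are not taken from `reference_guided_inference.py`.

```python
def read_reference_paths(text):
    """Parse the contents of a reference list file: one image path per non-empty line."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def mix_styles(codes, ref_codes, latent_mask, alpha=1.0):
    """Blend the masked layers of `codes` toward `ref_codes`; alpha=1.0 replaces them outright."""
    mixed = [list(layer) for layer in codes]  # copy so the input is left untouched
    for i in latent_mask:
        mixed[i] = [alpha * r + (1.0 - alpha) * c for c, r in zip(codes[i], ref_codes[i])]
    return mixed


refs = read_reference_paths("/path/to/reference/1.jpg\n/path/to/reference/2.jpg\n")
aged = [[0.0, 0.0] for _ in range(18)]       # toy aged code, 2 dims per layer
reference = [[1.0, 1.0] for _ in range(18)]  # toy reference code
mixed = mix_styles(aged, reference, latent_mask=[8, 9])
print(refs, mixed[7], mixed[8])
```

Only layers 8 and 9 change here; all other layers keep the aged code.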

| 253 |

+

|

| 254 |

+

### Style Mixing

|

| 255 |

+

Instead of performing style mixing using a reference image, you can perform style mixing using randomly generated

|

| 256 |

+

w latent vectors by running the script `style_mixing.py`. This script works in a similar manner to the reference

|

| 257 |

+

guided inference except you do not need to specify the `--ref_images_paths_file` flag.

|

| 258 |

+

|

| 259 |

+

## Repository structure

|

| 260 |

+

| Path | Description <img width=200>

|

| 261 |

+

| :--- | :---

|

| 262 |

+

| SAM | Repository root folder

|

| 263 |

+

| ├ configs | Folder containing configs defining model/data paths and data transforms

|

| 264 |

+

| ├ criteria | Folder containing various loss criteria for training

|

| 265 |

+

| ├ datasets | Folder with various dataset objects and augmentations

|

| 266 |

+

| ├ docs | Folder containing images displayed in the README

|

| 267 |

+

| ├ environment | Folder containing Anaconda environment used in our experiments

|

| 268 |

+

| ├ models | Folder containing all the models and training objects

|

| 269 |

+

| │ ├ encoders | Folder containing various architecture implementations

|

| 270 |

+

| │ ├ stylegan2 | StyleGAN2 model from [rosinality](https://github.com/rosinality/stylegan2-pytorch)

|

| 271 |

+

| │ ├ psp.py | Implementation of pSp encoder

|

| 272 |

+

| │ └ dex_vgg.py | Implementation of DEX VGG classifier used in computation of aging loss

|

| 273 |

+

| ├ notebooks | Folder with Jupyter notebooks containing SAM inference playgrounds

|

| 274 |

+

| ├ options | Folder with training and test command-line options

|

| 275 |

+

| ├ scripts | Folder with running scripts for training and inference

|

| 276 |

+

| ├ training | Folder with main training logic and Ranger implementation from [lessw2020](https://github.com/lessw2020/Ranger-Deep-Learning-Optimizer)

|

| 277 |

+

| ├ utils | Folder with various utility functions

|

| 278 |

+

| <img width=300> | <img>

|

| 279 |

+

|

| 280 |

+

|

| 281 |

+

## Credits

|

| 282 |

+

**StyleGAN2 model and implementation:**

|

| 283 |

+

https://github.com/rosinality/stylegan2-pytorch

|

| 284 |

+

Copyright (c) 2019 Kim Seonghyeon

|

| 285 |

+

License (MIT) https://github.com/rosinality/stylegan2-pytorch/blob/master/LICENSE

|

| 286 |

+

|

| 287 |

+

**IR-SE50 model and implementations:**

|

| 288 |

+

https://github.com/TreB1eN/InsightFace_Pytorch

|

| 289 |

+

Copyright (c) 2018 TreB1eN

|

| 290 |

+

License (MIT) https://github.com/TreB1eN/InsightFace_Pytorch/blob/master/LICENSE

|

| 291 |

+

|

| 292 |

+

**Ranger optimizer implementation:**

|

| 293 |

+

https://github.com/lessw2020/Ranger-Deep-Learning-Optimizer

|

| 294 |

+

License (Apache License 2.0) https://github.com/lessw2020/Ranger-Deep-Learning-Optimizer/blob/master/LICENSE

|

| 295 |

+

|

| 296 |

+

**LPIPS model and implementation:**

|

| 297 |

+

https://github.com/S-aiueo32/lpips-pytorch

|

| 298 |

+

Copyright (c) 2020, Sou Uchida

|

| 299 |

+

License (BSD 2-Clause) https://github.com/S-aiueo32/lpips-pytorch/blob/master/LICENSE

|

| 300 |

+

|

| 301 |

+

**DEX VGG model and implementation:**

|

| 302 |

+

https://github.com/InterDigitalInc/HRFAE

|

| 303 |

+

Copyright (c) 2020, InterDigital R&D France

|

| 304 |

+

https://github.com/InterDigitalInc/HRFAE/blob/master/LICENSE.txt

|

| 305 |

+

|

| 306 |

+

**pSp model and implementation:**

|

| 307 |

+

https://github.com/eladrich/pixel2style2pixel

|

| 308 |

+

Copyright (c) 2020 Elad Richardson, Yuval Alaluf

|

| 309 |

+

https://github.com/eladrich/pixel2style2pixel/blob/master/LICENSE

|

| 310 |

+

|

| 311 |

+

## Acknowledgments

|

| 312 |

+

This code borrows heavily from [pixel2style2pixel](https://github.com/eladrich/pixel2style2pixel)

|

| 313 |

+

|

| 314 |

+

## Citation

|

| 315 |

+

If you use this code for your research, please cite our paper <a href="https://arxiv.org/abs/2102.02754">Only a Matter of Style: Age Transformation Using a Style-Based Regression Model</a>:

|

| 316 |

+

|

| 317 |

+

```

|

| 318 |

+

@article{alaluf2021matter,

|

| 319 |

+

author = {Alaluf, Yuval and Patashnik, Or and Cohen-Or, Daniel},

|

| 320 |

+

title = {Only a Matter of Style: Age Transformation Using a Style-Based Regression Model},

|

| 321 |

+

journal = {ACM Trans. Graph.},

|

| 322 |

+

issue_date = {August 2021},

|

| 323 |

+

volume = {40},

|

| 324 |

+

number = {4},

|

| 325 |

+

year = {2021},

|

| 326 |

+

articleno = {45},

|

| 327 |

+

publisher = {Association for Computing Machinery},

|

| 328 |

+

url = {https://doi.org/10.1145/3450626.3459805}

|

| 329 |

+

}

|

| 330 |

+

```

|

cog.yaml

ADDED

@@ -0,0 +1,25 @@
+image: "r8.im/yuval-alaluf/sam"
+build:
+  gpu: true
+  python_version: "3.8"
+  system_packages:
+    - "cmake"
+    - "libgl1-mesa-glx"
+    - "libglib2.0-0"
+    - "ninja-build"
+  python_packages:
+    - "Pillow==8.3.1"
+    - "cmake==3.21.1"
+    - "dlib==19.22.1"
+    - "imageio==2.9.0"
+    - "ipython==7.21.0"
+    - "matplotlib==3.1.3"
+    - "numpy==1.21.1"
+    - "opencv-python==4.5.3.56"
+    - "scipy==1.4.1"
+    - "tensorboard==2.2.1"
+    - "torch==1.8.0"
+    - "torchvision==0.9.0"
+    - "tqdm==4.42.1"
+predict: "predict.py:Predictor"
+

configs/__init__.py

ADDED

File without changes

configs/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (155 Bytes)

configs/__pycache__/paths_config.cpython-310.pyc

ADDED

Binary file (533 Bytes)

configs/data_configs.py

ADDED

@@ -0,0 +1,13 @@
+from configs import transforms_config
+from configs.paths_config import dataset_paths
+
+
+DATASETS = {
+    'ffhq_aging': {
+        'transforms': transforms_config.AgingTransforms,
+        'train_source_root': dataset_paths['ffhq'],
+        'train_target_root': dataset_paths['ffhq'],
+        'test_source_root': dataset_paths['celeba_test'],
+        'test_target_root': dataset_paths['celeba_test'],
+    }
+}

configs/paths_config.py

ADDED

@@ -0,0 +1,12 @@
+dataset_paths = {
+    'celeba_test': '',
+    'ffhq': '',
+}
+
+model_paths = {
+    'pretrained_psp_encoder': 'pretrained_models/psp_ffhq_encode.pt',
+    'ir_se50': 'pretrained_models/model_ir_se50.pth',
+    'stylegan_ffhq': 'pretrained_models/stylegan2-ffhq-config-f.pt',
+    'shape_predictor': 'shape_predictor_68_face_landmarks.dat',
+    'age_predictor': 'pretrained_models/dex_age_classifier.pth'
+}

configs/transforms_config.py

ADDED

|

@@ -0,0 +1,37 @@

+from abc import abstractmethod
+import torchvision.transforms as transforms
+
+
+class TransformsConfig(object):
+
+    def __init__(self, opts):
+        self.opts = opts
+
+    @abstractmethod
+    def get_transforms(self):
+        pass
+
+
+class AgingTransforms(TransformsConfig):
+
+    def __init__(self, opts):
+        super(AgingTransforms, self).__init__(opts)
+
+    def get_transforms(self):
+        transforms_dict = {
+            'transform_gt_train': transforms.Compose([
+                transforms.Resize((256, 256)),
+                transforms.RandomHorizontalFlip(0.5),
+                transforms.ToTensor(),
+                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])]),
+            'transform_source': None,
+            'transform_test': transforms.Compose([
+                transforms.Resize((256, 256)),
+                transforms.ToTensor(),
+                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])]),
+            'transform_inference': transforms.Compose([
+                transforms.Resize((256, 256)),
+                transforms.ToTensor(),
+                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])
+        }
+        return transforms_dict

|
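Every pipeline in `configs/transforms_config.py` ends with `Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])`. Since `ToTensor` yields values in [0, 1], this step should rescale each channel to [-1, 1]. The sketch below illustrates that arithmetic in plain Python; `normalize_channel` is a hypothetical helper written for illustration and is not part of the commit or of torchvision.

```python
def normalize_channel(values, mean=0.5, std=0.5):
    """Apply (v - mean) / std element-wise, the per-channel formula used by Normalize."""
    return [(v - mean) / std for v in values]


# Hypothetical pixel intensities as ToTensor would produce them (range [0, 1]).
pixels = [0.0, 0.25, 0.5, 1.0]
normalized = normalize_channel(pixels)
print(normalized)
```

With a mean and standard deviation of 0.5, the endpoints 0 and 1 land on -1 and 1, which is the input range StyleGAN-style generators and encoders typically work with.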

criteria/__init__.py

ADDED

|

File without changes

|

criteria/aging_loss.py

ADDED

|

@@ -0,0 +1,59 @@

+import torch
+from torch import nn
+import torch.nn.functional as F
+
+from configs.paths_config import model_paths
+from models.dex_vgg import VGG
+
+
+class AgingLoss(nn.Module):
+
+    def __init__(self, opts):
+        super(AgingLoss, self).__init__()
+        self.age_net = VGG()
+        ckpt = torch.load(model_paths['age_predictor'], map_location="cpu")['state_dict']
+        ckpt = {k.replace('-', '_'): v for k, v in ckpt.items()}
+        self.age_net.load_state_dict(ckpt)
+        self.age_net.cuda()
+        self.age_net.eval()
+        self.min_age = 0
+        self.max_age = 100
+        self.opts = opts
+
+    def __get_predicted_age(self, age_pb):
+        predict_age_pb = F.softmax(age_pb)
+        predict_age = torch.zeros(age_pb.size(0)).type_as(predict_age_pb)
+        for i in range(age_pb.size(0)):
+            for j in range(age_pb.size(1)):
+                predict_age[i] += j * predict_age_pb[i][j]
+        return predict_age
+
+    def extract_ages(self, x):
+        x = F.interpolate(x, size=(224, 224), mode='bilinear')
+        predict_age_pb = self.age_net(x)['fc8']
+        predicted_age = self.__get_predicted_age(predict_age_pb)
+        return predicted_age
+
+    def forward(self, y_hat, y, target_ages, id_logs, label=None):
+        n_samples = y.shape[0]
+
+        if id_logs is None:
+            id_logs = []
+
+        input_ages = self.extract_ages(y) / 100.
+        output_ages = self.extract_ages(y_hat) / 100.
+
+        for i in range(n_samples):
+            # if id logs for the same exists, update the dictionary
+            if len(id_logs) > i:
+                id_logs[i].update({f'input_age_{label}': float(input_ages[i]) * 100,
+                                   f'output_age_{label}': float(output_ages[i]) * 100,
+                                   f'target_age_{label}': float(target_ages[i]) * 100})
+            # otherwise, create a new entry for the sample
+            else:
+                id_logs.append({f'input_age_{label}': float(input_ages[i]) * 100,
+                                f'output_age_{label}': float(output_ages[i]) * 100,
+                                f'target_age_{label}': float(target_ages[i]) * 100})
+
+        loss = F.mse_loss(output_ages, target_ages)
+        return loss, id_logs

|
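The age estimate in `criteria/aging_loss.py` is the expectation of the bin index under a softmax over the classifier's `fc8` outputs, and the loss is a mean squared error between predicted and target ages after both are scaled to roughly [0, 1]. The following is a minimal plain-Python sketch of that computation, not the commit's implementation; `expected_age` and `age_mse` are hypothetical names, and the sketch assumes one logit per integer age bin.

```python
import math


def expected_age(logits):
    """Softmax over age bins, then the probability-weighted mean bin index."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return sum(j * e / total for j, e in enumerate(exps))


def age_mse(output_ages, target_ages):
    """Mean squared error between two equally long lists of scaled ages."""
    return sum((o - t) ** 2 for o, t in zip(output_ages, target_ages)) / len(output_ages)


# Uniform logits over bins 0..100 give the midpoint of the age range.
uniform_age = expected_age([0.0] * 101)
# Ages are divided by 100 before the loss, mirroring the scaling in forward().
loss = age_mse([uniform_age / 100.0], [0.3])
print(uniform_age, loss)
```

In PyTorch the double loop over samples and bins could likely be replaced by a single weighted sum over the bin dimension, which would give the same expectation without per-element indexing.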

criteria/id_loss.py

ADDED

|

@@ -0,0 +1,55 @@

+import torch
+from torch import nn
+from configs.paths_config import model_paths
+from models.encoders.model_irse import Backbone
+
+
+class IDLoss(nn.Module):
+    def __init__(self):
+        super(IDLoss, self).__init__()
+        print('Loading ResNet ArcFace')
+        self.facenet = Backbone(input_size=112, num_layers=50, drop_ratio=0.6, mode='ir_se')
+        self.facenet.load_state_dict(torch.load(model_paths['ir_se50']))
+        self.face_pool = torch.nn.AdaptiveAvgPool2d((112, 112))
+        self.facenet.eval()
+
+    def extract_feats(self, x):
+        x = x[:, :, 35:223, 32:220]  # Crop interesting region
+        x = self.face_pool(x)
+        x_feats = self.facenet(x)
+        return x_feats
+
+    def forward(self, y_hat, y, x, label=None, weights=None):
+        n_samples = x.shape[0]
+        x_feats = self.extract_feats(x)
+        y_feats = self.extract_feats(y)
+        y_hat_feats = self.extract_feats(y_hat)
+        y_feats = y_feats.detach()
+        total_loss = 0
+        sim_improvement = 0
+        id_logs = []
+        count = 0
+        for i in range(n_samples):
+            diff_target = y_hat_feats[i].dot(y_feats[i])
+            diff_input = y_hat_feats[i].dot(x_feats[i])
+            diff_views = y_feats[i].dot(x_feats[i])
+
+            if label is None:
+                id_logs.append({'diff_target': float(diff_target),
+                                'diff_input': float(diff_input),
+                                'diff_views': float(diff_views)})
+            else:
+                id_logs.append({f'diff_target_{label}': float(diff_target),
+                                f'diff_input_{label}': float(diff_input),
+                                f'diff_views_{label}': float(diff_views)})
+
+            loss = 1 - diff_target
+            if weights is not None:
+                loss = weights[i] * loss
+
+            total_loss += loss
+            id_diff = float(diff_target) - float(diff_views)
+            sim_improvement += id_diff
+            count += 1
+
+        return total_loss / count, sim_improvement / count, id_logs

|
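The identity loss in `criteria/id_loss.py` takes one minus the dot product between the features of the generated image and those of the target, averaged over the batch. A dot product only behaves as a cosine similarity when the features are unit-length; the sketch below assumes that the face network returns L2-normalized embeddings, which this diff does not show. It is a plain-Python illustration with hypothetical helper names, and it omits the optional per-sample weights and the logging.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def id_loss(y_hat_feats, y_feats, x_feats):
    """Return (mean of 1 - sim(y_hat, y), mean of sim(y_hat, y) - sim(y, x))."""
    total_loss = 0.0
    sim_improvement = 0.0
    for y_hat_f, y_f, x_f in zip(y_hat_feats, y_feats, x_feats):
        diff_target = dot(y_hat_f, y_f)  # similarity of output to target
        diff_views = dot(y_f, x_f)       # similarity of target to input
        total_loss += 1 - diff_target
        sim_improvement += diff_target - diff_views
    n = len(x_feats)
    return total_loss / n, sim_improvement / n


# Hypothetical 2-D "embeddings" for a batch of one image.
same = [l2_normalize([3.0, 4.0])]
print(id_loss(same, same, same))
print(id_loss([[1.0, 0.0]], [[0.0, 1.0]], [[0.0, 1.0]]))
```

Identical embeddings give a loss near 0, while orthogonal output and target embeddings give a loss of 1.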

criteria/lpips/__init__.py

ADDED

|

File without changes

|

criteria/lpips/lpips.py

ADDED

|

@@ -0,0 +1,35 @@

+import torch
+import torch.nn as nn
+
+from criteria.lpips.networks import get_network, LinLayers
+from criteria.lpips.utils import get_state_dict
+
+
+class LPIPS(nn.Module):
+    r"""Creates a criterion that measures
+    Learned Perceptual Image Patch Similarity (LPIPS).
+    Arguments:
+        net_type (str): the network type to compare the features:
+                        'alex' | 'squeeze' | 'vgg'. Default: 'alex'.
+        version (str): the version of LPIPS. Default: 0.1.
+    """
+    def __init__(self, net_type: str = 'alex', version: str = '0.1'):
+
+        assert version in ['0.1'], 'v0.1 is only supported now'
+
+        super(LPIPS, self).__init__()
+
+        # pretrained network
+        self.net = get_network(net_type).to("cuda")
+
+        # linear layers
+        self.lin = LinLayers(self.net.n_channels_list).to("cuda")
+        self.lin.load_state_dict(get_state_dict(net_type, version))
+
+    def forward(self, x: torch.Tensor, y: torch.Tensor):
+        feat_x, feat_y = self.net(x), self.net(y)
+
+        diff = [(fx - fy) ** 2 for fx, fy in zip(feat_x, feat_y)]
+        res = [l(d).mean((2, 3), True) for d, l in zip(diff, self.lin)]
+
+        return torch.sum(torch.cat(res, 0)) / x.shape[0]

|

criteria/lpips/networks.py

ADDED

|

@@ -0,0 +1,96 @@

+from typing import Sequence
+
+from itertools import chain
+
+import torch
+import torch.nn as nn
+from torchvision import models
+
+from criteria.lpips.utils import normalize_activation
+
+
+def get_network(net_type: str):
+    if net_type == 'alex':
+        return AlexNet()
+    elif net_type == 'squeeze':
+        return SqueezeNet()
+    elif net_type == 'vgg':
+        return VGG16()
+    else:
+        raise NotImplementedError('choose net_type from [alex, squeeze, vgg].')
+
+
+class LinLayers(nn.ModuleList):
+    def __init__(self, n_channels_list: Sequence[int]):
+        super(LinLayers, self).__init__([
+            nn.Sequential(
+                nn.Identity(),
+                nn.Conv2d(nc, 1, 1, 1, 0, bias=False)
+            ) for nc in n_channels_list
+        ])
+
+        for param in self.parameters():
+            param.requires_grad = False
+
+
+class BaseNet(nn.Module):
+    def __init__(self):
+        super(BaseNet, self).__init__()
+
+        # register buffer
+        self.register_buffer(
+            'mean', torch.Tensor([-.030, -.088, -.188])[None, :, None, None])
+        self.register_buffer(
+            'std', torch.Tensor([.458, .448, .450])[None, :, None, None])
+
+    def set_requires_grad(self, state: bool):
+        for param in chain(self.parameters(), self.buffers()):
+            param.requires_grad = state
+
+    def z_score(self, x: torch.Tensor):
+        return (x - self.mean) / self.std
+
+    def forward(self, x: torch.Tensor):
+        x = self.z_score(x)
+
+        output = []
+        for i, (_, layer) in enumerate(self.layers._modules.items(), 1):
+            x = layer(x)
+            if i in self.target_layers:
+                output.append(normalize_activation(x))
+            if len(output) == len(self.target_layers):
+                break
+        return output
+
+
+class SqueezeNet(BaseNet):
+    def __init__(self):
+        super(SqueezeNet, self).__init__()
+
+        self.layers = models.squeezenet1_1(True).features
+        self.target_layers = [2, 5, 8, 10, 11, 12, 13]
+        self.n_channels_list = [64, 128, 256, 384, 384, 512, 512]
+
+        self.set_requires_grad(False)
+
+
+class AlexNet(BaseNet):
+    def __init__(self):
+        super(AlexNet, self).__init__()
+
+        self.layers = models.alexnet(True).features
+        self.target_layers = [2, 5, 8, 10, 12]
+        self.n_channels_list = [64, 192, 384, 256, 256]
+
+        self.set_requires_grad(False)
+
+
+class VGG16(BaseNet):
+    def __init__(self):
+        super(VGG16, self).__init__()
+
+        self.layers = models.vgg16(True).features
+        self.target_layers = [4, 9, 16, 23, 30]
+        self.n_channels_list = [64, 128, 256, 512, 512]
+
+        self.set_requires_grad(False)

|

criteria/lpips/utils.py

ADDED

|

@@ -0,0 +1,30 @@

+from collections import OrderedDict
+
+import torch
+
+
+def normalize_activation(x, eps=1e-10):
+    norm_factor = torch.sqrt(torch.sum(x ** 2, dim=1, keepdim=True))
+    return x / (norm_factor + eps)
+
+
+def get_state_dict(net_type: str = 'alex', version: str = '0.1'):
+    # build url
+    url = 'https://raw.githubusercontent.com/richzhang/PerceptualSimilarity/' \
+        + f'master/lpips/weights/v{version}/{net_type}.pth'
+
+    # download
+    old_state_dict = torch.hub.load_state_dict_from_url(
+        url, progress=True,
+        map_location=None if torch.cuda.is_available() else torch.device('cpu')
+    )
+
+    # rename keys
+    new_state_dict = OrderedDict()
+    for key, val in old_state_dict.items():
+        new_key = key
+        new_key = new_key.replace('lin', '')
+        new_key = new_key.replace('model.', '')
+        new_state_dict[new_key] = val
+
+    return new_state_dict

|
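The key renaming at the end of `get_state_dict` exists so that the downloaded weights line up with `LinLayers`, an `nn.ModuleList` of two-element `nn.Sequential` blocks whose parameters are addressed as `<index>.1.weight`. The sketch below replays only the renaming step on a stand-in dictionary. The input key names are an assumption about how the upstream checkpoint is laid out, and `rename_keys` is a hypothetical helper, not a function in the commit.

```python
from collections import OrderedDict


def rename_keys(old_state_dict):
    """Strip the 'lin' prefix and the 'model.' segment from every key."""
    new_state_dict = OrderedDict()
    for key, val in old_state_dict.items():
        new_key = key.replace('lin', '').replace('model.', '')
        new_state_dict[new_key] = val
    return new_state_dict


# Assumed upstream key layout; the values here are placeholders, not tensors.
old = OrderedDict([
    ('lin0.model.1.weight', 'w0'),
    ('lin1.model.1.weight', 'w1'),
])
renamed = rename_keys(old)
print(list(renamed.keys()))
```

Because `str.replace` removes every occurrence of the substring, this approach relies on `lin` and `model.` not appearing anywhere else in the key names.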

criteria/w_norm.py

ADDED

|

@@ -0,0 +1,14 @@

+import torch
+from torch import nn
+
+
+class WNormLoss(nn.Module):
+
+    def __init__(self, opts):
+        super(WNormLoss, self).__init__()
+        self.opts = opts
+
+    def forward(self, latent, latent_avg=None):
+        if self.opts.start_from_latent_avg or self.opts.start_from_encoded_w_plus:
+            latent = latent - latent_avg
+        return torch.sum(latent.norm(2, dim=(1, 2))) / latent.shape[0]

|
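`WNormLoss` penalizes how far each latent code sits from a reference point: it optionally subtracts `latent_avg`, takes the L2 norm over the style and feature dimensions of every sample, and averages over the batch. A rough plain-Python equivalent on nested lists is sketched below. Here `w_norm_loss` is a hypothetical function, and passing `latent_avg` stands in for the `opts.start_from_latent_avg` and `opts.start_from_encoded_w_plus` flags that gate the subtraction in the commit.

```python
import math


def w_norm_loss(latent, latent_avg=None):
    """latent: batch of [n_styles][dim] lists; latent_avg: [n_styles][dim] or None."""
    total = 0.0
    for sample in latent:
        squared_sum = 0.0
        for s, row in enumerate(sample):
            for d, value in enumerate(row):
                if latent_avg is not None:
                    value = value - latent_avg[s][d]
                squared_sum += value * value
        total += math.sqrt(squared_sum)  # L2 norm over dims (1, 2) of one sample
    return total / len(latent)


# Hypothetical batch of two latents, each with one style vector of size 2.
latent = [[[3.0, 4.0]], [[0.0, 0.0]]]
print(w_norm_loss(latent))
print(w_norm_loss(latent, latent_avg=[[3.0, 4.0]]))
```

Both calls give 2.5 here: the per-sample norms are 5 and 0 without the offset, and 0 and 5 once the average is subtracted.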

datasets/__init__.py

ADDED

|

File without changes

|

datasets/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (156 Bytes).

|

datasets/__pycache__/augmentations.cpython-310.pyc

ADDED

|

Binary file (1.22 kB).

|

datasets/augmentations.py

ADDED

|

@@ -0,0 +1,24 @@

+import numpy as np
+import torch
+
+

|