upload model

- .gitattributes +5 -0

- .gitignore +12 -0

- configparser.ini +169 -0

- convo_qa_chain.py +387 -0

- data/ABPI Code of Practice for the Pharmaceutical Industry 2021.pdf +0 -0

- data/Attention Is All You Need.pdf +3 -0

- data/Gradient Descent The Ultimate Optimizer.pdf +3 -0

- data/JP Morgan 2022 Environmental Social Governance Report.pdf +3 -0

- data/Language Models are Few-Shot Learners.pdf +3 -0

- data/Language Models are Unsupervised Multitask Learners.pdf +0 -0

- data/United Nations 2022 Annual Report.pdf +3 -0

- docs2db.py +346 -0

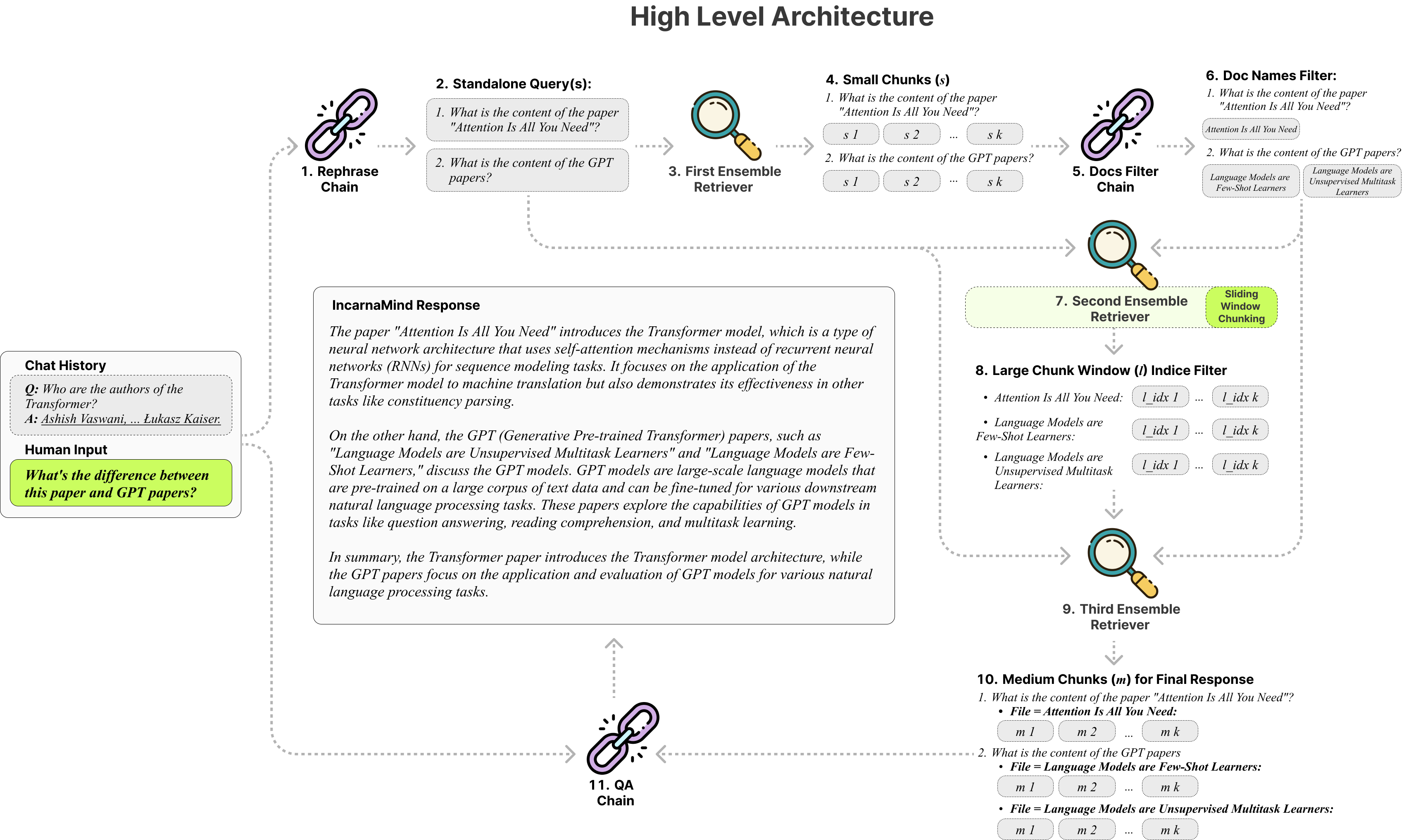

- figs/High_Level_Architecture.png +0 -0

- figs/Sliding_Window_Chunking.png +0 -0

- main.py +150 -0

- requirements.txt +13 -1

- toolkit/___init__.py +0 -0

- toolkit/local_llm.py +193 -0

- toolkit/prompts.py +169 -0

- toolkit/retrivers.py +643 -0

- toolkit/together_api_llm.py +72 -0

- toolkit/utils.py +389 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

data/Attention[[:space:]]Is[[:space:]]All[[:space:]]You[[:space:]]Need.pdf filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

data/Gradient[[:space:]]Descent[[:space:]]The[[:space:]]Ultimate[[:space:]]Optimizer.pdf filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

data/JP[[:space:]]Morgan[[:space:]]2022[[:space:]]Environmental[[:space:]]Social[[:space:]]Governance[[:space:]]Report.pdf filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

data/Language[[:space:]]Models[[:space:]]are[[:space:]]Few-Shot[[:space:]]Learners.pdf filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

data/United[[:space:]]Nations[[:space:]]2022[[:space:]]Annual[[:space:]]Report.pdf filter=lfs diff=lfs merge=lfs -text

|
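The added `.gitattributes` entries write each space in a file name as `[[:space:]]`. A minimal sketch of producing a line in that form is shown here; the helper name `lfs_attribute_line` is hypothetical and is not part of this commit.

```python
# Attribute string copied from the entries in the diff above.
LFS_ATTRS = "filter=lfs diff=lfs merge=lfs -text"


def lfs_attribute_line(path: str) -> str:
    """Return a .gitattributes line for `path`, escaping spaces as in the diff."""
    pattern = path.replace(" ", "[[:space:]]")
    return f"{pattern} {LFS_ATTRS}"


print(lfs_attribute_line("data/Attention Is All You Need.pdf"))
```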

.gitignore

ADDED

|

@@ -0,0 +1,12 @@

|

| 1 |

+

.DS_Store

|

| 2 |

+

.history

|

| 3 |

+

.vscode

|

| 4 |

+

__pycache__

|

| 5 |

+

Archieve

|

| 6 |

+

database_store

|

| 7 |

+

IncarnaMind.log

|

| 8 |

+

experiments.ipynb

|

| 9 |

+

.pylintrc

|

| 10 |

+

.flake8

|

| 11 |

+

models/

|

| 12 |

+

model/

|

configparser.ini

ADDED

|

@@ -0,0 +1,169 @@

|

| 1 |

+

[tokens]

|

| 2 |

+

; Enter one or all of your API keys here.

|

| 3 |

+

; E.g., OPENAI_API_KEY = sk-xxxxxxx

|

| 4 |

+

OPENAI_API_KEY = xxxxx

|

| 5 |

+

ANTHROPIC_API_KEY = xxxxx

|

| 6 |

+

TOGETHER_API_KEY = xxxxx

|

| 7 |

+

; If you use Meta-Llama models, you may need a Hugging Face token to access them.

|

| 8 |

+

HUGGINGFACE_TOKEN = xxxxx

|

| 9 |

+

VERSION = 1.0.1

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

[directory]

|

| 13 |

+

; Directory for source files.

|

| 14 |

+

DOCS_DIR = ./data

|

| 15 |

+

; Directory to store embeddings and Langchain documents.

|

| 16 |

+

DB_DIR = ./database_store

|

| 17 |

+

LOCAL_MODEL_DIR = ./models

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

; The below parameters are optional to modify:

|

| 21 |

+

; --------------------------------------------

|

| 22 |

+

[parameters]

|

| 23 |

+

; Model name schema: Model Provider|Model Name|Model File. Model File applies only to the GGUF format; set it to None for other formats.

|

| 24 |

+

|

| 25 |

+

; For example:

|

| 26 |

+

; OpenAI|gpt-3.5-turbo|None

|

| 27 |

+

; OpenAI|gpt-4|None

|

| 28 |

+

; Anthropic|claude-2.0|None

|

| 29 |

+

; Together|togethercomputer/llama-2-70b-chat|None

|

| 30 |

+

; HuggingFace|TheBloke/Llama-2-70B-chat-GGUF|llama-2-70b-chat.q4_K_M.gguf

|

| 31 |

+

; HuggingFace|meta-llama/Llama-2-70b-chat-hf|None

|

| 32 |

+

|

| 33 |

+

; The full Together.AI model list can be found at the end of this file. We currently support only quantized GGUF and full Hugging Face local LLMs.

|

| 34 |

+

MODEL_NAME = OpenAI|gpt-4-1106-preview|None

|

| 35 |

+

; LLM temperature

|

| 36 |

+

TEMPURATURE = 0

|

| 37 |

+

; Maximum tokens for storing chat history.

|

| 38 |

+

MAX_CHAT_HISTORY = 800

|

| 39 |

+

; Maximum tokens for LLM context for retrieved information.

|

| 40 |

+

MAX_LLM_CONTEXT = 1200

|

| 41 |

+

; Maximum tokens for LLM generation.

|

| 42 |

+

MAX_LLM_GENERATION = 1000

|

| 43 |

+

; Supported embeddings: openAIEmbeddings and hkunlpInstructorLarge.

|

| 44 |

+

EMBEDDING_NAME = openAIEmbeddings

|

| 45 |

+

|

| 46 |

+

; This is dependent on your GPU type.

|

| 47 |

+

N_GPU_LAYERS = 100

|

| 48 |

+

; This depends on your GPU and CPU RAM when using open-source LLMs.

|

| 49 |

+

N_BATCH = 512

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

; The base (small) chunk size for first stage document retrieval.

|

| 53 |

+

BASE_CHUNK_SIZE = 100

|

| 54 |

+

; Set to 0 for no overlap.

|

| 55 |

+

CHUNK_OVERLAP = 0

|

| 56 |

+

; The final retrieval (medium) chunk size will be BASE_CHUNK_SIZE * CHUNK_SCALE.

|

| 57 |

+

CHUNK_SCALE = 3

|

| 58 |

+

WINDOW_STEPS = 3

|

| 59 |

+

; The number of tokens in a window chunk will be BASE_CHUNK_SIZE * WINDOW_SCALE.

|

| 60 |

+

WINDOW_SCALE = 18

|

| 61 |

+

|

| 62 |

+

; Ratio of BM25 retriever to Chroma Vectorstore retriever.

|

| 63 |

+

RETRIEVER_WEIGHTS = 0.5, 0.5

|

| 64 |

+

; Number of retrieved chunks will range from FIRST_RETRIEVAL_K to 2*FIRST_RETRIEVAL_K due to the ensemble retriever.

|

| 65 |

+

FIRST_RETRIEVAL_K = 3

|

| 66 |

+

; Number of retrieved chunks will range from SECOND_RETRIEVAL_K to 2*SECOND_RETRIEVAL_K due to the ensemble retriever.

|

| 67 |

+

SECOND_RETRIEVAL_K = 3

|

| 68 |

+

; Number of windows (large chunks) for the third retriever.

|

| 69 |

+

NUM_WINDOWS = 2

|

| 70 |

+

; (The third retrieval gets the final chunks passed to the LLM QA chain. The 'k' value is dynamic (based on MAX_LLM_CONTEXT), depending on the number of rephrased questions and retrieved documents.)

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

[logging]

|

| 74 |

+

; If you do not want to enable logging, set enabled to False.

|

| 75 |

+

enabled = True

|

| 76 |

+

level = INFO

|

| 77 |

+

filename = IncarnaMind.log

|

| 78 |

+

format = %(asctime)s [%(levelname)s] %(name)s: %(message)s

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

; Together.AI supported models:

|

| 82 |

+

|

| 83 |

+

; 0 Austism/chronos-hermes-13b

|

| 84 |

+

; 1 EleutherAI/pythia-12b-v0

|

| 85 |

+

; 2 EleutherAI/pythia-1b-v0

|

| 86 |

+

; 3 EleutherAI/pythia-2.8b-v0

|

| 87 |

+

; 4 EleutherAI/pythia-6.9b

|

| 88 |

+

; 5 Gryphe/MythoMax-L2-13b

|

| 89 |

+

; 6 HuggingFaceH4/starchat-alpha

|

| 90 |

+

; 7 NousResearch/Nous-Hermes-13b

|

| 91 |

+

; 8 NousResearch/Nous-Hermes-Llama2-13b

|

| 92 |

+

; 9 NumbersStation/nsql-llama-2-7B

|

| 93 |

+

; 10 OpenAssistant/llama2-70b-oasst-sft-v10

|

| 94 |

+

; 11 OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5

|

| 95 |

+

; 12 OpenAssistant/stablelm-7b-sft-v7-epoch-3

|

| 96 |

+

; 13 Phind/Phind-CodeLlama-34B-Python-v1

|

| 97 |

+

; 14 Phind/Phind-CodeLlama-34B-v2

|

| 98 |

+

; 15 SG161222/Realistic_Vision_V3.0_VAE

|

| 99 |

+

; 16 WizardLM/WizardCoder-15B-V1.0

|

| 100 |

+

; 17 WizardLM/WizardCoder-Python-34B-V1.0

|

| 101 |

+

; 18 WizardLM/WizardLM-70B-V1.0

|

| 102 |

+

; 19 bigcode/starcoder

|

| 103 |

+

; 20 databricks/dolly-v2-12b

|

| 104 |

+

; 21 databricks/dolly-v2-3b

|

| 105 |

+

; 22 databricks/dolly-v2-7b

|

| 106 |

+

; 23 defog/sqlcoder

|

| 107 |

+

; 24 garage-bAInd/Platypus2-70B-instruct

|

| 108 |

+

; 25 huggyllama/llama-13b

|

| 109 |

+

; 26 huggyllama/llama-30b

|

| 110 |

+

; 27 huggyllama/llama-65b

|

| 111 |

+

; 28 huggyllama/llama-7b

|

| 112 |

+

; 29 lmsys/fastchat-t5-3b-v1.0

|

| 113 |

+

; 30 lmsys/vicuna-13b-v1.3

|

| 114 |

+

; 31 lmsys/vicuna-13b-v1.5-16k

|

| 115 |

+

; 32 lmsys/vicuna-13b-v1.5

|

| 116 |

+

; 33 lmsys/vicuna-7b-v1.3

|

| 117 |

+

; 34 prompthero/openjourney

|

| 118 |

+

; 35 runwayml/stable-diffusion-v1-5

|

| 119 |

+

; 36 stabilityai/stable-diffusion-2-1

|

| 120 |

+

; 37 stabilityai/stable-diffusion-xl-base-1.0

|

| 121 |

+

; 38 togethercomputer/CodeLlama-13b-Instruct

|

| 122 |

+

; 39 togethercomputer/CodeLlama-13b-Python

|

| 123 |

+

; 40 togethercomputer/CodeLlama-13b

|

| 124 |

+

; 41 togethercomputer/CodeLlama-34b-Instruct

|

| 125 |

+

; 42 togethercomputer/CodeLlama-34b-Python

|

| 126 |

+

; 43 togethercomputer/CodeLlama-34b

|

| 127 |

+

; 44 togethercomputer/CodeLlama-7b-Instruct

|

| 128 |

+

; 45 togethercomputer/CodeLlama-7b-Python

|

| 129 |

+

; 46 togethercomputer/CodeLlama-7b

|

| 130 |

+

; 47 togethercomputer/GPT-JT-6B-v1

|

| 131 |

+

; 48 togethercomputer/GPT-JT-Moderation-6B

|

| 132 |

+

; 49 togethercomputer/GPT-NeoXT-Chat-Base-20B

|

| 133 |

+

; 50 togethercomputer/Koala-13B

|

| 134 |

+

; 51 togethercomputer/LLaMA-2-7B-32K

|

| 135 |

+

; 52 togethercomputer/Llama-2-7B-32K-Instruct

|

| 136 |

+

; 53 togethercomputer/Pythia-Chat-Base-7B-v0.16

|

| 137 |

+

; 54 togethercomputer/Qwen-7B-Chat

|

| 138 |

+

; 55 togethercomputer/Qwen-7B

|

| 139 |

+

; 56 togethercomputer/RedPajama-INCITE-7B-Base

|

| 140 |

+

; 57 togethercomputer/RedPajama-INCITE-7B-Chat

|

| 141 |

+

; 58 togethercomputer/RedPajama-INCITE-7B-Instruct

|

| 142 |

+

; 59 togethercomputer/RedPajama-INCITE-Base-3B-v1

|

| 143 |

+

; 60 togethercomputer/RedPajama-INCITE-Chat-3B-v1

|

| 144 |

+

; 61 togethercomputer/RedPajama-INCITE-Instruct-3B-v1

|

| 145 |

+

; 62 togethercomputer/alpaca-7b

|

| 146 |

+

; 63 togethercomputer/codegen2-16B

|

| 147 |

+

; 64 togethercomputer/codegen2-7B

|

| 148 |

+

; 65 togethercomputer/falcon-40b-instruct

|

| 149 |

+

; 66 togethercomputer/falcon-40b

|

| 150 |

+

; 67 togethercomputer/falcon-7b-instruct

|

| 151 |

+

; 68 togethercomputer/falcon-7b

|

| 152 |

+

; 69 togethercomputer/guanaco-13b

|

| 153 |

+

; 70 togethercomputer/guanaco-33b

|

| 154 |

+

; 71 togethercomputer/guanaco-65b

|

| 155 |

+

; 72 togethercomputer/guanaco-7b

|

| 156 |

+

; 73 togethercomputer/llama-2-13b-chat

|

| 157 |

+

; 74 togethercomputer/llama-2-13b

|

| 158 |

+

; 75 togethercomputer/llama-2-70b-chat

|

| 159 |

+

; 76 togethercomputer/llama-2-70b

|

| 160 |

+

; 77 togethercomputer/llama-2-7b-chat

|

| 161 |

+

; 78 togethercomputer/llama-2-7b

|

| 162 |

+

; 79 togethercomputer/mpt-30b-chat

|

| 163 |

+

; 80 togethercomputer/mpt-30b-instruct

|

| 164 |

+

; 81 togethercomputer/mpt-30b

|

| 165 |

+

; 82 togethercomputer/mpt-7b-chat

|

| 166 |

+

; 83 togethercomputer/mpt-7b

|

| 167 |

+

; 84 togethercomputer/replit-code-v1-3b

|

| 168 |

+

; 85 upstage/SOLAR-0-70b-16bit

|

| 169 |

+

; 86 wavymulder/Analog-Diffusion

|
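The `[parameters]` comments above describe a `Provider|Name|File` model schema and two derived chunk sizes (`BASE_CHUNK_SIZE * CHUNK_SCALE` and `BASE_CHUNK_SIZE * WINDOW_SCALE`). The following is a minimal sketch of reading those values with the standard-library `configparser`. The names `SAMPLE` and `load_parameters` are hypothetical; the project's own `Config` class in `toolkit/utils.py` is not shown in this part of the diff and may work differently.

```python
import configparser

# A small excerpt of the [parameters] section, using the values from the diff.
SAMPLE = """
[parameters]
MODEL_NAME = OpenAI|gpt-4-1106-preview|None
BASE_CHUNK_SIZE = 100
CHUNK_SCALE = 3
WINDOW_SCALE = 18
RETRIEVER_WEIGHTS = 0.5, 0.5
"""


def load_parameters(text: str) -> dict:
    """Parse the excerpt and compute the derived chunk sizes."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    params = parser["parameters"]  # option lookup is case-insensitive by default

    # Schema from the config comment: Model Provider|Model Name|Model File
    provider, model, model_file = params["MODEL_NAME"].split("|")
    base = params.getint("BASE_CHUNK_SIZE")
    return {
        "provider": provider,
        "model": model,
        # The literal string "None" marks a model without a GGUF file.
        "model_file": None if model_file == "None" else model_file,
        "medium_chunk_size": base * params.getint("CHUNK_SCALE"),
        "window_chunk_size": base * params.getint("WINDOW_SCALE"),
        "retriever_weights": [
            float(w) for w in params["RETRIEVER_WEIGHTS"].split(",")
        ],
    }


settings = load_parameters(SAMPLE)
print(settings)
```

With the values in this commit, the medium chunk size works out to 300 tokens and the window chunk size to 1800 tokens.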

convo_qa_chain.py

ADDED

|

@@ -0,0 +1,387 @@

|

| 1 |

+

"""Conversational QA Chain"""

|

| 2 |

+

from __future__ import annotations

|

| 3 |

+

import inspect

|

| 4 |

+

import logging

|

| 5 |

+

from typing import Any, Dict, List, Optional

|

| 6 |

+

from pydantic import Field

|

| 7 |

+

|

| 8 |

+

from langchain.schema import BasePromptTemplate, BaseRetriever, Document

|

| 9 |

+

from langchain.schema.language_model import BaseLanguageModel

|

| 10 |

+

from langchain.chains import LLMChain

|

| 11 |

+

from langchain.chains.question_answering import load_qa_chain

|

| 12 |

+

from langchain.chains.conversational_retrieval.base import (

|

| 13 |

+

BaseConversationalRetrievalChain,

|

| 14 |

+

)

|

| 15 |

+

from langchain.callbacks.manager import (

|

| 16 |

+

AsyncCallbackManagerForChainRun,

|

| 17 |

+

CallbackManagerForChainRun,

|

| 18 |

+

Callbacks,

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

from toolkit.utils import (

|

| 22 |

+

Config,

|

| 23 |

+

_get_chat_history,

|

| 24 |

+

_get_standalone_questions_list,

|

| 25 |

+

)

|

| 26 |

+

from toolkit.retrivers import MyRetriever

|

| 27 |

+

from toolkit.prompts import PromptTemplates

|

| 28 |

+

|

| 29 |

+

configs = Config("configparser.ini")

|

| 30 |

+

logger = logging.getLogger(__name__)

|

| 31 |

+

|

| 32 |

+

prompt_templates = PromptTemplates()

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

class ConvoRetrievalChain(BaseConversationalRetrievalChain):

|

| 36 |

+

"""Chain for having a conversation based on retrieved documents.

|

| 37 |

+

|

| 38 |

+

This chain takes in chat history (a list of messages) and new questions,

|

| 39 |

+

and then returns an answer to that question.

|

| 40 |

+

The algorithm for this chain consists of three parts:

|

| 41 |

+

|

| 42 |

+

1. Use the chat history and the new question to create a "standalone question".

|

| 43 |

+

This is done so that this question can be passed into the retrieval step to fetch

|

| 44 |

+

relevant documents. If only the new question was passed in, then relevant context

|

| 45 |

+

may be lacking. If the whole conversation was passed into retrieval, there may

|

| 46 |

+

be unnecessary information there that would distract from retrieval.

|

| 47 |

+

|

| 48 |

+

2. This new question is passed to the retriever and relevant documents are

|

| 49 |

+

returned.

|

| 50 |

+

|

| 51 |

+

3. The retrieved documents are passed to an LLM along with either the new question

|

| 52 |

+

(default behavior) or the original question and chat history to generate a final

|

| 53 |

+

response.

|

| 54 |

+

|

| 55 |

+

Example:

|

| 56 |

+

.. code-block:: python

|

| 57 |

+

|

| 58 |

+

from langchain.chains import (

|

| 59 |

+

StuffDocumentsChain, LLMChain, ConversationalRetrievalChain

|

| 60 |

+

)

|

| 61 |

+

from langchain.prompts import PromptTemplate

|

| 62 |

+

from langchain.llms import OpenAI

|

| 63 |

+

|

| 64 |

+

combine_docs_chain = StuffDocumentsChain(...)

|

| 65 |

+

vectorstore = ...

|

| 66 |

+

retriever = vectorstore.as_retriever()

|

| 67 |

+

|

| 68 |

+

# This controls how the standalone question is generated.

|

| 69 |

+

# Should take `chat_history` and `question` as input variables.

|

| 70 |

+

template = (

|

| 71 |

+

"Combine the chat history and follow up question into "

|

| 72 |

+

"a standalone question. Chat History: {chat_history}"

|

| 73 |

+

"Follow up question: {question}"

|

| 74 |

+

)

|

| 75 |

+

prompt = PromptTemplate.from_template(template)

|

| 76 |

+

llm = OpenAI()

|

| 77 |

+

question_generator_chain = LLMChain(llm=llm, prompt=prompt)

|

| 78 |

+

chain = ConversationalRetrievalChain(

|

| 79 |

+

combine_docs_chain=combine_docs_chain,

|

| 80 |

+

retriever=retriever,

|

| 81 |

+

question_generator=question_generator_chain,

|

| 82 |

+

)

|

| 83 |

+

"""

|

| 84 |

+

|

| 85 |

+

retriever: MyRetriever = Field(exclude=True)

|

| 86 |

+

"""Retriever to use to fetch documents."""

|

| 87 |

+

file_names: List = Field(exclude=True)

|

| 88 |

+

"""file_names (List): List of file names used for retrieval."""

|

| 89 |

+

|

| 90 |

+

def _get_docs(

|

| 91 |

+

self,

|

| 92 |

+

question: str,

|

| 93 |

+

inputs: Dict[str, Any],

|

| 94 |

+

num_query: int,

|

| 95 |

+

*,

|

| 96 |

+

run_manager: Optional[CallbackManagerForChainRun] = None,

|

| 97 |

+

) -> Dict[str, List[Document]]:

|

| 98 |

+

"""Get docs."""

|

| 99 |

+

try:

|

| 100 |

+

docs = self.retriever.get_relevant_documents(

|

| 101 |

+

question, num_query=num_query, run_manager=run_manager

|

| 102 |

+

)

|

| 103 |

+

return docs

|

| 104 |

+

except (IOError, FileNotFoundError) as error:

|

| 105 |

+

logger.error("An error occurred in _get_docs: %s", error)

|

| 106 |

+

return {}

|

| 107 |

+

|

| 108 |

+

def _retrieve(

|

| 109 |

+

self,

|

| 110 |

+

question_list: List[str],

|

| 111 |

+

inputs: Dict[str, Any],

|

| 112 |

+

run_manager: Optional[CallbackManagerForChainRun] = None,

|

| 113 |

+

) -> tuple[str, Dict[str, Any]]:

|

| 114 |

+

num_query = len(question_list)

|

| 115 |

+

accepts_run_manager = (

|

| 116 |

+

"run_manager" in inspect.signature(self._get_docs).parameters

|

| 117 |

+

)

|

| 118 |

+

|

| 119 |

+

total_results = {}

|

| 120 |

+

for question in question_list:

|

| 121 |

+

docs_dict = (

|

| 122 |

+

self._get_docs(

|

| 123 |

+

question, inputs, num_query=num_query, run_manager=run_manager

|

| 124 |

+

)

|

| 125 |

+

if accepts_run_manager

|

| 126 |

+

else self._get_docs(question, inputs, num_query=num_query)

|

| 127 |

+

)

|

| 128 |

+

|

| 129 |

+

for file_name, docs in docs_dict.items():

|

| 130 |

+

if file_name not in total_results:

|

| 131 |

+

total_results[file_name] = docs

|

| 132 |

+

else:

|

| 133 |

+

total_results[file_name].extend(docs)

|

| 134 |

+

|

| 135 |

+

logger.info(

|

| 136 |

+

"-----step_done--------------------------------------------------",

|

| 137 |

+

)

|

| 138 |

+

|

| 139 |

+

snippets = ""

|

| 140 |

+

redundancy = set()

|

| 141 |

+

for file_name, docs in total_results.items():

|

| 142 |

+

sorted_docs = sorted(docs, key=lambda x: x.metadata["medium_chunk_idx"])

|

| 143 |

+

temp = "\n".join(

|

| 144 |

+

doc.page_content

|

| 145 |

+

for doc in sorted_docs

|

| 146 |

+

if doc.metadata["page_content_md5"] not in redundancy

|

| 147 |

+

)

|

| 148 |

+

redundancy.update(doc.metadata["page_content_md5"] for doc in sorted_docs)

|

| 149 |

+

snippets += f"\nContext about {file_name}:\n{{{temp}}}\n"

|

| 150 |

+

|

| 151 |

+

return snippets, docs_dict

|

| 152 |

+

|

| 153 |

+

def _call(

|

| 154 |

+

self,

|

| 155 |

+

inputs: Dict[str, Any],

|

| 156 |

+

run_manager: Optional[CallbackManagerForChainRun] = None,

|

| 157 |

+

) -> Dict[str, Any]:

|

| 158 |

+

_run_manager = run_manager or CallbackManagerForChainRun.get_noop_manager()

|

| 159 |

+

question = inputs["question"]

|

| 160 |

+

get_chat_history = self.get_chat_history or _get_chat_history

|

| 161 |

+

chat_history_str = get_chat_history(inputs["chat_history"])

|

| 162 |

+

|

| 163 |

+

callbacks = _run_manager.get_child()

|

| 164 |

+

new_questions = self.question_generator.run(

|

| 165 |

+

question=question,

|

| 166 |

+

chat_history=chat_history_str,

|

| 167 |

+

database=self.file_names,

|

| 168 |

+

callbacks=callbacks,

|

| 169 |

+

)

|

| 170 |

+

logger.info("new_questions: %s", new_questions)

|

| 171 |

+

new_question_list = _get_standalone_questions_list(new_questions, question)[:3]

|

| 172 |

+

# print("new_question_list:", new_question_list)

|

| 173 |

+

logger.info("user_input: %s", question)

|

| 174 |

+

logger.info("new_question_list: %s", new_question_list)

|

| 175 |

+

|

| 176 |

+

snippets, source_docs = self._retrieve(

|

| 177 |

+

new_question_list, inputs, run_manager=_run_manager

|

| 178 |

+

)

|

| 179 |

+

|

| 180 |

+

docs = [

|

| 181 |

+

Document(

|

| 182 |

+

page_content=snippets,

|

| 183 |

+

metadata={},

|

| 184 |

+

)

|

| 185 |

+

]

|

| 186 |

+

|

| 187 |

+

new_inputs = inputs.copy()

|

| 188 |

+

new_inputs["chat_history"] = chat_history_str

|

| 189 |

+

answer = self.combine_docs_chain.run(

|

| 190 |

+

input_documents=docs,

|

| 191 |

+

database=self.file_names,

|

| 192 |

+

callbacks=_run_manager.get_child(),

|

| 193 |

+

**new_inputs,

|

| 194 |

+

)

|

| 195 |

+

output: Dict[str, Any] = {self.output_key: answer}

|

| 196 |

+

if self.return_source_documents:

|

| 197 |

+

output["source_documents"] = source_docs

|

| 198 |

+

if self.return_generated_question:

|

| 199 |

+

output["generated_question"] = new_questions

|

| 200 |

+

|

| 201 |

+

logger.info("*****response*****: %s", output["answer"])

|

| 202 |

+

logger.info(

|

| 203 |

+

"=====epoch_done============================================================",

|

| 204 |

+

)

|

| 205 |

+

return output

|

| 206 |

+

|

| 207 |

+

async def _aget_docs(

|

| 208 |

+

self,

|

| 209 |

+

question: str,

|

| 210 |

+

inputs: Dict[str, Any],

|

| 211 |

+

num_query: int,

|

| 212 |

+

*,

|

| 213 |

+

run_manager: Optional[AsyncCallbackManagerForChainRun] = None,

|

| 214 |

+

) -> Dict[str, List[Document]]:

|

| 215 |

+

"""Get docs."""

|

| 216 |

+

try:

|

| 217 |

+

docs = await self.retriever.aget_relevant_documents(

|

| 218 |

+

question, num_query=num_query, run_manager=run_manager

|

| 219 |

+

)

|

| 220 |

+

return docs

|

| 221 |

+

except (IOError, FileNotFoundError) as error:

|

| 222 |

+

logger.error("An error occurred in _aget_docs: %s", error)

|

| 223 |

+

return {}

|

| 224 |

+

|

| 225 |

+

async def _aretrieve(

|

| 226 |

+

self,

|

| 227 |

+

question_list: List[str],

|

| 228 |

+

inputs: Dict[str, Any],

|

| 229 |

+

run_manager: Optional[AsyncCallbackManagerForChainRun] = None,

|

| 230 |

+

) -> tuple[str, Dict[str, Any]]:

|

| 231 |

+

num_query = len(question_list)

|

| 232 |

+

accepts_run_manager = (

|

| 233 |

+

"run_manager" in inspect.signature(self._aget_docs).parameters

|

| 234 |

+

)

|

| 235 |

+

|

| 236 |

+

total_results = {}

|

| 237 |

+

for question in question_list:

|

| 238 |

+

docs_dict = (

|

| 239 |

+

await self._aget_docs(

|

| 240 |

+

question, inputs, num_query=num_query, run_manager=run_manager

|

| 241 |

+

)

|

| 242 |

+

if accepts_run_manager

|

| 243 |

+

else await self._aget_docs(question, inputs, num_query=num_query)

|

| 244 |

+

)

|

| 245 |

+

|

| 246 |

+

for file_name, docs in docs_dict.items():

|

| 247 |

+

if file_name not in total_results:

|

| 248 |

+

total_results[file_name] = docs

|

| 249 |

+

else:

|

| 250 |

+

total_results[file_name].extend(docs)

|

| 251 |

+

|

| 252 |

+

logger.info(

|

| 253 |

+

"-----step_done--------------------------------------------------",

|

| 254 |

+

)

|

| 255 |

+

|

| 256 |

+

snippets = ""

|

| 257 |

+

redundancy = set()

|

| 258 |

+

for file_name, docs in total_results.items():

|

| 259 |

+

sorted_docs = sorted(docs, key=lambda x: x.metadata["medium_chunk_idx"])

|

| 260 |

+

temp = "\n".join(

|

| 261 |

+

doc.page_content

|

| 262 |

+

for doc in sorted_docs

|

| 263 |

+

if doc.metadata["page_content_md5"] not in redundancy

|

| 264 |

+

)

|

| 265 |

+

redundancy.update(doc.metadata["page_content_md5"] for doc in sorted_docs)

|

| 266 |

+

snippets += f"\nContext about {file_name}:\n{{{temp}}}\n"

|

| 267 |

+

|

| 268 |

+

return snippets, docs_dict

|

| 269 |

+

|

| 270 |

+

async def _acall(

|

| 271 |

+

self,

|

| 272 |

+

inputs: Dict[str, Any],

|

| 273 |

+

run_manager: Optional[AsyncCallbackManagerForChainRun] = None,

|

| 274 |

+

) -> Dict[str, Any]:

|

| 275 |

+

_run_manager = run_manager or AsyncCallbackManagerForChainRun.get_noop_manager()

|

| 276 |

+

question = inputs["question"]

|

| 277 |

+

get_chat_history = self.get_chat_history or _get_chat_history

|

| 278 |

+

chat_history_str = get_chat_history(inputs["chat_history"])

|

| 279 |

+

|

| 280 |

+

callbacks = _run_manager.get_child()

|

| 281 |

+

new_questions = await self.question_generator.arun(

|

| 282 |

+

question=question,

|

| 283 |

+

chat_history=chat_history_str,

|

| 284 |

+

database=self.file_names,

|

| 285 |

+

callbacks=callbacks,

|

| 286 |

+

)

|

| 287 |

+

new_question_list = _get_standalone_questions_list(new_questions, question)[:3]

|

| 288 |

+

logger.info("new_questions: %s", new_questions)

|

| 289 |

+

logger.info("new_question_list: %s", new_question_list)

|

| 290 |

+

|

| 291 |

+

snippets, source_docs = await self._aretrieve(

|

| 292 |

+

new_question_list, inputs, run_manager=_run_manager

|

| 293 |

+

)

|

| 294 |

+

|

| 295 |

+

docs = [

|

| 296 |

+

Document(

|

| 297 |

+

page_content=snippets,

|

| 298 |

+

metadata={},

|

| 299 |

+

)

|

| 300 |

+

]

|

| 301 |

+

|

| 302 |

+

new_inputs = inputs.copy()

|

| 303 |

+

new_inputs["chat_history"] = chat_history_str

|

| 304 |

+

answer = await self.combine_docs_chain.arun(

|

| 305 |

+

input_documents=docs,

|

| 306 |

+

database=self.file_names,

|

| 307 |

+

callbacks=_run_manager.get_child(),

|

| 308 |

+

**new_inputs,

|

| 309 |

+

)

|

| 310 |

+

output: Dict[str, Any] = {self.output_key: answer}

|

| 311 |

+

if self.return_source_documents:

|

| 312 |

+

output["source_documents"] = source_docs

|

| 313 |

+

if self.return_generated_question:

|

| 314 |

+

output["generated_question"] = new_questions

|

| 315 |

+

|

| 316 |

+

logger.info("*****response*****: %s", output["answer"])

|

| 317 |

+

logger.info(

|

| 318 |

+

"=====epoch_done============================================================",

|

| 319 |

+

)

|

| 320 |

+

|

| 321 |

+

return output

|

| 322 |

+

|

| 323 |

+

@classmethod

|

| 324 |

+

def from_llm(

|

| 325 |

+

cls,

|

| 326 |

+

llm: BaseLanguageModel,

|

| 327 |

+

retriever: BaseRetriever,

|

| 328 |

+

condense_question_prompt: BasePromptTemplate = prompt_templates.get_refine_qa_template(

|

| 329 |

+

configs.model_name

|

| 330 |

+

),

|

| 331 |

+

chain_type: str = "stuff", # only the stuff chain is supported for now

|

| 332 |

+

verbose: bool = False,

|

| 333 |

+

condense_question_llm: Optional[BaseLanguageModel] = None,

|

| 334 |

+

combine_docs_chain_kwargs: Optional[Dict] = None,

|

| 335 |

+

callbacks: Callbacks = None,

|

| 336 |

+

**kwargs: Any,

|

| 337 |

+

) -> BaseConversationalRetrievalChain:

|

| 338 |

+

"""Convenience method to load chain from LLM and retriever.

|

| 339 |

+

|

| 340 |

+

This provides some logic to create the `question_generator` chain

|

| 341 |

+

as well as the combine_docs_chain.

|

| 342 |

+

|

| 343 |

+

Args:

|

| 344 |

+

llm: The default language model to use at every part of this chain

|

| 345 |

+

(e.g., in both question generation and answering)

|

| 346 |

+

retriever: The retriever to use to fetch relevant documents from.

|

| 347 |

+

condense_question_prompt: The prompt to use to condense the chat history

|

| 348 |

+

and new question into standalone question(s).

|

| 349 |

+

chain_type: The chain type to use to create the combine_docs_chain, will

|

| 350 |

+

be sent to `load_qa_chain`.

|

| 351 |

+

verbose: Verbosity flag for logging to stdout.

|

| 352 |

+

condense_question_llm: The language model to use for condensing the chat

|

| 353 |

+

history and new question into standalone question(s). If none is

|

| 354 |

+

provided, will default to `llm`.

|

| 355 |

+

combine_docs_chain_kwargs: Parameters to pass as kwargs to `load_qa_chain`

|

| 356 |

+

when constructing the combine_docs_chain.

|

| 357 |

+

callbacks: Callbacks to pass to all subchains.

|

| 358 |

+

**kwargs: Additional parameters to pass when initializing

|

| 359 |

+

ConversationalRetrievalChain

|

| 360 |

+

"""

|

| 361 |

+

combine_docs_chain_kwargs = combine_docs_chain_kwargs or {

|

| 362 |

+

"prompt": prompt_templates.get_retrieval_qa_template_selector(

|

| 363 |

+

configs.model_name

|

| 364 |

+

).get_prompt(llm)

|

| 365 |

+

}

|

| 366 |

+

doc_chain = load_qa_chain(

|

| 367 |

+

llm,

|

| 368 |

+

chain_type=chain_type,

|

| 369 |

+

verbose=verbose,

|

| 370 |

+

callbacks=callbacks,

|

| 371 |

+

**combine_docs_chain_kwargs,

|

| 372 |

+

)

|

| 373 |

+

|

| 374 |

+

_llm = condense_question_llm or llm

|

| 375 |

+

condense_question_chain = LLMChain(

|

| 376 |

+

llm=_llm,

|

| 377 |

+

prompt=condense_question_prompt,

|

| 378 |

+

verbose=verbose,

|

| 379 |

+

callbacks=callbacks,

|

| 380 |

+

)

|

| 381 |

+

return cls(

|

| 382 |

+

retriever=retriever,

|

| 383 |

+

combine_docs_chain=doc_chain,

|

| 384 |

+

question_generator=condense_question_chain,

|

| 385 |

+

callbacks=callbacks,

|

| 386 |

+

**kwargs,

|

| 387 |

+

)

|
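Review note on `from_llm`: two optional arguments are resolved with `or`. `combine_docs_chain_kwargs` falls back to a dict holding the default prompt, and `condense_question_llm` falls back to `llm`. The sketch below illustrates only that fallback pattern. `build_chain_settings`, `DEFAULT_PROMPT`, and the use of plain strings in place of model objects are hypothetical stand-ins, not the project's classes.

```python
from typing import Any, Dict, Optional

DEFAULT_PROMPT = "default-qa-prompt"  # hypothetical stand-in for the selected prompt template


def build_chain_settings(
    llm: str,
    condense_question_llm: Optional[str] = None,
    combine_docs_chain_kwargs: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Resolve optional arguments with `or`, as `from_llm` does."""
    # `or` falls back when the argument is None or any other falsy value (e.g. {}).
    combine_docs_chain_kwargs = combine_docs_chain_kwargs or {"prompt": DEFAULT_PROMPT}
    # Reuse the main model for question condensing unless a dedicated one is given.
    question_llm = condense_question_llm or llm
    return {
        "answer_llm": llm,
        "question_llm": question_llm,
        "doc_chain_kwargs": combine_docs_chain_kwargs,
    }


settings = build_chain_settings("big-model", condense_question_llm="small-model")
```

Because `or` tests truthiness, passing an explicit empty dict for `combine_docs_chain_kwargs` also selects the default prompt. That is probably intended here, but it is worth knowing when overriding the argument.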

data/ABPI Code of Practice for the Pharmaceutical Industry 2021.pdf

ADDED

Binary file (803 kB).

data/Attention Is All You Need.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b7d72988fd8107d07f7d278bf0ba6621adb6ed47df74be4014fa4a01f03aff6a
+size 2215244

data/Gradient Descent The Ultimate Optimizer.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c76077e02756ef3281ce3b1195d080009cb88e00382a8fc225948db339053296
+size 1923635

data/JP Morgan 2022 Environmental Social Governance Report.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:80eab2c81a6c82bde9ccff1a8636fddc8ce1457a13c833d8a7f1e374a4bb439f
+size 7474626

data/Language Models are Few-Shot Learners.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:97fd272f1fdfc18677462d0292f5fbf26ca86b4d1b485c2dba03269b643a0e83
+size 6768044

data/Language Models are Unsupervised Multitask Learners.pdf

ADDED

Binary file (583 kB).

data/United Nations 2022 Annual Report.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b4ee2835c06f98e74ab93aa69a0c026577c464fc6bd3942068f14cba5dcad536
+size 36452281

|
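Review note: the PDFs above whose diff is a three-line body are Git LFS pointer files. Each pointer records the spec version, the object ID (hash method and digest), and the size of the real file in bytes. A minimal sketch of reading such a pointer follows; `parse_lfs_pointer` is a hypothetical helper written for illustration, not part of this commit or of the Git LFS tooling.

```python
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, object]:
    """Parse the `key value` lines of a Git LFS pointer file into a dict."""
    fields: Dict[str, object] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid is written as "<hash-method>:<hex digest>".
    method, _, digest = str(fields["oid"]).partition(":")
    fields["oid_method"] = method
    fields["oid"] = digest
    fields["size"] = int(str(fields["size"]))  # size of the real file in bytes
    return fields


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:b7d72988fd8107d07f7d278bf0ba6621adb6ed47df74be4014fa4a01f03aff6a
size 2215244
"""
info = parse_lfs_pointer(pointer)
print(info["oid_method"], info["size"])
```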

docs2db.py

ADDED

|

@@ -0,0 +1,346 @@


+"""
+This module saves documents as embeddings and LangChain Documents.
+"""
+import os
+import glob
+import pickle
+from typing import List
+from multiprocessing import Pool
+from collections import deque
+import hashlib
+import tiktoken
+
+from tqdm import tqdm
+
+from langchain.schema import Document
+from langchain.vectorstores import Chroma
+from langchain.text_splitter import (
+    RecursiveCharacterTextSplitter,
+)
+from langchain.document_loaders import (
+    PyPDFLoader,
+    TextLoader,
+)
+
+from toolkit.utils import Config, choose_embeddings, clean_text
+
+
+# Load the config file
+configs = Config("configparser.ini")
+
+os.environ["OPENAI_API_KEY"] = configs.openai_api_key
+os.environ["ANTHROPIC_API_KEY"] = configs.anthropic_api_key
+
+embedding_store_path = configs.db_dir
+files_path = glob.glob(configs.docs_dir + "/*")
+
+tokenizer_name = tiktoken.encoding_for_model("gpt-3.5-turbo")
+tokenizer = tiktoken.get_encoding(tokenizer_name.name)
+
+loaders = {
+    "pdf": (PyPDFLoader, {}),
+    "txt": (TextLoader, {}),
+}
+
+
+def tiktoken_len(text: str):
+    """Calculate the token length of a given text string using TikToken.
+
+    Args:
+        text (str): The text to be tokenized.
+
+    Returns:
+        int: The length of the tokenized text.
+    """
+    tokens = tokenizer.encode(text, disallowed_special=())
+
+    return len(tokens)
+
+
+def string2md5(text: str):
+    """Convert a string to its MD5 hash.
+
+    Args:
+        text (str): The text to be hashed.
+
+    Returns:
+        str: The MD5 hash of the input string.
+    """
+    hash_md5 = hashlib.md5()
+    hash_md5.update(text.encode("utf-8"))
+
+    return hash_md5.hexdigest()

|
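Review note on `string2md5`: the function hashes the UTF-8 bytes of the text and returns the hexadecimal digest, so equal strings always yield the same 32-character value. A compact equivalent is sketched below as an illustration only. How the digest is used later in `docs2db.py` is not visible in this part of the diff, so no particular use is assumed.

```python
import hashlib


def string2md5(text: str) -> str:
    """Return the hexadecimal MD5 digest of a UTF-8 encoded string."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


digest = string2md5("hello")
print(digest)       # 5d41402abc4b2a76b9719d911017c592
print(len(digest))  # 32 hex characters, i.e. 128 bits
```

MD5 is adequate as a content fingerprint but is not collision resistant, so it should not be relied on for anything security related.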

+
+
+def load_file(file_path):
+    """Load a file and return its content as a list of Document objects.
+
+    Args:
+        file_path (str): The path to the file.
+
+    Returns:
+        List[Document]: The loaded documents, as returned by the loader.
+    """
+    ext = file_path.split(".")[-1]
+
+    if ext in loaders:
+        loader_type, args = loaders[ext]
+        loader = loader_type(file_path, **args)
+        doc = loader.load()
+
+        return doc
+
+    raise ValueError(f"Extension {ext} not supported")

|
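Review note on `load_file`: the loader class is chosen from the `loaders` dict by file extension. The sketch below shows the same dispatch with stand-in loader functions (hypothetical, so it runs without LangChain). It also uses `os.path.splitext` plus `lower()`, which is an assumed variation rather than the committed behaviour: it accepts `.PDF` as well as `.pdf` and yields an empty extension for files without one, whereas the diff uses `file_path.split(".")[-1]`.

```python
import os
from typing import Callable, Dict, List


def load_pdf_stub(path: str) -> List[str]:
    # Hypothetical stand-in for PyPDFLoader(path).load()
    return [f"pdf pages of {path}"]


def load_txt_stub(path: str) -> List[str]:
    # Hypothetical stand-in for TextLoader(path).load()
    return [f"text of {path}"]


LOADERS: Dict[str, Callable[[str], List[str]]] = {
    "pdf": load_pdf_stub,
    "txt": load_txt_stub,
}


def load_file(file_path: str) -> List[str]:
    """Dispatch to a loader based on the case-insensitive file extension."""
    ext = os.path.splitext(file_path)[1].lstrip(".").lower()
    if ext in LOADERS:
        return LOADERS[ext](file_path)
    raise ValueError(f"Extension {ext!r} not supported")


pages = load_file("data/Attention Is All You Need.PDF")
```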

+
+
+def docs2vectorstore(docs: List[Document], embedding_name: str, suffix: str = ""):
+    """Convert a list of Documents into a Chroma vector store.
+
+    Args:
+        docs (List[Document]): The list of Documents.
+        suffix (str, optional): Suffix for the embedding. Defaults to "".
+    """
+    embedding = choose_embeddings(embedding_name)
+    name = f"{embedding_name}_{suffix}"
+    # Create embedding_store_path if it does not exist
+    if not os.path.exists(embedding_store_path):
+        os.makedirs(embedding_store_path)
+    Chroma.from_documents(
+        docs,
+        embedding,
+        persist_directory=f"{embedding_store_path}/chroma_{name}",
+    )
+
+
+def file_names2pickle(file_names: list, save_name: str = ""):
+    """Save the list of file names to a pickle file.
+
+    Args:
+        file_names (list): The list of file names.
+        save_name (str, optional): The name for the saved pickle file. Defaults to "".
+    """
+    name = f"{save_name}"
+    if not os.path.exists(embedding_store_path):
+        os.makedirs(embedding_store_path)
+    with open(f"{embedding_store_path}/{name}.pkl", "wb") as file:
+        pickle.dump(file_names, file)
+
+
+def docs2pickle(docs: List[Document], suffix: str = ""):
+    """Serializes a list of Document objects to a pickle file.
+
+    Args:
+        docs (List[Document]): List of Document objects.
+        suffix (str, optional): Suffix for the pickle file. Defaults to "".
+    """
+    for doc in docs:
+        doc.page_content = clean_text(doc.page_content)
+    name = f"pickle_{suffix}"
+    if not os.path.exists(embedding_store_path):
+        os.makedirs(embedding_store_path)
+    with open(f"{embedding_store_path}/docs_{name}.pkl", "wb") as file:
+        pickle.dump(docs, file)

|
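Review note on `file_names2pickle` and `docs2pickle`: both follow one pattern, which is to make sure the output directory exists and then `pickle.dump` into a file inside it. The round trip below is a minimal sketch of that pattern. `save_pickle`, `load_pickle`, and the `database_store` directory name are hypothetical, and `os.makedirs(..., exist_ok=True)` is used as a shorthand for the exists-then-create check in the diff.

```python
import os
import pickle
import tempfile
from typing import Any


def save_pickle(obj: Any, directory: str, name: str) -> str:
    """Pickle `obj` to `<directory>/<name>.pkl`, creating the directory if needed."""
    os.makedirs(directory, exist_ok=True)  # no error if it already exists
    path = os.path.join(directory, f"{name}.pkl")
    with open(path, "wb") as file:
        pickle.dump(obj, file)
    return path


def load_pickle(path: str) -> Any:
    """Load an object previously written by `save_pickle`."""
    with open(path, "rb") as file:
        return pickle.load(file)


with tempfile.TemporaryDirectory() as tmp:
    store = os.path.join(tmp, "database_store")  # hypothetical db_dir value
    saved = save_pickle(["a.pdf", "b.txt"], store, "file_names")
    restored = load_pickle(saved)
```

Pickle files should only be loaded from trusted locations, since unpickling can execute arbitrary code.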

+
+
+def split_doc(
+    doc: List[Document], chunk_size: int, chunk_overlap: int, chunk_idx_name: str
+):
+    """Splits a document into smaller chunks based on the provided size and overlap.
+
+    Args:
+        doc (List[Document]): Document to be split.
+        chunk_size (int): Size of each chunk.
+        chunk_overlap (int): Overlap between adjacent chunks.
+        chunk_idx_name (str): Metadata key for storing chunk indices.
+
+    Returns:
+        list: List of Document objects representing the chunks.
+    """
+    data_splitter = RecursiveCharacterTextSplitter(
+        chunk_size=chunk_size,
+        chunk_overlap=chunk_overlap,
+        length_function=tiktoken_len,
+    )
+    doc_split = data_splitter.split_documents(doc)
+    chunk_idx = 0
+
+    for d_split in doc_split:
+        d_split.metadata[chunk_idx_name] = chunk_idx
+        chunk_idx += 1
+
+    return doc_split

|
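Review note on `split_doc`: the splitting itself is delegated to `RecursiveCharacterTextSplitter`, and the function then numbers the chunks in order under `chunk_idx_name`. The sketch below is not that splitter. It is a simplified fixed-size window over a token list, meant only to show how `chunk_size`, `chunk_overlap`, and the running chunk index relate. `sliding_chunks` and the dict layout are hypothetical.

```python
from typing import Dict, List


def sliding_chunks(
    tokens: List[str], chunk_size: int, chunk_overlap: int, chunk_idx_name: str
) -> List[Dict[str, object]]:
    """Cut `tokens` into windows of `chunk_size` that share `chunk_overlap` tokens."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks: List[Dict[str, object]] = []
    for chunk_idx, start in enumerate(range(0, len(tokens), step)):
        window = tokens[start : start + chunk_size]
        chunks.append({"tokens": window, chunk_idx_name: chunk_idx})
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the input
    return chunks


chunks = sliding_chunks(
    list("abcdefgh"), chunk_size=4, chunk_overlap=2, chunk_idx_name="chunk_idx"
)
for chunk in chunks:
    print(chunk["chunk_idx"], "".join(chunk["tokens"]))
```

With eight tokens, a window of four, and an overlap of two, each window advances by two tokens, so consecutive chunks share their boundary tokens and the indices run 0, 1, 2.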

+
+
+def process_metadata(doc: List[Document]):
+    """Processes and updates the metadata for a list of Document objects.
+
+    Args:
+        doc (list): List of Document objects.
+    """
+    # get file name and remove extension
+    file_name_with_extension = os.path.basename(doc[0].metadata["source"])
+    file_name, _ = os.path.splitext(file_name_with_extension)

|

| 183 |

+

|