add files

This view is limited to 50 files because it contains too many changes.

- .gitignore +168 -0

- Dockerfile +16 -2

- README.md +76 -3

- assets/MangaTranslator.png +0 -0

- fonts/GL-NovantiquaMinamoto.ttf +3 -0

- fonts/mangat.ttf +3 -0

- model_creation/011.jpg +0 -0

- model_creation/main.ipynb +0 -0

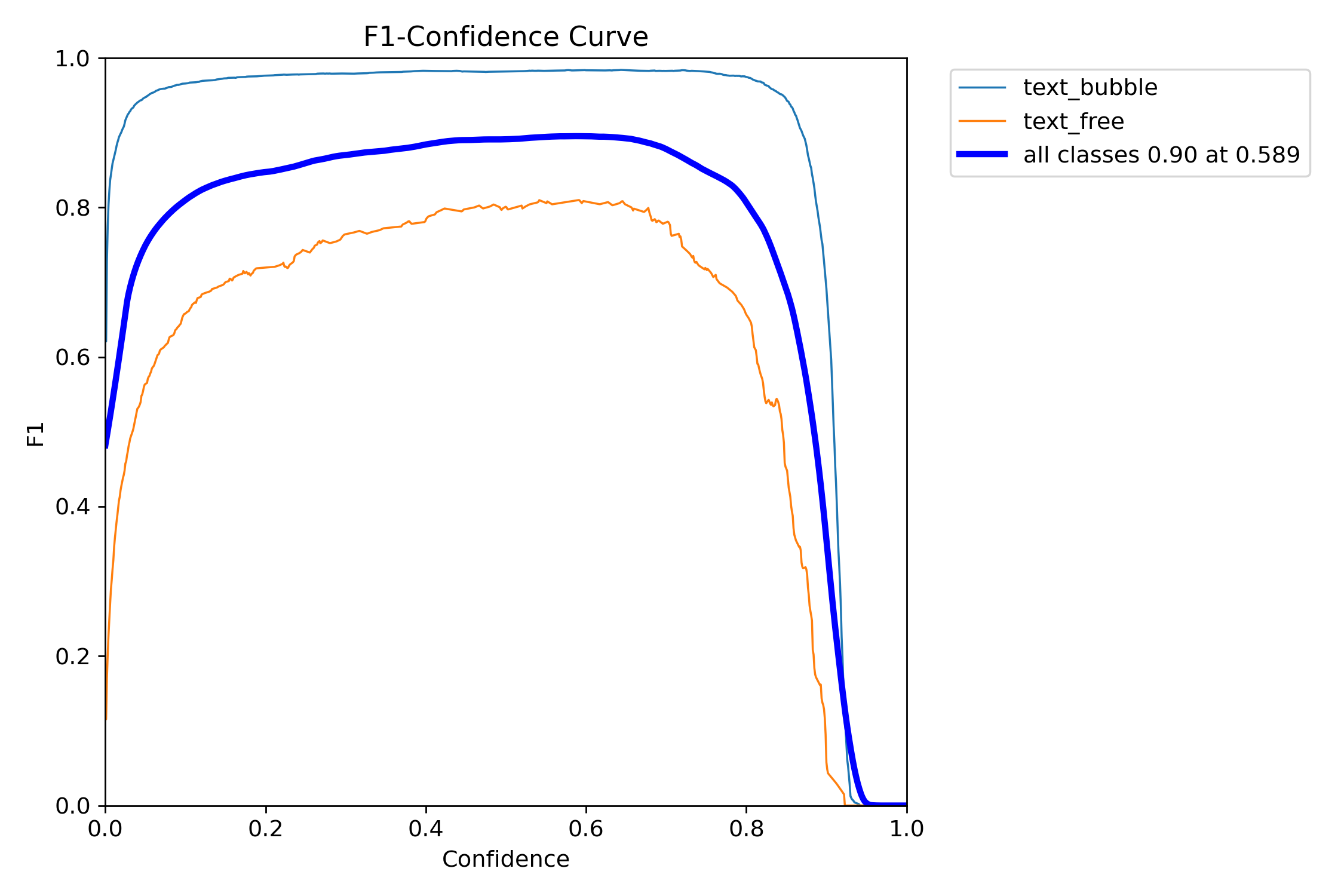

- model_creation/runs/detect/train5/F1_curve.png +0 -0

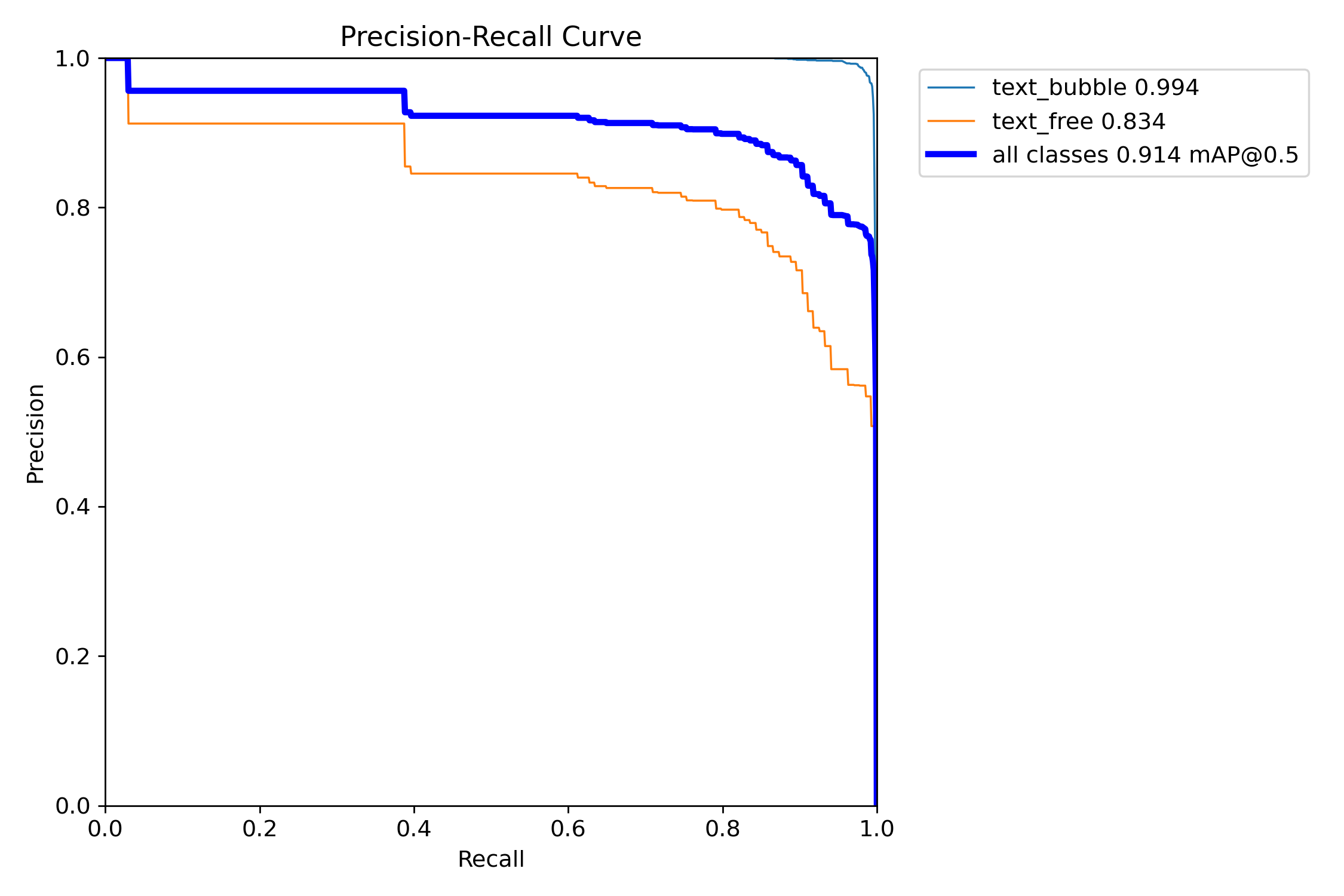

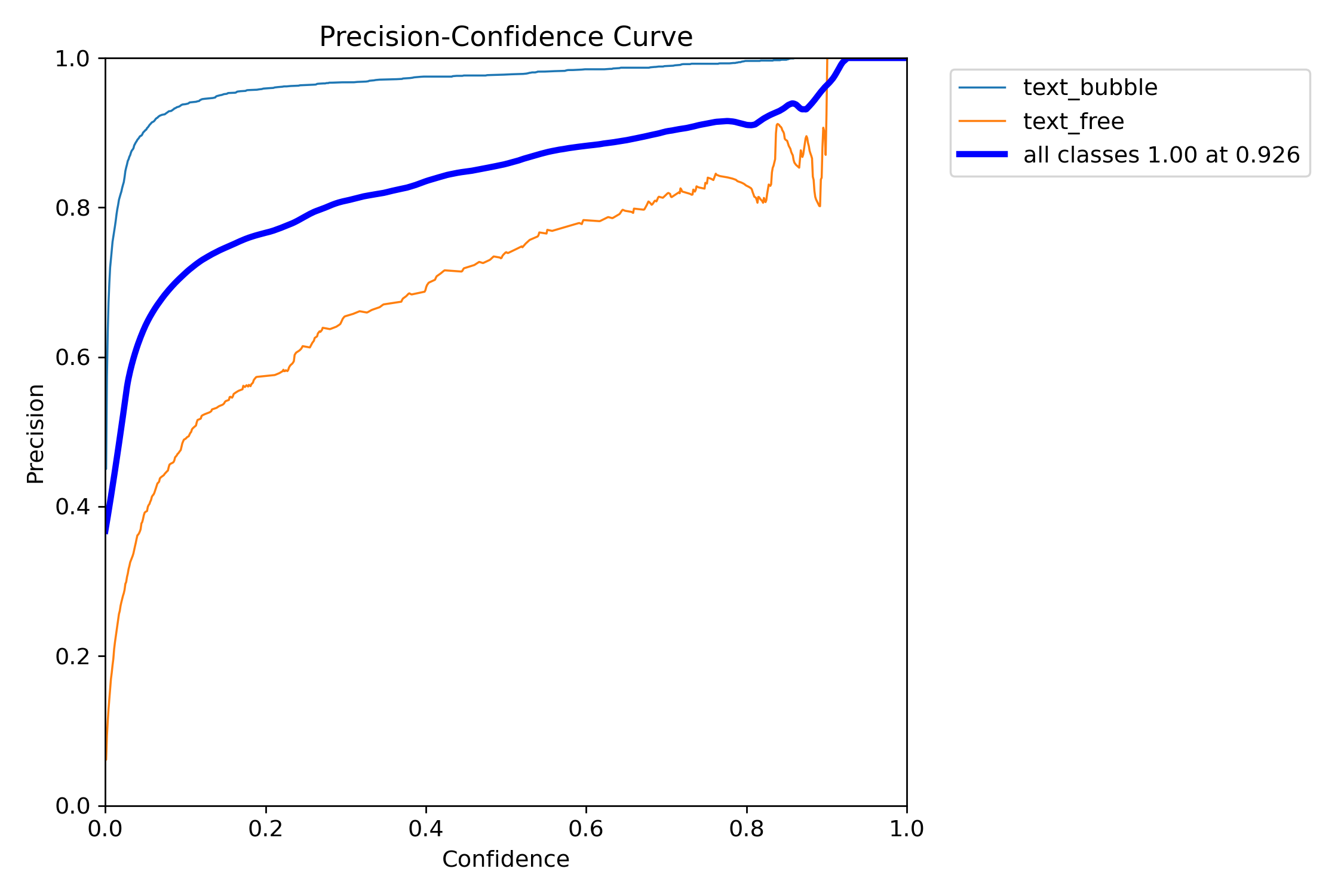

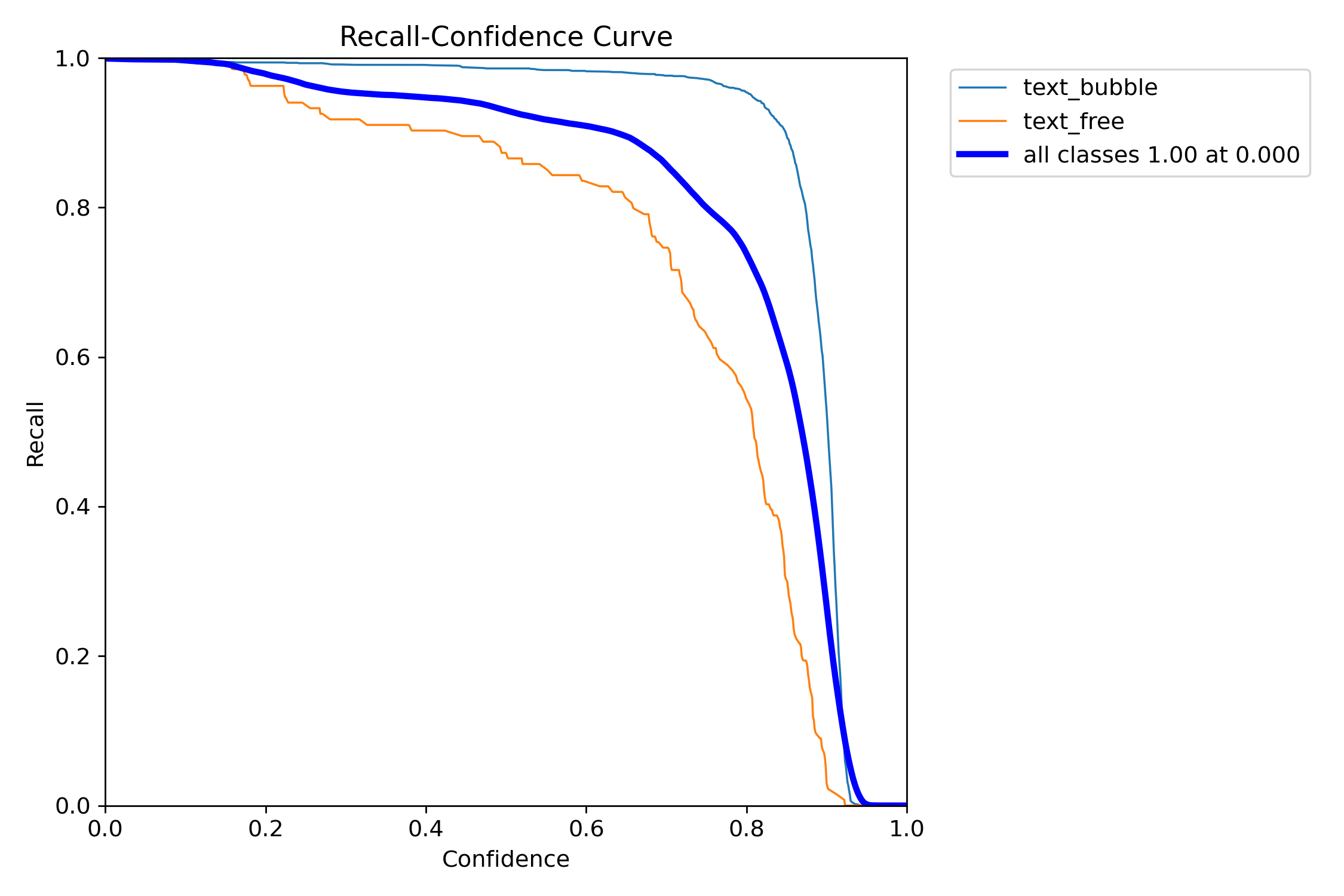

- model_creation/runs/detect/train5/PR_curve.png +0 -0

- model_creation/runs/detect/train5/P_curve.png +0 -0

- model_creation/runs/detect/train5/R_curve.png +0 -0

- model_creation/runs/detect/train5/args.yaml +106 -0

- model_creation/runs/detect/train5/confusion_matrix.png +0 -0

- model_creation/runs/detect/train5/confusion_matrix_normalized.png +0 -0

- model_creation/runs/detect/train5/events.out.tfevents.1716832099.517a5a4e3ae1.2051.0 +3 -0

- model_creation/runs/detect/train5/labels.jpg +0 -0

- model_creation/runs/detect/train5/labels_correlogram.jpg +0 -0

- model_creation/runs/detect/train5/results.csv +6 -0

- model_creation/runs/detect/train5/results.png +0 -0

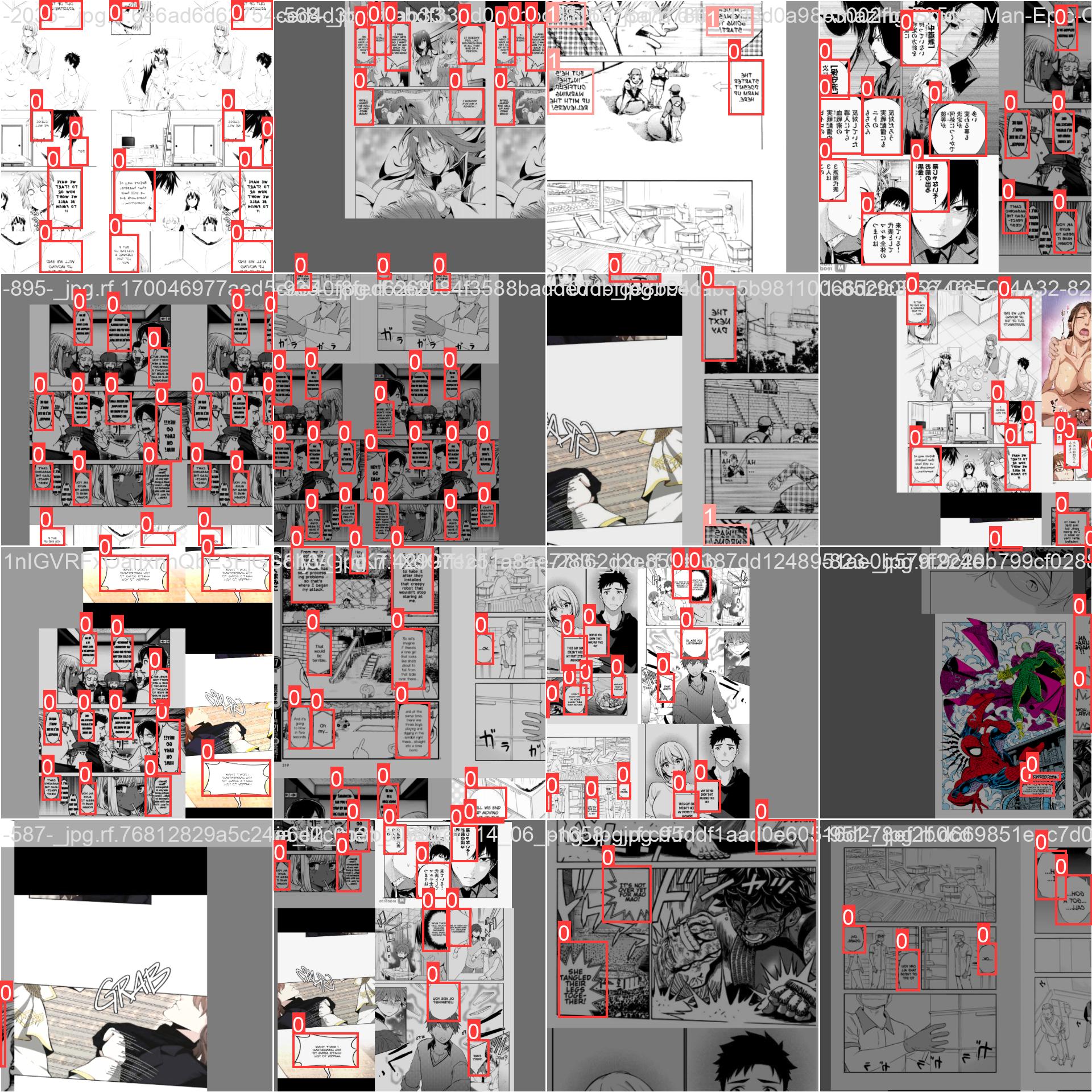

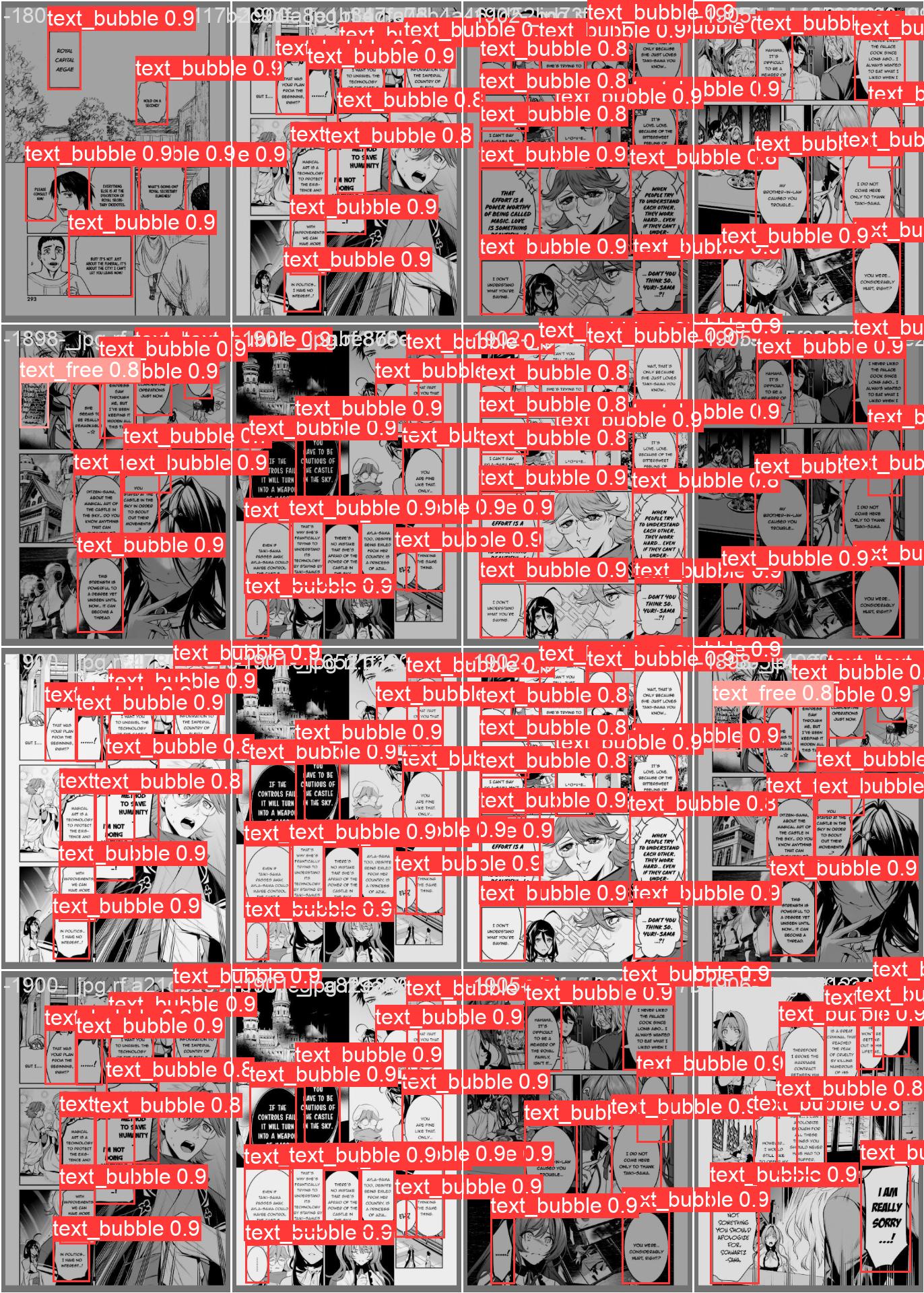

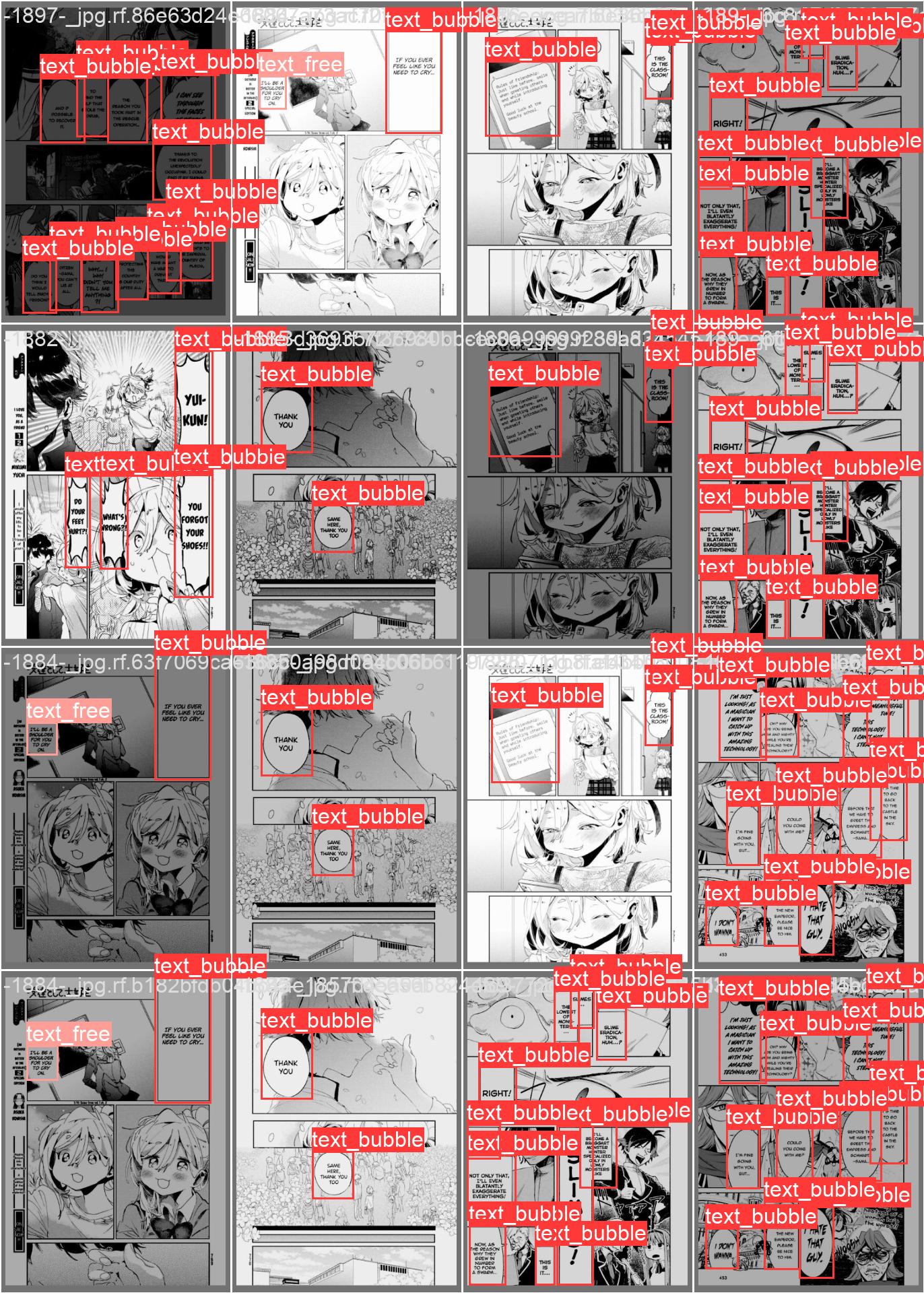

- model_creation/runs/detect/train5/train_batch0.jpg +0 -0

- model_creation/runs/detect/train5/train_batch1.jpg +0 -0

- model_creation/runs/detect/train5/train_batch2.jpg +0 -0

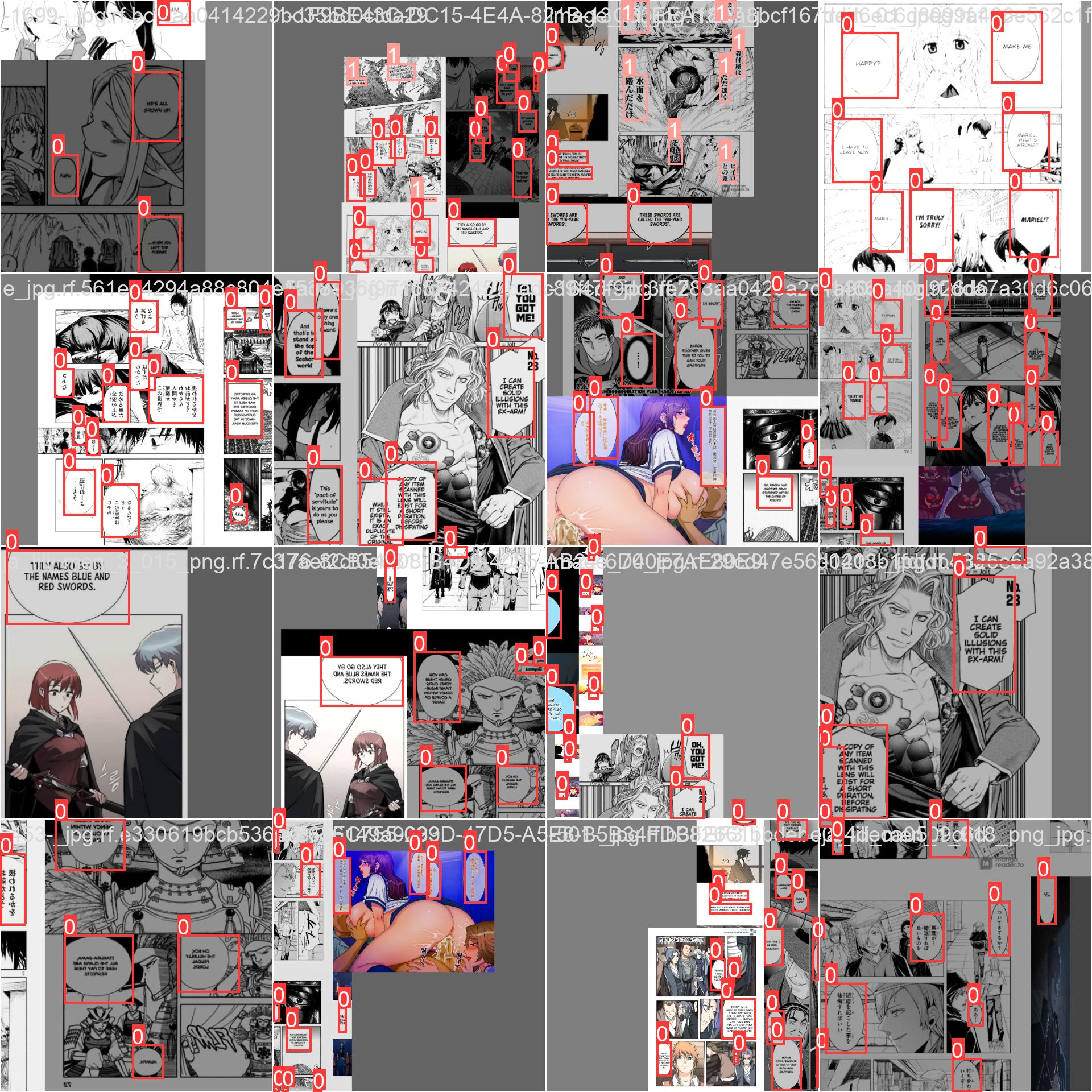

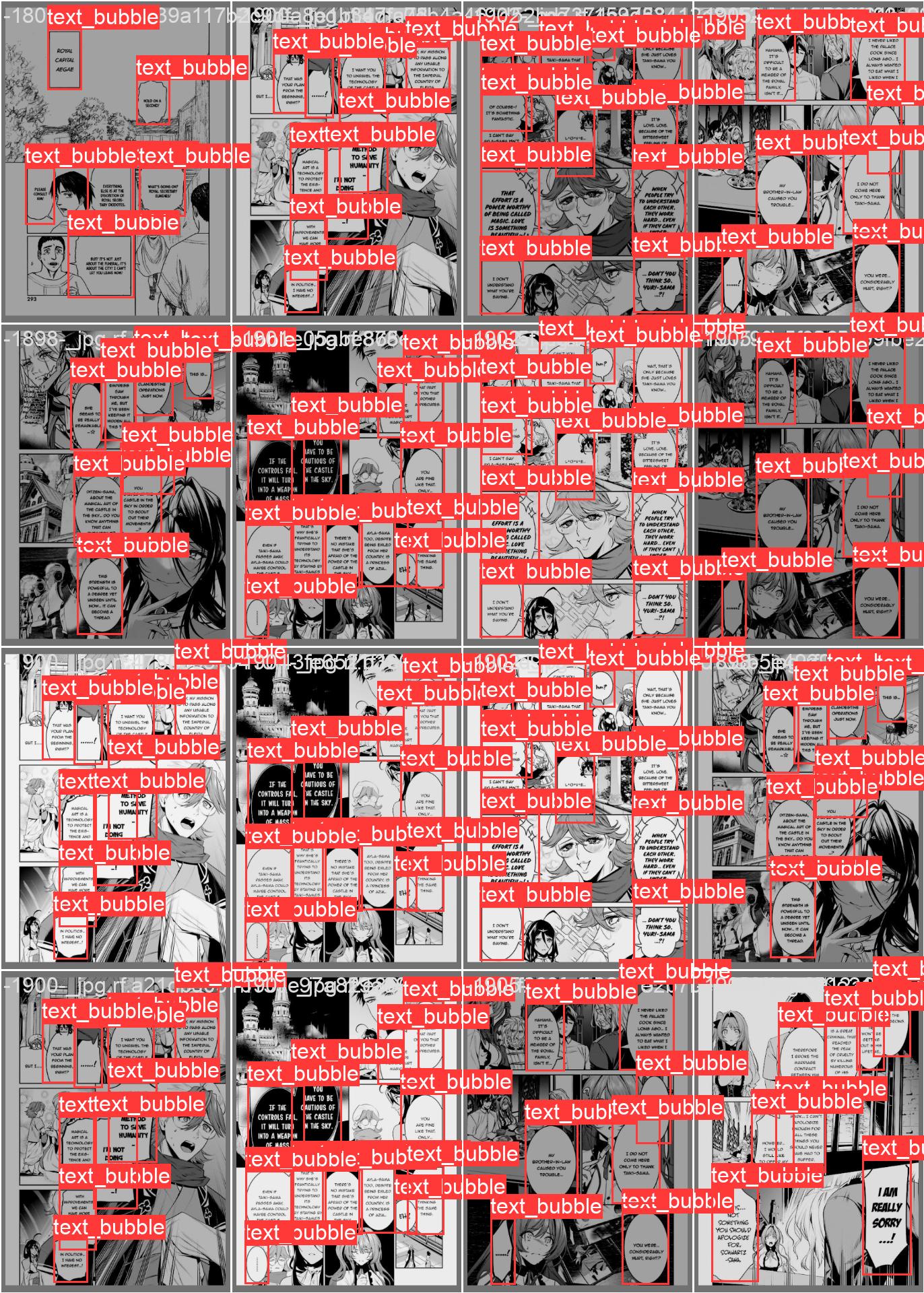

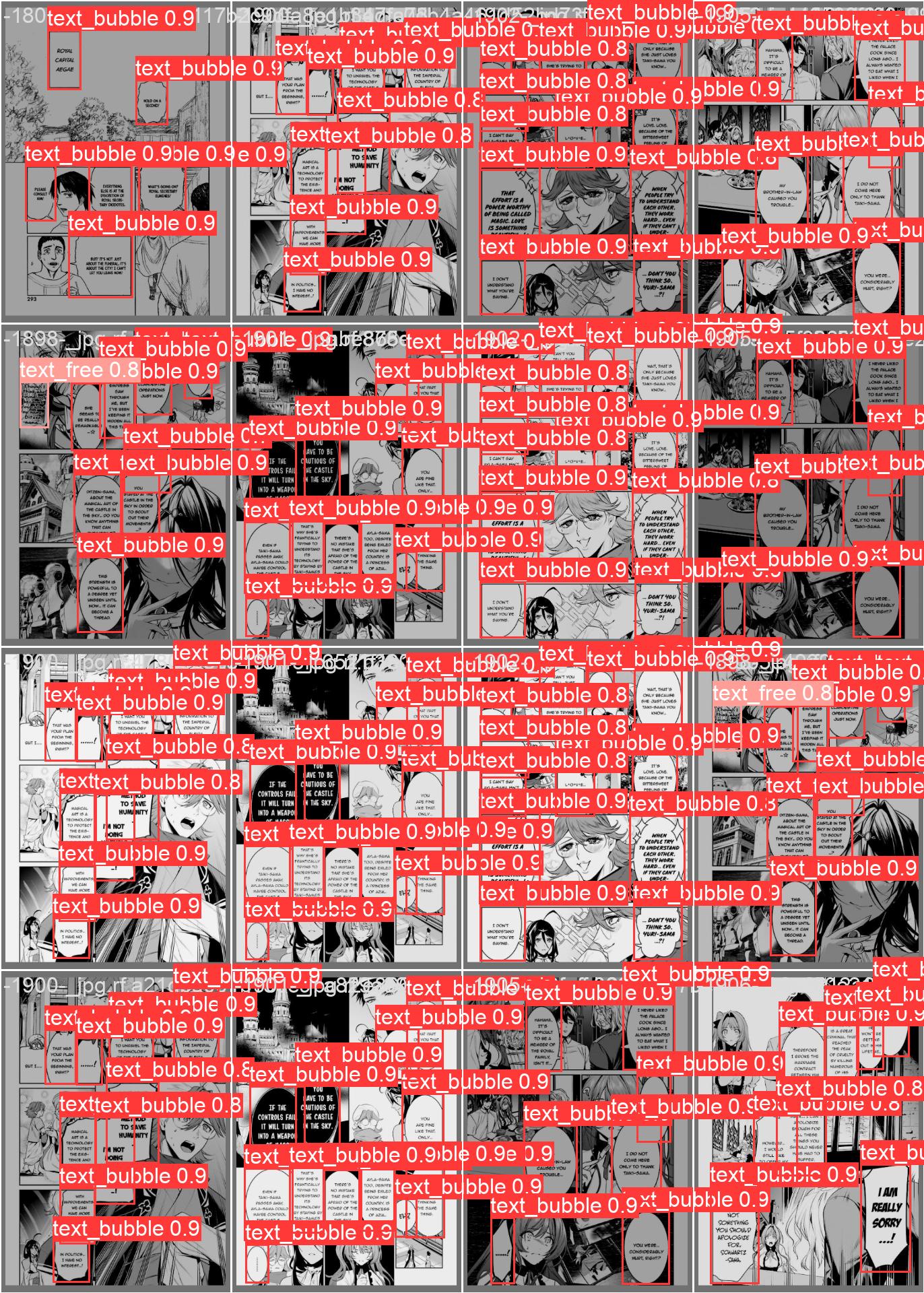

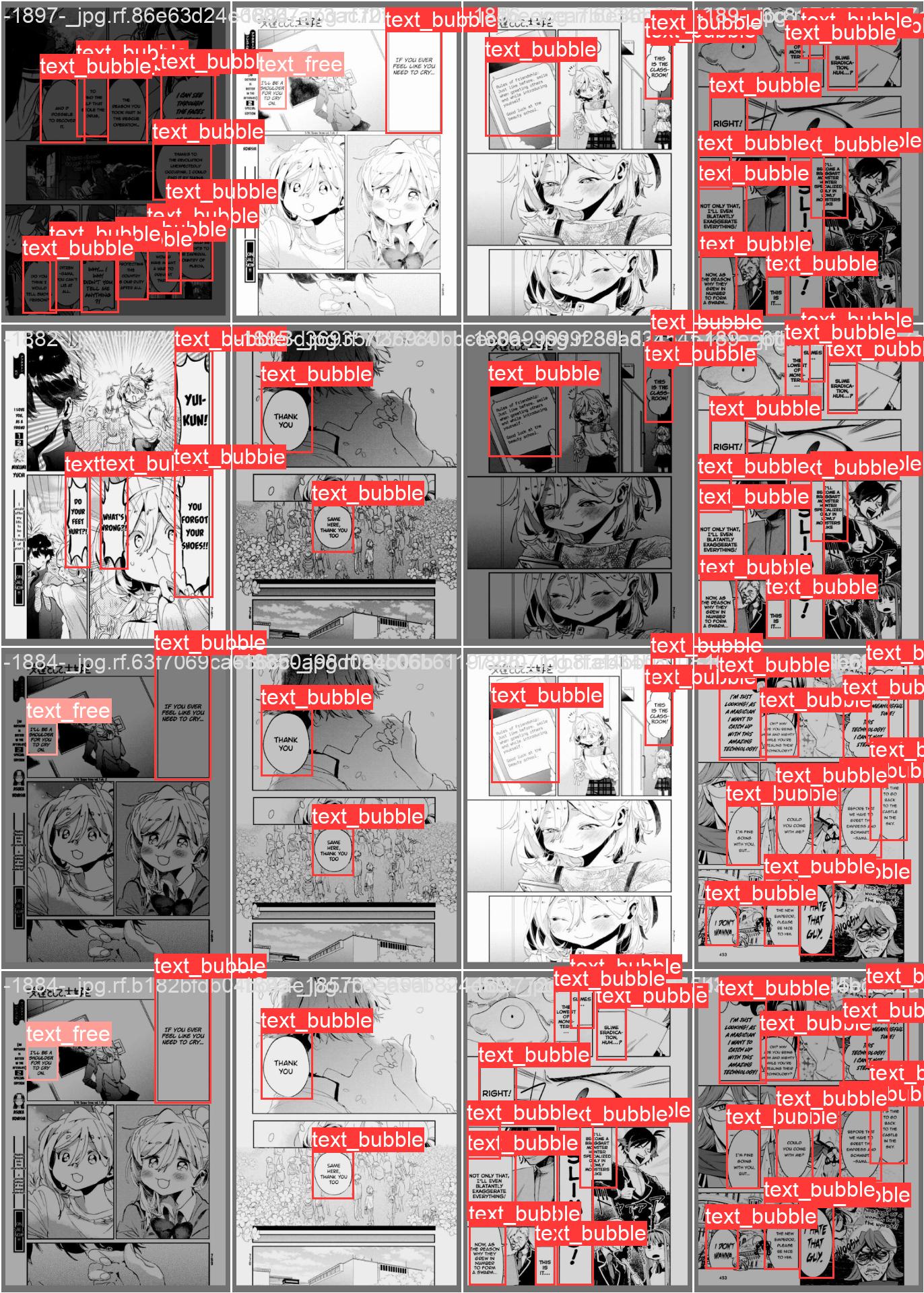

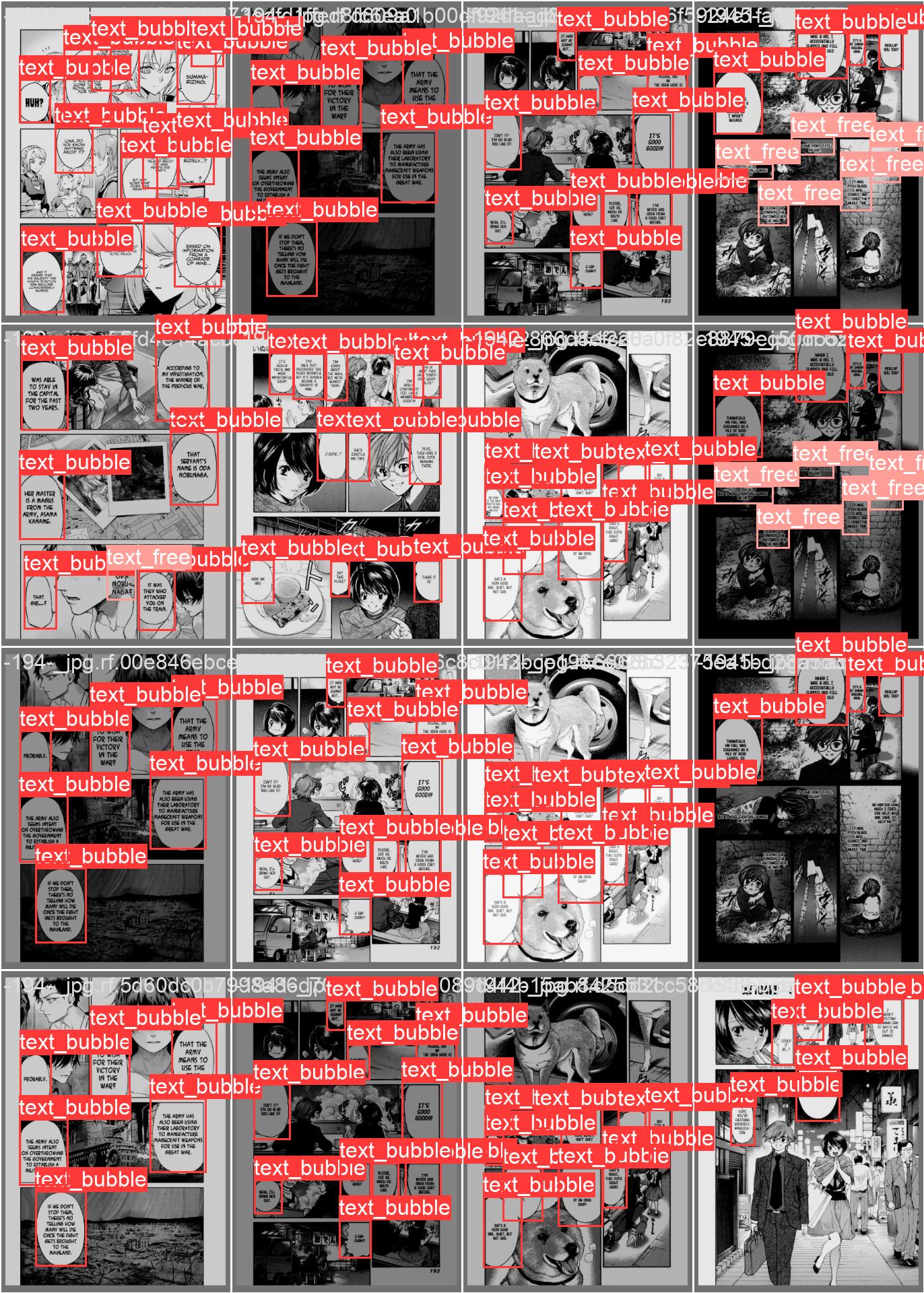

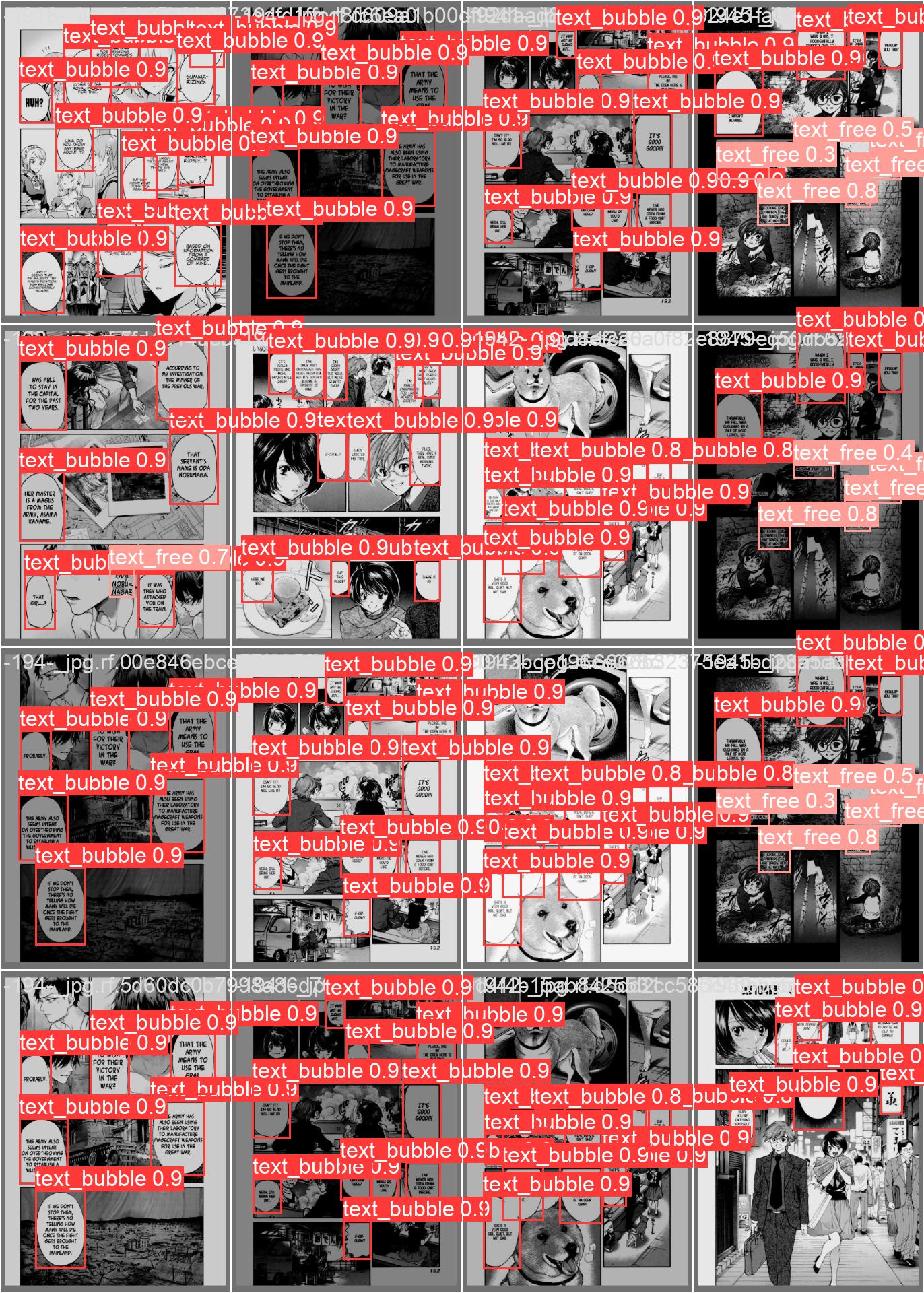

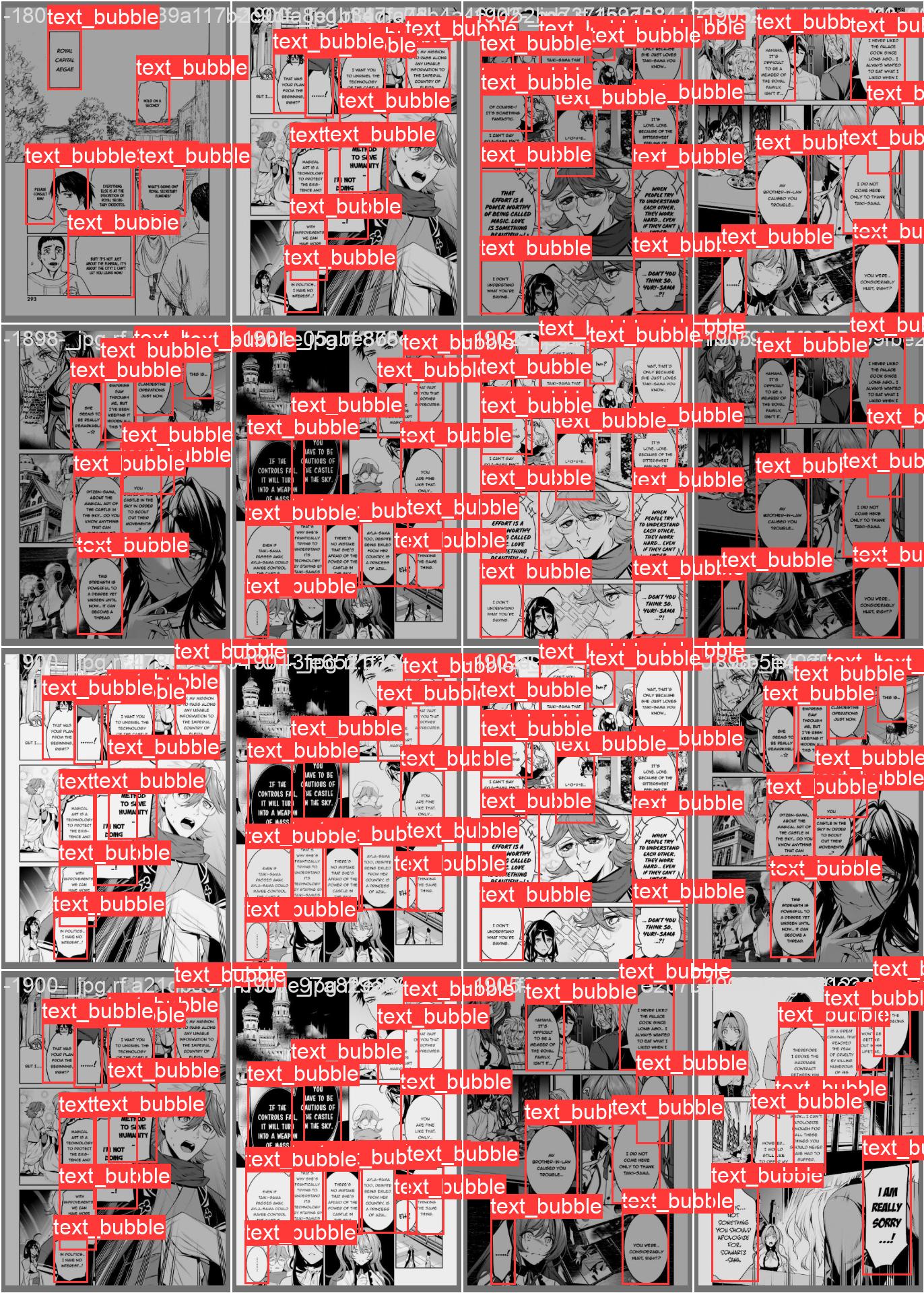

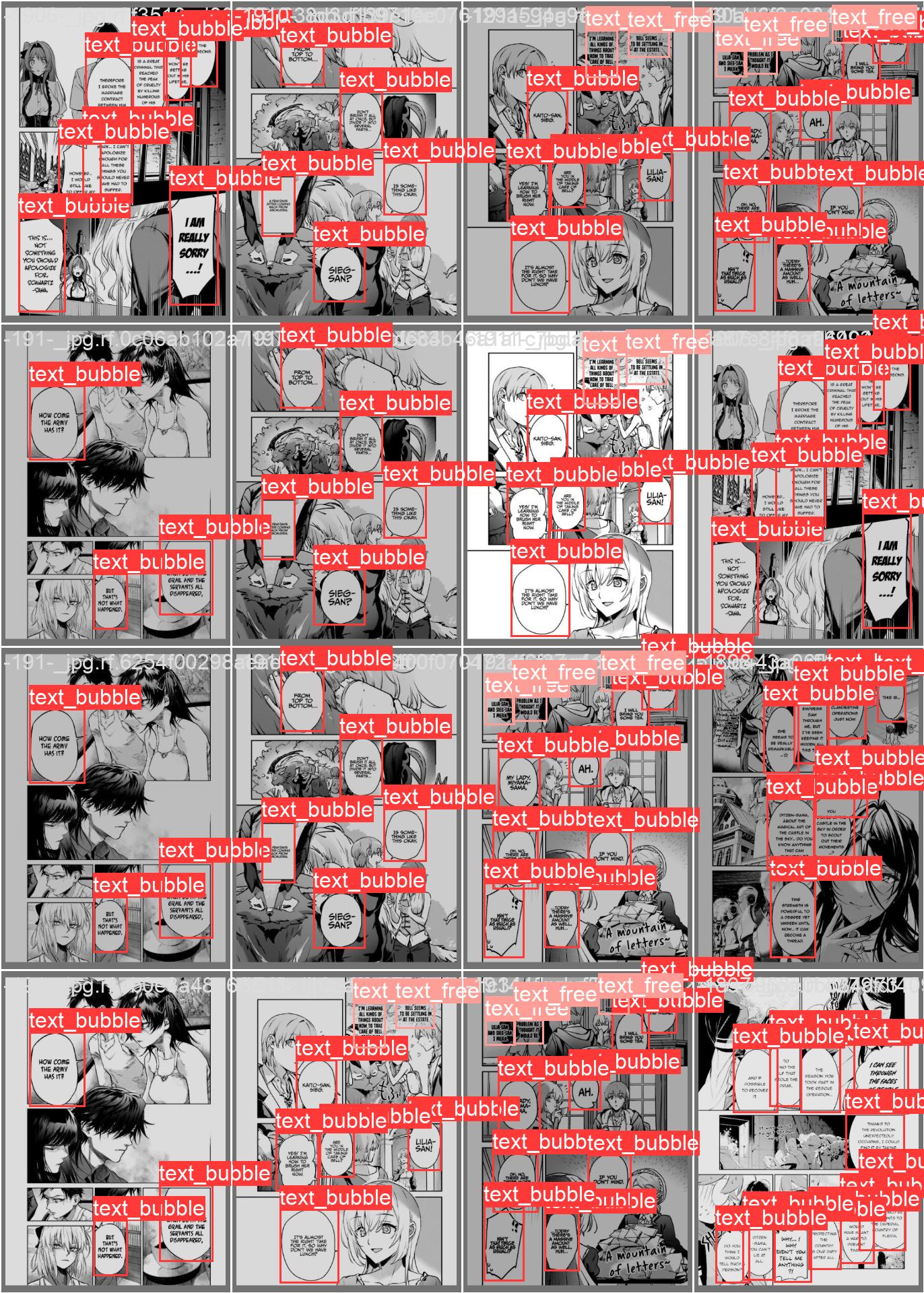

- model_creation/runs/detect/train5/val_batch0_labels.jpg +0 -0

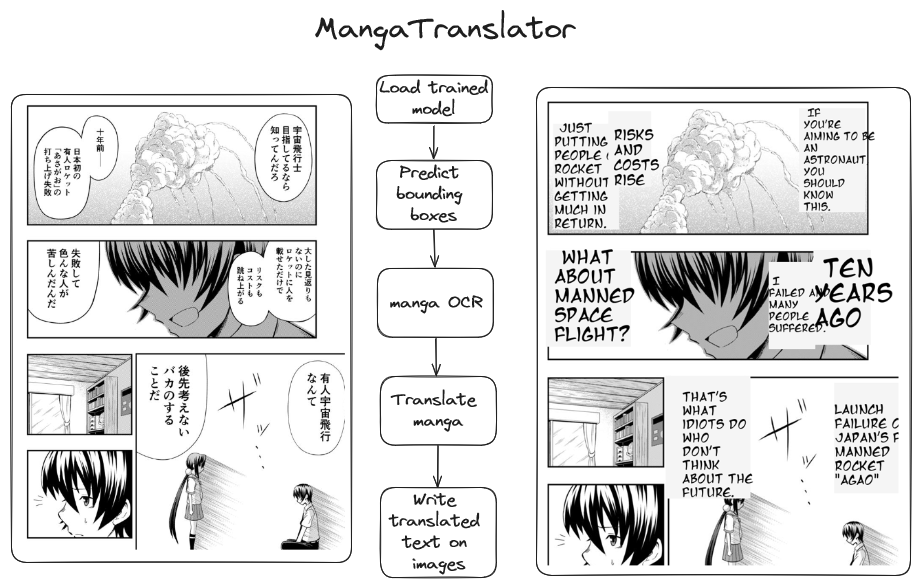

- model_creation/runs/detect/train5/val_batch0_pred.jpg +0 -0

- model_creation/runs/detect/train5/val_batch1_labels.jpg +0 -0

- model_creation/runs/detect/train5/val_batch1_pred.jpg +0 -0

- model_creation/runs/detect/train5/val_batch2_labels.jpg +0 -0

- model_creation/runs/detect/train5/val_batch2_pred.jpg +0 -0

- model_creation/runs/detect/train5/weights/best.pt +3 -0

- model_creation/runs/detect/train5/weights/last.pt +3 -0

- model_creation/runs/detect/train53/F1_curve.png +0 -0

- model_creation/runs/detect/train53/PR_curve.png +0 -0

- model_creation/runs/detect/train53/P_curve.png +0 -0

- model_creation/runs/detect/train53/R_curve.png +0 -0

- model_creation/runs/detect/train53/confusion_matrix.png +0 -0

- model_creation/runs/detect/train53/confusion_matrix_normalized.png +0 -0

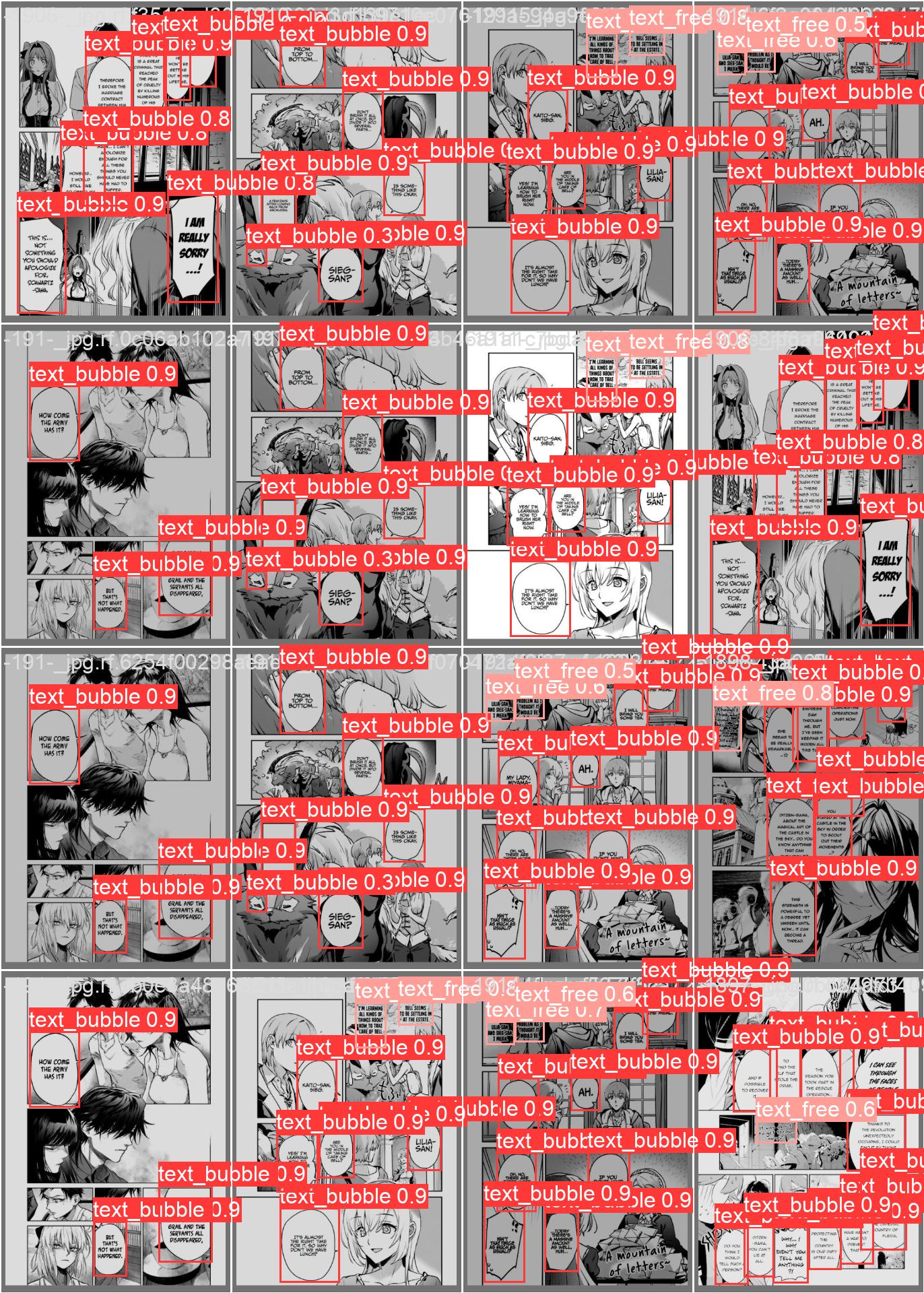

- model_creation/runs/detect/train53/val_batch0_labels.jpg +0 -0

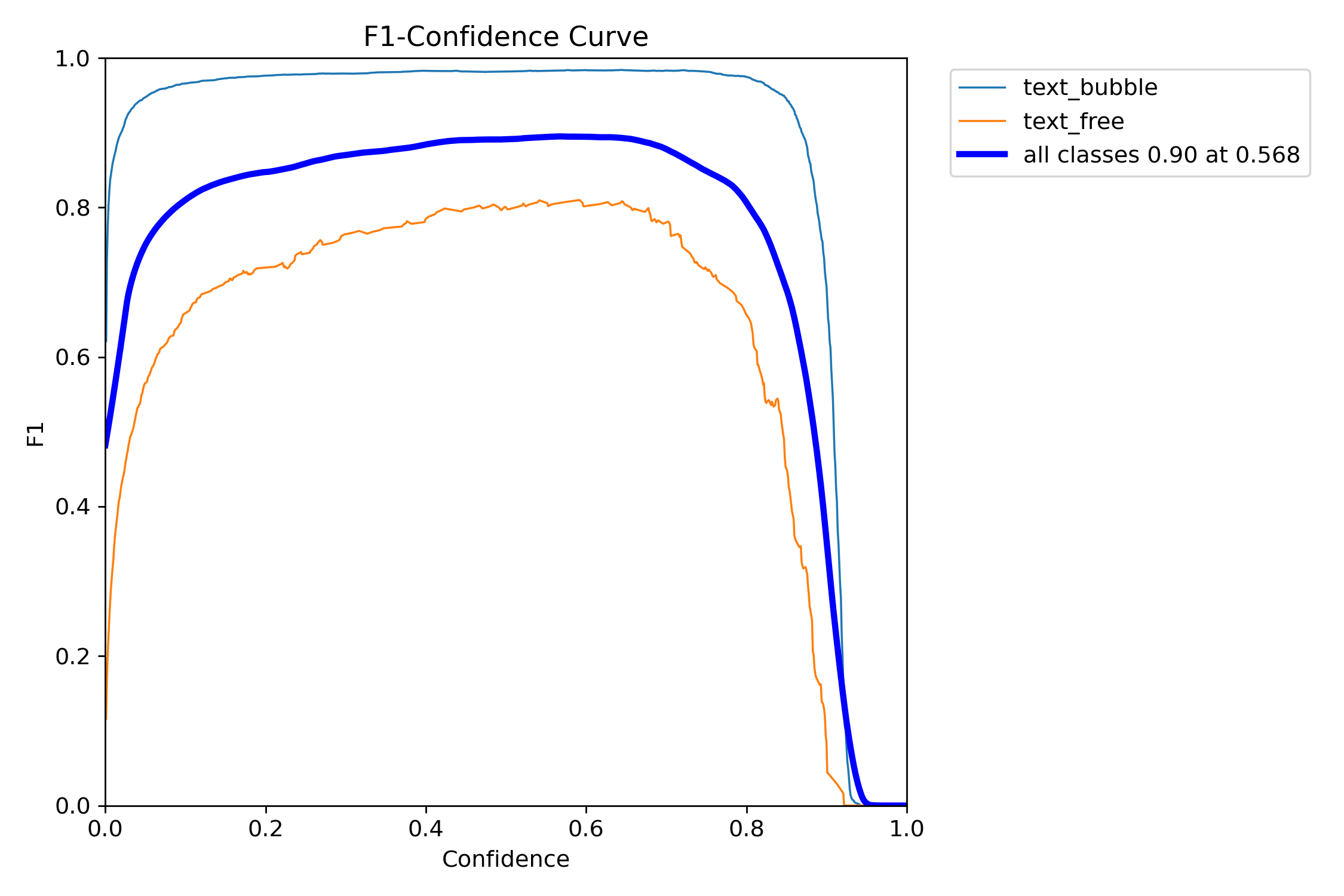

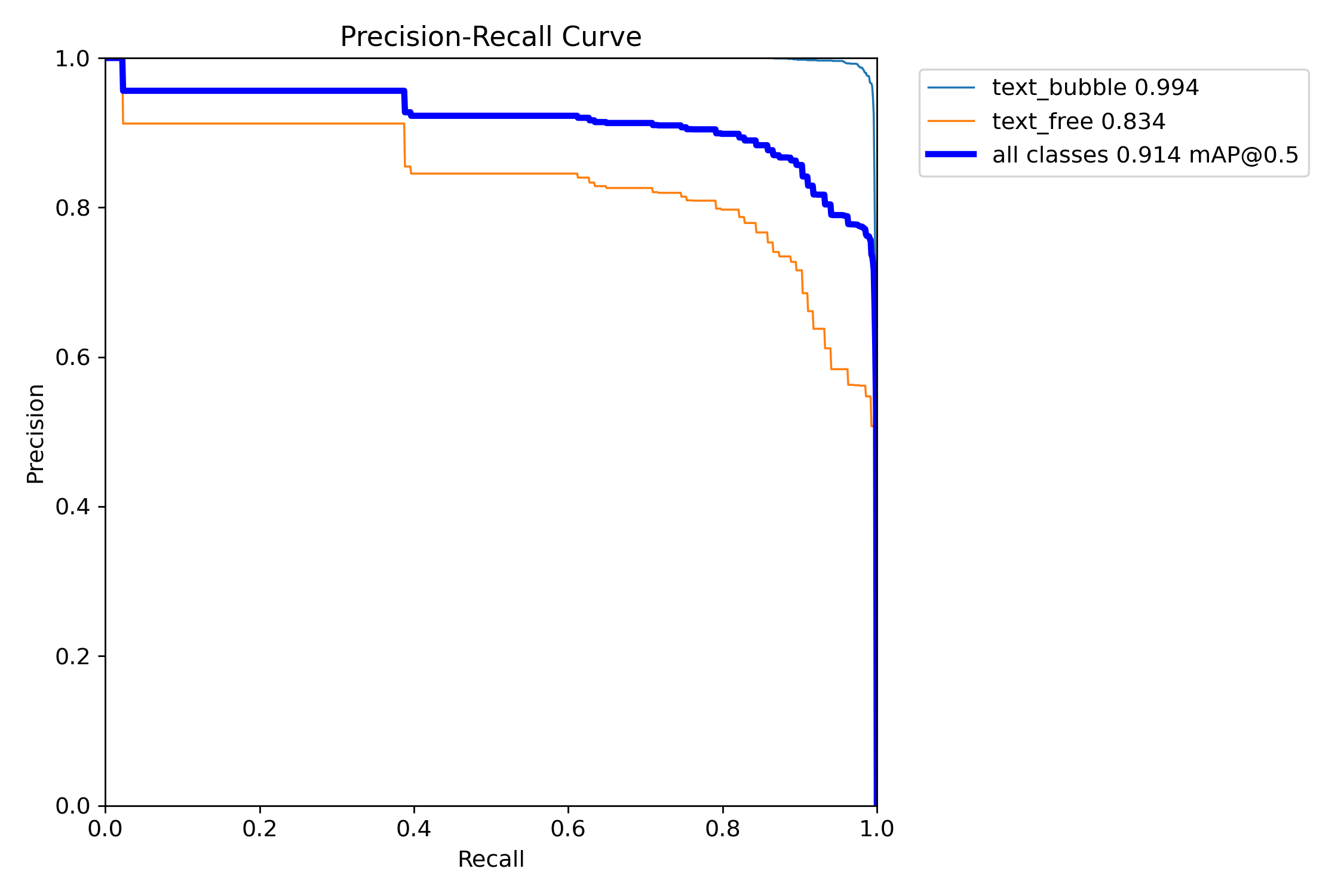

- model_creation/runs/detect/train53/val_batch0_pred.jpg +0 -0

- model_creation/runs/detect/train53/val_batch1_labels.jpg +0 -0

- model_creation/runs/detect/train53/val_batch1_pred.jpg +0 -0

- model_creation/runs/detect/train53/val_batch2_labels.jpg +0 -0

- model_creation/runs/detect/train53/val_batch2_pred.jpg +0 -0

- model_creation/translated_image.png +0 -0

- model_creation/yolov8s.pt +3 -0

- requirements.txt +9 -0

- server.py +83 -0

- static/index.js +57 -0

- static/styles.css +105 -0

- templates/index.html +48 -0

.gitignore

ADDED

|

@@ -0,0 +1,168 @@

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# poetry

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 102 |

+

#poetry.lock

|

| 103 |

+

|

| 104 |

+

# pdm

|

| 105 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 106 |

+

#pdm.lock

|

| 107 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 108 |

+

# in version control.

|

| 109 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 110 |

+

.pdm.toml

|

| 111 |

+

.pdm-python

|

| 112 |

+

.pdm-build/

|

| 113 |

+

|

| 114 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 115 |

+

__pypackages__/

|

| 116 |

+

|

| 117 |

+

# Celery stuff

|

| 118 |

+

celerybeat-schedule

|

| 119 |

+

celerybeat.pid

|

| 120 |

+

|

| 121 |

+

# SageMath parsed files

|

| 122 |

+

*.sage.py

|

| 123 |

+

|

| 124 |

+

# Environments

|

| 125 |

+

.env

|

| 126 |

+

.venv

|

| 127 |

+

env/

|

| 128 |

+

venv/

|

| 129 |

+

ENV/

|

| 130 |

+

env.bak/

|

| 131 |

+

venv.bak/

|

| 132 |

+

|

| 133 |

+

# Spyder project settings

|

| 134 |

+

.spyderproject

|

| 135 |

+

.spyproject

|

| 136 |

+

|

| 137 |

+

# Rope project settings

|

| 138 |

+

.ropeproject

|

| 139 |

+

|

| 140 |

+

# mkdocs documentation

|

| 141 |

+

/site

|

| 142 |

+

|

| 143 |

+

# mypy

|

| 144 |

+

.mypy_cache/

|

| 145 |

+

.dmypy.json

|

| 146 |

+

dmypy.json

|

| 147 |

+

|

| 148 |

+

# Pyre type checker

|

| 149 |

+

.pyre/

|

| 150 |

+

|

| 151 |

+

# pytype static type analyzer

|

| 152 |

+

.pytype/

|

| 153 |

+

|

| 154 |

+

# Cython debug symbols

|

| 155 |

+

cython_debug/

|

| 156 |

+

|

| 157 |

+

# PyCharm

|

| 158 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 159 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 160 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 161 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 162 |

+

#.idea/

|

| 163 |

+

|

| 164 |

+

Pipfile

|

| 165 |

+

Pipfile.lock

|

| 166 |

+

|

| 167 |

+

data/

|

| 168 |

+

bounding_box_images/

|

Dockerfile

CHANGED

|

@@ -1,7 +1,7 @@

|

|

| 1 |

# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker

|

| 2 |

# you will also find guides on how best to write your Dockerfile

|

| 3 |

|

| 4 |

-

FROM python:3.

|

| 5 |

|

| 6 |

WORKDIR /code

|

| 7 |

|

|

@@ -9,6 +9,20 @@ COPY ./requirements.txt /code/requirements.txt

|

|

| 9 |

|

| 10 |

RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt

|

| 11 |

|

| 12 |

COPY . .

|

| 13 |

|

| 14 |

-

|

| 1 |

# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker

|

| 2 |

# you will also find guides on how best to write your Dockerfile

|

| 3 |

|

| 4 |

+

FROM python:3.11

|

| 5 |

|

| 6 |

WORKDIR /code

|

| 7 |

|

|

|

|

| 9 |

|

| 10 |

RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt

|

| 11 |

|

| 12 |

+

# Install espeak

|

| 13 |

+

RUN apt-get update && apt-get install -y espeak

|

| 14 |

+

|

| 15 |

COPY . .

|

| 16 |

|

| 17 |

+

RUN useradd -m -u 1000 user

|

| 18 |

+

|

| 19 |

+

USER user

|

| 20 |

+

|

| 21 |

+

ENV HOME=/home/user \

|

| 22 |

+

PATH=/home/user/.local/bin:$PATH

|

| 23 |

+

|

| 24 |

+

WORKDIR $HOME/app

|

| 25 |

+

|

| 26 |

+

COPY --chown=user . $HOME/app

|

| 27 |

+

|

| 28 |

+

CMD ["uvicorn", "server:app", "--host", "0.0.0.0", "--port", "7860"]

|

README.md

CHANGED

|

@@ -1,10 +1,83 @@

|

|

| 1 |

---

|

| 2 |

title: Manga Translator

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: docker

|

| 7 |

pinned: false

|

| 8 |

---

|

| 9 |

|

| 10 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

| 1 |

---

|

| 2 |

title: Manga Translator

|

| 3 |

+

emoji: 📖

|

| 4 |

+

colorFrom: pink

|

| 5 |

+

colorTo: yellow

|

| 6 |

sdk: docker

|

| 7 |

pinned: false

|

| 8 |

---

|

| 9 |

|

| 10 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

| 11 |

+

|

| 12 |

+

# Manga Translator

|

| 13 |

+

|

| 14 |

+

- [Manga Translator](#manga-translator)

|

| 15 |

+

- [Introduction](#introduction)

|

| 16 |

+

- [Approach](#approach)

|

| 17 |

+

- [Data Collection](#data-collection)

|

| 18 |

+

- [Yolov8](#yolov8)

|

| 19 |

+

- [Manga-ocr](#manga-ocr)

|

| 20 |

+

- [Deep-translator](#deep-translator)

|

| 21 |

+

- [Server](#server)

|

| 22 |

+

- [Demo](#demo)

|

| 23 |

+

|

| 24 |

+

## Introduction

|

| 25 |

+

|

| 26 |

+

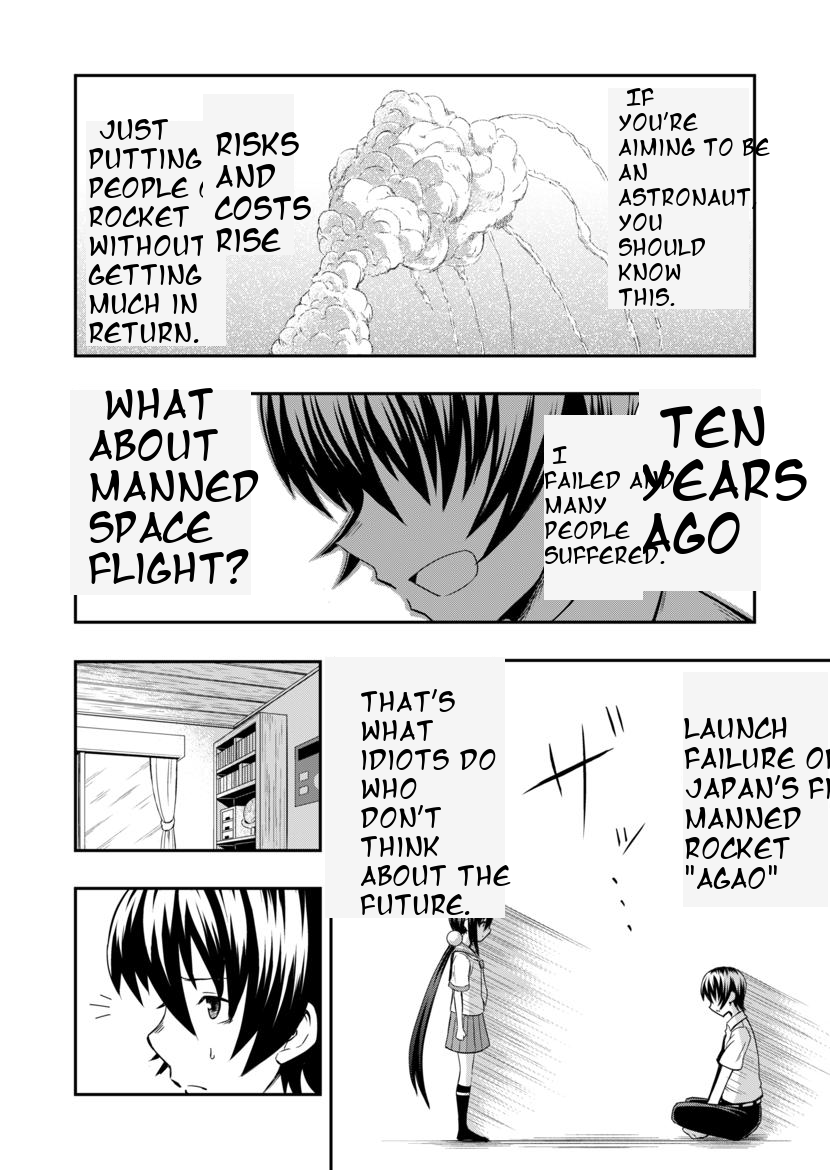

I love reading manga, and I can't wait for the next chapter of my favorite manga to be released. However, the newest chapters are usually in Japanese, and they are translated to English after some time. I want to read the newest chapters as soon as possible, so I decided to build a manga translator that can translate Japanese manga to English.

|

| 27 |

+

|

| 28 |

+

## Approach

|

| 29 |

+

|

| 30 |

+

I want to translate the text in the manga images from Japanese to English. I will first need to know where the speech bubbles are on the image. For this I will use `Yolov8` to detect the speech bubbles. Once I have the speech bubbles, I will use `manga-ocr` to extract the text from them. Finally, I will use `deep-translator` to translate the text from Japanese to English.

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

### Data Collection

|

| 35 |

+

|

| 36 |

+

This [dataset](https://universe.roboflow.com/speechbubbledetection-y9yz3/bubble-detection-gbjon/dataset/2#) from Roboflow contains over 8500 images of manga pages together with their annotations. I will use this dataset to train `Yolov8` to detect the speech bubbles in the manga images. To use this dataset with Yolov8, I will need to convert the annotations to the YOLO format: one text file per image, where each line holds the class label and the normalized bounding box coordinates of one object in the image.

|

| 37 |

+

|

| 38 |

+

This dataset is over 1.7GB in size, so I will need to download it to my local machine. The rest of the code should be run after the dataset has been downloaded and extracted in this directory.

|

| 39 |

+

|

| 40 |

+

The dataset contains mostly English manga, but that is fine since I am only interested in the speech bubbles.

|

| 41 |

+

|

| 42 |

+

### Yolov8

|

| 43 |

+

|

| 44 |

+

`Yolov8` is a state-of-the-art, real-time object detection system [that I've used before](https://github.com/Detopall/parking-lot-prediction). I will use `Yolov8` to detect the speech bubbles in the manga images.

|

| 45 |

+

|

| 46 |

+

### Manga-ocr

|

| 47 |

+

|

| 48 |

+

Optical character recognition for Japanese text, with the main focus being Japanese manga. This Python package is built and trained specifically for extracting text from manga images. This makes it perfect for extracting text from the speech bubbles in the manga images.

|

| 49 |

+

|

| 50 |

+

### Deep-translator

|

| 51 |

+

|

| 52 |

+

`Deep-translator` is a Python package that wraps several translation services, including Google Translate, behind a single interface. I will use `deep-translator` to translate the text extracted from the manga images from Japanese to English.

|

| 53 |

+

|

| 54 |

+

## Server

|

| 55 |

+

|

| 56 |

+

I created a simple server and client using FastAPI. The server receives the manga image from the client, detects the speech bubbles, extracts the text from them, and translates it from Japanese to English. It then writes the translated text onto the image and sends the translated image back to the client.

|

| 57 |

+

|

| 58 |

+

To run the server, you will need to install the required packages. You can do this by running the following command:

|

| 59 |

+

|

| 60 |

+

```bash

|

| 61 |

+

pip install -r requirements.txt

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

You can then start the server by running the following command:

|

| 65 |

+

|

| 66 |

+

```bash

|

| 67 |

+

python server.py

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

The server will start running on `http://localhost:8000`. You can then send a POST request to `http://localhost:8000/predict` with a JSON body that contains the base64-encoded manga image:

|

| 71 |

+

|

| 72 |

+

```json

|

| 73 |

+

POST /predict

|

| 74 |

+

{

|

| 75 |

+

"image": "base64_encoded_image"

|

| 76 |

+

}

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

## Demo

|

| 80 |

+

|

| 81 |

+

The following video is a screen recording of the client sending a manga image to the server, and the server detecting the speech bubbles, extracting the text, and translating the text from Japanese to English.

|

| 82 |

+

|

| 83 |

+

[Demo video](https://www.youtube.com/watch?v=aEOpx1_CUGI)

|
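
The README's Data Collection section mentions converting annotations to the YOLO format. As a minimal sketch of that conversion, assuming the source annotations are pixel-space boxes given as `(x_min, y_min, x_max, y_max)`: the helper name `to_yolo_line` and the sample numbers are hypothetical and not taken from the repository.

```python
def to_yolo_line(class_id, box, image_width, image_height):
    """Convert a pixel-space box (x_min, y_min, x_max, y_max) to one YOLO label line.

    A YOLO label line is "class x_center y_center width height", with all four
    box values normalized to the range 0..1 by the image dimensions.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical example: one speech bubble (class 0) on a 640x480 page.
print(to_yolo_line(0, (100, 50, 300, 150), 640, 480))
# prints "0 0.312500 0.208333 0.312500 0.208333"
```

Each image gets its own `.txt` file with one such line per annotated object.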

assets/MangaTranslator.png

ADDED

|

fonts/GL-NovantiquaMinamoto.ttf

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:6dd6f8754f1e5ffd3c21a514c15b6bcb0cd5893a4cb60fcd51f795eb9167d4ce

|

| 3 |

+

size 10225352

|

fonts/mangat.ttf

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7de5680ca9a17be79d6b311c1010865e4352da94f3512f6f1738111381a59a26

|

| 3 |

+

size 29964

|

model_creation/011.jpg

ADDED

|

model_creation/main.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

model_creation/runs/detect/train5/F1_curve.png

ADDED

|

model_creation/runs/detect/train5/PR_curve.png

ADDED

|

model_creation/runs/detect/train5/P_curve.png

ADDED

|

model_creation/runs/detect/train5/R_curve.png

ADDED

|

model_creation/runs/detect/train5/args.yaml

ADDED

|

@@ -0,0 +1,106 @@

| 1 |

+

task: detect

|

| 2 |

+

mode: train

|

| 3 |

+

model: yolov8s.pt

|

| 4 |

+

data: /content/data/data.yaml

|

| 5 |

+

epochs: 5

|

| 6 |

+

time: null

|

| 7 |

+

patience: 100

|

| 8 |

+

batch: 16

|

| 9 |

+

imgsz: 640

|

| 10 |

+

save: true

|

| 11 |

+

save_period: -1

|

| 12 |

+

cache: false

|

| 13 |

+

device: null

|

| 14 |

+

workers: 8

|

| 15 |

+

project: null

|

| 16 |

+

name: train5

|

| 17 |

+

exist_ok: false

|

| 18 |

+

pretrained: true

|

| 19 |

+

optimizer: auto

|

| 20 |

+

verbose: true

|

| 21 |

+

seed: 0

|

| 22 |

+

deterministic: true

|

| 23 |

+

single_cls: false

|

| 24 |

+

rect: false

|

| 25 |

+

cos_lr: false

|

| 26 |

+

close_mosaic: 10

|

| 27 |

+

resume: false

|

| 28 |

+

amp: true

|

| 29 |

+

fraction: 1.0

|

| 30 |

+

profile: false

|

| 31 |

+

freeze: null

|

| 32 |

+

multi_scale: false

|

| 33 |

+

overlap_mask: true

|

| 34 |

+

mask_ratio: 4

|

| 35 |

+

dropout: 0.0

|

| 36 |

+

val: true

|

| 37 |

+

split: val

|

| 38 |

+

save_json: false

|

| 39 |

+

save_hybrid: false

|

| 40 |

+

conf: null

|

| 41 |

+

iou: 0.7

|

| 42 |

+

max_det: 300

|

| 43 |

+

half: false

|

| 44 |

+

dnn: false

|

| 45 |

+

plots: true

|

| 46 |

+

source: null

|

| 47 |

+

vid_stride: 1

|

| 48 |

+

stream_buffer: false

|

| 49 |

+

visualize: false

|

| 50 |

+

augment: false

|

| 51 |

+

agnostic_nms: false

|

| 52 |

+

classes: null

|

| 53 |

+

retina_masks: false

|

| 54 |

+

embed: null

|

| 55 |

+

show: false

|

| 56 |

+

save_frames: false

|

| 57 |

+

save_txt: false

|

| 58 |

+

save_conf: false

|

| 59 |

+

save_crop: false

|

| 60 |

+

show_labels: true

|

| 61 |

+

show_conf: true

|

| 62 |

+

show_boxes: true

|

| 63 |

+

line_width: null

|

| 64 |

+

format: torchscript

|

| 65 |

+

keras: false

|

| 66 |

+

optimize: false

|

| 67 |

+

int8: false

|

| 68 |

+

dynamic: false

|

| 69 |

+

simplify: false

|

| 70 |

+

opset: null

|

| 71 |

+

workspace: 4

|

| 72 |

+

nms: false

|

| 73 |

+

lr0: 0.01

|

| 74 |

+

lrf: 0.01

|

| 75 |

+

momentum: 0.937

|

| 76 |

+

weight_decay: 0.0005

|

| 77 |

+

warmup_epochs: 3.0

|

| 78 |

+

warmup_momentum: 0.8

|

| 79 |

+

warmup_bias_lr: 0.1

|

| 80 |

+

box: 7.5

|

| 81 |

+

cls: 0.5

|

| 82 |

+

dfl: 1.5

|

| 83 |

+

pose: 12.0

|

| 84 |

+

kobj: 1.0

|

| 85 |

+

label_smoothing: 0.0

|

| 86 |

+

nbs: 64

|

| 87 |

+

hsv_h: 0.015

|

| 88 |

+

hsv_s: 0.7

|

| 89 |

+

hsv_v: 0.4

|

| 90 |

+

degrees: 0.0

|

| 91 |

+

translate: 0.1

|

| 92 |

+

scale: 0.5

|

| 93 |

+

shear: 0.0

|

| 94 |

+

perspective: 0.0

|

| 95 |

+

flipud: 0.0

|

| 96 |

+

fliplr: 0.5

|

| 97 |

+

bgr: 0.0

|

| 98 |

+

mosaic: 1.0

|

| 99 |

+

mixup: 0.0

|

| 100 |

+

copy_paste: 0.0

|

| 101 |

+

auto_augment: randaugment

|

| 102 |

+

erasing: 0.4

|

| 103 |

+

crop_fraction: 1.0

|

| 104 |

+

cfg: null

|

| 105 |

+

tracker: botsort.yaml

|

| 106 |

+

save_dir: runs/detect/train5

|

model_creation/runs/detect/train5/confusion_matrix.png

ADDED

|

model_creation/runs/detect/train5/confusion_matrix_normalized.png

ADDED

|

model_creation/runs/detect/train5/events.out.tfevents.1716832099.517a5a4e3ae1.2051.0

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:71ffd4980af6bc63e2b5261b3bef21512ea589d084d037f2af7f528c267129cb

|

| 3 |

+

size 189152

|

model_creation/runs/detect/train5/labels.jpg

ADDED

|

model_creation/runs/detect/train5/labels_correlogram.jpg

ADDED

|

model_creation/runs/detect/train5/results.csv

ADDED

|

@@ -0,0 +1,6 @@

| 1 |

+

epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2

|

| 2 |

+

1, 0.89006, 0.67441, 1.0306, 0.82238, 0.8392, 0.85022, 0.60429, 0.70056, 0.38437, 0.96738, 0.00055456, 0.00055456, 0.00055456

|

| 3 |

+

2, 0.84925, 0.51813, 1.0105, 0.82923, 0.89142, 0.86099, 0.61496, 0.70351, 0.35903, 0.97034, 0.0008904, 0.0008904, 0.0008904

|

| 4 |

+

3, 0.8267, 0.48975, 1.0046, 0.74549, 0.82015, 0.79615, 0.60803, 0.6697, 0.34986, 0.95315, 0.0010062, 0.0010062, 0.0010062

|

| 5 |

+

4, 0.7923, 0.44796, 0.98622, 0.8072, 0.93015, 0.88952, 0.6569, 0.64922, 0.31112, 0.9423, 0.0006768, 0.0006768, 0.0006768

|

| 6 |

+

5, 0.75979, 0.41155, 0.97252, 0.87983, 0.9133, 0.91496, 0.68457, 0.65444, 0.29384, 0.93771, 0.00034674, 0.00034674, 0.00034674

|
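
To read the training log above at a glance, the following sketch picks the epoch with the highest value of a chosen metric. It uses only the standard library and embeds the five rows of `results.csv` verbatim; the helper name `best_epoch` is an assumption for illustration and is not part of the repository.

```python
import csv
import io

# The rows of model_creation/runs/detect/train5/results.csv, copied as-is.
RESULTS_CSV = """\
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
1, 0.89006, 0.67441, 1.0306, 0.82238, 0.8392, 0.85022, 0.60429, 0.70056, 0.38437, 0.96738, 0.00055456, 0.00055456, 0.00055456
2, 0.84925, 0.51813, 1.0105, 0.82923, 0.89142, 0.86099, 0.61496, 0.70351, 0.35903, 0.97034, 0.0008904, 0.0008904, 0.0008904
3, 0.8267, 0.48975, 1.0046, 0.74549, 0.82015, 0.79615, 0.60803, 0.6697, 0.34986, 0.95315, 0.0010062, 0.0010062, 0.0010062
4, 0.7923, 0.44796, 0.98622, 0.8072, 0.93015, 0.88952, 0.6569, 0.64922, 0.31112, 0.9423, 0.0006768, 0.0006768, 0.0006768
5, 0.75979, 0.41155, 0.97252, 0.87983, 0.9133, 0.91496, 0.68457, 0.65444, 0.29384, 0.93771, 0.00034674, 0.00034674, 0.00034674
"""


def best_epoch(csv_text, metric="metrics/mAP50-95(B)"):
    """Return (epoch, value) for the row with the highest value of `metric`."""
    # skipinitialspace drops the blank that follows each comma in this file.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    rows = [{key.strip(): float(value) for key, value in row.items()} for row in reader]
    best = max(rows, key=lambda row: row[metric])
    return int(best["epoch"]), best[metric]


epoch, score = best_epoch(RESULTS_CSV)
print(f"best epoch: {epoch}, mAP50-95: {score}")
# prints "best epoch: 5, mAP50-95: 0.68457"
```

By this metric the last of the five epochs is the best one, so a longer run might still improve the model; the log alone cannot confirm that.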

model_creation/runs/detect/train5/results.png

ADDED

|

model_creation/runs/detect/train5/train_batch0.jpg

ADDED

|

model_creation/runs/detect/train5/train_batch1.jpg

ADDED

|

model_creation/runs/detect/train5/train_batch2.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch0_labels.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch0_pred.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch1_labels.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch1_pred.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch2_labels.jpg

ADDED

|

model_creation/runs/detect/train5/val_batch2_pred.jpg

ADDED

|

model_creation/runs/detect/train5/weights/best.pt

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e96681ca0f2777bbf9b81f9ec2c8c9098dc775ee525091491d6ec37e39f0140d

|

| 3 |

+

size 22500057

|

model_creation/runs/detect/train5/weights/last.pt

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:229ada749eb509008639fedd342fee7c851ce0d068af481a0e230863a665e373

|

| 3 |

+

size 22500057

|

model_creation/runs/detect/train53/F1_curve.png

ADDED

|

model_creation/runs/detect/train53/PR_curve.png

ADDED

|

model_creation/runs/detect/train53/P_curve.png

ADDED

|

model_creation/runs/detect/train53/R_curve.png

ADDED

|

model_creation/runs/detect/train53/confusion_matrix.png

ADDED

|

model_creation/runs/detect/train53/confusion_matrix_normalized.png

ADDED

|

model_creation/runs/detect/train53/val_batch0_labels.jpg

ADDED

|

model_creation/runs/detect/train53/val_batch0_pred.jpg

ADDED

|

model_creation/runs/detect/train53/val_batch1_labels.jpg

ADDED

|

model_creation/runs/detect/train53/val_batch1_pred.jpg

ADDED

|

model_creation/runs/detect/train53/val_batch2_labels.jpg

ADDED

|

model_creation/runs/detect/train53/val_batch2_pred.jpg

ADDED

|

model_creation/translated_image.png

ADDED

|

model_creation/yolov8s.pt

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:268e5bb54c640c96c3510224833bc2eeacab4135c6deb41502156e39986b562d

|

| 3 |

+

size 22573363

|

requirements.txt

ADDED

|

@@ -0,0 +1,9 @@

| 1 |

+

ipykernel==6.29.4

|

| 2 |

+

pillow==10.3.0

|

| 3 |

+

ultralytics==8.2.23

|

| 4 |

+

manga-ocr==0.1.11

|

| 5 |

+

googletrans==4.0.0-rc1

|

| 6 |

+

deep-translator==1.11.4

|

| 7 |

+

fastapi==0.111.0

|

| 8 |

+

uvicorn==0.30.0

|

| 9 |

+

|

server.py

ADDED

|

@@ -0,0 +1,83 @@

| 1 |

+

"""

|

| 2 |

+

This file contains the FastAPI application that serves the web interface and handles the API requests.

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

import os

|

| 6 |

+

import io

|

| 7 |

+

import base64

|

| 8 |

+

|

| 9 |

+

from fastapi import FastAPI

|

| 10 |

+

from fastapi.middleware.cors import CORSMiddleware

|

| 11 |

+

from fastapi.staticfiles import StaticFiles

|

| 12 |

+

from fastapi.templating import Jinja2Templates

|

| 13 |

+

from starlette.requests import Request

|

| 14 |

+

from PIL import Image

|

| 15 |

+

import uvicorn

|

| 16 |

+

from ultralytics import YOLO

|

| 17 |

+

|

| 18 |

+

from utils.predict_bounding_boxes import predict_bounding_boxes

|

| 19 |

+

from utils.manga_ocr import get_text_from_images

|

| 20 |

+

from utils.translate_manga import translate_manga

|

| 21 |

+

from utils.write_text_on_image import write_text

|

| 22 |

+

|

| 23 |

+

# Load the object detection model

|

| 24 |

+

best_model_path = "./model_creation/runs/detect/train5"

|

| 25 |

+

object_detection_model = YOLO(os.path.join(best_model_path, "weights/best.pt"))

|

| 26 |

+

|

| 27 |

+

app = FastAPI()

|

| 28 |

+

|

| 29 |

+

# Add CORS middleware

|

| 30 |

+

app.add_middleware(

|

| 31 |

+

CORSMiddleware,

|

| 32 |

+

allow_origins=["*"],

|

| 33 |

+

allow_methods=["*"],

|

| 34 |

+

allow_headers=["*"]

|

| 35 |

+

)

|

| 36 |

+

|

| 37 |

+

# Serve static files and templates

|

| 38 |

+

app.mount("/static", StaticFiles(directory="static"), name="static")

|

| 39 |

+

app.mount("/fonts", StaticFiles(directory="fonts"), name="fonts")

|

| 40 |

+

templates = Jinja2Templates(directory="templates")

|

| 41 |

+

|

| 42 |

+

@app.get("/")

|

| 43 |

+

def home(request: Request):

|

| 44 |

+

return templates.TemplateResponse("index.html", {"request": request})

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

@app.post("/predict")

|

| 48 |

+

def predict(request: dict):

|

| 49 |

+

image = request["image"]

|

| 50 |

+

|

| 51 |

+

# Decode base64-encoded image

|

| 52 |

+

image = base64.b64decode(image)

|

| 53 |

+

image = Image.open(io.BytesIO(image))

|

| 54 |

+

|

| 55 |

+

# Save the image

|

| 56 |

+

image.save("image.jpg")

|

| 57 |

+

|

| 58 |

+

# Perform object detection

|

| 59 |

+

result = predict_bounding_boxes(object_detection_model, "image.jpg")

|

| 60 |

+

|

| 61 |

+

# Extract text from images

|

| 62 |

+

text_list = get_text_from_images("./bounding_box_images")

|

| 63 |

+

|

| 64 |

+

# Translate the manga

|

| 65 |

+

translated_text_list = translate_manga(text_list)

|

| 66 |

+

|

| 67 |

+

# Write translation text on image

|

| 68 |

+

translated_image = write_text(result, translated_text_list, "image.jpg")

|

| 69 |

+

|

| 70 |

+

# Convert the image to base64

|

| 71 |

+

buff = io.BytesIO()

|

| 72 |

+

translated_image.save(buff, format="JPEG")

|

| 73 |

+

img_str = base64.b64encode(buff.getvalue()).decode("utf-8")

|

| 74 |

+

|

| 75 |

+

# Clean up

|

| 76 |

+

os.remove("image.jpg")

|

| 77 |

+

os.remove("translated_image.png")

|

| 78 |

+

|

| 79 |

+

return {"image": img_str}

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

if __name__ == '__main__':

|

| 83 |

+

uvicorn.run('server:app', host='localhost', port=8000, reload=True)

|
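
The `/predict` endpoint in `server.py` takes a JSON body with a base64-encoded `image` field and returns the translated image in the same shape. A minimal client sketch for that contract follows, using only the standard library. The function names and the default URL are assumptions for illustration; the port is 8000 when the file is run directly and 7860 under the Dockerfile's `CMD`.

```python
import base64
import json
import urllib.request


def build_predict_request(image_bytes, url="http://localhost:8000/predict"):
    """Wrap raw image bytes in the JSON body that /predict expects."""
    payload = json.dumps({"image": base64.b64encode(image_bytes).decode("ascii")})
    return urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_predict_response(body):
    """Extract the translated image bytes from the JSON response body."""
    return base64.b64decode(json.loads(body)["image"])


def translate_file(path, out_path, url="http://localhost:8000/predict"):
    """Send the image at `path` to the server and save the result to `out_path`."""
    with open(path, "rb") as source:
        request = build_predict_request(source.read(), url)
    with urllib.request.urlopen(request) as response:
        translated = decode_predict_response(response.read())
    with open(out_path, "wb") as target:
        target.write(translated)
```

With the server running, `translate_file("page.jpg", "page_translated.jpg")` would write the translated page next to the original; both file names here are placeholders.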

static/index.js

ADDED

|

@@ -0,0 +1,57 @@

| 1 |

+

"use strict";

|

| 2 |

+

|

| 3 |

+

async function predict() {

|

| 4 |

+

const fileInput = document.getElementById('fileInput');

|

| 5 |

+

const translateButton = document.getElementById('translateButton');

|

| 6 |

+

const spinner = document.getElementById('spinner');

|

| 7 |

+

const inputImage = document.getElementById('inputImage');

|

| 8 |

+

const outputImage = document.getElementById('outputImage');

|

| 9 |

+

const downloadButton = document.getElementById('downloadButton');

|

| 10 |

+

|

| 11 |

+

downloadButton.style.display = 'none';

|

| 12 |

+

|

| 13 |

+

if (fileInput.files.length === 0) {

|

| 14 |

+

alert('Please select an image file.');

|

| 15 |

+

return;

|

| 16 |

+

}

|

| 17 |

+

|

| 18 |

+

const file = fileInput.files[0];

|

| 19 |

+

const reader = new FileReader();

|

| 20 |

+

|

| 21 |

+

reader.onload = function () {

|

| 22 |

+

const base64Image = reader.result.split(',')[1];

|

| 23 |

+

inputImage.src = `data:image/jpeg;base64,${base64Image}`;

|

| 24 |

+

inputImage.style.display = 'block';

|

| 25 |

+

};

|

| 26 |

+

|

| 27 |

+

reader.readAsDataURL(file);

|

| 28 |

+

|

| 29 |

+

translateButton.style.display = 'none';

|

| 30 |

+

spinner.style.display = 'block';

|

| 31 |

+

|

| 32 |

+

reader.onloadend = async function () {

|

| 33 |

+

const base64Image = reader.result.split(',')[1];

|

| 34 |

+

|

| 35 |

+

const response = await fetch('/predict', {

|

| 36 |

+

method: 'POST',

|

| 37 |

+

headers: {

|

| 38 |

+

'Content-Type': 'application/json'

|

| 39 |

+

},

|

| 40 |

+

body: JSON.stringify({ image: base64Image })

|

| 41 |

+

});

|

| 42 |

+

|

| 43 |

+

const result = await response.json();

|

| 44 |

+

if (response.status !== 200) {

|

| 45 |

+

alert(result.error);

|

| 46 |

+

return;

|

| 47 |

+

}

|

| 48 |

+

outputImage.src = `data:image/jpeg;base64,${result.image}`;

|

| 49 |

+

outputImage.style.display = 'block';

|

| 50 |

+

downloadButton.href = outputImage.src;

|

| 51 |

+

downloadButton.style.display = 'block';

|

| 52 |

+

|

| 53 |

+

translateButton.style.display = 'inline-block';

|

| 54 |

+

spinner.style.display = 'none';

|

| 55 |

+

|

| 56 |

+

};

|

| 57 |

+

}

|
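`index.js` keeps only the part of the `FileReader` result after the first comma and then labels the preview as `image/jpeg`, whatever type was actually selected. As an illustration of what that split discards, here is a small Python sketch that separates a base64 data URL into its media type and decoded bytes. The function name `split_data_url` is hypothetical, and the sketch assumes the `data:<type>;base64,<payload>` form without extra parameters.

```python
import base64
from typing import Tuple


def split_data_url(data_url: str) -> Tuple[str, bytes]:
    """Split a base64 data URL into (media type, decoded bytes)."""
    # Everything before the first comma is the header, the rest is the payload.
    header, _, payload = data_url.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("expected a base64 data URL")
    media_type = header[len("data:"):-len(";base64")]
    return media_type, base64.b64decode(payload)


media_type, raw = split_data_url("data:image/png;base64,aGVsbG8=")
assert media_type == "image/png"
assert raw == b"hello"
```

Keeping the media type around would let a caller label the preview with the real type instead of assuming JPEG.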

static/styles.css

ADDED

@@ -0,0 +1,105 @@

@font-face {
  font-family: "MangaFont";
  src: url("../fonts/mangat.ttf") format("truetype");
}

body {
  font-family: "MangaFont", Arial, sans-serif;
  text-align: center;
  background-color: #f0f0f0;
  margin: 0;
  padding: 0;
}

header {
  background-color: #4caf50;
  color: white;
  padding: 10px 0;
}

a {
  color: white;
  text-decoration: none;
}

.container {
  padding: 20px;
}

input[type="file"] {
  margin: 20px 0;
  padding: 10px;
  border: 2px solid #4caf50;
  border-radius: 5px;
  background-color: #fff;
  cursor: pointer;
  transition: border-color 0.3s;
}

input[type="file"]:hover {
  border-color: #45a049;
}

button {
  padding: 10px 20px;
  background-color: #4caf50;
  color: white;
  border: none;
  border-radius: 5px;
  cursor: pointer;
  font-size: 16px;
  transition: background-color 0.3s, transform 0.3s;
}

button:hover {
  background-color: #45a049;
  transform: scale(1.05);
}

.spinner {
  border: 16px solid #f3f3f3;
  border-top: 16px solid #4caf50;
  border-radius: 50%;
  width: 50px;
  height: 50px;
  animation: spin 2s linear infinite;
  margin: 20px auto;
}

@keyframes spin {
  0% {
    transform: rotate(0deg);
  }
  100% {
    transform: rotate(360deg);
  }
}

.images-container {
  display: flex;
  justify-content: space-around;
  margin-top: 20px;
}

.image-wrapper {
  width: 45%;
}

.image-wrapper h3 {
  margin-bottom: 10px;
}

#fileInput::file-selector-button {
  padding: 10px 20px;
  background-color: #4caf50;
  color: white;
  border: none;
  border-radius: 5px;
  cursor: pointer;
  transition: background-color 0.3s, transform 0.3s;
}

#fileInput::file-selector-button:hover {
  background-color: #45a049;
  transform: scale(1.05);
}

templates/index.html

ADDED

@@ -0,0 +1,48 @@

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Manga Translator</title>
    <link rel="stylesheet" href="/static/styles.css" />
  </head>
  <body>
    <header>
      <h1>Manga Translator</h1>
    </header>
    <div class="container">
      <input type="file" id="fileInput" accept="image/*" />
      <br />
      <button id="translateButton" onclick="predict()">Translate</button>
      <div id="spinner" class="spinner" style="display: none"></div>
      <div class="images-container">
        <div class="image-wrapper">
          <h3>Original Image</h3>
          <img
            id="inputImage"
            alt="Selected Manga"
            style="max-width: 100%"
          />
        </div>
        <div class="image-wrapper">
          <h3>Translated Image</h3>
          <img
            id="outputImage"
            alt="Translated Manga"
            style="max-width: 100%; display: none"
          />
          <button>
            <a
              id="downloadButton"
              href="#"
              download="translated_manga.jpg"
              style="display: none"
              >Download Translated Image</a
            >
          </button>
        </div>
      </div>
    </div>
    <script src="/static/index.js"></script>
  </body>
</html>