
Refactor based on changes in the GitHub project

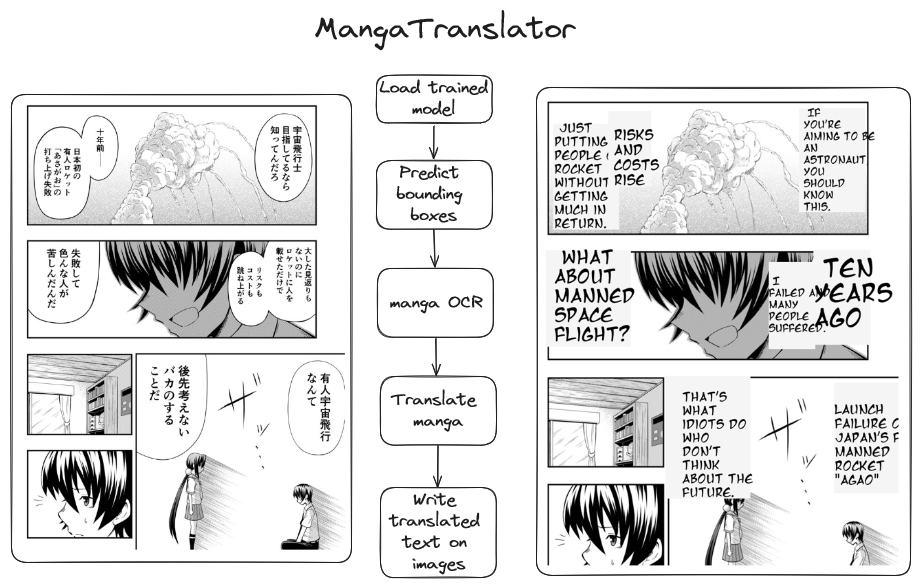

- assets/MangaTranslator.png +0 -0

- fonts/fonts_animeace_i.ttf +3 -0

- model_creation/main.ipynb +0 -0

- model_creation/translated_image.png +0 -0

- requirements.txt +2 -1

- server.py +27 -17

- utils/manga_ocr.py +5 -13

- utils/predict_bounding_boxes.py +3 -2

- utils/process_contour.py +24 -0

- utils/translate_manga.py +6 -8

- utils/write_text_on_image.py +37 -205

assets/MangaTranslator.png

CHANGED


fonts/fonts_animeace_i.ttf

ADDED

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:8a210dee89d1c9e27c5c6ebc2b8141fb9335ae751c773b137ce6b3252c72cac2
    +size 28820
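The font is committed as a Git LFS pointer rather than as the font bytes. The three added lines give the pointer spec version, the object id (a SHA-256 digest), and the file size in bytes. Below is a minimal sketch of reading such a pointer. It assumes the pointer text is already in memory, and `parse_lfs_pointer` is a hypothetical helper that is not part of the project:

```python
def parse_lfs_pointer(text: str) -> dict:
    """Read a Git LFS v1 pointer ("key value" per line) into a small dict."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        key, _, value = line.partition(" ")
        fields[key] = value.strip()
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    # The oid is "<hash algorithm>:<hex digest>"
    algorithm, _, digest = fields["oid"].partition(":")
    return {"algorithm": algorithm, "digest": digest, "size": int(fields["size"])}


pointer = (
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:8a210dee89d1c9e27c5c6ebc2b8141fb9335ae751c773b137ce6b3252c72cac2\n"
    "size 28820\n"
)
info = parse_lfs_pointer(pointer)
print(info["algorithm"], info["size"])  # sha256 28820
```

You can compare `info["size"]` with the size of the checked-out file. A mismatch suggests that only the pointer was fetched, not the real font.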

model_creation/main.ipynb

CHANGED

The diff for this file is too large to render.

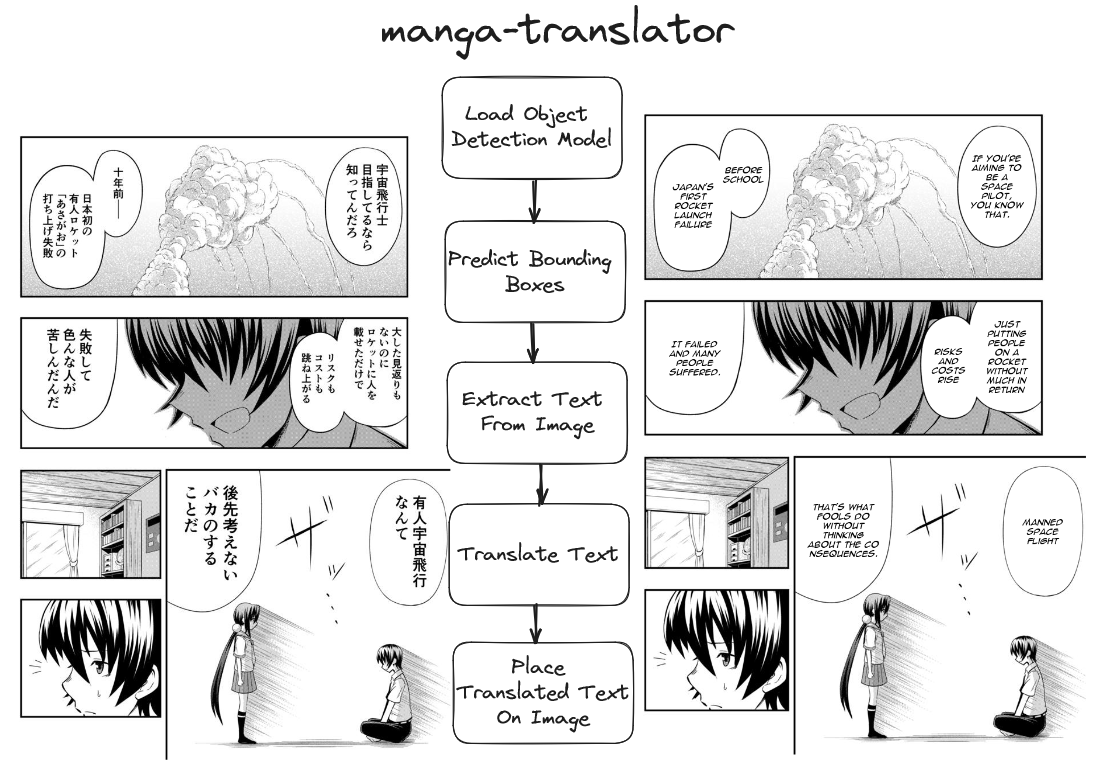

model_creation/translated_image.png

CHANGED


requirements.txt

CHANGED

    @@ -6,4 +6,5 @@ googletrans==4.0.0-rc1
     deep-translator==1.11.4
     fastapi==0.110.3
     uvicorn==0.30.0
    -
    +opencv-python==4.9.0.80
    +numpy==1.26.4

server.py

CHANGED

    @@ -5,7 +5,9 @@ This file contains the FastAPI application that serves the web interface and han
     import os
     import io
     import base64
    +from typing import Dict
     
    +import numpy as np
     from fastapi import FastAPI
     from fastapi.middleware.cors import CORSMiddleware
     from fastapi.staticfiles import StaticFiles

    @@ -16,9 +18,11 @@ import uvicorn
     from ultralytics import YOLO
     
     from utils.predict_bounding_boxes import predict_bounding_boxes
    -from utils.manga_ocr import
    +from utils.manga_ocr import get_text_from_image
     from utils.translate_manga import translate_manga
    -from utils.
    +from utils.process_contour import process_contour
    +from utils.write_text_on_image import add_text
    +
     
     # Load the object detection model
     best_model_path = "./model_creation/runs/detect/train5"

    @@ -45,36 +49,42 @@ def home(request: Request):
     
     
     @app.post("/predict")
    -def predict(request:
    +def predict(request: Dict):
         image = request["image"]
     
         # Decode base64-encoded image
         image = base64.b64decode(image)
         image = Image.open(io.BytesIO(image))
    +    image_path = "image.png"
    +    translated_image_path = "translated_image.png"
     
    -    # Save the image
    -    image.save(
    -
    -    # Perform object detection
    -    result = predict_bounding_boxes(object_detection_model, "image.jpg")
    +    # Save the image locally
    +    image.save(image_path)
     
    -
    -
    +    results = predict_bounding_boxes(object_detection_model, image_path)
    +    image = np.array(image)
     
    -
    -
    +    for result in results:
    +        x1, y1, x2, y2, _, _ = result
    +        detected_image = image[int(y1):int(y2), int(x1):int(x2)]
    +        im = Image.fromarray(np.uint8((detected_image)*255))
    +        text = get_text_from_image(im)
    +        detected_image, cont = process_contour(detected_image)
    +        text_translated = translate_manga(text)
    +        add_text(detected_image, text_translated, cont)
     
    -    #
    -
    +    # Display the translated image
    +    result_image = Image.fromarray(image, 'RGB')
    +    result_image.save(translated_image_path)
     
         # Convert the image to base64
         buff = io.BytesIO()
    -
    +    result_image.save(buff, format="PNG")
         img_str = base64.b64encode(buff.getvalue()).decode("utf-8")
     
         # Clean up
    -    os.remove(
    -    os.remove(
    +    os.remove(image_path)
    +    os.remove(translated_image_path)
     
         return {"image": img_str}
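The rewritten `/predict` endpoint takes a JSON body whose `image` field is a base64 string and decodes it with `base64.b64decode`. It answers with `{"image": img_str}`, where `img_str` is the translated PNG re-encoded as base64. Here is a client-side sketch of that contract. The helper names are hypothetical, and no HTTP request is made:

```python
import base64
import json


def build_predict_payload(image_bytes: bytes) -> str:
    """JSON body for /predict: {"image": <base64 of the raw image file>}."""
    return json.dumps({"image": base64.b64encode(image_bytes).decode("utf-8")})


def read_predict_response(body: str) -> bytes:
    """Recover the PNG bytes from a {"image": <base64>} response body."""
    return base64.b64decode(json.loads(body)["image"])


# Request and response share the same {"image": ...} shape, so a payload can be
# round-tripped locally to check the encoding without a running server.
sample = b"\x89PNG\r\n\x1a\n placeholder bytes"
body = build_predict_payload(sample)
assert read_predict_response(body) == sample
print(json.loads(build_predict_payload(b"abc")))  # {'image': 'YWJj'}
```

With the server running, an HTTP client would POST the string returned by `build_predict_payload` to `/predict` as a JSON body.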

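One detail in the new loop deserves a check. `detected_image` is a slice of `np.array(image)`, and for an RGB PIL image that array already holds `uint8` values from 0 to 255. If so, `np.uint8((detected_image)*255)` does not rescale the crop. NumPy keeps the `uint8` dtype and the product wraps modulo 256, so the OCR step receives an almost inverted crop: white (255) becomes 1, while pure black stays 0. The wrap-around can be reproduced with plain integers. `uint8_times_255` is an illustrative helper:

```python
def uint8_times_255(value: int) -> int:
    """What NumPy computes for a uint8 value times 255: the product modulo 256."""
    return (value * 255) % 256


for v in (0, 1, 128, 254, 255):
    print(v, "->", uint8_times_255(v))
# 0 -> 0
# 1 -> 255
# 128 -> 128
# 254 -> 2
# 255 -> 1
```

Under the same assumption, `Image.fromarray(detected_image)` would pass the crop to `get_text_from_image` unchanged.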

utils/manga_ocr.py

CHANGED

    @@ -1,22 +1,14 @@
     """
    -This module is used to extract text from images using
    +This module is used to extract text from images using manga_ocr.
     """
     
    -import os
    -from typing import List
     from manga_ocr import MangaOcr
     
    -
    +
    +def get_text_from_image(image):
         """
    -    Extract text from images using
    +    Extract text from images using manga_ocr.
         """
         mocr = MangaOcr()
     
    -
    -
    -    for image_path in os.listdir(bounding_box_images_path):
    -        image_path = os.path.join(bounding_box_images_path, image_path)
    -        text = mocr(image_path)
    -        text_list.append(text)
    -
    -    return text_list
    +    return mocr(image)
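`get_text_from_image` now builds a new `MangaOcr()` on every call. The server calls it once per detected text box, so the model is constructed several times for a single page. A common alternative is to create the model once and reuse it. The sketch below shows that pattern with `functools.lru_cache`. `FakeOcr` is a hypothetical stand-in for `MangaOcr`, so the example runs without loading the real model:

```python
from functools import lru_cache


class FakeOcr:
    """Hypothetical stand-in for manga_ocr.MangaOcr (callable on an image)."""

    instances = 0  # counts how many times the "model" was built

    def __init__(self) -> None:
        FakeOcr.instances += 1

    def __call__(self, image) -> str:
        return f"text from {image}"


@lru_cache(maxsize=1)
def get_ocr() -> FakeOcr:
    # Built on the first call only; later calls return the cached object.
    # In the real module this line would construct MangaOcr() instead.
    return FakeOcr()


def get_text_from_image(image) -> str:
    return get_ocr()(image)


for crop in ("bubble-1", "bubble-2", "bubble-3"):
    print(get_text_from_image(crop))
print("models built:", FakeOcr.instances)  # models built: 1
```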

utils/predict_bounding_boxes.py

CHANGED

    @@ -4,10 +4,11 @@ in images using the trained Object Detection model.
     """
     import uuid
     import os
    +from typing import List
     from PIL import Image
     from ultralytics import YOLO
     
    -def predict_bounding_boxes(model: YOLO, image_path: str) ->
    +def predict_bounding_boxes(model: YOLO, image_path: str) -> List:
         """
         Predict bounding boxes for text in images using the trained Object Detection model.
         """

    @@ -36,4 +37,4 @@ def predict_bounding_boxes(model: YOLO, image_path: str) -> YOLO:
             # save each image under a unique name
             cropped_image.save(f"{bounding_box_images_path}/{uuid.uuid4()}.png")
     
    -    return result
    +    return result.boxes.data.tolist()
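`predict_bounding_boxes` now returns `result.boxes.data.tolist()`. `server.py` unpacks each row as `x1, y1, x2, y2, _, _`: the corner coordinates followed by two more values, which in Ultralytics are the confidence score and the class index. Below is a sketch of turning such rows into integer crop boxes, with a hypothetical confidence filter and defensive clipping. Clipping only matters if a box ever extends past the image, because NumPy reads a negative slice start as an offset from the end:

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]


def rows_to_crop_boxes(rows: List[List[float]], width: int, height: int,
                       min_conf: float = 0.0) -> List[Box]:
    """Convert [x1, y1, x2, y2, conf, cls] rows into integer boxes inside the image.

    `min_conf` is a hypothetical extra filter; server.py keeps every row.
    """
    boxes: List[Box] = []
    for x1, y1, x2, y2, conf, _cls in rows:
        if conf < min_conf:
            continue
        # int() truncates like image[int(y1):int(y2), int(x1):int(x2)] in server.py;
        # the values are then clamped to the image so slicing never wraps around.
        left, top = max(0, int(x1)), max(0, int(y1))
        right, bottom = min(width, int(x2)), min(height, int(y2))
        if right > left and bottom > top:  # drop empty boxes
            boxes.append((left, top, right, bottom))
    return boxes


rows = [[12.7, 30.2, 118.9, 95.5, 0.91, 0.0],
        [-3.4, 5.0, 40.0, 60.0, 0.40, 0.0]]
print(rows_to_crop_boxes(rows, width=100, height=200))
# [(12, 30, 100, 95), (0, 5, 40, 60)]
print(rows_to_crop_boxes(rows, width=100, height=200, min_conf=0.5))
# [(12, 30, 100, 95)]
```

Each tuple can then be applied as `image[top:bottom, left:right]`, which matches the rows-then-columns order used in `server.py`.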

utils/process_contour.py

ADDED

    @@ -0,0 +1,24 @@
    +"""
    +This module contains the function to process the contour in the image.
    +"""
    +from typing import Tuple
    +import cv2
    +import numpy as np
    +
    +
    +def process_contour(image: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    +    """
    +    Process the contour in the image.
    +    """
    +    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    +    _, thresh = cv2.threshold(gray, 240, 255, cv2.THRESH_BINARY)
    +
    +    contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    +    largest_contour = max(contours, key=cv2.contourArea)
    +
    +    mask = np.zeros_like(gray)
    +    cv2.drawContours(mask, [largest_contour], -1, 255, cv2.FILLED)
    +
    +    image[mask == 255] = (255, 255, 255)
    +
    +    return image, largest_contour
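`process_contour` works in four steps:

1. It thresholds the grayscale crop at 240.
2. It takes the largest external contour of the near-white pixels, normally the speech-bubble interior.
3. It fills that contour on a mask.
4. It paints every masked pixel white, which erases the lettering enclosed by the bubble.

The sketch below reproduces the idea in plain Python so each step is visible. It swaps `cv2.findContours` for a 4-connected flood fill and compares regions by pixel count rather than by `cv2.contourArea`, so results can differ slightly at the edges:

```python
from collections import deque
from typing import Callable, List, Set, Tuple

Grid = List[List[int]]
Pixel = Tuple[int, int]


def flood(start: Pixel, allowed: Callable[[int, int], bool], h: int, w: int) -> Set[Pixel]:
    """4-connected breadth-first fill from `start` over pixels where allowed() is True."""
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < h and 0 <= nc < w and (nr, nc) not in seen and allowed(nr, nc):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def whiten_bubble(gray: Grid, thresh: int = 240) -> Grid:
    h, w = len(gray), len(gray[0])

    def bright(r: int, c: int) -> bool:
        return gray[r][c] > thresh  # same test as cv2.THRESH_BINARY

    # Step 1: largest connected bright region (stand-in for the largest contour).
    largest: Set[Pixel] = set()
    visited: Set[Pixel] = set()
    for r in range(h):
        for c in range(w):
            if bright(r, c) and (r, c) not in visited:
                region = flood((r, c), bright, h, w)
                visited |= region
                if len(region) > len(largest):
                    largest = region

    # Step 2: pixels reachable from the border without entering the region are
    # outside; the rest (region plus enclosed holes such as letters) is "filled".
    def not_region(r: int, c: int) -> bool:
        return (r, c) not in largest

    outside: Set[Pixel] = set()
    for r in range(h):
        for c in range(w):
            on_border = r in (0, h - 1) or c in (0, w - 1)
            if on_border and not_region(r, c) and (r, c) not in outside:
                outside |= flood((r, c), not_region, h, w)

    # Step 3: paint the filled area white, like image[mask == 255] = (255, 255, 255).
    return [[gray[r][c] if (r, c) in outside else 255 for c in range(w)] for r in range(h)]


crop = [
    [0,   0,   0,   0,   0, 0],
    [0, 255, 255, 255, 255, 0],
    [0, 255,  20,  30, 255, 0],
    [0, 255, 255, 255, 255, 0],
    [0,   0,   0,   0,   0, 0],
]
for row in whiten_bubble(crop):
    print(row)
```

The OpenCV version calls `max()` on the contour list, so it fails when no pixel exceeds the threshold. In that case this sketch returns the grid unchanged.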

utils/translate_manga.py

CHANGED

    @@ -1,17 +1,15 @@
     """
     This module is used to translate manga from Japanese to English.
     """
    -
    +
     from deep_translator import GoogleTranslator
     
    -def translate_manga(
    +def translate_manga(text: str) -> str:
         """
         Translate manga from Japanese to English.
         """
    -
    -
    -
    -        print("Translated text:", translated_text)
    -        text_list[i] = translated_text
    +    translated_text = GoogleTranslator(source="ja", target="en").translate(text)
    +    print("Original text:", text)
    +    print("Translated text:", translated_text)
     
    -    return
    +    return translated_text
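`translate_manga` now translates one string per call with `GoogleTranslator(source="ja", target="en")`, which means one network request per text box. Below is a sketch of a wrapper that skips empty OCR results and keeps the Japanese text when translation fails. The translator is passed in as a function so the example runs offline, and `translate_or_keep` is a hypothetical name:

```python
from typing import Callable

Translator = Callable[[str], str]


def translate_or_keep(text: str, translate: Translator) -> str:
    """Translate OCR output, falling back to the original text.

    `translate` is injected; in the project it could be
    GoogleTranslator(source="ja", target="en").translate.
    """
    if not text or not text.strip():
        return text  # empty bubble: nothing to send to the service
    try:
        translated = translate(text)
    except Exception:  # broad on purpose: network/service errors keep the source text
        return text
    return translated or text  # treat an empty or None result as a failure too


offline = {"こんにちは": "Hello"}.__getitem__  # stand-in translator
print(translate_or_keep("こんにちは", offline))  # Hello
print(translate_or_keep("未知", offline))  # 未知 (KeyError, so the original is kept)
```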

utils/write_text_on_image.py

CHANGED

    @@ -1,227 +1,59 @@
     """
    -This module
    +This module contains a function to add text to an image with a bounding box.
     """
    -import
    -from
    -
    -
    -from ultralytics import YOLO
    +import textwrap
    +from PIL import Image, ImageDraw, ImageFont
    +import numpy as np
    +import cv2
     
     
    -def
    +def add_text(image: np.ndarray, text: str, contour: np.ndarray):
         """
    -
    +    Add text to an image with a bounding box.
         """
     
    -
    -
    -
    -    box = None # For version 8.0.0
    -    x = 0
    -    y = 0
    +    font_path = "./fonts/fonts_animeace_i.ttf"
    +    pil_image = Image.fromarray(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    +    draw = ImageDraw.Draw(pil_image)
     
    -
    +    x, y, w, h = cv2.boundingRect(contour)
     
    -
    -
    +    line_height = 16
    +    font_size = 14
    +    wrapping_ratio = 0.075
     
    -
    -
    +    wrapped_text = textwrap.fill(text, width=int(w * wrapping_ratio),
    +                                 break_long_words=True)
     
    -
    -        new_box = draw.textbbox((0, 0), text, font=new_font)
    +    font = ImageFont.truetype(font_path, size=font_size)
     
    -
    -
    -        new_h = new_box[3] - new_box[1] # Right - Left
    +    lines = wrapped_text.split('\n')
    +    total_text_height = (len(lines)) * line_height
     
    -
    -
    -
    +    while total_text_height > h:
    +        line_height -= 2
    +        font_size -= 2
    +        wrapping_ratio += 0.025
     
    -
    -
    -            font = new_font
    -            box = new_box
    -            w = new_w
    -            h = new_h
    +        wrapped_text = textwrap.fill(text, width=int(w * wrapping_ratio),
    +                                     break_long_words=True)
     
    -
    -    x = (width - w) // 2 - box[0] # Minus left margin
    -    y = (height - h) // 2 - box[1] # Minus top margin
    +        font = ImageFont.truetype(font_path, size=font_size)
     
    -
    +        lines = wrapped_text.split('\n')
    +        total_text_height = (len(lines)) * line_height
     
    +    # Vertical centering
    +    text_y = y + (h - total_text_height) // 2
     
    -
    -
    -    Add discoloration to the background color.
    -    """
    -
    -    r, g, b = color
    -    r = max(0, min(255, r + strength))
    -    g = max(0, min(255, g + strength))
    -    b = max(0, min(255, b + strength))
    -
    -    if r == 255 and g == 255 and b == 255:
    -        r, g, b = 245, 245, 245
    -
    -    return (r, g, b)
    -
    -
    -def get_background_color(image: Image, x_min: float,
    -                         y_min: float, x_max: float, y_max: float) -> Tuple[int, int, int]:
    -    """
    -    Determine the background color of the text box.
    -    """
    -
    -    margin = 10
    -    edge_region = image.crop(
    -        (x_min - margin, y_min - margin, x_max + margin, y_max + margin))
    -
    -    # Find the most common color in the cropped region
    -    edge_colors = edge_region.getcolors(
    -        edge_region.size[0] * edge_region.size[1])
    -    background_color = max(edge_colors, key=lambda x: x[0])[1]
    -
    -    # Add a bit of discoloration to the background color
    -    background_color = add_discoloration(background_color, 40)
    -
    -    return background_color
    -
    -
    -def get_text_fill_color(background_color: Tuple[int, int, int]):
    -    """
    -    Determine the text color based on the background color.
    -    """
    -    luminance = (
    -        0.299 * background_color[0]
    -        + 0.587 * background_color[1]
    -        + 0.114 * background_color[2]
    -    ) / 255
    -
    -    # Determine the text color based on the background luminance
    -    if luminance > 0.5:
    -        return "black"
    -    else:
    -        return "white"
    -
    -
    -def translated_words_fit(translated_text: str, font: ImageFont,
    -                         width: float, draw: ImageDraw) -> Tuple[bool, float]:
    -    """
    -    Check if the translated words fit within the bounding box.
    -    """
    -    words = translated_text.split()
    -    total_width = 0
    -
    -    for word in words:
    -        bbox = draw.textbbox((0, 0), word, font=font)
    -        word_width = bbox[2] - bbox[0]
    -        total_width += word_width
    -
    -    return total_width <= width, total_width
    -
    -
    -def recalculate_words_and_size(translated_text: List, font: ImageFont,
    -                               image: Image, total_width: float,
    -                               draw: ImageDraw, x_min: float, y_min: float,
    -                               x_max: float, y_max: float) -> List:
    -    """
    -    Recalculate the words and font size to fit within the bounding box.
    -    """
    -    words = translated_text.split()
    -    even_split = total_width // len(words)
    -    lines = []
    -    current_line = ""
    -
    -    for word in words:
    -        bbox = draw.textbbox((0, 0), word, font=font)
    -        word_width = bbox[2] - bbox[0]
    -        if len(current_line) + word_width > even_split:
    -            lines.append(current_line)
    -            current_line = word
    -        else:
    -            current_line += " " + word
    -    lines.append(current_line)
    -    translated_text = "\n".join(lines)
    -
    -    # Calculate font size and position again
    -    font, x, y = get_font(image, translated_text, x_max - x_min, y_max - y_min)
    -    return translated_text, font, x, y
    -
    -
    -def replace_text_with_translation(image: Image, text_boxes: List, translated_texts: List) -> Image:
    -    """
    -    Replace the text in the bounding boxes with the translated text.
    -    """
    -
    -    draw = ImageDraw.Draw(image)
    -
    -    for text_box, translated_text in zip(text_boxes, translated_texts):
    -        x_min, y_min, x_max, y_max = text_box
    -
    -        if translated_text is None:
    -            continue
    -
    -        # Find the most common color in the text region
    -        background_color = get_background_color(
    -            image, x_min, y_min, x_max, y_max)
    -
    -        # Draw a rectangle to cover the text region with the original background color
    -        draw.rectangle(((x_min, y_min), (x_max, y_max)), fill=background_color)
    -
    -        # Calculate font size and position
    -        font, x, y = get_font(image, translated_text,
    -                              x_max - x_min, y_max - y_min)
    -
    -        # Split the words if they are too long
    -        fits, total_width = translated_words_fit(
    -            translated_text, font, x_max - x_min, draw)
    -
    -        if not fits or font.size < 30:
    -            translated_text, font, x, y = recalculate_words_and_size(
    -                translated_text, font, image, total_width, draw, x_min, y_min, x_max, y_max)
    -
    -        # Use local font
    -        # Get full path to the font file
    -        font_path = os.path.join(os.path.dirname(__file__), "../fonts/mangat.ttf")
    -        font = ImageFont.truetype(
    -            font=font_path, size=font.size, encoding="unic")
    -
    -        # Draw the translated text within the box
    -        draw.text(
    -            (x_min + x, y_min + y),
    -            translated_text,
    -            fill=get_text_fill_color(background_color),
    -            font=font,
    -        )
    -
    -    return image
    -
    -
    -def write_text(result: YOLO, text_list: List[str], image_path: str) -> None:
    -    """
    -    Replace the text in the bounding boxes with the translated text.
    -    """
    -
    -    all_boxes = []
    -    all_translated_texts = []
    -
    -    for i, box in enumerate(result.boxes):
    -        coords = [round(x) for x in box.xyxy[0].tolist()]
    -        text = text_list[i]
    -
    -        all_boxes.append(coords)
    -        all_translated_texts.append(text)
    +    for line in lines:
    +        text_length = draw.textlength(line, font=font)
     
    -
    +        # Horizontal centering
    +        text_x = x + (w - text_length) // 2
     
    -
    -    image = replace_text_with_translation(
    -        image, all_boxes, all_translated_texts)
    +        draw.text((text_x, text_y), line, font=font, fill=(0, 0, 0))
     
    -
    -    image.save("translated_image.png")
    +        text_y += line_height
     
    -
    +    image[:, :, :] = cv2.cvtColor(np.array(pil_image), cv2.COLOR_RGB2BGR)
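The new `add_text` fits the translation into the bubble's bounding rectangle. It starts at `font_size = 14`, `line_height = 16` and `wrapping_ratio = 0.075`. While the wrapped block is taller than the box, it shrinks the font size and line height by 2 each and widens the wrap ratio by 0.025. That loop has two gaps:

- It has no lower bound, so a box that never fits drives `font_size` to zero or below.
- `int(w * wrapping_ratio)` is 0 for boxes narrower than 14 px, and `textwrap` rejects a width of 0.

Below is a pure-Python sketch of the same layout arithmetic with both guards added. `fit_text`, `Layout` and `min_font_size` are hypothetical names:

```python
import textwrap
from dataclasses import dataclass
from typing import List


@dataclass
class Layout:
    font_size: int
    line_height: int
    lines: List[str]
    top: int  # offset of the first line from the top of the box (vertical centering)


def fit_text(text: str, box_w: int, box_h: int, font_size: int = 14,
             line_height: int = 16, wrapping_ratio: float = 0.075,
             min_font_size: int = 6) -> Layout:
    """Shrink the font and widen the wrap until the text block fits box_h.

    Same starting values and step sizes as add_text, plus two guards:
    a hypothetical min_font_size floor and a wrap width of at least 1.
    """
    def wrap(ratio: float) -> List[str]:
        # Wrap width is a character count derived from the pixel width;
        # textwrap raises ValueError for widths below 1, hence max(1, ...).
        width = max(1, int(box_w * ratio))
        return textwrap.fill(text, width=width, break_long_words=True).split("\n")

    lines = wrap(wrapping_ratio)
    while len(lines) * line_height > box_h and font_size - 2 >= min_font_size:
        font_size -= 2
        line_height -= 2
        wrapping_ratio += 0.025
        lines = wrap(wrapping_ratio)

    top = (box_h - len(lines) * line_height) // 2
    return Layout(font_size, line_height, lines, top)


layout = fit_text("I never thought we would meet again like this", box_w=150, box_h=40)
print(layout.font_size, layout.line_height, layout.top)
print("\n".join(layout.lines))
```

`top` corresponds to `text_y - y` in `add_text`. The sketch leaves out horizontal centering, because `add_text` computes it from pixel widths returned by `draw.textlength`, which needs a loaded font.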