Spaces:

Running

on

T4

Running

on

T4

Commit

·

561c629

1

Parent(s):

193b9cd

feat: initial push

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +30 -0

- __assets__/logo.png +0 -0

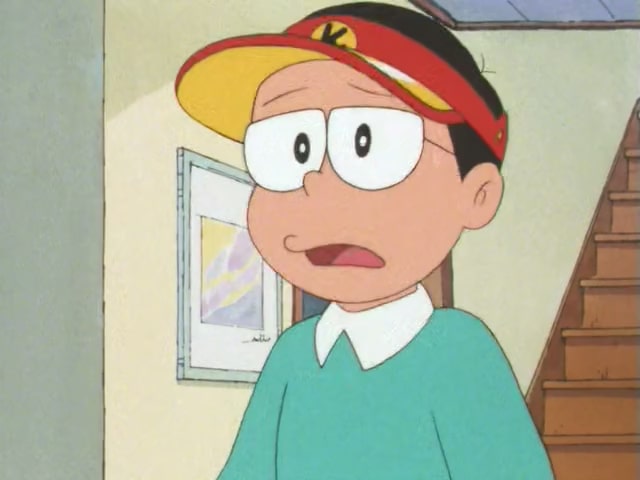

- __assets__/lr_inputs/41.png +0 -0

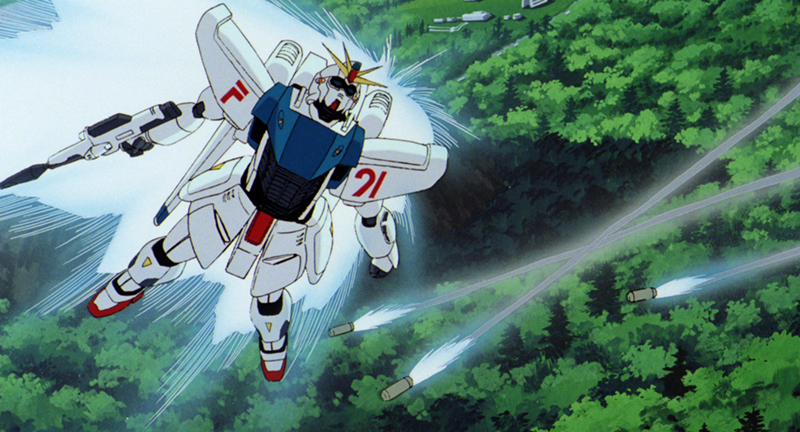

- __assets__/lr_inputs/f91.jpg +0 -0

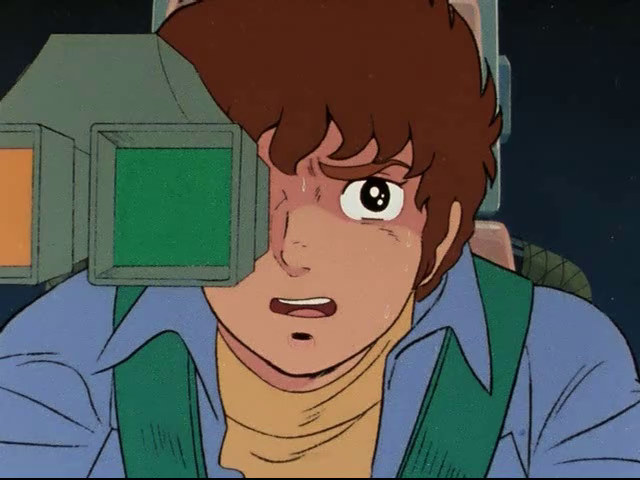

- __assets__/lr_inputs/image-00164.jpg +0 -0

- __assets__/lr_inputs/image-00186.png +0 -0

- __assets__/lr_inputs/image-00277.png +0 -0

- __assets__/lr_inputs/image-00440.png +0 -0

- __assets__/lr_inputs/image-00542.png +0 -0

- __assets__/lr_inputs/img_eva.jpeg +0 -0

- __assets__/lr_inputs/screenshot_resize.jpg +0 -0

- __assets__/visual_results/0079_2_visual.png +0 -0

- __assets__/visual_results/0079_visual.png +0 -0

- __assets__/visual_results/eva_visual.png +0 -0

- __assets__/visual_results/f91_visual.png +0 -0

- __assets__/visual_results/kiteret_visual.png +0 -0

- __assets__/visual_results/pokemon2_visual.png +0 -0

- __assets__/visual_results/pokemon_visual.png +0 -0

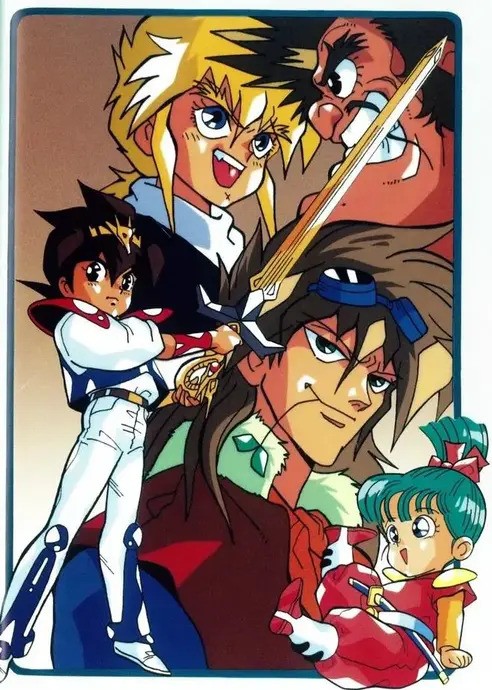

- __assets__/visual_results/wataru_visual.png +0 -0

- __assets__/workflow.png +0 -0

- app.py +117 -0

- architecture/cunet.py +189 -0

- architecture/dataset.py +106 -0

- architecture/discriminator.py +241 -0

- architecture/grl.py +616 -0

- architecture/grl_common/__init__.py +8 -0

- architecture/grl_common/common_edsr.py +227 -0

- architecture/grl_common/mixed_attn_block.py +1126 -0

- architecture/grl_common/mixed_attn_block_efficient.py +568 -0

- architecture/grl_common/ops.py +551 -0

- architecture/grl_common/resblock.py +61 -0

- architecture/grl_common/swin_v1_block.py +602 -0

- architecture/grl_common/swin_v2_block.py +306 -0

- architecture/grl_common/upsample.py +50 -0

- architecture/rrdb.py +218 -0

- architecture/swinir.py +874 -0

- dataset_curation_pipeline/IC9600/ICNet.py +151 -0

- dataset_curation_pipeline/IC9600/gene.py +113 -0

- dataset_curation_pipeline/collect.py +222 -0

- degradation/ESR/degradation_esr_shared.py +180 -0

- degradation/ESR/degradations_functionality.py +785 -0

- degradation/ESR/diffjpeg.py +517 -0

- degradation/ESR/usm_sharp.py +114 -0

- degradation/ESR/utils.py +126 -0

- degradation/degradation_esr.py +110 -0

- degradation/image_compression/avif.py +88 -0

- degradation/image_compression/heif.py +90 -0

- degradation/image_compression/jpeg.py +68 -0

- degradation/image_compression/webp.py +65 -0

- degradation/video_compression/h264.py +73 -0

.gitignore

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

datasets/*

|

| 2 |

+

.ipynb_checkpoints

|

| 3 |

+

.idea

|

| 4 |

+

__pycache__

|

| 5 |

+

|

| 6 |

+

datasets/

|

| 7 |

+

tmp_imgs

|

| 8 |

+

runs/

|

| 9 |

+

runs_last/

|

| 10 |

+

saved_models/*

|

| 11 |

+

saved_models/

|

| 12 |

+

pre_trained/

|

| 13 |

+

save_log/*

|

| 14 |

+

tmp/*

|

| 15 |

+

|

| 16 |

+

*.pyc

|

| 17 |

+

*.pth

|

| 18 |

+

*.png

|

| 19 |

+

*.jpg

|

| 20 |

+

*.mp4

|

| 21 |

+

*.txt

|

| 22 |

+

*.json

|

| 23 |

+

*.zip

|

| 24 |

+

*.mp4

|

| 25 |

+

*.csv

|

| 26 |

+

|

| 27 |

+

!__assets__/lr_inputs/*

|

| 28 |

+

!__assets__/*

|

| 29 |

+

!__assets__/visual_results/*

|

| 30 |

+

!requirements.txt

|

__assets__/logo.png

ADDED

|

__assets__/lr_inputs/41.png

ADDED

|

__assets__/lr_inputs/f91.jpg

ADDED

|

__assets__/lr_inputs/image-00164.jpg

ADDED

|

__assets__/lr_inputs/image-00186.png

ADDED

|

__assets__/lr_inputs/image-00277.png

ADDED

|

__assets__/lr_inputs/image-00440.png

ADDED

|

__assets__/lr_inputs/image-00542.png

ADDED

|

__assets__/lr_inputs/img_eva.jpeg

ADDED

|

__assets__/lr_inputs/screenshot_resize.jpg

ADDED

|

__assets__/visual_results/0079_2_visual.png

ADDED

|

__assets__/visual_results/0079_visual.png

ADDED

|

__assets__/visual_results/eva_visual.png

ADDED

|

__assets__/visual_results/f91_visual.png

ADDED

|

__assets__/visual_results/kiteret_visual.png

ADDED

|

__assets__/visual_results/pokemon2_visual.png

ADDED

|

__assets__/visual_results/pokemon_visual.png

ADDED

|

__assets__/visual_results/wataru_visual.png

ADDED

|

__assets__/workflow.png

ADDED

|

app.py

ADDED

|

@@ -0,0 +1,117 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os, sys

|

| 2 |

+

import cv2

|

| 3 |

+

import gradio as gr

|

| 4 |

+

import torch

|

| 5 |

+

import numpy as np

|

| 6 |

+

from torchvision.utils import save_image

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

# Import files from the local folder

|

| 10 |

+

root_path = os.path.abspath('.')

|

| 11 |

+

sys.path.append(root_path)

|

| 12 |

+

from test_code.inference import super_resolve_img

|

| 13 |

+

from test_code.test_utils import load_grl, load_rrdb

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def auto_download_if_needed(weight_path):

|

| 17 |

+

if os.path.exists(weight_path):

|

| 18 |

+

return

|

| 19 |

+

|

| 20 |

+

if not os.path.exists("pretrained"):

|

| 21 |

+

os.makedirs("pretrained")

|

| 22 |

+

|

| 23 |

+

if weight_path == "pretrained/4x_APISR_GRL_GAN_generator.pth":

|

| 24 |

+

os.system("wget https://github.com/Kiteretsu77/APISR/releases/download/v0.1.0/4x_APISR_GRL_GAN_generator.pth")

|

| 25 |

+

os.system("mv 4x_APISR_GRL_GAN_generator.pth pretrained")

|

| 26 |

+

|

| 27 |

+

if weight_path == "pretrained/2x_APISR_RRDB_GAN_generator.pth":

|

| 28 |

+

os.system("wget https://github.com/Kiteretsu77/APISR/releases/download/v0.1.0/2x_APISR_RRDB_GAN_generator.pth")

|

| 29 |

+

os.system("mv 2x_APISR_RRDB_GAN_generator.pth pretrained")

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

def inference(img_path, model_name):

|

| 34 |

+

|

| 35 |

+

try:

|

| 36 |

+

weight_dtype = torch.float32

|

| 37 |

+

|

| 38 |

+

# Load the model

|

| 39 |

+

if model_name == "4xGRL":

|

| 40 |

+

weight_path = "pretrained/4x_APISR_GRL_GAN_generator.pth"

|

| 41 |

+

auto_download_if_needed(weight_path)

|

| 42 |

+

generator = load_grl(weight_path, scale=4) # Directly use default way now

|

| 43 |

+

|

| 44 |

+

elif model_name == "2xRRDB":

|

| 45 |

+

weight_path = "pretrained/2x_APISR_RRDB_GAN_generator.pth"

|

| 46 |

+

auto_download_if_needed(weight_path)

|

| 47 |

+

generator = load_rrdb(weight_path, scale=2) # Directly use default way now

|

| 48 |

+

|

| 49 |

+

else:

|

| 50 |

+

raise gr.Error(error)

|

| 51 |

+

|

| 52 |

+

generator = generator.to(dtype=weight_dtype)

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

# In default, we will automatically use crop to match 4x size

|

| 56 |

+

super_resolved_img = super_resolve_img(generator, img_path, output_path=None, weight_dtype=weight_dtype, crop_for_4x=True)

|

| 57 |

+

save_image(super_resolved_img, "SR_result.png")

|

| 58 |

+

outputs = cv2.imread("SR_result.png")

|

| 59 |

+

outputs = cv2.cvtColor(outputs, cv2.COLOR_RGB2BGR)

|

| 60 |

+

|

| 61 |

+

return outputs

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

except Exception as error:

|

| 65 |

+

raise gr.Error(f"global exception: {error}")

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

if __name__ == '__main__':

|

| 70 |

+

|

| 71 |

+

MARKDOWN = \

|

| 72 |

+

"""

|

| 73 |

+

## APISR: Anime Production Inspired Real-World Anime Super-Resolution (CVPR 2024)

|

| 74 |

+

|

| 75 |

+

[GitHub](https://github.com/Kiteretsu77/APISR) | [Paper](https://arxiv.org/abs/2403.01598)

|

| 76 |

+

|

| 77 |

+

If APISR is helpful for you, please help star the GitHub Repo. Thanks!

|

| 78 |

+

"""

|

| 79 |

+

|

| 80 |

+

block = gr.Blocks().queue()

|

| 81 |

+

with block:

|

| 82 |

+

with gr.Row():

|

| 83 |

+

gr.Markdown(MARKDOWN)

|

| 84 |

+

with gr.Row(elem_classes=["container"]):

|

| 85 |

+

with gr.Column(scale=2):

|

| 86 |

+

input_image = gr.Image(type="filepath", label="Input")

|

| 87 |

+

model_name = gr.Dropdown(

|

| 88 |

+

[

|

| 89 |

+

"2xRRDB",

|

| 90 |

+

"4xGRL"

|

| 91 |

+

],

|

| 92 |

+

type="value",

|

| 93 |

+

value="4xGRL",

|

| 94 |

+

label="model",

|

| 95 |

+

)

|

| 96 |

+

run_btn = gr.Button(value="Submit")

|

| 97 |

+

|

| 98 |

+

with gr.Column(scale=3):

|

| 99 |

+

output_image = gr.Image(type="numpy", label="Output image")

|

| 100 |

+

|

| 101 |

+

with gr.Row(elem_classes=["container"]):

|

| 102 |

+

gr.Examples(

|

| 103 |

+

[

|

| 104 |

+

["__assets__/lr_inputs/image-00277.png"],

|

| 105 |

+

["__assets__/lr_inputs/image-00542.png"],

|

| 106 |

+

["__assets__/lr_inputs/41.png"],

|

| 107 |

+

["__assets__/lr_inputs/f91.jpg"],

|

| 108 |

+

["__assets__/lr_inputs/image-00440.png"],

|

| 109 |

+

["__assets__/lr_inputs/image-00164.png"],

|

| 110 |

+

["__assets__/lr_inputs/img_eva.jpeg"],

|

| 111 |

+

],

|

| 112 |

+

[input_image],

|

| 113 |

+

)

|

| 114 |

+

|

| 115 |

+

run_btn.click(inference, inputs=[input_image, model_name], outputs=[output_image])

|

| 116 |

+

|

| 117 |

+

block.launch()

|

architecture/cunet.py

ADDED

|

@@ -0,0 +1,189 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Github Repository: https://github.com/bilibili/ailab/blob/main/Real-CUGAN/README_EN.md

|

| 2 |

+

# Code snippet (with certain modificaiton) from: https://github.com/bilibili/ailab/blob/main/Real-CUGAN/VapourSynth/upcunet_v3_vs.py

|

| 3 |

+

|

| 4 |

+

import torch

|

| 5 |

+

from torch import nn as nn

|

| 6 |

+

from torch.nn import functional as F

|

| 7 |

+

import os, sys

|

| 8 |

+

import numpy as np

|

| 9 |

+

from time import time as ttime, sleep

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class UNet_Full(nn.Module):

|

| 13 |

+

|

| 14 |

+

def __init__(self):

|

| 15 |

+

super(UNet_Full, self).__init__()

|

| 16 |

+

self.unet1 = UNet1(3, 3, deconv=True)

|

| 17 |

+

self.unet2 = UNet2(3, 3, deconv=False)

|

| 18 |

+

|

| 19 |

+

def forward(self, x):

|

| 20 |

+

n, c, h0, w0 = x.shape

|

| 21 |

+

|

| 22 |

+

ph = ((h0 - 1) // 2 + 1) * 2

|

| 23 |

+

pw = ((w0 - 1) // 2 + 1) * 2

|

| 24 |

+

x = F.pad(x, (18, 18 + pw - w0, 18, 18 + ph - h0), 'reflect') # In order to ensure that it can be divided by 2

|

| 25 |

+

|

| 26 |

+

x1 = self.unet1(x)

|

| 27 |

+

x2 = self.unet2(x1)

|

| 28 |

+

|

| 29 |

+

x1 = F.pad(x1, (-20, -20, -20, -20))

|

| 30 |

+

output = torch.add(x2, x1)

|

| 31 |

+

|

| 32 |

+

if (w0 != pw or h0 != ph):

|

| 33 |

+

output = output[:, :, :h0 * 2, :w0 * 2]

|

| 34 |

+

|

| 35 |

+

return output

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

class SEBlock(nn.Module):

|

| 39 |

+

def __init__(self, in_channels, reduction=8, bias=False):

|

| 40 |

+

super(SEBlock, self).__init__()

|

| 41 |

+

self.conv1 = nn.Conv2d(in_channels, in_channels // reduction, 1, 1, 0, bias=bias)

|

| 42 |

+

self.conv2 = nn.Conv2d(in_channels // reduction, in_channels, 1, 1, 0, bias=bias)

|

| 43 |

+

|

| 44 |

+

def forward(self, x):

|

| 45 |

+

if ("Half" in x.type()): # torch.HalfTensor/torch.cuda.HalfTensor

|

| 46 |

+

x0 = torch.mean(x.float(), dim=(2, 3), keepdim=True).half()

|

| 47 |

+

else:

|

| 48 |

+

x0 = torch.mean(x, dim=(2, 3), keepdim=True)

|

| 49 |

+

x0 = self.conv1(x0)

|

| 50 |

+

x0 = F.relu(x0, inplace=True)

|

| 51 |

+

x0 = self.conv2(x0)

|

| 52 |

+

x0 = torch.sigmoid(x0)

|

| 53 |

+

x = torch.mul(x, x0)

|

| 54 |

+

return x

|

| 55 |

+

|

| 56 |

+

class UNetConv(nn.Module):

|

| 57 |

+

def __init__(self, in_channels, mid_channels, out_channels, se):

|

| 58 |

+

super(UNetConv, self).__init__()

|

| 59 |

+

self.conv = nn.Sequential(

|

| 60 |

+

nn.Conv2d(in_channels, mid_channels, 3, 1, 0),

|

| 61 |

+

nn.LeakyReLU(0.1, inplace=True),

|

| 62 |

+

nn.Conv2d(mid_channels, out_channels, 3, 1, 0),

|

| 63 |

+

nn.LeakyReLU(0.1, inplace=True),

|

| 64 |

+

)

|

| 65 |

+

if se:

|

| 66 |

+

self.seblock = SEBlock(out_channels, reduction=8, bias=True)

|

| 67 |

+

else:

|

| 68 |

+

self.seblock = None

|

| 69 |

+

|

| 70 |

+

def forward(self, x):

|

| 71 |

+

z = self.conv(x)

|

| 72 |

+

if self.seblock is not None:

|

| 73 |

+

z = self.seblock(z)

|

| 74 |

+

return z

|

| 75 |

+

|

| 76 |

+

class UNet1(nn.Module):

|

| 77 |

+

def __init__(self, in_channels, out_channels, deconv):

|

| 78 |

+

super(UNet1, self).__init__()

|

| 79 |

+

self.conv1 = UNetConv(in_channels, 32, 64, se=False)

|

| 80 |

+

self.conv1_down = nn.Conv2d(64, 64, 2, 2, 0)

|

| 81 |

+

self.conv2 = UNetConv(64, 128, 64, se=True)

|

| 82 |

+

self.conv2_up = nn.ConvTranspose2d(64, 64, 2, 2, 0)

|

| 83 |

+

self.conv3 = nn.Conv2d(64, 64, 3, 1, 0)

|

| 84 |

+

|

| 85 |

+

if deconv:

|

| 86 |

+

self.conv_bottom = nn.ConvTranspose2d(64, out_channels, 4, 2, 3)

|

| 87 |

+

else:

|

| 88 |

+

self.conv_bottom = nn.Conv2d(64, out_channels, 3, 1, 0)

|

| 89 |

+

|

| 90 |

+

for m in self.modules():

|

| 91 |

+

if isinstance(m, (nn.Conv2d, nn.ConvTranspose2d)):

|

| 92 |

+

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

|

| 93 |

+

elif isinstance(m, nn.Linear):

|

| 94 |

+

nn.init.normal_(m.weight, 0, 0.01)

|

| 95 |

+

if m.bias is not None:

|

| 96 |

+

nn.init.constant_(m.bias, 0)

|

| 97 |

+

|

| 98 |

+

def forward(self, x):

|

| 99 |

+

x1 = self.conv1(x)

|

| 100 |

+

x2 = self.conv1_down(x1)

|

| 101 |

+

x2 = F.leaky_relu(x2, 0.1, inplace=True)

|

| 102 |

+

x2 = self.conv2(x2)

|

| 103 |

+

x2 = self.conv2_up(x2)

|

| 104 |

+

x2 = F.leaky_relu(x2, 0.1, inplace=True)

|

| 105 |

+

|

| 106 |

+

x1 = F.pad(x1, (-4, -4, -4, -4))

|

| 107 |

+

x3 = self.conv3(x1 + x2)

|

| 108 |

+

x3 = F.leaky_relu(x3, 0.1, inplace=True)

|

| 109 |

+

z = self.conv_bottom(x3)

|

| 110 |

+

return z

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

class UNet2(nn.Module):

|

| 114 |

+

def __init__(self, in_channels, out_channels, deconv):

|

| 115 |

+

super(UNet2, self).__init__()

|

| 116 |

+

|

| 117 |

+

self.conv1 = UNetConv(in_channels, 32, 64, se=False)

|

| 118 |

+

self.conv1_down = nn.Conv2d(64, 64, 2, 2, 0)

|

| 119 |

+

self.conv2 = UNetConv(64, 64, 128, se=True)

|

| 120 |

+

self.conv2_down = nn.Conv2d(128, 128, 2, 2, 0)

|

| 121 |

+

self.conv3 = UNetConv(128, 256, 128, se=True)

|

| 122 |

+

self.conv3_up = nn.ConvTranspose2d(128, 128, 2, 2, 0)

|

| 123 |

+

self.conv4 = UNetConv(128, 64, 64, se=True)

|

| 124 |

+

self.conv4_up = nn.ConvTranspose2d(64, 64, 2, 2, 0)

|

| 125 |

+

self.conv5 = nn.Conv2d(64, 64, 3, 1, 0)

|

| 126 |

+

|

| 127 |

+

if deconv:

|

| 128 |

+

self.conv_bottom = nn.ConvTranspose2d(64, out_channels, 4, 2, 3)

|

| 129 |

+

else:

|

| 130 |

+

self.conv_bottom = nn.Conv2d(64, out_channels, 3, 1, 0)

|

| 131 |

+

|

| 132 |

+

for m in self.modules():

|

| 133 |

+

if isinstance(m, (nn.Conv2d, nn.ConvTranspose2d)):

|

| 134 |

+

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

|

| 135 |

+

elif isinstance(m, nn.Linear):

|

| 136 |

+

nn.init.normal_(m.weight, 0, 0.01)

|

| 137 |

+

if m.bias is not None:

|

| 138 |

+

nn.init.constant_(m.bias, 0)

|

| 139 |

+

|

| 140 |

+

def forward(self, x):

|

| 141 |

+

x1 = self.conv1(x)

|

| 142 |

+

x2 = self.conv1_down(x1)

|

| 143 |

+

x2 = F.leaky_relu(x2, 0.1, inplace=True)

|

| 144 |

+

x2 = self.conv2(x2)

|

| 145 |

+

|

| 146 |

+

x3 = self.conv2_down(x2)

|

| 147 |

+

x3 = F.leaky_relu(x3, 0.1, inplace=True)

|

| 148 |

+

x3 = self.conv3(x3)

|

| 149 |

+

x3 = self.conv3_up(x3)

|

| 150 |

+

x3 = F.leaky_relu(x3, 0.1, inplace=True)

|

| 151 |

+

|

| 152 |

+

x2 = F.pad(x2, (-4, -4, -4, -4))

|

| 153 |

+

x4 = self.conv4(x2 + x3)

|

| 154 |

+

x4 = self.conv4_up(x4)

|

| 155 |

+

x4 = F.leaky_relu(x4, 0.1, inplace=True)

|

| 156 |

+

|

| 157 |

+

x1 = F.pad(x1, (-16, -16, -16, -16))

|

| 158 |

+

x5 = self.conv5(x1 + x4)

|

| 159 |

+

x5 = F.leaky_relu(x5, 0.1, inplace=True)

|

| 160 |

+

|

| 161 |

+

z = self.conv_bottom(x5)

|

| 162 |

+

return z

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

|

| 166 |

+

def main():

|

| 167 |

+

root_path = os.path.abspath('.')

|

| 168 |

+

sys.path.append(root_path)

|

| 169 |

+

|

| 170 |

+

from opt import opt # Manage GPU to choose

|

| 171 |

+

import time

|

| 172 |

+

|

| 173 |

+

model = UNet_Full().cuda()

|

| 174 |

+

pytorch_total_params = sum(p.numel() for p in model.parameters())

|

| 175 |

+

print(f"CuNet has param {pytorch_total_params//1000} K params")

|

| 176 |

+

|

| 177 |

+

|

| 178 |

+

# Count the number of FLOPs to double check

|

| 179 |

+

x = torch.randn((1, 3, 180, 180)).cuda()

|

| 180 |

+

start = time.time()

|

| 181 |

+

x = model(x)

|

| 182 |

+

print("output size is ", x.shape)

|

| 183 |

+

total = time.time() - start

|

| 184 |

+

print(total)

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

|

| 188 |

+

if __name__ == "__main__":

|

| 189 |

+

main()

|

architecture/dataset.py

ADDED

|

@@ -0,0 +1,106 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import torch.nn.functional as F

|

| 5 |

+

from torch.autograd import Variable

|

| 6 |

+

from torchvision.models import vgg19

|

| 7 |

+

import torchvision.transforms as transforms

|

| 8 |

+

from torch.utils.data import DataLoader, Dataset

|

| 9 |

+

from torchvision.utils import save_image, make_grid

|

| 10 |

+

from torchvision.transforms import ToTensor

|

| 11 |

+

|

| 12 |

+

import numpy as np

|

| 13 |

+

import cv2

|

| 14 |

+

import glob

|

| 15 |

+

import random

|

| 16 |

+

from PIL import Image

|

| 17 |

+

from tqdm import tqdm

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

# from degradation.degradation_main import degredate_process, preparation

|

| 21 |

+

from opt import opt

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

class ImageDataset(Dataset):

|

| 25 |

+

@torch.no_grad()

|

| 26 |

+

def __init__(self, train_lr_paths, degrade_hr_paths, train_hr_paths):

|

| 27 |

+

# print("low_res path sample is ", train_lr_paths[0])

|

| 28 |

+

# print(train_hr_paths[0])

|

| 29 |

+

# hr_height, hr_width = hr_shape

|

| 30 |

+

self.transform = transforms.Compose(

|

| 31 |

+

[

|

| 32 |

+

transforms.ToTensor(),

|

| 33 |

+

]

|

| 34 |

+

)

|

| 35 |

+

|

| 36 |

+

self.files_lr = train_lr_paths

|

| 37 |

+

self.files_degrade_hr = degrade_hr_paths

|

| 38 |

+

self.files_hr = train_hr_paths

|

| 39 |

+

|

| 40 |

+

assert(len(self.files_lr) == len(self.files_hr))

|

| 41 |

+

assert(len(self.files_lr) == len(self.files_degrade_hr))

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def augment(self, imgs, hflip=True, rotation=True):

|

| 45 |

+

"""Augment: horizontal flips OR rotate (0, 90, 180, 270 degrees).

|

| 46 |

+

|

| 47 |

+

All the images in the list use the same augmentation.

|

| 48 |

+

|

| 49 |

+

Args:

|

| 50 |

+

imgs (list[ndarray] | ndarray): Images to be augmented. If the input

|

| 51 |

+

is an ndarray, it will be transformed to a list.

|

| 52 |

+

hflip (bool): Horizontal flip. Default: True.

|

| 53 |

+

rotation (bool): Rotation. Default: True.

|

| 54 |

+

|

| 55 |

+

Returns:

|

| 56 |

+

imgs (list[ndarray] | ndarray): Augmented images and flows. If returned

|

| 57 |

+

results only have one element, just return ndarray.

|

| 58 |

+

|

| 59 |

+

"""

|

| 60 |

+

hflip = hflip and random.random() < 0.5

|

| 61 |

+

vflip = rotation and random.random() < 0.5

|

| 62 |

+

rot90 = rotation and random.random() < 0.5

|

| 63 |

+

|

| 64 |

+

def _augment(img):

|

| 65 |

+

if hflip: # horizontal

|

| 66 |

+

cv2.flip(img, 1, img)

|

| 67 |

+

if vflip: # vertical

|

| 68 |

+

cv2.flip(img, 0, img)

|

| 69 |

+

if rot90:

|

| 70 |

+

img = img.transpose(1, 0, 2)

|

| 71 |

+

return img

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

if not isinstance(imgs, list):

|

| 75 |

+

imgs = [imgs]

|

| 76 |

+

|

| 77 |

+

imgs = [_augment(img) for img in imgs]

|

| 78 |

+

if len(imgs) == 1:

|

| 79 |

+

imgs = imgs[0]

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

return imgs

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def __getitem__(self, index):

|

| 86 |

+

|

| 87 |

+

# Read File

|

| 88 |

+

img_lr = cv2.imread(self.files_lr[index % len(self.files_lr)]) # Should be BGR

|

| 89 |

+

img_degrade_hr = cv2.imread(self.files_degrade_hr[index % len(self.files_degrade_hr)])

|

| 90 |

+

img_hr = cv2.imread(self.files_hr[index % len(self.files_hr)])

|

| 91 |

+

|

| 92 |

+

# Augmentation

|

| 93 |

+

if random.random() < opt["augment_prob"]:

|

| 94 |

+

img_lr, img_degrade_hr, img_hr = self.augment([img_lr, img_degrade_hr, img_hr])

|

| 95 |

+

|

| 96 |

+

# Transform to Tensor

|

| 97 |

+

img_lr = self.transform(img_lr)

|

| 98 |

+

img_degrade_hr = self.transform(img_degrade_hr)

|

| 99 |

+

img_hr = self.transform(img_hr) # ToTensor() is already in the range [0, 1]

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

return {"lr": img_lr, "degrade_hr": img_degrade_hr, "hr": img_hr}

|

| 103 |

+

|

| 104 |

+

def __len__(self):

|

| 105 |

+

assert(len(self.files_hr) == len(self.files_lr))

|

| 106 |

+

return len(self.files_hr)

|

architecture/discriminator.py

ADDED

|

@@ -0,0 +1,241 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

from torch import nn as nn

|

| 3 |

+

from torch.nn import functional as F

|

| 4 |

+

from torch.nn.utils import spectral_norm

|

| 5 |

+

import torch

|

| 6 |

+

import functools

|

| 7 |

+

|

| 8 |

+

class UNetDiscriminatorSN(nn.Module):

|

| 9 |

+

"""Defines a U-Net discriminator with spectral normalization (SN)

|

| 10 |

+

|

| 11 |

+

It is used in Real-ESRGAN: Training Real-World Blind Super-Resolution with Pure Synthetic Data.

|

| 12 |

+

|

| 13 |

+

Arg:

|

| 14 |

+

num_in_ch (int): Channel number of inputs. Default: 3.

|

| 15 |

+

num_feat (int): Channel number of base intermediate features. Default: 64.

|

| 16 |

+

skip_connection (bool): Whether to use skip connections between U-Net. Default: True.

|

| 17 |

+

"""

|

| 18 |

+

|

| 19 |

+

def __init__(self, num_in_ch, num_feat=64, skip_connection=True):

|

| 20 |

+

super(UNetDiscriminatorSN, self).__init__()

|

| 21 |

+

self.skip_connection = skip_connection

|

| 22 |

+

norm = spectral_norm

|

| 23 |

+

# the first convolution

|

| 24 |

+

self.conv0 = nn.Conv2d(num_in_ch, num_feat, kernel_size=3, stride=1, padding=1)

|

| 25 |

+

# downsample

|

| 26 |

+

self.conv1 = norm(nn.Conv2d(num_feat, num_feat * 2, 4, 2, 1, bias=False))

|

| 27 |

+

self.conv2 = norm(nn.Conv2d(num_feat * 2, num_feat * 4, 4, 2, 1, bias=False))

|

| 28 |

+

self.conv3 = norm(nn.Conv2d(num_feat * 4, num_feat * 8, 4, 2, 1, bias=False))

|

| 29 |

+

# upsample

|

| 30 |

+

self.conv4 = norm(nn.Conv2d(num_feat * 8, num_feat * 4, 3, 1, 1, bias=False))

|

| 31 |

+

self.conv5 = norm(nn.Conv2d(num_feat * 4, num_feat * 2, 3, 1, 1, bias=False))

|

| 32 |

+

self.conv6 = norm(nn.Conv2d(num_feat * 2, num_feat, 3, 1, 1, bias=False))

|

| 33 |

+

# extra convolutions

|

| 34 |

+

self.conv7 = norm(nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=False))

|

| 35 |

+

self.conv8 = norm(nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=False))

|

| 36 |

+

self.conv9 = nn.Conv2d(num_feat, 1, 3, 1, 1)

|

| 37 |

+

|

| 38 |

+

def forward(self, x):

|

| 39 |

+

|

| 40 |

+

# downsample

|

| 41 |

+

x0 = F.leaky_relu(self.conv0(x), negative_slope=0.2, inplace=True)

|

| 42 |

+

x1 = F.leaky_relu(self.conv1(x0), negative_slope=0.2, inplace=True)

|

| 43 |

+

x2 = F.leaky_relu(self.conv2(x1), negative_slope=0.2, inplace=True)

|

| 44 |

+

x3 = F.leaky_relu(self.conv3(x2), negative_slope=0.2, inplace=True)

|

| 45 |

+

|

| 46 |

+

# upsample

|

| 47 |

+

x3 = F.interpolate(x3, scale_factor=2, mode='bilinear', align_corners=False)

|

| 48 |

+

x4 = F.leaky_relu(self.conv4(x3), negative_slope=0.2, inplace=True)

|

| 49 |

+

|

| 50 |

+

if self.skip_connection:

|

| 51 |

+

x4 = x4 + x2

|

| 52 |

+

x4 = F.interpolate(x4, scale_factor=2, mode='bilinear', align_corners=False)

|

| 53 |

+

x5 = F.leaky_relu(self.conv5(x4), negative_slope=0.2, inplace=True)

|

| 54 |

+

|

| 55 |

+

if self.skip_connection:

|

| 56 |

+

x5 = x5 + x1

|

| 57 |

+

x5 = F.interpolate(x5, scale_factor=2, mode='bilinear', align_corners=False)

|

| 58 |

+

x6 = F.leaky_relu(self.conv6(x5), negative_slope=0.2, inplace=True)

|

| 59 |

+

|

| 60 |

+

if self.skip_connection:

|

| 61 |

+

x6 = x6 + x0

|

| 62 |

+

|

| 63 |

+

# extra convolutions

|

| 64 |

+

out = F.leaky_relu(self.conv7(x6), negative_slope=0.2, inplace=True)

|

| 65 |

+

out = F.leaky_relu(self.conv8(out), negative_slope=0.2, inplace=True)

|

| 66 |

+

out = self.conv9(out)

|

| 67 |

+

|

| 68 |

+

return out

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

def get_conv_layer(input_nc, ndf, kernel_size, stride, padding, bias=True, use_sn=False):

|

| 73 |

+

if not use_sn:

|

| 74 |

+

return nn.Conv2d(input_nc, ndf, kernel_size=kernel_size, stride=stride, padding=padding, bias=bias)

|

| 75 |

+

return spectral_norm(nn.Conv2d(input_nc, ndf, kernel_size=kernel_size, stride=stride, padding=padding, bias=bias))

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

class PatchDiscriminator(nn.Module):

|

| 79 |

+

"""Defines a PatchGAN discriminator, the receptive field of default config is 70x70.

|

| 80 |

+

|

| 81 |

+

Args:

|

| 82 |

+

use_sn (bool): Use spectra_norm or not, if use_sn is True, then norm_type should be none.

|

| 83 |

+

"""

|

| 84 |

+

|

| 85 |

+

def __init__(self,

|

| 86 |

+

num_in_ch,

|

| 87 |

+

num_feat=64,

|

| 88 |

+

num_layers=3,

|

| 89 |

+

max_nf_mult=8,

|

| 90 |

+

norm_type='batch',

|

| 91 |

+

use_sigmoid=False,

|

| 92 |

+

use_sn=False):

|

| 93 |

+

super(PatchDiscriminator, self).__init__()

|

| 94 |

+

|

| 95 |

+

norm_layer = self._get_norm_layer(norm_type)

|

| 96 |

+

if type(norm_layer) == functools.partial: # no need to use bias as BatchNorm2d has affine parameters

|

| 97 |

+

use_bias = norm_layer.func != nn.BatchNorm2d

|

| 98 |

+

else:

|

| 99 |

+

use_bias = norm_layer != nn.BatchNorm2d

|

| 100 |

+

|

| 101 |

+

kw = 4

|

| 102 |

+

padw = 1

|

| 103 |

+

sequence = [

|

| 104 |

+

get_conv_layer(num_in_ch, num_feat, kernel_size=kw, stride=2, padding=padw, use_sn=use_sn),

|

| 105 |

+

nn.LeakyReLU(0.2, True)

|

| 106 |

+

]

|

| 107 |

+

nf_mult = 1

|

| 108 |

+

nf_mult_prev = 1

|

| 109 |

+

for n in range(1, num_layers): # gradually increase the number of filters

|

| 110 |

+

nf_mult_prev = nf_mult

|

| 111 |

+

nf_mult = min(2**n, max_nf_mult)

|

| 112 |

+

sequence += [

|

| 113 |

+

get_conv_layer(

|

| 114 |

+

num_feat * nf_mult_prev,

|

| 115 |

+

num_feat * nf_mult,

|

| 116 |

+

kernel_size=kw,

|

| 117 |

+

stride=2,

|

| 118 |

+

padding=padw,

|

| 119 |

+

bias=use_bias,

|

| 120 |

+

use_sn=use_sn),

|

| 121 |

+

norm_layer(num_feat * nf_mult),

|

| 122 |

+

nn.LeakyReLU(0.2, True)

|

| 123 |

+

]

|

| 124 |

+

|

| 125 |

+

nf_mult_prev = nf_mult

|

| 126 |

+

nf_mult = min(2**num_layers, max_nf_mult)

|

| 127 |

+

sequence += [

|

| 128 |

+

get_conv_layer(

|

| 129 |

+

num_feat * nf_mult_prev,

|

| 130 |

+

num_feat * nf_mult,

|

| 131 |

+

kernel_size=kw,

|

| 132 |

+

stride=1,

|

| 133 |

+

padding=padw,

|

| 134 |

+

bias=use_bias,

|

| 135 |

+

use_sn=use_sn),

|

| 136 |

+

norm_layer(num_feat * nf_mult),

|

| 137 |

+

nn.LeakyReLU(0.2, True)

|

| 138 |

+

]

|

| 139 |

+

|

| 140 |

+

# output 1 channel prediction map 我觉得这个应该就是pixel by pixel的feedback反馈

|

| 141 |

+

sequence += [get_conv_layer(num_feat * nf_mult, 1, kernel_size=kw, stride=1, padding=padw, use_sn=use_sn)]

|

| 142 |

+

|

| 143 |

+

if use_sigmoid:

|

| 144 |

+

sequence += [nn.Sigmoid()]

|

| 145 |

+

self.model = nn.Sequential(*sequence)

|

| 146 |

+

|

| 147 |

+

def _get_norm_layer(self, norm_type='batch'):

|

| 148 |

+

if norm_type == 'batch':

|

| 149 |

+

norm_layer = functools.partial(nn.BatchNorm2d, affine=True)

|

| 150 |

+

elif norm_type == 'instance':

|

| 151 |

+

norm_layer = functools.partial(nn.InstanceNorm2d, affine=False)

|

| 152 |

+

elif norm_type == 'batchnorm2d':

|

| 153 |

+

norm_layer = nn.BatchNorm2d

|

| 154 |

+

elif norm_type == 'none':

|

| 155 |

+

norm_layer = nn.Identity

|

| 156 |

+

else:

|

| 157 |

+

raise NotImplementedError(f'normalization layer [{norm_type}] is not found')

|

| 158 |

+

|

| 159 |

+

return norm_layer

|

| 160 |

+

|

| 161 |

+

def forward(self, x):

|

| 162 |

+

return self.model(x)

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

class MultiScaleDiscriminator(nn.Module):

|

| 166 |

+

"""Define a multi-scale discriminator, each discriminator is a instance of PatchDiscriminator.

|

| 167 |

+

|

| 168 |

+

Args:

|

| 169 |

+

num_layers (int or list): If the type of this variable is int, then degrade to PatchDiscriminator.

|

| 170 |

+

If the type of this variable is list, then the length of the list is

|

| 171 |

+

the number of discriminators.

|

| 172 |

+

use_downscale (bool): Progressive downscale the input to feed into different discriminators.

|

| 173 |

+

If set to True, then the discriminators are usually the same.

|

| 174 |

+

"""

|

| 175 |

+

|

| 176 |

+

def __init__(self,

|

| 177 |

+

num_in_ch,

|

| 178 |

+

num_feat=64,

|

| 179 |

+

num_layers=[3, 3, 3],

|

| 180 |

+

max_nf_mult=8,

|

| 181 |

+

norm_type='none',

|

| 182 |

+

use_sigmoid=False,

|

| 183 |

+

use_sn=True,

|

| 184 |

+

use_downscale=True):

|

| 185 |

+

super(MultiScaleDiscriminator, self).__init__()

|

| 186 |

+

|

| 187 |

+

if isinstance(num_layers, int):

|

| 188 |

+

num_layers = [num_layers]

|

| 189 |

+

|

| 190 |

+

# check whether the discriminators are the same

|

| 191 |

+

if use_downscale:

|

| 192 |

+

assert len(set(num_layers)) == 1

|

| 193 |

+

self.use_downscale = use_downscale

|

| 194 |

+

|

| 195 |

+

self.num_dis = len(num_layers)

|

| 196 |

+

self.dis_list = nn.ModuleList()

|

| 197 |

+

for nl in num_layers:

|

| 198 |

+

self.dis_list.append(

|

| 199 |

+

PatchDiscriminator(

|

| 200 |

+

num_in_ch,

|

| 201 |

+

num_feat=num_feat,

|

| 202 |

+

num_layers=nl,

|

| 203 |

+

max_nf_mult=max_nf_mult,

|

| 204 |

+

norm_type=norm_type,

|

| 205 |

+

use_sigmoid=use_sigmoid,

|

| 206 |

+

use_sn=use_sn,

|

| 207 |

+

))

|

| 208 |

+

|

| 209 |

+

def forward(self, x):

|

| 210 |

+

outs = []

|

| 211 |

+

h, w = x.size()[2:]

|

| 212 |

+

|

| 213 |

+

y = x

|

| 214 |

+

for i in range(self.num_dis):

|

| 215 |

+

if i != 0 and self.use_downscale:

|

| 216 |

+

y = F.interpolate(y, size=(h // 2, w // 2), mode='bilinear', align_corners=True)

|

| 217 |

+

h, w = y.size()[2:]

|

| 218 |

+

outs.append(self.dis_list[i](y))

|

| 219 |

+

|

| 220 |

+

return outs

|

| 221 |

+

|

| 222 |

+

|

| 223 |

+

def main():

|

| 224 |

+

from pthflops import count_ops

|

| 225 |

+

from torchsummary import summary

|

| 226 |

+

|

| 227 |

+

model = UNetDiscriminatorSN(3)

|

| 228 |

+

pytorch_total_params = sum(p.numel() for p in model.parameters())

|

| 229 |

+

|

| 230 |

+

# Create a network and a corresponding input

|

| 231 |

+

device = 'cuda'

|

| 232 |

+

inp = torch.rand(1, 3, 400, 400)

|

| 233 |

+

|

| 234 |

+

# Count the number of FLOPs

|

| 235 |

+

count_ops(model, inp)

|

| 236 |

+

summary(model.cuda(), (3, 400, 400), batch_size=1)

|

| 237 |

+

# print(f"pathGAN has param {pytorch_total_params//1000} K params")

|

| 238 |

+

|

| 239 |

+

|

| 240 |

+

if __name__ == "__main__":

|

| 241 |

+

main()

|

architecture/grl.py

ADDED

|

@@ -0,0 +1,616 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|