Upload 11 files

- notebooks/ch0-intro.ipynb +84 -0

- notebooks/ch1-what-we-will-do.ipynb +73 -0

- notebooks/ch10-sharing-with-gradio.ipynb +168 -0

- notebooks/ch2-the-LLM-advantage.ipynb +45 -0

- notebooks/ch3-getting-started-with-hf.ipynb +63 -0

- notebooks/ch4-installing-jupyterlab.ipynb +142 -0

- notebooks/ch5-prompting-with-python.ipynb +536 -0

- notebooks/ch6-structured-responses.ipynb +719 -0

- notebooks/ch7-bulk-prompts.ipynb +954 -0

- notebooks/ch8-evaluating-prompts.ipynb +655 -0

- notebooks/ch9-improving-prompts.ipynb +669 -0

notebooks/ch0-intro.ipynb

ADDED

|

@@ -0,0 +1,84 @@

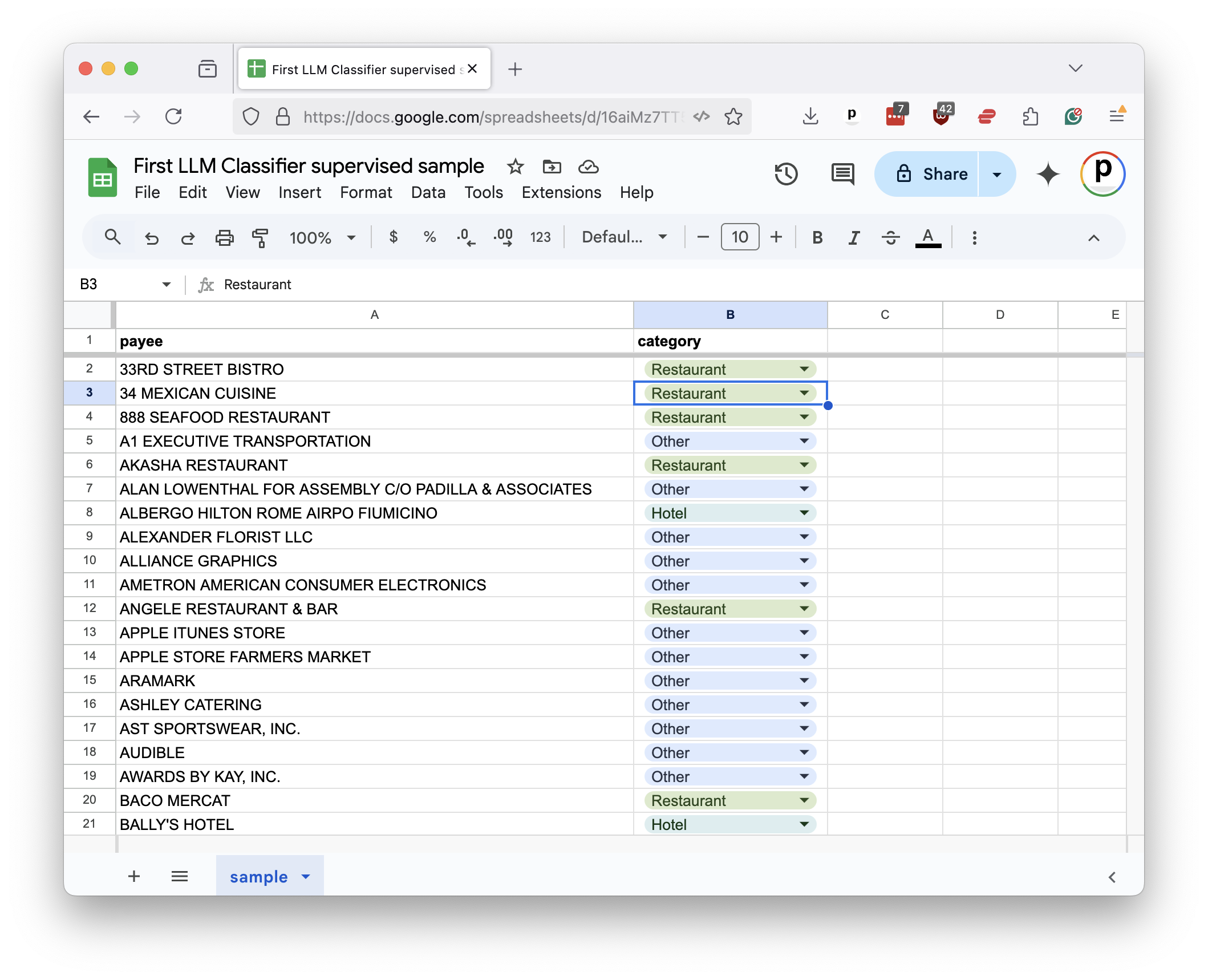

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "bf2cde26",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# First LLM Classifier\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"Learn how journalists use large-language models to organize and analyze massive datasets\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"## What you will learn\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"This class will give you hands-on experience creating a machine-learning model that can read and categorize the text recorded in newsworthy datasets.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"It will teach you how to:\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"- Submit large-language model prompts with the Python programming language\n",

|

| 19 |

+

"- Write structured prompts that can classify text into predefined categories\n",

|

| 20 |

+

"- Submit dozens of prompts at once as part of an automated routine\n",

|

| 21 |

+

"- Evaluate results using a rigorous, scientific approach\n",

|

| 22 |

+

"- Improve results by training the model with rules and examples\n",

|

| 23 |

+

"\n",

|

| 24 |

+

"By the end, you will understand how LLM classifiers can outperform traditional machine-learning methods with significantly less code. And you will be ready to write a classifier on your own.\n",

|

| 25 |

+

"\n",

|

| 26 |

+

"## Who can take it\n",

|

| 27 |

+

"\n",

|

| 28 |

+

"This course is free. Anyone who has dabbled with code and AI is qualified to work through the materials. A curious mind and good attitude are all that’s required, but a familiarity with Python will certainly come in handy.\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"💬 Need help or want to connect with others? Join the **Journalists on Hugging Face** community by signing up for our Slack group [here](https://forms.gle/JMCULh3jEdgFEsJu5).\n",

|

| 31 |

+

"\n",

|

| 32 |

+

"## Table of contents\n",

|

| 33 |

+

"\n",

|

| 34 |

+

"- [1. What we’ll do](ch1-what-we-will-do.ipynb) \n",

|

| 35 |

+

"- [2. The LLM advantage](ch2-the-LLM-advantage.ipynb) \n",

|

| 36 |

+

"- [3. Getting started with Hugging Face](ch3-getting-started-with-hf.ipynb) \n",

|

| 37 |

+

"- [4. Installing JupyterLab (optional)](ch4-installing-jupyterlab.ipynb) \n",

|

| 38 |

+

"- [5. Prompting with Python](ch5-prompting-with-python.ipynb) \n",

|

| 39 |

+

"- [6. Structured responses](ch6-structured-responses.ipynb) \n",

|

| 40 |

+

"- [7. Bulk prompts](ch7-bulk-prompts.ipynb) \n",

|

| 41 |

+

"- [8. Evaluating prompts](ch8-evaluating-prompts.ipynb) \n",

|

| 42 |

+

"- [9. Improving prompts](ch9-improving-prompts.ipynb) \n",

|

| 43 |

+

"- [10. Sharing your app with Gradio](ch10-sharing-with-gradio.ipynb)\n",

|

| 44 |

+

"\n",

|

| 45 |

+

"## About this class\n",

|

| 46 |

+

"[Ben Welsh](https://palewi.re/who-is-ben-welsh/) and [Derek Willis](https://thescoop.org/about/) prepared this guide for [a training session](https://schedules.ire.org/nicar-2025/index.html#2045) at the National Institute for Computer-Assisted Reporting’s 2025 conference in Minneapolis. \n",

|

| 47 |

+

"The project was adapted to run on Hugging Face by [Florent Daudens](https://www.linkedin.com/in/fdaudens/). \n",

|

| 48 |

+

"\n",

|

| 49 |

+

"Some of the copy was written with the assistance of GitHub’s Copilot, an AI-powered text generator. The materials are available as free and open source.\n",

|

| 50 |

+

"\n",

|

| 51 |

+

"**[1. What we’ll do →](ch1-what-we-will-do.ipynb)**"

|

| 52 |

+

]

|

| 53 |

+

},

|

| 54 |

+

{

|

| 55 |

+

"cell_type": "code",

|

| 56 |

+

"execution_count": null,

|

| 57 |

+

"id": "02477b14-edff-4380-ad41-9954b6c80863",

|

| 58 |

+

"metadata": {},

|

| 59 |

+

"outputs": [],

|

| 60 |

+

"source": []

|

| 61 |

+

}

|

| 62 |

+

],

|

| 63 |

+

"metadata": {

|

| 64 |

+

"kernelspec": {

|

| 65 |

+

"display_name": "Python 3 (ipykernel)",

|

| 66 |

+

"language": "python",

|

| 67 |

+

"name": "python3"

|

| 68 |

+

},

|

| 69 |

+

"language_info": {

|

| 70 |

+

"codemirror_mode": {

|

| 71 |

+

"name": "ipython",

|

| 72 |

+

"version": 3

|

| 73 |

+

},

|

| 74 |

+

"file_extension": ".py",

|

| 75 |

+

"mimetype": "text/x-python",

|

| 76 |

+

"name": "python",

|

| 77 |

+

"nbconvert_exporter": "python",

|

| 78 |

+

"pygments_lexer": "ipython3",

|

| 79 |

+

"version": "3.9.5"

|

| 80 |

+

}

|

| 81 |

+

},

|

| 82 |

+

"nbformat": 4,

|

| 83 |

+

"nbformat_minor": 5

|

| 84 |

+

}

|

notebooks/ch1-what-we-will-do.ipynb

ADDED

|

@@ -0,0 +1,73 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "9d45b5fc",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# First LLM Classifier\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"## 1. What we’ll do\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"Journalists frequently encounter the mountains of messy data generated by our periphrastic society. This vast and verbose corpus boasts everything from long-hand entries in police reports to the legalese of legislative bills.\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"Understanding and analyzing this data is critical to the job but can be time-consuming and inefficient. Computers can help by automating the work of sorting through blocks of text, extracting key details and flagging unusual patterns.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"A common goal in this work is to classify text into categories. For example, you might want to sort a collection of emails as “spam” and “not spam” or identify corporate filings that suggest a company is about to go bankrupt.\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"Traditional techniques for classifying text, like keyword searches or regular expressions, can be brittle and error-prone. Machine learning models can be more flexible, but they require large amounts of human training and a high level of computer programming expertise, and they often yield unimpressive results.\n",

|

| 19 |

+

"\n",

|

| 20 |

+

"Large-language models offer a better deal. We will demonstrate how you can use them to get superior results with less hassle.\n",

|

| 21 |

+

"\n",

|

| 22 |

+

"### 1.1. Our example case\n",

|

| 23 |

+

"\n",

|

| 24 |

+

"To show the power of this approach, we’ll focus on a specific data set: campaign expenditures.\n",

|

| 25 |

+

"\n",

|

| 26 |

+

"Candidates for office must disclose the money they spend on everything from pizza to private jets. Tracking their spending can reveal patterns and lead to important stories.\n",

|

| 27 |

+

"\n",

|

| 28 |

+

"But it’s no easy task. Each election cycle, thousands of candidates log transactions into the public databases where spending is disclosed. That’s so much data that no one can examine it all. To make matters worse, campaigns often use vague or misleading descriptions of their spending, making it difficult to parse and understand.\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"It wasn’t until after his 2022 election to Congress that [journalists discovered](https://www.nytimes.com/2022/12/29/nyregion/george-santos-campaign-finance.html) that Rep. George Santos of New York had spent thousands of campaign dollars on questionable and potentially illegal expenses. While much of his shady spending was publicly disclosed, it was largely overlooked in the run-up to election day.\n",

|

| 31 |

+

"\n",

|

| 32 |

+

"[](https://www.nytimes.com/2022/12/29/nyregion/george-santos-campaign-finance.html)\n",

|

| 33 |

+

"\n",

|

| 34 |

+

"Inspired by this scoop, we will create a classifier that can scan the expenditures logged in campaign finance reports and identify those that may be newsworthy.\n",

|

| 35 |

+

"\n",

|

| 36 |

+

"[](https://californiacivicdata.org/)\n",

|

| 37 |

+

"\n",

|

| 38 |

+

"We will draw data from The Golden State, where the California Civic Data Coalition developed a clean, structured version of the statehouse’s disclosure data.\n",

|

| 39 |

+

"\n",

|

| 40 |

+

"**[2. The LLM advantage →](ch2-the-LLM-advantage.ipynb)**"

|

| 41 |

+

]

|

| 42 |

+

},

|

| 43 |

+

{

|

| 44 |

+

"cell_type": "code",

|

| 45 |

+

"execution_count": null,

|

| 46 |

+

"id": "934bc606-e7a5-4dff-9154-dc5c42bf3fc7",

|

| 47 |

+

"metadata": {},

|

| 48 |

+

"outputs": [],

|

| 49 |

+

"source": []

|

| 50 |

+

}

|

| 51 |

+

],

|

| 52 |

+

"metadata": {

|

| 53 |

+

"kernelspec": {

|

| 54 |

+

"display_name": "Python 3 (ipykernel)",

|

| 55 |

+

"language": "python",

|

| 56 |

+

"name": "python3"

|

| 57 |

+

},

|

| 58 |

+

"language_info": {

|

| 59 |

+

"codemirror_mode": {

|

| 60 |

+

"name": "ipython",

|

| 61 |

+

"version": 3

|

| 62 |

+

},

|

| 63 |

+

"file_extension": ".py",

|

| 64 |

+

"mimetype": "text/x-python",

|

| 65 |

+

"name": "python",

|

| 66 |

+

"nbconvert_exporter": "python",

|

| 67 |

+

"pygments_lexer": "ipython3",

|

| 68 |

+

"version": "3.9.5"

|

| 69 |

+

}

|

| 70 |

+

},

|

| 71 |

+

"nbformat": 4,

|

| 72 |

+

"nbformat_minor": 5

|

| 73 |

+

}

|

notebooks/ch10-sharing-with-gradio.ipynb

ADDED

|

@@ -0,0 +1,168 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"## 10. Building a Demo with Gradio and Hugging Face Spaces\n",

|

| 8 |

+

"\n",

|

| 9 |

+

"Now that we've built a powerful LLM-based classifier, let's showcase it to the world (or your colleagues) by creating an interactive demo. In this chapter, we'll learn how to:\n",

|

| 10 |

+

"\n",

|

| 11 |

+

"1. Create a user-friendly web interface using Gradio\n",

|

| 12 |

+

"2. Package our demo for deployment\n",

|

| 13 |

+

"3. Deploy it on Hugging Face Spaces for free\n",

|

| 14 |

+

"4. Use the Hugging Face Inference API for model access"

|

| 15 |

+

]

|

| 16 |

+

},

|

| 17 |

+

{

|

| 18 |

+

"cell_type": "markdown",

|

| 19 |

+

"metadata": {},

|

| 20 |

+

"source": [

|

| 21 |

+

"### What we will do\n",

|

| 22 |

+

"\n",

|

| 23 |

+

"We will essentially start from [the functional notebook](ch9-improving-prompts.ipynb) we created in Chapter 9, and add an interactive component to it.\n",

|

| 24 |

+

"\n",

|

| 25 |

+

"1. **Add Gradio**\n",

|

| 26 |

+

"\n",

|

| 27 |

+

"Gradio is a Python library that allows you to easily create web-based interfaces where users can interact with your model. We will install **Gradio** to set up the interface for our model (it will be included in the requirements file — more on that below).\n",

|

| 28 |

+

"\n",

|

| 29 |

+

" ```python\n",

|

| 30 |

+

" import gradio as gr\n",

|

| 31 |

+

" ```\n",

|

| 32 |

+

"\n",

|

| 33 |

+

"2. **Add an interface function that will call what we already coded**\n",

|

| 34 |

+

"\n",

|

| 35 |

+

"Here we will define the interface function that connects Gradio to the model we built earlier. This function will take input from the user, process it with the classifier, and return the result.\n",

|

| 36 |

+

"\n",

|

| 37 |

+

"```python\n",

|

| 38 |

+

"# -- Gradio interface function --\n",

|

| 39 |

+

"def classify_business_names(input_text):\n",

|

| 40 |

+

"    # Parse input text into list of names\n",

|

| 41 |

+

"    name_list = [line.strip() for line in input_text.splitlines() if line.strip()]\n",

|

| 42 |

+

"\n",

|

| 43 |

+

"    if not name_list:\n",

|

| 44 |

+

"        return json.dumps({\"error\": \"No business names provided. Please enter at least one business name.\"})\n",

|

| 45 |

+

"\n",

|

| 46 |

+

"    try:\n",

|

| 47 |

+

"        result = classify_payees(name_list)\n",

|

| 48 |

+

"        return json.dumps(result, indent=2)\n",

|

| 49 |

+

"    except Exception as e:\n",

|

| 50 |

+

"        return json.dumps({\"error\": f\"Classification failed: {str(e)}\"})\n",

|

| 51 |

+

"```\n",

|

| 52 |

+

"\n",

|

| 53 |

+

"3. **Launch the Gradio interface**\n",

|

| 54 |

+

" \n",

|

| 55 |

+

"```python\n",

|

| 56 |

+

"# -- Launch the demo --\n",

|

| 57 |

+

"demo = gr.Interface(\n",

|

| 58 |

+

"    fn=classify_business_names,\n",

|

| 59 |

+

"    inputs=gr.Textbox(lines=10, placeholder=\"Enter business names, one per line\"),\n",

|

| 60 |

+

"    outputs=\"json\",\n",

|

| 61 |

+

"    title=\"Business Category Classifier\",\n",

|

| 62 |

+

"    description=\"Enter business names and get a classification: Restaurant, Bar, Hotel, or Other.\"\n",

|

| 63 |

+

")\n",

|

| 64 |

+

"\n",

|

| 65 |

+

"demo.launch(share=True)\n",

|

| 66 |

+

"```"

|

| 67 |

+

]

|

| 68 |

+

},

|

| 69 |

+

{

|

| 70 |

+

"cell_type": "markdown",

|

| 71 |

+

"metadata": {},

|

| 72 |

+

"source": [

|

| 73 |

+

"## 🌍 Publish your demo to Hugging Face Spaces\n",

|

| 74 |

+

"\n",

|

| 75 |

+

"To share your Gradio app with the world, you can deploy it to [Hugging Face Spaces](https://huggingface.co/spaces) in just a few steps.\n",

|

| 76 |

+

"\n",

|

| 77 |

+

"### 1. Prepare your files\n",

|

| 78 |

+

"\n",

|

| 79 |

+

"Make sure your project has:\n",

|

| 80 |

+

"- An `app.py` file containing your Gradio app (e.g. `gr.Interface(...)`)\n",

|

| 81 |

+

"- The `sample.csv` file for the few-shot classification\n",

|

| 82 |

+

"- A `requirements.txt` file listing any Python dependencies:\n",

|

| 83 |

+

"```\n",

|

| 84 |

+

"gradio\n",

|

| 85 |

+

"huggingface_hub\n",

|

| 86 |

+

"pandas\n",

|

| 87 |

+

"scikit-learn\n",

|

| 88 |

+

"retry\n",

|

| 89 |

+

"rich\n",

|

| 90 |

+

"```\n",

|

| 91 |

+

"\n",

|

| 92 |

+

"> **Example files are ready to use in the [gradio-app](gradio-app) folder!**\n",

|

| 93 |

+

"\n",

|

| 94 |

+

"### 2. Create a new Space\n",

|

| 95 |

+

"\n",

|

| 96 |

+

"1. Go to [huggingface.co/spaces](https://huggingface.co/spaces)\n",

|

| 97 |

+

"2. Click **\"Create new Space\"**\n",

|

| 98 |

+

"3. Choose:\n",

|

| 99 |

+

" - **SDK**: Gradio\n",

|

| 100 |

+

" - **License**: (choose one, e.g. MIT)\n",

|

| 101 |

+

" - **Visibility**: Public or Private\n",

|

| 102 |

+

"4. Name your Space and click **Create Space**\n",

|

| 103 |

+

"\n",

|

| 104 |

+

"### 3. Upload your files\n",

|

| 105 |

+

"\n",

|

| 106 |

+

"You can:\n",

|

| 107 |

+

"- Use the web interface to upload `app.py`, `sample.csv` and `requirements.txt`, or\n",

|

| 108 |

+

"- Clone the Space repo with Git and push your files:\n",

|

| 109 |

+

"```bash\n",

|

| 110 |

+

"git lfs install\n",

|

| 111 |

+

"git clone https://huggingface.co/spaces/YOUR_USERNAME/YOUR_SPACE_NAME\n",

|

| 112 |

+

"cd YOUR_SPACE_NAME\n",

|

| 113 |

+

"# Add your files here\n",

|

| 114 |

+

"git add .\n",

|

| 115 |

+

"git commit -m \"Initial commit\"\n",

|

| 116 |

+

"git push\n",

|

| 117 |

+

"```\n",

|

| 118 |

+

"### 4. Add your Hugging Face token to Secrets\n",

|

| 119 |

+

"\n",

|

| 120 |

+

"For your Gradio app to interact with Hugging Face’s Inference API (or any other Hugging Face service), you need to securely store your Hugging Face token.\n",

|

| 121 |

+

"\n",

|

| 122 |

+

"1. In your Hugging Face Space:\n",

|

| 123 |

+

" - Navigate to the **Settings** of your Space.\n",

|

| 124 |

+

" - Go to the **Secrets** tab.\n",

|

| 125 |

+

" - Add your token as a new secret:\n",

|

| 126 |

+

" - **Key**: `HF_TOKEN`\n",

|

| 127 |

+

" - **Value**: Your Hugging Face token, which you can get from [here](https://huggingface.co/settings/tokens).\n",

|

| 128 |

+

"\n",

|

| 129 |

+

"Once added, the token will be accessible in your Space, and you can securely reference it in your code with:\n",

|

| 130 |

+

"\n",

|

| 131 |

+

"```python\n",

|

| 132 |

+

"api_key = os.getenv(\"HF_TOKEN\")\n",

|

| 133 |

+

"client = InferenceClient(token=api_key)\n",

|

| 134 |

+

"```\n",

|

| 135 |

+

"\n",

|

| 136 |

+

"### 5. Done 🎉\n",

|

| 137 |

+

"\n",

|

| 138 |

+

"Your app will build and be available at:\n",

|

| 139 |

+

"```\n",

|

| 140 |

+

"https://huggingface.co/spaces/YOUR_USERNAME/YOUR_SPACE_NAME\n",

|

| 141 |

+

"```\n",

|

| 142 |

+

"\n",

|

| 143 |

+

"Need inspiration? Check out [awesome Spaces](https://huggingface.co/spaces?sort=trending)!\n"

|

| 144 |

+

]

|

| 145 |

+

}

|

| 146 |

+

],

|

| 147 |

+

"metadata": {

|

| 148 |

+

"kernelspec": {

|

| 149 |

+

"display_name": "Python 3 (ipykernel)",

|

| 150 |

+

"language": "python",

|

| 151 |

+

"name": "python3"

|

| 152 |

+

},

|

| 153 |

+

"language_info": {

|

| 154 |

+

"codemirror_mode": {

|

| 155 |

+

"name": "ipython",

|

| 156 |

+

"version": 3

|

| 157 |

+

},

|

| 158 |

+

"file_extension": ".py",

|

| 159 |

+

"mimetype": "text/x-python",

|

| 160 |

+

"name": "python",

|

| 161 |

+

"nbconvert_exporter": "python",

|

| 162 |

+

"pygments_lexer": "ipython3",

|

| 163 |

+

"version": "3.9.5"

|

| 164 |

+

}

|

| 165 |

+

},

|

| 166 |

+

"nbformat": 4,

|

| 167 |

+

"nbformat_minor": 4

|

| 168 |

+

}

|
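
The interface function added in `ch10-sharing-with-gradio.ipynb` can be exercised without Gradio or a hosted model. The sketch below is an illustration under stated assumptions, not the notebook's code: `classify_payees` here is a hypothetical keyword stub standing in for the LLM-backed function from Chapter 9, whose real signature and return shape are not shown in this diff, and the keyword table is invented for the example.

```python
import json


def classify_payees(name_list):
    """Hypothetical stand-in for the Chapter 9 classifier (assumption).

    The real function prompts an LLM; this stub uses keyword matching so
    the wrapper below can be tried offline.
    """
    keywords = {"grill": "Restaurant", "tavern": "Bar", "inn": "Hotel"}
    results = {}
    for name in name_list:
        label = "Other"
        for word, category in keywords.items():
            if word in name.lower():
                label = category
                break
        results[name] = label
    return results


def classify_business_names(input_text):
    """Turn a multi-line text box value into a JSON string of labels."""
    # One payee per line; trim whitespace and skip blank lines.
    name_list = [line.strip() for line in input_text.splitlines() if line.strip()]
    if not name_list:
        return json.dumps({"error": "No business names provided."})
    try:
        return json.dumps(classify_payees(name_list), indent=2)
    except Exception as e:
        # Report the failure to the caller instead of raising.
        return json.dumps({"error": f"Classification failed: {e}"})


if __name__ == "__main__":
    print(classify_business_names("Joe's Grill\n\n  Harbor Inn  \nACME Printing"))
```

Returning an error object rather than raising keeps the demo responsive when the input is empty or the classifier fails, which is likely the reason the notebook's version wraps the call in `try`/`except`.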

notebooks/ch2-the-LLM-advantage.ipynb

ADDED

|

@@ -0,0 +1,45 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "48d73cd4",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"## 2. The LLM advantage\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"A [large-language model](https://en.wikipedia.org/wiki/Large_language_model) is an artificial intelligence system capable of understanding and generating human language due to its extensive training on vast amounts of text. These systems are commonly referred to by the acronym LLM. The most prominent examples include OpenAI’s ChatGPT, Google’s Gemini and Anthropic’s Claude, but there are many others, including several open-source options.\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"While they are most famous for their ability to converse with humans as chatbots, LLMs can perform a wide range of language processing tasks, including text classification, summarization and translation.\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"Unlike traditional machine-learning models, LLMs do not require users to provide pre-prepared training data to perform a specific task. Instead, LLMs can be prompted with a broad description of their goals and a few examples of rules they should follow. The LLMs will then generate responses informed by the massive amount of information they contain. That deep knowledge can be especially beneficial when dealing with large and diverse datasets that are difficult for humans to process on their own. This advancement is recognized as a landmark achievement in the development of artificial intelligence.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"[](https://www.wired.com/story/eight-google-employees-invented-modern-ai-transformers-paper/)\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"LLMs also do not require the user to understand machine-learning concepts, like vectorization or Bayesian statistics, or to write complex code to train and evaluate the model. Instead, users can submit prompts in plain language, which the model will use to generate responses. This makes it easier for journalists to experiment with different approaches and quickly iterate on their work.\n",

|

| 19 |

+

"\n",

|

| 20 |

+

"**[3. Getting started with Hugging Face →](ch3-getting-started-with-hf.ipynb)**"

|

| 21 |

+

]

|

| 22 |

+

}

|

| 23 |

+

],

|

| 24 |

+

"metadata": {

|

| 25 |

+

"kernelspec": {

|

| 26 |

+

"display_name": "Python 3 (ipykernel)",

|

| 27 |

+

"language": "python",

|

| 28 |

+

"name": "python3"

|

| 29 |

+

},

|

| 30 |

+

"language_info": {

|

| 31 |

+

"codemirror_mode": {

|

| 32 |

+

"name": "ipython",

|

| 33 |

+

"version": 3

|

| 34 |

+

},

|

| 35 |

+

"file_extension": ".py",

|

| 36 |

+

"mimetype": "text/x-python",

|

| 37 |

+

"name": "python",

|

| 38 |

+

"nbconvert_exporter": "python",

|

| 39 |

+

"pygments_lexer": "ipython3",

|

| 40 |

+

"version": "3.9.5"

|

| 41 |

+

}

|

| 42 |

+

},

|

| 43 |

+

"nbformat": 4,

|

| 44 |

+

"nbformat_minor": 5

|

| 45 |

+

}

|

notebooks/ch3-getting-started-with-hf.ipynb

ADDED

|

@@ -0,0 +1,63 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "c2c4bff1",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"## 3. Getting started with Hugging Face\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"In addition to the commercial chatbots that draw the most media attention, there are many other ways to access large-language models — including free and open-source options that you can run directly in the cloud using Hugging Face.\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"For this demonstration, we will use the [Hugging Face Serverless Inference API](https://huggingface.co/docs/api-inference/en/index), which offers free access to a wide range of powerful language models. It’s fast, beginner-friendly, and widely supported in the AI community. The skills you learn here will transfer easily to other platforms as well.\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"To get started, go to [huggingface.co](https://huggingface.co/). Click on **Sign Up** to create an account or **Log In** at the top right.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"[](https://huggingface.co/)\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"Once you’re logged in, navigate to your profile dropdown and select **Settings**, then [**Access Tokens**](https://huggingface.co/settings/tokens). Click on **New token**, give it a name (we recommend `first-llm-classifier`), set the role to **Fine-Grained**, select the following options and hit **Generate**.\n",

|

| 19 |

+

"\n",

|

| 20 |

+

"[](https://huggingface.co/)\n",

|

| 21 |

+

"\n",

|

| 22 |

+

"Copy the token that appears — you'll only see it once — and store it somewhere safe. You’ll use it to authenticate your Python scripts when making requests to Hugging Face's APIs.\n",

|

| 23 |

+

"\n",

|

| 24 |

+

"You can now access any public model using the Hugging Face Inference API — no deployment required. For example, visit the [Llama 3.3 70B Instruct model page](https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct), click **Deploy**, then go to the **Inference Providers** tab, and select **HF Inference API**. This gives you instant access to the model via a hosted endpoint maintained by Hugging Face.\n",

|

| 25 |

+

"\n",

|

| 26 |

+

"[](https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct)\n",

|

| 27 |

+

"\n",

|

| 28 |

+

"This approach is ideal if you want to quickly try out models without spinning up your own infrastructure. Many models are available with generous free-tier access.\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"**[4. Installing JupyterLab →](ch4-installing-jupyterlab.ipynb)**"

|

| 31 |

+

]

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"cell_type": "code",

|

| 35 |

+

"execution_count": null,

|

| 36 |

+

"id": "6f8b3428-2d43-4691-82b4-085341c8a1d2",

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [],

|

| 39 |

+

"source": []

|

| 40 |

+

}

|

| 41 |

+

],

|

| 42 |

+

"metadata": {

|

| 43 |

+

"kernelspec": {

|

| 44 |

+

"display_name": "Python 3 (ipykernel)",

|

| 45 |

+

"language": "python",

|

| 46 |

+

"name": "python3"

|

| 47 |

+

},

|

| 48 |

+

"language_info": {

|

| 49 |

+

"codemirror_mode": {

|

| 50 |

+

"name": "ipython",

|

| 51 |

+

"version": 3

|

| 52 |

+

},

|

| 53 |

+

"file_extension": ".py",

|

| 54 |

+

"mimetype": "text/x-python",

|

| 55 |

+

"name": "python",

|

| 56 |

+

"nbconvert_exporter": "python",

|

| 57 |

+

"pygments_lexer": "ipython3",

|

| 58 |

+

"version": "3.9.5"

|

| 59 |

+

}

|

| 60 |

+

},

|

| 61 |

+

"nbformat": 4,

|

| 62 |

+

"nbformat_minor": 5

|

| 63 |

+

}

|
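
`ch3-getting-started-with-hf.ipynb` says the access token authenticates Python scripts but stops before showing one. The following is a minimal sketch of how that might look, assuming the token is stored in an `HF_TOKEN` environment variable (the key name used for the Space secret in Chapter 10) and that the `huggingface_hub` package is installed. The helper names `load_token`, `build_messages` and `ask` are hypothetical, and whether the Llama model named in the chapter is served on the free tier for a given account is not guaranteed.

```python
import os


def load_token(env=None):
    """Read the access token from the HF_TOKEN environment variable."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError("Set HF_TOKEN to your Hugging Face access token.")
    return token


def build_messages(prompt):
    """Wrap a plain-text prompt in the chat message format."""
    return [{"role": "user", "content": prompt}]


def ask(prompt, model="meta-llama/Llama-3.3-70B-Instruct"):
    """Send one prompt to a hosted model and return the reply text."""
    # Imported lazily so the helpers above work without the library installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(token=load_token())
    response = client.chat_completion(
        messages=build_messages(prompt), model=model, max_tokens=100
    )
    return response.choices[0].message.content
```

Calling `ask("Explain what a campaign expenditure is in one sentence.")` needs network access and a valid token. Keeping the token in the environment rather than in the notebook avoids committing it alongside the code.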

notebooks/ch4-installing-jupyterlab.ipynb

ADDED

|

@@ -0,0 +1,142 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "26b5a248",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"## 4. Installing JupyterLab\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"> ⚠️ Note: This step is optional. We’ll be running all code directly in JupyterLab on Hugging Face. Follow this step only if you prefer to run the code on your local machine—otherwise, you can skip to the next step.\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"This class will show you how to interact with the Hugging Face API using the Python computer programming language.\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"If you want to run it on your computer, you can write Python code in your terminal, in a text file and any number of other places. If you’re a skilled programmer who already has a preferred venue for coding, feel free to use it as you work through this class.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"If you’re not, the tool we recommend for beginners is [Project Jupyter](http://jupyter.org/), a browser-based interface where you can write, run, remix, and republish code.\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"It is free software that anyone can install and run. It is used by [scientists](http://nbviewer.jupyter.org/github/robertodealmeida/notebooks/blob/master/earth_day_data_challenge/Analyzing%20whale%20tracks.ipynb), [scholars](http://nbviewer.jupyter.org/github/nealcaren/workshop_2014/blob/master/notebooks/5_Times_API.ipynb), [investors](https://github.com/rsvp/fecon235/blob/master/nb/fred-debt-pop.ipynb), and corporations to create and share their research. It is also used by journalists to develop stories and show their work.\n",

|

| 19 |

+

"\n",

|

| 20 |

+

"The easiest way to use it is by installing [JupyterLab Desktop](https://github.com/jupyterlab/jupyterlab-desktop), a self-contained application that provides a ready-to-use Python environment with several popular libraries bundled in. \n",

|

| 21 |

+

"It can be installed on any operating system with a simple point-and-click interface."

|

| 22 |

+

]

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"cell_type": "code",

|

| 26 |

+

"execution_count": 2,

|

| 27 |

+

"id": "c97c32a7-0497-4231-a628-647afdaac68b",

|

| 28 |

+

"metadata": {},

|

| 29 |

+

"outputs": [

|

| 30 |

+

{

|

| 31 |

+

"data": {

|

| 32 |

+

"text/html": [

|

| 33 |

+

"\n",

|

| 34 |

+

"<div style=\"position: relative; padding-bottom: 56.25%; height: 0; overflow: hidden; max-width: 100%;\">\n",

|

| 35 |

+

" <iframe \n",

|

| 36 |

+

" src=\"https://www.youtube.com/embed/578B63wZ7rI\" \n",

|

| 37 |

+

" style=\"position: absolute; top: 0; left: 0; width: 100%; height: 100%;\" \n",

|

| 38 |

+

" frameborder=\"0\" \n",

|

| 39 |

+

" allowfullscreen>\n",

|

| 40 |

+

" </iframe>\n",

|

| 41 |

+

"</div>\n"

|

| 42 |

+

],

|

| 43 |

+

"text/plain": [

|

| 44 |

+

"<IPython.core.display.HTML object>"

|

| 45 |

+

]

|

| 46 |

+

},

|

| 47 |

+

"execution_count": 2,

|

| 48 |

+

"metadata": {},

|

| 49 |

+

"output_type": "execute_result"

|

| 50 |

+

}

|

| 51 |

+

],

|

| 52 |

+

"source": [

|

| 53 |

+

"from IPython.display import HTML\n",

|

| 54 |

+

"\n",

|

| 55 |

+

"HTML(\"\"\"\n",

|

| 56 |

+

"<div style=\"position: relative; padding-bottom: 56.25%; height: 0; overflow: hidden; max-width: 100%;\">\n",

|

| 57 |

+

" <iframe \n",

|

| 58 |

+

" src=\"https://www.youtube.com/embed/578B63wZ7rI\" \n",

|

| 59 |

+

" style=\"position: absolute; top: 0; left: 0; width: 100%; height: 100%;\" \n",

|

| 60 |

+

" frameborder=\"0\" \n",

|

| 61 |

+

" allowfullscreen>\n",

|

| 62 |

+

" </iframe>\n",

|

| 63 |

+

"</div>\n",

|

| 64 |

+

"\"\"\")"

|

| 65 |

+

]

|

| 66 |

+

},

|

| 67 |

+

{

|

| 68 |

+

"cell_type": "markdown",

|

| 69 |

+

"id": "d5decd99-55a2-4a56-b88f-1930a6245203",

|

| 70 |

+

"metadata": {},

|

| 71 |

+

"source": [

|

| 72 |

+

"The first step is to visit [JupyterLab Desktop’s homepage on GitHub](https://github.com/jupyterlab/jupyterlab-desktop) in your web browser. \n",

|

| 73 |

+

"\n",

|

| 74 |

+

"\n",

|

| 75 |

+

"\n",

|

| 76 |

+

"Scroll down to the documentation below the code until you reach the [Installation](https://github.com/jupyterlab/jupyterlab-desktop) section. \n",

|

| 77 |

+

"\n",

|

| 78 |

+

"\n",

|

| 79 |

+

"\n",

|

| 80 |

+

"Then pick the link appropriate for your operating system. The installation file is large, so the download might take a while.\n",

|

| 81 |

+

"\n",

|

| 82 |

+

"Find the file in your downloads directory and double-click it to begin the installation process. \n",

|

| 83 |

+

"\n",

|

| 84 |

+

"Follow the instructions presented by the pop-up windows, sticking to the default options.\n",

|

| 85 |

+

"\n",

|

| 86 |

+

"> ⚠️ **Warning** \n",

|

| 87 |

+

"> Your computer’s operating system might flag the JupyterLab Desktop installer as an unverified or insecure application. Don’t worry. The tool has been vetted by Project Jupyter’s core developers and it’s safe to use. \n",

|

| 88 |

+

"> If your system is blocking you from installing the tool, you’ll likely need to work around its barriers. For instance, on macOS, this might require [visiting your system’s security settings](https://www.wikihow.com/Install-Software-from-Unsigned-Developers-on-a-Mac) to allow the installation.\n",

|

| 89 |

+

"\n",

|

| 90 |

+

"Once JupyterLab Desktop is installed, you can accept the installation wizard’s offer to immediately open the program, or you can search for “Jupyter Lab” in your operating system’s application finder.\n",

|

| 91 |

+

"\n",

|

| 92 |

+

"That will open up a new window that looks something like this:\n",

|

| 93 |

+

"\n",

|

| 94 |

+

"\n",

|

| 95 |

+

"\n",

|

| 96 |

+

"> ⚠️ **Warning** \n",

|

| 97 |

+

"> If you see a warning bar at the bottom of the screen that says you need to install Python, click the link provided to make that happen.\n",

|

| 98 |

+

"\n",

|

| 99 |

+

"Click the “New notebook…” button to open the Python interface.\n",

|

| 100 |

+

"\n",

|

| 101 |

+

"\n",

|

| 102 |

+

"\n",

|

| 103 |

+

"Welcome to your first Jupyter notebook. Now you’re ready to move on to writing code.\n",

|

| 104 |

+

"\n",

|

| 105 |

+

"> 💡 **Note** \n",

|

| 106 |

+

"> If you’re struggling to make Jupyter work and need help with the basics, \n",

|

| 107 |

+

"> we recommend you check out [“First Python Notebook”](https://palewi.re/docs/first-python-notebook/), where you can get up to speed.\n",

|

| 108 |

+

"\n",

|

| 109 |

+

"**[5. Prompting with Python →](ch5-prompting-with-python.ipynb)**"

|

| 110 |

+

]

|

| 111 |

+

},

|

| 112 |

+

{

|

| 113 |

+

"cell_type": "code",

|

| 114 |

+

"execution_count": null,

|

| 115 |

+

"id": "cca91757-438f-4a5f-b3d5-2bdd774278de",

|

| 116 |

+

"metadata": {},

|

| 117 |

+

"outputs": [],

|

| 118 |

+

"source": []

|

| 119 |

+

}

|

| 120 |

+

],

|

| 121 |

+

"metadata": {

|

| 122 |

+

"kernelspec": {

|

| 123 |

+

"display_name": "Python 3 (ipykernel)",

|

| 124 |

+

"language": "python",

|

| 125 |

+

"name": "python3"

|

| 126 |

+

},

|

| 127 |

+

"language_info": {

|

| 128 |

+

"codemirror_mode": {

|

| 129 |

+

"name": "ipython",

|

| 130 |

+

"version": 3

|

| 131 |

+

},

|

| 132 |

+

"file_extension": ".py",

|

| 133 |

+

"mimetype": "text/x-python",

|

| 134 |

+

"name": "python",

|

| 135 |

+

"nbconvert_exporter": "python",

|

| 136 |

+

"pygments_lexer": "ipython3",

|

| 137 |

+

"version": "3.9.5"

|

| 138 |

+

}

|

| 139 |

+

},

|

| 140 |

+

"nbformat": 4,

|

| 141 |

+

"nbformat_minor": 5

|

| 142 |

+

}

|

notebooks/ch5-prompting-with-python.ipynb

ADDED

|

@@ -0,0 +1,536 @@

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "84dd193e",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"## 5. Prompting with Python"

|

| 9 |

+

]

|

| 10 |

+

},

|

| 11 |

+

{

|

| 12 |

+

"cell_type": "markdown",

|

| 13 |

+

"id": "862b8218",

|

| 14 |

+

"metadata": {},

|

| 15 |

+

"source": [

|

| 16 |

+

"First, we’ll install the libraries we need. The `huggingface_hub` package is the official client for Hugging Face’s API. The `rich` and `ipywidgets` packages are helper libraries that will improve how your outputs look in Jupyter notebooks."

|

| 17 |

+

]

|

| 18 |

+

},

|

| 19 |

+

{

|

| 20 |

+

"cell_type": "markdown",

|

| 21 |

+

"id": "c09f0b15",

|

| 22 |

+

"metadata": {},

|

| 23 |

+

"source": [

|

| 24 |

+

"A common way to install packages from inside your JupyterLab Desktop notebook is to use the `%pip` command. Select the cell below, then hit the play button in the top toolbar."

|

| 25 |

+

]

|

| 26 |

+

},

|

| 27 |

+

{

|

| 28 |

+

"cell_type": "code",

|

| 29 |

+

"execution_count": null,

|

| 30 |

+

"id": "e728c5fe",

|

| 31 |

+

"metadata": {

|

| 32 |

+

"scrolled": true

|

| 33 |

+

},

|

| 34 |

+

"outputs": [],

|

| 35 |

+

"source": [

|

| 36 |

+

"%pip install rich ipywidgets huggingface_hub"

|

| 37 |

+

]

|

| 38 |

+

},

|

| 39 |

+

{

|

| 40 |

+

"cell_type": "markdown",

|

| 41 |

+

"id": "79f96b1a",

|

| 42 |

+

"metadata": {},

|

| 43 |

+

"source": [

|

| 44 |

+

"If the `%pip` command doesn’t work on your computer, try substituting the `!pip` command instead. Or you can install the packages from the command line on your computer and restart your notebook."

|

| 45 |

+

]

|

| 46 |

+

},

|

| 47 |

+

{

|

| 48 |

+

"cell_type": "markdown",

|

| 49 |

+

"id": "75f1366a",

|

| 50 |

+

"metadata": {},

|

| 51 |

+

"source": [

|

| 52 |

+

"Now let's import them in the cell that appears below the installation output. Hit play again."

|

| 53 |

+

]

|

| 54 |

+

},

|

| 55 |

+

{

|

| 56 |

+

"cell_type": "code",

|

| 57 |

+

"execution_count": 2,

|

| 58 |

+

"id": "8013a72c-670e-48ab-8619-99a337fd5392",

|

| 59 |

+

"metadata": {},

|

| 60 |

+

"outputs": [],

|

| 61 |

+

"source": [

|

| 62 |

+

"import os\n",

|

| 63 |

+

"from rich import print\n",

|

| 64 |

+