+ DAS3R: Dynamics-Aware Gaussian Splatting for Static Scene Reconstruction

+

+

+

+

+

+

+

+

+

+

+

+

+

+* Official demo of [DAS3R: Dynamics-Aware Gaussian Splatting for Static Scene Reconstruction](https://kai422.github.io/DAS3R/).

+* You can explore the sample results by clicking the sequence names at the bottom of the page.

+* Due to GPU memory and time constraints, the number of processed frames is capped at 20 and the number of GS training iterations is capped at 2000. When more than 20 input frames are provided, frames are sampled uniformly.

+* This Gradio demo is built upon InstantSplat, which can be found at [https://huggingface.co/spaces/kairunwen/InstantSplat](https://huggingface.co/spaces/kairunwen/InstantSplat).

+

+'''

+

+block = gr.Blocks().queue()

+with block:

+ with gr.Row():

+ with gr.Column(scale=1):

+ # gr.Markdown('# ' + _TITLE)

+ gr.Markdown(_DESCRIPTION)

+

+ with gr.Row(variant='panel'):

+ with gr.Tab("Input"):

+ inputfiles = gr.File(file_count="multiple", label="images")

+ input_path = gr.Textbox(visible=False, label="example_path")

+ button_gen = gr.Button("RUN")

+

+ with gr.Row(variant='panel'):

+ with gr.Tab("Output"):

+ with gr.Column(scale=2):

+ with gr.Group():

+ output_model = gr.Model3D(

+ label="3D dense model in Gaussian Splat format (may take extra time to visualize)",

+ interactive=False,

+ camera_position=[0.5, 0.5, 1], # offset slightly for a better view of the model

+ )

+ gr.Markdown(

+ """

+

+ Use the left mouse button to rotate, the scroll wheel to zoom, and the right mouse button to move.

+

+ """

+ )

+ output_file = gr.File(label="ply")

+ with gr.Column(scale=1):

+ output_video = gr.Video(label="video")

+

+ button_gen.click(process, inputs=[inputfiles], outputs=[output_video, output_file, output_model])

+

+ gr.Examples(

+ examples=[

+ "davis-dog",

+ # "sintel-market_2",

+ ],

+ inputs=[input_path],

+ outputs=[output_video, output_file, output_model],

+ fn=lambda x: process(inputfiles=None, input_path=x),

+ cache_examples=True,

+ label='Sparse-view Examples'

+ )

+block.launch(server_name="0.0.0.0", share=False)

\ No newline at end of file
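
The demo description in app.py states that inputs are uniformly sampled down to 20 frames, but the sampling code is not part of this hunk. A minimal sketch of one way to do this follows. The function name `sample_uniform`, the constant `MAX_FRAMES`, and the choice to sort by file name are assumptions for illustration, not the repository's implementation.

```python
MAX_FRAMES = 20  # cap quoted in the demo description


def sample_uniform(paths, max_frames=MAX_FRAMES):
    """Return at most max_frames paths, evenly spaced over the sorted input.

    Hypothetical helper: the real demo may order or select frames differently.
    """
    if max_frames < 1:
        raise ValueError("max_frames must be at least 1")
    paths = sorted(paths)  # assumption: frame order follows file-name order
    n = len(paths)
    if n <= max_frames:
        return paths
    if max_frames == 1:
        return [paths[0]]
    # Evenly spaced indices that always include the first and last frame.
    step = (n - 1) / (max_frames - 1)
    indices = [round(i * step) for i in range(max_frames)]
    return [paths[i] for i in indices]
```

For the 60-frame davis-dog example this keeps 20 frames, including the first and the last one. Shorter sequences pass through unchanged.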

diff --git a/app_wrapper.py b/app_wrapper.py

new file mode 100644

index 0000000000000000000000000000000000000000..be1af7756d476cbd12c07cf0c901bb1efaab1dd7

--- /dev/null

+++ b/app_wrapper.py

@@ -0,0 +1,19 @@

+import gradio as gr

+import os

+# import spaces

+

+hf_token = os.getenv("instantsplat_token")

+

+# gr.load("kairunwen/tmp", hf_token=token, src="spaces").launch()

+

+

+

+import shlex

+import subprocess

+

+from huggingface_hub import HfApi

+

+api = HfApi()

+api.snapshot_download(repo_id="kairunwen/tmp", repo_type="space", local_dir=".", token=hf_token)

+subprocess.run(shlex.split("pip install -r requirements.txt"))

+subprocess.run(shlex.split("python app.py"))
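
The wrapper above runs `pip install` and then `python app.py` without checking exit codes, so a failed install would still be followed by a launch attempt. A hedged sketch of a stricter variant is shown here. The helper name `run_step` is hypothetical, and whether failing fast is desirable for this Space is a judgment call.

```python
import shlex
import subprocess


def run_step(command: str) -> None:
    """Run one shell-style command and raise if it exits with a non-zero status.

    Hypothetical helper, not part of the repository.
    """
    args = shlex.split(command)
    # check=True makes subprocess.run raise CalledProcessError on failure.
    subprocess.run(args, check=True)


# Possible usage in the wrapper (not executed here):
# run_step("pip install -r requirements.txt")
# run_step("python app.py")
```

With this variant a broken requirements install stops the wrapper immediately instead of surfacing later as an import error inside app.py.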

diff --git a/arguments/__init__.py b/arguments/__init__.py

new file mode 100755

index 0000000000000000000000000000000000000000..9ee5acb1717345919959219c9fac89a31b5c8591

--- /dev/null

+++ b/arguments/__init__.py

@@ -0,0 +1,112 @@

+#

+# Copyright (C) 2023, Inria

+# GRAPHDECO research group, https://team.inria.fr/graphdeco

+# All rights reserved.

+#

+# This software is free for non-commercial, research and evaluation use

+# under the terms of the LICENSE.md file.

+#

+# For inquiries contact george.drettakis@inria.fr

+#

+

+from argparse import ArgumentParser, Namespace

+import sys

+import os

+

+class GroupParams:

+ pass

+

+class ParamGroup:

+ def __init__(self, parser: ArgumentParser, name : str, fill_none = False):

+ group = parser.add_argument_group(name)

+ for key, value in vars(self).items():

+ shorthand = False

+ if key.startswith("_"):

+ shorthand = True

+ key = key[1:]

+ t = type(value)

+ value = value if not fill_none else None

+ if shorthand:

+ if t == bool:

+ group.add_argument("--" + key, ("-" + key[0:1]), default=value, action="store_true")

+ else:

+ group.add_argument("--" + key, ("-" + key[0:1]), default=value, type=t)

+ else:

+ if t == bool:

+ group.add_argument("--" + key, default=value, action="store_true")

+ else:

+ group.add_argument("--" + key, default=value, type=t)

+

+ def extract(self, args):

+ group = GroupParams()

+ for arg in vars(args).items():

+ if arg[0] in vars(self) or ("_" + arg[0]) in vars(self):

+ setattr(group, arg[0], arg[1])

+ return group

+

+class ModelParams(ParamGroup):

+ def __init__(self, parser, sentinel=False):

+ self.sh_degree = 3

+ self._source_path = ""

+ self._model_path = ""

+ self._images = "images"

+ self._resolution = -1

+ self._white_background = False

+ self.data_device = "cuda"

+ self.eval = False

+ super().__init__(parser, "Loading Parameters", sentinel)

+

+ def extract(self, args):

+ g = super().extract(args)

+ g.source_path = os.path.abspath(g.source_path)

+ return g

+

+class PipelineParams(ParamGroup):

+ def __init__(self, parser):

+ self.convert_SHs_python = False

+ self.compute_cov3D_python = False

+ self.debug = False

+ super().__init__(parser, "Pipeline Parameters")

+

+class OptimizationParams(ParamGroup):

+ def __init__(self, parser):

+ self.iterations = 30_000

+ self.position_lr_init = 0.00016

+ self.position_lr_final = 0.0000016

+ self.position_lr_delay_mult = 0.01

+ self.position_lr_max_steps = 30_000

+ self.feature_lr = 0.0025

+ self.opacity_lr = 0.05

+ self.scaling_lr = 0.005

+ self.rotation_lr = 0.001

+ self.percent_dense = 0.01

+ self.lambda_dssim = 0.2

+ self.densification_interval = 100

+ self.opacity_reset_interval = 3000

+ self.densify_from_iter = 500

+ self.densify_until_iter = 15_000

+ self.densify_grad_threshold = 0.0002

+ self.random_background = False

+ super().__init__(parser, "Optimization Parameters")

+

+def get_combined_args(parser : ArgumentParser):

+ cmdline_string = sys.argv[1:]

+ cfgfile_string = "Namespace()"

+ args_cmdline = parser.parse_args(cmdline_string)

+

+ try:

+ cfgfilepath = os.path.join(args_cmdline.model_path, "cfg_args")

+ print("Looking for config file in", cfgfilepath)

+ with open(cfgfilepath) as cfg_file:

+ print("Config file found: {}".format(cfgfilepath))

+ cfgfile_string = cfg_file.read()

+ except TypeError:

+ print("Config file not found")

+ pass

+ args_cfgfile = eval(cfgfile_string)

+

+ merged_dict = vars(args_cfgfile).copy()

+ for k,v in vars(args_cmdline).items():

+ if v is not None:

+ merged_dict[k] = v

+ return Namespace(**merged_dict)
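
`get_combined_args` starts from the values stored in `cfg_args` and lets any command-line value that is not `None` override them. This only works if the parser defaults are `None`, which is what the `fill_none` / `sentinel` flag in `ParamGroup` arranges. The sketch below reproduces just that merge rule in isolation. The helper name `merge_args` and the example values are made up for illustration.

```python
from argparse import ArgumentParser, Namespace


def merge_args(cfg_args: Namespace, cmdline_args: Namespace) -> Namespace:
    """Overlay command-line values that are not None on top of saved config values."""
    merged = vars(cfg_args).copy()
    for key, value in vars(cmdline_args).items():
        if value is not None:
            merged[key] = value
    return Namespace(**merged)


# Parser with None defaults, mimicking ParamGroup(..., fill_none=True).
parser = ArgumentParser()
parser.add_argument("--model_path", default=None, type=str)
parser.add_argument("--iterations", default=None, type=int)
parser.add_argument("--sh_degree", default=None, type=int)

# Illustrative stand-in for the Namespace stored in a cfg_args file.
saved = Namespace(model_path="output/run1", iterations=30_000, sh_degree=3)
cmdline = parser.parse_args(["--iterations", "2000"])
merged = merge_args(saved, cmdline)
```

Here only `iterations` is taken from the command line. `model_path` and `sh_degree` keep their saved values because their command-line defaults are `None`.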

diff --git a/assets/example/davis-dog/00000.jpg b/assets/example/davis-dog/00000.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..a80ffa8856d21e0e0ce248aaa28f1d0c0b34e552

Binary files /dev/null and b/assets/example/davis-dog/00000.jpg differ

diff --git a/assets/example/davis-dog/00001.jpg b/assets/example/davis-dog/00001.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..b5043f31ee12e6d787058445ef84e610ac1c497f

Binary files /dev/null and b/assets/example/davis-dog/00001.jpg differ

diff --git a/assets/example/davis-dog/00002.jpg b/assets/example/davis-dog/00002.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..d903ae0d308b92af6fbfae6e3bd4a60eb59094d2

Binary files /dev/null and b/assets/example/davis-dog/00002.jpg differ

diff --git a/assets/example/davis-dog/00003.jpg b/assets/example/davis-dog/00003.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..3a03a653b661b3642cae3252d5cff8ce34d62424

Binary files /dev/null and b/assets/example/davis-dog/00003.jpg differ

diff --git a/assets/example/davis-dog/00004.jpg b/assets/example/davis-dog/00004.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..e070d2fd3521bb9faefbb3224b009a69924c0c7f

Binary files /dev/null and b/assets/example/davis-dog/00004.jpg differ

diff --git a/assets/example/davis-dog/00005.jpg b/assets/example/davis-dog/00005.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..1a3c068a167483ff572a9dfe594bec572f0716b9

Binary files /dev/null and b/assets/example/davis-dog/00005.jpg differ

diff --git a/assets/example/davis-dog/00006.jpg b/assets/example/davis-dog/00006.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..45d4cee6499a17d42e2cb802a072f7dcf56484d3

Binary files /dev/null and b/assets/example/davis-dog/00006.jpg differ

diff --git a/assets/example/davis-dog/00007.jpg b/assets/example/davis-dog/00007.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..2c55dcc000b853d186a6ee8248b0a209948b53b2

Binary files /dev/null and b/assets/example/davis-dog/00007.jpg differ

diff --git a/assets/example/davis-dog/00008.jpg b/assets/example/davis-dog/00008.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..426b5b7e7ad81995ba4c2d6de0f8929047cafc38

Binary files /dev/null and b/assets/example/davis-dog/00008.jpg differ

diff --git a/assets/example/davis-dog/00009.jpg b/assets/example/davis-dog/00009.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8d104333700949e4158ca6214124b66a5294a3e3

Binary files /dev/null and b/assets/example/davis-dog/00009.jpg differ

diff --git a/assets/example/davis-dog/00010.jpg b/assets/example/davis-dog/00010.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..28c61aeb441a87a6108037645f810aed1a8c3b7f

Binary files /dev/null and b/assets/example/davis-dog/00010.jpg differ

diff --git a/assets/example/davis-dog/00011.jpg b/assets/example/davis-dog/00011.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..c1d9c6d9b4219c86576f685534108943b85e6c72

Binary files /dev/null and b/assets/example/davis-dog/00011.jpg differ

diff --git a/assets/example/davis-dog/00012.jpg b/assets/example/davis-dog/00012.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..53a8905ae8238221eee9de488da84f85ae5e1149

Binary files /dev/null and b/assets/example/davis-dog/00012.jpg differ

diff --git a/assets/example/davis-dog/00013.jpg b/assets/example/davis-dog/00013.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..69a7808771ad9852a4fd7c917d8ead20252e6aa7

Binary files /dev/null and b/assets/example/davis-dog/00013.jpg differ

diff --git a/assets/example/davis-dog/00014.jpg b/assets/example/davis-dog/00014.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..faa0edeb2c7d8a48875679d94d8be54ff7cdf600

Binary files /dev/null and b/assets/example/davis-dog/00014.jpg differ

diff --git a/assets/example/davis-dog/00015.jpg b/assets/example/davis-dog/00015.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..4a01fcfed9eaa6896fa256cbd8e0f9b7a130a3ab

Binary files /dev/null and b/assets/example/davis-dog/00015.jpg differ

diff --git a/assets/example/davis-dog/00016.jpg b/assets/example/davis-dog/00016.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..95286db9843bd396b624fe03b5001d6a081fd4f4

Binary files /dev/null and b/assets/example/davis-dog/00016.jpg differ

diff --git a/assets/example/davis-dog/00017.jpg b/assets/example/davis-dog/00017.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..370e3c646003af019aecbd9c0fdb1409e573f6cf

Binary files /dev/null and b/assets/example/davis-dog/00017.jpg differ

diff --git a/assets/example/davis-dog/00018.jpg b/assets/example/davis-dog/00018.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..dc590cbba10f2351aad3ca13b749e632e258197e

Binary files /dev/null and b/assets/example/davis-dog/00018.jpg differ

diff --git a/assets/example/davis-dog/00019.jpg b/assets/example/davis-dog/00019.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..e8eddf5cbfda3c67793d700255200a7bcc1f06df

Binary files /dev/null and b/assets/example/davis-dog/00019.jpg differ

diff --git a/assets/example/davis-dog/00020.jpg b/assets/example/davis-dog/00020.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..fbc9a27dca64d96309a17b035e5ab1a9c02547b4

Binary files /dev/null and b/assets/example/davis-dog/00020.jpg differ

diff --git a/assets/example/davis-dog/00021.jpg b/assets/example/davis-dog/00021.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..016945e123b82ae86187e1494dfa31e420ba90ec

Binary files /dev/null and b/assets/example/davis-dog/00021.jpg differ

diff --git a/assets/example/davis-dog/00022.jpg b/assets/example/davis-dog/00022.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..def7fe8991fbcad609ba6b2efdd2aff5c40faa5c

Binary files /dev/null and b/assets/example/davis-dog/00022.jpg differ

diff --git a/assets/example/davis-dog/00023.jpg b/assets/example/davis-dog/00023.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8d2b892b347978cd66e231d0563a963858254b4b

Binary files /dev/null and b/assets/example/davis-dog/00023.jpg differ

diff --git a/assets/example/davis-dog/00024.jpg b/assets/example/davis-dog/00024.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..890d85970686785162e4d34080ef1c9bb2fb2a9b

Binary files /dev/null and b/assets/example/davis-dog/00024.jpg differ

diff --git a/assets/example/davis-dog/00025.jpg b/assets/example/davis-dog/00025.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..5f584e194a871a655b1c4f186cce3c9167d30caa

Binary files /dev/null and b/assets/example/davis-dog/00025.jpg differ

diff --git a/assets/example/davis-dog/00026.jpg b/assets/example/davis-dog/00026.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8d7767c35ed165fab73a6a0d3a94651f3135ef52

Binary files /dev/null and b/assets/example/davis-dog/00026.jpg differ

diff --git a/assets/example/davis-dog/00027.jpg b/assets/example/davis-dog/00027.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..f379d96ad082123aa91298e352488419d7d733b2

Binary files /dev/null and b/assets/example/davis-dog/00027.jpg differ

diff --git a/assets/example/davis-dog/00028.jpg b/assets/example/davis-dog/00028.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..4cbab43498888a3e7ec94dd47834ce667a0cf97b

Binary files /dev/null and b/assets/example/davis-dog/00028.jpg differ

diff --git a/assets/example/davis-dog/00029.jpg b/assets/example/davis-dog/00029.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..b76893a6827834da544fd0e828c8a4dc7231e723

Binary files /dev/null and b/assets/example/davis-dog/00029.jpg differ

diff --git a/assets/example/davis-dog/00030.jpg b/assets/example/davis-dog/00030.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..e15962042fc33ac86dc98876990ef9e0f9d7d969

Binary files /dev/null and b/assets/example/davis-dog/00030.jpg differ

diff --git a/assets/example/davis-dog/00031.jpg b/assets/example/davis-dog/00031.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..e193d3944dd1450bee86661db921d1442b853ca9

Binary files /dev/null and b/assets/example/davis-dog/00031.jpg differ

diff --git a/assets/example/davis-dog/00032.jpg b/assets/example/davis-dog/00032.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..9f796529e61eb479da9d93e0402394a890024556

Binary files /dev/null and b/assets/example/davis-dog/00032.jpg differ

diff --git a/assets/example/davis-dog/00033.jpg b/assets/example/davis-dog/00033.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..9cab6d2f908ef604db3196fd4448857eae8e9688

Binary files /dev/null and b/assets/example/davis-dog/00033.jpg differ

diff --git a/assets/example/davis-dog/00034.jpg b/assets/example/davis-dog/00034.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..61400eed0aea2429a7e4c60776d650836973c7a6

Binary files /dev/null and b/assets/example/davis-dog/00034.jpg differ

diff --git a/assets/example/davis-dog/00035.jpg b/assets/example/davis-dog/00035.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..d41e97096523f8cc7602cf2386a162773082b32e

Binary files /dev/null and b/assets/example/davis-dog/00035.jpg differ

diff --git a/assets/example/davis-dog/00036.jpg b/assets/example/davis-dog/00036.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..b9345f5dda0debc8405e30326c987451964fab3f

Binary files /dev/null and b/assets/example/davis-dog/00036.jpg differ

diff --git a/assets/example/davis-dog/00037.jpg b/assets/example/davis-dog/00037.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..995c87a63915a1ca50143e07460faa29afe6f5ff

Binary files /dev/null and b/assets/example/davis-dog/00037.jpg differ

diff --git a/assets/example/davis-dog/00038.jpg b/assets/example/davis-dog/00038.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..7701e865db962a9ec3ac4c2d60f536d88f15eea2

Binary files /dev/null and b/assets/example/davis-dog/00038.jpg differ

diff --git a/assets/example/davis-dog/00039.jpg b/assets/example/davis-dog/00039.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..fb2e8d88fefa5a844b69e0591c8f6f9d61967eaf

Binary files /dev/null and b/assets/example/davis-dog/00039.jpg differ

diff --git a/assets/example/davis-dog/00040.jpg b/assets/example/davis-dog/00040.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..887dda87b9613c14a1ccd47cfe7272baa44452b7

Binary files /dev/null and b/assets/example/davis-dog/00040.jpg differ

diff --git a/assets/example/davis-dog/00041.jpg b/assets/example/davis-dog/00041.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..977cfe7ac19254429dac1748f6441eca5eb6266d

Binary files /dev/null and b/assets/example/davis-dog/00041.jpg differ

diff --git a/assets/example/davis-dog/00042.jpg b/assets/example/davis-dog/00042.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..caff6b3feb8cba9a00e036a93b660bc6d28324c6

Binary files /dev/null and b/assets/example/davis-dog/00042.jpg differ

diff --git a/assets/example/davis-dog/00043.jpg b/assets/example/davis-dog/00043.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..0fc4f42cd2f4b68cc3e8cf75976ebac28b5727ef

Binary files /dev/null and b/assets/example/davis-dog/00043.jpg differ

diff --git a/assets/example/davis-dog/00044.jpg b/assets/example/davis-dog/00044.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..263d0053571571b501962f5cf62e61dbf9178a02

Binary files /dev/null and b/assets/example/davis-dog/00044.jpg differ

diff --git a/assets/example/davis-dog/00045.jpg b/assets/example/davis-dog/00045.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..85ca5a410828b249a508417e3f8a0c1d59904078

Binary files /dev/null and b/assets/example/davis-dog/00045.jpg differ

diff --git a/assets/example/davis-dog/00046.jpg b/assets/example/davis-dog/00046.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..40763b0aab3c771e858ec789d6e75057aebd4265

Binary files /dev/null and b/assets/example/davis-dog/00046.jpg differ

diff --git a/assets/example/davis-dog/00047.jpg b/assets/example/davis-dog/00047.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..3a89b557776fa616c3cae224f21a518759bd9338

Binary files /dev/null and b/assets/example/davis-dog/00047.jpg differ

diff --git a/assets/example/davis-dog/00048.jpg b/assets/example/davis-dog/00048.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8dd6700e13d3cdbdd7947756dc43595ce1e99ac5

Binary files /dev/null and b/assets/example/davis-dog/00048.jpg differ

diff --git a/assets/example/davis-dog/00049.jpg b/assets/example/davis-dog/00049.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..ed36dec3a55e73a5f1b5ccb2aa78ec690939c2a7

Binary files /dev/null and b/assets/example/davis-dog/00049.jpg differ

diff --git a/assets/example/davis-dog/00050.jpg b/assets/example/davis-dog/00050.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..a14bec357e4aeccc245abed522320efa3e33bc1f

Binary files /dev/null and b/assets/example/davis-dog/00050.jpg differ

diff --git a/assets/example/davis-dog/00051.jpg b/assets/example/davis-dog/00051.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..974ed8d6bad6a788578f370a1943a491c89c4f4b

Binary files /dev/null and b/assets/example/davis-dog/00051.jpg differ

diff --git a/assets/example/davis-dog/00052.jpg b/assets/example/davis-dog/00052.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..394e7b0416a74a1ee004e58193043c4b71447415

Binary files /dev/null and b/assets/example/davis-dog/00052.jpg differ

diff --git a/assets/example/davis-dog/00053.jpg b/assets/example/davis-dog/00053.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..7e2f0adc92b587a82cacd9d825ad992e204e9f95

Binary files /dev/null and b/assets/example/davis-dog/00053.jpg differ

diff --git a/assets/example/davis-dog/00054.jpg b/assets/example/davis-dog/00054.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..e3d517eb86ca001ceee5e40a63c6ea242942800c

Binary files /dev/null and b/assets/example/davis-dog/00054.jpg differ

diff --git a/assets/example/davis-dog/00055.jpg b/assets/example/davis-dog/00055.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8c7ead10f769c6cc83cfc5ccc86dd1b11eac8e06

Binary files /dev/null and b/assets/example/davis-dog/00055.jpg differ

diff --git a/assets/example/davis-dog/00056.jpg b/assets/example/davis-dog/00056.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..9fb21a9902b73e755e8f8f3efbc8dc8e4d4bb85c

Binary files /dev/null and b/assets/example/davis-dog/00056.jpg differ

diff --git a/assets/example/davis-dog/00057.jpg b/assets/example/davis-dog/00057.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..89deb5393c73c54f05d5b5ae6a625f1cd4ad48d7

Binary files /dev/null and b/assets/example/davis-dog/00057.jpg differ

diff --git a/assets/example/davis-dog/00058.jpg b/assets/example/davis-dog/00058.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..8f982159c7e295b3e2b4d1227036816efd53a125

Binary files /dev/null and b/assets/example/davis-dog/00058.jpg differ

diff --git a/assets/example/davis-dog/00059.jpg b/assets/example/davis-dog/00059.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..59b038929f2dfe78b35cf9de4acc4695d076e41c

Binary files /dev/null and b/assets/example/davis-dog/00059.jpg differ

diff --git a/assets/example/sintel-market_2/frame_0001.png b/assets/example/sintel-market_2/frame_0001.png

new file mode 100644

index 0000000000000000000000000000000000000000..cc417d1e038ee6ede1f4149297eb9aab7dd75a7c

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0001.png differ

diff --git a/assets/example/sintel-market_2/frame_0002.png b/assets/example/sintel-market_2/frame_0002.png

new file mode 100644

index 0000000000000000000000000000000000000000..9162dcb0d961b2d7e132f7389087bb6a82e4c83c

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0002.png differ

diff --git a/assets/example/sintel-market_2/frame_0003.png b/assets/example/sintel-market_2/frame_0003.png

new file mode 100644

index 0000000000000000000000000000000000000000..0aad46e8214aeea908ac99bacee28769d3260b98

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0003.png differ

diff --git a/assets/example/sintel-market_2/frame_0004.png b/assets/example/sintel-market_2/frame_0004.png

new file mode 100644

index 0000000000000000000000000000000000000000..dabef3ab83c3acc5304e0edcbbf5236d31cf1e44

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0004.png differ

diff --git a/assets/example/sintel-market_2/frame_0005.png b/assets/example/sintel-market_2/frame_0005.png

new file mode 100644

index 0000000000000000000000000000000000000000..3158ce5d72f2446c0e55543d08b00f5ad91ad81f

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0005.png differ

diff --git a/assets/example/sintel-market_2/frame_0006.png b/assets/example/sintel-market_2/frame_0006.png

new file mode 100644

index 0000000000000000000000000000000000000000..579d47d726bc6f7aad9954b852e725dab8ebd10a

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0006.png differ

diff --git a/assets/example/sintel-market_2/frame_0007.png b/assets/example/sintel-market_2/frame_0007.png

new file mode 100644

index 0000000000000000000000000000000000000000..ff28354433478595e7cdae0c417a5be5ba5a35b8

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0007.png differ

diff --git a/assets/example/sintel-market_2/frame_0008.png b/assets/example/sintel-market_2/frame_0008.png

new file mode 100644

index 0000000000000000000000000000000000000000..d4f45aac79f20b602219cf3cfb64e897cb6195c6

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0008.png differ

diff --git a/assets/example/sintel-market_2/frame_0009.png b/assets/example/sintel-market_2/frame_0009.png

new file mode 100644

index 0000000000000000000000000000000000000000..1e0e2d5667e3a60ffa22fe585bea1b56b8375088

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0009.png differ

diff --git a/assets/example/sintel-market_2/frame_0010.png b/assets/example/sintel-market_2/frame_0010.png

new file mode 100644

index 0000000000000000000000000000000000000000..bbdfb686ca6b19f4b1a0000b243948b78723c5d1

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0010.png differ

diff --git a/assets/example/sintel-market_2/frame_0011.png b/assets/example/sintel-market_2/frame_0011.png

new file mode 100644

index 0000000000000000000000000000000000000000..b4765fcce889a97352ca0f636c07deb24fd90c26

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0011.png differ

diff --git a/assets/example/sintel-market_2/frame_0012.png b/assets/example/sintel-market_2/frame_0012.png

new file mode 100644

index 0000000000000000000000000000000000000000..200c174326503bf86ccb1f5f2b8caa3b26917a71

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0012.png differ

diff --git a/assets/example/sintel-market_2/frame_0013.png b/assets/example/sintel-market_2/frame_0013.png

new file mode 100644

index 0000000000000000000000000000000000000000..9b395e2fa3b0b0dd6a1c29c67167830d1998c397

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0013.png differ

diff --git a/assets/example/sintel-market_2/frame_0014.png b/assets/example/sintel-market_2/frame_0014.png

new file mode 100644

index 0000000000000000000000000000000000000000..f3600170b066fce09ca497b5b962898255963c64

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0014.png differ

diff --git a/assets/example/sintel-market_2/frame_0015.png b/assets/example/sintel-market_2/frame_0015.png

new file mode 100644

index 0000000000000000000000000000000000000000..3bd5ab51cecae5ddd96995f8d7b5a3e4ada98531

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0015.png differ

diff --git a/assets/example/sintel-market_2/frame_0016.png b/assets/example/sintel-market_2/frame_0016.png

new file mode 100644

index 0000000000000000000000000000000000000000..99e56e262148a6c3c6f6808111d591a774171a82

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0016.png differ

diff --git a/assets/example/sintel-market_2/frame_0017.png b/assets/example/sintel-market_2/frame_0017.png

new file mode 100644

index 0000000000000000000000000000000000000000..66e6b756183fbd05e1b9e0569f1e8a9eaf8a8b63

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0017.png differ

diff --git a/assets/example/sintel-market_2/frame_0018.png b/assets/example/sintel-market_2/frame_0018.png

new file mode 100644

index 0000000000000000000000000000000000000000..bbb9598c578a34c51e8f53592769d343961e7592

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0018.png differ

diff --git a/assets/example/sintel-market_2/frame_0019.png b/assets/example/sintel-market_2/frame_0019.png

new file mode 100644

index 0000000000000000000000000000000000000000..367c6ec52d040aefdd2a56b9a9cb1a038b581f02

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0019.png differ

diff --git a/assets/example/sintel-market_2/frame_0020.png b/assets/example/sintel-market_2/frame_0020.png

new file mode 100644

index 0000000000000000000000000000000000000000..f9293f00a1f52bdc2eb5bd9c478137d1777bb00d

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0020.png differ

diff --git a/assets/example/sintel-market_2/frame_0021.png b/assets/example/sintel-market_2/frame_0021.png

new file mode 100644

index 0000000000000000000000000000000000000000..8a470396bd7128b0962db1fe32ddf02ead3e3f9b

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0021.png differ

diff --git a/assets/example/sintel-market_2/frame_0022.png b/assets/example/sintel-market_2/frame_0022.png

new file mode 100644

index 0000000000000000000000000000000000000000..99d7094b53c1189409ee0f0a0fb20d7c1ca31603

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0022.png differ

diff --git a/assets/example/sintel-market_2/frame_0023.png b/assets/example/sintel-market_2/frame_0023.png

new file mode 100644

index 0000000000000000000000000000000000000000..a9d26b3c094a6d9176318d486e3e890449b48559

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0023.png differ

diff --git a/assets/example/sintel-market_2/frame_0024.png b/assets/example/sintel-market_2/frame_0024.png

new file mode 100644

index 0000000000000000000000000000000000000000..6efb0879ac57cd2ef60d938928fd9a8db2ca884b

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0024.png differ

diff --git a/assets/example/sintel-market_2/frame_0025.png b/assets/example/sintel-market_2/frame_0025.png

new file mode 100644

index 0000000000000000000000000000000000000000..29d00e8857e2367d971073c7a715ef71ac0c1057

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0025.png differ

diff --git a/assets/example/sintel-market_2/frame_0026.png b/assets/example/sintel-market_2/frame_0026.png

new file mode 100644

index 0000000000000000000000000000000000000000..78715311e0cc632c609c86c39828dd56c78a79ba

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0026.png differ

diff --git a/assets/example/sintel-market_2/frame_0027.png b/assets/example/sintel-market_2/frame_0027.png

new file mode 100644

index 0000000000000000000000000000000000000000..949c875a5135fb8296d115815cfbe74848b66b71

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0027.png differ

diff --git a/assets/example/sintel-market_2/frame_0028.png b/assets/example/sintel-market_2/frame_0028.png

new file mode 100644

index 0000000000000000000000000000000000000000..f75fa75e984a56c2dfa432b612d973a51e0b1586

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0028.png differ

diff --git a/assets/example/sintel-market_2/frame_0029.png b/assets/example/sintel-market_2/frame_0029.png

new file mode 100644

index 0000000000000000000000000000000000000000..0c4993499a2320336ff4ad7b2154043cc2d71b0f

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0029.png differ

diff --git a/assets/example/sintel-market_2/frame_0030.png b/assets/example/sintel-market_2/frame_0030.png

new file mode 100644

index 0000000000000000000000000000000000000000..e859be76f5110adacba3c33b4f4b9e9f2cbb1072

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0030.png differ

diff --git a/assets/example/sintel-market_2/frame_0031.png b/assets/example/sintel-market_2/frame_0031.png

new file mode 100644

index 0000000000000000000000000000000000000000..ce64437a76e4cb2e24c3b4c0a950a0034da483c0

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0031.png differ

diff --git a/assets/example/sintel-market_2/frame_0032.png b/assets/example/sintel-market_2/frame_0032.png

new file mode 100644

index 0000000000000000000000000000000000000000..f3c7edcbadd550642a8280877354371e8e0da972

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0032.png differ

diff --git a/assets/example/sintel-market_2/frame_0033.png b/assets/example/sintel-market_2/frame_0033.png

new file mode 100644

index 0000000000000000000000000000000000000000..76669846fbaf400e301550b5e679a3c656a52e14

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0033.png differ

diff --git a/assets/example/sintel-market_2/frame_0034.png b/assets/example/sintel-market_2/frame_0034.png

new file mode 100644

index 0000000000000000000000000000000000000000..7a2de6b882e6885a2e5fb3e1f22a2e331939daf5

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0034.png differ

diff --git a/assets/example/sintel-market_2/frame_0035.png b/assets/example/sintel-market_2/frame_0035.png

new file mode 100644

index 0000000000000000000000000000000000000000..247be1ae88d60ae251a32c914495e57bdb29040f

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0035.png differ

diff --git a/assets/example/sintel-market_2/frame_0036.png b/assets/example/sintel-market_2/frame_0036.png

new file mode 100644

index 0000000000000000000000000000000000000000..357ea68119652e216ed8575e0b8c63a4d82ad9bc

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0036.png differ

diff --git a/assets/example/sintel-market_2/frame_0037.png b/assets/example/sintel-market_2/frame_0037.png

new file mode 100644

index 0000000000000000000000000000000000000000..f6ce642a1fc5df57080a4ebd591cd2097694b74e

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0037.png differ

diff --git a/assets/example/sintel-market_2/frame_0038.png b/assets/example/sintel-market_2/frame_0038.png

new file mode 100644

index 0000000000000000000000000000000000000000..0e3aca24bcc6b512aa8740e80e7f8a22ed3b16c0

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0038.png differ

diff --git a/assets/example/sintel-market_2/frame_0039.png b/assets/example/sintel-market_2/frame_0039.png

new file mode 100644

index 0000000000000000000000000000000000000000..727e8202b105aec0c540f9b74d14394efc4b8ec0

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0039.png differ

diff --git a/assets/example/sintel-market_2/frame_0040.png b/assets/example/sintel-market_2/frame_0040.png

new file mode 100644

index 0000000000000000000000000000000000000000..c221145d9a4859441872d6cd23eb0cc844178456

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0040.png differ

diff --git a/assets/example/sintel-market_2/frame_0041.png b/assets/example/sintel-market_2/frame_0041.png

new file mode 100644

index 0000000000000000000000000000000000000000..3554e0fae2b5c41dd1455e2fe50565725519bcb8

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0041.png differ

diff --git a/assets/example/sintel-market_2/frame_0042.png b/assets/example/sintel-market_2/frame_0042.png

new file mode 100644

index 0000000000000000000000000000000000000000..6f5e297ec9c84247818b0e1d94c075e2f5b0da76

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0042.png differ

diff --git a/assets/example/sintel-market_2/frame_0043.png b/assets/example/sintel-market_2/frame_0043.png

new file mode 100644

index 0000000000000000000000000000000000000000..b69dee44da56b869ebc3465cf0f8a75b599475ac

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0043.png differ

diff --git a/assets/example/sintel-market_2/frame_0044.png b/assets/example/sintel-market_2/frame_0044.png

new file mode 100644

index 0000000000000000000000000000000000000000..3721b598d49c8c7ed7b424ea94e3623bcbe04949

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0044.png differ

diff --git a/assets/example/sintel-market_2/frame_0045.png b/assets/example/sintel-market_2/frame_0045.png

new file mode 100644

index 0000000000000000000000000000000000000000..344f23104fcdf9318d380f8d9e23c0d83108c3e9

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0045.png differ

diff --git a/assets/example/sintel-market_2/frame_0046.png b/assets/example/sintel-market_2/frame_0046.png

new file mode 100644

index 0000000000000000000000000000000000000000..7b4265e2024d026d0f4ad1b006779d20b7759771

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0046.png differ

diff --git a/assets/example/sintel-market_2/frame_0047.png b/assets/example/sintel-market_2/frame_0047.png

new file mode 100644

index 0000000000000000000000000000000000000000..f1562294a9ca4c1ce2c04121422e0e1649b7b9ff

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0047.png differ

diff --git a/assets/example/sintel-market_2/frame_0048.png b/assets/example/sintel-market_2/frame_0048.png

new file mode 100644

index 0000000000000000000000000000000000000000..3d68f4f1076fb193ba6d7b1a5b7c5c4dbdb2a420

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0048.png differ

diff --git a/assets/example/sintel-market_2/frame_0049.png b/assets/example/sintel-market_2/frame_0049.png

new file mode 100644

index 0000000000000000000000000000000000000000..07bb3a2ce25e25e7644f5425ad6ec852b6f18dfa

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0049.png differ

diff --git a/assets/example/sintel-market_2/frame_0050.png b/assets/example/sintel-market_2/frame_0050.png

new file mode 100644

index 0000000000000000000000000000000000000000..a01c6a6f161f0eaf14a758c93ff2ede355a602ef

Binary files /dev/null and b/assets/example/sintel-market_2/frame_0050.png differ

diff --git a/datasets_preprocess/pointodyssey_rearrange.py b/datasets_preprocess/pointodyssey_rearrange.py

new file mode 100644

index 0000000000000000000000000000000000000000..5c309db58122c3554a3a210800bfe8a48bf3ba80

--- /dev/null

+++ b/datasets_preprocess/pointodyssey_rearrange.py

@@ -0,0 +1,64 @@

+import sys

+import torch

+sys.path.append('.')

+import os

+import numpy as np

+import glob

+from tqdm import tqdm

+

+dataset_location = '../data/point_odyssey'

+# print(dataset_location)

+for dset in ["train", "test", "sample"]:

+ sequences = []

+ subdir = os.path.join(dataset_location, dset)

+ for seq in glob.glob(os.path.join(subdir, "*/")):

+ sequences.append(seq)

+ # sequences = sorted(sequences)

+ sequences = sorted(sequences)

+

+ print('found %d unique videos in %s (dset=%s)' % (len(sequences), dataset_location, dset))

+

+ ## load trajectories

+ print('loading trajectories...')

+

+ for seq in sequences:

+ # print('seq', seq)

+ # if os.path.exists(os.path.join(seq, 'trajs_2d')):

+ # print('skipping', seq)

+ # continue

+ info_path = os.path.join(seq, 'info.npz')

+ info = np.load(info_path, allow_pickle=True)

+ trajs_3d_shape = info['trajs_3d'].astype(np.float32)

+

+ if len(trajs_3d_shape):

+ print('processing', seq)

+ rgb_path = os.path.join(seq, 'rgbs')

+ info_path = os.path.join(seq, 'info.npz')

+ annotations_path = os.path.join(seq, 'anno.npz')

+

+ trajs_3d_path = os.path.join(seq, 'trajs_3d')

+ trajs_2d_path = os.path.join(seq, 'trajs_2d')

+ os.makedirs(trajs_3d_path, exist_ok=True)

+ os.makedirs(trajs_2d_path, exist_ok=True)

+

+

+ info = np.load(info_path, allow_pickle=True)

+ trajs_3d_shape = info['trajs_3d']

+ anno = np.load(annotations_path, allow_pickle=True)

+ keys = {'trajs_2d': 'traj_2d', 'trajs_3d': 'traj_3d', 'valids': 'valid', 'visibs': 'visib', 'intrinsics': 'intrinsic', 'extrinsics': 'extrinsic'}

+ if len(trajs_3d_shape) == 0:

+ print(anno['trajs_3d'])

+ print('skipping', seq)

+ continue

+ tensors = {key: torch.tensor(anno[key]).cuda() for key in keys}

+

+ for t in tqdm(range(trajs_3d_shape[0])):

+ for key, item_key in keys.items():

+ path = os.path.join(seq, key)

+ os.makedirs(path, exist_ok=True)

+ filename = os.path.join(path, f'{item_key}_{t:05d}.npy')

+ np.save(filename, tensors[key][t].cpu().numpy())

+

+

+

+

diff --git a/datasets_preprocess/sintel_get_dynamics.py b/datasets_preprocess/sintel_get_dynamics.py

new file mode 100644

index 0000000000000000000000000000000000000000..e116380fafcbf1dbd9d633412045b7b84ca34b10

--- /dev/null

+++ b/datasets_preprocess/sintel_get_dynamics.py

@@ -0,0 +1,170 @@

+import numpy as np

+import cv2

+import os

+from tqdm import tqdm

+import argparse

+import torch

+

+TAG_FLOAT = 202021.25

+def flow_read(filename):

+ """ Read optical flow from file, return (U,V) tuple.

+

+ Original code by Deqing Sun, adapted from Daniel Scharstein.

+ """

+ f = open(filename,'rb')

+ check = np.fromfile(f,dtype=np.float32,count=1)[0]

+ assert check == TAG_FLOAT, 'flow_read:: Wrong tag in flow file (should be: {0}, is: {1}). Big-endian machine?'.format(TAG_FLOAT,check)

+ width = np.fromfile(f,dtype=np.int32,count=1)[0]

+ height = np.fromfile(f,dtype=np.int32,count=1)[0]

+ size = width*height

+ assert width > 0 and height > 0 and size > 1 and size < 100000000, 'flow_read:: Invalid input size (width = {0}, height = {1}).'.format(width,height)

+ tmp = np.fromfile(f,dtype=np.float32,count=-1).reshape((height,width*2))

+ u = tmp[:,np.arange(width)*2]

+ v = tmp[:,np.arange(width)*2 + 1]

+ return u,v

+

+def cam_read(filename):

+ """ Read camera data, return (M,N) tuple.

+

+ M is the intrinsic matrix, N is the extrinsic matrix, so that

+

+ x = M*N*X,

+ where x is a point in homogeneous image pixel coordinates, and X is a

+ point in homogeneous world coordinates.

+ """

+ f = open(filename,'rb')

+ check = np.fromfile(f,dtype=np.float32,count=1)[0]

+ assert check == TAG_FLOAT, 'cam_read:: Wrong tag in cam file (should be: {0}, is: {1}). Big-endian machine?'.format(TAG_FLOAT,check)

+ M = np.fromfile(f,dtype='float64',count=9).reshape((3,3))

+ N = np.fromfile(f,dtype='float64',count=12).reshape((3,4))

+ return M,N

+

+def depth_read(filename):

+ """ Read depth data from file, return as numpy array. """

+ f = open(filename,'rb')

+ check = np.fromfile(f,dtype=np.float32,count=1)[0]

+ assert check == TAG_FLOAT, 'depth_read:: Wrong tag in depth file (should be: {0}, is: {1}). Big-endian machine?'.format(TAG_FLOAT,check)

+ width = np.fromfile(f,dtype=np.int32,count=1)[0]

+ height = np.fromfile(f,dtype=np.int32,count=1)[0]

+ size = width*height

+ assert width > 0 and height > 0 and size > 1 and size < 100000000, 'depth_read:: Invalid input size (width = {0}, height = {1}).'.format(width,height)

+ depth = np.fromfile(f,dtype=np.float32,count=-1).reshape((height,width))

+ return depth

+

+def RT_to_extrinsic_matrix(R, T):

+ extrinsic_matrix = np.concatenate([R, T], axis=-1)

+ extrinsic_matrix = np.concatenate([extrinsic_matrix, np.array([[0, 0, 0, 1]])], axis=0)

+ return np.linalg.inv(extrinsic_matrix)

+

+def depth_to_3d(depth_map, intrinsic_matrix):

+ height, width = depth_map.shape

+ i, j = np.meshgrid(np.arange(width), np.arange(height))

+

+ # Convert pixel coordinates and depth values to 3D points

+ x = (i - intrinsic_matrix[0, 2]) * depth_map / intrinsic_matrix[0, 0]

+ y = (j - intrinsic_matrix[1, 2]) * depth_map / intrinsic_matrix[1, 1]

+ z = depth_map

+

+ points_3d = np.stack([x, y, z], axis=-1)

+ return points_3d

+

+def project_3d_to_2d(points_3d, intrinsic_matrix):

+ # Project camera-frame 3D points to homogeneous image coordinates

+ projected_2d_hom = intrinsic_matrix @ points_3d.T

+ # Convert from homogeneous coordinates to 2D image coordinates

+ projected_2d = projected_2d_hom[:2, :] / projected_2d_hom[2, :]

+ return projected_2d.T

+

+def compute_optical_flow(depth1, depth2, pose1, pose2, intrinsic_matrix1, intrinsic_matrix2):

+ # Input: All inputs as numpy arrays; convert torch tensors to numpy arrays if needed

+ if isinstance(depth1, torch.Tensor):

+ depth1 = depth1.cpu().numpy()

+ if isinstance(depth2, torch.Tensor):

+ depth2 = depth2.cpu().numpy()

+ if isinstance(pose1, torch.Tensor):

+ pose1 = pose1.cpu().numpy()

+ if isinstance(pose2, torch.Tensor):

+ pose2 = pose2.cpu().numpy()

+ if isinstance(intrinsic_matrix1, torch.Tensor):

+ intrinsic_matrix1 = intrinsic_matrix1.cpu().numpy()

+ if isinstance(intrinsic_matrix2, torch.Tensor):

+ intrinsic_matrix2 = intrinsic_matrix2.cpu().numpy()

+

+ points_3d_frame1 = depth_to_3d(depth1, intrinsic_matrix1).reshape(-1, 3)

+ points_3d_frame1_hom = np.concatenate([points_3d_frame1, np.ones((points_3d_frame1.shape[0], 1))], axis=1).T

+

+ # Calculate the transformation matrix from frame 1 to frame 2

+ transformation_matrix = (pose2) @ np.linalg.inv(pose1)

+ points_3d_frame2_hom = transformation_matrix @ points_3d_frame1_hom

+ points_3d_frame2 = (points_3d_frame2_hom[:3, :]).T

+

+ points_2d_frame1 = project_3d_to_2d(points_3d_frame1, intrinsic_matrix1)

+ points_2d_frame2 = project_3d_to_2d(points_3d_frame2, intrinsic_matrix2)

+

+ # Compute optical flow vectors

+ optical_flow = points_2d_frame2 - points_2d_frame1

+ return optical_flow

+

+def get_dynamic_label(base_dir, seq, continuous=False, threshold=13.75, save_dir='dynamic_label'):

+ depth_dir = os.path.join(base_dir, 'depth', seq)

+ cam_dir = os.path.join(base_dir, 'camdata_left', seq)

+ flow_dir = os.path.join(base_dir, 'flow', seq)

+ dynamic_label_dir = os.path.join(base_dir, save_dir, seq)

+ os.makedirs(dynamic_label_dir, exist_ok=True)

+

+ frames = sorted([f for f in os.listdir(depth_dir) if f.endswith('.dpt')])

+ for i, frame1 in enumerate(frames):

+ if i == len(frames) - 1:

+ continue

+ frame2 = frames[i + 1]

+

+ frame1_id = frame1.split('.')[0]

+ frame2_id = frame2.split('.')[0]

+

+ # Load depth maps

+ depth_map_frame1 = depth_read(os.path.join(depth_dir, frame1))

+ depth_map_frame2 = depth_read(os.path.join(depth_dir, frame2))

+

+ # Load camera intrinsics and poses

+ intrinsic_matrix1, pose_frame1 = cam_read(os.path.join(cam_dir, f'{frame1_id}.cam'))

+ intrinsic_matrix2, pose_frame2 = cam_read(os.path.join(cam_dir, f'{frame2_id}.cam'))

+

+ # Pad pose with [0,0,0,1]

+ pose_frame1 = np.concatenate([pose_frame1, np.array([[0, 0, 0, 1]])], axis=0)

+ pose_frame2 = np.concatenate([pose_frame2, np.array([[0, 0, 0, 1]])], axis=0)

+

+ # Compute optical flow

+ optical_flow = compute_optical_flow(depth_map_frame1, depth_map_frame2, pose_frame1, pose_frame2, intrinsic_matrix1, intrinsic_matrix2)

+

+ # Reshape the optical flow to the image dimensions

+ height, width = depth_map_frame1.shape

+ optical_flow_image = optical_flow.reshape(height, width, 2)

+

+ # Load ground truth optical flow

+ u, v = flow_read(os.path.join(flow_dir, f'{frame1_id}.flo'))

+ gt_flow = np.stack([u, v], axis=-1)

+

+ # Compute the error map

+ error_map = np.linalg.norm(gt_flow - optical_flow_image, axis=-1)

+ if not continuous:

+ binary_error_map = error_map > threshold

+

+ # Save the binary error map

+ cv2.imwrite(os.path.join(dynamic_label_dir, f'{frame1_id}.png'), binary_error_map.astype(np.uint8) * 255)

+ else:

+ # Normalize the error map

+ error_map = error_map / max(error_map.max(), 1e-8)  # guard against an all-zero error map

+ cv2.imwrite(os.path.join(dynamic_label_dir, f'{frame1_id}.png'), (error_map * 255).astype(np.uint8))

+

+if __name__ == '__main__':

+ # Process all sequences

+ sequences = sorted(os.listdir('data/sintel/training/depth'))

+ base_dir = 'data/sintel/training'

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--continuous', action='store_true')

+ parser.add_argument('--threshold', type=float, default=13.75)

+ parser.add_argument('--save_dir', type=str, default='dynamic_label')

+ args = parser.parse_args()

+ for seq in tqdm(sequences):

+ get_dynamic_label(base_dir, seq, continuous=args.continuous, threshold=args.threshold, save_dir=args.save_dir)

+ print(f'Finished processing {seq}')
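
Note on `sintel_get_dynamics.py`: the labelling idea in this script can be illustrated on synthetic data. The following is a minimal sketch under stated assumptions, not the script itself. It computes the flow that camera motion alone would induce on a static scene, compares it with the observed flow, and marks pixels whose residual exceeds the script's default threshold of 13.75 as dynamic. The helper names (`backproject`, `project`, `rigid_flow`, `dynamic_mask`) and the toy intrinsics, depth, and poses are hypothetical and chosen only for illustration.

```python
import numpy as np

def backproject(depth, K):
    """Lift every pixel to a 3D point in the camera frame (pinhole model)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x = (u - K[0, 2]) * depth / K[0, 0]
    y = (v - K[1, 2]) * depth / K[1, 1]
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)

def project(points, K):
    """Project camera-frame 3D points to pixel coordinates."""
    uvw = points @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def rigid_flow(depth1, K, w2c_1, w2c_2):
    """Flow that a fully static scene would show under the camera motion alone."""
    h, w = depth1.shape
    pts1 = backproject(depth1, K)
    pts1_h = np.concatenate([pts1, np.ones((len(pts1), 1))], axis=1)
    cam1_to_cam2 = w2c_2 @ np.linalg.inv(w2c_1)  # both poses are world-to-camera
    pts2 = (pts1_h @ cam1_to_cam2.T)[:, :3]
    return (project(pts2, K) - project(pts1, K)).reshape(h, w, 2)

def dynamic_mask(observed_flow, depth1, K, w2c_1, w2c_2, threshold=13.75):
    """Mark pixels whose observed flow deviates from the rigid flow by more than threshold."""
    residual = np.linalg.norm(observed_flow - rigid_flow(depth1, K, w2c_1, w2c_2), axis=-1)
    return residual > threshold

# Toy scene (hypothetical values): a flat wall 10 units away, camera shifted 0.5 units in +x.
K = np.array([[100.0, 0.0, 8.0], [0.0, 100.0, 6.0], [0.0, 0.0, 1.0]])
depth = np.full((12, 16), 10.0)
w2c_1 = np.eye(4)
w2c_2 = np.eye(4)
w2c_2[0, 3] = -0.5  # world-to-camera translation for a camera moved +0.5 in x

observed = rigid_flow(depth, K, w2c_1, w2c_2)  # static scene: flow equals the rigid flow
observed[4:8, 6:10] += np.array([20.0, 0.0])   # a 4x4 patch that also moves on its own
mask = dynamic_mask(observed, depth, K, w2c_1, w2c_2)
print(mask.sum(), "dynamic pixels")
```

In this toy setup only the 4x4 patch with extra motion exceeds the threshold. On real Sintel data the residual also absorbs depth and flow noise, which is presumably why the threshold is exposed as a command-line argument.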

diff --git a/dynamic_predictor/DAS3R_b32_g4.sh b/dynamic_predictor/DAS3R_b32_g4.sh

new file mode 100644

index 0000000000000000000000000000000000000000..58f65de331aea15f65d44e9de288d99cec3753eb

--- /dev/null

+++ b/dynamic_predictor/DAS3R_b32_g4.sh

@@ -0,0 +1,19 @@

+# Set environment variables

+export CUDA_VISIBLE_DEVICES="0,1,2,3"

+

+# Launch training with torchrun. --nproc_per_node=1 starts a single process; set it to the number of visible GPUs for multi-GPU (distributed) training.

+torchrun --nproc_per_node=1 --master_port=27777 launch.py --mode=train \

+ --model="AsymmetricCroCo3DStereo(pos_embed='RoPE100', patch_embed_cls='ManyAR_PatchEmbed', \

+ img_size=(512, 512), head_type='dpt', output_mode='pts3d', depth_mode=('exp', -inf, inf), conf_mode=('exp', 1, inf), \

+ enc_embed_dim=1024, enc_depth=24, enc_num_heads=16, dec_embed_dim=768, dec_depth=12, dec_num_heads=12, freeze='encoder_and_3d_predictor')" \

+ --train_dataset="10_000 @ PointOdysseyDUSt3R(dset='train', z_far=80, dataset_location='../data/point_odyssey', S=2, aug_crop=16, resolution=[(512, 288), (512, 384), (512, 336)], transform=ColorJitter, strides=[1,2,3,4,5,6,7,8,9], dist_type='linear_1_2', aug_focal=0.9)" \

+ --test_dataset="1 * PointOdysseyDUSt3R(dset='test', z_far=80, dataset_location='../data/point_odyssey', S=2, strides=[1,2,3,4,5,6,7,8,9], resolution=[(512, 288)], seed=777) + 1 * SintelDUSt3R(dset='final', z_far=80, S=2, strides=[1,2,3,4,5,6,7,8,9], resolution=[(512, 224)], seed=777)" \

+ --train_criterion="ConfLoss(Regr3D_MMask(L21, norm_mode='avg_dis'), alpha=0.2)" \

+ --test_criterion="Regr3D_ScaleShiftInv_MMask(L21, gt_scale=True)" \

+ --pretrained="Junyi42/MonST3R_PO-TA-S-W_ViTLarge_BaseDecoder_512_dpt" \

+ --lr=0.00005 --min_lr=1e-06 --warmup_epochs=3 --epochs=50 --batch_size=8 --accum_iter=1 \

+ --test_batch_size=8 \

+ --save_freq=3 --keep_freq=5 --eval_freq=50 \

+ --output_dir=results/MSeg_from_monst3r_b32_g4 \

+ --num_workers=16 --wandb

+

diff --git a/dynamic_predictor/croco/.gitignore b/dynamic_predictor/croco/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..b6e47617de110dea7ca47e087ff1347cc2646eda

--- /dev/null

+++ b/dynamic_predictor/croco/.gitignore

@@ -0,0 +1,129 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+pip-wheel-metadata/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+.python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

diff --git a/dynamic_predictor/croco/LICENSE b/dynamic_predictor/croco/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..d9b84b1a65f9db6d8920a9048d162f52ba3ea56d

--- /dev/null

+++ b/dynamic_predictor/croco/LICENSE

@@ -0,0 +1,52 @@

+CroCo, Copyright (c) 2022-present Naver Corporation, is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 license.

+

+A summary of the CC BY-NC-SA 4.0 license is located here:

+ https://creativecommons.org/licenses/by-nc-sa/4.0/

+

+The CC BY-NC-SA 4.0 license is located here:

+ https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode

+

+

+SEE NOTICE BELOW WITH RESPECT TO THE FILE: models/pos_embed.py, models/blocks.py

+

+***************************

+

+NOTICE WITH RESPECT TO THE FILE: models/pos_embed.py

+

+This software is being redistributed in a modified form. The original form is available here:

+

+https://github.com/facebookresearch/mae/blob/main/util/pos_embed.py

+

+This software in this file incorporates parts of the following software available here:

+

+Transformer: https://github.com/tensorflow/models/blob/master/official/legacy/transformer/model_utils.py

+available under the following license: https://github.com/tensorflow/models/blob/master/LICENSE

+

+MoCo v3: https://github.com/facebookresearch/moco-v3

+available under the following license: https://github.com/facebookresearch/moco-v3/blob/main/LICENSE

+

+DeiT: https://github.com/facebookresearch/deit

+available under the following license: https://github.com/facebookresearch/deit/blob/main/LICENSE

+

+

+ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

+

+https://github.com/facebookresearch/mae/blob/main/LICENSE

+

+Attribution-NonCommercial 4.0 International

+

+***************************

+

+NOTICE WITH RESPECT TO THE FILE: models/blocks.py

+

+This software is being redistributed in a modified form. The original form is available here:

+

+https://github.com/rwightman/pytorch-image-models

+

+ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

+

+https://github.com/rwightman/pytorch-image-models/blob/master/LICENSE

+

+Apache License

+Version 2.0, January 2004

+http://www.apache.org/licenses/

\ No newline at end of file

diff --git a/dynamic_predictor/croco/NOTICE b/dynamic_predictor/croco/NOTICE

new file mode 100644

index 0000000000000000000000000000000000000000..d51bb365036c12d428d6e3a4fd00885756d5261c

--- /dev/null

+++ b/dynamic_predictor/croco/NOTICE

@@ -0,0 +1,21 @@

+CroCo

+Copyright 2022-present NAVER Corp.

+

+This project contains subcomponents with separate copyright notices and license terms.

+Your use of the source code for these subcomponents is subject to the terms and conditions of the following licenses.

+

+====

+

+facebookresearch/mae

+https://github.com/facebookresearch/mae

+

+Attribution-NonCommercial 4.0 International

+

+====

+

+rwightman/pytorch-image-models

+https://github.com/rwightman/pytorch-image-models

+

+Apache License

+Version 2.0, January 2004

+http://www.apache.org/licenses/

\ No newline at end of file

diff --git a/dynamic_predictor/croco/README.MD b/dynamic_predictor/croco/README.MD

new file mode 100644

index 0000000000000000000000000000000000000000..38e33b001a60bd16749317fb297acd60f28a6f1b

--- /dev/null

+++ b/dynamic_predictor/croco/README.MD

@@ -0,0 +1,124 @@

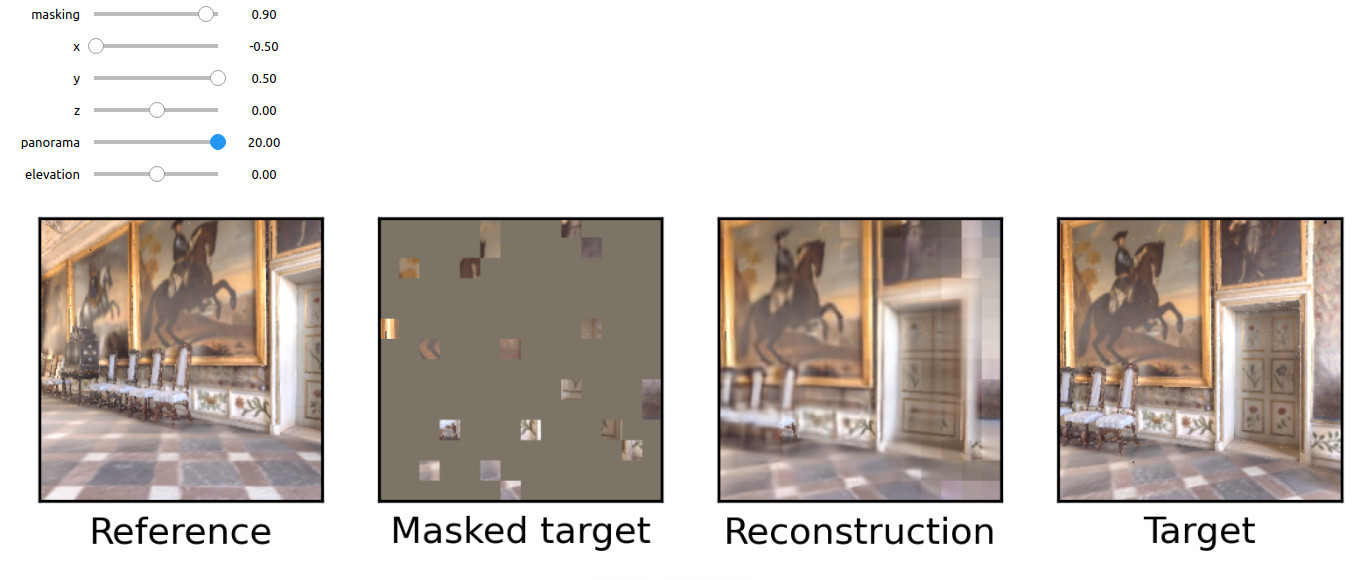

+# CroCo + CroCo v2 / CroCo-Stereo / CroCo-Flow

+

+[[`CroCo arXiv`](https://arxiv.org/abs/2210.10716)] [[`CroCo v2 arXiv`](https://arxiv.org/abs/2211.10408)] [[`project page and demo`](https://croco.europe.naverlabs.com/)]

+

+This repository contains the code for our CroCo model presented in our NeurIPS'22 paper [CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion](https://openreview.net/pdf?id=wZEfHUM5ri) and its follow-up extension published at ICCV'23 [Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow](https://openaccess.thecvf.com/content/ICCV2023/html/Weinzaepfel_CroCo_v2_Improved_Cross-view_Completion_Pre-training_for_Stereo_Matching_and_ICCV_2023_paper.html), referred to as CroCo v2:

+

+

+

+```bibtex

+@inproceedings{croco,

+ title={{CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion}},

+ author={Weinzaepfel, Philippe and Leroy, Vincent and Lucas, Thomas and Br{\'e}gier, Romain and Cabon, Yohann and Arora, Vaibhav and Antsfeld, Leonid and Chidlovskii, Boris and Csurka, Gabriela and Revaud, J{\'e}r{\^o}me},

+ booktitle={{NeurIPS}},

+ year={2022}

+}

+

+@inproceedings{croco_v2,

+ title={{CroCo v2: Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow}},

+ author={Weinzaepfel, Philippe and Lucas, Thomas and Leroy, Vincent and Cabon, Yohann and Arora, Vaibhav and Br{\'e}gier, Romain and Csurka, Gabriela and Antsfeld, Leonid and Chidlovskii, Boris and Revaud, J{\'e}r{\^o}me},

+ booktitle={ICCV},

+ year={2023}

+}

+```

+

+## License

+

+The code is distributed under the CC BY-NC-SA 4.0 License. See [LICENSE](LICENSE) for more information.

+Some components are based on code from [MAE](https://github.com/facebookresearch/mae) released under the CC BY-NC 4.0 License and [timm](https://github.com/rwightman/pytorch-image-models) released under the Apache 2.0 License.

+Some components for stereo matching and optical flow are based on code from [unimatch](https://github.com/autonomousvision/unimatch) released under the MIT license.

+

+## Preparation

+

+1. Install dependencies on a machine with an NVIDIA GPU using e.g. conda. Note that `habitat-sim` is required only for the interactive demo and the synthetic pre-training data generation. If you don't plan to use it, you can skip the line that installs it and use a more recent Python version.

+

+```bash

+conda create -n croco python=3.7 cmake=3.14.0

+conda activate croco

+conda install habitat-sim headless -c conda-forge -c aihabitat

+conda install pytorch torchvision -c pytorch

+conda install notebook ipykernel matplotlib

+conda install ipywidgets widgetsnbextension

+conda install scikit-learn tqdm quaternion opencv # only for pretraining / habitat data generation

+

+```

+

+2. Compile cuda kernels for RoPE

+

+CroCo v2 relies on RoPE positional embeddings for which you need to compile some cuda kernels.

+```bash

+cd models/curope/

+python setup.py build_ext --inplace

+cd ../../

+```

+

+Compilation can take a while because the kernels are built for all CUDA architectures; feel free to edit L9 of `models/curope/setup.py` to compile for specific architectures only.

+You might also need to set the `CUDA_HOME` environment variable if you use a custom CUDA installation.

+

+If you cannot compile the kernels, a slower pure-PyTorch implementation is also provided and will be loaded automatically.

+

+3. Download pre-trained model

+

+We provide several pre-trained models:

+

+| modelname | pre-training data | pos. embed. | Encoder | Decoder |

+|------------------------------------------------------------------------------------------------------------------------------------|-------------------|-------------|---------|---------|

+| [`CroCo.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth) | Habitat | cosine | ViT-B | Small |

+| [`CroCo_V2_ViTBase_SmallDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_SmallDecoder.pth) | Habitat + real | RoPE | ViT-B | Small |

+| [`CroCo_V2_ViTBase_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_BaseDecoder.pth) | Habitat + real | RoPE | ViT-B | Base |

+| [`CroCo_V2_ViTLarge_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTLarge_BaseDecoder.pth) | Habitat + real | RoPE | ViT-L | Base |

+

+To download a specific model, e.g., the first one (`CroCo.pth`), run:

+```bash

+mkdir -p pretrained_models/

+wget https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth -P pretrained_models/

+```

+

+## Reconstruction example

+

+After downloading the `CroCo_V2_ViTLarge_BaseDecoder` pretrained model (or updating the corresponding line in `demo.py`), simply run:

+```bash

+python demo.py

+```

+

+## Interactive demonstration of cross-view completion reconstruction on the Habitat simulator

+

+First download the test scene from Habitat:

+```bash

+python -m habitat_sim.utils.datasets_download --uids habitat_test_scenes --data-path habitat-sim-data/

+```

+

+Then, run the Notebook demo `interactive_demo.ipynb`.

+

+In this demo, you should be able to sample a random reference viewpoint from a [Habitat](https://github.com/facebookresearch/habitat-sim) test scene. Use the sliders to change the viewpoint and select a masked target view to reconstruct using CroCo.

+

+

+## Pre-training

+

+### CroCo

+

+To pre-train CroCo, please first generate the pre-training data from the Habitat simulator, following the instructions in [datasets/habitat_sim/README.MD](datasets/habitat_sim/README.MD) and then run the following command:

+```

+torchrun --nproc_per_node=4 pretrain.py --output_dir ./output/pretraining/

+```

+

+Our CroCo pre-training was launched on a single server with 4 GPUs.

+It should take around 10 days with A100 or 15 days with V100 to do the 400 pre-training epochs, but decent performance is obtained earlier in training.

+Note that, while the code contains the same learning-rate scaling rule as MAE when changing the effective batch size, we did not verify whether it is valid in our case.

+The first run can take a few minutes to start, to parse all available pre-training pairs.

+

+### CroCo v2

+

+For CroCo v2 pre-training, in addition to the generation of the pre-training data from the Habitat simulator above, please pre-extract the crops from the real datasets following the instructions in [datasets/crops/README.MD](datasets/crops/README.MD).

+Then, run the following command for the largest model (ViT-L encoder, Base decoder):

+```

+torchrun --nproc_per_node=8 pretrain.py --model "CroCoNet(enc_embed_dim=1024, enc_depth=24, enc_num_heads=16, dec_embed_dim=768, dec_num_heads=12, dec_depth=12, pos_embed='RoPE100')" --dataset "habitat_release+ARKitScenes+MegaDepth+3DStreetView+IndoorVL" --warmup_epochs 12 --max_epoch 125 --epochs 250 --amp 0 --keep_freq 5 --output_dir ./output/pretraining_crocov2/

+```

+

+Our CroCo v2 pre-training was launched on a single server with 8 GPUs for the largest model, and on a single server with 4 GPUs for the smaller ones, keeping a batch size of 64 per GPU in all cases.

+The largest model should take around 12 days on A100.

+Note that, while the code contains the same learning-rate scaling rule as MAE when changing the effective batch size, we did not verify whether it is valid in our case.
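
As a rough illustration of the scaling rule mentioned above: MAE's training script scales the learning rate linearly with the effective batch size, `lr = base_lr * effective_batch_size / 256`, where the effective batch size is the per-GPU batch size times the number of GPUs times the gradient-accumulation steps. The sketch below applies that rule to the two setups described here (64 per GPU on 4 or 8 GPUs). The function name `scaled_learning_rate` and the base learning rate value are assumptions made for the example, not values taken from this README.

```python
def scaled_learning_rate(base_lr, batch_size_per_gpu, num_gpus, accum_iter=1):
    """Linear scaling rule: lr = base_lr * effective_batch_size / 256."""
    effective_batch_size = batch_size_per_gpu * num_gpus * accum_iter
    return base_lr * effective_batch_size / 256, effective_batch_size

# Illustrative base learning rate (an assumption, not a value stated in this README).
BASE_LR = 1.5e-4

lr_4gpu, eff_4gpu = scaled_learning_rate(BASE_LR, batch_size_per_gpu=64, num_gpus=4)
lr_8gpu, eff_8gpu = scaled_learning_rate(BASE_LR, batch_size_per_gpu=64, num_gpus=8)
print(f"4 GPUs: effective batch size {eff_4gpu}, lr {lr_4gpu:.2e}")
print(f"8 GPUs: effective batch size {eff_8gpu}, lr {lr_8gpu:.2e}")
```

Under this rule, doubling the number of GPUs at a fixed per-GPU batch size doubles the learning rate, which is the behaviour the note above flags as not verified for CroCo.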

+

+## Stereo matching and Optical flow downstream tasks

+

+For CroCo-Stereo and CroCo-Flow, please refer to [stereoflow/README.MD](stereoflow/README.MD).

diff --git a/dynamic_predictor/croco/assets/Chateau1.png b/dynamic_predictor/croco/assets/Chateau1.png

new file mode 100644

index 0000000000000000000000000000000000000000..d282fc6a51c00b8dd8267d5d507220ae253c2d65

Binary files /dev/null and b/dynamic_predictor/croco/assets/Chateau1.png differ

diff --git a/dynamic_predictor/croco/assets/Chateau2.png b/dynamic_predictor/croco/assets/Chateau2.png

new file mode 100644

index 0000000000000000000000000000000000000000..722b2fc553ec089346722efb9445526ddfa8e7bd

Binary files /dev/null and b/dynamic_predictor/croco/assets/Chateau2.png differ

diff --git a/dynamic_predictor/croco/assets/arch.jpg b/dynamic_predictor/croco/assets/arch.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..3f5b032729ddc58c06d890a0ebda1749276070c4

Binary files /dev/null and b/dynamic_predictor/croco/assets/arch.jpg differ

diff --git a/dynamic_predictor/croco/croco-stereo-flow-demo.ipynb b/dynamic_predictor/croco/croco-stereo-flow-demo.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..2b00a7607ab5f82d1857041969bfec977e56b3e0

--- /dev/null

+++ b/dynamic_predictor/croco/croco-stereo-flow-demo.ipynb

@@ -0,0 +1,191 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "id": "9bca0f41",

+ "metadata": {},

+ "source": [

+ "# Simple inference example with CroCo-Stereo or CroCo-Flow"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "80653ef7",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Copyright (C) 2022-present Naver Corporation. All rights reserved.\n",

+ "# Licensed under CC BY-NC-SA 4.0 (non-commercial use only)."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "4f033862",

+ "metadata": {},

+ "source": [

+ "First download the model(s) of your choice by running\n",

+ "```\n",

+ "bash stereoflow/download_model.sh crocostereo.pth\n",

+ "bash stereoflow/download_model.sh crocoflow.pth\n",

+ "```"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "1fb2e392",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import numpy as np\n",

+ "import torch\n",

+ "from PIL import Image\n",

+ "use_gpu = torch.cuda.is_available() and torch.cuda.device_count()>0\n",

+ "device = torch.device('cuda:0' if use_gpu else 'cpu')\n",

+ "import matplotlib.pylab as plt"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e0e25d77",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from stereoflow.test import _load_model_and_criterion\n",

+ "from stereoflow.engine import tiled_pred\n",

+ "from stereoflow.datasets_stereo import img_to_tensor, vis_disparity\n",

+ "from stereoflow.datasets_flow import flowToColor\n",

+ "tile_overlap=0.7 # recommended value, higher value can be slightly better but slower"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "86a921f5",

+ "metadata": {},

+ "source": [

+ "### CroCo-Stereo example"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "64e483cb",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image1 = np.asarray(Image.open(''))\n",

+ "image2 = np.asarray(Image.open(''))"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "f0d04303",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocostereo.pth', None, device)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "47dc14b5",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",

+ "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",

+ "with torch.inference_mode():\n",

+ " pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",

+ "pred = pred.squeeze(0).squeeze(0).cpu().numpy()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "583b9f16",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "plt.imshow(vis_disparity(pred))\n",

+ "plt.axis('off')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d2df5d70",

+ "metadata": {},

+ "source": [

+ "### CroCo-Flow example"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "9ee257a7",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image1 = np.asarray(Image.open(''))\n",

+ "image2 = np.asarray(Image.open(''))"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "d5edccf0",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocoflow.pth', None, device)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b19692c3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",

+ "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",

+ "with torch.inference_mode():\n",

+ " pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",

+ "pred = pred.squeeze(0).permute(1,2,0).cpu().numpy()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "26f79db3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "plt.imshow(flowToColor(pred))\n",

+ "plt.axis('off')"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.7"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}

diff --git a/dynamic_predictor/croco/datasets/__init__.py b/dynamic_predictor/croco/datasets/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/dynamic_predictor/croco/datasets/crops/README.MD b/dynamic_predictor/croco/datasets/crops/README.MD

new file mode 100644

index 0000000000000000000000000000000000000000..47ddabebb177644694ee247ae878173a3a16644f

--- /dev/null

+++ b/dynamic_predictor/croco/datasets/crops/README.MD

@@ -0,0 +1,104 @@

+## Generation of crops from the real datasets

+

+The instructions below describe how to generate the crops used for pre-training CroCo v2 from the following real-world datasets: ARKitScenes, MegaDepth, 3DStreetView and IndoorVL.

+

+### Download the metadata of the crops to generate

+

+First, download the metadata and put them in `./data/`:

+```

+mkdir -p data

+cd data/

+wget https://download.europe.naverlabs.com/ComputerVision/CroCo/data/crop_metadata.zip

+unzip crop_metadata.zip

+rm crop_metadata.zip

+cd ..

+```

+

+### Prepare the original datasets

+

+Second, download the original datasets into `./data/original_datasets/`.

+```

+mkdir -p data/original_datasets

+```

+

+##### ARKitScenes

+

+Download the `raw` dataset from https://github.com/apple/ARKitScenes/blob/main/DATA.md and put it in `./data/original_datasets/ARKitScenes/`.

+The resulting file structure should look like this:

+```

+./data/original_datasets/ARKitScenes/

+└───Training

+ └───40753679

+ │ │ ultrawide

+ │ │ ...

+ └───40753686

+ │

+ ...

+```

+

+##### MegaDepth

+

+Download `MegaDepth v1 Dataset` from https://www.cs.cornell.edu/projects/megadepth/ and put it in `./data/original_datasets/MegaDepth/`.

+The resulting file structure should look like this:

+

+```

+./data/original_datasets/MegaDepth/

+└───0000

+│ └───images

+│ │ │ 1000557903_87fa96b8a4_o.jpg

+│ │ └ ...

+│ └─── ...

+└───0001

+│ │

+│ └ ...

+└─── ...

+```

+

+##### 3DStreetView

+

+Download `3D_Street_View` dataset from https://github.com/amir32002/3D_Street_View and put it in `./data/original_datasets/3DStreetView/`.

+The resulting file structure should look like this:

+

+```

+./data/original_datasets/3DStreetView/

+└───dataset_aligned

+│ └───0002

+│ │ │ 0000002_0000001_0000002_0000001.jpg

+│ │ └ ...

+│ └─── ...

+└───dataset_unaligned

+│ └───0003

+│ │ │ 0000003_0000001_0000002_0000001.jpg

+│ │ └ ...

+│ └─── ...

+```
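
Before generating crops, it can help to check that the downloaded data matches the layouts shown above. The following is a minimal sketch, not part of the CroCo code: the helper `count_images` is hypothetical, and it only assumes that images are `.jpg` files nested somewhere under the first-level sub-directories (for example `0000/images/` for MegaDepth, or `dataset_aligned/0002/` for 3DStreetView).

```python
from pathlib import Path


def count_images(root, pattern="*.jpg"):
    """Return {first-level sub-directory name: number of matching files below it}.

    An empty dict means the root is missing or has no sub-directories.
    """
    root = Path(root)
    if not root.is_dir():
        return {}
    counts = {}
    for sub in sorted(p for p in root.iterdir() if p.is_dir()):
        # rglob searches recursively, so nested folders such as `images/` are covered.
        counts[sub.name] = sum(1 for _ in sub.rglob(pattern))
    return counts


if __name__ == "__main__":
    base = Path("./data/original_datasets")
    for name in ("MegaDepth", "3DStreetView"):
        counts = count_images(base / name)
        if not counts:
            print(f"{name}: nothing found under {base / name}")
        for sub, n in counts.items():
            print(f"{name}/{sub}: {n} jpg files")
```

A sub-directory reported with zero files usually points to an incomplete download or an archive extracted one level too deep.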

+

+##### IndoorVL

+

+Download the `IndoorVL` datasets using [Kapture](https://github.com/naver/kapture).

+

+```