ui

app.py

CHANGED

|

@@ -319,19 +319,18 @@ def main():

|

|

| 319 |

num_frames = gr.Slider(minimum=1, maximum=129, step=1, label="Number of Frames", value=49)

|

| 320 |

|

| 321 |

with gr.Row():

|

| 322 |

-

|

| 323 |

-

|

| 324 |

-

|

| 325 |

-

|

| 326 |

-

|

| 327 |

-

|

|

|

|

| 328 |

|

| 329 |

-

generate_button = gr.Button("Generate Video")

|

| 330 |

-

generate_button.click(generate_video, inputs=[prompt_textbox, frame1, frame2, resolution, guidance_scale, num_frames, num_inference_steps], outputs=outputs)

|

| 331 |

with gr.Accordion():

|

| 332 |

gr.Markdown("""

|

| 333 |

|

| 334 |

-

##

|

| 335 |

---

|

| 336 |

**Our architecture builds upon existing models, introducing key enhancements to optimize keyframe-based video generation**:

|

| 337 |

|

|

@@ -341,13 +340,17 @@ def main():

|

|

| 341 |

|

| 342 |

## Example Results

|

| 343 |

|

|

|

|

|

|

|

| 344 |

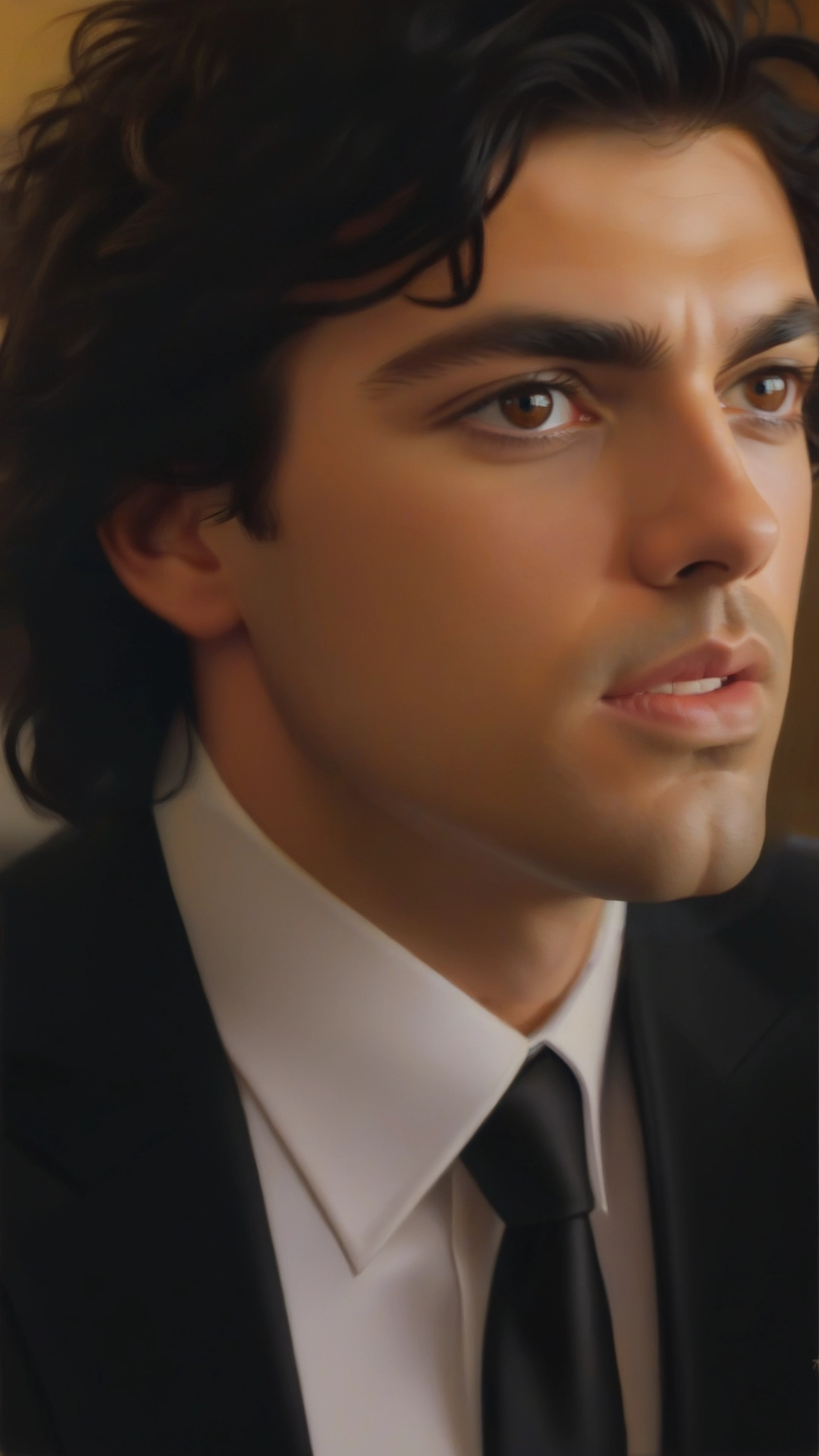

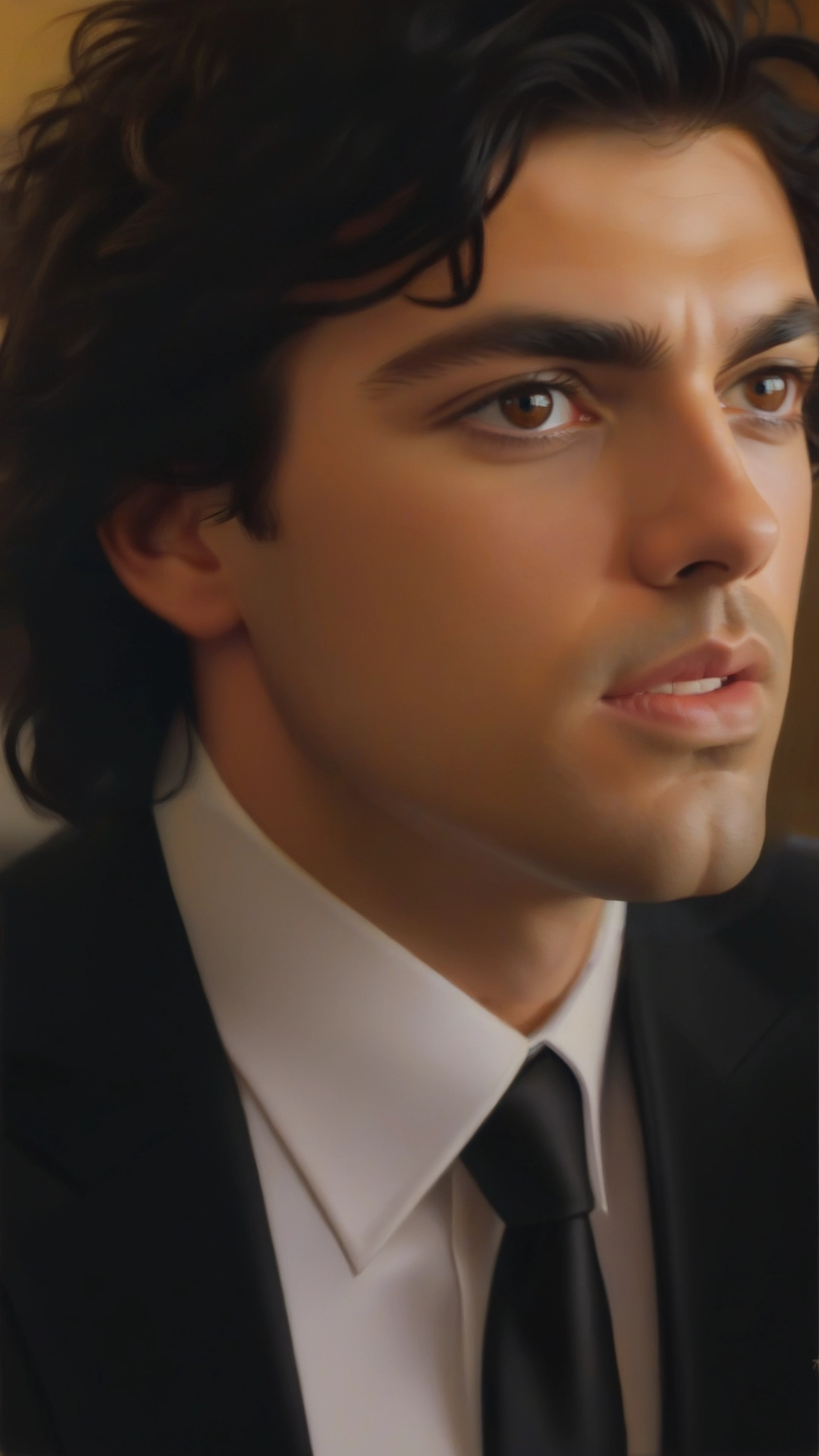

| Image 1 | Image 2 | Generated Video |

|

| 345 |

|---------|---------|-----------------|

|

| 346 |

-

|

|

| 347 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/b00ba193-b3b7-41a1-9bc1-9fdaceba6efa.mp4"></video> |

|

| 348 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/0cb84780-4fdf-4ecc-ab48-12e7e1055a39.mp4"></video> |

|

| 349 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/ce12156f-0ac2-4d16-b489-37e85c61b5b2.mp4"></video> |

|

| 350 |

|

|

|

|

|

|

|

| 351 |

## Recommended Settings

|

| 352 |

1. The model works best on human subjects. Single subject images work slightly better.

|

| 353 |

2. It is recommended to use the following image generation resolutions `720x1280`, `544x960`, `1280x720`, `960x544`.

|

|

@@ -358,6 +361,7 @@ def main():

|

|

| 358 |

## FINAL THOUGHTS: This ZeroGPU space, while successfully loaded, has its memory packed to the rim. If you're lucky you may be able to sneak in a small demo inference here and there, but you will most definitely not be using the recommended settings listed above. Help, of course, is not only welcome but very much appreciated. Learn more about our non-profit initiative, [AI Without Borders](https://huggingface.co/aiwithoutborders-xyz), by following us on Huggingface or on [X](http://x.com/borderlesstools), where we will be announcing a handful of exciting developments.

|

| 359 |

|

| 360 |

""", sanitize_html=False, elem_id="md_footer", container=True)

|

|

|

|

| 361 |

|

| 362 |

demo.launch(show_error=True)

|

| 363 |

|

|

|

|

| 319 |

num_frames = gr.Slider(minimum=1, maximum=129, step=1, label="Number of Frames", value=49)

|

| 320 |

|

| 321 |

with gr.Row():

|

| 322 |

+

with gr.Column():

|

| 323 |

+

frame1 = gr.Image(label="Frame 1", type="pil")

|

| 324 |

+

frame2 = gr.Image(label="Frame 2", type="pil")

|

| 325 |

+

num_inference_steps = gr.Slider(minimum=1, maximum=100, step=1, label="Number of Inference Steps", value=30)

|

| 326 |

+

generate_button = gr.Button("Generate Video")

|

| 327 |

+

with gr.Column():

|

| 328 |

+

outputs = gr.Video(label="Generated Video")

|

| 329 |

|

|

|

|

|

|

|

| 330 |

with gr.Accordion():

|

| 331 |

gr.Markdown("""

|

| 332 |

|

| 333 |

+

## HunyuanVideo Keyframe Control Lora is an adapter for HunyuanVideo T2V model for keyframe-based video generation.

|

| 334 |

---

|

| 335 |

**Our architecture builds upon existing models, introducing key enhancements to optimize keyframe-based video generation**:

|

| 336 |

|

|

|

|

| 340 |

|

| 341 |

## Example Results

|

| 342 |

|

| 343 |

+

<div>

|

| 344 |

+

|

| 345 |

| Image 1 | Image 2 | Generated Video |

|

| 346 |

|---------|---------|-----------------|

|

| 347 |

+

| <img src="https://content.dashtoon.ai/stability-images/41aeca63-064a-4003-8c8b-bfe2cc80d275.png" > |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/14b7dd1a-1f46-4c4c-b4ec-9d0f948712af.mp4"></video> |

|

| 348 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/b00ba193-b3b7-41a1-9bc1-9fdaceba6efa.mp4"></video> |

|

| 349 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/0cb84780-4fdf-4ecc-ab48-12e7e1055a39.mp4"></video> |

|

| 350 |

|  |  | <video controls autoplay src="https://content.dashtoon.ai/stability-images/ce12156f-0ac2-4d16-b489-37e85c61b5b2.mp4"></video> |

|

| 351 |

|

| 352 |

+

</div>

|

| 353 |

+

|

| 354 |

## Recommended Settings

|

| 355 |

1. The model works best on human subjects. Single subject images work slightly better.

|

| 356 |

2. It is recommended to use the following image generation resolutions `720x1280`, `544x960`, `1280x720`, `960x544`.

|

|

|

|

| 361 |

## FINAL THOUGHTS: This ZeroGPU space, while successfully loaded, has its memory packed to the rim. If you're lucky you may be able to sneak in a small demo inference here and there, but you will most definitely not be using the recommended settings listed above. Help, of course, is not only welcome but very much appreciated. Learn more about our non-profit initiative, [AI Without Borders](https://huggingface.co/aiwithoutborders-xyz), by following us on Huggingface or on [X](http://x.com/borderlesstools), where we will be announcing a handful of exciting developments.

|

| 362 |

|

| 363 |

""", sanitize_html=False, elem_id="md_footer", container=True)

|

| 364 |

+

generate_button.click(generate_video, inputs=[prompt_textbox, frame1, frame2, resolution, guidance_scale, num_frames, num_inference_steps], outputs=outputs)

|

| 365 |

|

| 366 |

demo.launch(show_error=True)

|

| 367 |

|
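The `num_frames` slider in this diff accepts any integer from 1 to 129 in steps of 1, while the footer recommends four specific resolutions. A minimal sketch of two input-normalizing helpers, assuming (this diff does not state it) that the HunyuanVideo base model expects frame counts of the form 4k + 1, a pattern both the default of 49 and the maximum of 129 fit. The names `snap_num_frames`, `pick_resolution`, and the `max_pixels` parameter are hypothetical and do not appear in `app.py`.

```python
import math
from typing import Optional, Tuple

# The four resolutions recommended in the Space's footer, as "WIDTHxHEIGHT".
RECOMMENDED_RESOLUTIONS = ["720x1280", "544x960", "1280x720", "960x544"]


def snap_num_frames(n: int, max_frames: int = 129) -> int:
    """Round n to the nearest frame count of the form 4k + 1 in [1, max_frames].

    Assumption (hypothetical helper): the base model expects 4k + 1 frames.
    Ties round down, and the result never exceeds max_frames.
    """
    n = max(1, min(max_frames, int(n)))
    down = 4 * ((n - 1) // 4) + 1  # largest 4k + 1 value <= n
    up = down + 4                  # next 4k + 1 value above it
    if up <= max_frames and (up - n) < (n - down):
        return up
    return down


def _parse(res: str) -> Tuple[int, int]:
    """Split a "WIDTHxHEIGHT" string into (width, height) integers."""
    w, h = res.lower().split("x")
    return int(w), int(h)


def pick_resolution(width: int, height: int, max_pixels: Optional[int] = None) -> str:
    """Return the recommended resolution whose aspect ratio is closest to width/height.

    If max_pixels is given (a hypothetical knob for memory-constrained hardware),
    only resolutions with width * height <= max_pixels are considered.
    """
    target = width / height
    candidates = [
        r for r in RECOMMENDED_RESOLUTIONS
        if max_pixels is None or _parse(r)[0] * _parse(r)[1] <= max_pixels
    ]
    if not candidates:
        raise ValueError("no recommended resolution fits within max_pixels")

    def aspect_distance(res: str) -> float:
        w, h = _parse(res)
        # Log-ratio distance treats too-wide and too-tall mismatches symmetrically.
        return abs(math.log((w / h) / target))

    return min(candidates, key=aspect_distance)
```

For example, `snap_num_frames(50)` returns 49 and `pick_resolution(1920, 1080, max_pixels=544 * 960)` returns `"960x544"`. Rounding ties down keeps the frame count, and with it memory use, on the lower side, which matters on a memory-constrained ZeroGPU Space.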