Spaces:

Runtime error

Runtime error

Upload 25 files

Browse files- .gitattributes +1 -0

- README.md +71 -13

- __pycache__/app.cpython-311.pyc +0 -0

- __pycache__/app.cpython-37.pyc +0 -0

- app.py +10 -198

- images/1_gaussian_filter.png +3 -0

- images/inputExample/0.jpg +0 -0

- images/inputExample/1155.jpg +0 -0

- ip_adapter/__init__.py +9 -0

- ip_adapter/__pycache__/__init__.cpython-311.pyc +0 -0

- ip_adapter/__pycache__/__init__.cpython-37.pyc +0 -0

- ip_adapter/__pycache__/attention_processor.cpython-311.pyc +0 -0

- ip_adapter/__pycache__/attention_processor.cpython-37.pyc +0 -0

- ip_adapter/__pycache__/ip_adapter.cpython-311.pyc +0 -0

- ip_adapter/__pycache__/ip_adapter.cpython-37.pyc +0 -0

- ip_adapter/__pycache__/resampler.cpython-311.pyc +0 -0

- ip_adapter/__pycache__/resampler.cpython-37.pyc +0 -0

- ip_adapter/__pycache__/utils.cpython-311.pyc +0 -0

- ip_adapter/__pycache__/utils.cpython-37.pyc +0 -0

- ip_adapter/attention_processor.py +652 -0

- ip_adapter/custom_pipelines.py +394 -0

- ip_adapter/ip_adapter.py +504 -0

- ip_adapter/resampler.py +165 -0

- ip_adapter/test_resampler.py +44 -0

- ip_adapter/utils.py +5 -0

- requirements.txt +10 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

images/1_gaussian_filter.png filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,13 +1,71 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

---

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ___***FilterPrompt: Guiding Image Transfer in Diffusion Models***___

|

| 2 |

+

|

| 3 |

+

<a href='https://meaoxixi.github.io/FilterPrompt/'><img src='https://img.shields.io/badge/Project-Page-green'></a>

|

| 4 |

+

<a href='https://arxiv.org/pdf/2404.13263'><img src='https://img.shields.io/badge/Paper-blue'></a>

|

| 5 |

+

<a href='https://arxiv.org/pdf/2404.13263'><img src='https://img.shields.io/badge/Demo-orange'></a>

|

| 6 |

+

|

| 7 |

+

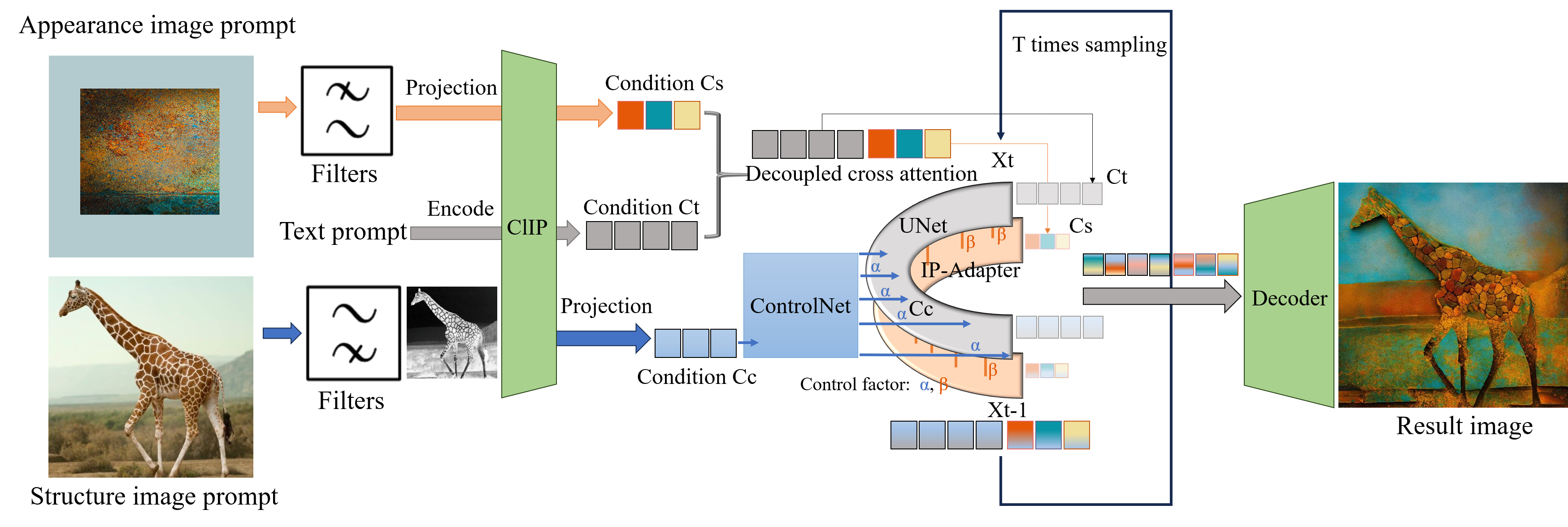

We propose FilterPrompt, an approach to enhance the model control effect. It can be universally applied to any diffusion model, allowing users to adjust the representation of specific image features in accordance with task requirements, thereby facilitating more precise and controllable generation outcomes. In particular, our designed experiments demonstrate that the FilterPrompt optimizes feature correlation, mitigates content conflicts during the generation process, and enhances the model's control capability.

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

---

|

| 12 |

+

# Getting Started

|

| 13 |

+

## Prerequisites

|

| 14 |

+

- We recommend running this repository using [Anaconda](https://docs.anaconda.com/anaconda/install/).

|

| 15 |

+

- NVIDIA GPU (Available memory is greater than 20GB)

|

| 16 |

+

- CUDA CuDNN (version ≥ 11.1, we actually use 11.7)

|

| 17 |

+

- Python 3.11.3 (Gradio requires Python 3.8 or higher)

|

| 18 |

+

- PyTorch: [Find the torch version that is suitable for the current cuda](https://pytorch.org/get-started/previous-versions/)

|

| 19 |

+

- 【example】:`pip install torch==2.0.0+cu117 torchvision==0.15.1+cu117 torchaudio==2.0.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117`

|

| 20 |

+

|

| 21 |

+

## Installation

|

| 22 |

+

Specifically, inspired by the concept of decoupled cross-attention in [IP-Adapter](https://ip-adapter.github.io/), we apply a similar methodology.

|

| 23 |

+

Please follow the instructions below to complete the environment configuration required for the code:

|

| 24 |

+

- Cloning this repo

|

| 25 |

+

```

|

| 26 |

+

git clone --single-branch --branch main https://github.com/Meaoxixi/FilterPrompt.git

|

| 27 |

+

```

|

| 28 |

+

- Dependencies

|

| 29 |

+

|

| 30 |

+

All dependencies for defining the environment are provided in `requirements.txt`.

|

| 31 |

+

```

|

| 32 |

+

cd FilterPrompt

|

| 33 |

+

conda create --name fp_env python=3.11.3

|

| 34 |

+

conda activate fp_env

|

| 35 |

+

pip install torch==2.0.0+cu117 torchvision==0.15.1+cu117 torchaudio==2.0.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117

|

| 36 |

+

pip install -r requirements.txt

|

| 37 |

+

```

|

| 38 |

+

- Download the necessary modules in the relative path `models/` from the following links

|

| 39 |

+

|

| 40 |

+

| Path | Description |

|

| 41 |

+

|:---------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------------------------------|

|

| 42 |

+

| `models/` | root path |

|

| 43 |

+

| ├── `ControlNet/` | Place the pre-trained model of [ControlNet](https://huggingface.co/lllyasviel) |

|

| 44 |

+

| ├── `control_v11f1p_sd15_depth ` | [ControlNet_depth](https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth/tree/main) |

|

| 45 |

+

| └── `control_v11p_sd15_softedge` | [ControlNet_softEdge](https://huggingface.co/lllyasviel/control_v11p_sd15_softedge/tree/main) |

|

| 46 |

+

| ├── `IP-Adapter/` | [IP-Adapter](https://huggingface.co/h94/IP-Adapter/tree/main/models) |

|

| 47 |

+

| ├── `image_encoder ` | image_encoder of IP-Adapter |

|

| 48 |

+

| └── `other needed configuration files` | |

|

| 49 |

+

| ├── `sd-vae-ft-mse/` | Place the model of [sd-vae-ft-mse](https://huggingface.co/stabilityai/sd-vae-ft-mse/tree/main) |

|

| 50 |

+

| ├── `stable-diffusion-v1-5/` | Place the model of [stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) |

|

| 51 |

+

| ├── `Realistic_Vision_V4.0_noVAE/` | Place the model of [Realistic_Vision_V4.0_noVAE](https://huggingface.co/SG161222/Realistic_Vision_V4.0_noVAE/tree/main) |

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

## Demo on Gradio

|

| 57 |

+

|

| 58 |

+

After installation and downloading the models, you can use `python app.py` to perform code in gradio. We have designed four task types to facilitate you to experience the application scenarios of FilterPrompt.

|

| 59 |

+

|

| 60 |

+

## Citation

|

| 61 |

+

If you find [FilterPrompt](https://arxiv.org/abs/2404.13263) helpful in your research/applications, please cite using this BibTeX:

|

| 62 |

+

```bibtex

|

| 63 |

+

@misc{wang2024filterprompt,

|

| 64 |

+

title={FilterPrompt: Guiding Image Transfer in Diffusion Models},

|

| 65 |

+

author={Xi Wang and Yichen Peng and Heng Fang and Haoran Xie and Xi Yang and Chuntao Li},

|

| 66 |

+

year={2024},

|

| 67 |

+

eprint={2404.13263},

|

| 68 |

+

archivePrefix={arXiv},

|

| 69 |

+

primaryClass={cs.CV}

|

| 70 |

+

}

|

| 71 |

+

```

|

__pycache__/app.cpython-311.pyc

ADDED

|

Binary file (22 kB). View file

|

|

|

__pycache__/app.cpython-37.pyc

ADDED

|

Binary file (6.39 kB). View file

|

|

|

app.py

CHANGED

|

@@ -2,8 +2,6 @@ import gradio as gr

|

|

| 2 |

import torch

|

| 3 |

from PIL import Image, ImageFilter, ImageOps,ImageEnhance

|

| 4 |

from scipy.ndimage import rank_filter, maximum_filter

|

| 5 |

-

from skimage.filters import gabor

|

| 6 |

-

import skimage.color

|

| 7 |

import numpy as np

|

| 8 |

import pathlib

|

| 9 |

import glob

|

|

@@ -15,8 +13,6 @@ from ip_adapter import IPAdapter

|

|

| 15 |

DESCRIPTION = """# [FilterPrompt](https://arxiv.org/abs/2404.13263): Guiding Imgae Transfer in Diffusion Models

|

| 16 |

<img id="teaser" alt="teaser" src="https://raw.githubusercontent.com/Meaoxixi/FilterPrompt/gh-pages/resources/teaser.png" />

|

| 17 |

"""

|

| 18 |

-

# <img id="overview" alt="overview" src="https://github.com/Meaoxixi/FilterPrompt/blob/gh-pages/resources/teaser.png" />

|

| 19 |

-

# 在你提供的链接中,你需要将 GitHub 页面链接中的 github.com 替换为 raw.githubusercontent.com,并将 blob 分支名称从链接中删除,以便获取原始文件内容。

|

| 20 |

##################################################################################################################

|

| 21 |

# 0. Get Pre-Models' Path Ready

|

| 22 |

##################################################################################################################

|

|

@@ -77,7 +73,6 @@ def image_grid(imgs, rows, cols):

|

|

| 77 |

grid.paste(img, box=(i % cols * w, i // cols * h))

|

| 78 |

return grid

|

| 79 |

#########################################################################

|

| 80 |

-

# 接下来是有关demo有关的函数定义

|

| 81 |

## funcitions for task 1 : style transfer

|

| 82 |

#########################################################################

|

| 83 |

def gaussian_blur(image, blur_radius):

|

|

@@ -120,50 +115,17 @@ def task1_test(photo, blur_radius, sketch):

|

|

| 120 |

#########################################################################

|

| 121 |

## funcitions for task 2 : color transfer

|

| 122 |

#########################################################################

|

| 123 |

-

#

|

| 124 |

-

def desaturate_filter(image):

|

| 125 |

-

image = Image.open(image)

|

| 126 |

-

return ImageOps.grayscale(image)

|

| 127 |

-

|

| 128 |

-

def gabor_filter(image):

|

| 129 |

-

image = Image.open(image)

|

| 130 |

-

image_array = np.array(image.convert('L')) # 转换为灰度图像

|

| 131 |

-

filtered_real, filtered_imag = gabor(image_array, frequency=0.6)

|

| 132 |

-

filtered_image = np.sqrt(filtered_real**2 + filtered_imag**2)

|

| 133 |

-

return Image.fromarray(np.uint8(filtered_image))

|

| 134 |

-

|

| 135 |

-

def rank_filter_func(image):

|

| 136 |

-

image = Image.open(image)

|

| 137 |

-

image_array = np.array(image.convert('L'))

|

| 138 |

-

filtered_image = rank_filter(image_array, rank=5, size=5)

|

| 139 |

-

return Image.fromarray(np.uint8(filtered_image))

|

| 140 |

-

|

| 141 |

-

def max_filter_func(image):

|

| 142 |

-

image = Image.open(image)

|

| 143 |

-

image_array = np.array(image.convert('L'))

|

| 144 |

-

filtered_image = maximum_filter(image_array, size=20)

|

| 145 |

-

return Image.fromarray(np.uint8(filtered_image))

|

| 146 |

-

# 定义处理函数

|

| 147 |

-

def fun2(image,image2, filter_name):

|

| 148 |

-

if filter_name == "Desaturate Filter":

|

| 149 |

-

return desaturate_filter(image),desaturate_filter(image2)

|

| 150 |

-

elif filter_name == "Gabor Filter":

|

| 151 |

-

return gabor_filter(image),gabor_filter(image2)

|

| 152 |

-

elif filter_name == "Rank Filter":

|

| 153 |

-

return rank_filter_func(image),rank_filter_func(image2)

|

| 154 |

-

elif filter_name == "Max Filter":

|

| 155 |

-

return max_filter_func(image),max_filter_func(image2)

|

| 156 |

-

else:

|

| 157 |

-

return image,image2

|

| 158 |

-

|

| 159 |

|

| 160 |

#############################################

|

| 161 |

-

# Demo

|

| 162 |

#############################################

|

| 163 |

-

|

| 164 |

-

#

|

| 165 |

-

|

| 166 |

-

|

|

|

|

|

|

|

| 167 |

gr.Markdown(DESCRIPTION)

|

| 168 |

|

| 169 |

# 1. 第一个任务Style Transfer的界面代码(青铜器拓本转照片)

|

|

@@ -220,161 +182,11 @@ with gr.Blocks(css="style.css") as demo:

|

|

| 220 |

inputs=[photo, gaussianKernel, sketch],

|

| 221 |

outputs=[original_result_task1, result_image_1],

|

| 222 |

)

|

| 223 |

-

|

| 224 |

-

# # 2. 第二个任务增强几何属性保护-Color transfer

|

| 225 |

-

# with gr.Group():

|

| 226 |

-

# ## 2.1 任务描述

|

| 227 |

-

# gr.Markdown(

|

| 228 |

-

# """

|

| 229 |

-

# ## Case 2: Color transferr

|

| 230 |

-

# - In this task, our main goal is to transfer color from imageA to imageB. We can feel the effect of the filter on the protection of geometric properties.

|

| 231 |

-

# - In the standard Controlnet-depth mode, the ideal input is the depth map.

|

| 232 |

-

# - Here, we choose to input the result processed by some filters into the network instead of the original depth map.

|

| 233 |

-

# - You can feel from the use of different filters that "decolorization+inversion+enhancement of contrast" can maximize the retention of detailed geometric information in the original image.

|

| 234 |

-

# """)

|

| 235 |

-

# ## 2.1 输入输出控件布局

|

| 236 |

-

# with gr.Row():

|

| 237 |

-

# with gr.Column():

|

| 238 |

-

# with gr.Row():

|

| 239 |

-

# input_appearImage = gr.Image(label="Input Appearance Image", type="filepath")

|

| 240 |

-

# with gr.Row():

|

| 241 |

-

# filter_dropdown = gr.Dropdown(

|

| 242 |

-

# choices=["Desaturate Filter", "Gabor Filter", "Rank Filter", "Max Filter"],

|

| 243 |

-

# label="Select Filter",

|

| 244 |

-

# value="Desaturate Filter"

|

| 245 |

-

# )

|

| 246 |

-

# with gr.Column():

|

| 247 |

-

# with gr.Row():

|

| 248 |

-

# input_strucImage = gr.Image(label="Input Structure Image", type="filepath")

|

| 249 |

-

# with gr.Row():

|

| 250 |

-

# geometry_button = gr.Button("Preprocess")

|

| 251 |

-

# with gr.Column():

|

| 252 |

-

# with gr.Row():

|

| 253 |

-

# afterFilterImage = gr.Image(label="Appearance image after filter choosed", interactive=False)

|

| 254 |

-

# with gr.Column():

|

| 255 |

-

# result_task2 = gr.Image(label="Generate results")

|

| 256 |

-

# # instyle = gr.State()

|

| 257 |

-

#

|

| 258 |

-

# ## 2.3 示例图展示

|

| 259 |

-

# with gr.Row():

|

| 260 |

-

# gr.Image(value="task/color_transfer.png", label="example Image", type="filepath")

|

| 261 |

-

#

|

| 262 |

-

# # 3. 第3个任务是光照效果的改善

|

| 263 |

-

# with gr.Group():

|

| 264 |

-

# ## 3.1 任务描述

|

| 265 |

-

# gr.Markdown(

|

| 266 |

-

# """

|

| 267 |

-

# ## Case 3: Image-to-Image translation

|

| 268 |

-

# - In this example, our goal is to turn a simple outline drawing/sketch into a detailed and realistic photo.

|

| 269 |

-

# - Here, we provide the original mask generation results, and provide the generation results after superimposing the image on the mask and passing the decolorization filter.

|

| 270 |

-

# - From this, you can feel that the mask obtained by the decolorization operation can retain a certain amount of original lighting information and improve the texture of the generated results.

|

| 271 |

-

# """)

|

| 272 |

-

# ## 3.2 输入输出控件布局

|

| 273 |

-

# with gr.Row():

|

| 274 |

-

# with gr.Column():

|

| 275 |

-

# with gr.Row():

|

| 276 |

-

# input_appearImage = gr.Image(label="Input Appearance Image", type="filepath")

|

| 277 |

-

# with gr.Row():

|

| 278 |

-

# filter_dropdown = gr.Dropdown(

|

| 279 |

-

# choices=["Desaturate Filter", "Gabor Filter", "Rank Filter", "Max Filter"],

|

| 280 |

-

# label="Select Filter",

|

| 281 |

-

# value="Desaturate Filter"

|

| 282 |

-

# )

|

| 283 |

-

# with gr.Column():

|

| 284 |

-

# with gr.Row():

|

| 285 |

-

# input_strucImage = gr.Image(label="Input Structure Image", type="filepath")

|

| 286 |

-

# with gr.Row():

|

| 287 |

-

# geometry_button = gr.Button("Preprocess")

|

| 288 |

-

# with gr.Column():

|

| 289 |

-

# with gr.Row():

|

| 290 |

-

# afterFilterImage = gr.Image(label="Appearance image after filter choosed", interactive=False)

|

| 291 |

-

# with gr.Column():

|

| 292 |

-

# result_task2 = gr.Image(label="Generate results")

|

| 293 |

-

# # instyle = gr.State()

|

| 294 |

-

#

|

| 295 |

-

# ## 3.3 示例图展示

|

| 296 |

-

# with gr.Row():

|

| 297 |

-

# gr.Image(value="task/4_light.jpg", label="example Image", type="filepath")

|

| 298 |

-

#

|

| 299 |

-

# # 4. 第4个任务是materials transfer

|

| 300 |

-

# with gr.Group():

|

| 301 |

-

# ## 4.1 任务描述

|

| 302 |

-

# gr.Markdown(

|

| 303 |

-

# """

|

| 304 |

-

# ## Case 4: Materials Transfer

|

| 305 |

-

# - In this example, our goal is to transfer the material appearance of one object image to another object image. The process involves changing the surface properties of objects in the image so that they appear to be made of another material.

|

| 306 |

-

# - Here, we provide the original generation results and provide a variety of edited filters.

|

| 307 |

-

# - You can specify any filtering operation and intuitively feel the impact of the filtering on the rendering properties in the generated results.

|

| 308 |

-

# - For example, a sharpen filter can sharpen the texture of a stone, a Gaussian blur can smooth the texture of a stone, and a custom filter can change the style of a stone. These all show that filterPrompt is simple and intuitive.

|

| 309 |

-

# """)

|

| 310 |

-

# ## 4.2 输入输出控件布局

|

| 311 |

-

# with gr.Row():

|

| 312 |

-

# with gr.Column():

|

| 313 |

-

# with gr.Row():

|

| 314 |

-

# input_appearImage = gr.Image(label="Input Appearance Image", type="filepath")

|

| 315 |

-

# with gr.Row():

|

| 316 |

-

# filter_dropdown = gr.Dropdown(

|

| 317 |

-

# choices=["Desaturate Filter", "Gabor Filter", "Rank Filter", "Max Filter"],

|

| 318 |

-

# label="Select Filter",

|

| 319 |

-

# value="Desaturate Filter"

|

| 320 |

-

# )

|

| 321 |

-

# with gr.Column():

|

| 322 |

-

# with gr.Row():

|

| 323 |

-

# input_strucImage = gr.Image(label="Input Structure Image", type="filepath")

|

| 324 |

-

# with gr.Row():

|

| 325 |

-

# geometry_button = gr.Button("Preprocess")

|

| 326 |

-

# with gr.Column():

|

| 327 |

-

# with gr.Row():

|

| 328 |

-

# afterFilterImage = gr.Image(label="Appearance image after filter choosed", interactive=False)

|

| 329 |

-

# with gr.Column():

|

| 330 |

-

# result_task2 = gr.Image(label="Generate results")

|

| 331 |

-

# # instyle = gr.State()

|

| 332 |

-

#

|

| 333 |

-

# ## 3.3 示例图展示

|

| 334 |

-

# with gr.Row():

|

| 335 |

-

# gr.Image(value="task/3mateialsTransfer.jpg", label="example Image", type="filepath")

|

| 336 |

-

#

|

| 337 |

-

#

|

| 338 |

-

# geometry_button.click(

|

| 339 |

-

# fn=fun2,

|

| 340 |

-

# inputs=[input_strucImage, input_appearImage, filter_dropdown],

|

| 341 |

-

# outputs=[afterFilterImage, result_task2],

|

| 342 |

-

# )

|

| 343 |

-

# aligned_face.change(

|

| 344 |

-

# fn=model.reconstruct_face,

|

| 345 |

-

# inputs=[aligned_face, encoder_type],

|

| 346 |

-

# outputs=[

|

| 347 |

-

# reconstructed_face,

|

| 348 |

-

# instyle,

|

| 349 |

-

# ],

|

| 350 |

-

# )

|

| 351 |

-

# style_type.change(

|

| 352 |

-

# fn=update_slider,

|

| 353 |

-

# inputs=style_type,

|

| 354 |

-

# outputs=style_index,

|

| 355 |

-

# )

|

| 356 |

-

# style_type.change(

|

| 357 |

-

# fn=update_style_image,

|

| 358 |

-

# inputs=style_type,

|

| 359 |

-

# outputs=style_image,

|

| 360 |

-

# )

|

| 361 |

-

# generate_button.click(

|

| 362 |

-

# fn=model.generate,

|

| 363 |

-

# inputs=[

|

| 364 |

-

# style_type,

|

| 365 |

-

# style_index,

|

| 366 |

-

# structure_weight,

|

| 367 |

-

# color_weight,

|

| 368 |

-

# structure_only,

|

| 369 |

-

# instyle,

|

| 370 |

-

# ],

|

| 371 |

-

# outputs=result,

|

| 372 |

-

#)

|

| 373 |

##################################################################################################################

|

| 374 |

# 2. run Demo on gradio

|

| 375 |

##################################################################################################################

|

| 376 |

|

| 377 |

if __name__ == "__main__":

|

| 378 |

demo.queue(max_size=5).launch()

|

| 379 |

-

|

| 380 |

-

#demo.queue(max_size=5).launch(server_port=12345, share=True)

|

|

|

|

| 2 |

import torch

|

| 3 |

from PIL import Image, ImageFilter, ImageOps,ImageEnhance

|

| 4 |

from scipy.ndimage import rank_filter, maximum_filter

|

|

|

|

|

|

|

| 5 |

import numpy as np

|

| 6 |

import pathlib

|

| 7 |

import glob

|

|

|

|

| 13 |

DESCRIPTION = """# [FilterPrompt](https://arxiv.org/abs/2404.13263): Guiding Imgae Transfer in Diffusion Models

|

| 14 |

<img id="teaser" alt="teaser" src="https://raw.githubusercontent.com/Meaoxixi/FilterPrompt/gh-pages/resources/teaser.png" />

|

| 15 |

"""

|

|

|

|

|

|

|

| 16 |

##################################################################################################################

|

| 17 |

# 0. Get Pre-Models' Path Ready

|

| 18 |

##################################################################################################################

|

|

|

|

| 73 |

grid.paste(img, box=(i % cols * w, i // cols * h))

|

| 74 |

return grid

|

| 75 |

#########################################################################

|

|

|

|

| 76 |

## funcitions for task 1 : style transfer

|

| 77 |

#########################################################################

|

| 78 |

def gaussian_blur(image, blur_radius):

|

|

|

|

| 115 |

#########################################################################

|

| 116 |

## funcitions for task 2 : color transfer

|

| 117 |

#########################################################################

|

| 118 |

+

# todo

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 119 |

|

| 120 |

#############################################

|

| 121 |

+

# Demo

|

| 122 |

#############################################

|

| 123 |

+

theme = gr.themes.Monochrome(primary_hue="blue").set(

|

| 124 |

+

loader_color="#FF0000",

|

| 125 |

+

slider_color="#FF0000",

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

with gr.Blocks(theme=theme) as demo:

|

| 129 |

gr.Markdown(DESCRIPTION)

|

| 130 |

|

| 131 |

# 1. 第一个任务Style Transfer的界面代码(青铜器拓本转照片)

|

|

|

|

| 182 |

inputs=[photo, gaussianKernel, sketch],

|

| 183 |

outputs=[original_result_task1, result_image_1],

|

| 184 |

)

|

| 185 |

+

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 186 |

##################################################################################################################

|

| 187 |

# 2. run Demo on gradio

|

| 188 |

##################################################################################################################

|

| 189 |

|

| 190 |

if __name__ == "__main__":

|

| 191 |

demo.queue(max_size=5).launch()

|

| 192 |

+

|

|

|

images/1_gaussian_filter.png

ADDED

|

Git LFS Details

|

images/inputExample/0.jpg

ADDED

|

images/inputExample/1155.jpg

ADDED

|

ip_adapter/__init__.py

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# from .ip_adapter import IPAdapter, IPAdapterPlus, IPAdapterPlusXL, IPAdapterXL, IPAdapterFull

|

| 2 |

+

from .ip_adapter import IPAdapter

|

| 3 |

+

__all__ = [

|

| 4 |

+

"IPAdapter",

|

| 5 |

+

# "IPAdapterPlus",

|

| 6 |

+

# "IPAdapterPlusXL",

|

| 7 |

+

# "IPAdapterXL",

|

| 8 |

+

# "IPAdapterFull",

|

| 9 |

+

]

|

ip_adapter/__pycache__/__init__.cpython-311.pyc

ADDED

|

Binary file (253 Bytes). View file

|

|

|

ip_adapter/__pycache__/__init__.cpython-37.pyc

ADDED

|

Binary file (211 Bytes). View file

|

|

|

ip_adapter/__pycache__/attention_processor.cpython-311.pyc

ADDED

|

Binary file (22.9 kB). View file

|

|

|

ip_adapter/__pycache__/attention_processor.cpython-37.pyc

ADDED

|

Binary file (10.8 kB). View file

|

|

|

ip_adapter/__pycache__/ip_adapter.cpython-311.pyc

ADDED

|

Binary file (22 kB). View file

|

|

|

ip_adapter/__pycache__/ip_adapter.cpython-37.pyc

ADDED

|

Binary file (11 kB). View file

|

|

|

ip_adapter/__pycache__/resampler.cpython-311.pyc

ADDED

|

Binary file (8.54 kB). View file

|

|

|

ip_adapter/__pycache__/resampler.cpython-37.pyc

ADDED

|

Binary file (4.17 kB). View file

|

|

|

ip_adapter/__pycache__/utils.cpython-311.pyc

ADDED

|

Binary file (470 Bytes). View file

|

|

|

ip_adapter/__pycache__/utils.cpython-37.pyc

ADDED

|

Binary file (359 Bytes). View file

|

|

|

ip_adapter/attention_processor.py

ADDED

|

@@ -0,0 +1,652 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# modified from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import torch.nn.functional as F

|

| 5 |

+

from PIL import Image

|

| 6 |

+

import numpy as np

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class AttnProcessor(nn.Module):

|

| 10 |

+

r"""

|

| 11 |

+

Default processor for performing attention-related computations.

|

| 12 |

+

|

| 13 |

+

用于执行与注意力相关的计算。

|

| 14 |

+

这个类的作用是对注意力相关的计算进行封装,使得代码更易于维护和扩展。

|

| 15 |

+

|

| 16 |

+

"""

|

| 17 |

+

|

| 18 |

+

def __init__(

|

| 19 |

+

self,

|

| 20 |

+

hidden_size=None,

|

| 21 |

+

cross_attention_dim=None,

|

| 22 |

+

):

|

| 23 |

+

super().__init__()

|

| 24 |

+

|

| 25 |

+

# 在__call__方法中,它接受一些输入参数:

|

| 26 |

+

# * attn(注意力机制)

|

| 27 |

+

# hidden_states(隐藏状态)

|

| 28 |

+

# encoder_hidden_states(编码器隐藏状态)

|

| 29 |

+

# attention_mask(注意力掩码)

|

| 30 |

+

# temb(可选的温度参数):通常用于控制注意力分布的集中度。

|

| 31 |

+

# 通过调整temb的数值,可以改变注意力分布的“尖锐程度”,从而影响模型对不同部分的关注程度。

|

| 32 |

+

# 较高的temb值可能会导致更加平均的注意力分布,而较低的temb值则可能导致更加集中的注意力分布。

|

| 33 |

+

# 这种机制可以用来调节模型的行为,使其更加灵活地适应不同的任务和数据特征。

|

| 34 |

+

#

|

| 35 |

+

# 然后它执行一系列操作,包括对隐藏状态进行一些变换,计算注意力分数,应用注意力权重,进行线性投影和丢弃操作,最后返回处理后的隐藏状态。

|

| 36 |

+

#

|

| 37 |

+

def __call__(

|

| 38 |

+

self,

|

| 39 |

+

attn,

|

| 40 |

+

hidden_states,

|

| 41 |

+

encoder_hidden_states=None,

|

| 42 |

+

attention_mask=None,

|

| 43 |

+

temb=None,

|

| 44 |

+

):

|

| 45 |

+

residual = hidden_states

|

| 46 |

+

# 残差连接

|

| 47 |

+

|

| 48 |

+

if attn.spatial_norm is not None:

|

| 49 |

+

hidden_states = attn.spatial_norm(hidden_states, temb)

|

| 50 |

+

|

| 51 |

+

input_ndim = hidden_states.ndim

|

| 52 |

+

|

| 53 |

+

if input_ndim == 4:

|

| 54 |

+

batch_size, channel, height, width = hidden_states.shape

|

| 55 |

+

hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

|

| 56 |

+

|

| 57 |

+

batch_size, sequence_length, _ = (

|

| 58 |

+

hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

|

| 59 |

+

)

|

| 60 |

+

attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

|

| 61 |

+

|

| 62 |

+

if attn.group_norm is not None:

|

| 63 |

+

hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

|

| 64 |

+

|

| 65 |

+

query = attn.to_q(hidden_states)

|

| 66 |

+

|

| 67 |

+

if encoder_hidden_states is None:

|

| 68 |

+

encoder_hidden_states = hidden_states

|

| 69 |

+

elif attn.norm_cross:

|

| 70 |

+

encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

|

| 71 |

+

|

| 72 |

+

key = attn.to_k(encoder_hidden_states)

|

| 73 |

+

value = attn.to_v(encoder_hidden_states)

|

| 74 |

+

|

| 75 |

+

query = attn.head_to_batch_dim(query)

|

| 76 |

+

key = attn.head_to_batch_dim(key)

|

| 77 |

+

value = attn.head_to_batch_dim(value)

|

| 78 |

+

|

| 79 |

+

# 这段代码首先使用query和key计算注意力分数,同时考虑了可能存在的attention_mask

|

| 80 |

+

# 然后利用这些注意力分数对value进行加权求和,得到了经过注意力机制加权后的hidden_states

|

| 81 |

+

# 最后,通过attn.batch_to_head_dim操作将hidden_states从批处理维度转换回头部维度。

|

| 82 |

+

# 这些操作是多头注意力机制中常见的步骤,用于计算并应用注意力权重。

|

| 83 |

+

attention_probs = attn.get_attention_scores(query, key, attention_mask)

|

| 84 |

+

hidden_states = torch.bmm(attention_probs, value)

|

| 85 |

+

hidden_states = attn.batch_to_head_dim(hidden_states)

|

| 86 |

+

|

| 87 |

+

# linear proj

|

| 88 |

+

hidden_states = attn.to_out[0](hidden_states)

|

| 89 |

+

# dropout

|

| 90 |

+

hidden_states = attn.to_out[1](hidden_states)

|

| 91 |

+

|

| 92 |

+

if input_ndim == 4:

|

| 93 |

+

hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

|

| 94 |

+

|

| 95 |

+

if attn.residual_connection:

|

| 96 |

+

hidden_states = hidden_states + residual

|

| 97 |

+

|

| 98 |

+

# 这个操作可能是为了对输出进行缩放或归一化,以确保输出的数值范围符合模型的需要

|

| 99 |

+

hidden_states = hidden_states / attn.rescale_output_factor

|

| 100 |

+

|

| 101 |

+

return hidden_states

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

class IPAttnProcessor(nn.Module):

|

| 105 |

+

r"""

|

| 106 |

+

Attention processor for IP-Adapater.

|

| 107 |

+

Args:

|

| 108 |

+

hidden_size (`int`):

|

| 109 |

+

The hidden size of the attention layer.

|

| 110 |

+

cross_attention_dim (`int`):

|

| 111 |

+

The number of channels in the `encoder_hidden_states`.

|

| 112 |

+

scale (`float`, defaults to 1.0):

|

| 113 |

+

the weight scale of image prompt.

|

| 114 |

+

num_tokens (`int`, defaults to 4 when do ip_adapter_plus it should be 16):

|

| 115 |

+

The context length of the image features.

|

| 116 |

+

"""

|

| 117 |

+

roundNumber = 0

|

| 118 |

+

|

| 119 |

+

def __init__(self, hidden_size, cross_attention_dim=None, scale=1.0, num_tokens=4, Control_factor=1.0, IP_factor= 1.0):

|

| 120 |

+

super().__init__()

|

| 121 |

+

|

| 122 |

+

self.hidden_size = hidden_size

|

| 123 |

+

self.cross_attention_dim = cross_attention_dim

|

| 124 |

+

#获取到的cross_attention_dim大小为768,这个类调用了16次

|

| 125 |

+

#print(cross_attention_dim)

|

| 126 |

+

self.scale = scale

|

| 127 |

+

self.num_tokens = num_tokens

|

| 128 |

+

self.Control_factor = Control_factor

|

| 129 |

+

self.IP_factor = IP_factor

|

| 130 |

+

#print("IPAttnProcessor中获取得到的Control_factor:{}".format(self.Control_factor))

|

| 131 |

+

|

| 132 |

+

self.to_k_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

|

| 133 |

+

self.to_v_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

|

| 134 |

+

|

| 135 |

+

def __call__(

|

| 136 |

+

self,

|

| 137 |

+

attn,

|

| 138 |

+

hidden_states,

|

| 139 |

+

encoder_hidden_states=None,

|

| 140 |

+

attention_mask=None,

|

| 141 |

+

temb=None,

|

| 142 |

+

):

|

| 143 |

+

residual = hidden_states

|

| 144 |

+

|

| 145 |

+

if attn.spatial_norm is not None:

|

| 146 |

+

hidden_states = attn.spatial_norm(hidden_states, temb)

|

| 147 |

+

|

| 148 |

+

input_ndim = hidden_states.ndim

|

| 149 |

+

|

| 150 |

+

if input_ndim == 4:

|

| 151 |

+

batch_size, channel, height, width = hidden_states.shape

|

| 152 |

+

hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

|

| 153 |

+

|

| 154 |

+

batch_size, sequence_length, _ = (

|

| 155 |

+

hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

|

| 156 |

+

)

|

| 157 |

+

attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

|

| 158 |

+

# sequence_length =81

|

| 159 |

+

# batch_size=2

|

| 160 |

+

|

| 161 |

+

if attn.group_norm is not None:

|

| 162 |

+

hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

|

| 163 |

+

|

| 164 |

+

query = attn.to_q(hidden_states)

|

| 165 |

+

# query.shape = [2,6205,320]

|

| 166 |

+

###########################################

|

| 167 |

+

# queryBegin = attn.to_q(hidden_states)

|

| 168 |

+

###########################################

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

# 这段代码首先检查encoder_hidden_states是否为None,如果是空,说明是无条件生成

|

| 172 |

+

if encoder_hidden_states is None:

|

| 173 |

+

encoder_hidden_states = hidden_states

|

| 174 |

+

else:

|

| 175 |

+

# get encoder_hidden_states, ip_hidden_states

|

| 176 |

+

# 如果encoder_hidden_states不为None

|

| 177 |

+

# 则对encoder_hidden_states进行切片操作,将其分为两部分,分别赋值给encoder_hidden_states和ip_hidden_states

|

| 178 |

+

end_pos = encoder_hidden_states.shape[1] - self.num_tokens

|

| 179 |

+

encoder_hidden_states, ip_hidden_states = (

|

| 180 |

+

encoder_hidden_states[:, :end_pos, :],

|

| 181 |

+

encoder_hidden_states[:, end_pos:, :],

|

| 182 |

+

)

|

| 183 |

+

# 接着,如果attn.norm_cross为True,则对encoder_hidden_states进行规范化处理。

|

| 184 |

+

if attn.norm_cross:

|

| 185 |

+

encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

|

| 186 |

+

|

| 187 |

+

# encoder_hidden_states.shape = [2,77,768]

|

| 188 |

+

# ip_hidden_states.shape = [2,4,768]

|

| 189 |

+

key = attn.to_k(encoder_hidden_states)

|

| 190 |

+

# keyforIPADAPTER = attn.to_q(encoder_hidden_states)

|

| 191 |

+

value = attn.to_v(encoder_hidden_states)

|

| 192 |

+

|

| 193 |

+

query = attn.head_to_batch_dim(query)

|

| 194 |

+

# query.shape = [16,6205,40]

|

| 195 |

+

|

| 196 |

+

key = attn.head_to_batch_dim(key)

|

| 197 |

+

value = attn.head_to_batch_dim(value)

|

| 198 |

+

|

| 199 |

+

attention_probs = attn.get_attention_scores(query, key, attention_mask)

|

| 200 |

+

hidden_states = torch.bmm(attention_probs, value)

|

| 201 |

+

hidden_states = attn.batch_to_head_dim(hidden_states)

|

| 202 |

+

# hidden_states.shape = [2,6205,320]

|

| 203 |

+

# print("**************************************************")

|

| 204 |

+

|

| 205 |

+

# queryforip = queryBegin,参数1.5为0就等于原先的IP-Adapter

|

| 206 |

+

#queryforip = 4*attn.to_q(hidden_states)+ 2*queryBegin

|

| 207 |

+

# queryforip = attn.to_q(hidden_states)

|

| 208 |

+

#queryforip = attn.head_to_batch_dim(queryforip)

|

| 209 |

+

# print("hidden_states.shape=queryforip.shape:")

|

| 210 |

+

# print(queryforip.shape)

|

| 211 |

+

# print("**************************************************")

|

| 212 |

+

# for ip-adapter

|

| 213 |

+

ip_key = self.to_k_ip(ip_hidden_states)

|

| 214 |

+

ip_value = self.to_v_ip(ip_hidden_states)

|

| 215 |

+

|

| 216 |

+

ip_key = attn.head_to_batch_dim(ip_key)

|

| 217 |

+

ip_value = attn.head_to_batch_dim(ip_value)

|

| 218 |

+

|

| 219 |

+

# ip_key.shape=[16, 4, 40]

|

| 220 |

+

# query = [16,6025,40]

|

| 221 |

+

# target = [16,6025,4]

|

| 222 |

+

# print("**************************************************")

|

| 223 |

+

# print(query)

|

| 224 |

+

# print("**************************************************")

|

| 225 |

+

# threshold = 5

|

| 226 |

+

# tensor_from_data = torch.tensor(query).to("cuda")

|

| 227 |

+

# binary_mask = torch.where(tensor_from_data > threshold, torch.tensor(0).to("cuda"), torch.tensor(1).to("cuda"))

|

| 228 |

+

# binary_mask = binary_mask.to(torch.float16)

|

| 229 |

+

# print("**************************************************")

|

| 230 |

+

# print(binary_mask)

|

| 231 |

+

# print("**************************************************")

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

# query.shape=[16,6205,40]

|

| 235 |

+

ip_attention_probs = attn.get_attention_scores(query, ip_key, None)

|

| 236 |

+

##########################################

|

| 237 |

+

# attention_probs

|

| 238 |

+

#ip_attention_probs = attn.get_attention_scores(keyforIPADAPTER, ip_key, None)

|

| 239 |

+

##########################################

|

| 240 |

+

|

| 241 |

+

ip_hidden_states = torch.bmm(ip_attention_probs, ip_value)

|

| 242 |

+

##########################################

|

| 243 |

+

# ip_hidden_states = ip_hidden_states*binary_mask +(1-binary_mask)*query

|

| 244 |

+

##########################################

|

| 245 |

+

ip_hidden_states = attn.batch_to_head_dim(ip_hidden_states)

|

| 246 |

+

|

| 247 |

+

# hidden_states.shape=【2,6205,320】s

|

| 248 |

+

# ip_hidden_states.shape=【2,3835,320】

|

| 249 |

+

# hidden_states = hidden_states + self.scale* ip_hidden_states

|

| 250 |

+

#print("Control_factor:{}".format(self.Control_factor))

|

| 251 |

+

#print("IP_factor:{}".format(self.IP_factor))

|

| 252 |

+

hidden_states = self.Control_factor * hidden_states + self.IP_factor * self.scale * ip_hidden_states

|

| 253 |

+

|

| 254 |

+

|

| 255 |

+

|

| 256 |

+

#hidden_states = 2*hidden_states +0.6*self.scale*ip_hidden_states

|

| 257 |

+

# if self.roundNumber < 12:

|

| 258 |

+

# hidden_states = hidden_states

|

| 259 |

+

# else:

|

| 260 |

+

# hidden_states = 1.2*hidden_states +0.6*self.scale*ip_hidden_states

|

| 261 |

+

# self.roundNumber = self.roundNumber + 1

|

| 262 |

+

|

| 263 |

+

|

| 264 |

+

# linear proj

|

| 265 |

+

hidden_states = attn.to_out[0](hidden_states)

|

| 266 |

+

# dropout

|

| 267 |

+

hidden_states = attn.to_out[1](hidden_states)

|

| 268 |

+

|

| 269 |

+

if input_ndim == 4:

|

| 270 |

+

hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

|

| 271 |

+

|

| 272 |

+

if attn.residual_connection:

|

| 273 |

+

hidden_states = hidden_states + residual

|

| 274 |

+

|

| 275 |

+

hidden_states = hidden_states / attn.rescale_output_factor

|

| 276 |

+

|

| 277 |

+

return hidden_states

|

| 278 |

+

|

| 279 |

+

|

| 280 |

+

class AttnProcessor2_0(torch.nn.Module):

|

| 281 |

+

r"""

|

| 282 |

+

Processor for implementing scaled dot-product attention (enabled by default if you're using PyTorch 2.0).

|

| 283 |

+

"""

|

| 284 |

+

|

| 285 |

+

def __init__(

|

| 286 |

+

self,

|

| 287 |

+

hidden_size=None,

|

| 288 |

+

cross_attention_dim=None,

|

| 289 |

+

):

|

| 290 |

+

super().__init__()

|

| 291 |

+

if not hasattr(F, "scaled_dot_product_attention"):

|

| 292 |

+

raise ImportError("AttnProcessor2_0 requires PyTorch 2.0, to use it, please upgrade PyTorch to 2.0.")

|

| 293 |

+

|

| 294 |

+

def __call__(

|

| 295 |

+

self,

|

| 296 |

+

attn,

|

| 297 |

+

hidden_states,

|

| 298 |

+

encoder_hidden_states=None,

|

| 299 |

+

attention_mask=None,

|

| 300 |

+

temb=None,

|

| 301 |

+

):

|

| 302 |

+

residual = hidden_states

|

| 303 |

+

|

| 304 |

+

if attn.spatial_norm is not None:

|

| 305 |

+

hidden_states = attn.spatial_norm(hidden_states, temb)

|

| 306 |

+

|

| 307 |

+

input_ndim = hidden_states.ndim

|

| 308 |

+

|

| 309 |

+

if input_ndim == 4:

|

| 310 |

+

batch_size, channel, height, width = hidden_states.shape

|

| 311 |

+

hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

|

| 312 |

+

|

| 313 |

+

batch_size, sequence_length, _ = (

|

| 314 |

+

hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

|

| 315 |

+

)

|

| 316 |

+

|

| 317 |

+

if attention_mask is not None:

|

| 318 |

+

attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

|

| 319 |

+

# scaled_dot_product_attention expects attention_mask shape to be

|

| 320 |

+

# (batch, heads, source_length, target_length)

|

| 321 |

+

attention_mask = attention_mask.view(batch_size, attn.heads, -1, attention_mask.shape[-1])

|

| 322 |

+

|

| 323 |

+

if attn.group_norm is not None:

|

| 324 |

+

hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

|

| 325 |

+

|

| 326 |

+

query = attn.to_q(hidden_states)

|

| 327 |

+

|

| 328 |

+

if encoder_hidden_states is None:

|

| 329 |

+

encoder_hidden_states = hidden_states

|

| 330 |

+