+  +

+

+

+[](https://github.com/open-mmlab/mmocr/actions)

+[](https://mmocr.readthedocs.io/en/dev-1.x/?badge=dev-1.x)

+[](https://codecov.io/gh/open-mmlab/mmocr)

+[](https://github.com/open-mmlab/mmocr/blob/main/LICENSE)

+[](https://pypi.org/project/mmocr/)

+[](https://github.com/open-mmlab/mmocr/issues)

+[](https://github.com/open-mmlab/mmocr/issues)

+

+

+

+

+[](https://github.com/open-mmlab/mmocr/actions)

+[](https://mmocr.readthedocs.io/en/dev-1.x/?badge=dev-1.x)

+[](https://codecov.io/gh/open-mmlab/mmocr)

+[](https://github.com/open-mmlab/mmocr/blob/main/LICENSE)

+[](https://pypi.org/project/mmocr/)

+[](https://github.com/open-mmlab/mmocr/issues)

+[](https://github.com/open-mmlab/mmocr/issues)

+  +

+[📘Documentation](https://mmocr.readthedocs.io/en/dev-1.x/) |

+[🛠️Installation](https://mmocr.readthedocs.io/en/dev-1.x/get_started/install.html) |

+[👀Model Zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html) |

+[🆕Update News](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔Reporting Issues](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+[📘Documentation](https://mmocr.readthedocs.io/en/dev-1.x/) |

+[🛠️Installation](https://mmocr.readthedocs.io/en/dev-1.x/get_started/install.html) |

+[👀Model Zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html) |

+[🆕Update News](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔Reporting Issues](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+ +

+

+ OpenMMLab website

+

+

+ HOT

+

+

+

+ OpenMMLab platform

+

+

+ TRY IT OUT

+

+

+

+  +

+[📘Documentation](https://mmocr.readthedocs.io/en/dev-1.x/) |

+[🛠️Installation](https://mmocr.readthedocs.io/en/dev-1.x/get_started/install.html) |

+[👀Model Zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html) |

+[🆕Update News](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔Reporting Issues](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+[📘Documentation](https://mmocr.readthedocs.io/en/dev-1.x/) |

+[🛠️Installation](https://mmocr.readthedocs.io/en/dev-1.x/get_started/install.html) |

+[👀Model Zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html) |

+[🆕Update News](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔Reporting Issues](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+English | [简体中文](README_zh-CN.md)

+

+

+

+

+## Latest Updates

+

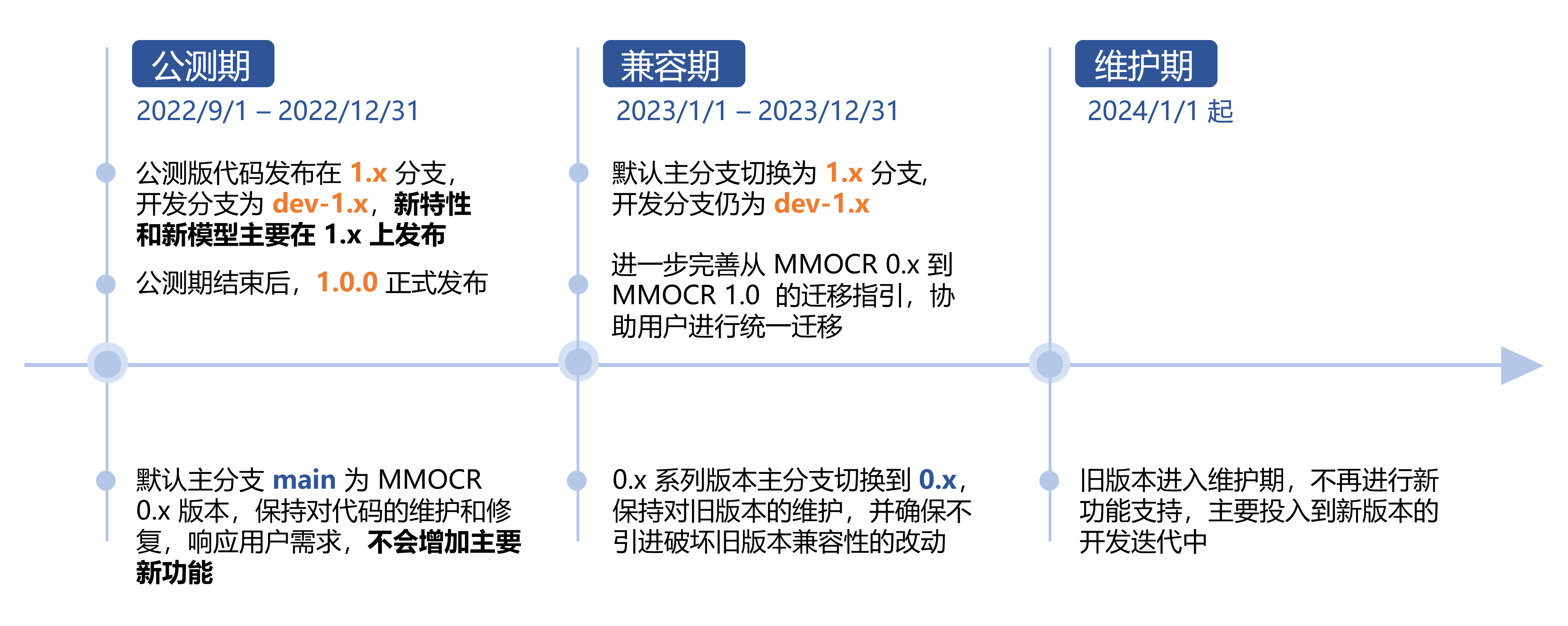

+**The default branch is now `main` and the code on the branch has been upgraded to v1.0.0. The old `main` branch (v0.6.3) code now exists on the `0.x` branch.** If you have been using the `main` branch and encounter upgrade issues, please read the [Migration Guide](https://mmocr.readthedocs.io/en/dev-1.x/migration/overview.html) and notes on [Branches](https://mmocr.readthedocs.io/en/dev-1.x/migration/branches.html) .

+

+v1.0.0 was released in 2023-04-06. Major updates from 1.0.0rc6 include:

+

+1. Support for SCUT-CTW1500, SynthText, and MJSynth datasets in Dataset Preparer

+2. Updated FAQ and documentation

+3. Deprecation of file_client_args in favor of backend_args

+4. Added a new MMOCR tutorial notebook

+

+To know more about the updates in MMOCR 1.0, please refer to [What's New in MMOCR 1.x](https://mmocr.readthedocs.io/en/dev-1.x/migration/news.html), or

+Read [Changelog](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) for more details!

+

+## Introduction

+

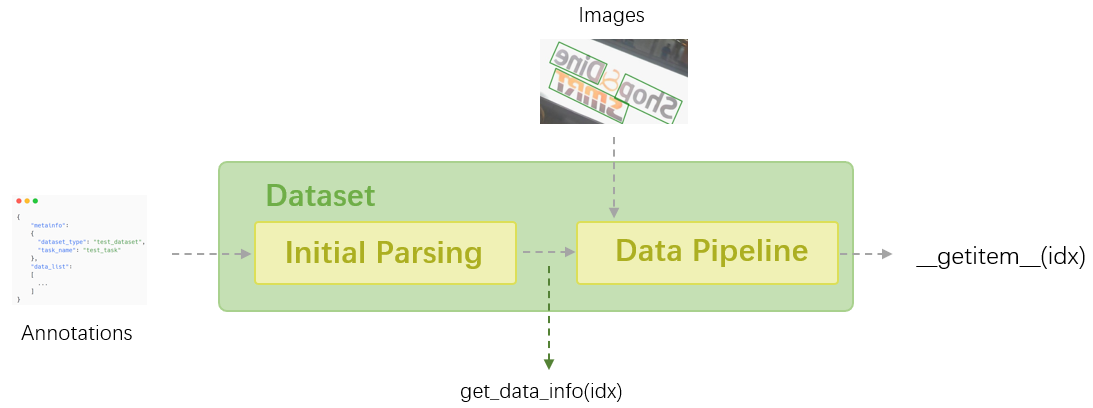

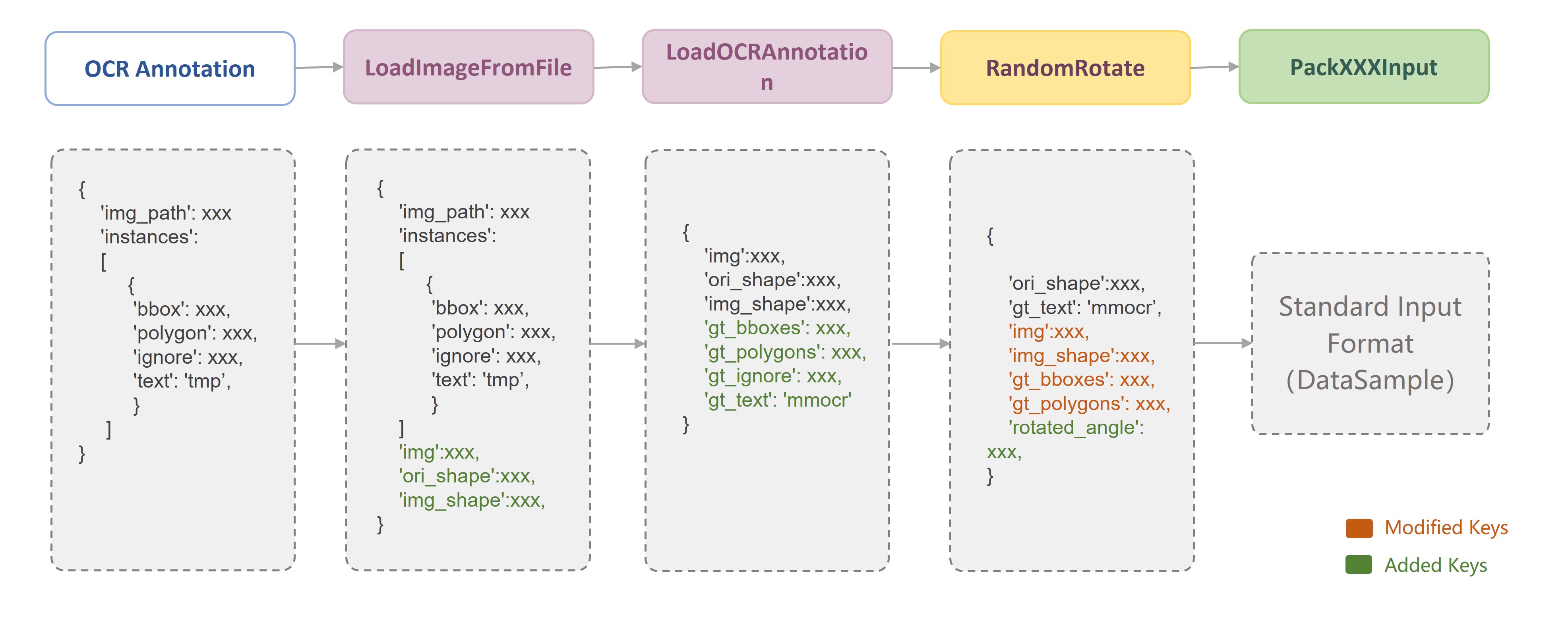

+MMOCR is an open-source toolbox based on PyTorch and mmdetection for text detection, text recognition, and the corresponding downstream tasks including key information extraction. It is part of the [OpenMMLab](https://openmmlab.com/) project.

+

+The main branch works with **PyTorch 1.6+**.

+

+

+  +

+

+

+### Major Features

+

+- **Comprehensive Pipeline**

+

+ The toolbox supports not only text detection and text recognition, but also their downstream tasks such as key information extraction.

+

+- **Multiple Models**

+

+ The toolbox supports a wide variety of state-of-the-art models for text detection, text recognition and key information extraction.

+

+- **Modular Design**

+

+ The modular design of MMOCR enables users to define their own optimizers, data preprocessors, and model components such as backbones, necks and heads as well as losses. Please refer to [Overview](https://mmocr.readthedocs.io/en/dev-1.x/get_started/overview.html) for how to construct a customized model.

+

+- **Numerous Utilities**

+

+ The toolbox provides a comprehensive set of utilities which can help users assess the performance of models. It includes visualizers which allow visualization of images, ground truths as well as predicted bounding boxes, and a validation tool for evaluating checkpoints during training. It also includes data converters to demonstrate how to convert your own data to the annotation files which the toolbox supports.

+

+## Installation

+

+MMOCR depends on [PyTorch](https://pytorch.org/), [MMEngine](https://github.com/open-mmlab/mmengine), [MMCV](https://github.com/open-mmlab/mmcv) and [MMDetection](https://github.com/open-mmlab/mmdetection).

+Below are quick steps for installation.

+Please refer to [Install Guide](https://mmocr.readthedocs.io/en/dev-1.x/get_started/install.html) for more detailed instruction.

+

+```shell

+conda create -n open-mmlab python=3.8 pytorch=1.10 cudatoolkit=11.3 torchvision -c pytorch -y

+conda activate open-mmlab

+pip3 install openmim

+git clone https://github.com/open-mmlab/mmocr.git

+cd mmocr

+mim install -e .

+```

+

+## Get Started

+

+Please see [Quick Run](https://mmocr.readthedocs.io/en/dev-1.x/get_started/quick_run.html) for the basic usage of MMOCR.

+

+## [Model Zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html)

+

+Supported algorithms:

+

+ +

+

+

+

+BackBone

+ +- [x] [oCLIP](configs/backbone/oclip/README.md) (ECCV'2022) + +

+

+

+Text Detection

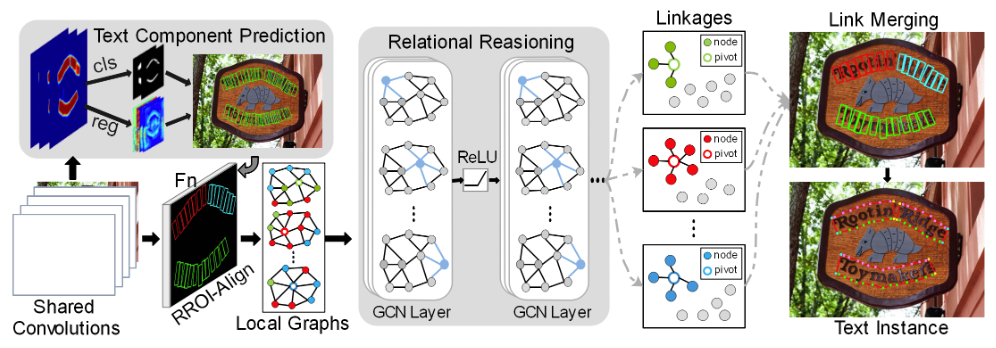

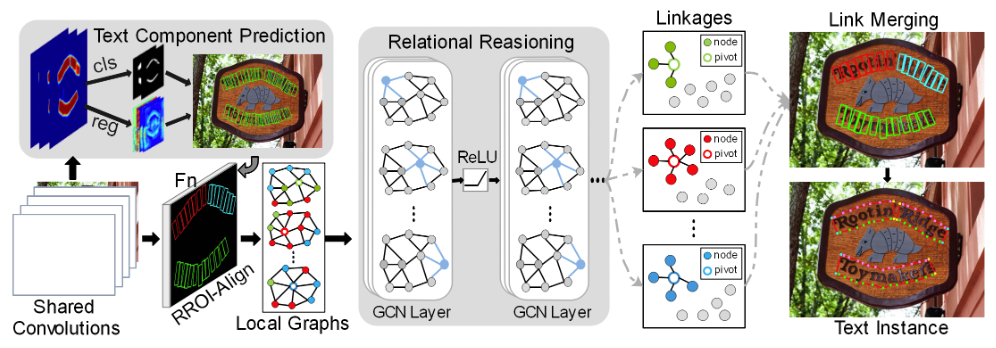

+ +- [x] [DBNet](configs/textdet/dbnet/README.md) (AAAI'2020) / [DBNet++](configs/textdet/dbnetpp/README.md) (TPAMI'2022) +- [x] [Mask R-CNN](configs/textdet/maskrcnn/README.md) (ICCV'2017) +- [x] [PANet](configs/textdet/panet/README.md) (ICCV'2019) +- [x] [PSENet](configs/textdet/psenet/README.md) (CVPR'2019) +- [x] [TextSnake](configs/textdet/textsnake/README.md) (ECCV'2018) +- [x] [DRRG](configs/textdet/drrg/README.md) (CVPR'2020) +- [x] [FCENet](configs/textdet/fcenet/README.md) (CVPR'2021) + +

+

+

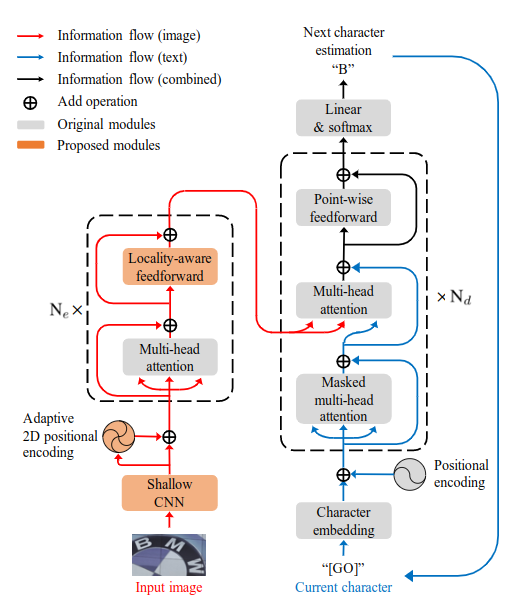

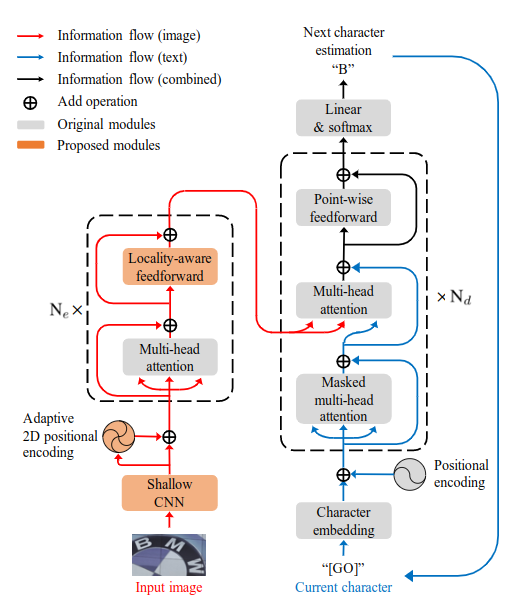

+Text Recognition

+ +- [x] [ABINet](configs/textrecog/abinet/README.md) (CVPR'2021) +- [x] [ASTER](configs/textrecog/aster/README.md) (TPAMI'2018) +- [x] [CRNN](configs/textrecog/crnn/README.md) (TPAMI'2016) +- [x] [MASTER](configs/textrecog/master/README.md) (PR'2021) +- [x] [NRTR](configs/textrecog/nrtr/README.md) (ICDAR'2019) +- [x] [RobustScanner](configs/textrecog/robust_scanner/README.md) (ECCV'2020) +- [x] [SAR](configs/textrecog/sar/README.md) (AAAI'2019) +- [x] [SATRN](configs/textrecog/satrn/README.md) (CVPR'2020 Workshop on Text and Documents in the Deep Learning Era) +- [x] [SVTR](configs/textrecog/svtr/README.md) (IJCAI'2022) + +

+

+

+Key Information Extraction

+ +- [x] [SDMG-R](configs/kie/sdmgr/README.md) (ArXiv'2021) + +

+

+

+Please refer to [model_zoo](https://mmocr.readthedocs.io/en/dev-1.x/modelzoo.html) for more details.

+

+## Projects

+

+[Here](projects/README.md) are some implementations of SOTA models and solutions built on MMOCR, which are supported and maintained by community users. These projects demonstrate the best practices based on MMOCR for research and product development. We welcome and appreciate all the contributions to OpenMMLab ecosystem.

+

+## Contributing

+

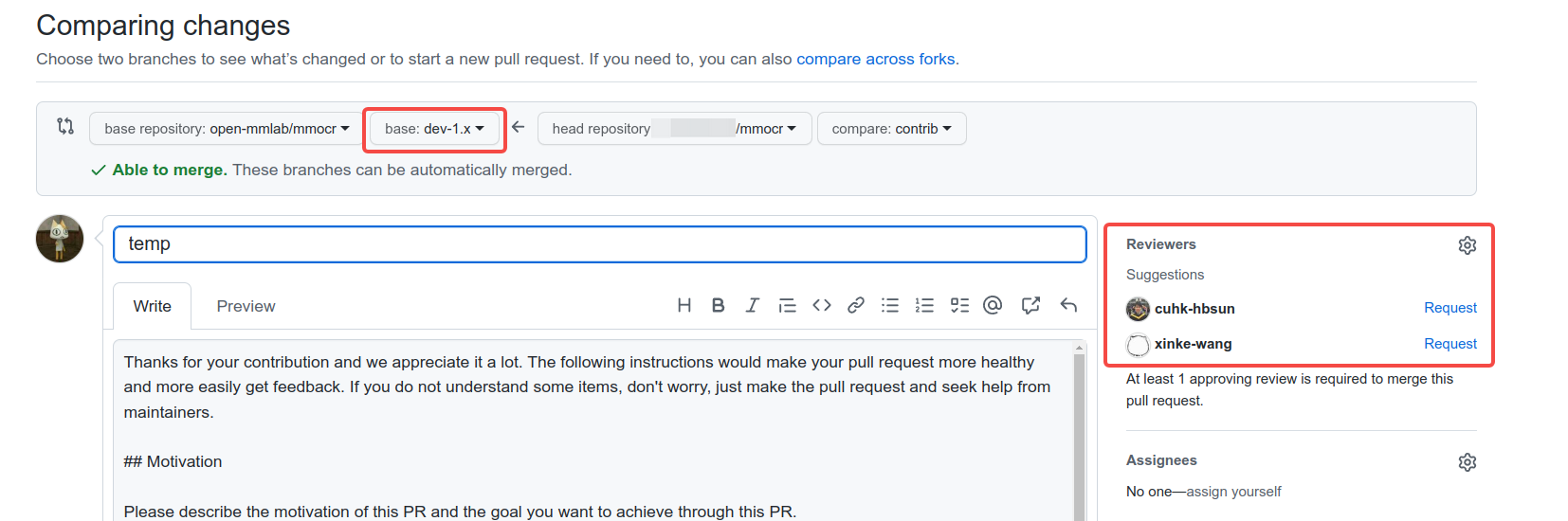

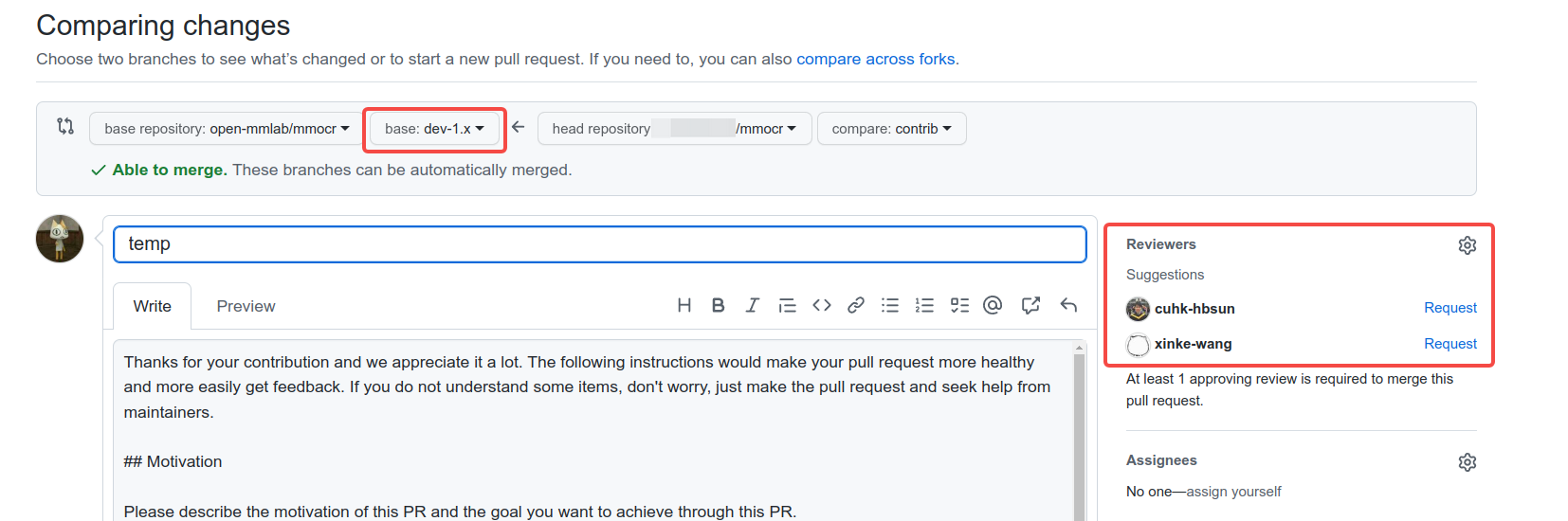

+We appreciate all contributions to improve MMOCR. Please refer to [CONTRIBUTING.md](.github/CONTRIBUTING.md) for the contributing guidelines.

+

+## Acknowledgement

+

+MMOCR is an open-source project that is contributed by researchers and engineers from various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as users who give valuable feedbacks.

+We hope the toolbox and benchmark could serve the growing research community by providing a flexible toolkit to reimplement existing methods and develop their own new OCR methods.

+

+## Citation

+

+If you find this project useful in your research, please consider cite:

+

+```bibtex

+@article{mmocr2021,

+ title={MMOCR: A Comprehensive Toolbox for Text Detection, Recognition and Understanding},

+ author={Kuang, Zhanghui and Sun, Hongbin and Li, Zhizhong and Yue, Xiaoyu and Lin, Tsui Hin and Chen, Jianyong and Wei, Huaqiang and Zhu, Yiqin and Gao, Tong and Zhang, Wenwei and Chen, Kai and Zhang, Wayne and Lin, Dahua},

+ journal= {arXiv preprint arXiv:2108.06543},

+ year={2021}

+}

+```

+

+## License

+

+This project is released under the [Apache 2.0 license](LICENSE).

+

+## OpenMMLab Family

+

+- [MMEngine](https://github.com/open-mmlab/mmengine): OpenMMLab foundational library for training deep learning models

+- [MMCV](https://github.com/open-mmlab/mmcv): OpenMMLab foundational library for computer vision.

+- [MIM](https://github.com/open-mmlab/mim): MIM installs OpenMMLab packages.

+- [MMClassification](https://github.com/open-mmlab/mmclassification): OpenMMLab image classification toolbox and benchmark.

+- [MMDetection](https://github.com/open-mmlab/mmdetection): OpenMMLab detection toolbox and benchmark.

+- [MMDetection3D](https://github.com/open-mmlab/mmdetection3d): OpenMMLab's next-generation platform for general 3D object detection.

+- [MMRotate](https://github.com/open-mmlab/mmrotate): OpenMMLab rotated object detection toolbox and benchmark.

+- [MMSegmentation](https://github.com/open-mmlab/mmsegmentation): OpenMMLab semantic segmentation toolbox and benchmark.

+- [MMOCR](https://github.com/open-mmlab/mmocr): OpenMMLab text detection, recognition, and understanding toolbox.

+- [MMPose](https://github.com/open-mmlab/mmpose): OpenMMLab pose estimation toolbox and benchmark.

+- [MMHuman3D](https://github.com/open-mmlab/mmhuman3d): OpenMMLab 3D human parametric model toolbox and benchmark.

+- [MMSelfSup](https://github.com/open-mmlab/mmselfsup): OpenMMLab self-supervised learning toolbox and benchmark.

+- [MMRazor](https://github.com/open-mmlab/mmrazor): OpenMMLab model compression toolbox and benchmark.

+- [MMFewShot](https://github.com/open-mmlab/mmfewshot): OpenMMLab fewshot learning toolbox and benchmark.

+- [MMAction2](https://github.com/open-mmlab/mmaction2): OpenMMLab's next-generation action understanding toolbox and benchmark.

+- [MMTracking](https://github.com/open-mmlab/mmtracking): OpenMMLab video perception toolbox and benchmark.

+- [MMFlow](https://github.com/open-mmlab/mmflow): OpenMMLab optical flow toolbox and benchmark.

+- [MMEditing](https://github.com/open-mmlab/mmediting): OpenMMLab image and video editing toolbox.

+- [MMGeneration](https://github.com/open-mmlab/mmgeneration): OpenMMLab image and video generative models toolbox.

+- [MMDeploy](https://github.com/open-mmlab/mmdeploy): OpenMMLab model deployment framework.

+

+## Welcome to the OpenMMLab community

+

+Scan the QR code below to follow the OpenMMLab team's [**Zhihu Official Account**](https://www.zhihu.com/people/openmmlab) and join the OpenMMLab team's [**QQ Group**](https://jq.qq.com/?_wv=1027&k=aCvMxdr3), or join the official communication WeChat group by adding the WeChat, or join our [**Slack**](https://join.slack.com/t/mmocrworkspace/shared_invite/zt-1ifqhfla8-yKnLO_aKhVA2h71OrK8GZw)

+

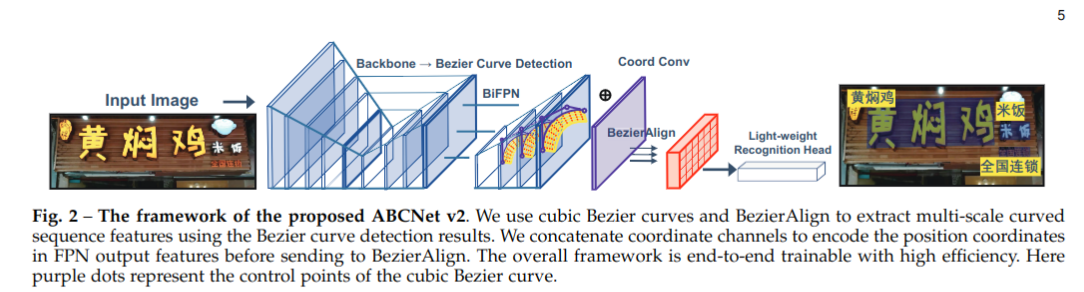

+Text Spotting

+ +- [x] [ABCNet](projects/ABCNet/README.md) (CVPR'2020) +- [x] [ABCNetV2](projects/ABCNet/README_V2.md) (TPAMI'2021) +- [x] [SPTS](projects/SPTS/README.md) (ACM MM'2022) + +

+

+

+

+

+We will provide you with the OpenMMLab community

+

+- 📢 share the latest core technologies of AI frameworks

+- 💻 Explaining PyTorch common module source Code

+- 📰 News related to the release of OpenMMLab

+- 🚀 Introduction of cutting-edge algorithms developed by OpenMMLab

+ 🏃 Get the more efficient answer and feedback

+- 🔥 Provide a platform for communication with developers from all walks of life

+

+The OpenMMLab community looks forward to your participation! 👬

diff --git a/mmocr-dev-1.x/README_zh-CN.md b/mmocr-dev-1.x/README_zh-CN.md

new file mode 100644

index 0000000000000000000000000000000000000000..c38839637ec2e410e830cbcc8eb45160b178f8fd

--- /dev/null

+++ b/mmocr-dev-1.x/README_zh-CN.md

@@ -0,0 +1,250 @@

+

+

+

+  +

+

+

+[](https://github.com/open-mmlab/mmocr/actions)

+[](https://mmocr.readthedocs.io/en/dev-1.x/?badge=dev-1.x)

+[](https://codecov.io/gh/open-mmlab/mmocr)

+[](https://github.com/open-mmlab/mmocr/blob/main/LICENSE)

+[](https://pypi.org/project/mmocr/)

+[](https://github.com/open-mmlab/mmocr/issues)

+[](https://github.com/open-mmlab/mmocr/issues)

+

+

+

+

+[](https://github.com/open-mmlab/mmocr/actions)

+[](https://mmocr.readthedocs.io/en/dev-1.x/?badge=dev-1.x)

+[](https://codecov.io/gh/open-mmlab/mmocr)

+[](https://github.com/open-mmlab/mmocr/blob/main/LICENSE)

+[](https://pypi.org/project/mmocr/)

+[](https://github.com/open-mmlab/mmocr/issues)

+[](https://github.com/open-mmlab/mmocr/issues)

+  +

+[📘文档](https://mmocr.readthedocs.io/zh_CN/dev-1.x/) |

+[🛠️安装](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/install.html) |

+[👀模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html) |

+[🆕更新日志](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔报告问题](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+[📘文档](https://mmocr.readthedocs.io/zh_CN/dev-1.x/) |

+[🛠️安装](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/install.html) |

+[👀模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html) |

+[🆕更新日志](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔报告问题](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+ +

+

+ OpenMMLab 官网

+

+

+ HOT

+

+

+

+ OpenMMLab 开放平台

+

+

+ TRY IT OUT

+

+

+

+  +

+[📘文档](https://mmocr.readthedocs.io/zh_CN/dev-1.x/) |

+[🛠️安装](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/install.html) |

+[👀模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html) |

+[🆕更新日志](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔报告问题](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+[📘文档](https://mmocr.readthedocs.io/zh_CN/dev-1.x/) |

+[🛠️安装](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/install.html) |

+[👀模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html) |

+[🆕更新日志](https://mmocr.readthedocs.io/en/dev-1.x/notes/changelog.html) |

+[🤔报告问题](https://github.com/open-mmlab/mmocr/issues/new/choose)

+

+

+

+[English](/README.md) | 简体中文

+

+

+

+

+

+## 近期更新

+

+**默认分支目前为 `main`,且分支上的代码已经切换到 v1.0.0 版本。旧版 `main` 分支(v0.6.3)的代码现存在 `0.x` 分支上。** 如果您一直在使用 `main` 分支,并遇到升级问题,请阅读 [迁移指南](https://mmocr.readthedocs.io/zh_CN/dev-1.x/migration/overview.html) 和 [分支说明](https://mmocr.readthedocs.io/zh_CN/dev-1.x/migration/branches.html) 。

+

+最新的版本 v1.0.0 于 2023-04-06 发布。其相对于 1.0.0rc6 的主要更新如下:

+

+1. Dataset Preparer 中支持了 SCUT-CTW1500, SynthText 和 MJSynth 数据集;

+2. 更新了文档和 FAQ;

+3. 升级文件后端;使用了 `backend_args` 替换 `file_client_args`;

+4. 增加了 MMOCR 教程 notebook。

+

+如果需要了解 MMOCR 1.0 相对于 0.x 的升级内容,请阅读 [MMOCR 1.x 更新汇总](https://mmocr.readthedocs.io/zh_CN/dev-1.x/migration/news.html);或者阅读[更新日志](https://mmocr.readthedocs.io/zh_CN/dev-1.x/notes/changelog.html)以获取更多信息。

+

+## 简介

+

+MMOCR 是基于 PyTorch 和 mmdetection 的开源工具箱,专注于文本检测,文本识别以及相应的下游任务,如关键信息提取。 它是 OpenMMLab 项目的一部分。

+

+主分支目前支持 **PyTorch 1.6 以上**的版本。

+

+

+  +

+

+

+### 主要特性

+

+-**全流程**

+

+该工具箱不仅支持文本检测和文本识别,还支持其下游任务,例如关键信息提取。

+

+-**多种模型**

+

+该工具箱支持用于文本检测,文本识别和关键信息提取的各种最新模型。

+

+-**模块化设计**

+

+MMOCR 的模块化设计使用户可以定义自己的优化器,数据预处理器,模型组件如主干模块,颈部模块和头部模块,以及损失函数。有关如何构建自定义模型的信息,请参考[概览](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/overview.html)。

+

+-**众多实用工具**

+

+该工具箱提供了一套全面的实用程序,可以帮助用户评估模型的性能。它包括可对图像,标注的真值以及预测结果进行可视化的可视化工具,以及用于在训练过程中评估模型的验证工具。它还包括数据转换器,演示了如何将用户自建的标注数据转换为 MMOCR 支持的标注文件。

+

+## 安装

+

+MMOCR 依赖 [PyTorch](https://pytorch.org/), [MMEngine](https://github.com/open-mmlab/mmengine), [MMCV](https://github.com/open-mmlab/mmcv) 和 [MMDetection](https://github.com/open-mmlab/mmdetection),以下是安装的简要步骤。

+更详细的安装指南请参考 [安装文档](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/install.html)。

+

+```shell

+conda create -n open-mmlab python=3.8 pytorch=1.10 cudatoolkit=11.3 torchvision -c pytorch -y

+conda activate open-mmlab

+pip3 install openmim

+git clone https://github.com/open-mmlab/mmocr.git

+cd mmocr

+mim install -e .

+```

+

+## 快速入门

+

+请参考[快速入门](https://mmocr.readthedocs.io/zh_CN/dev-1.x/get_started/quick_run.html)文档学习 MMOCR 的基本使用。

+

+## [模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html)

+

+支持的算法:

+

+ +

+

+

+

+骨干网络

+ +- [x] [oCLIP](configs/backbone/oclip/README.md) (ECCV'2022) + +

+

+

+文字检测

+ +- [x] [DBNet](configs/textdet/dbnet/README.md) (AAAI'2020) / [DBNet++](configs/textdet/dbnetpp/README.md) (TPAMI'2022) +- [x] [Mask R-CNN](configs/textdet/maskrcnn/README.md) (ICCV'2017) +- [x] [PANet](configs/textdet/panet/README.md) (ICCV'2019) +- [x] [PSENet](configs/textdet/psenet/README.md) (CVPR'2019) +- [x] [TextSnake](configs/textdet/textsnake/README.md) (ECCV'2018) +- [x] [DRRG](configs/textdet/drrg/README.md) (CVPR'2020) +- [x] [FCENet](configs/textdet/fcenet/README.md) (CVPR'2021) + +

+

+

+文字识别

+ +- [x] [ABINet](configs/textrecog/abinet/README.md) (CVPR'2021) +- [x] [ASTER](configs/textrecog/aster/README.md) (TPAMI'2018) +- [x] [CRNN](configs/textrecog/crnn/README.md) (TPAMI'2016) +- [x] [MASTER](configs/textrecog/master/README.md) (PR'2021) +- [x] [NRTR](configs/textrecog/nrtr/README.md) (ICDAR'2019) +- [x] [RobustScanner](configs/textrecog/robust_scanner/README.md) (ECCV'2020) +- [x] [SAR](configs/textrecog/sar/README.md) (AAAI'2019) +- [x] [SATRN](configs/textrecog/satrn/README.md) (CVPR'2020 Workshop on Text and Documents in the Deep Learning Era) +- [x] [SVTR](configs/textrecog/svtr/README.md) (IJCAI'2022) + +

+

+

+关键信息提取

+ +- [x] [SDMG-R](configs/kie/sdmgr/README.md) (ArXiv'2021) + +

+

+

+请点击[模型库](https://mmocr.readthedocs.io/zh_CN/dev-1.x/modelzoo.html)查看更多关于上述算法的详细信息。

+

+## 社区项目

+

+[这里](projects/README.md)有一些由社区用户支持和维护的基于 MMOCR 的 SOTA 模型和解决方案的实现。这些项目展示了基于 MMOCR 的研究和产品开发的最佳实践。

+我们欢迎并感谢对 OpenMMLab 生态系统的所有贡献。

+

+## 贡献指南

+

+我们感谢所有的贡献者为改进和提升 MMOCR 所作出的努力。请参考[贡献指南](.github/CONTRIBUTING.md)来了解参与项目贡献的相关指引。

+

+## 致谢

+

+MMOCR 是一款由来自不同高校和企业的研发人员共同参与贡献的开源项目。我们感谢所有为项目提供算法复现和新功能支持的贡献者,以及提供宝贵反馈的用户。 我们希望此工具箱可以帮助大家来复现已有的方法和开发新的方法,从而为研究社区贡献力量。

+

+## 引用

+

+如果您发现此项目对您的研究有用,请考虑引用:

+

+```bibtex

+@article{mmocr2021,

+ title={MMOCR: A Comprehensive Toolbox for Text Detection, Recognition and Understanding},

+ author={Kuang, Zhanghui and Sun, Hongbin and Li, Zhizhong and Yue, Xiaoyu and Lin, Tsui Hin and Chen, Jianyong and Wei, Huaqiang and Zhu, Yiqin and Gao, Tong and Zhang, Wenwei and Chen, Kai and Zhang, Wayne and Lin, Dahua},

+ journal= {arXiv preprint arXiv:2108.06543},

+ year={2021}

+}

+```

+

+## 开源许可证

+

+该项目采用 [Apache 2.0 license](LICENSE) 开源许可证。

+

+## OpenMMLab 的其他项目

+

+- [MMEngine](https://github.com/open-mmlab/mmengine): OpenMMLab 深度学习模型训练基础库

+- [MMCV](https://github.com/open-mmlab/mmcv): OpenMMLab 计算机视觉基础库

+- [MIM](https://github.com/open-mmlab/mim): MIM 是 OpenMMlab 项目、算法、模型的统一入口

+- [MMClassification](https://github.com/open-mmlab/mmclassification): OpenMMLab 图像分类工具箱

+- [MMDetection](https://github.com/open-mmlab/mmdetection): OpenMMLab 目标检测工具箱

+- [MMDetection3D](https://github.com/open-mmlab/mmdetection3d): OpenMMLab 新一代通用 3D 目标检测平台

+- [MMRotate](https://github.com/open-mmlab/mmrotate): OpenMMLab 旋转框检测工具箱与测试基准

+- [MMSegmentation](https://github.com/open-mmlab/mmsegmentation): OpenMMLab 语义分割工具箱

+- [MMOCR](https://github.com/open-mmlab/mmocr): OpenMMLab 全流程文字检测识别理解工具箱

+- [MMPose](https://github.com/open-mmlab/mmpose): OpenMMLab 姿态估计工具箱

+- [MMHuman3D](https://github.com/open-mmlab/mmhuman3d): OpenMMLab 人体参数化模型工具箱与测试基准

+- [MMSelfSup](https://github.com/open-mmlab/mmselfsup): OpenMMLab 自监督学习工具箱与测试基准

+- [MMRazor](https://github.com/open-mmlab/mmrazor): OpenMMLab 模型压缩工具箱与测试基准

+- [MMFewShot](https://github.com/open-mmlab/mmfewshot): OpenMMLab 少样本学习工具箱与测试基准

+- [MMAction2](https://github.com/open-mmlab/mmaction2): OpenMMLab 新一代视频理解工具箱

+- [MMTracking](https://github.com/open-mmlab/mmtracking): OpenMMLab 一体化视频目标感知平台

+- [MMFlow](https://github.com/open-mmlab/mmflow): OpenMMLab 光流估计工具箱与测试基准

+- [MMEditing](https://github.com/open-mmlab/mmediting): OpenMMLab 图像视频编辑工具箱

+- [MMGeneration](https://github.com/open-mmlab/mmgeneration): OpenMMLab 图片视频生成模型工具箱

+- [MMDeploy](https://github.com/open-mmlab/mmdeploy): OpenMMLab 模型部署框架

+

+## 欢迎加入 OpenMMLab 社区

+

+扫描下方的二维码可关注 OpenMMLab 团队的 [知乎官方账号](https://www.zhihu.com/people/openmmlab),加入 OpenMMLab 团队的 [官方交流 QQ 群](https://r.vansin.top/?r=join-qq),或通过添加微信“Open小喵Lab”加入官方交流微信群。

+

+端对端 OCR

+ +- [x] [ABCNet](projects/ABCNet/README.md) (CVPR'2020) +- [x] [ABCNetV2](projects/ABCNet/README_V2.md) (TPAMI'2021) +- [x] [SPTS](projects/SPTS/README.md) (ACM MM'2022) + +

+

+

+

+

+我们会在 OpenMMLab 社区为大家

+

+- 📢 分享 AI 框架的前沿核心技术

+- 💻 解读 PyTorch 常用模块源码

+- 📰 发布 OpenMMLab 的相关新闻

+- 🚀 介绍 OpenMMLab 开发的前沿算法

+- 🏃 获取更高效的问题答疑和意见反馈

+- 🔥 提供与各行各业开发者充分交流的平台

+

+干货满满 📘,等你来撩 💗,OpenMMLab 社区期待您的加入 👬

diff --git a/mmocr-dev-1.x/configs/backbone/oclip/README.md b/mmocr-dev-1.x/configs/backbone/oclip/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..e29cf971f6f8e6ba6c4fc640e6d06c5583d2909d

--- /dev/null

+++ b/mmocr-dev-1.x/configs/backbone/oclip/README.md

@@ -0,0 +1,41 @@

+# oCLIP

+

+> [Language Matters: A Weakly Supervised Vision-Language Pre-training Approach for Scene Text Detection and Spotting](https://www.ecva.net/papers/eccv_2022/papers_ECCV/papers/136880282.pdf)

+

+

+

+## Abstract

+

+Recently, Vision-Language Pre-training (VLP) techniques have greatly benefited various vision-language tasks by jointly learning visual and textual representations, which intuitively helps in Optical Character Recognition (OCR) tasks due to the rich visual and textual information in scene text images. However, these methods cannot well cope with OCR tasks because of the difficulty in both instance-level text encoding and image-text pair acquisition (i.e. images and captured texts in them). This paper presents a weakly supervised pre-training method, oCLIP, which can acquire effective scene text representations by jointly learning and aligning visual and textual information. Our network consists of an image encoder and a character-aware text encoder that extract visual and textual features, respectively, as well as a visual-textual decoder that models the interaction among textual and visual features for learning effective scene text representations. With the learning of textual features, the pre-trained model can attend texts in images well with character awareness. Besides, these designs enable the learning from weakly annotated texts (i.e. partial texts in images without text bounding boxes) which mitigates the data annotation constraint greatly. Experiments over the weakly annotated images in ICDAR2019-LSVT show that our pre-trained model improves F-score by +2.5% and +4.8% while transferring its weights to other text detection and spotting networks, respectively. In addition, the proposed method outperforms existing pre-training techniques consistently across multiple public datasets (e.g., +3.2% and +1.3% for Total-Text and CTW1500).

+

+

+

+

+ +

+

+

+## Models

+

+| Backbone | Pre-train Data | Model |

+| :-------: | :------------: | :-------------------------------------------------------------------------------: |

+| ResNet-50 | SynthText | [Link](https://download.openmmlab.com/mmocr/backbone/resnet50-oclip-7ba0c533.pth) |

+

+```{note}

+The model is converted from the official [oCLIP](https://github.com/bytedance/oclip.git).

+```

+

+## Supported Text Detection Models

+

+| | [DBNet](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#dbnet) | [DBNet++](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#dbnetpp) | [FCENet](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#fcenet) | [TextSnake](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#fcenet) | [PSENet](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#psenet) | [DRRG](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#drrg) | [Mask R-CNN](https://mmocr.readthedocs.io/en/dev-1.x/textdet_models.html#mask-r-cnn) |

+| :-------: | :------------------------------------------------------------------------: | :----------------------------------------------------------------------------: | :--------------------------------------------------------------------------: | :-----------------------------------------------------------------------------: | :--------------------------------------------------------------------------: | :----------------------------------------------------------------------: | :----------------------------------------------------------------------------------: |

+| ICDAR2015 | ✓ | ✓ | ✓ | | ✓ | | ✓ |

+| CTW1500 | | | ✓ | ✓ | ✓ | ✓ | ✓ |

+

+## Citation

+

+```bibtex

+@article{xue2022language,

+ title={Language Matters: A Weakly Supervised Vision-Language Pre-training Approach for Scene Text Detection and Spotting},

+ author={Xue, Chuhui and Zhang, Wenqing and Hao, Yu and Lu, Shijian and Torr, Philip and Bai, Song},

+ journal={Proceedings of the European Conference on Computer Vision (ECCV)},

+ year={2022}

+}

+```

diff --git a/mmocr-dev-1.x/configs/backbone/oclip/metafile.yml b/mmocr-dev-1.x/configs/backbone/oclip/metafile.yml

new file mode 100644

index 0000000000000000000000000000000000000000..8953af1b6b3c7b6190602be0af9e07753ed67518

--- /dev/null

+++ b/mmocr-dev-1.x/configs/backbone/oclip/metafile.yml

@@ -0,0 +1,13 @@

+Collections:

+- Name: oCLIP

+ Metadata:

+ Training Data: SynthText

+ Architecture:

+ - CLIPResNet

+ Paper:

+ URL: https://arxiv.org/abs/2203.03911

+ Title: 'Language Matters: A Weakly Supervised Vision-Language Pre-training Approach for Scene Text Detection and Spotting'

+ README: configs/backbone/oclip/README.md

+

+Models:

+ Weights: https://download.openmmlab.com/mmocr/backbone/resnet50-oclip-7ba0c533.pth

diff --git a/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt-openset.py b/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt-openset.py

new file mode 100644

index 0000000000000000000000000000000000000000..f82512839cdea57e559bd375be2a3f4146558af3

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt-openset.py

@@ -0,0 +1,26 @@

+wildreceipt_openset_data_root = 'data/wildreceipt/'

+

+wildreceipt_openset_train = dict(

+ type='WildReceiptDataset',

+ data_root=wildreceipt_openset_data_root,

+ metainfo=dict(category=[

+ dict(id=0, name='bg'),

+ dict(id=1, name='key'),

+ dict(id=2, name='value'),

+ dict(id=3, name='other')

+ ]),

+ ann_file='openset_train.txt',

+ pipeline=None)

+

+wildreceipt_openset_test = dict(

+ type='WildReceiptDataset',

+ data_root=wildreceipt_openset_data_root,

+ metainfo=dict(category=[

+ dict(id=0, name='bg'),

+ dict(id=1, name='key'),

+ dict(id=2, name='value'),

+ dict(id=3, name='other')

+ ]),

+ ann_file='openset_test.txt',

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt.py b/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt.py

new file mode 100644

index 0000000000000000000000000000000000000000..9c1122edd53c5c8df4bad55ad764c12e1714026a

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/_base_/datasets/wildreceipt.py

@@ -0,0 +1,16 @@

+wildreceipt_data_root = 'data/wildreceipt/'

+

+wildreceipt_train = dict(

+ type='WildReceiptDataset',

+ data_root=wildreceipt_data_root,

+ metainfo=wildreceipt_data_root + 'class_list.txt',

+ ann_file='train.txt',

+ pipeline=None)

+

+wildreceipt_test = dict(

+ type='WildReceiptDataset',

+ data_root=wildreceipt_data_root,

+ metainfo=wildreceipt_data_root + 'class_list.txt',

+ ann_file='test.txt',

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/kie/_base_/default_runtime.py b/mmocr-dev-1.x/configs/kie/_base_/default_runtime.py

new file mode 100644

index 0000000000000000000000000000000000000000..bcc5b3fa02a0f3259f701cddecbc307988424a6b

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/_base_/default_runtime.py

@@ -0,0 +1,33 @@

+default_scope = 'mmocr'

+env_cfg = dict(

+ cudnn_benchmark=False,

+ mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

+ dist_cfg=dict(backend='nccl'),

+)

+randomness = dict(seed=None)

+

+default_hooks = dict(

+ timer=dict(type='IterTimerHook'),

+ logger=dict(type='LoggerHook', interval=100),

+ param_scheduler=dict(type='ParamSchedulerHook'),

+ checkpoint=dict(type='CheckpointHook', interval=1),

+ sampler_seed=dict(type='DistSamplerSeedHook'),

+ sync_buffer=dict(type='SyncBuffersHook'),

+ visualization=dict(

+ type='VisualizationHook',

+ interval=1,

+ enable=False,

+ show=False,

+ draw_gt=False,

+ draw_pred=False),

+)

+

+# Logging

+log_level = 'INFO'

+log_processor = dict(type='LogProcessor', window_size=10, by_epoch=True)

+

+load_from = None

+resume = False

+

+visualizer = dict(

+ type='KIELocalVisualizer', name='visualizer', is_openset=False)

diff --git a/mmocr-dev-1.x/configs/kie/_base_/schedules/schedule_adam_60e.py b/mmocr-dev-1.x/configs/kie/_base_/schedules/schedule_adam_60e.py

new file mode 100644

index 0000000000000000000000000000000000000000..fd7147e2b86a8640966617bae1eb86d3347057f9

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/_base_/schedules/schedule_adam_60e.py

@@ -0,0 +1,10 @@

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper', optimizer=dict(type='Adam', weight_decay=0.0001))

+train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=60, val_interval=1)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+# learning rate

+param_scheduler = [

+ dict(type='MultiStepLR', milestones=[40, 50], end=60),

+]

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/README.md b/mmocr-dev-1.x/configs/kie/sdmgr/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..921af5310e46803c937168c6e1c0bdf17a372798

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/README.md

@@ -0,0 +1,41 @@

+# SDMGR

+

+> [Spatial Dual-Modality Graph Reasoning for Key Information Extraction](https://arxiv.org/abs/2103.14470)

+

+

+

+## Abstract

+

+Key information extraction from document images is of paramount importance in office automation. Conventional template matching based approaches fail to generalize well to document images of unseen templates, and are not robust against text recognition errors. In this paper, we propose an end-to-end Spatial Dual-Modality Graph Reasoning method (SDMG-R) to extract key information from unstructured document images. We model document images as dual-modality graphs, nodes of which encode both the visual and textual features of detected text regions, and edges of which represent the spatial relations between neighboring text regions. The key information extraction is solved by iteratively propagating messages along graph edges and reasoning the categories of graph nodes. In order to roundly evaluate our proposed method as well as boost the future research, we release a new dataset named WildReceipt, which is collected and annotated tailored for the evaluation of key information extraction from document images of unseen templates in the wild. It contains 25 key information categories, a total of about 69000 text boxes, and is about 2 times larger than the existing public datasets. Extensive experiments validate that all information including visual features, textual features and spatial relations can benefit key information extraction. It has been shown that SDMG-R can effectively extract key information from document images of unseen templates, and obtain new state-of-the-art results on the recent popular benchmark SROIE and our WildReceipt. Our code and dataset will be publicly released.

+

+ +

+

+ +

+

+

+## Results and models

+

+### WildReceipt

+

+| Method | Modality | Macro F1-Score | Download |

+| :--------------------------------------------------------------------: | :--------------: | :------------: | :--------------------------------------------------------------------------------------------------: |

+| [sdmgr_unet16](/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py) | Visual + Textual | 0.890 | [model](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_unet16_60e_wildreceipt/sdmgr_unet16_60e_wildreceipt_20220825_151648-22419f37.pth) \| [log](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_unet16_60e_wildreceipt/20220825_151648.log) |

+| [sdmgr_novisual](/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py) | Textual | 0.873 | [model](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt/sdmgr_novisual_60e_wildreceipt_20220831_193317-827649d8.pth) \| [log](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt/20220831_193317.log) |

+

+### WildReceiptOpenset

+

+| Method | Modality | Edge F1-Score | Node Macro F1-Score | Node Micro F1-Score | Download |

+| :-------------------------------------------------------------------: | :------: | :-----------: | :-----------------: | :-----------------: | :----------------------------------------------------------------------: |

+| [sdmgr_novisual_openset](/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py) | Textual | 0.792 | 0.931 | 0.940 | [model](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset/sdmgr_novisual_60e_wildreceipt-openset_20220831_200807-dedf15ec.pth) \| [log](https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset/20220831_200807.log) |

+

+## Citation

+

+```bibtex

+@misc{sun2021spatial,

+ title={Spatial Dual-Modality Graph Reasoning for Key Information Extraction},

+ author={Hongbin Sun and Zhanghui Kuang and Xiaoyu Yue and Chenhao Lin and Wayne Zhang},

+ year={2021},

+ eprint={2103.14470},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_novisual.py b/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_novisual.py

new file mode 100644

index 0000000000000000000000000000000000000000..5e85de2f78f020bd5695858098ad143dbbd09ed0

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_novisual.py

@@ -0,0 +1,35 @@

+num_classes = 26

+

+model = dict(

+ type='SDMGR',

+ kie_head=dict(

+ type='SDMGRHead',

+ visual_dim=16,

+ num_classes=num_classes,

+ module_loss=dict(type='SDMGRModuleLoss'),

+ postprocessor=dict(type='SDMGRPostProcessor')),

+ dictionary=dict(

+ type='Dictionary',

+ dict_file='{{ fileDirname }}/../../../dicts/sdmgr_dict.txt',

+ with_padding=True,

+ with_unknown=True,

+ unknown_token=None),

+)

+

+train_pipeline = [

+ dict(type='LoadKIEAnnotations'),

+ dict(type='Resize', scale=(1024, 512), keep_ratio=True),

+ dict(type='PackKIEInputs')

+]

+test_pipeline = [

+ dict(type='LoadKIEAnnotations'),

+ dict(type='Resize', scale=(1024, 512), keep_ratio=True),

+ dict(type='PackKIEInputs'),

+]

+

+val_evaluator = dict(

+ type='F1Metric',

+ mode='macro',

+ num_classes=num_classes,

+ ignored_classes=[0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 25])

+test_evaluator = val_evaluator

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_unet16.py b/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_unet16.py

new file mode 100644

index 0000000000000000000000000000000000000000..76aa631bdfbbf29013d27ac76c0e160d232d1500

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/_base_sdmgr_unet16.py

@@ -0,0 +1,28 @@

+_base_ = '_base_sdmgr_novisual.py'

+

+model = dict(

+ backbone=dict(type='UNet', base_channels=16),

+ roi_extractor=dict(

+ type='mmdet.SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7),

+ featmap_strides=[1]),

+ data_preprocessor=dict(

+ type='ImgDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True,

+ pad_size_divisor=32),

+)

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadKIEAnnotations'),

+ dict(type='Resize', scale=(1024, 512), keep_ratio=True),

+ dict(type='PackKIEInputs')

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadKIEAnnotations'),

+ dict(type='Resize', scale=(1024, 512), keep_ratio=True),

+ dict(type='PackKIEInputs', meta_keys=('img_path', )),

+]

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/metafile.yml b/mmocr-dev-1.x/configs/kie/sdmgr/metafile.yml

new file mode 100644

index 0000000000000000000000000000000000000000..da430e3d87ab7fe02a9560f7d0e441cce2ccf929

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/metafile.yml

@@ -0,0 +1,52 @@

+Collections:

+- Name: SDMGR

+ Metadata:

+ Training Data: KIEDataset

+ Training Techniques:

+ - Adam

+ Training Resources: 1x NVIDIA A100-SXM4-80GB

+ Architecture:

+ - UNet

+ - SDMGRHead

+ Paper:

+ URL: https://arxiv.org/abs/2103.14470.pdf

+ Title: 'Spatial Dual-Modality Graph Reasoning for Key Information Extraction'

+ README: configs/kie/sdmgr/README.md

+

+Models:

+ - Name: sdmgr_unet16_60e_wildreceipt

+ Alias: SDMGR

+ In Collection: SDMGR

+ Config: configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

+ Metadata:

+ Training Data: wildreceipt

+ Results:

+ - Task: Key Information Extraction

+ Dataset: wildreceipt

+ Metrics:

+ macro_f1: 0.890

+ Weights: https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_unet16_60e_wildreceipt/sdmgr_unet16_60e_wildreceipt_20220825_151648-22419f37.pth

+ - Name: sdmgr_novisual_60e_wildreceipt

+ In Collection: SDMGR

+ Config: configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

+ Metadata:

+ Training Data: wildreceipt

+ Results:

+ - Task: Key Information Extraction

+ Dataset: wildreceipt

+ Metrics:

+ macro_f1: 0.873

+ Weights: https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt/sdmgr_novisual_60e_wildreceipt_20220831_193317-827649d8.pth

+ - Name: sdmgr_novisual_60e_wildreceipt_openset

+ In Collection: SDMGR

+ Config: configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

+ Metadata:

+ Training Data: wildreceipt-openset

+ Results:

+ - Task: Key Information Extraction

+ Dataset: wildreceipt

+ Metrics:

+ macro_f1: 0.931

+ micro_f1: 0.940

+ edge_micro_f1: 0.792

+ Weights: https://download.openmmlab.com/mmocr/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset/sdmgr_novisual_60e_wildreceipt-openset_20220831_200807-dedf15ec.pth

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

new file mode 100644

index 0000000000000000000000000000000000000000..bc3d52a1ce93d4baf267edc923c71f2b9482e767

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

@@ -0,0 +1,71 @@

+_base_ = [

+ '../_base_/default_runtime.py',

+ '../_base_/datasets/wildreceipt-openset.py',

+ '../_base_/schedules/schedule_adam_60e.py',

+ '_base_sdmgr_novisual.py',

+]

+

+node_num_classes = 4 # 4 classes: bg, key, value and other

+edge_num_classes = 2 # edge connectivity

+key_node_idx = 1

+value_node_idx = 2

+

+model = dict(

+ type='SDMGR',

+ kie_head=dict(

+ num_classes=node_num_classes,

+ postprocessor=dict(

+ link_type='one-to-many',

+ key_node_idx=key_node_idx,

+ value_node_idx=value_node_idx)),

+)

+

+test_pipeline = [

+ dict(

+ type='LoadKIEAnnotations',

+ key_node_idx=key_node_idx,

+ value_node_idx=value_node_idx), # Keep key->value edges for evaluation

+ dict(type='Resize', scale=(1024, 512), keep_ratio=True),

+ dict(type='PackKIEInputs'),

+]

+

+wildreceipt_openset_train = _base_.wildreceipt_openset_train

+wildreceipt_openset_train.pipeline = _base_.train_pipeline

+wildreceipt_openset_test = _base_.wildreceipt_openset_test

+wildreceipt_openset_test.pipeline = test_pipeline

+

+train_dataloader = dict(

+ batch_size=4,

+ num_workers=1,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=wildreceipt_openset_train)

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=1,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=wildreceipt_openset_test)

+test_dataloader = val_dataloader

+

+val_evaluator = [

+ dict(

+ type='F1Metric',

+ prefix='node',

+ key='labels',

+ mode=['micro', 'macro'],

+ num_classes=node_num_classes,

+ cared_classes=[key_node_idx, value_node_idx]),

+ dict(

+ type='F1Metric',

+ prefix='edge',

+ mode='micro',

+ key='edge_labels',

+ cared_classes=[1], # Collapse to binary F1 score

+ num_classes=edge_num_classes)

+]

+test_evaluator = val_evaluator

+

+visualizer = dict(

+ type='KIELocalVisualizer', name='visualizer', is_openset=True)

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

new file mode 100644

index 0000000000000000000000000000000000000000..b56c2b9b665b1bd5c2734aa41fa1e563feda5a81

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

@@ -0,0 +1,28 @@

+_base_ = [

+ '../_base_/default_runtime.py',

+ '../_base_/datasets/wildreceipt.py',

+ '../_base_/schedules/schedule_adam_60e.py',

+ '_base_sdmgr_novisual.py',

+]

+

+wildreceipt_train = _base_.wildreceipt_train

+wildreceipt_train.pipeline = _base_.train_pipeline

+wildreceipt_test = _base_.wildreceipt_test

+wildreceipt_test.pipeline = _base_.test_pipeline

+

+train_dataloader = dict(

+ batch_size=4,

+ num_workers=1,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=wildreceipt_train)

+

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=1,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=wildreceipt_test)

+test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

new file mode 100644

index 0000000000000000000000000000000000000000..d49cbbc33798e815a24cb29cf3bc008460948c88

--- /dev/null

+++ b/mmocr-dev-1.x/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

@@ -0,0 +1,29 @@

+_base_ = [

+ '../_base_/default_runtime.py',

+ '../_base_/datasets/wildreceipt.py',

+ '../_base_/schedules/schedule_adam_60e.py',

+ '_base_sdmgr_unet16.py',

+]

+

+wildreceipt_train = _base_.wildreceipt_train

+wildreceipt_train.pipeline = _base_.train_pipeline

+wildreceipt_test = _base_.wildreceipt_test

+wildreceipt_test.pipeline = _base_.test_pipeline

+

+train_dataloader = dict(

+ batch_size=4,

+ num_workers=4,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=wildreceipt_train)

+

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=1,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=wildreceipt_test)

+

+test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/ctw1500.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/ctw1500.py

new file mode 100644

index 0000000000000000000000000000000000000000..3361f734d0d92752336d13b60f293b785a92e927

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/ctw1500.py

@@ -0,0 +1,15 @@

+ctw1500_textdet_data_root = 'data/ctw1500'

+

+ctw1500_textdet_train = dict(

+ type='OCRDataset',

+ data_root=ctw1500_textdet_data_root,

+ ann_file='textdet_train.json',

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

+

+ctw1500_textdet_test = dict(

+ type='OCRDataset',

+ data_root=ctw1500_textdet_data_root,

+ ann_file='textdet_test.json',

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2015.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2015.py

new file mode 100644

index 0000000000000000000000000000000000000000..958cb4fa17f50ed7dc967ccceb11cfb9426cd867

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2015.py

@@ -0,0 +1,15 @@

+icdar2015_textdet_data_root = 'data/icdar2015'

+

+icdar2015_textdet_train = dict(

+ type='OCRDataset',

+ data_root=icdar2015_textdet_data_root,

+ ann_file='textdet_train.json',

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

+

+icdar2015_textdet_test = dict(

+ type='OCRDataset',

+ data_root=icdar2015_textdet_data_root,

+ ann_file='textdet_test.json',

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2017.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2017.py

new file mode 100644

index 0000000000000000000000000000000000000000..804cb26f96f2bcfb3fdf9803cf36d79e997c57a8

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/icdar2017.py

@@ -0,0 +1,17 @@

+icdar2017_textdet_data_root = 'data/det/icdar_2017'

+

+icdar2017_textdet_train = dict(

+ type='OCRDataset',

+ data_root=icdar2017_textdet_data_root,

+ ann_file='instances_training.json',

+ data_prefix=dict(img_path='imgs/'),

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

+

+icdar2017_textdet_test = dict(

+ type='OCRDataset',

+ data_root=icdar2017_textdet_data_root,

+ ann_file='instances_test.json',

+ data_prefix=dict(img_path='imgs/'),

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/synthtext.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/synthtext.py

new file mode 100644

index 0000000000000000000000000000000000000000..9b2310c36fbd89be9a99d2ecba6f823d28532e35

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/synthtext.py

@@ -0,0 +1,8 @@

+synthtext_textdet_data_root = 'data/synthtext'

+

+synthtext_textdet_train = dict(

+ type='OCRDataset',

+ data_root=synthtext_textdet_data_root,

+ ann_file='textdet_train.json',

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/totaltext.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/totaltext.py

new file mode 100644

index 0000000000000000000000000000000000000000..29efc842fb0c558b98c1b8e805973360013b804e

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/totaltext.py

@@ -0,0 +1,15 @@

+totaltext_textdet_data_root = 'data/totaltext'

+

+totaltext_textdet_train = dict(

+ type='OCRDataset',

+ data_root=totaltext_textdet_data_root,

+ ann_file='textdet_train.json',

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

+

+totaltext_textdet_test = dict(

+ type='OCRDataset',

+ data_root=totaltext_textdet_data_root,

+ ann_file='textdet_test.json',

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/datasets/toy_data.py b/mmocr-dev-1.x/configs/textdet/_base_/datasets/toy_data.py

new file mode 100644

index 0000000000000000000000000000000000000000..50138769b7bfd99babafcc2aa6e85593c2b0dbf1

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/datasets/toy_data.py

@@ -0,0 +1,17 @@

+toy_det_data_root = 'tests/data/det_toy_dataset'

+

+toy_det_train = dict(

+ type='OCRDataset',

+ data_root=toy_det_data_root,

+ ann_file='instances_training.json',

+ data_prefix=dict(img_path='imgs/'),

+ filter_cfg=dict(filter_empty_gt=True, min_size=32),

+ pipeline=None)

+

+toy_det_test = dict(

+ type='OCRDataset',

+ data_root=toy_det_data_root,

+ ann_file='instances_test.json',

+ data_prefix=dict(img_path='imgs/'),

+ test_mode=True,

+ pipeline=None)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/default_runtime.py b/mmocr-dev-1.x/configs/textdet/_base_/default_runtime.py

new file mode 100644

index 0000000000000000000000000000000000000000..81480273b5a7b30d5d7113fb1cb9380b16de5e8f

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/default_runtime.py

@@ -0,0 +1,41 @@

+default_scope = 'mmocr'

+env_cfg = dict(

+ cudnn_benchmark=False,

+ mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

+ dist_cfg=dict(backend='nccl'),

+)

+randomness = dict(seed=None)

+

+default_hooks = dict(

+ timer=dict(type='IterTimerHook'),

+ logger=dict(type='LoggerHook', interval=5),

+ param_scheduler=dict(type='ParamSchedulerHook'),

+ checkpoint=dict(type='CheckpointHook', interval=20),

+ sampler_seed=dict(type='DistSamplerSeedHook'),

+ sync_buffer=dict(type='SyncBuffersHook'),

+ visualization=dict(

+ type='VisualizationHook',

+ interval=1,

+ enable=False,

+ show=False,

+ draw_gt=False,

+ draw_pred=False),

+)

+

+# Logging

+log_level = 'INFO'

+log_processor = dict(type='LogProcessor', window_size=10, by_epoch=True)

+

+load_from = None

+resume = False

+

+# Evaluation

+val_evaluator = dict(type='HmeanIOUMetric')

+test_evaluator = val_evaluator

+

+# Visualization

+vis_backends = [dict(type='LocalVisBackend')]

+visualizer = dict(

+ type='TextDetLocalVisualizer',

+ name='visualizer',

+ vis_backends=vis_backends)

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/pretrain_runtime.py b/mmocr-dev-1.x/configs/textdet/_base_/pretrain_runtime.py

new file mode 100644

index 0000000000000000000000000000000000000000..cb2800d50a570881475035e3b0da9c81e88712d1

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/pretrain_runtime.py

@@ -0,0 +1,14 @@

+_base_ = 'default_runtime.py'

+

+default_hooks = dict(

+ logger=dict(type='LoggerHook', interval=1000),

+ checkpoint=dict(

+ type='CheckpointHook',

+ interval=10000,

+ by_epoch=False,

+ max_keep_ckpts=1),

+)

+

+# Evaluation

+val_evaluator = None

+test_evaluator = None

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_adam_600e.py b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_adam_600e.py

new file mode 100644

index 0000000000000000000000000000000000000000..eb61f7b9ee1b2ab18c8f75f24e7a204a9f90ee54

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_adam_600e.py

@@ -0,0 +1,9 @@

+# optimizer

+optim_wrapper = dict(type='OptimWrapper', optimizer=dict(type='Adam', lr=1e-3))

+train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=600, val_interval=20)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+# learning rate

+param_scheduler = [

+ dict(type='PolyLR', power=0.9, end=600),

+]

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_100k.py b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_100k.py

new file mode 100644

index 0000000000000000000000000000000000000000..f760774b7b2e21886fc3bbe0746fe3bf843d3471

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_100k.py

@@ -0,0 +1,12 @@

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='SGD', lr=0.007, momentum=0.9, weight_decay=0.0001))

+

+train_cfg = dict(type='IterBasedTrainLoop', max_iters=100000)

+test_cfg = None

+val_cfg = None

+# learning policy

+param_scheduler = [

+ dict(type='PolyLR', power=0.9, eta_min=1e-7, by_epoch=False, end=100000),

+]

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_1200e.py b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_1200e.py

new file mode 100644

index 0000000000000000000000000000000000000000..f8555e468bccaa6e5dbca23c9d2821164e21e516

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_1200e.py

@@ -0,0 +1,11 @@

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='SGD', lr=0.007, momentum=0.9, weight_decay=0.0001))

+train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=1200, val_interval=20)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+# learning policy

+param_scheduler = [

+ dict(type='PolyLR', power=0.9, eta_min=1e-7, end=1200),

+]

diff --git a/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_base.py b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_base.py

new file mode 100644

index 0000000000000000000000000000000000000000..baf559de231db06382529079be7d5bba071b209e

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/_base_/schedules/schedule_sgd_base.py

@@ -0,0 +1,15 @@

+# Note: This schedule config serves as a base config for other schedules.

+# Users would have to at least fill in "max_epochs" and "val_interval"

+# in order to use this config in their experiments.

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='SGD', lr=0.007, momentum=0.9, weight_decay=0.0001))

+train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=None, val_interval=20)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+# learning policy

+param_scheduler = [

+ dict(type='ConstantLR', factor=1.0),

+]

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/README.md b/mmocr-dev-1.x/configs/textdet/dbnet/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..07c91edbaf8c8bbe96ae59fc8d17725314da47c8

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/README.md

@@ -0,0 +1,47 @@

+# DBNet

+

+> [Real-time Scene Text Detection with Differentiable Binarization](https://arxiv.org/abs/1911.08947)

+

+

+

+## Abstract

+

+Recently, segmentation-based methods are quite popular in scene text detection, as the segmentation results can more accurately describe scene text of various shapes such as curve text. However, the post-processing of binarization is essential for segmentation-based detection, which converts probability maps produced by a segmentation method into bounding boxes/regions of text. In this paper, we propose a module named Differentiable Binarization (DB), which can perform the binarization process in a segmentation network. Optimized along with a DB module, a segmentation network can adaptively set the thresholds for binarization, which not only simplifies the post-processing but also enhances the performance of text detection. Based on a simple segmentation network, we validate the performance improvements of DB on five benchmark datasets, which consistently achieves state-of-the-art results, in terms of both detection accuracy and speed. In particular, with a light-weight backbone, the performance improvements by DB are significant so that we can look for an ideal tradeoff between detection accuracy and efficiency. Specifically, with a backbone of ResNet-18, our detector achieves an F-measure of 82.8, running at 62 FPS, on the MSRA-TD500 dataset.

+

+ +

+

+ +

+

+

+## Results and models

+

+### SynthText

+

+| Method | Backbone | Training set | #iters | Download |

+| :-----------------------------------------------------------------------: | :------: | :----------: | :-----: | :--------------------------------------------------------------------------------------------------: |

+| [DBNet_r18](/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py) | ResNet18 | SynthText | 100,000 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext/dbnet_resnet18_fpnc_100k_synthtext-2e9bf392.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext/20221214_150351.log) |

+

+### ICDAR2015

+

+| Method | Backbone | Pretrained Model | Training set | Test set | #epochs | Test size | Precision | Recall | Hmean | Download |

+| :----------------------------: | :------------------------------: | :--------------------------------------: | :-------------: | :------------: | :-----: | :-------: | :-------: | :----: | :----: | :------------------------------: |

+| [DBNet_r18](/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py) | ResNet18 | - | ICDAR2015 Train | ICDAR2015 Test | 1200 | 736 | 0.8853 | 0.7583 | 0.8169 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015/dbnet_resnet18_fpnc_1200e_icdar2015_20220825_221614-7c0e94f2.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015/20220825_221614.log) |

+| [DBNet_r50](/configs/textdet/dbnet/dbnet_resnet50_1200e_icdar2015.py) | ResNet50 | - | ICDAR2015 Train | ICDAR2015 Test | 1200 | 1024 | 0.8744 | 0.8276 | 0.8504 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50_1200e_icdar2015/dbnet_resnet50_1200e_icdar2015_20221102_115917-54f50589.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50_1200e_icdar2015/20221102_115917.log) |

+| [DBNet_r50dcn](/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py) | ResNet50-DCN | [Synthtext](https://download.openmmlab.com/mmocr/textdet/dbnet/tmp_1.0_pretrain/dbnet_r50dcnv2_fpnc_sbn_2e_synthtext_20210325-ed322016.pth) | ICDAR2015 Train | ICDAR2015 Test | 1200 | 1024 | 0.8784 | 0.8315 | 0.8543 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015_20220828_124917-452c443c.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015/20220828_124917.log) |

+| [DBNet_r50-oclip](/configs/textdet/dbnet/dbnet_resnet50-oclip_1200e_icdar2015.py) | [ResNet50-oCLIP](https://download.openmmlab.com/mmocr/backbone/resnet50-oclip-7ba0c533.pth) | - | ICDAR2015 Train | ICDAR2015 Test | 1200 | 1024 | 0.9052 | 0.8272 | 0.8644 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50-oclip_1200e_icdar2015/dbnet_resnet50-oclip_1200e_icdar2015_20221102_115917-bde8c87a.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet50-oclip_1200e_icdar2015/20221102_115917.log) |

+

+### Total Text

+

+| Method | Backbone | Pretrained Model | Training set | Test set | #epochs | Test size | Precision | Recall | Hmean | Download |

+| :----------------------------------------------------: | :------: | :--------------: | :-------------: | :------------: | :-----: | :-------: | :-------: | :----: | :----: | :------------------------------------------------------: |

+| [DBNet_r18](/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext.py) | ResNet18 | - | Totaltext Train | Totaltext Test | 1200 | 736 | 0.8640 | 0.7770 | 0.8182 | [model](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext/dbnet_resnet18_fpnc_1200e_totaltext-3ed3233c.pth) \| [log](https://download.openmmlab.com/mmocr/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext/20221219_201038.log) |

+

+## Citation

+

+```bibtex

+@article{Liao_Wan_Yao_Chen_Bai_2020,

+ title={Real-Time Scene Text Detection with Differentiable Binarization},

+ journal={Proceedings of the AAAI Conference on Artificial Intelligence},

+ author={Liao, Minghui and Wan, Zhaoyi and Yao, Cong and Chen, Kai and Bai, Xiang},

+ year={2020},

+ pages={11474-11481}}

+```

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet18_fpnc.py b/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet18_fpnc.py

new file mode 100644

index 0000000000000000000000000000000000000000..44907100b05b2544e27ce476a6368feef1a178da

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet18_fpnc.py

@@ -0,0 +1,64 @@

+model = dict(

+ type='DBNet',

+ backbone=dict(

+ type='mmdet.ResNet',

+ depth=18,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=-1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet18'),

+ norm_eval=False,

+ style='caffe'),

+ neck=dict(

+ type='FPNC', in_channels=[64, 128, 256, 512], lateral_channels=256),

+ det_head=dict(

+ type='DBHead',

+ in_channels=256,

+ module_loss=dict(type='DBModuleLoss'),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')),

+ data_preprocessor=dict(

+ type='TextDetDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True,

+ pad_size_divisor=32))

+

+train_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(

+ type='TorchVisionWrapper',

+ op='ColorJitter',

+ brightness=32.0 / 255,

+ saturation=0.5),

+ dict(

+ type='ImgAugWrapper',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='RandomCrop', min_side_ratio=0.1),

+ dict(type='Resize', scale=(640, 640), keep_ratio=True),

+ dict(type='Pad', size=(640, 640)),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape'))

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(type='Resize', scale=(1333, 736), keep_ratio=True),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape', 'scale_factor'))

+]

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet50-dcnv2_fpnc.py b/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet50-dcnv2_fpnc.py

new file mode 100644

index 0000000000000000000000000000000000000000..952f079d478586516c28ddafea63ebc45ab7aa80

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/_base_dbnet_resnet50-dcnv2_fpnc.py

@@ -0,0 +1,66 @@

+model = dict(

+ type='DBNet',

+ backbone=dict(

+ type='mmdet.ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=-1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=False,

+ style='pytorch',

+ dcn=dict(type='DCNv2', deform_groups=1, fallback_on_stride=False),

+ init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50'),

+ stage_with_dcn=(False, True, True, True)),

+ neck=dict(

+ type='FPNC', in_channels=[256, 512, 1024, 2048], lateral_channels=256),

+ det_head=dict(

+ type='DBHead',

+ in_channels=256,

+ module_loss=dict(type='DBModuleLoss'),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')),

+ data_preprocessor=dict(

+ type='TextDetDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True,

+ pad_size_divisor=32))

+

+train_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadOCRAnnotations',

+ with_bbox=True,

+ with_polygon=True,

+ with_label=True,

+ ),

+ dict(

+ type='TorchVisionWrapper',

+ op='ColorJitter',

+ brightness=32.0 / 255,

+ saturation=0.5),

+ dict(

+ type='ImgAugWrapper',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='RandomCrop', min_side_ratio=0.1),

+ dict(type='Resize', scale=(640, 640), keep_ratio=True),

+ dict(type='Pad', size=(640, 640)),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape'))

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(type='Resize', scale=(4068, 1024), keep_ratio=True),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape', 'scale_factor'))

+]

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py

new file mode 100644

index 0000000000000000000000000000000000000000..839146dd380a5b6f2a24280bdab123662b0d8476

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py

@@ -0,0 +1,45 @@

+_base_ = [

+ '_base_dbnet_resnet18_fpnc.py',

+ '../_base_/datasets/synthtext.py',

+ '../_base_/pretrain_runtime.py',

+ '../_base_/schedules/schedule_sgd_100k.py',

+]

+

+train_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(type='FixInvalidPolygon'),

+ dict(

+ type='TorchVisionWrapper',

+ op='ColorJitter',

+ brightness=32.0 / 255,

+ saturation=0.5),

+ dict(

+ type='ImgAugWrapper',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='RandomCrop', min_side_ratio=0.1),

+ dict(type='Resize', scale=(640, 640), keep_ratio=True),

+ dict(type='Pad', size=(640, 640)),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape'))

+]

+

+# dataset settings

+synthtext_textdet_train = _base_.synthtext_textdet_train

+synthtext_textdet_train.pipeline = train_pipeline

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=synthtext_textdet_train)

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py

new file mode 100644

index 0000000000000000000000000000000000000000..feea2004b158fa3787b9a9f9d1c2b32e1bb8ae1d

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py

@@ -0,0 +1,30 @@

+_base_ = [

+ '_base_dbnet_resnet18_fpnc.py',

+ '../_base_/datasets/icdar2015.py',

+ '../_base_/default_runtime.py',

+ '../_base_/schedules/schedule_sgd_1200e.py',

+]

+

+# dataset settings

+icdar2015_textdet_train = _base_.icdar2015_textdet_train

+icdar2015_textdet_train.pipeline = _base_.train_pipeline

+icdar2015_textdet_test = _base_.icdar2015_textdet_test

+icdar2015_textdet_test.pipeline = _base_.test_pipeline

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=icdar2015_textdet_train)

+

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=4,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=icdar2015_textdet_test)

+

+test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext.py b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext.py

new file mode 100644

index 0000000000000000000000000000000000000000..9728db946b0419ae1825a986c9918c7e0f70bb55

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_totaltext.py

@@ -0,0 +1,73 @@

+_base_ = [

+ '_base_dbnet_resnet18_fpnc.py',

+ '../_base_/datasets/totaltext.py',

+ '../_base_/default_runtime.py',

+ '../_base_/schedules/schedule_sgd_1200e.py',

+]

+

+train_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(type='FixInvalidPolygon', min_poly_points=4),

+ dict(

+ type='TorchVisionWrapper',

+ op='ColorJitter',

+ brightness=32.0 / 255,

+ saturation=0.5),

+ dict(

+ type='ImgAugWrapper',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='RandomCrop', min_side_ratio=0.1),

+ dict(type='Resize', scale=(640, 640), keep_ratio=True),

+ dict(type='Pad', size=(640, 640)),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape'))

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(type='Resize', scale=(1333, 736), keep_ratio=True),

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ dict(type='FixInvalidPolygon', min_poly_points=4),

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape', 'scale_factor'))

+]

+

+# dataset settings

+totaltext_textdet_train = _base_.totaltext_textdet_train

+totaltext_textdet_test = _base_.totaltext_textdet_test

+totaltext_textdet_train.pipeline = train_pipeline

+totaltext_textdet_test.pipeline = test_pipeline

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=16,

+ pin_memory=True,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=totaltext_textdet_train)

+

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=1,

+ pin_memory=True,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=totaltext_textdet_test)

+

+test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py

new file mode 100644

index 0000000000000000000000000000000000000000..567e5984e54e9747f044715078d2a6f69bcfc792

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py

@@ -0,0 +1,30 @@

+_base_ = [

+ '_base_dbnet_resnet50-dcnv2_fpnc.py',

+ '../_base_/default_runtime.py',

+ '../_base_/datasets/synthtext.py',

+ '../_base_/schedules/schedule_sgd_100k.py',

+]

+

+# dataset settings

+synthtext_textdet_train = _base_.synthtext_textdet_train

+synthtext_textdet_train.pipeline = _base_.train_pipeline

+synthtext_textdet_test = _base_.synthtext_textdet_test

+synthtext_textdet_test.pipeline = _base_.test_pipeline

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=synthtext_textdet_train)

+

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=4,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=synthtext_textdet_test)

+

+test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

new file mode 100644

index 0000000000000000000000000000000000000000..f961a2e70c9a17d0bfbfbc5963bd8a0da79427b1

--- /dev/null

+++ b/mmocr-dev-1.x/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

@@ -0,0 +1,33 @@

+_base_ = [

+ '_base_dbnet_resnet50-dcnv2_fpnc.py',

+ '../_base_/datasets/icdar2015.py',

+ '../_base_/default_runtime.py',

+ '../_base_/schedules/schedule_sgd_1200e.py',

+]

+