
Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +2 -35

- .gitignore +160 -0

- .gradio/certificate.pem +31 -0

- LICENSE +21 -0

- README.md +248 -6

- images/webui_dl_model.png +0 -0

- images/webui_generate.png +0 -0

- images/webui_upload_model.png +0 -0

- notebooks/ultimate_rvc_colab.ipynb +109 -0

- notes/TODO.md +462 -0

- notes/app-doc.md +19 -0

- notes/cli-doc.md +74 -0

- notes/gradio.md +615 -0

- pyproject.toml +225 -0

- src/ultimate_rvc/__init__.py +40 -0

- src/ultimate_rvc/cli/__init__.py +8 -0

- src/ultimate_rvc/cli/generate/song_cover.py +409 -0

- src/ultimate_rvc/cli/main.py +21 -0

- src/ultimate_rvc/common.py +10 -0

- src/ultimate_rvc/core/__init__.py +7 -0

- src/ultimate_rvc/core/common.py +285 -0

- src/ultimate_rvc/core/exceptions.py +297 -0

- src/ultimate_rvc/core/generate/__init__.py +13 -0

- src/ultimate_rvc/core/generate/song_cover.py +1728 -0

- src/ultimate_rvc/core/main.py +48 -0

- src/ultimate_rvc/core/manage/__init__.py +4 -0

- src/ultimate_rvc/core/manage/audio.py +214 -0

- src/ultimate_rvc/core/manage/models.py +424 -0

- src/ultimate_rvc/core/manage/other_settings.py +29 -0

- src/ultimate_rvc/core/manage/public_models.json +646 -0

- src/ultimate_rvc/core/typing_extra.py +294 -0

- src/ultimate_rvc/py.typed +0 -0

- src/ultimate_rvc/stubs/audio_separator/separator/__init__.pyi +100 -0

- src/ultimate_rvc/stubs/gradio/__init__.pyi +245 -0

- src/ultimate_rvc/stubs/gradio/events.pyi +344 -0

- src/ultimate_rvc/stubs/pedalboard_native/io/__init__.pyi +41 -0

- src/ultimate_rvc/stubs/soundfile/__init__.pyi +34 -0

- src/ultimate_rvc/stubs/sox/__init__.pyi +19 -0

- src/ultimate_rvc/stubs/static_ffmpeg/__init__.pyi +1 -0

- src/ultimate_rvc/stubs/static_sox/__init__.pyi +1 -0

- src/ultimate_rvc/stubs/yt_dlp/__init__.pyi +27 -0

- src/ultimate_rvc/typing_extra.py +56 -0

- src/ultimate_rvc/vc/__init__.py +8 -0

- src/ultimate_rvc/vc/configs/32k.json +46 -0

- src/ultimate_rvc/vc/configs/32k_v2.json +46 -0

- src/ultimate_rvc/vc/configs/40k.json +46 -0

- src/ultimate_rvc/vc/configs/48k.json +46 -0

- src/ultimate_rvc/vc/configs/48k_v2.json +46 -0

- src/ultimate_rvc/vc/infer_pack/attentions.py +417 -0

- src/ultimate_rvc/vc/infer_pack/commons.py +166 -0

.gitattributes

CHANGED

|

@@ -1,35 +1,2 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

-

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

-

*.ftz filter=lfs diff=lfs merge=lfs -text

|

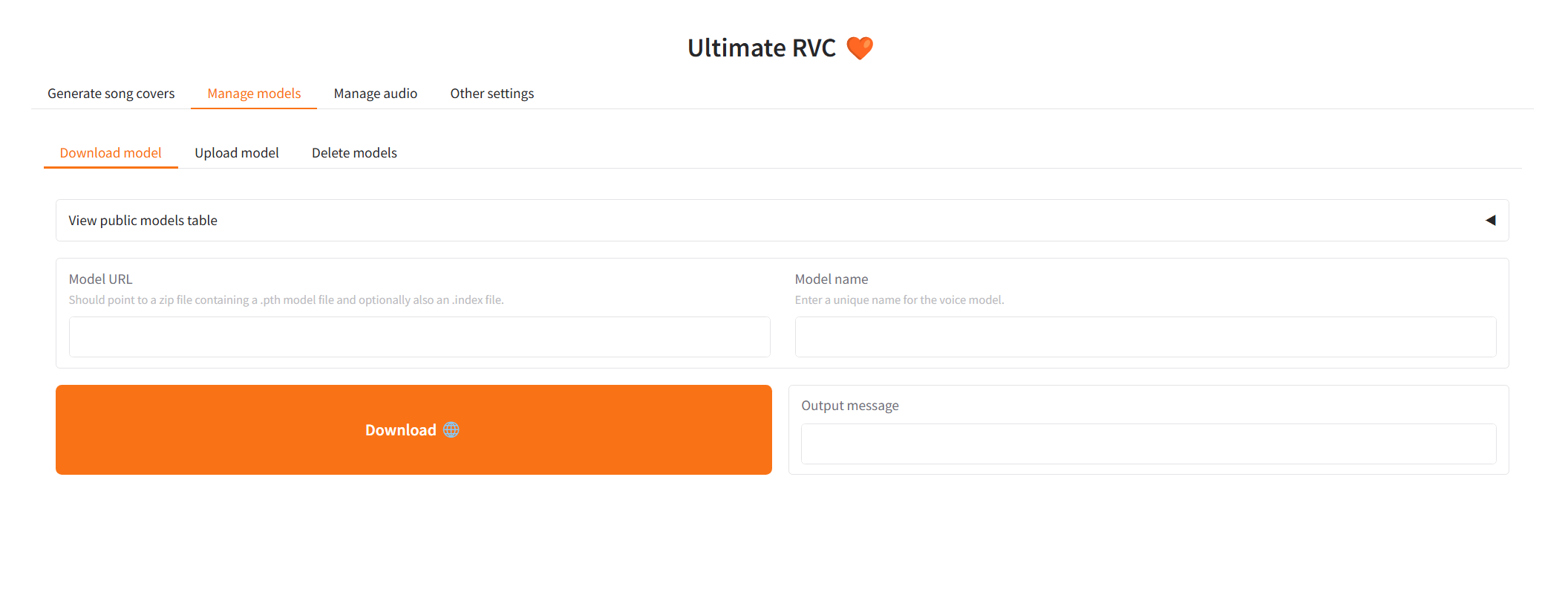

| 7 |

-

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

-

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

-

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

-

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

-

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 29 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|


| 1 |

+

# Auto detect text files and perform LF normalization

|

| 2 |

+

* text=auto

|


.gitignore

ADDED

|

@@ -0,0 +1,160 @@


|

| 1 |

+

# Ultimate RVC project

|

| 2 |

+

audio

|

| 3 |

+

models

|

| 4 |

+

temp

|

| 5 |

+

uv

|

| 6 |

+

uv.lock

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

# Byte-compiled / optimized / DLL files

|

| 10 |

+

__pycache__/

|

| 11 |

+

*.py[cod]

|

| 12 |

+

*$py.class

|

| 13 |

+

|

| 14 |

+

# C extensions

|

| 15 |

+

*.so

|

| 16 |

+

|

| 17 |

+

# Distribution / packaging

|

| 18 |

+

.Python

|

| 19 |

+

build/

|

| 20 |

+

develop-eggs/

|

| 21 |

+

dist/

|

| 22 |

+

downloads/

|

| 23 |

+

eggs/

|

| 24 |

+

.eggs/

|

| 25 |

+

lib/

|

| 26 |

+

lib64/

|

| 27 |

+

parts/

|

| 28 |

+

sdist/

|

| 29 |

+

var/

|

| 30 |

+

wheels/

|

| 31 |

+

share/python-wheels/

|

| 32 |

+

*.egg-info/

|

| 33 |

+

.installed.cfg

|

| 34 |

+

*.egg

|

| 35 |

+

MANIFEST

|

| 36 |

+

|

| 37 |

+

# PyInstaller

|

| 38 |

+

# Usually these files are written by a python script from a template

|

| 39 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 40 |

+

*.manifest

|

| 41 |

+

*.spec

|

| 42 |

+

|

| 43 |

+

# Installer logs

|

| 44 |

+

pip-log.txt

|

| 45 |

+

pip-delete-this-directory.txt

|

| 46 |

+

|

| 47 |

+

# Unit test / coverage reports

|

| 48 |

+

htmlcov/

|

| 49 |

+

.tox/

|

| 50 |

+

.nox/

|

| 51 |

+

.coverage

|

| 52 |

+

.coverage.*

|

| 53 |

+

.cache

|

| 54 |

+

nosetests.xml

|

| 55 |

+

coverage.xml

|

| 56 |

+

*.cover

|

| 57 |

+

*.py,cover

|

| 58 |

+

.hypothesis/

|

| 59 |

+

.pytest_cache/

|

| 60 |

+

cover/

|

| 61 |

+

|

| 62 |

+

# Translations

|

| 63 |

+

*.mo

|

| 64 |

+

*.pot

|

| 65 |

+

|

| 66 |

+

# Django stuff:

|

| 67 |

+

*.log

|

| 68 |

+

local_settings.py

|

| 69 |

+

db.sqlite3

|

| 70 |

+

db.sqlite3-journal

|

| 71 |

+

|

| 72 |

+

# Flask stuff:

|

| 73 |

+

instance/

|

| 74 |

+

.webassets-cache

|

| 75 |

+

|

| 76 |

+

# Scrapy stuff:

|

| 77 |

+

.scrapy

|

| 78 |

+

|

| 79 |

+

# Sphinx documentation

|

| 80 |

+

docs/_build/

|

| 81 |

+

|

| 82 |

+

# PyBuilder

|

| 83 |

+

.pybuilder/

|

| 84 |

+

target/

|

| 85 |

+

|

| 86 |

+

# Jupyter Notebook

|

| 87 |

+

.ipynb_checkpoints

|

| 88 |

+

|

| 89 |

+

# IPython

|

| 90 |

+

profile_default/

|

| 91 |

+

ipython_config.py

|

| 92 |

+

|

| 93 |

+

# pyenv

|

| 94 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 95 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 96 |

+

# .python-version

|

| 97 |

+

|

| 98 |

+

# pipenv

|

| 99 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 100 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 101 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 102 |

+

# install all needed dependencies.

|

| 103 |

+

#Pipfile.lock

|

| 104 |

+

|

| 105 |

+

# poetry

|

| 106 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 107 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 108 |

+

# commonly ignored for libraries.

|

| 109 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 110 |

+

#poetry.lock

|

| 111 |

+

|

| 112 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 113 |

+

__pypackages__/

|

| 114 |

+

|

| 115 |

+

# Celery stuff

|

| 116 |

+

celerybeat-schedule

|

| 117 |

+

celerybeat.pid

|

| 118 |

+

|

| 119 |

+

# SageMath parsed files

|

| 120 |

+

*.sage.py

|

| 121 |

+

|

| 122 |

+

# Environments

|

| 123 |

+

.env

|

| 124 |

+

.venv

|

| 125 |

+

env/

|

| 126 |

+

venv/

|

| 127 |

+

ENV/

|

| 128 |

+

env.bak/

|

| 129 |

+

venv.bak/

|

| 130 |

+

|

| 131 |

+

# Spyder project settings

|

| 132 |

+

.spyderproject

|

| 133 |

+

.spyproject

|

| 134 |

+

|

| 135 |

+

# Rope project settings

|

| 136 |

+

.ropeproject

|

| 137 |

+

|

| 138 |

+

# mkdocs documentation

|

| 139 |

+

/site

|

| 140 |

+

|

| 141 |

+

# mypy

|

| 142 |

+

.mypy_cache/

|

| 143 |

+

.dmypy.json

|

| 144 |

+

dmypy.json

|

| 145 |

+

|

| 146 |

+

# Pyre type checker

|

| 147 |

+

.pyre/

|

| 148 |

+

|

| 149 |

+

# pytype static type analyzer

|

| 150 |

+

.pytype/

|

| 151 |

+

|

| 152 |

+

# Cython debug symbols

|

| 153 |

+

cython_debug/

|

| 154 |

+

|

| 155 |

+

# PyCharm

|

| 156 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 157 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 158 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 159 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 160 |

+

.idea/

|

.gradio/certificate.pem

ADDED

|

@@ -0,0 +1,31 @@


|

| 1 |

+

-----BEGIN CERTIFICATE-----

|

| 2 |

+

MIIFazCCA1OgAwIBAgIRAIIQz7DSQONZRGPgu2OCiwAwDQYJKoZIhvcNAQELBQAw

|

| 3 |

+

TzELMAkGA1UEBhMCVVMxKTAnBgNVBAoTIEludGVybmV0IFNlY3VyaXR5IFJlc2Vh

|

| 4 |

+

cmNoIEdyb3VwMRUwEwYDVQQDEwxJU1JHIFJvb3QgWDEwHhcNMTUwNjA0MTEwNDM4

|

| 5 |

+

WhcNMzUwNjA0MTEwNDM4WjBPMQswCQYDVQQGEwJVUzEpMCcGA1UEChMgSW50ZXJu

|

| 6 |

+

ZXQgU2VjdXJpdHkgUmVzZWFyY2ggR3JvdXAxFTATBgNVBAMTDElTUkcgUm9vdCBY

|

| 7 |

+

MTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCCAgoCggIBAK3oJHP0FDfzm54rVygc

|

| 8 |

+

h77ct984kIxuPOZXoHj3dcKi/vVqbvYATyjb3miGbESTtrFj/RQSa78f0uoxmyF+

|

| 9 |

+

0TM8ukj13Xnfs7j/EvEhmkvBioZxaUpmZmyPfjxwv60pIgbz5MDmgK7iS4+3mX6U

|

| 10 |

+

A5/TR5d8mUgjU+g4rk8Kb4Mu0UlXjIB0ttov0DiNewNwIRt18jA8+o+u3dpjq+sW

|

| 11 |

+

T8KOEUt+zwvo/7V3LvSye0rgTBIlDHCNAymg4VMk7BPZ7hm/ELNKjD+Jo2FR3qyH

|

| 12 |

+

B5T0Y3HsLuJvW5iB4YlcNHlsdu87kGJ55tukmi8mxdAQ4Q7e2RCOFvu396j3x+UC

|

| 13 |

+

B5iPNgiV5+I3lg02dZ77DnKxHZu8A/lJBdiB3QW0KtZB6awBdpUKD9jf1b0SHzUv

|

| 14 |

+

KBds0pjBqAlkd25HN7rOrFleaJ1/ctaJxQZBKT5ZPt0m9STJEadao0xAH0ahmbWn

|

| 15 |

+

OlFuhjuefXKnEgV4We0+UXgVCwOPjdAvBbI+e0ocS3MFEvzG6uBQE3xDk3SzynTn

|

| 16 |

+

jh8BCNAw1FtxNrQHusEwMFxIt4I7mKZ9YIqioymCzLq9gwQbooMDQaHWBfEbwrbw

|

| 17 |

+

qHyGO0aoSCqI3Haadr8faqU9GY/rOPNk3sgrDQoo//fb4hVC1CLQJ13hef4Y53CI

|

| 18 |

+

rU7m2Ys6xt0nUW7/vGT1M0NPAgMBAAGjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNV

|

| 19 |

+

HRMBAf8EBTADAQH/MB0GA1UdDgQWBBR5tFnme7bl5AFzgAiIyBpY9umbbjANBgkq

|

| 20 |

+

hkiG9w0BAQsFAAOCAgEAVR9YqbyyqFDQDLHYGmkgJykIrGF1XIpu+ILlaS/V9lZL

|

| 21 |

+

ubhzEFnTIZd+50xx+7LSYK05qAvqFyFWhfFQDlnrzuBZ6brJFe+GnY+EgPbk6ZGQ

|

| 22 |

+

3BebYhtF8GaV0nxvwuo77x/Py9auJ/GpsMiu/X1+mvoiBOv/2X/qkSsisRcOj/KK

|

| 23 |

+

NFtY2PwByVS5uCbMiogziUwthDyC3+6WVwW6LLv3xLfHTjuCvjHIInNzktHCgKQ5

|

| 24 |

+

ORAzI4JMPJ+GslWYHb4phowim57iaztXOoJwTdwJx4nLCgdNbOhdjsnvzqvHu7Ur

|

| 25 |

+

TkXWStAmzOVyyghqpZXjFaH3pO3JLF+l+/+sKAIuvtd7u+Nxe5AW0wdeRlN8NwdC

|

| 26 |

+

jNPElpzVmbUq4JUagEiuTDkHzsxHpFKVK7q4+63SM1N95R1NbdWhscdCb+ZAJzVc

|

| 27 |

+

oyi3B43njTOQ5yOf+1CceWxG1bQVs5ZufpsMljq4Ui0/1lvh+wjChP4kqKOJ2qxq

|

| 28 |

+

4RgqsahDYVvTH9w7jXbyLeiNdd8XM2w9U/t7y0Ff/9yi0GE44Za4rF2LN9d11TPA

|

| 29 |

+

mRGunUHBcnWEvgJBQl9nJEiU0Zsnvgc/ubhPgXRR4Xq37Z0j4r7g1SgEEzwxA57d

|

| 30 |

+

emyPxgcYxn/eR44/KJ4EBs+lVDR3veyJm+kXQ99b21/+jh5Xos1AnX5iItreGCc=

|

| 31 |

+

-----END CERTIFICATE-----

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@


|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 JackismyShephard

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,254 @@

|

|

| 1 |

---

|

| 2 |

title: HRVC

|

| 3 |

-

|

| 4 |

-

colorFrom: green

|

| 5 |

-

colorTo: gray

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.6.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|


| 11 |

|

| 12 |

-

|


|

| 1 |

---

|

| 2 |

title: HRVC

|

| 3 |

+

app_file: src/ultimate_rvc/web/main.py

|


| 4 |

sdk: gradio

|

| 5 |

sdk_version: 5.6.0

|


| 6 |

---

|

| 7 |

+

# Ultimate RVC

|

| 8 |

|

| 9 |

+

An extension of [AICoverGen](https://github.com/SociallyIneptWeeb/AICoverGen), which provides several new features and improvements, enabling users to generate song covers using RVC with ease. Ideal for people who want to incorporate singing functionality into their AI assistant/chatbot/vtuber, or for people who want to hear their favourite characters sing their favourite song.

|

| 10 |

+

|

| 11 |

+

<!-- Showcase: TBA -->

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

Ultimate RVC is under constant development and testing, but you can try it out right now locally or on Google Colab!

|

| 16 |

+

|

| 17 |

+

## New Features

|

| 18 |

+

|

| 19 |

+

* Easy and automated setup using launcher scripts for both Windows and Debian-based Linux systems

|

| 20 |

+

* Caching system which saves intermediate audio files as needed, thereby reducing inference time as much as possible. For example, if song A has already been converted using model B and now you want to convert song A using model C, then vocal extraction can be skipped and inference time reduced drastically

|

| 21 |

+

* Ability to listen to intermediate audio files in the UI. This is useful for getting an idea of what is happening in each step of the song cover generation pipeline

|

| 22 |

+

* A "multi-step" song cover generation tab: here you can try out each step of the song cover generation pipeline in isolation. For example, if you already have extracted vocals available and only want to convert these using your voice model, then you can do that here. Besides, this tab is useful for experimenting with settings for each step of the song cover generation pipeline

|

| 23 |

+

* An overhaul of the song input component for the song cover generation pipeline. Now cached input songs can be selected from a dropdown, so that you don't have to supply the YouTube link of a song each time you want to convert it.

|

| 24 |

+

* A new "manage models" tab, which collects and revamps all existing functionality for managing voice models, as well as adds some new features, such as the ability to delete existing models

|

| 25 |

+

* A new "manage audio" tab, which allows you to interact with all audio generated by the app. Currently, this tab supports deleting audio files.

|

| 26 |

+

* Lots of visual and performance improvements resulting from updating from Gradio 3 to Gradio 5 and from Python 3.9 to Python 3.12

|

| 27 |

+

* A redistributable package on PyPI, which allows you to access the Ultimate RVC project without cloning any repositories.
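The caching idea in the feature list above can be illustrated with a minimal Python sketch. It is not taken from the Ultimate RVC source: the helper `cached_step`, the cache file layout, and the hashing scheme are all assumptions made for illustration. The point is only that a step whose inputs have not changed (for example, vocal extraction for song A) can be looked up instead of recomputed when a later step changes (conversion with model C instead of model B).

```python
import hashlib
import json
from pathlib import Path


def cached_step(cache_dir, step_name, inputs, compute):
    """Return (path, was_cached) for one pipeline step.

    Hypothetical helper: the cache key is a hash of the step name and its
    inputs, so a step is only recomputed when one of its inputs changes.
    """
    payload = json.dumps([step_name, inputs], sort_keys=True).encode("utf-8")
    key = hashlib.sha256(payload).hexdigest()[:16]
    path = Path(cache_dir) / f"{step_name}_{key}.txt"
    if path.exists():
        return path, True  # reuse the intermediate file
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(compute())  # stand-in for writing an audio file
    return path, False
```

With this scheme, extracting vocals for the same song twice hits the cache on the second call, while converting those vocals with a different model name yields a new key and therefore a new file.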

|

| 28 |

+

|

| 29 |

+

## Colab notebook

|

| 30 |

+

|

| 31 |

+

If you do not have a powerful enough NVIDIA GPU, you can try Ultimate RVC on Google Colab.

|

| 32 |

+

|

| 33 |

+

[Open in Colab](https://colab.research.google.com/github/JackismyShephard/ultimate-rvc/blob/main/notebooks/ultimate_rvc_colab.ipynb)

|

| 34 |

+

|

| 35 |

+

For those who want to run the Ultimate RVC project locally, follow the setup guide below.

|

| 36 |

+

|

| 37 |

+

## Setup

|

| 38 |

+

|

| 39 |

+

The Ultimate RVC project currently supports Windows and Debian-based Linux distributions, namely Ubuntu 22.04 and Ubuntu 24.04. Support for other platforms is not guaranteed.

|

| 40 |

+

|

| 41 |

+

To set up the project, follow the steps below and execute the provided commands in an appropriate terminal. On Windows this terminal should be **PowerShell**, while on Debian-based Linux distributions it should be a **bash**-compliant shell.

|

| 42 |

+

|

| 43 |

+

### Install Git

|

| 44 |

+

|

| 45 |

+

Follow the instructions [here](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) to install Git on your computer.

|

| 46 |

+

|

| 47 |

+

### Set execution policy (Windows only)

|

| 48 |

+

|

| 49 |

+

To execute the subsequent commands on Windows, it is necessary to first grant

|

| 50 |

+

PowerShell permission to run scripts. This can be done at a user level as follows:

|

| 51 |

+

|

| 52 |

+

```console

|

| 53 |

+

Set-ExecutionPolicy RemoteSigned -Scope CurrentUser

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

### Clone Ultimate RVC repository

|

| 57 |

+

|

| 58 |

+

```console

|

| 59 |

+

git clone https://github.com/JackismyShephard/ultimate-rvc

|

| 60 |

+

cd ultimate-rvc

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

### Install dependencies

|

| 64 |

+

|

| 65 |

+

```console

|

| 66 |

+

./urvc install

|

| 67 |

+

```

|

| 68 |

+

Note that on Linux, this command will install the CUDA 12.4 toolkit system-wide, if it is not already available. In case you have problems, you may need to install the toolkit manually.

|

| 69 |

+

|

| 70 |

+

## Usage

|

| 71 |

+

|

| 72 |

+

### Start the app

|

| 73 |

+

|

| 74 |

+

```console

|

| 75 |

+

./urvc run

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

Once the output message `Running on local URL: http://127.0.0.1:7860` appears, you can click the link to open the web app in a new tab.

|

| 79 |

+

|

| 80 |

+

### Manage models

|

| 81 |

+

|

| 82 |

+

#### Download models

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

Navigate to the `Download model` subtab under the `Manage models` tab, and paste the download link to an RVC model and give it a unique name.

|

| 87 |

+

You can search the [AI Hub Discord](https://discord.gg/aihub), where already-trained voice models are available for download.

|

| 88 |

+

The downloaded zip file should contain the .pth model file and an optional .index file.

|

| 89 |

+

|

| 90 |

+

Once the 2 input fields are filled in, simply click `Download`! Once the output message says `[NAME] Model successfully downloaded!`, you should be able to use it in the `Generate song covers` tab!

|

| 91 |

+

|

| 92 |

+

#### Upload models

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

This option is for people who have trained RVC v2 models locally and would like to use them for AI cover generation.

|

| 97 |

+

Navigate to the `Upload model` subtab under the `Manage models` tab, and follow the instructions.

|

| 98 |

+

Once the output message says `Model with name [NAME] successfully uploaded!`, you should be able to use it in the `Generate song covers` tab!

|

| 99 |

+

|

| 100 |

+

#### Delete RVC models

|

| 101 |

+

|

| 102 |

+

TBA

|

| 103 |

+

|

| 104 |

+

### Generate song covers

|

| 105 |

+

|

| 106 |

+

#### One-click generation

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

* From the Voice model dropdown menu, select the voice model to use.

|

| 111 |

+

* In the song input field, copy and paste the link to any song on YouTube, the full path to a local audio file, or select a cached input song.

|

| 112 |

+

* Pitch should be set to either -12, 0, or 12 depending on the original vocals and the RVC AI model. This ensures the voice is not *out of tune*.

|

| 113 |

+

* Other advanced options for vocal conversion, audio mixing, etc. can be viewed by clicking the appropriate accordion arrow to expand.

|

| 114 |

+

|

| 115 |

+

Once all options are filled in, click `Generate` and the AI-generated cover should appear in less than a few minutes, depending on your GPU.

|

| 116 |

+

|

| 117 |

+

#### Multi-step generation

|

| 118 |

+

|

| 119 |

+

TBA

|

| 120 |

+

|

| 121 |

+

## CLI

|

| 122 |

+

|

| 123 |

+

### Manual download of RVC models

|

| 124 |

+

|

| 125 |

+

Unzip (if needed) and transfer the `.pth` and `.index` files to a new folder in the [rvc models](models/rvc) directory. Each folder should only contain one `.pth` and one `.index` file.

|

| 126 |

+

|

| 127 |

+

The directory structure should look something like this:

|

| 128 |

+

|

| 129 |

+

```text

|

| 130 |

+

├── models

|

| 131 |

+

│ ├── audio_separator

|

| 132 |

+

│ ├── rvc

|

| 133 |

+

│ ├── John

|

| 134 |

+

│ │ ├── JohnV2.pth

|

| 135 |

+

│ │ └── added_IVF2237_Flat_nprobe_1_v2.index

|

| 136 |

+

│ ├── May

|

| 137 |

+

│ │ ├── May.pth

|

| 138 |

+

│ │ └── added_IVF2237_Flat_nprobe_1_v2.index

|

| 139 |

+

│ └── hubert_base.pt

|

| 140 |

+

├── notebooks

|

| 141 |

+

├── notes

|

| 142 |

+

└── src

|

| 143 |

+

```
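The layout rule above (one `.pth` file and at most one `.index` file per model folder) can be checked with a short Python sketch. The function `check_model_dirs` is a hypothetical helper written for illustration and is not part of the `ultimate-rvc` package; it treats the `.index` file as optional, as the download instructions describe it.

```python
from pathlib import Path


def check_model_dirs(rvc_dir):
    """Map each badly formed model folder to its (.pth count, .index count).

    Hypothetical check: a folder is accepted when it holds exactly one
    .pth file and zero or one .index file. Loose files directly under
    rvc_dir (such as hubert_base.pt) are ignored.
    """
    problems = {}
    for model_dir in sorted(p for p in Path(rvc_dir).iterdir() if p.is_dir()):
        n_pth = len(list(model_dir.glob("*.pth")))
        n_index = len(list(model_dir.glob("*.index")))
        if n_pth != 1 or n_index > 1:
            problems[model_dir.name] = (n_pth, n_index)
    return problems
```

Calling `check_model_dirs("models/rvc")` from the repository root returns an empty dictionary when every model folder follows the structure shown above.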

|

| 144 |

+

|

| 145 |

+

### Running the pipeline

|

| 146 |

+

|

| 147 |

+

#### Usage

|

| 148 |

+

|

| 149 |

+

```console

|

| 150 |

+

./urvc cli song-cover run-pipeline [OPTIONS] SOURCE MODEL_NAME

|

| 151 |

+

```

|

| 152 |

+

|

| 153 |

+

##### Arguments

|

| 154 |

+

|

| 155 |

+

* `SOURCE`: A YouTube URL, the path to a local audio file or the path to a song directory. [required]

|

| 156 |

+

* `MODEL_NAME`: The name of the voice model to use for vocal conversion. [required]

|

| 157 |

+

|

| 158 |

+

##### Options

|

| 159 |

+

|

| 160 |

+

* `--n-octaves INTEGER`: The number of octaves to pitch-shift the converted vocals by. Use 1 for male-to-female and -1 for vice-versa. [default: 0]

|

| 161 |

+

* `--n-semitones INTEGER`: The number of semi-tones to pitch-shift the converted vocals, instrumentals, and backup vocals by. Altering this slightly reduces sound quality. [default: 0]

|

| 162 |

+

* `--f0-method [rmvpe|mangio-crepe]`: The method to use for pitch detection during vocal conversion. Best option is RMVPE (clarity in vocals), then Mangio-Crepe (smoother vocals). [default: rmvpe]

|

| 163 |

+

* `--index-rate FLOAT RANGE`: A decimal number, e.g. 0.5. Controls how much of the accent in the voice model to keep in the converted vocals. Increase to bias the conversion towards the accent of the voice model. [default: 0.5; 0<=x<=1]

|

| 164 |

+

* `--filter-radius INTEGER RANGE`: A number between 0 and 7. If >=3: apply median filtering to the pitch results harvested during vocal conversion. Can help reduce breathiness in the converted vocals. [default: 3; 0<=x<=7]

|

| 165 |

+

* `--rms-mix-rate FLOAT RANGE`: A decimal number e.g. 0.25. Controls how much to mimic the loudness of the input vocals (0) or a fixed loudness (1) during vocal conversion. [default: 0.25; 0<=x<=1]

|

| 166 |

+

* `--protect FLOAT RANGE`: A decimal number e.g. 0.33. Controls protection of voiceless consonants and breath sounds during vocal conversion. Decrease to increase protection at the cost of indexing accuracy. Set to 0.5 to disable. [default: 0.33; 0<=x<=0.5]

|

| 167 |

+

* `--hop-length INTEGER`: Controls how often the CREPE-based pitch detection algorithm checks for pitch changes during vocal conversion. Measured in milliseconds. Lower values lead to longer conversion times and a higher risk of voice cracks, but better pitch accuracy. Recommended value: 128. [default: 128]

|

| 168 |

+

* `--room-size FLOAT RANGE`: The room size of the reverb effect applied to the converted vocals. Increase for longer reverb time. Should be a value between 0 and 1. [default: 0.15; 0<=x<=1]

|

| 169 |

+

* `--wet-level FLOAT RANGE`: The loudness of the converted vocals with reverb effect applied. Should be a value between 0 and 1. [default: 0.2; 0<=x<=1]

|

| 170 |

+

* `--dry-level FLOAT RANGE`: The loudness of the converted vocals without reverb effect applied. Should be a value between 0 and 1. [default: 0.8; 0<=x<=1]

|

| 171 |

+

* `--damping FLOAT RANGE`: The absorption of high frequencies in the reverb effect applied to the converted vocals. Should be a value between 0 and 1. [default: 0.7; 0<=x<=1]

|

| 172 |

+

* `--main-gain INTEGER`: The gain to apply to the post-processed vocals. Measured in dB. [default: 0]

|

| 173 |

+

* `--inst-gain INTEGER`: The gain to apply to the pitch-shifted instrumentals. Measured in dB. [default: 0]

|

| 174 |

+

* `--backup-gain INTEGER`: The gain to apply to the pitch-shifted backup vocals. Measured in dB. [default: 0]

|

| 175 |

+

* `--output-sr INTEGER`: The sample rate of the song cover. [default: 44100]

|

| 176 |

+

* `--output-format [mp3|wav|flac|ogg|m4a|aac]`: The audio format of the song cover. [default: mp3]

|

| 177 |

+

* `--output-name TEXT`: The name of the song cover.

|

| 178 |

+

* `--help`: Show this message and exit.
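To show how the arguments and options listed above fit together, here is a small Python sketch that assembles a `run-pipeline` command line as a list of strings. The helper `build_run_pipeline_command` is hypothetical, and the source file name and model name in the usage note are placeholders. The sketch only builds the command; it neither runs it nor validates option values against the documented ranges.

```python
def build_run_pipeline_command(source, model_name, **options):
    """Build the argument list for `./urvc cli song-cover run-pipeline`.

    Hypothetical helper: keyword names are mapped to option flags by
    replacing underscores with hyphens, e.g. n_octaves -> --n-octaves.
    Options come first, followed by SOURCE and MODEL_NAME, matching the
    documented usage line.
    """
    command = ["./urvc", "cli", "song-cover", "run-pipeline"]
    for name, value in options.items():
        command += ["--" + name.replace("_", "-"), str(value)]
    command += [source, model_name]
    return command
```

For example, `build_run_pipeline_command("song.wav", "John", n_octaves=1, output_format="wav")` produces a list that can be passed to `subprocess.run` from the repository root.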

|

| 179 |

+

|

| 180 |

+

## Update to latest version

|

| 181 |

+

|

| 182 |

+

```console

|

| 183 |

+

./urvc update

|

| 184 |

+

```

|

| 185 |

+

|

| 186 |

+

## Development mode

|

| 187 |

+

|

| 188 |

+

When developing new features or debugging, it is recommended to run the app in development mode. This enables hot reloading, which means that the app will automatically reload when changes are made to the code.

|

| 189 |

+

|

| 190 |

+

```console

|

| 191 |

+

./urvc dev

|

| 192 |

+

```

|

| 193 |

+

|

| 194 |

+

## PyPI package

|

| 195 |

+

|

| 196 |

+

The Ultimate RVC project is also available as a [distributable package](https://pypi.org/project/ultimate-rvc/) on [PyPI](https://pypi.org/).

|

| 197 |

+

|

| 198 |

+

### Installation

|

| 199 |

+

|

| 200 |

+

The package can be installed with pip in a **Python 3.12**-based environment. Doing so requires first installing PyTorch with CUDA support:

|

| 201 |

+

|

| 202 |

+

```console

|

| 203 |

+

pip install torch==2.5.1+cu124 torchaudio==2.5.1+cu124 --index-url https://download.pytorch.org/whl/cu124

|

| 204 |

+

```

|

| 205 |

+

|

| 206 |

+

Additionally, on Windows the `diffq` package must be installed manually as follows:

|

| 207 |

+

|

| 208 |

+

```console

|

| 209 |

+

pip install https://huggingface.co/JackismyShephard/ultimate-rvc/resolve/main/diffq-0.2.4-cp312-cp312-win_amd64.whl

|

| 210 |

+

```

|

| 211 |

+

|

| 212 |

+

The Ultimate RVC project package can then be installed as follows:

|

| 213 |

+

|

| 214 |

+

```console

|

| 215 |

+

pip install ultimate-rvc

|

| 216 |

+

```

|

| 217 |

+

|

| 218 |

+

### Usage

|

| 219 |

+

|

| 220 |

+

The `ultimate-rvc` package can be used as a Python library but is primarily intended to be used as a command-line tool. The package exposes two top-level commands:

|

| 221 |

+

|

| 222 |

+

* `urvc`, which lets the user generate song covers directly from their terminal

|

| 223 |

+

* `urvc-web`, which starts a local instance of the Ultimate RVC web application

|

| 224 |

+

|

| 225 |

+

For more information on either command, supply the `--help` option.

|

| 226 |

+

|

| 227 |

+

## Environment Variables

|

| 228 |

+

|

| 229 |

+

The behaviour of the Ultimate RVC project can be customized via a number of environment variables. Currently these environment variables control only logging behaviour. They are as follows:

|

| 230 |

+

|

| 231 |

+

* `URVC_CONSOLE_LOG_LEVEL`: The log level for console logging. If not set, defaults to `ERROR`.

|

| 232 |

+

* `URVC_FILE_LOG_LEVEL`: The log level for file logging. If not set, defaults to `INFO`.

|

| 233 |

+

* `URVC_LOGS_DIR`: The directory in which log files will be stored. If not set, logs will be stored in a `logs` directory in the current working directory.

|

| 234 |

+

* `URVC_NO_LOGGING`: If set to `1`, logging will be disabled.
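
The defaults listed above can be summarised in a short Python sketch. This is not the project's actual implementation: the function name `read_logging_settings` and the shape of the returned dictionary are assumptions made for illustration, and only the variable names and default values come from the list above.

```python
import os
from pathlib import Path


def read_logging_settings(env=None):
    """Resolve the documented URVC_* variables into concrete settings.

    Hypothetical helper: it only mirrors the defaults described in the
    README and is not part of the ultimate-rvc package.
    """
    env = os.environ if env is None else env
    logs_dir = env.get("URVC_LOGS_DIR")
    return {
        # Logging is disabled only when URVC_NO_LOGGING is exactly "1".
        "enabled": env.get("URVC_NO_LOGGING") != "1",
        # Console logging defaults to ERROR, file logging to INFO.
        "console_level": env.get("URVC_CONSOLE_LOG_LEVEL", "ERROR"),
        "file_level": env.get("URVC_FILE_LOG_LEVEL", "INFO"),
        # Without URVC_LOGS_DIR, logs go to ./logs under the working directory.
        "logs_dir": Path(logs_dir) if logs_dir else Path.cwd() / "logs",
    }


if __name__ == "__main__":
    print(read_logging_settings())
```

Passing a plain dictionary instead of `os.environ` makes it easy to try different combinations without changing the real environment.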

|

| 235 |

+

|

| 236 |

+

## Terms of Use

|

| 237 |

+

|

| 238 |

+

The use of the converted voice for the following purposes is prohibited:

|

| 239 |

+

|

| 240 |

+

* Criticizing or attacking individuals.

|

| 241 |

+

|

| 242 |

+

* Advocating for or opposing specific political positions, religions, or ideologies.

|

| 243 |

+

|

| 244 |

+

* Publicly displaying strongly stimulating expressions without proper zoning.

|

| 245 |

+

|

| 246 |

+

* Selling of voice models and generated voice clips.

|

| 247 |

+

|

| 248 |

+

* Impersonation of the original owner of the voice with malicious intentions to harm/hurt others.

|

| 249 |

+

|

| 250 |

+

* Fraudulent purposes that lead to identity theft or fraudulent phone calls.

|

| 251 |

+

|

| 252 |

+

## Disclaimer

|

| 253 |

+

|

| 254 |

+

I am not liable for any direct, indirect, consequential, incidental, or special damages arising out of or in any way connected with the use/misuse or inability to use this software.

|

images/webui_dl_model.png

ADDED

|

images/webui_generate.png

ADDED

|

images/webui_upload_model.png

ADDED

|

notebooks/ultimate_rvc_colab.ipynb

ADDED

|

@@ -0,0 +1,109 @@

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {

|

| 6 |

+

"id": "kmyCzJVyCymN"

|

| 7 |

+

},

|

| 8 |

+

"source": [

|

| 9 |

+

"Colab for [Ultimate RVC](https://github.com/JackismyShephard/ultimate-rvc)\n",

|

| 10 |

+

"\n",

|

| 11 |

+

"This Colab notebook will **help** you if you don’t have a GPU or if your PC isn’t very powerful.\n",

|

| 12 |

+

"\n",

|

| 13 |

+

"Simply click `Runtime` in the top navigation bar and `Run all`. Wait for the output of the final cell to show the public gradio url and click on it.\n"

|

| 14 |

+

]

|

| 15 |

+

},

|

| 16 |

+

{

|

| 17 |

+

"cell_type": "code",

|

| 18 |

+

"execution_count": null,

|

| 19 |

+

"metadata": {},

|

| 20 |

+

"outputs": [],

|

| 21 |

+

"source": [

|

| 22 |

+

"# @title 0: Initialize notebook\n",

|

| 23 |

+

"%pip install ipython-autotime\n",

|

| 24 |

+

"%load_ext autotime\n",

|

| 25 |

+

"\n",

|

| 26 |

+

"import codecs\n",

|

| 27 |

+

"import os\n",

|

| 28 |

+

"\n",

|

| 29 |

+

"from IPython.display import clear_output\n",

|

| 30 |

+

"\n",

|

| 31 |

+

"clear_output()"

|

| 32 |

+

]

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

"cell_type": "code",

|

| 36 |

+

"execution_count": null,

|

| 37 |

+

"metadata": {

|

| 38 |

+

"cellView": "form",

|

| 39 |

+

"id": "aaokDv1VzpAX"

|

| 40 |

+

},

|

| 41 |

+

"outputs": [],

|

| 42 |

+

"source": [

|

| 43 |

+

"# @title 1: Clone repository\n",

|

| 44 |

+

"cloneing = codecs.decode(\n",

|

| 45 |

+

" \"uggcf://tvguho.pbz/WnpxvfzlFurcuneq/hygvzngr-eip.tvg\",\n",

|

| 46 |

+

" \"rot_13\",\n",

|

| 47 |

+

")\n",

|

| 48 |

+

"\n",

|

| 49 |

+

"!git clone $cloneing HRVC\n",

|

| 50 |

+

"%cd /content/HRVC\n",

|

| 51 |

+

"clear_output()"

|

| 52 |

+

]

|

| 53 |

+

},

|

| 54 |

+

{

|

| 55 |

+

"cell_type": "code",

|

| 56 |

+

"execution_count": null,

|

| 57 |

+

"metadata": {

|

| 58 |

+

"cellView": "form",

|

| 59 |

+

"id": "lVGNygIa0F_1"

|

| 60 |

+

},

|

| 61 |

+

"outputs": [],

|

| 62 |

+

"source": [

|

| 63 |

+

"# @title 2: Install dependencies\n",

|

| 64 |

+

"\n",

|

| 65 |

+

"light = codecs.decode(\"uggcf://nfgeny.fu/hi/0.5.0/vafgnyy.fu\", \"rot_13\")\n",

|

| 66 |

+

"inits = codecs.decode(\"./fep/hygvzngr_eip/pber/znva.cl\", \"rot_13\")\n",

|

| 67 |

+

"\n",

|

| 68 |

+

"!apt install -y python3-dev unzip\n",

|

| 69 |

+

"!curl -LsSf $light | sh\n",

|

| 70 |

+

"\n",

|

| 71 |

+

"os.environ[\"URVC_CONSOLE_LOG_LEVEL\"] = \"WARNING\"\n",

|

| 72 |

+

"!uv run -q $inits\n",

|

| 73 |

+

"clear_output()"

|

| 74 |

+

]

|

| 75 |

+

},

|

| 76 |

+

{

|

| 77 |

+

"cell_type": "code",

|

| 78 |

+

"execution_count": null,

|

| 79 |

+

"metadata": {

|

| 80 |

+

"cellView": "form",

|

| 81 |

+

"id": "lVGNygIa0F_2"

|

| 82 |

+

},

|

| 83 |

+

"outputs": [],

|

| 84 |

+

"source": [

|

| 85 |

+

"# @title 3: Run Ultimate RVC\n",

|

| 86 |

+

"\n",

|

| 87 |

+

"runpice = codecs.decode(\"./fep/hygvzngr_eip/jro/znva.cl\", \"rot_13\")\n",

|

| 88 |

+

"\n",

|

| 89 |

+

"!uv run $runpice --share"

|

| 90 |

+

]

|

| 91 |

+

}

|

| 92 |

+

],

|

| 93 |

+

"metadata": {

|

| 94 |

+

"accelerator": "GPU",

|

| 95 |

+

"colab": {

|

| 96 |

+

"gpuType": "T4",

|

| 97 |

+

"provenance": []

|

| 98 |

+

},

|

| 99 |

+

"kernelspec": {

|

| 100 |

+

"display_name": "Python 3",

|

| 101 |

+

"name": "python3"

|

| 102 |

+

},

|

| 103 |

+

"language_info": {

|

| 104 |

+

"name": "python"

|

| 105 |

+

}

|

| 106 |

+

},

|

| 107 |

+

"nbformat": 4,

|

| 108 |

+

"nbformat_minor": 0

|

| 109 |

+

}
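
The notebook cells above store the repository URL, the installer URL, and two script paths as ROT13 strings and decode them at run time with `codecs.decode`. As a quick way to see what those strings contain, the sketch below decodes the same literals. The helper name `decode_rot13` and the `ENCODED` list are introduced here for illustration only and do not appear in the notebook.

```python
import codecs


def decode_rot13(text: str) -> str:
    """Decode a ROT13 string; letters rotate by 13, other characters are unchanged."""
    return codecs.decode(text, "rot_13")


# The four encoded literals used by the notebook cells.
ENCODED = [
    "uggcf://tvguho.pbz/WnpxvfzlFurcuneq/hygvzngr-eip.tvg",
    "uggcf://nfgeny.fu/hi/0.5.0/vafgnyy.fu",
    "./fep/hygvzngr_eip/pber/znva.cl",
    "./fep/hygvzngr_eip/jro/znva.cl",
]

for encoded in ENCODED:
    print(decode_rot13(encoded))
# https://github.com/JackismyShephard/ultimate-rvc.git
# https://astral.sh/uv/0.5.0/install.sh
# ./src/ultimate_rvc/core/main.py
# ./src/ultimate_rvc/web/main.py
```

Because ROT13 is its own inverse, applying `decode_rot13` to a decoded value returns the original encoded string.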

|

notes/TODO.md

ADDED

|

@@ -0,0 +1,462 @@

|

| 1 |

+

# TODO

|

| 2 |

+

|

| 3 |

+

* should rename instances of "models" to "voice models"

|

| 4 |

+

|

| 5 |

+

## Project/task management

|

| 6 |

+

|

| 7 |

+

* Should find tool for project/task management

|

| 8 |

+

* Tool should support:

|

| 9 |

+

* hierarchical tasks

|

| 10 |

+

* custom labels and/or priorities on tasks

|

| 11 |

+

* being able to filter tasks based on those labels

|

| 12 |

+

* being able to close and resolve tasks

|

| 13 |

+

* Being able to integrate with VS Code

|

| 14 |

+

* Access for multiple people (in a team)

|

| 15 |

+

* Should migrate the content of this file into tool

|

| 16 |

+

* Potential candidates

|

| 17 |

+

* GitHub projects

|

| 18 |

+

* Does not yet support hierarchical tasks, so no

|

| 19 |

+

* Trello

|

| 20 |

+

* Does not seem to support hierarchical tasks either

|

| 21 |

+

* Notion

|

| 22 |

+

* Seems to support hierarchical tasks, but is complicated

|

| 23 |

+

* Todoist

|

| 24 |

+

* Seems to support hierarchical tasks, custom labels, filtering on those labels, and multiple users; there are also unofficial plugins for VS Code.

|

| 25 |

+

|

| 26 |

+

## Front end

|

| 27 |

+

|

| 28 |

+

### Modularization

|

| 29 |

+

|

| 30 |

+

* Improve modularization of web code using helper functions defined [here](https://huggingface.co/spaces/WoWoWoWololo/wrapping-layouts/blob/main/app.py)

|

| 31 |

+

* Split front-end modules into further sub-modules.

|

| 32 |

+

* Structure of web folder should be:

|

| 33 |

+

* `web`

|

| 34 |

+

* `manage_models`

|

| 35 |

+

* `__init__.py`

|

| 36 |

+

* `main.py`

|

| 37 |

+

* `manage_audio`

|

| 38 |

+

* `__init__.py`

|

| 39 |

+

* `main.py`

|

| 40 |

+

* `generate_song_covers`

|

| 41 |

+

* `__init__.py`

|

| 42 |

+

* `main.py`

|

| 43 |

+

* `one_click_generation`

|

| 44 |

+

* `__init__.py`

|

| 45 |

+

* `main.py`

|

| 46 |

+

* `accordions`

|

| 47 |

+

* `__init__.py`

|

| 48 |

+