Spaces:

Runtime error

Runtime error

clean up. add descriptions

Browse files- README.md +62 -0

- app.py +2 -7

- screenshot/filter.png +0 -0

- screenshot/selfquery1.png +0 -0

- screenshot/selfquery2.png +0 -0

- screenshot/selfquery_setup.png +0 -0

README.md

CHANGED

|

@@ -11,3 +11,65 @@ license: mit

|

|

| 11 |

---

|

| 12 |

|

| 13 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

---

|

| 12 |

|

| 13 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

| 14 |

+

|

| 15 |

+

# Chatbot

|

| 16 |

+

|

| 17 |

+

This is a chatbot that can answer questions based on a given context. It uses OpenAI embeddings and a language model to generate responses. The chatbot also has the ability to ingest documents and store them in a Pinecone index with a given namespace for later retrieval. The most important feature is to utilize Langchain's self-querying retriever, which automatically creates a filter from the user query on the metadata of stored documents and to execute those filter.

|

| 18 |

+

|

| 19 |

+

## Setup

|

| 20 |

+

|

| 21 |

+

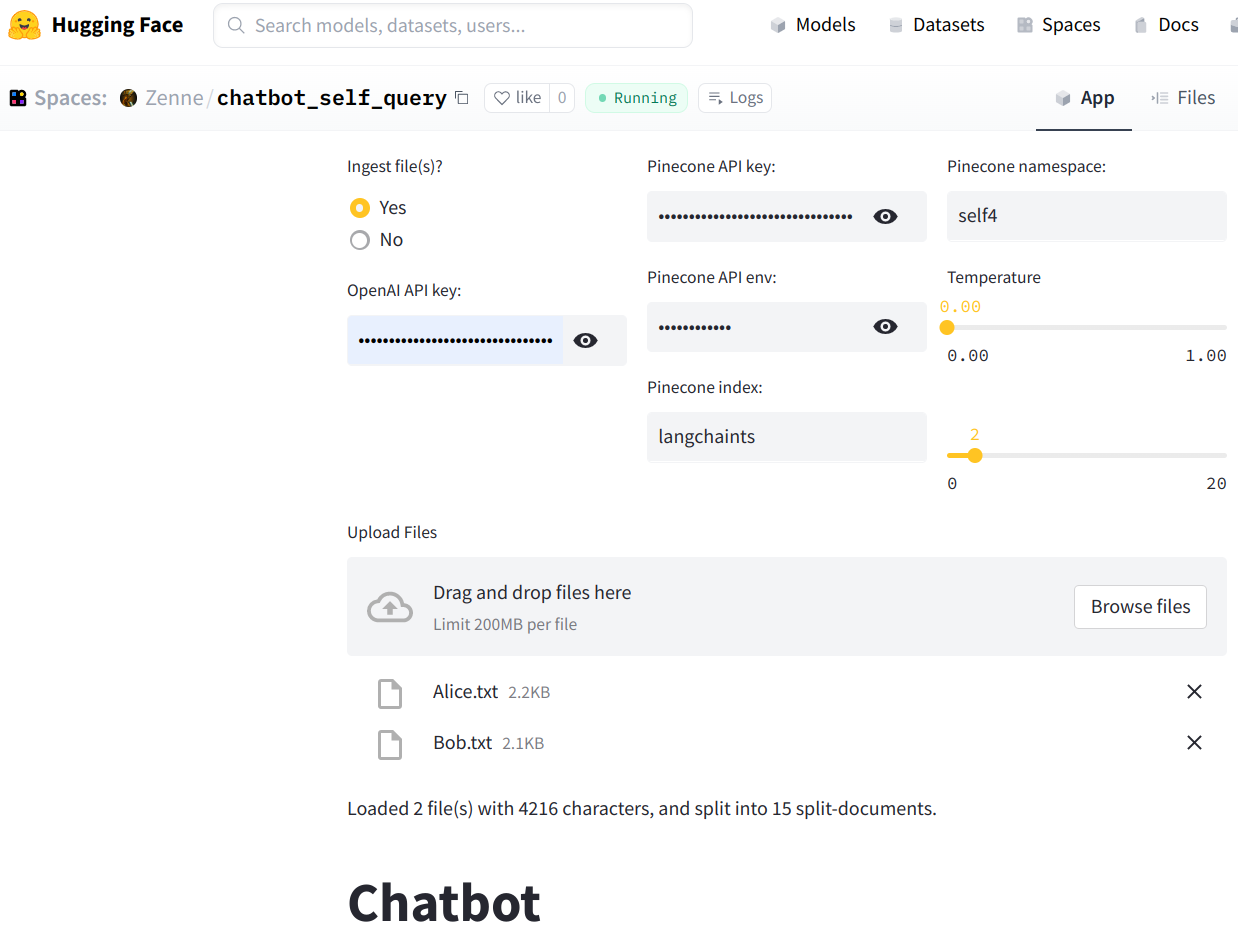

To use the chatbot, you will need to provide an OpenAI API key and a Pinecone API key. You can enter these keys in the appropriate fields when prompted. The Pinecone API environment, index, and namespace will also be entered.

|

| 22 |

+

|

| 23 |

+

## Ingesting Files

|

| 24 |

+

|

| 25 |

+

If you have files that you would like to ingest, you can do so by selecting "Yes" when prompted to ingest files. You can then upload your files and they will be stored in the given Pinecone index associated with the given namespace for later retrieval. The files can be PDF, doc/docx, txt, or a mixture of them.

|

| 26 |

+

If you have previously ingested files and stored in Pinecone, you may indicate No to 'Ingest file(s)?' and the data in the given Pinecone index/namespace will be used.

|

| 27 |

+

|

| 28 |

+

## Usage

|

| 29 |

+

|

| 30 |

+

To use the chatbot, simply enter your question in the text area provided. The chatbot will generate a response based on the context and your question.

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

## Retrieving Documents and self-query filter

|

| 34 |

+

|

| 35 |

+

To retrieve documents, the chatbot uses a SelfQueryRetriever and a Pinecone index. The chatbot will search the index for documents that are relevant to your question and return them as a list.

|

| 36 |

+

|

| 37 |

+

Self-query retrieval is described in https://python.langchain.com/en/latest/modules/indexes/retrievers/examples/self_query_retriever.html. In this app, a simple, pre-defined metadata field is provided:

|

| 38 |

+

```

|

| 39 |

+

metadata_field_info = [

|

| 40 |

+

AttributeInfo(

|

| 41 |

+

name="author",

|

| 42 |

+

description="The author of the document/text/piece of context",

|

| 43 |

+

type="string or list[string]",

|

| 44 |

+

)

|

| 45 |

+

]

|

| 46 |

+

document_content_description = "Views/opions/proposals suggested by the author on one or more discussion points."

|

| 47 |

+

```

|

| 48 |

+

This assumes the ingested files are named by their authors in order to use the self-querying retriever. Then when the user asks a question about one or more specific authors, a filter will be automatically created and only those files by those authors will be used to generate responses. An example:

|

| 49 |

+

```

|

| 50 |

+

query='space exploration alice view' filter=Comparison(comparator=<Comparator.EQ: 'eq'>, attribute='author', value='Alice')

|

| 51 |

+

query='space exploration bob view' filter=Comparison(comparator=<Comparator.EQ: 'eq'>, attribute='author', value='Bob')

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

If the filter returns nothing, such as when the user's question is unrelated to the metadata field, or when self-querying is not intended, the app will fallback to the traditional similarity search for answering the question.

|

| 55 |

+

|

| 56 |

+

This can be easily generalized, e.g., user uploading a metadata field file and description for a particular task. This is subject to future work.

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

## Generating Responses

|

| 60 |

+

|

| 61 |

+

The chatbot generates responses using a large language model and OpenAI embeddings. It uses the context and your question to generate a response that is relevant and informative.

|

| 62 |

+

|

| 63 |

+

## Saving Chat History

|

| 64 |

+

|

| 65 |

+

The chatbot saves chat history in a JSON file. You can view your chat history in chat_history folder. However, as of now, chat history is not used in the Q&A process.

|

| 66 |

+

|

| 67 |

+

## Screenshot

|

| 68 |

+

* Set up the API keys and Pinecone index/namespace

|

| 69 |

+

|

| 70 |

+

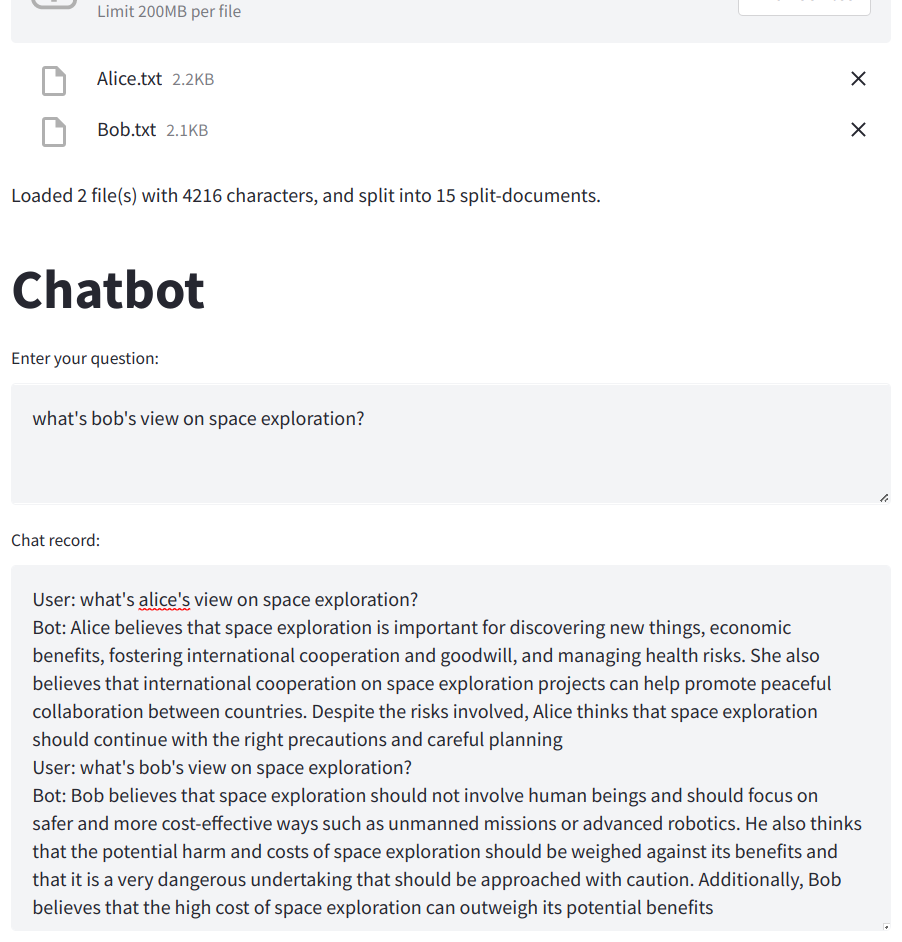

* A first self-querying retrieval

|

| 71 |

+

|

| 72 |

+

* A second self-querying retrieval

|

| 73 |

+

|

| 74 |

+

* The fitlers automatically created by the app for the self-querying retrievals

|

| 75 |

+

|

app.py

CHANGED

|

@@ -94,7 +94,7 @@ def ingest(_all_texts, _embeddings, pinecone_index_name, pinecone_namespace):

|

|

| 94 |

return docsearch

|

| 95 |

|

| 96 |

|

| 97 |

-

def setup_retriever(docsearch,

|

| 98 |

metadata_field_info = [

|

| 99 |

AttributeInfo(

|

| 100 |

name="author",

|

|

@@ -163,9 +163,7 @@ pinecone_index_name, pinecone_namespace, docsearch_ready, directory_name = init(

|

|

| 163 |

def main(pinecone_index_name, pinecone_namespace, docsearch_ready):

|

| 164 |

docsearch_ready = False

|

| 165 |

chat_history = []

|

| 166 |

-

# Get user input of whether to use Pinecone or not

|

| 167 |

col1, col2, col3 = st.columns([1, 1, 1])

|

| 168 |

-

# create the radio buttons and text input fields

|

| 169 |

with col1:

|

| 170 |

r_ingest = st.radio(

|

| 171 |

'Ingest file(s)?', ('Yes', 'No'))

|

|

@@ -183,7 +181,6 @@ def main(pinecone_index_name, pinecone_namespace, docsearch_ready):

|

|

| 183 |

with col3:

|

| 184 |

pinecone_namespace = st.text_input('Pinecone namespace:')

|

| 185 |

temperature = st.slider('Temperature', 0.0, 1.0, 0.1)

|

| 186 |

-

k_sources = st.slider('# source(s) to print out', 0, 20, 2)

|

| 187 |

|

| 188 |

if pinecone_index_name:

|

| 189 |

session_name = pinecone_index_name

|

|

@@ -204,9 +201,7 @@ def main(pinecone_index_name, pinecone_namespace, docsearch_ready):

|

|

| 204 |

embeddings)

|

| 205 |

docsearch_ready = True

|

| 206 |

if docsearch_ready:

|

| 207 |

-

|

| 208 |

-

k = min([20, n_texts])

|

| 209 |

-

retriever = setup_retriever(docsearch, k, llm)

|

| 210 |

CRqa = load_qa_with_sources_chain(llm, chain_type="stuff")

|

| 211 |

|

| 212 |

st.title('Chatbot')

|

|

|

|

| 94 |

return docsearch

|

| 95 |

|

| 96 |

|

| 97 |

+

def setup_retriever(docsearch, llm):

|

| 98 |

metadata_field_info = [

|

| 99 |

AttributeInfo(

|

| 100 |

name="author",

|

|

|

|

| 163 |

def main(pinecone_index_name, pinecone_namespace, docsearch_ready):

|

| 164 |

docsearch_ready = False

|

| 165 |

chat_history = []

|

|

|

|

| 166 |

col1, col2, col3 = st.columns([1, 1, 1])

|

|

|

|

| 167 |

with col1:

|

| 168 |

r_ingest = st.radio(

|

| 169 |

'Ingest file(s)?', ('Yes', 'No'))

|

|

|

|

| 181 |

with col3:

|

| 182 |

pinecone_namespace = st.text_input('Pinecone namespace:')

|

| 183 |

temperature = st.slider('Temperature', 0.0, 1.0, 0.1)

|

|

|

|

| 184 |

|

| 185 |

if pinecone_index_name:

|

| 186 |

session_name = pinecone_index_name

|

|

|

|

| 201 |

embeddings)

|

| 202 |

docsearch_ready = True

|

| 203 |

if docsearch_ready:

|

| 204 |

+

retriever = setup_retriever(docsearch, llm)

|

|

|

|

|

|

|

| 205 |

CRqa = load_qa_with_sources_chain(llm, chain_type="stuff")

|

| 206 |

|

| 207 |

st.title('Chatbot')

|

screenshot/filter.png

ADDED

|

screenshot/selfquery1.png

ADDED

|

screenshot/selfquery2.png

ADDED

|

screenshot/selfquery_setup.png

ADDED

|