init

This view is limited to 50 files because it contains too many changes.

- .ipynb_checkpoints/README-checkpoint.md +68 -0

- README_zh.md +56 -0

- app/__init__.py +0 -0

- app/all_models.py +22 -0

- app/custom_models/image2image-objaverseF-rgb2normal.yaml +61 -0

- app/custom_models/image2mvimage-objaverseFrot-wonder3d.yaml +63 -0

- app/custom_models/mvimg_prediction.py +57 -0

- app/custom_models/normal_prediction.py +26 -0

- app/custom_models/utils.py +75 -0

- app/examples/Groot.png +3 -0

- app/examples/aaa.png +3 -0

- app/examples/abma.png +3 -0

- app/examples/akun.png +3 -0

- app/examples/anya.png +3 -0

- app/examples/bag.png +3 -0

- app/examples/generated_1715761545_frame0.png +3 -0

- app/examples/generated_1715762357_frame0.png +3 -0

- app/examples/generated_1715763329_frame0.png +3 -0

- app/examples/hatsune_miku.png +3 -0

- app/examples/princess-large.png +3 -0

- app/examples/shoe.png +3 -0

- app/gradio_3dgen.py +71 -0

- app/gradio_3dgen_steps.py +87 -0

- app/gradio_local.py +76 -0

- app/utils.py +112 -0

- assets/teaser.jpg +0 -0

- ckpt/controlnet-tile/config.json +52 -0

- ckpt/controlnet-tile/diffusion_pytorch_model.safetensors +3 -0

- ckpt/image2normal/feature_extractor/preprocessor_config.json +44 -0

- ckpt/image2normal/image_encoder/config.json +23 -0

- ckpt/image2normal/image_encoder/model.safetensors +3 -0

- ckpt/image2normal/model_index.json +31 -0

- ckpt/image2normal/scheduler/scheduler_config.json +16 -0

- ckpt/image2normal/unet/config.json +68 -0

- ckpt/image2normal/unet/diffusion_pytorch_model.safetensors +3 -0

- ckpt/image2normal/unet_state_dict.pth +3 -0

- ckpt/image2normal/vae/config.json +34 -0

- ckpt/image2normal/vae/diffusion_pytorch_model.safetensors +3 -0

- ckpt/img2mvimg/feature_extractor/preprocessor_config.json +44 -0

- ckpt/img2mvimg/image_encoder/config.json +23 -0

- ckpt/img2mvimg/image_encoder/model.safetensors +3 -0

- ckpt/img2mvimg/model_index.json +31 -0

- ckpt/img2mvimg/scheduler/scheduler_config.json +20 -0

- ckpt/img2mvimg/unet/config.json +68 -0

- ckpt/img2mvimg/unet/diffusion_pytorch_model.safetensors +3 -0

- ckpt/img2mvimg/unet_state_dict.pth +3 -0

- ckpt/img2mvimg/vae/config.json +34 -0

- ckpt/img2mvimg/vae/diffusion_pytorch_model.safetensors +3 -0

- ckpt/realesrgan-x4.onnx +3 -0

- ckpt/v1-inference.yaml +70 -0

.ipynb_checkpoints/README-checkpoint.md

ADDED

@@ -0,0 +1,68 @@

| 1 |

+

**中文版本 [中文](README_zh.md)**

|

| 2 |

+

|

| 3 |

+

# Unique3D

|

| 4 |

+

High-Quality and Efficient 3D Mesh Generation from a Single Image

|

| 5 |

+

|

| 6 |

+

## [Paper]() | [Project page](https://wukailu.github.io/Unique3D/) | [Huggingface Demo]() | [Online Demo](https://www.aiuni.ai/)

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

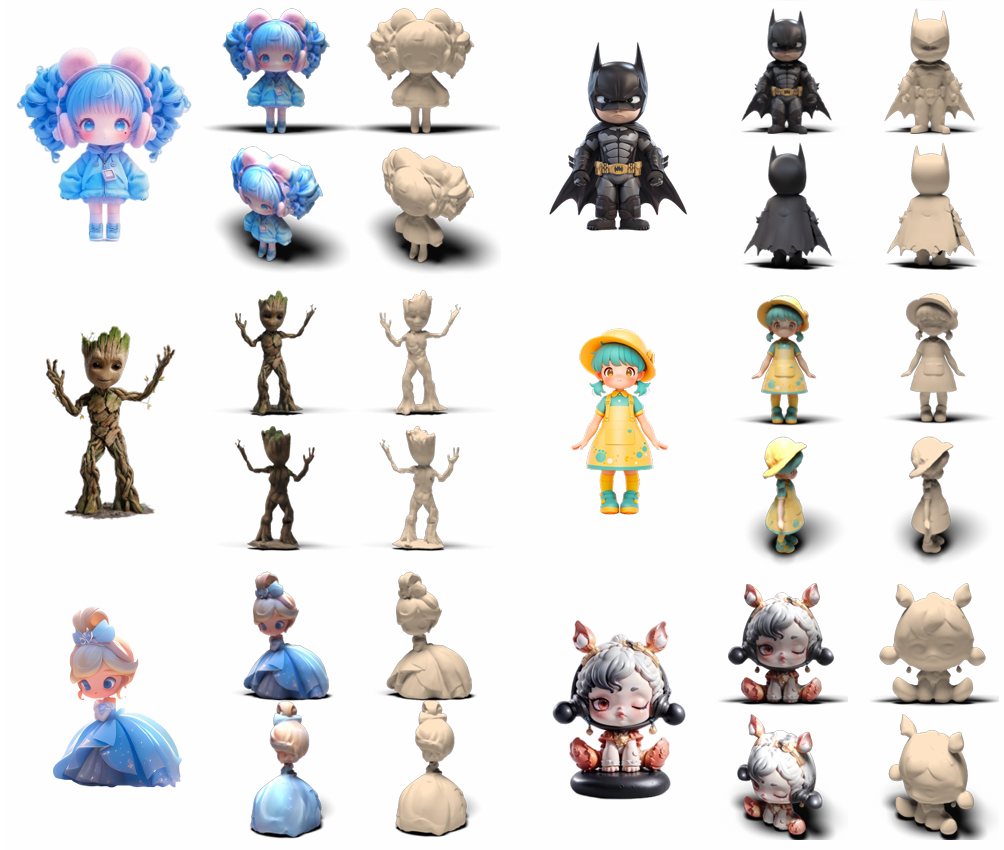

High-fidelity and diverse textured meshes generated by Unique3D from single-view wild images in 30 seconds.

|

| 11 |

+

|

| 12 |

+

## More features

|

| 13 |

+

|

| 14 |

+

The repo is still being under construction, thanks for your patience.

|

| 15 |

+

- [x] Local gradio demo.

|

| 16 |

+

- [ ] Detailed tutorial.

|

| 17 |

+

- [ ] Huggingface demo.

|

| 18 |

+

- [ ] Detailed local demo.

|

| 19 |

+

- [ ] Comfyui support.

|

| 20 |

+

- [ ] Windows support.

|

| 21 |

+

- [ ] Docker support.

|

| 22 |

+

- [ ] More stable reconstruction with normal.

|

| 23 |

+

- [ ] Training code release.

|

| 24 |

+

|

| 25 |

+

## Preparation for inference

|

| 26 |

+

|

| 27 |

+

### Linux System Setup.

|

| 28 |

+

```angular2html

|

| 29 |

+

conda create -n unique3d

|

| 30 |

+

conda activate unique3d

|

| 31 |

+

pip install -r requirements.txt

|

| 32 |

+

```

|

| 33 |

+

|

| 34 |

+

### Interactive inference: run your local gradio demo.

|

| 35 |

+

|

| 36 |

+

1. Download the [ckpt.zip](), and extract it to `ckpt/*`.

|

| 37 |

+

```

|

| 38 |

+

Unique3D

|

| 39 |

+

├──ckpt

|

| 40 |

+

├── controlnet-tile/

|

| 41 |

+

├── image2normal/

|

| 42 |

+

├── img2mvimg/

|

| 43 |

+

├── realesrgan-x4.onnx

|

| 44 |

+

└── v1-inference.yaml

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

2. Run the interactive inference locally.

|

| 48 |

+

```bash

|

| 49 |

+

python app/gradio_local.py --port 7860

|

| 50 |

+

```
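Before launching the demo, it can help to confirm that the archive was extracted to the layout shown in the directory tree above. The following is a minimal sketch that is not part of the repository; the helper name `missing_ckpt_entries` is hypothetical, and the expected entries are simply copied from that tree.

```python
from pathlib import Path
from typing import List

# Entries expected directly under ckpt/, copied from the directory tree above.
# Names ending in "/" are directories; the rest are files.
EXPECTED_CKPT_ENTRIES = [
    "controlnet-tile/",
    "image2normal/",
    "img2mvimg/",
    "realesrgan-x4.onnx",
    "v1-inference.yaml",
]


def missing_ckpt_entries(repo_root: str = ".") -> List[str]:
    """Return the expected ckpt/ entries that are absent (hypothetical helper)."""
    ckpt = Path(repo_root) / "ckpt"
    missing = []
    for entry in EXPECTED_CKPT_ENTRIES:
        path = ckpt / entry.rstrip("/")
        present = path.is_dir() if entry.endswith("/") else path.is_file()
        if not present:
            missing.append(entry)
    return missing
```

Calling `missing_ckpt_entries()` from the repository root and getting an empty list suggests the extraction matches the expected layout; it does not validate the contents of the model folders.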

## Tips to get better results

1. Unique3D is sensitive to the facing direction of input images. Due to the distribution of the training data, orthographic front-facing images in a rest pose usually lead to good reconstructions.
2. Images with occlusions lead to worse reconstructions, since four views cannot cover the complete object. Images with fewer occlusions lead to better results.
3. Use an input image with as high a resolution as possible.

## Acknowledgement

We have borrowed extensively from the code of the following repositories. Many thanks to the authors for sharing their code.

- [Stable Diffusion](https://github.com/CompVis/stable-diffusion)
- [Wonder3d](https://github.com/xxlong0/Wonder3D)
- [Zero123Plus](https://github.com/SUDO-AI-3D/zero123plus)
- [Continuous Remeshing](https://github.com/Profactor/continuous-remeshing)
- [Depth from Normals](https://github.com/YertleTurtleGit/depth-from-normals)

## Collaborations

Our mission is to create a 4D generative model with 3D concepts. This is just our first step, and the road ahead is still long, but we are confident. We warmly invite you to join the discussion and explore potential collaborations in any capacity. <span style="color:red">**If you're interested in connecting or partnering with us, please don't hesitate to reach out via email ([email protected])**</span>.

README_zh.md

ADDED

@@ -0,0 +1,56 @@

**Other language versions: [English](README.md)**

# Unique3D

High-Quality and Efficient 3D Mesh Generation from a Single Image

## [Paper]() | [Project page](https://wukailu.github.io/Unique3D/) | [Huggingface Demo]() | [Online Demo](https://www.aiuni.ai/)

Unique3D generates high-fidelity, diversely textured meshes from a single-view image in about 30 seconds on an RTX 4090.

### Preparation for inference

#### Linux system setup

```bash
conda create -n unique3d
conda activate unique3d
pip install -r requirements.txt
```

#### Interactive inference: run your local gradio demo

1. Download [ckpt.zip]() and extract it into `ckpt/`:
   ```
   Unique3D
       ├──ckpt
           ├── controlnet-tile/
           ├── image2normal/
           ├── img2mvimg/
           ├── realesrgan-x4.onnx
           └── v1-inference.yaml
   ```
2. Run the interactive inference locally:
   ```bash
   python app/gradio_local.py --port 7860
   ```

## Tips for getting better results

1. Unique3D is very sensitive to the orientation of the input image. Due to the distribution of the training data, **orthographic front-view images** usually lead to good reconstructions. For characters, an A-pose or T-pose works best, because the training data currently contains very few other poses.
2. Images with occlusions lead to worse reconstructions, since 4 views cannot cover the complete object. Images with fewer occlusions give better results.
3. Use an input image with as high a resolution as possible.

## Acknowledgements

We borrowed code from the following repositories. Many thanks to the authors for sharing their code.

- [Stable Diffusion](https://github.com/CompVis/stable-diffusion)
- [Wonder3d](https://github.com/xxlong0/Wonder3D)
- [Zero123Plus](https://github.com/SUDO-AI-3D/zero123plus)
- [Continuous Remeshing](https://github.com/Profactor/continuous-remeshing)
- [Depth from Normals](https://github.com/YertleTurtleGit/depth-from-normals)

## Collaboration

Our mission is to create a 4D generative model with 3D concepts. This is only our first step; the road ahead is still long, but we are confident. We warmly invite you to join the discussion and explore potential collaboration in any form. <span style="color:red">**If you are interested in contacting or collaborating with us, feel free to reach us by email ([email protected])**</span>.

app/__init__.py

ADDED

File without changes

app/all_models.py

ADDED

@@ -0,0 +1,22 @@

import torch
from scripts.sd_model_zoo import load_common_sd15_pipe
from diffusers import StableDiffusionControlNetImg2ImgPipeline, StableDiffusionPipeline


class MyModelZoo:
    _pipe_disney_controlnet_lineart_ipadapter_i2i: StableDiffusionControlNetImg2ImgPipeline = None

    base_model = "runwayml/stable-diffusion-v1-5"

    def __init__(self, base_model=None) -> None:
        if base_model is not None:
            self.base_model = base_model

    @property
    def pipe_disney_controlnet_tile_ipadapter_i2i(self):
        return self._pipe_disney_controlnet_lineart_ipadapter_i2i

    def init_models(self):
        self._pipe_disney_controlnet_lineart_ipadapter_i2i = load_common_sd15_pipe(base_model=self.base_model, ip_adapter=True, plus_model=False, controlnet="./ckpt/controlnet-tile", pipeline_class=StableDiffusionControlNetImg2ImgPipeline)

model_zoo = MyModelZoo()
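`MyModelZoo` only builds its pipeline when `init_models()` is called; until then, the `pipe_disney_controlnet_tile_ipadapter_i2i` property returns `None`. Note also that this public property reads a backing attribute named `..._lineart_...`, even though the ControlNet it loads is `./ckpt/controlnet-tile`. The sketch below illustrates the same explicit-initialization pattern with a stand-in loader so it runs without diffusers. `ModelZooSketch` and `fake_loader` are hypothetical names, and raising an error on early access is a design choice of this sketch, not of the original class.

```python
from typing import Any, Callable, Optional


class ModelZooSketch:
    """Stand-in for MyModelZoo: the pipeline is built only by init_models()."""

    base_model = "runwayml/stable-diffusion-v1-5"

    def __init__(self, loader: Callable[..., Any], base_model: Optional[str] = None) -> None:
        self._loader = loader  # hypothetical replacement for load_common_sd15_pipe
        self._pipe: Optional[Any] = None
        if base_model is not None:
            self.base_model = base_model

    @property
    def pipe(self) -> Any:
        # The original property silently returns None before init_models();
        # this sketch fails loudly instead.
        if self._pipe is None:
            raise RuntimeError("call init_models() before using the pipeline")
        return self._pipe

    def init_models(self) -> None:
        self._pipe = self._loader(base_model=self.base_model, controlnet="./ckpt/controlnet-tile")


def fake_loader(**kwargs: Any) -> dict:
    # Returns its arguments so the example can be inspected without loading weights.
    return kwargs
```

With this shape, forgetting to call `init_models()` surfaces as an immediate error rather than as a later `'NoneType' object is not callable`.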

app/custom_models/image2image-objaverseF-rgb2normal.yaml

ADDED

@@ -0,0 +1,61 @@

pretrained_model_name_or_path: "lambdalabs/sd-image-variations-diffusers"
mixed_precision: "bf16"

init_config:
  # enable controls
  enable_cross_attn_lora: False
  enable_cross_attn_ip: False
  enable_self_attn_lora: False
  enable_self_attn_ref: True
  enable_multiview_attn: False

  # for cross attention
  init_cross_attn_lora: False
  init_cross_attn_ip: False
  cross_attn_lora_rank: 512 # 0 for not enabled
  cross_attn_lora_only_kv: False
  ipadapter_pretrained_name: "h94/IP-Adapter"
  ipadapter_subfolder_name: "models"
  ipadapter_weight_name: "ip-adapter_sd15.safetensors"
  ipadapter_effect_on: "all" # all, first

  # for self attention
  init_self_attn_lora: False
  self_attn_lora_rank: 512
  self_attn_lora_only_kv: False

  # for self attention ref
  init_self_attn_ref: True
  self_attn_ref_position: "attn1"
  self_attn_ref_other_model_name: "lambdalabs/sd-image-variations-diffusers"
  self_attn_ref_pixel_wise_crosspond: True
  self_attn_ref_effect_on: "all"

  # for multiview attention
  init_multiview_attn: False
  multiview_attn_position: "attn1"
  num_modalities: 1

  # for unet
  init_unet_path: "${pretrained_model_name_or_path}"
  init_num_cls_label: 0 # for initialize
  cls_labels: [] # for current task

trainers:
  - trainer_type: "image2image_trainer"
    trainer:
      pretrained_model_name_or_path: "${pretrained_model_name_or_path}"
      attn_config:
        cls_labels: [] # for current task
        enable_cross_attn_lora: False
        enable_cross_attn_ip: False
        enable_self_attn_lora: False
        enable_self_attn_ref: True
        enable_multiview_attn: False
      resolution: "512"
      condition_image_resolution: "512"
      condition_image_column_name: "conditioning_image"
      image_column_name: "image"
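This config reuses its top-level `pretrained_model_name_or_path` through `${pretrained_model_name_or_path}` placeholders (the multiview config below does the same). The syntax matches OmegaConf-style interpolation, but whether `load_config` actually relies on OmegaConf is not shown in this diff. The stdlib sketch below only mimics the idea for whole-string, root-level placeholders; `resolve_placeholders` is a hypothetical helper.

```python
import re
from typing import Any

# Matches a whole-string placeholder such as "${pretrained_model_name_or_path}".
_PLACEHOLDER = re.compile(r"^\$\{([A-Za-z_][A-Za-z0-9_]*)\}$")


def resolve_placeholders(node: Any, root: dict) -> Any:
    """Recursively replace "${key}" strings with root[key] (root-level keys only)."""
    if isinstance(node, dict):
        return {k: resolve_placeholders(v, root) for k, v in node.items()}
    if isinstance(node, list):
        return [resolve_placeholders(v, root) for v in node]
    if isinstance(node, str):
        match = _PLACEHOLDER.match(node)
        if match:
            return root[match.group(1)]
    return node


# A trimmed-down version of the YAML above, as a plain dict.
config = {
    "pretrained_model_name_or_path": "lambdalabs/sd-image-variations-diffusers",
    "init_config": {"init_unet_path": "${pretrained_model_name_or_path}"},
    "trainers": [
        {
            "trainer_type": "image2image_trainer",
            "trainer": {"pretrained_model_name_or_path": "${pretrained_model_name_or_path}"},
        },
    ],
}
resolved = resolve_placeholders(config, config)
```

Changing the single top-level path is therefore enough to retarget both the UNet initialization and the trainer, which is presumably why the placeholder is used.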

app/custom_models/image2mvimage-objaverseFrot-wonder3d.yaml

ADDED

@@ -0,0 +1,63 @@

pretrained_model_name_or_path: "./ckpt/img2mvimg"
mixed_precision: "bf16"

init_config:
  # enable controls
  enable_cross_attn_lora: False
  enable_cross_attn_ip: False
  enable_self_attn_lora: False
  enable_self_attn_ref: False
  enable_multiview_attn: True

  # for cross attention
  init_cross_attn_lora: False
  init_cross_attn_ip: False
  cross_attn_lora_rank: 256 # 0 for not enabled
  cross_attn_lora_only_kv: False
  ipadapter_pretrained_name: "h94/IP-Adapter"
  ipadapter_subfolder_name: "models"
  ipadapter_weight_name: "ip-adapter_sd15.safetensors"
  ipadapter_effect_on: "all" # all, first

  # for self attention
  init_self_attn_lora: False
  self_attn_lora_rank: 256
  self_attn_lora_only_kv: False

  # for self attention ref
  init_self_attn_ref: False
  self_attn_ref_position: "attn1"
  self_attn_ref_other_model_name: "lambdalabs/sd-image-variations-diffusers"
  self_attn_ref_pixel_wise_crosspond: False
  self_attn_ref_effect_on: "all"

  # for multiview attention
  init_multiview_attn: True
  multiview_attn_position: "attn1"
  use_mv_joint_attn: True
  num_modalities: 1

  # for unet
  init_unet_path: "${pretrained_model_name_or_path}"
  cat_condition: True # cat condition to input

  # for cls embedding
  init_num_cls_label: 8 # for initialize
  cls_labels: [0, 1, 2, 3] # for current task

trainers:
  - trainer_type: "image2mvimage_trainer"
    trainer:
      pretrained_model_name_or_path: "${pretrained_model_name_or_path}"
      attn_config:
        cls_labels: [0, 1, 2, 3] # for current task
        enable_cross_attn_lora: False
        enable_cross_attn_ip: False
        enable_self_attn_lora: False
        enable_self_attn_ref: False
        enable_multiview_attn: True
      resolution: "256"
      condition_image_resolution: "256"
      normal_cls_offset: 4
      condition_image_column_name: "conditioning_image"
      image_column_name: "image"
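In this multiview config, `cls_labels: [0, 1, 2, 3]` together with `normal_cls_offset: 4` and `init_num_cls_label: 8` suggests one class embedding per view for RGB outputs and a second block of four for normal outputs. That reading is an interpretation of the config values, not something this diff confirms; the sketch below only spells out the arithmetic, and `view_class_labels` is a hypothetical helper.

```python
from typing import List, Tuple


def view_class_labels(cls_labels: List[int], normal_cls_offset: int,
                      init_num_cls_label: int) -> Tuple[List[int], List[int]]:
    """Return (rgb_labels, normal_labels) under the offset interpretation above."""
    rgb_labels = list(cls_labels)
    normal_labels = [label + normal_cls_offset for label in cls_labels]
    # Every label must index into the class-embedding table created at init time.
    if max(rgb_labels + normal_labels) >= init_num_cls_label:
        raise ValueError("labels exceed init_num_cls_label")
    return rgb_labels, normal_labels


rgb, normal = view_class_labels([0, 1, 2, 3], normal_cls_offset=4, init_num_cls_label=8)
```

Under this reading, the 8 initialized labels are exactly enough for 4 RGB views plus 4 normal views.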

app/custom_models/mvimg_prediction.py

ADDED

@@ -0,0 +1,57 @@

import sys
import torch
import gradio as gr
from PIL import Image
import numpy as np
from rembg import remove
from app.utils import change_rgba_bg, rgba_to_rgb
from app.custom_models.utils import load_pipeline
from scripts.all_typing import *
from scripts.utils import session, simple_preprocess

training_config = "app/custom_models/image2mvimage-objaverseFrot-wonder3d.yaml"
checkpoint_path = "ckpt/img2mvimg/unet_state_dict.pth"
trainer, pipeline = load_pipeline(training_config, checkpoint_path)
pipeline.enable_model_cpu_offload()

def predict(img_list: List[Image.Image], guidance_scale=2., **kwargs):
    if isinstance(img_list, Image.Image):
        img_list = [img_list]
    img_list = [rgba_to_rgb(i) if i.mode == 'RGBA' else i for i in img_list]
    ret = []
    for img in img_list:
        images = trainer.pipeline_forward(
            pipeline=pipeline,
            image=img,
            guidance_scale=guidance_scale,
            **kwargs
        ).images
        ret.extend(images)
    return ret


def run_mvprediction(input_image: Image.Image, remove_bg=True, guidance_scale=1.5, seed=1145):
    if input_image.mode == 'RGB' or np.array(input_image)[..., -1].mean() == 255.:
        # still do remove using rembg, since simple_preprocess requires RGBA image
        print("RGB image not RGBA! still remove bg!")
        remove_bg = True

    if remove_bg:
        input_image = remove(input_image, session=session)

    # make front_pil RGBA with white bg
    input_image = change_rgba_bg(input_image, "white")
    single_image = simple_preprocess(input_image)

    generator = torch.Generator(device="cuda").manual_seed(int(seed)) if seed >= 0 else None

    rgb_pils = predict(
        single_image,
        generator=generator,
        guidance_scale=guidance_scale,
        width=256,
        height=256,
        num_inference_steps=30,
    )

    return rgb_pils, single_image
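`run_mvprediction` forces background removal whenever the input has no usable alpha channel: either the image mode is `RGB`, or every alpha value is 255 (for 8-bit alpha, a mean of exactly 255 implies that). The decision can be isolated as a pure function; the sketch below uses plain Python lists instead of PIL/NumPy so it runs anywhere, and `should_remove_background` is a hypothetical name.

```python
from typing import Iterable


def should_remove_background(mode: str, alpha: Iterable[int], requested: bool) -> bool:
    """Mirror the check at the top of run_mvprediction.

    mode:      PIL-style image mode string, e.g. "RGB" or "RGBA".
    alpha:     8-bit alpha values (ignored when mode is "RGB").
    requested: the caller's remove_bg flag.
    """
    if mode == "RGB":
        return True  # no alpha channel at all
    values = list(alpha)
    fully_opaque = len(values) > 0 and all(a == 255 for a in values)
    # An all-opaque alpha channel carries no foreground mask, so rembg must run.
    return requested or fully_opaque
```

Keeping the check separate from the model call makes it easy to unit-test the preprocessing rules without loading the pipeline or a GPU.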

app/custom_models/normal_prediction.py

ADDED

@@ -0,0 +1,26 @@

import sys
from PIL import Image
from app.utils import rgba_to_rgb, simple_remove
from app.custom_models.utils import load_pipeline
from scripts.utils import rotate_normals_torch
from scripts.all_typing import *

training_config = "app/custom_models/image2image-objaverseF-rgb2normal.yaml"
checkpoint_path = "ckpt/image2normal/unet_state_dict.pth"
trainer, pipeline = load_pipeline(training_config, checkpoint_path)
pipeline.enable_model_cpu_offload()

def predict_normals(image: List[Image.Image], guidance_scale=2., do_rotate=True, num_inference_steps=30, **kwargs):
    img_list = image if isinstance(image, list) else [image]
    img_list = [rgba_to_rgb(i) if i.mode == 'RGBA' else i for i in img_list]
    images = trainer.pipeline_forward(
        pipeline=pipeline,
        image=img_list,
        num_inference_steps=num_inference_steps,
        guidance_scale=guidance_scale,
        **kwargs
    ).images
    images = simple_remove(images)
    if do_rotate and len(images) > 1:
        images = rotate_normals_torch(images, return_types='pil')
    return images

app/custom_models/utils.py

ADDED

@@ -0,0 +1,75 @@

import torch
from typing import List
from dataclasses import dataclass
from app.utils import rgba_to_rgb
from custum_3d_diffusion.trainings.config_classes import ExprimentConfig, TrainerSubConfig
from custum_3d_diffusion import modules
from custum_3d_diffusion.custum_modules.unifield_processor import AttnConfig, ConfigurableUNet2DConditionModel
from custum_3d_diffusion.trainings.base import BasicTrainer
from custum_3d_diffusion.trainings.utils import load_config


@dataclass
class FakeAccelerator:
    device: torch.device = torch.device("cuda")


def init_trainers(cfg_path: str, weight_dtype: torch.dtype, extras: dict):
    accelerator = FakeAccelerator()
    cfg: ExprimentConfig = load_config(ExprimentConfig, cfg_path, extras)
    init_config: AttnConfig = load_config(AttnConfig, cfg.init_config)
    configurable_unet = ConfigurableUNet2DConditionModel(init_config, weight_dtype)
    configurable_unet.enable_xformers_memory_efficient_attention()
    trainer_cfgs: List[TrainerSubConfig] = [load_config(TrainerSubConfig, trainer) for trainer in cfg.trainers]
    trainers: List[BasicTrainer] = [modules.find(trainer.trainer_type)(accelerator, None, configurable_unet, trainer.trainer, weight_dtype, i) for i, trainer in enumerate(trainer_cfgs)]
    return trainers, configurable_unet

from app.utils import make_image_grid, split_image
def process_image(function, img, guidance_scale=2., merged_image=False, remove_bg=True):
    from rembg import remove
    if remove_bg:
        img = remove(img)
    img = rgba_to_rgb(img)
    if merged_image:
        img = split_image(img, rows=2)
    images = function(
        image=img,
        guidance_scale=guidance_scale,
    )
    if len(images) > 1:
        return make_image_grid(images, rows=2)
    else:
        return images[0]


def process_text(trainer, pipeline, img, guidance_scale=2.):
    pipeline.cfg.validation_prompts = [img]
    titles, images = trainer.batched_validation_forward(pipeline, guidance_scale=[guidance_scale])
    return images[0]


def load_pipeline(config_path, ckpt_path, pipeline_filter=lambda x: True, weight_dtype = torch.bfloat16):
    training_config = config_path
    load_from_checkpoint = ckpt_path
    extras = []
    device = "cuda"
    trainers, configurable_unet = init_trainers(training_config, weight_dtype, extras)
    shared_modules = dict()
    for trainer in trainers:
        shared_modules = trainer.init_shared_modules(shared_modules)

    if load_from_checkpoint is not None:
        state_dict = torch.load(load_from_checkpoint)
        configurable_unet.unet.load_state_dict(state_dict, strict=False)
    # Move the UNet to the device and cast it to weight_dtype
    configurable_unet.unet.to(device, dtype=weight_dtype)

    pipeline = None
    trainer_out = None
    for trainer in trainers:
        if pipeline_filter(trainer.cfg.trainer_name):
            pipeline = trainer.construct_pipeline(shared_modules, configurable_unet.unet)
            pipeline.set_progress_bar_config(disable=False)
            trainer_out = trainer
    pipeline = pipeline.to(device)
    return trainer_out, pipeline
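`init_trainers` builds each trainer through `modules.find(trainer.trainer_type)`, mapping the `trainer_type` strings from the YAML configs (`"image2image_trainer"`, `"image2mvimage_trainer"`) to classes. The `custum_3d_diffusion.modules` implementation is not part of this diff; the sketch below shows one common way such a name-to-class registry is written, using hypothetical `register`/`find` helpers and dummy trainer classes.

```python
from typing import Callable, Dict, Type

_REGISTRY: Dict[str, type] = {}


def register(name: str) -> Callable[[Type], Type]:
    """Class decorator that records cls under name (hypothetical helper)."""
    def decorator(cls: Type) -> Type:
        if name in _REGISTRY:
            raise KeyError(f"duplicate registration: {name}")
        _REGISTRY[name] = cls
        return cls
    return decorator


def find(name: str) -> type:
    """Look up a registered class, with a readable error for typos in configs."""
    try:
        return _REGISTRY[name]
    except KeyError:
        known = ", ".join(sorted(_REGISTRY))
        raise KeyError(f"unknown trainer_type {name!r}; known: {known}") from None


@register("image2image_trainer")
class DummyImage2ImageTrainer:
    pass


@register("image2mvimage_trainer")
class DummyImage2MVImageTrainer:
    pass
```

A registry like this lets a config file select the trainer class by name without the loader importing every trainer explicitly.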

app/examples/Groot.png

ADDED

app/examples/aaa.png

ADDED

app/examples/abma.png

ADDED

app/examples/akun.png

ADDED

app/examples/anya.png

ADDED

app/examples/bag.png

ADDED

app/examples/generated_1715761545_frame0.png

ADDED

app/examples/generated_1715762357_frame0.png

ADDED

app/examples/generated_1715763329_frame0.png

ADDED

app/examples/hatsune_miku.png

ADDED

app/examples/princess-large.png

ADDED

app/examples/shoe.png

ADDED

app/gradio_3dgen.py

ADDED

@@ -0,0 +1,71 @@

import os
import gradio as gr
from PIL import Image
from pytorch3d.structures import Meshes
from app.utils import clean_up
from app.custom_models.mvimg_prediction import run_mvprediction
from app.custom_models.normal_prediction import predict_normals
from scripts.refine_lr_to_sr import run_sr_fast
from scripts.utils import save_glb_and_video
from scripts.multiview_inference import geo_reconstruct

def generate3dv2(preview_img, input_processing, seed, render_video=True, do_refine=True, expansion_weight=0.1, init_type="std"):
    if preview_img is None:
        raise gr.Error("preview_img is none")
    if isinstance(preview_img, str):
        preview_img = Image.open(preview_img)

    if preview_img.size[0] <= 512:
        preview_img = run_sr_fast([preview_img])[0]
    rgb_pils, front_pil = run_mvprediction(preview_img, remove_bg=input_processing, seed=int(seed)) # 6s
    new_meshes = geo_reconstruct(rgb_pils, None, front_pil, do_refine=do_refine, predict_normal=True, expansion_weight=expansion_weight, init_type=init_type)
    vertices = new_meshes.verts_packed()
    vertices = vertices / 2 * 1.35
    vertices[..., [0, 2]] = - vertices[..., [0, 2]]
    new_meshes = Meshes(verts=[vertices], faces=new_meshes.faces_list(), textures=new_meshes.textures)

    ret_mesh, video = save_glb_and_video("/tmp/gradio/generated", new_meshes, with_timestamp=True, dist=3.5, fov_in_degrees=2 / 1.35, cam_type="ortho", export_video=render_video)
    return ret_mesh, video

#######################################
def create_ui(concurrency_id="wkl"):
    with gr.Row():
        with gr.Column(scale=2):
            input_image = gr.Image(type='pil', image_mode='RGBA', label='Frontview')

            example_folder = os.path.join(os.path.dirname(__file__), "./examples")
            example_fns = sorted([os.path.join(example_folder, example) for example in os.listdir(example_folder)])
            gr.Examples(
                examples=example_fns,
                inputs=[input_image],
                cache_examples=False,
                label='Examples (click one of the images below to start)',
                examples_per_page=12
            )


        with gr.Column(scale=3):
            # export mesh display
            output_mesh = gr.Model3D(value=None, label="Mesh Model", show_label=True, height=320)
            output_video = gr.Video(label="Preview", show_label=True, show_share_button=True, height=320, visible=False)

            input_processing = gr.Checkbox(
                value=True,
                label='Remove Background',
                visible=True,
            )
            do_refine = gr.Checkbox(value=True, label="Refine Multiview Details", visible=False)
            expansion_weight = gr.Slider(minimum=-1., maximum=1.0, value=0.1, step=0.1, label="Expansion Weight", visible=False)
            init_type = gr.Dropdown(choices=["std", "thin"], label="Mesh Initialization", value="std", visible=False)
            setable_seed = gr.Slider(-1, 1000000000, -1, step=1, visible=True, label="Seed")
            render_video = gr.Checkbox(value=False, visible=False, label="generate video")
            fullrunv2_btn = gr.Button('Generate 3D', interactive=True)

    fullrunv2_btn.click(
        fn=generate3dv2,
        inputs=[input_image, input_processing, setable_seed, render_video, do_refine, expansion_weight, init_type],
        outputs=[output_mesh, output_video],
        concurrency_id=concurrency_id,
        api_name="generate3dv2",
    ).success(clean_up, api_name=False)
    return input_image

app/gradio_3dgen_steps.py

ADDED

@@ -0,0 +1,87 @@

import gradio as gr
from PIL import Image

from app.custom_models.mvimg_prediction import run_mvprediction
from app.utils import make_image_grid, split_image
from scripts.utils import save_glb_and_video

def concept_to_multiview(preview_img, input_processing, seed, guidance=1.):
    seed = int(seed)
    if preview_img is None:
        raise gr.Error("preview_img is None.")
    if isinstance(preview_img, str):
        preview_img = Image.open(preview_img)

    rgb_pils, front_pil = run_mvprediction(preview_img, remove_bg=input_processing, seed=seed, guidance_scale=guidance)
    rgb_pil = make_image_grid(rgb_pils, rows=2)
    return rgb_pil, front_pil

def concept_to_multiview_ui(concurrency_id="wkl"):
    with gr.Row():
        with gr.Column(scale=2):
            preview_img = gr.Image(type='pil', image_mode='RGBA', label='Frontview')
            input_processing = gr.Checkbox(
                value=True,
                label='Remove Background',
            )
            seed = gr.Slider(minimum=-1, maximum=1000000000, value=-1, step=1.0, label="seed")
            guidance = gr.Slider(minimum=1.0, maximum=5.0, value=1.0, label="Guidance Scale", step=0.5)
            run_btn = gr.Button('Generate Multiview', interactive=True)
        with gr.Column(scale=3):
            # multiview output display
            output_rgb = gr.Image(type='pil', label="RGB", show_label=True)
            output_front = gr.Image(type='pil', image_mode='RGBA', label="Frontview", show_label=True)
    run_btn.click(
        fn=concept_to_multiview,
        inputs=[preview_img, input_processing, seed, guidance],
        outputs=[output_rgb, output_front],
        concurrency_id=concurrency_id,
        api_name=False,
    )
    return output_rgb, output_front

from app.custom_models.normal_prediction import predict_normals
from scripts.multiview_inference import geo_reconstruct
def multiview_to_mesh_v2(rgb_pil, normal_pil, front_pil, do_refine=False, expansion_weight=0.1, init_type="std"):
    rgb_pils = split_image(rgb_pil, rows=2)
    if normal_pil is not None:
        normal_pil = split_image(normal_pil, rows=2)
    if front_pil is None:
        front_pil = rgb_pils[0]
    new_meshes = geo_reconstruct(rgb_pils, normal_pil, front_pil, do_refine=do_refine, predict_normal=normal_pil is None, expansion_weight=expansion_weight, init_type=init_type)
    ret_mesh, video = save_glb_and_video("/tmp/gradio/generated", new_meshes, with_timestamp=True, dist=3.5, fov_in_degrees=2 / 1.35, cam_type="ortho", export_video=False)
    return ret_mesh

def new_multiview_to_mesh_ui(concurrency_id="wkl"):
    with gr.Row():
        with gr.Column(scale=2):
            rgb_pil = gr.Image(type='pil', image_mode='RGB', label='RGB')
            front_pil = gr.Image(type='pil', image_mode='RGBA', label='Frontview (Optional)')
            normal_pil = gr.Image(type='pil', image_mode='RGBA', label='Normal (Optional)')
            do_refine = gr.Checkbox(
                value=False,
                label='Refine rgb',
                visible=False,
            )
            expansion_weight = gr.Slider(minimum=-1.0, maximum=1.0, value=0.1, step=0.1, label="Expansion Weight", visible=False)
            init_type = gr.Dropdown(choices=["std", "thin"], label="Mesh initialization", value="std", visible=False)
            run_btn = gr.Button('Generate 3D', interactive=True)
        with gr.Column(scale=3):
            # export mesh display
            output_mesh = gr.Model3D(value=None, label="mesh model", show_label=True)
    run_btn.click(
        fn=multiview_to_mesh_v2,
        inputs=[rgb_pil, normal_pil, front_pil, do_refine, expansion_weight, init_type],
        outputs=[output_mesh],
        concurrency_id=concurrency_id,
        api_name="multiview_to_mesh",
    )
    return rgb_pil, front_pil, output_mesh


#######################################
def create_step_ui(concurrency_id="wkl"):
    with gr.Tab(label="3D:concept_to_multiview"):
        concept_to_multiview_ui(concurrency_id)
    with gr.Tab(label="3D:new_multiview_to_mesh"):
        new_multiview_to_mesh_ui(concurrency_id)
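The step functions above move between one 2-row grid image and a list of views: `concept_to_multiview` packs the predicted views with `make_image_grid(rgb_pils, rows=2)`, and `multiview_to_mesh_v2` unpacks an uploaded grid with `split_image(rgb_pil, rows=2)`. The sketch below is a minimal, pixel-free model of that box arithmetic, assuming square tiles as `split_image` does. `grid_shape`, `paste_offsets` and `crop_boxes` are hypothetical helpers, not part of the repository.

```python
# Hypothetical helpers that mirror the position arithmetic of make_image_grid
# and split_image in app/utils.py. They compute boxes only; no pixels involved.
import math


def grid_shape(n_images, rows=None, cols=None):
    """Infer (rows, cols) the way make_image_grid does (ceil when uneven)."""
    if rows is None and cols is None:
        return 1, n_images
    if rows is None:
        rows = math.ceil(n_images / cols)
    if cols is None:
        cols = math.ceil(n_images / rows)
    return rows, cols


def paste_offsets(n_images, w, h, rows=None, cols=None):
    """Top-left corner of each tile; the grid is padded up to rows * cols tiles."""
    rows, cols = grid_shape(n_images, rows, cols)
    return [((i % cols) * w, (i // cols) * h) for i in range(rows * cols)]


def crop_boxes(grid_w, grid_h, rows):
    """Crop boxes split_image would use when only `rows` is given (square tiles)."""
    size = grid_h // rows
    cols = grid_w // size
    assert cols * size == grid_w, "grid width must be a whole number of tiles"
    return [(j * size, i * size, (j + 1) * size, (i + 1) * size)
            for i in range(rows) for j in range(cols)]


if __name__ == "__main__":
    offsets = paste_offsets(4, 256, 256, rows=2)   # four 256x256 views
    boxes = crop_boxes(512, 512, rows=2)           # the resulting 512x512 grid
    # Each crop box starts exactly where the matching tile was pasted.
    print(all(box[:2] == off for box, off in zip(boxes, offsets)))
```

Because the tiles are pasted row-major and cropped row-major, the order of views survives the round trip, which is what lets `multiview_to_mesh_v2` treat `rgb_pils[0]` as the front view.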

app/gradio_local.py

ADDED

@@ -0,0 +1,76 @@

if __name__ == "__main__":
    import os
    import sys
    sys.path.append(os.curdir)
    if 'CUDA_VISIBLE_DEVICES' not in os.environ:
        os.environ['CUDA_VISIBLE_DEVICES'] = '0'
    os.environ['TRANSFORMERS_OFFLINE']='0'
    os.environ['DIFFUSERS_OFFLINE']='0'
    os.environ['HF_HUB_OFFLINE']='0'
    os.environ['GRADIO_ANALYTICS_ENABLED']='False'
    os.environ['HF_ENDPOINT']='https://hf-mirror.com'
    import torch
    torch.set_float32_matmul_precision('medium')
    torch.backends.cuda.matmul.allow_tf32 = True
    torch.set_grad_enabled(False)

import gradio as gr
import argparse

from app.gradio_3dgen import create_ui as create_3d_ui
# from app.gradio_3dgen_steps import create_step_ui
from app.all_models import model_zoo


_TITLE = '''Unique3D: High-Quality and Efficient 3D Mesh Generation from a Single Image'''
_DESCRIPTION = '''
[Project page](https://wukailu.github.io/Unique3D/)

* High-fidelity and diverse textured meshes generated by Unique3D from single-view images.

* The demo is still under construction, and more features are expected to be implemented soon.
'''

def launch(
    port,
    listen=False,
    share=False,
    gradio_root="",
):
    model_zoo.init_models()

    with gr.Blocks(
        title=_TITLE,
        theme=gr.themes.Monochrome(),
    ) as demo:
        with gr.Row():
            with gr.Column(scale=1):
                gr.Markdown('# ' + _TITLE)
        gr.Markdown(_DESCRIPTION)
        create_3d_ui("wkl")

    launch_args = {}
    if listen:
        launch_args["server_name"] = "0.0.0.0"

    demo.queue(default_concurrency_limit=1).launch(
        server_port=None if port == 0 else port,
        share=share,
        root_path=gradio_root if gradio_root != "" else None, # "/myapp"
        **launch_args,
    )

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    args, extra = parser.parse_known_args()
    parser.add_argument("--listen", action="store_true")
    parser.add_argument("--port", type=int, default=0)
    parser.add_argument("--share", action="store_true")
    parser.add_argument("--gradio_root", default="")
    args = parser.parse_args()
    launch(
        args.port,
        listen=args.listen,
        share=args.share,
        gradio_root=args.gradio_root,
    )
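The flags parsed at the bottom of `gradio_local.py` map onto `demo.launch()` keyword arguments through a few sentinel values: `--port 0` becomes `server_port=None`, an empty `--gradio_root` becomes `root_path=None`, and `--listen` adds `server_name="0.0.0.0"`. Below is a minimal sketch of that mapping that runs without Gradio, assuming the same four flags; `parse_cli` and `build_launch_kwargs` are hypothetical names. The early `parser.parse_known_args()` call in the original does not affect the final `args`, so it is left out here.

```python
# Hypothetical, Gradio-free sketch of how gradio_local.py turns CLI flags
# into keyword arguments for demo.launch().
import argparse


def parse_cli(argv=None):
    parser = argparse.ArgumentParser()
    parser.add_argument("--listen", action="store_true")
    parser.add_argument("--port", type=int, default=0)
    parser.add_argument("--share", action="store_true")
    parser.add_argument("--gradio_root", default="")
    return parser.parse_args(argv)


def build_launch_kwargs(args):
    kwargs = {
        # 0 is a sentinel: pass None so Gradio falls back to its own port choice.
        "server_port": None if args.port == 0 else args.port,
        "share": args.share,
        # An empty string is a sentinel for "no root path prefix".
        "root_path": args.gradio_root if args.gradio_root != "" else None,
    }
    if args.listen:
        # Bind on all network interfaces instead of Gradio's localhost default.
        kwargs["server_name"] = "0.0.0.0"
    return kwargs


if __name__ == "__main__":
    print(build_launch_kwargs(parse_cli(["--listen", "--port", "7860"])))
```

For example, `python app/gradio_local.py --listen --port 7860` would serve the demo on port 7860 on all interfaces, while running it with no flags keeps Gradio's defaults.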

app/utils.py

ADDED

@@ -0,0 +1,112 @@

import torch
import numpy as np
from PIL import Image
import gc

from scripts.refine_lr_to_sr import run_sr_fast

GRADIO_CACHE = "/tmp/gradio/"

def clean_up():
    torch.cuda.empty_cache()
    gc.collect()

def remove_color(arr):
    if arr.shape[-1] == 4:
        arr = arr[..., :3]
    # L1 distance of every pixel to the top-left pixel, taken as the background color
    base = arr[0, 0]
    diffs = np.abs(arr.astype(np.int32) - base.astype(np.int32)).sum(axis=-1)
    alpha = (diffs <= 80)

    arr[alpha] = 255
    alpha = ~alpha
    arr = np.concatenate([arr, alpha[..., None].astype(np.int32) * 255], axis=-1)
    return arr

def simple_remove(imgs, run_sr=True):
    """Only works for normal maps (assumes a uniform background matching the top-left pixel)."""
    if not isinstance(imgs, list):
        imgs = [imgs]
        single_input = True
    else:
        single_input = False
    if run_sr:
        imgs = run_sr_fast(imgs)
    rets = []
    for img in imgs:
        arr = np.array(img)
        arr = remove_color(arr)
        rets.append(Image.fromarray(arr.astype(np.uint8)))
    if single_input:
        return rets[0]
    return rets

def rgba_to_rgb(rgba: Image.Image, bkgd="WHITE"):
    new_image = Image.new("RGBA", rgba.size, bkgd)
    new_image.paste(rgba, (0, 0), rgba)
    new_image = new_image.convert('RGB')
    return new_image

def change_rgba_bg(rgba: Image.Image, bkgd="WHITE"):
    rgb_white = rgba_to_rgb(rgba, bkgd)
    new_rgba = Image.fromarray(np.concatenate([np.array(rgb_white), np.array(rgba)[:, :, 3:4]], axis=-1))
    return new_rgba

def split_image(image, rows=None, cols=None):
    """
    Inverse of make_image_grid: split a grid image into its tiles.
    """
    # each sub-image is assumed to be square
    if rows is None and cols is None:
        # image.size [W, H]
        rows = 1
        cols = image.size[0] // image.size[1]
        assert cols * image.size[1] == image.size[0]
        subimg_size = image.size[1]
    elif rows is None:
        subimg_size = image.size[0] // cols
        rows = image.size[1] // subimg_size
        assert rows * subimg_size == image.size[1]
    elif cols is None:
        subimg_size = image.size[1] // rows
        cols = image.size[0] // subimg_size
        assert cols * subimg_size == image.size[0]
    else:
        subimg_size = image.size[1] // rows
        assert cols * subimg_size == image.size[0]
    subimgs = []
    for i in range(rows):
        for j in range(cols):
            subimg = image.crop((j*subimg_size, i*subimg_size, (j+1)*subimg_size, (i+1)*subimg_size))
            subimgs.append(subimg)
    return subimgs

def make_image_grid(images, rows=None, cols=None, resize=None):
    if rows is None and cols is None:
        rows = 1
        cols = len(images)
    if rows is None:
        rows = len(images) // cols
        if len(images) % cols != 0:
            rows += 1
    if cols is None:
        cols = len(images) // rows
        if len(images) % rows != 0:
            cols += 1
    total_imgs = rows * cols
    if total_imgs > len(images):
        # pad with blank tiles; build a new list so the caller's list is not mutated
        images = list(images) + [Image.new(images[0].mode, images[0].size) for _ in range(total_imgs - len(images))]

    if resize is not None:
        images = [img.resize((resize, resize)) for img in images]

    w, h = images[0].size
    grid = Image.new(images[0].mode, size=(cols * w, rows * h))

    for i, img in enumerate(images):
        grid.paste(img, box=(i % cols * w, i // cols * h))
    return grid
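`remove_color` (used by `simple_remove`) treats the top-left pixel as the background color and keys out every pixel whose summed per-channel absolute difference (L1 distance) from it is at most 80. Those pixels are painted white with alpha 0; all others keep their color with alpha 255. The sketch below restates that rule in pure Python on nested lists of RGB or RGBA tuples instead of NumPy arrays. `remove_color_sketch` is a hypothetical name, and like the original it assumes a uniform background, consistent with the docstring's note that `simple_remove` only works for normal maps.

```python
# Hypothetical pure-Python restatement of the keying rule in remove_color();
# the original operates on NumPy arrays, this uses nested lists of tuples.

THRESHOLD = 80  # same L1 threshold as remove_color()


def remove_color_sketch(pixels, threshold=THRESHOLD):
    """pixels: rows of (r, g, b) or (r, g, b, a) tuples -> rows of (r, g, b, a)."""
    br, bg, bb = pixels[0][0][:3]  # top-left pixel is taken as the background
    out = []
    for row in pixels:
        new_row = []
        for px in row:
            r, g, b = px[:3]  # any input alpha is dropped, as in the original
            dist = abs(r - br) + abs(g - bg) + abs(b - bb)
            if dist <= threshold:
                new_row.append((255, 255, 255, 0))  # background: white, transparent
            else:
                new_row.append((r, g, b, 255))      # foreground: kept, opaque
        out.append(new_row)
    return out


if __name__ == "__main__":
    img = [[(10, 10, 10), (200, 30, 30)]]
    print(remove_color_sketch(img))
```

The single global threshold is what makes this cheap but fragile: a foreground pixel whose color is within 80 of the background is also keyed out, which is acceptable for normal maps rendered on a flat background but not for general photos.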

assets/teaser.jpg

ADDED

ckpt/controlnet-tile/config.json

ADDED

@@ -0,0 +1,52 @@
