Spaces:

Running

on

Zero

Commit · ef6b1b7 · 1 Parent(s): ccf317f

Remove unused image files and update bot function names for clarity

- app.py +67 -59

- conversation.py +2 -2

- gallery/1-2.PNG +0 -3

- gallery/10.PNG +0 -0

- gallery/14.jfif +0 -0

- gallery/15.PNG +0 -0

- gallery/2.PNG +0 -3

- gallery/astro_on_unicorn.png +0 -3

- gallery/cheetah.png +0 -3

- gallery/child_1.jpg +0 -0

- gallery/child_2.jpg +0 -0

- gallery/child_3.jpg +0 -0

- gallery/farmer_sunset.png +0 -3

- gallery/friends.png +0 -3

- gallery/prod_1.jpeg +0 -0

- gallery/prod_11.jpg +0 -0

- gallery/prod_12.png +0 -0

- gallery/prod_17.jpg +0 -0

- gallery/prod_4.png +0 -0

- gallery/prod_9.jpg +0 -0

- gallery/prod_en_17.png +0 -3

- gallery/puppy.png +0 -3

- gallery/viking.png +0 -3

- gallery/water.jpg +0 -0

- gallery/wizard.png +0 -3

- samples/1.jpg +0 -0

- samples/2.png +0 -0

- samples/3.jpeg +0 -0

- samples/4.jpeg +0 -0

app.py

CHANGED

|

@@ -216,7 +216,7 @@ def predict(message,

|

|

| 216 |

response, conv_history = model.chat(tokenizer, pixel_values, question, generation_config, history=history, return_history=True)

|

| 217 |

return response, conv_history

|

| 218 |

|

| 219 |

-

def

|

| 220 |

state,

|

| 221 |

temperature,

|

| 222 |

top_p,

|

|

@@ -226,7 +226,7 @@ def http_bot(

|

|

| 226 |

request: gr.Request,

|

| 227 |

):

|

| 228 |

|

| 229 |

-

logger.info(f"

|

| 230 |

start_tstamp = time.time()

|

| 231 |

if hasattr(state, "skip_next") and state.skip_next:

|

| 232 |

# This generate call is skipped due to invalid inputs

|

|

@@ -276,7 +276,7 @@ def http_bot(

|

|

| 276 |

top_p,

|

| 277 |

repetition_penalty)

|

| 278 |

|

| 279 |

-

|

| 280 |

buffer = ""

|

| 281 |

for new_text in response:

|

| 282 |

buffer += new_text

|

|

@@ -289,7 +289,7 @@ def http_bot(

|

|

| 289 |

) + (disable_btn,) * 5

|

| 290 |

|

| 291 |

except Exception as e:

|

| 292 |

-

logger.error(f"Error in

|

| 293 |

state.update_message(Conversation.ASSISTANT, server_error_msg, None)

|

| 294 |

yield (

|

| 295 |

state,

|

|

@@ -333,11 +333,11 @@ def http_bot(

|

|

| 333 |

# <h1 style="font-size: 28px; font-weight: bold;">Expanding Performance Boundaries of Open-Source Multimodal Models with Model, Data, and Test-Time Scaling</h1>

|

| 334 |

title_html = """

|

| 335 |

<div style="text-align: center;">

|

| 336 |

-

<img src="https://lh3.googleusercontent.com/pw/AP1GczMmW-aFQ4dNaR_LCAllh4UZLLx9fTZ1ITHeGVMWx-1bwlIWz4VsWJSGb3_9C7CQfvboqJH41y2Sbc5ToC9ZmKeV4-buf_DEevIMU0HtaLWgHAPOqBiIbG6LaE8CvDqniLZzvB9UX8TR_-YgvYzPFt2z=w1472-h832-s-no-gm?authuser=0" style="height:

|

| 337 |

-

<p>🔥Vintern-1B-v3_5🔥</p>

|

| 338 |

-

<p>An Efficient Multimodal Large Language Model for Vietnamese</p>

|

| 339 |

-

<a href="https://huggingface.co/papers/2408.12480">[📖 Vintern Paper]</a>

|

| 340 |

-

<a href="https://huggingface.co/5CD-AI">[🤗 Huggingface]</a>

|

| 341 |

</div>

|

| 342 |

"""

|

| 343 |

|

|

@@ -375,45 +375,45 @@ block_css = """

|

|

| 375 |

}

|

| 376 |

"""

|

| 377 |

|

| 378-416 |

-

|

| 417 |

|

| 418 |

|

| 419 |

def build_demo():

|

|

@@ -426,14 +426,14 @@ def build_demo():

|

|

| 426 |

|

| 427 |

with gr.Blocks(

|

| 428 |

title="Vintern-1B-v3_5-Demo",

|

| 429 |

-

theme=

|

| 430 |

css=block_css,

|

| 431 |

) as demo:

|

| 432 |

state = gr.State()

|

| 433 |

|

| 434 |

with gr.Row():

|

| 435 |

with gr.Column(scale=2):

|

| 436 |

-

# gr.Image('./gallery/logo-47b364d3.jpg')

|

| 437 |

gr.HTML(title_html)

|

| 438 |

|

| 439 |

with gr.Accordion("Settings", open=False) as setting_row:

|

|

@@ -487,25 +487,33 @@ def build_demo():

|

|

| 487 |

[

|

| 488 |

{

|

| 489 |

"files": [

|

| 490 |

-

"

|

| 491 |

],

|

| 492 |

-

"text": "

|

| 493 |

}

|

| 494 |

],

|

| 495 |

[

|

| 496 |

{

|

| 497 |

"files": [

|

| 498 |

-

"

|

| 499 |

],

|

| 500 |

-

"text": "

|

| 501 |

}

|

| 502 |

],

|

| 503 |

[

|

| 504 |

{

|

| 505 |

"files": [

|

| 506 |

-

"

|

| 507 |

],

|

| 508 |

-

"text": "

|

| 509 |

}

|

| 510 |

],

|

| 511 |

],

|

|

@@ -570,7 +578,7 @@ def build_demo():

|

|

| 570 |

[state, system_prompt],

|

| 571 |

[state, chatbot, textbox] + btn_list,

|

| 572 |

).then(

|

| 573 |

-

|

| 574 |

[

|

| 575 |

state,

|

| 576 |

temperature,

|

|

@@ -588,7 +596,7 @@ def build_demo():

|

|

| 588 |

[state, textbox, system_prompt],

|

| 589 |

[state, chatbot, textbox] + btn_list,

|

| 590 |

).then(

|

| 591 |

-

|

| 592 |

[

|

| 593 |

state,

|

| 594 |

temperature,

|

|

@@ -604,7 +612,7 @@ def build_demo():

|

|

| 604 |

[state, textbox, system_prompt],

|

| 605 |

[state, chatbot, textbox] + btn_list,

|

| 606 |

).then(

|

| 607 |

-

|

| 608 |

[

|

| 609 |

state,

|

| 610 |

temperature,

|

|

|

|

| 216 |

response, conv_history = model.chat(tokenizer, pixel_values, question, generation_config, history=history, return_history=True)

|

| 217 |

return response, conv_history

|

| 218 |

|

| 219 |

+

def ai_bot(

|

| 220 |

state,

|

| 221 |

temperature,

|

| 222 |

top_p,

|

|

|

|

| 226 |

request: gr.Request,

|

| 227 |

):

|

| 228 |

|

| 229 |

+

logger.info(f"ai_bot. ip: {request.client.host}")

|

| 230 |

start_tstamp = time.time()

|

| 231 |

if hasattr(state, "skip_next") and state.skip_next:

|

| 232 |

# This generate call is skipped due to invalid inputs

|

|

|

|

| 276 |

top_p,

|

| 277 |

repetition_penalty)

|

| 278 |

|

| 279 |

+

response = "This is a test response"

|

| 280 |

buffer = ""

|

| 281 |

for new_text in response:

|

| 282 |

buffer += new_text

|

|

|

|

| 289 |

) + (disable_btn,) * 5

|

| 290 |

|

| 291 |

except Exception as e:

|

| 292 |

+

logger.error(f"Error in ai_bot: {e} \n{traceback.format_exc()}")

|

| 293 |

state.update_message(Conversation.ASSISTANT, server_error_msg, None)

|

| 294 |

yield (

|

| 295 |

state,

|

|

|

|

| 333 |

# <h1 style="font-size: 28px; font-weight: bold;">Expanding Performance Boundaries of Open-Source Multimodal Models with Model, Data, and Test-Time Scaling</h1>

|

| 334 |

title_html = """

|

| 335 |

<div style="text-align: center;">

|

| 336 |

+

<img src="https://lh3.googleusercontent.com/pw/AP1GczMmW-aFQ4dNaR_LCAllh4UZLLx9fTZ1ITHeGVMWx-1bwlIWz4VsWJSGb3_9C7CQfvboqJH41y2Sbc5ToC9ZmKeV4-buf_DEevIMU0HtaLWgHAPOqBiIbG6LaE8CvDqniLZzvB9UX8TR_-YgvYzPFt2z=w1472-h832-s-no-gm?authuser=0" style="height: 90px; width: 95%;">

|

| 337 |

+

<p style="font-size: 20px;">🔥Vintern-1B-v3_5🔥</p>

|

| 338 |

+

<p style="font-size: 14px;">An Efficient Multimodal Large Language Model for Vietnamese</p>

|

| 339 |

+

<a href="https://huggingface.co/papers/2408.12480" style="font-size: 13px;">[📖 Vintern Paper]</a>

|

| 340 |

+

<a href="https://huggingface.co/5CD-AI" style="font-size: 13px;">[🤗 Huggingface]</a>

|

| 341 |

</div>

|

| 342 |

"""

|

| 343 |

|

|

|

|

| 375 |

}

|

| 376 |

"""

|

| 377 |

|

| 378 |

+

js = """

|

| 379 |

+

function createWaveAnimation() {

|

| 380 |

+

const text = document.getElementById('text');

|

| 381 |

+

var i = 0;

|

| 382 |

+

setInterval(function() {

|

| 383 |

+

const colors = [

|

| 384 |

+

'red, orange, yellow, green, blue, indigo, violet, purple',

|

| 385 |

+

'orange, yellow, green, blue, indigo, violet, purple, red',

|

| 386 |

+

'yellow, green, blue, indigo, violet, purple, red, orange',

|

| 387 |

+

'green, blue, indigo, violet, purple, red, orange, yellow',

|

| 388 |

+

'blue, indigo, violet, purple, red, orange, yellow, green',

|

| 389 |

+

'indigo, violet, purple, red, orange, yellow, green, blue',

|

| 390 |

+

'violet, purple, red, orange, yellow, green, blue, indigo',

|

| 391 |

+

'purple, red, orange, yellow, green, blue, indigo, violet',

|

| 392 |

+

];

|

| 393 |

+

const angle = 45;

|

| 394 |

+

const colorIndex = i % colors.length;

|

| 395 |

+

text.style.background = `linear-gradient(${angle}deg, ${colors[colorIndex]})`;

|

| 396 |

+

text.style.webkitBackgroundClip = 'text';

|

| 397 |

+

text.style.backgroundClip = 'text';

|

| 398 |

+

text.style.color = 'transparent';

|

| 399 |

+

text.style.fontSize = '28px';

|

| 400 |

+

text.style.width = 'auto';

|

| 401 |

+

text.textContent = 'Vintern-1B';

|

| 402 |

+

text.style.fontWeight = 'bold';

|

| 403 |

+

i += 1;

|

| 404 |

+

}, 200);

|

| 405 |

+

const params = new URLSearchParams(window.location.search);

|

| 406 |

+

url_params = Object.fromEntries(params);

|

| 407 |

+

// console.log(url_params);

|

| 408 |

+

// console.log('hello world...');

|

| 409 |

+

// console.log(window.location.search);

|

| 410 |

+

// console.log('hello world...');

|

| 411 |

+

// alert(window.location.search)

|

| 412 |

+

// alert(url_params);

|

| 413 |

+

return url_params;

|

| 414 |

+

}

|

| 415 |

+

|

| 416 |

+

"""

|

| 417 |

|

| 418 |

|

| 419 |

def build_demo():

|

|

|

|

| 426 |

|

| 427 |

with gr.Blocks(

|

| 428 |

title="Vintern-1B-v3_5-Demo",

|

| 429 |

+

theme='SebastianBravo/simci_css',

|

| 430 |

css=block_css,

|

| 431 |

+

js=js,

|

| 432 |

) as demo:

|

| 433 |

state = gr.State()

|

| 434 |

|

| 435 |

with gr.Row():

|

| 436 |

with gr.Column(scale=2):

|

| 437 |

gr.HTML(title_html)

|

| 438 |

|

| 439 |

with gr.Accordion("Settings", open=False) as setting_row:

|

|

|

|

| 487 |

[

|

| 488 |

{

|

| 489 |

"files": [

|

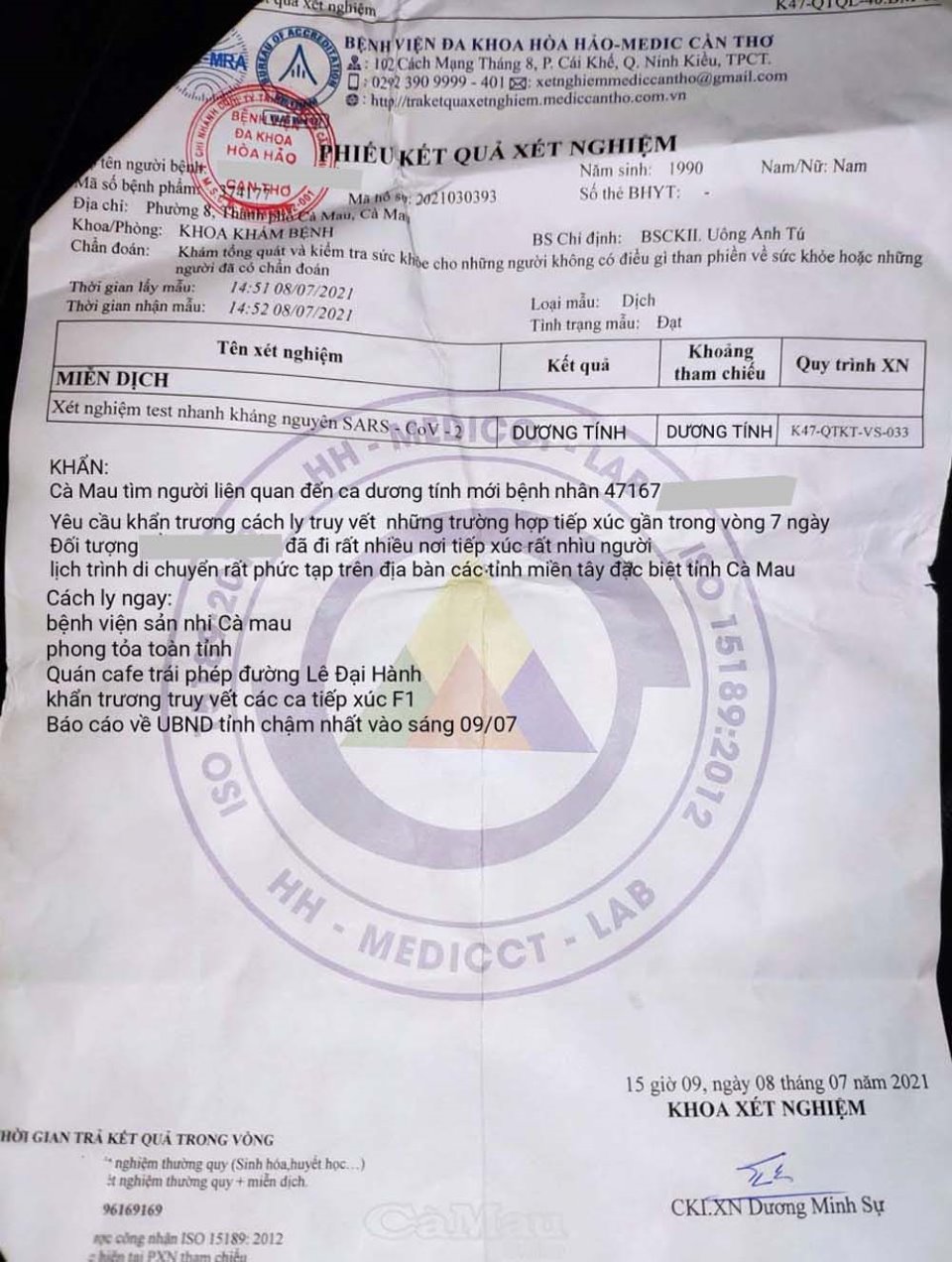

| 490 |

+

"samples/1.jpg",

|

| 491 |

+

],

|

| 492 |

+

"text": "Hãy trích xuất thông tin từ hình ảnh này và trả về kết quả dạng markdown.",

|

| 493 |

+

}

|

| 494 |

+

],

|

| 495 |

+

[

|

| 496 |

+

{

|

| 497 |

+

"files": [

|

| 498 |

+

"samples/2.png",

|

| 499 |

],

|

| 500 |

+

"text": "Bạn là một nhà sáng tạo nội dung tài năng. Hãy viết một kịch bản quảng cáo cho sản phẩm này.",

|

| 501 |

}

|

| 502 |

],

|

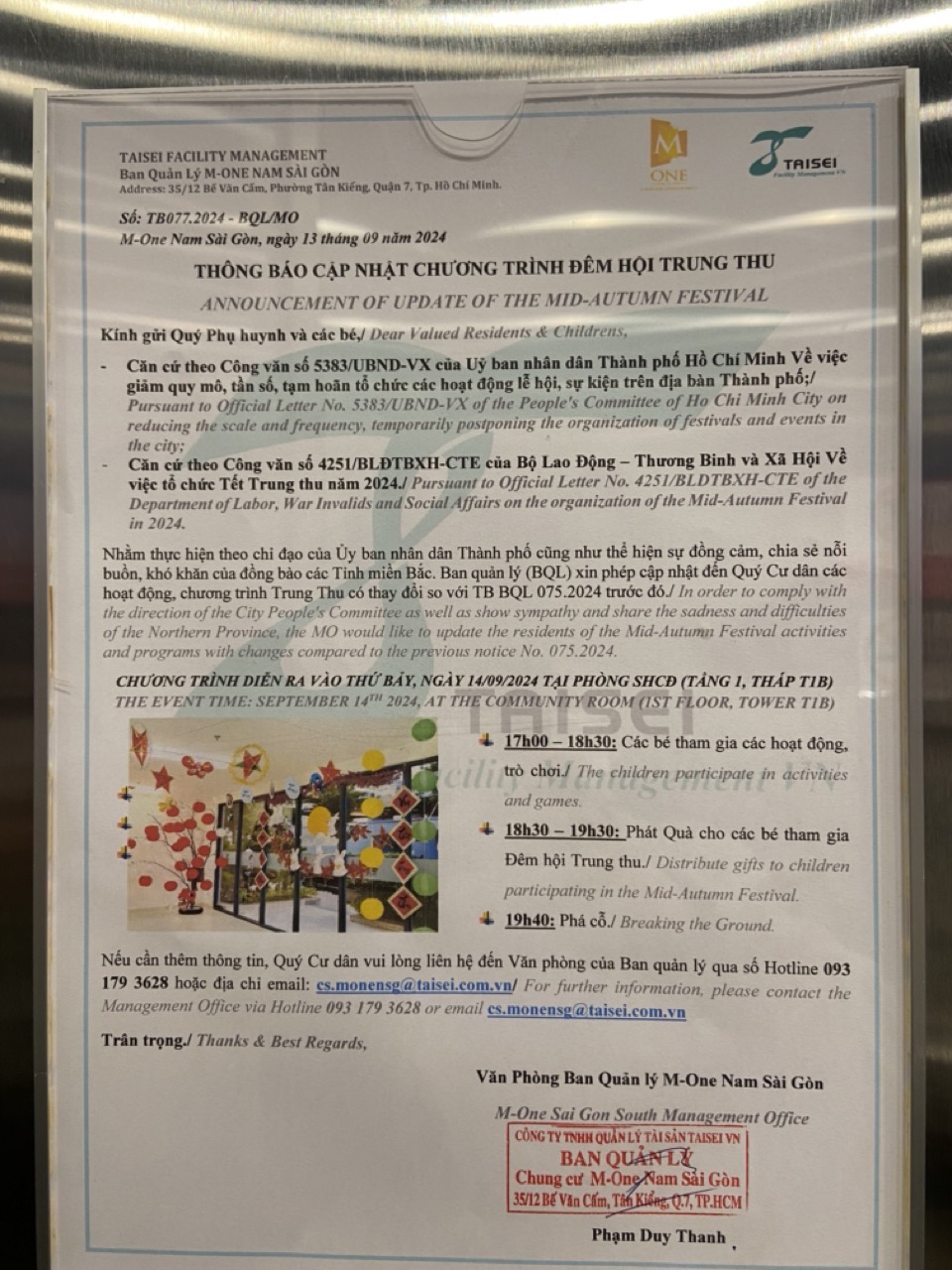

| 503 |

[

|

| 504 |

{

|

| 505 |

"files": [

|

| 506 |

+

"samples/3.jpeg",

|

| 507 |

],

|

| 508 |

+

"text": "Hãy viết lại một email cho các chủ hộ về nội dung của bảng thông báo.",

|

| 509 |

}

|

| 510 |

],

|

| 511 |

[

|

| 512 |

{

|

| 513 |

"files": [

|

| 514 |

+

"samples/4.jpeg",

|

| 515 |

],

|

| 516 |

+

"text": "Hãy viết trích xuất nội dung của hoá đơn dạng JSON.",

|

| 517 |

}

|

| 518 |

],

|

| 519 |

],

|

|

|

|

| 578 |

[state, system_prompt],

|

| 579 |

[state, chatbot, textbox] + btn_list,

|

| 580 |

).then(

|

| 581 |

+

ai_bot,

|

| 582 |

[

|

| 583 |

state,

|

| 584 |

temperature,

|

|

|

|

| 596 |

[state, textbox, system_prompt],

|

| 597 |

[state, chatbot, textbox] + btn_list,

|

| 598 |

).then(

|

| 599 |

+

ai_bot,

|

| 600 |

[

|

| 601 |

state,

|

| 602 |

temperature,

|

|

|

|

| 612 |

[state, textbox, system_prompt],

|

| 613 |

[state, chatbot, textbox] + btn_list,

|

| 614 |

).then(

|

| 615 |

+

ai_bot,

|

| 616 |

[

|

| 617 |

state,

|

| 618 |

temperature,

|
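Note on the streaming loop in `ai_bot`: the new line 279 assigns a plain string to `response`, and the loop that follows iterates over it. A minimal sketch of what that implies, assuming the loop only accumulates chunks into `buffer` as shown in the hunk. The helper name `stream_buffer` is hypothetical and does not appear in `app.py`.

```python
from typing import Iterable, Iterator


def stream_buffer(response: Iterable[str]) -> Iterator[str]:
    """Yield the accumulated text after each chunk, mirroring the loop in ai_bot."""
    buffer = ""
    for new_text in response:
        buffer += new_text
        yield buffer


# A plain string is iterated one character at a time.
placeholder_steps = list(stream_buffer("This is a test response"))

# An iterable of text chunks (as a streamer would produce) yields one step per chunk.
chunked_steps = list(stream_buffer(["This is ", "a test ", "response"]))
```

With the placeholder string, the UI would likely receive one update per character rather than one per generated chunk, which may or may not be the intended behavior for this commit.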

conversation.py

CHANGED

|

@@ -47,8 +47,8 @@ class Conversation:

|

|

| 47 |

Conversation.ASSISTANT,

|

| 48 |

]

|

| 49 |

)

|

| 50 |

-

mandatory_system_message = "

|

| 51 |

-

system_message: str = "

|

| 52 |

messages: List[Dict[str, Any]] = field(default_factory=lambda: [])

|

| 53 |

max_image_limit: int = 4

|

| 54 |

skip_next: bool = False

|

|

|

|

| 47 |

Conversation.ASSISTANT,

|

| 48 |

]

|

| 49 |

)

|

| 50 |

+

mandatory_system_message = ""

|

| 51 |

+

system_message: str = ""

|

| 52 |

messages: List[Dict[str, Any]] = field(default_factory=lambda: [])

|

| 53 |

max_image_limit: int = 4

|

| 54 |

skip_next: bool = False

|
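Note on the `Conversation` defaults: the use of `field(default_factory=...)` suggests the class is a dataclass, although the decorator is outside the hunk. Under that assumption, a rough sketch of how the changed defaults behave. `ConversationSketch` is a hypothetical stand-in, not the real class.

```python
from dataclasses import dataclass, field, fields
from typing import Any, Dict, List


@dataclass
class ConversationSketch:  # hypothetical stand-in for Conversation
    # No type annotation, so this stays a plain class attribute, not a dataclass field.
    mandatory_system_message = ""
    system_message: str = ""
    # default_factory gives every instance its own list.
    messages: List[Dict[str, Any]] = field(default_factory=lambda: [])
    max_image_limit: int = 4
    skip_next: bool = False


first = ConversationSketch()
second = ConversationSketch()
first.messages.append({"role": "user", "content": "hello"})

field_names = [f.name for f in fields(ConversationSketch)]
```

If the real class follows the same pattern, `mandatory_system_message` cannot be passed to the constructor, while `system_message` can.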

gallery/1-2.PNG

DELETED

Git LFS Details

|

gallery/10.PNG

DELETED

|

Binary file (123 kB)

|

|

|

gallery/14.jfif

DELETED

|

Binary file (112 kB)

|

|

|

gallery/15.PNG

DELETED

|

Binary file (202 kB)

|

|

|

gallery/2.PNG

DELETED

Git LFS Details

|

gallery/astro_on_unicorn.png

DELETED

Git LFS Details

|

gallery/cheetah.png

DELETED

Git LFS Details

|

gallery/child_1.jpg

DELETED

|

Binary file (34.9 kB)

|

|

|

gallery/child_2.jpg

DELETED

|

Binary file (35.3 kB)

|

|

|

gallery/child_3.jpg

DELETED

|

Binary file (28.5 kB)

|

|

|

gallery/farmer_sunset.png

DELETED

Git LFS Details

|

gallery/friends.png

DELETED

Git LFS Details

|

gallery/prod_1.jpeg

DELETED

|

Binary file (48.9 kB)

|

|

|

gallery/prod_11.jpg

DELETED

|

Binary file (92.1 kB)

|

|

|

gallery/prod_12.png

DELETED

|

Binary file (329 kB)

|

|

|

gallery/prod_17.jpg

DELETED

|

Binary file (70.4 kB)

|

|

|

gallery/prod_4.png

DELETED

|

Binary file (462 kB)

|

|

|

gallery/prod_9.jpg

DELETED

|

Binary file (313 kB)

|

|

|

gallery/prod_en_17.png

DELETED

Git LFS Details

|

gallery/puppy.png

DELETED

Git LFS Details

|

gallery/viking.png

DELETED

Git LFS Details

|

gallery/water.jpg

DELETED

|

Binary file (668 kB)

|

|

|

gallery/wizard.png

DELETED

Git LFS Details

|

samples/1.jpg

ADDED

|

samples/2.png

ADDED

|

samples/3.jpeg

ADDED

|

samples/4.jpeg

ADDED

|
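Since this commit deletes the `gallery/` images and points the examples at four new files under `samples/`, a quick check that every referenced file exists can catch a broken example before the Space starts. The sketch below assumes the example rows have the shape shown in `build_demo()`. The function `missing_sample_files` is hypothetical and not part of the repository.

```python
import tempfile
from pathlib import Path
from typing import Any, Dict, List

# Example rows in the shape used in build_demo(): each row wraps a single dict.
EXAMPLES: List[List[Dict[str, Any]]] = [
    [{"files": ["samples/1.jpg"],
      "text": "Hãy trích xuất thông tin từ hình ảnh này và trả về kết quả dạng markdown."}],
    [{"files": ["samples/2.png"],
      "text": "Bạn là một nhà sáng tạo nội dung tài năng. Hãy viết một kịch bản quảng cáo cho sản phẩm này."}],
    [{"files": ["samples/3.jpeg"],
      "text": "Hãy viết lại một email cho các chủ hộ về nội dung của bảng thông báo."}],
    [{"files": ["samples/4.jpeg"],
      "text": "Hãy viết trích xuất nội dung của hoá đơn dạng JSON."}],
]


def missing_sample_files(examples: List[List[Dict[str, Any]]], root: Path) -> List[str]:
    """Return the relative paths referenced by the examples that are not files under root."""
    missing = []
    for row in examples:
        for item in row:
            for rel_path in item["files"]:
                if not (root / rel_path).is_file():
                    missing.append(rel_path)
    return missing


# Demonstrate against a throwaway directory instead of the real repository.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    before = missing_sample_files(EXAMPLES, root)
    (root / "samples").mkdir()
    (root / "samples" / "1.jpg").write_bytes(b"")
    after = missing_sample_files(EXAMPLES, root)
```

In the repository itself, the call would presumably be `missing_sample_files(EXAMPLES, Path("."))`, run from the directory that contains `app.py`.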