diff --git a/.gitattributes b/.gitattributes

new file mode 100644

index 0000000000000000000000000000000000000000..d7f73cd45e8a703a1efca7ee367e37d0ec268788

--- /dev/null

+++ b/.gitattributes

@@ -0,0 +1,36 @@

+*.7z filter=lfs diff=lfs merge=lfs -text

+*.arrow filter=lfs diff=lfs merge=lfs -text

+*.bin filter=lfs diff=lfs merge=lfs -text

+*.bz2 filter=lfs diff=lfs merge=lfs -text

+*.ckpt filter=lfs diff=lfs merge=lfs -text

+*.ftz filter=lfs diff=lfs merge=lfs -text

+*.gz filter=lfs diff=lfs merge=lfs -text

+*.h5 filter=lfs diff=lfs merge=lfs -text

+*.joblib filter=lfs diff=lfs merge=lfs -text

+*.lfs.* filter=lfs diff=lfs merge=lfs -text

+*.mlmodel filter=lfs diff=lfs merge=lfs -text

+*.model filter=lfs diff=lfs merge=lfs -text

+*.msgpack filter=lfs diff=lfs merge=lfs -text

+*.npy filter=lfs diff=lfs merge=lfs -text

+*.npz filter=lfs diff=lfs merge=lfs -text

+*.onnx filter=lfs diff=lfs merge=lfs -text

+*.ot filter=lfs diff=lfs merge=lfs -text

+*.parquet filter=lfs diff=lfs merge=lfs -text

+*.pb filter=lfs diff=lfs merge=lfs -text

+*.pickle filter=lfs diff=lfs merge=lfs -text

+*.pkl filter=lfs diff=lfs merge=lfs -text

+*.pt filter=lfs diff=lfs merge=lfs -text

+*.pth filter=lfs diff=lfs merge=lfs -text

+*.rar filter=lfs diff=lfs merge=lfs -text

+*.safetensors filter=lfs diff=lfs merge=lfs -text

+saved_model/**/* filter=lfs diff=lfs merge=lfs -text

+*.tar.* filter=lfs diff=lfs merge=lfs -text

+*.tflite filter=lfs diff=lfs merge=lfs -text

+*.tgz filter=lfs diff=lfs merge=lfs -text

+*.wasm filter=lfs diff=lfs merge=lfs -text

+*.xz filter=lfs diff=lfs merge=lfs -text

+*.zip filter=lfs diff=lfs merge=lfs -text

+*.zst filter=lfs diff=lfs merge=lfs -text

+*.zstandard filter=lfs diff=lfs merge=lfs -text

+*tfevents* filter=lfs diff=lfs merge=lfs -text

+*.glb filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..aff6d155226dd90c0b489e0d5c5af2b3e24ca382

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,47 @@

+results/

+# Python build

+.eggs/

+gradio.egg-info/*

+!gradio.egg-info/requires.txt

+!gradio.egg-info/PKG-INFO

+dist/

+*.pyc

+__pycache__/

+*.py[cod]

+*$py.class

+build/

+

+# JS build

+gradio/templates/frontend

+# Secrets

+.env

+

+# Gradio run artifacts

+*.db

+*.sqlite3

+gradio/launches.json

+flagged/

+# gradio_cached_examples/

+

+# Tests

+.coverage

+coverage.xml

+test.txt

+

+# Demos

+demo/tmp.zip

+demo/files/*.avi

+demo/files/*.mp4

+

+# Etc

+.idea/*

+.DS_Store

+*.bak

+workspace.code-workspace

+*.h5

+.vscode/

+

+# log files

+.pnpm-debug.log

+venv/

+*.db-journal

diff --git a/PIFu/.gitignore b/PIFu/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..e708e040236215f149585d154acc3d1cc9827569

--- /dev/null

+++ b/PIFu/.gitignore

@@ -0,0 +1 @@

+checkpoints/*

diff --git a/PIFu/LICENSE.txt b/PIFu/LICENSE.txt

new file mode 100755

index 0000000000000000000000000000000000000000..e19263f8ae04d1aaa5a98ce6c42c1ed6c8734ea3

--- /dev/null

+++ b/PIFu/LICENSE.txt

@@ -0,0 +1,48 @@

+MIT License

+

+Copyright (c) 2019 Shunsuke Saito, Zeng Huang, and Ryota Natsume

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

+

+-------------------- LICENSE FOR ResBlk Image Encoder -----------------------

+Copyright (c) 2017, Jun-Yan Zhu and Taesung Park

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

+AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

+IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

+DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

+FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

+DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

+SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

+CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

+OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

+OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

\ No newline at end of file

diff --git a/PIFu/README.md b/PIFu/README.md

new file mode 100755

index 0000000000000000000000000000000000000000..5eae12f2a370027de6c46fbf78ec68a1ecb1c01c

--- /dev/null

+++ b/PIFu/README.md

@@ -0,0 +1,167 @@

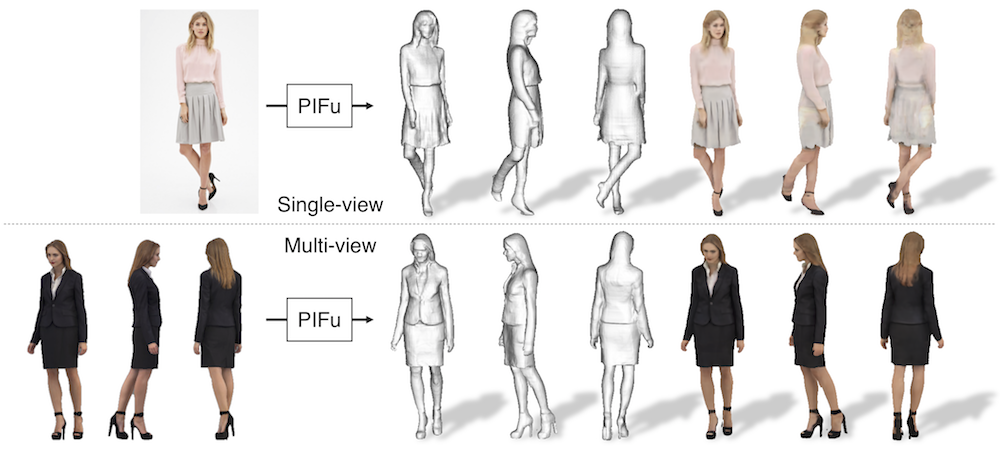

+# PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization

+

+[arXiv](https://arxiv.org/abs/1905.05172) [Open In Colab](https://colab.research.google.com/drive/1GFSsqP2BWz4gtq0e-nki00ZHSirXwFyY)

+

+News:

+* \[2020/05/04\] Added EGL rendering option for training data generation. Now you can create your own training data with headless machines!

+* \[2020/04/13\] Demo with Google Colab (incl. visualization) is available. Special thanks to [@nanopoteto](https://github.com/nanopoteto)!!!

+* \[2020/02/26\] License is updated to MIT license! Enjoy!

+

+This repository contains a PyTorch implementation of "[PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization](https://arxiv.org/abs/1905.05172)".

+

+[Project Page](https://shunsukesaito.github.io/PIFu/)

+

+

+If you find the code useful in your research, please consider citing the paper.

+

+```

+@InProceedings{saito2019pifu,

+author = {Saito, Shunsuke and Huang, Zeng and Natsume, Ryota and Morishima, Shigeo and Kanazawa, Angjoo and Li, Hao},

+title = {PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization},

+booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

+month = {October},

+year = {2019}

+}

+```

+

+

+This codebase provides:

+- test code

+- training code

+- data generation code

+

+## Requirements

+- Python 3

+- [PyTorch](https://pytorch.org/) tested on 1.4.0

+- json

+- PIL

+- skimage

+- tqdm

+- numpy

+- cv2

+

+For training and data generation:

+- [trimesh](https://trimsh.org/) with [pyembree](https://github.com/scopatz/pyembree)

+- [pyexr](https://github.com/tvogels/pyexr)

+- PyOpenGL

+- freeglut (use `sudo apt-get install freeglut3-dev` for Ubuntu users)

+- (optional) EGL-related packages for rendering on headless machines (use `apt install libgl1-mesa-dri libegl1-mesa libgbm1` for Ubuntu users)

+

+Warning: I found that outdated NVIDIA drivers may cause errors with EGL. If you want to try out the EGL version, please update your NVIDIA driver to the latest version.

+

+## Windows demo installation instructions

+

+- Install [miniconda](https://docs.conda.io/en/latest/miniconda.html)

+- Add `conda` to PATH

+- Install [git bash](https://git-scm.com/downloads)

+- Launch `Git\bin\bash.exe`

+- `eval "$(conda shell.bash hook)"` then `conda activate my_env` because of [this](https://github.com/conda/conda-build/issues/3371)

+- Automatically: `conda env create -f environment.yml` (see [this](https://github.com/conda/conda/issues/3417))

+- Or manually set up the [environment](https://towardsdatascience.com/a-guide-to-conda-environments-bc6180fc533)

+ - `conda create --name pifu python` where `pifu` is the name of your environment

+ - `conda activate pifu`

+ - `conda install pytorch torchvision cudatoolkit=10.1 -c pytorch`

+ - `conda install pillow`

+ - `conda install scikit-image`

+ - `conda install tqdm`

+ - `conda install -c menpo opencv`

+- Download [wget.exe](https://eternallybored.org/misc/wget/)

+- Place it into `Git\mingw64\bin`

+- `sh ./scripts/download_trained_model.sh`

+- Remove the background from your image ([this](https://www.remove.bg/), for example)

+- Create a black-and-white mask `.png`

+- Replace the originals in `sample_images/`

+- Try it out - `sh ./scripts/test.sh`

+- Download [Meshlab](http://www.meshlab.net/) because of [this](https://github.com/shunsukesaito/PIFu/issues/1)

+- Open .obj file in Meshlab

+

+

+## Demo

+Warning: The released model is trained mostly on upright standing scans with weak perspective projection and a pitch angle of 0 degrees. Reconstruction quality may degrade for images that deviate strongly from the training data.

+1. Run the following script to download the pretrained models and copy them under `./PIFu/checkpoints/`.

+```

+sh ./scripts/download_trained_model.sh

+```

+

+2. Run the following script. It creates a textured `.obj` file under `./PIFu/eval_results/`. You may need to use `./apps/crop_img.py` to roughly align an input image and the corresponding mask to the training data for better performance. For background removal, you can use any off-the-shelf tool such as [removebg](https://www.remove.bg/).

+```

+sh ./scripts/test.sh

+```

+

+## Demo on Google Colab

+If you do not have a setup to run PIFu, we offer a Google Colab version so you can try it out in the cloud, free of charge. Try our Colab demo using the following notebook:

+[Open In Colab](https://colab.research.google.com/drive/1GFSsqP2BWz4gtq0e-nki00ZHSirXwFyY)

+

+## Data Generation (Linux Only)

+While we are unable to release the full training data due to restrictions on commercial scans, we provide rendering code using free models from [RenderPeople](https://renderpeople.com/free-3d-people/).

+This tutorial uses `rp_dennis_posed_004` model. Please download the model from [this link](https://renderpeople.com/sample/free/rp_dennis_posed_004_OBJ.zip) and unzip the content under a folder named `rp_dennis_posed_004_OBJ`. The same process can be applied to other RenderPeople data.

+

+Warning: the following code becomes extremely slow without [pyembree](https://github.com/scopatz/pyembree). Please make sure you install pyembree.

+

+1. Run the following script to compute spherical harmonics coefficients for [precomputed radiance transfer (PRT)](https://sites.fas.harvard.edu/~cs278/papers/prt.pdf). In a nutshell, PRT accounts for accurate light transport, including ambient occlusion, without compromising online rendering time, which significantly improves photorealism compared with [a common spherical harmonics rendering using surface normals](https://cseweb.ucsd.edu/~ravir/papers/envmap/envmap.pdf). This process has to be done once for each obj file.

+```

+python -m apps.prt_util -i {path_to_rp_dennis_posed_004_OBJ}

+```

+

+2. Run the following script. Under the specified data path, the code creates folders named `GEO`, `RENDER`, `MASK`, `PARAM`, `UV_RENDER`, `UV_MASK`, `UV_NORMAL`, and `UV_POS`. Note that you may need to list validation subjects to exclude from training in `{path_to_training_data}/val.txt` (this tutorial has only one subject, so leave it empty). If you wish to render images on headless servers equipped with an NVIDIA GPU, add `-e` to enable EGL rendering.

+```

+python -m apps.render_data -i {path_to_rp_dennis_posed_004_OBJ} -o {path_to_training_data} [-e]

+```

+

+## Training (Linux Only)

+

+Warning: the following code becomes extremely slow without [pyembree](https://github.com/scopatz/pyembree). Please make sure you install pyembree.

+

+1. Run the following script to train the shape module. The intermediate results and checkpoints are saved under `./results` and `./checkpoints` respectively. You can add the `--batch_size` and `--num_sample_inout` flags to adjust the batch size and the number of sampled points based on available GPU memory.

+```

+python -m apps.train_shape --dataroot {path_to_training_data} --random_flip --random_scale --random_trans

+```

+

+2. Run the following script to train the color module.

+```

+python -m apps.train_color --dataroot {path_to_training_data} --num_sample_inout 0 --num_sample_color 5000 --sigma 0.1 --random_flip --random_scale --random_trans

+```

+

+## Related Research

+**[Monocular Real-Time Volumetric Performance Capture (ECCV 2020)](https://project-splinter.github.io/)**

+*Ruilong Li\*, Yuliang Xiu\*, Shunsuke Saito, Zeng Huang, Kyle Olszewski, Hao Li*

+

+The first real-time PIFu by accelerating reconstruction and rendering!!

+

+**[PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization (CVPR 2020)](https://shunsukesaito.github.io/PIFuHD/)**

+*Shunsuke Saito, Tomas Simon, Jason Saragih, Hanbyul Joo*

+

+We further improve the quality of reconstruction by leveraging a multi-level approach!

+

+**[ARCH: Animatable Reconstruction of Clothed Humans (CVPR 2020)](https://arxiv.org/pdf/2004.04572.pdf)**

+*Zeng Huang, Yuanlu Xu, Christoph Lassner, Hao Li, Tony Tung*

+

+Learning PIFu in canonical space for animatable avatar generation!

+

+**[Robust 3D Self-portraits in Seconds (CVPR 2020)](http://www.liuyebin.com/portrait/portrait.html)**

+*Zhe Li, Tao Yu, Chuanyu Pan, Zerong Zheng, Yebin Liu*

+

+They extend PIFu to RGBD + introduce "PIFusion" utilizing PIFu reconstruction for non-rigid fusion.

+

+**[Learning to Infer Implicit Surfaces without 3d Supervision (NeurIPS 2019)](http://papers.nips.cc/paper/9039-learning-to-infer-implicit-surfaces-without-3d-supervision.pdf)**

+*Shichen Liu, Shunsuke Saito, Weikai Chen, Hao Li*

+

+We answer the question "how can we learn an implicit function if we don't have 3D ground truth?"

+

+**[SiCloPe: Silhouette-Based Clothed People (CVPR 2019, best paper finalist)](https://arxiv.org/pdf/1901.00049.pdf)**

+*Ryota Natsume\*, Shunsuke Saito\*, Zeng Huang, Weikai Chen, Chongyang Ma, Hao Li, Shigeo Morishima*

+

+Our first attempt to reconstruct 3D clothed human body with texture from a single image!

+

+**[Deep Volumetric Video from Very Sparse Multi-view Performance Capture (ECCV 2018)](http://openaccess.thecvf.com/content_ECCV_2018/papers/Zeng_Huang_Deep_Volumetric_Video_ECCV_2018_paper.pdf)**

+*Zeng Huang, Tianye Li, Weikai Chen, Yajie Zhao, Jun Xing, Chloe LeGendre, Linjie Luo, Chongyang Ma, Hao Li*

+

+Implicit surface learning for sparse view human performance capture!

+

+------

+

+

+

+For commercial queries, please contact:

+

+Hao Li: hao@hao-li.com ccto: saitos@usc.edu Baker!!
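The PRT step described in the README's Data Generation section writes one row of spherical-harmonic transfer coefficients per vertex (9 values for the order-2 expansion that `testPRT` requests). Below is a minimal sketch of how such coefficients could be consumed, assuming standard diffuse PRT, where the shading at a vertex is the dot product of its transfer vector with the lighting's SH coefficients (up to whatever normalization a given renderer applies). `prt_shade` and the random inputs are hypothetical; this is not the repository's renderer.

```python
import numpy as np

def prt_shade(prt, light_sh, albedo=None):
    """Approximate diffuse PRT shading (hypothetical helper).

    prt:      (V, 9) per-vertex transfer coefficients (SH order 2)
    light_sh: (9, 3) RGB spherical-harmonic lighting coefficients
    albedo:   optional (V, 3) per-vertex albedo
    """
    prt = np.asarray(prt, dtype=np.float64)
    light_sh = np.asarray(light_sh, dtype=np.float64)
    if prt.shape[1] != light_sh.shape[0]:
        raise ValueError("PRT and lighting must use the same number of SH coefficients")
    # One dot product per vertex and colour channel: (V, 9) @ (9, 3) -> (V, 3)
    shading = prt @ light_sh
    if albedo is not None:
        shading = shading * np.asarray(albedo, dtype=np.float64)
    return shading

rng = np.random.default_rng(0)
prt = rng.random((4, 9))
light = np.zeros((9, 3))
light[0] = 1.0  # constant (band-0) lighting only
# With band-0 lighting, every colour channel equals the first PRT coefficient
print(prt_shade(prt, light))
```

Because the lighting enters only through a matrix product, relighting a mesh with a new environment costs one small multiplication per vertex, which is why the expensive visibility integration in `apps.prt_util` only needs to run once per obj file.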

diff --git a/PIFu/apps/__init__.py b/PIFu/apps/__init__.py

new file mode 100755

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/PIFu/apps/crop_img.py b/PIFu/apps/crop_img.py

new file mode 100755

index 0000000000000000000000000000000000000000..4854d1f5a6361963659a9d79f41c404d801e9193

--- /dev/null

+++ b/PIFu/apps/crop_img.py

@@ -0,0 +1,75 @@

+import os

+import cv2

+import numpy as np

+

+from pathlib import Path

+import argparse

+

+def get_bbox(msk):

+ rows = np.any(msk, axis=1)

+ cols = np.any(msk, axis=0)

+ rmin, rmax = np.where(rows)[0][[0,-1]]

+ cmin, cmax = np.where(cols)[0][[0,-1]]

+

+ return rmin, rmax, cmin, cmax

+

+def process_img(img, msk, bbox=None):

+ if bbox is None:

+ bbox = get_bbox(msk > 100)

+ cx = (bbox[3] + bbox[2])//2

+ cy = (bbox[1] + bbox[0])//2

+

+ w = img.shape[1]

+ h = img.shape[0]

+ height = int(1.138*(bbox[1] - bbox[0]))

+ hh = height//2

+

+ # crop

+ dw = min(cx, w-cx, hh)

+ if cy-hh < 0:

+ img = cv2.copyMakeBorder(img,hh-cy,0,0,0,cv2.BORDER_CONSTANT,value=[0,0,0])

+ msk = cv2.copyMakeBorder(msk,hh-cy,0,0,0,cv2.BORDER_CONSTANT,value=0)

+ cy = hh

+ if cy+hh > h:

+ img = cv2.copyMakeBorder(img,0,cy+hh-h,0,0,cv2.BORDER_CONSTANT,value=[0,0,0])

+ msk = cv2.copyMakeBorder(msk,0,cy+hh-h,0,0,cv2.BORDER_CONSTANT,value=0)

+ img = img[cy-hh:(cy+hh),cx-dw:cx+dw,:]

+ msk = msk[cy-hh:(cy+hh),cx-dw:cx+dw]

+ dw = img.shape[0] - img.shape[1]

+ if dw != 0:

+ img = cv2.copyMakeBorder(img,0,0,dw//2,dw//2,cv2.BORDER_CONSTANT,value=[0,0,0])

+ msk = cv2.copyMakeBorder(msk,0,0,dw//2,dw//2,cv2.BORDER_CONSTANT,value=0)

+ img = cv2.resize(img, (512, 512))

+ msk = cv2.resize(msk, (512, 512))

+

+ kernel = np.ones((3,3),np.uint8)

+ msk = cv2.erode((255*(msk > 100)).astype(np.uint8), kernel, iterations = 1)

+

+ return img, msk

+

+def main():

+ '''

+ given foreground mask, this script crops and resizes an input image and mask for processing.

+ '''

+ parser = argparse.ArgumentParser()

+ parser.add_argument('-i', '--input_image', type=str, help='if the image has alpha channel, it will be used as mask')

+ parser.add_argument('-m', '--input_mask', type=str)

+ parser.add_argument('-o', '--out_path', type=str, default='./sample_images')

+ args = parser.parse_args()

+

+ img = cv2.imread(args.input_image, cv2.IMREAD_UNCHANGED)

+ if img.shape[2] == 4:

+ msk = img[:,:,3:]

+ img = img[:,:,:3]

+ else:

+ msk = cv2.imread(args.input_mask, cv2.IMREAD_GRAYSCALE)

+

+ img_new, msk_new = process_img(img, msk)

+

+ img_name = Path(args.input_image).stem

+

+ cv2.imwrite(os.path.join(args.out_path, img_name + '.png'), img_new)

+ cv2.imwrite(os.path.join(args.out_path, img_name + '_mask.png'), msk_new)

+

+if __name__ == "__main__":

+ main()

\ No newline at end of file
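`process_img` in `crop_img.py` centers a square window on the mask's bounding box and makes its side about 1.138 times the box height before resizing to 512×512. The sketch below reproduces only that bounding-box and window arithmetic with NumPy (no OpenCV, no padding or resizing), so the numbers can be checked on a synthetic mask; `crop_window` is a hypothetical helper, not part of the script.

```python
import numpy as np

def get_bbox(msk):
    # Rows/columns that contain at least one foreground pixel
    rows = np.any(msk, axis=1)
    cols = np.any(msk, axis=0)
    rmin, rmax = np.where(rows)[0][[0, -1]]
    cmin, cmax = np.where(cols)[0][[0, -1]]
    return rmin, rmax, cmin, cmax

def crop_window(msk, threshold=100, margin=1.138):
    """Hypothetical helper: the square window process_img effectively crops,
    as (top, bottom, left, right) in padded-image coordinates."""
    rmin, rmax, cmin, cmax = get_bbox(msk > threshold)
    cx = (cmin + cmax) // 2
    cy = (rmin + rmax) // 2
    hh = int(margin * (rmax - rmin)) // 2  # half of the enlarged height
    return cy - hh, cy + hh, cx - hh, cx + hh

mask = np.zeros((200, 120), dtype=np.uint8)
mask[50:150, 40:80] = 255  # a 100 x 40 foreground box
print(get_bbox(mask > 100))
print(crop_window(mask))
```

In the script itself the horizontal extent is first clipped to the image (`dw = min(cx, w-cx, hh)`) and then zero-padded back to a square, which yields the same square window as above with black borders where it leaves the image.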

diff --git a/PIFu/apps/eval.py b/PIFu/apps/eval.py

new file mode 100755

index 0000000000000000000000000000000000000000..a0ee3fa66c75a144da5c155b927f63170b7e923c

--- /dev/null

+++ b/PIFu/apps/eval.py

@@ -0,0 +1,153 @@

+import tqdm

+import glob

+import torchvision.transforms as transforms

+from PIL import Image

+from lib.model import *

+from lib.train_util import *

+from lib.sample_util import *

+from lib.mesh_util import *

+# from lib.options import BaseOptions

+from torch.utils.data import DataLoader

+import torch

+import numpy as np

+import json

+import time

+import sys

+import os

+

+sys.path.insert(0, os.path.abspath(

+ os.path.join(os.path.dirname(__file__), '..')))

+ROOT_PATH = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

+

+

+# # get options

+# opt = BaseOptions().parse()

+

+class Evaluator:

+ def __init__(self, opt, projection_mode='orthogonal'):

+ self.opt = opt

+ self.load_size = self.opt.loadSize

+ self.to_tensor = transforms.Compose([

+ transforms.Resize(self.load_size),

+ transforms.ToTensor(),

+ transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

+ ])

+ # set cuda

+ cuda = torch.device(

+ 'cuda:%d' % opt.gpu_id) if torch.cuda.is_available() else torch.device('cpu')

+

+ # create net

+ netG = HGPIFuNet(opt, projection_mode).to(device=cuda)

+ print('Using Network: ', netG.name)

+

+ if opt.load_netG_checkpoint_path:

+ netG.load_state_dict(torch.load(

+ opt.load_netG_checkpoint_path, map_location=cuda))

+

+ if opt.load_netC_checkpoint_path is not None:

+ print('loading for net C ...', opt.load_netC_checkpoint_path)

+ netC = ResBlkPIFuNet(opt).to(device=cuda)

+ netC.load_state_dict(torch.load(

+ opt.load_netC_checkpoint_path, map_location=cuda))

+ else:

+ netC = None

+

+ os.makedirs(opt.results_path, exist_ok=True)

+ os.makedirs('%s/%s' % (opt.results_path, opt.name), exist_ok=True)

+

+ opt_log = os.path.join(opt.results_path, opt.name, 'opt.txt')

+ with open(opt_log, 'w') as outfile:

+ outfile.write(json.dumps(vars(opt), indent=2))

+

+ self.cuda = cuda

+ self.netG = netG

+ self.netC = netC

+

+ def load_image(self, image_path, mask_path):

+ # Name

+ img_name = os.path.splitext(os.path.basename(image_path))[0]

+ # Calib

+ B_MIN = np.array([-1, -1, -1])

+ B_MAX = np.array([1, 1, 1])

+ projection_matrix = np.identity(4)

+ projection_matrix[1, 1] = -1

+ calib = torch.Tensor(projection_matrix).float()

+ # Mask

+ mask = Image.open(mask_path).convert('L')

+ mask = transforms.Resize(self.load_size)(mask)

+ mask = transforms.ToTensor()(mask).float()

+ # image

+ image = Image.open(image_path).convert('RGB')

+ image = self.to_tensor(image)

+ image = mask.expand_as(image) * image

+ return {

+ 'name': img_name,

+ 'img': image.unsqueeze(0),

+ 'calib': calib.unsqueeze(0),

+ 'mask': mask.unsqueeze(0),

+ 'b_min': B_MIN,

+ 'b_max': B_MAX,

+ }

+

+ def load_image_from_memory(self, image_path, mask_path, img_name):

+ # Calib

+ B_MIN = np.array([-1, -1, -1])

+ B_MAX = np.array([1, 1, 1])

+ projection_matrix = np.identity(4)

+ projection_matrix[1, 1] = -1

+ calib = torch.Tensor(projection_matrix).float()

+ # Mask

+ mask = Image.fromarray(mask_path).convert('L')

+ mask = transforms.Resize(self.load_size)(mask)

+ mask = transforms.ToTensor()(mask).float()

+ # image

+ image = Image.fromarray(image_path).convert('RGB')

+ image = self.to_tensor(image)

+ image = mask.expand_as(image) * image

+ return {

+ 'name': img_name,

+ 'img': image.unsqueeze(0),

+ 'calib': calib.unsqueeze(0),

+ 'mask': mask.unsqueeze(0),

+ 'b_min': B_MIN,

+ 'b_max': B_MAX,

+ }

+

+ def eval(self, data, use_octree=False):

+ '''

+ Evaluate a data point

+ :param data: a dict containing at least ['name'], ['img'], ['calib'], ['b_min'] and ['b_max'] entries.

+ :return:

+ '''

+ opt = self.opt

+ with torch.no_grad():

+ self.netG.eval()

+ if self.netC:

+ self.netC.eval()

+ save_path = '%s/%s/result_%s.obj' % (

+ opt.results_path, opt.name, data['name'])

+ if self.netC:

+ gen_mesh_color(opt, self.netG, self.netC, self.cuda,

+ data, save_path, use_octree=use_octree)

+ else:

+ gen_mesh(opt, self.netG, self.cuda, data,

+ save_path, use_octree=use_octree)

+

+

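+if __name__ == '__main__':

+ # parse options only when run as a script, so importing Evaluator has no side effects
+ from lib.options import BaseOptions

+ opt = BaseOptions().parse()

+ evaluator = Evaluator(opt)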

+

+ test_images = glob.glob(os.path.join(opt.test_folder_path, '*'))

+ test_images = [f for f in test_images if (

+ 'png' in f or 'jpg' in f) and (not 'mask' in f)]

+ test_masks = [f[:-4]+'_mask.png' for f in test_images]

+

+ print("num:", len(test_masks))

+

+ for image_path, mask_path in tqdm.tqdm(zip(test_images, test_masks)):

+ try:

+ print(image_path, mask_path)

+ data = evaluator.load_image(image_path, mask_path)

+ evaluator.eval(data, True)

+ except Exception as e:

+ print("error:", e.args)
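In `Evaluator.load_image`, the calibration is a 4×4 identity matrix with the y entry negated, and `B_MIN`/`B_MAX` bound the reconstruction volume to [-1, 1]^3. The sketch below applies that matrix to a few points, assuming (as the `orthogonal` projection mode suggests) that the transformed x and y are read directly as normalized image coordinates and z as depth. The flip presumably reconciles image rows, which grow downward, with an upward-pointing y axis. `make_calib` and `project_points` are hypothetical helpers.

```python
import numpy as np

def make_calib():
    # Same construction as Evaluator.load_image: identity with the y axis flipped
    calib = np.identity(4)
    calib[1, 1] = -1
    return calib

def project_points(calib, points):
    """Apply a 4x4 calibration matrix to (N, 3) points.
    Assumes an orthographic model: x, y become normalized image coordinates
    in [-1, 1] and z is kept as depth."""
    homo = np.concatenate([points, np.ones((points.shape[0], 1))], axis=1)
    return (homo @ calib.T)[:, :3]

B_MIN = np.array([-1.0, -1.0, -1.0])
B_MAX = np.array([1.0, 1.0, 1.0])
corners = np.array([B_MIN, B_MAX, [0.0, 0.5, 0.25]])
print(project_points(make_calib(), corners))
```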

diff --git a/PIFu/apps/eval_spaces.py b/PIFu/apps/eval_spaces.py

new file mode 100755

index 0000000000000000000000000000000000000000..b0cf689d24f70d95aa0d491fd04987296802e492

--- /dev/null

+++ b/PIFu/apps/eval_spaces.py

@@ -0,0 +1,138 @@

+import sys

+import os

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..')))

+ROOT_PATH = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

+

+import time

+import json

+import numpy as np

+import torch

+from torch.utils.data import DataLoader

+

+from lib.options import BaseOptions

+from lib.mesh_util import *

+from lib.sample_util import *

+from lib.train_util import *

+from lib.model import *

+

+from PIL import Image

+import torchvision.transforms as transforms

+

+import trimesh

+from datetime import datetime

+

+# get options

+opt = BaseOptions().parse()

+

+class Evaluator:

+ def __init__(self, opt, projection_mode='orthogonal'):

+ self.opt = opt

+ self.load_size = self.opt.loadSize

+ self.to_tensor = transforms.Compose([

+ transforms.Resize(self.load_size),

+ transforms.ToTensor(),

+ transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

+ ])

+ # set cuda

+ cuda = torch.device('cuda:%d' % opt.gpu_id) if torch.cuda.is_available() else torch.device('cpu')

+ print("CUDA device:", torch.cuda.get_device_name(opt.gpu_id) if torch.cuda.is_available() else "none (CPU only)")

+

+ # create net

+ netG = HGPIFuNet(opt, projection_mode).to(device=cuda)

+ print('Using Network: ', netG.name)

+

+ if opt.load_netG_checkpoint_path:

+ netG.load_state_dict(torch.load(opt.load_netG_checkpoint_path, map_location=cuda))

+

+ if opt.load_netC_checkpoint_path is not None:

+ print('loading for net C ...', opt.load_netC_checkpoint_path)

+ netC = ResBlkPIFuNet(opt).to(device=cuda)

+ netC.load_state_dict(torch.load(opt.load_netC_checkpoint_path, map_location=cuda))

+ else:

+ netC = None

+

+ os.makedirs(opt.results_path, exist_ok=True)

+ os.makedirs('%s/%s' % (opt.results_path, opt.name), exist_ok=True)

+

+ opt_log = os.path.join(opt.results_path, opt.name, 'opt.txt')

+ with open(opt_log, 'w') as outfile:

+ outfile.write(json.dumps(vars(opt), indent=2))

+

+ self.cuda = cuda

+ self.netG = netG

+ self.netC = netC

+

+ def load_image(self, image_path, mask_path):

+ # Name

+ img_name = os.path.splitext(os.path.basename(image_path))[0]

+ # Calib

+ B_MIN = np.array([-1, -1, -1])

+ B_MAX = np.array([1, 1, 1])

+ projection_matrix = np.identity(4)

+ projection_matrix[1, 1] = -1

+ calib = torch.Tensor(projection_matrix).float()

+ # Mask

+ mask = Image.open(mask_path).convert('L')

+ mask = transforms.Resize(self.load_size)(mask)

+ mask = transforms.ToTensor()(mask).float()

+ # image

+ image = Image.open(image_path).convert('RGB')

+ image = self.to_tensor(image)

+ image = mask.expand_as(image) * image

+ return {

+ 'name': img_name,

+ 'img': image.unsqueeze(0),

+ 'calib': calib.unsqueeze(0),

+ 'mask': mask.unsqueeze(0),

+ 'b_min': B_MIN,

+ 'b_max': B_MAX,

+ }

+

+ def eval(self, data, use_octree=False):

+ '''

+ Evaluate a data point

+ :param data: a dict containing at least ['name'], ['img'], ['calib'], ['b_min'] and ['b_max'] entries.

+ :return:

+ '''

+ opt = self.opt

+ with torch.no_grad():

+ self.netG.eval()

+ if self.netC:

+ self.netC.eval()

+ save_path = '%s/%s/result_%s.obj' % (opt.results_path, opt.name, data['name'])

+ if self.netC:

+ gen_mesh_color(opt, self.netG, self.netC, self.cuda, data, save_path, use_octree=use_octree)

+ else:

+ gen_mesh(opt, self.netG, self.cuda, data, save_path, use_octree=use_octree)

+

+

+if __name__ == '__main__':

+ evaluator = Evaluator(opt)

+

+ results_path = opt.results_path

+ name = opt.name

+ test_image_path = opt.img_path

+ test_mask_path = test_image_path[:-4] +'_mask.png'

+ test_img_name = os.path.splitext(os.path.basename(test_image_path))[0]

+ print("test_image: ", test_image_path)

+ print("test_mask: ", test_mask_path)

+

+ try:

+ start_time = datetime.now()  # avoid shadowing the imported time module

+ print("evaluating", start_time)

+ data = evaluator.load_image(test_image_path, test_mask_path)

+ evaluator.eval(data, False)

+ print("done evaluating", datetime.now() - start_time)

+ except Exception as e:

+ print("error:", e.args)

+

+ try:

+ mesh = trimesh.load(f'{results_path}/{name}/result_{test_img_name}.obj')

+ mesh.apply_transform([[1, 0, 0, 0],

+ [0, 1, 0, 0],

+ [0, 0, -1, 0],

+ [0, 0, 0, 1]])

+ mesh.export(file_obj=f'{results_path}/{name}/result_{test_img_name}.glb')

+ except Exception as e:

+ print("error generating MESH", e)
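Before exporting to `.glb`, `eval_spaces.py` applies a 4×4 matrix that negates z. The NumPy sketch below shows what that matrix does to vertex positions (trimesh is not needed for the illustration; `transform_vertices` is a hypothetical helper). Because the matrix has a negative determinant, it is a reflection rather than a rotation. Presumably the flip adapts the mesh to the axis convention of the viewer that displays the `.glb`, though the code does not state its motivation.

```python
import numpy as np

# The matrix passed to mesh.apply_transform in eval_spaces.py: negate the z axis
FLIP_Z = np.array([[1, 0, 0, 0],
                   [0, 1, 0, 0],
                   [0, 0, -1, 0],
                   [0, 0, 0, 1]], dtype=np.float64)

def transform_vertices(vertices, matrix):
    """Apply a 4x4 homogeneous transform to (N, 3) vertices."""
    homo = np.hstack([vertices, np.ones((len(vertices), 1))])
    out = homo @ matrix.T
    return out[:, :3] / out[:, 3:4]  # divide by w (1 here, kept for generality)

verts = np.array([[0.1, 0.2, 0.3], [-0.5, 0.0, 1.0]])
print(transform_vertices(verts, FLIP_Z))
print(np.linalg.det(FLIP_Z[:3, :3]))  # negative determinant: a mirror, not a rotation
```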

diff --git a/PIFu/apps/prt_util.py b/PIFu/apps/prt_util.py

new file mode 100755

index 0000000000000000000000000000000000000000..7eba32fa0b396f420b2e332abbb67135dbc14d6b

--- /dev/null

+++ b/PIFu/apps/prt_util.py

@@ -0,0 +1,142 @@

+import os

+import trimesh

+import numpy as np

+import math

+import argparse

+from tqdm import tqdm

+

+def factratio(N, D):

+ if N >= D:

+ prod = 1.0

+ for i in range(D+1, N+1):

+ prod *= i

+ return prod

+ else:

+ prod = 1.0

+ for i in range(N+1, D+1):

+ prod *= i

+ return 1.0 / prod

+

+def KVal(M, L):

+ return math.sqrt(((2 * L + 1) / (4 * math.pi)) * (factratio(L - M, L + M)))

+

+def AssociatedLegendre(M, L, x):

+ if M < 0 or M > L or np.max(np.abs(x)) > 1.0:

+ return np.zeros_like(x)

+

+ pmm = np.ones_like(x)

+ if M > 0:

+ somx2 = np.sqrt((1.0 + x) * (1.0 - x))

+ fact = 1.0

+ for i in range(1, M+1):

+ pmm = -pmm * fact * somx2

+ fact = fact + 2

+

+ if L == M:

+ return pmm

+ else:

+ pmmp1 = x * (2 * M + 1) * pmm

+ if L == M+1:

+ return pmmp1

+ else:

+ pll = np.zeros_like(x)

+ for i in range(M+2, L+1):

+ pll = (x * (2 * i - 1) * pmmp1 - (i + M - 1) * pmm) / (i - M)

+ pmm = pmmp1

+ pmmp1 = pll

+ return pll

+

+def SphericalHarmonic(M, L, theta, phi):

+ if M > 0:

+ return math.sqrt(2.0) * KVal(M, L) * np.cos(M * phi) * AssociatedLegendre(M, L, np.cos(theta))

+ elif M < 0:

+ return math.sqrt(2.0) * KVal(-M, L) * np.sin(-M * phi) * AssociatedLegendre(-M, L, np.cos(theta))

+ else:

+ return KVal(0, L) * AssociatedLegendre(0, L, np.cos(theta))

+

+def save_obj(mesh_path, verts):

+ file = open(mesh_path, 'w')

+ for v in verts:

+ file.write('v %.4f %.4f %.4f\n' % (v[0], v[1], v[2]))

+ file.close()

+

+def sampleSphericalDirections(n):

+ xv = np.random.rand(n,n)

+ yv = np.random.rand(n,n)

+ theta = np.arccos(1-2 * xv)

+ phi = 2.0 * math.pi * yv

+

+ phi = phi.reshape(-1)

+ theta = theta.reshape(-1)

+

+ vx = -np.sin(theta) * np.cos(phi)

+ vy = -np.sin(theta) * np.sin(phi)

+ vz = np.cos(theta)

+ return np.stack([vx, vy, vz], 1), phi, theta

+

+def getSHCoeffs(order, phi, theta):

+ shs = []

+ for n in range(0, order+1):

+ for m in range(-n,n+1):

+ s = SphericalHarmonic(m, n, theta, phi)

+ shs.append(s)

+

+ return np.stack(shs, 1)

+

+def computePRT(mesh_path, n, order):

+ mesh = trimesh.load(mesh_path, process=False)

+ vectors_orig, phi, theta = sampleSphericalDirections(n)

+ SH_orig = getSHCoeffs(order, phi, theta)

+

+ w = 4.0 * math.pi / (n*n)

+

+ origins = mesh.vertices

+ normals = mesh.vertex_normals

+ n_v = origins.shape[0]

+

+ origins = np.repeat(origins[:,None], n, axis=1).reshape(-1,3)

+ normals = np.repeat(normals[:,None], n, axis=1).reshape(-1,3)

+ PRT_all = None

+ for i in tqdm(range(n)):

+ SH = np.repeat(SH_orig[None,(i*n):((i+1)*n)], n_v, axis=0).reshape(-1,SH_orig.shape[1])

+ vectors = np.repeat(vectors_orig[None,(i*n):((i+1)*n)], n_v, axis=0).reshape(-1,3)

+

+ dots = (vectors * normals).sum(1)

+ front = (dots > 0.0)

+

+ delta = 1e-3*min(mesh.bounding_box.extents)

+ hits = mesh.ray.intersects_any(origins + delta * normals, vectors)

+ nohits = np.logical_and(front, np.logical_not(hits))

+

+ PRT = (nohits.astype(np.float64) * dots)[:,None] * SH

+

+ if PRT_all is not None:

+ PRT_all += (PRT.reshape(-1, n, SH.shape[1]).sum(1))

+ else:

+ PRT_all = (PRT.reshape(-1, n, SH.shape[1]).sum(1))

+

+ PRT = w * PRT_all

+

+ # NOTE: trimesh sometimes breaks the original vertex order, but the topology does not change.

+ # When loading PRT in another program, use the triangle list from trimesh.

+ return PRT, mesh.faces

+

+def testPRT(dir_path, n=40):

+ if dir_path[-1] == '/':

+ dir_path = dir_path[:-1]

+ sub_name = dir_path.split('/')[-1][:-4]

+ obj_path = os.path.join(dir_path, sub_name + '_100k.obj')

+ os.makedirs(os.path.join(dir_path, 'bounce'), exist_ok=True)

+

+ PRT, F = computePRT(obj_path, n, 2)

+ np.savetxt(os.path.join(dir_path, 'bounce', 'bounce0.txt'), PRT, fmt='%.8f')

+ np.save(os.path.join(dir_path, 'bounce', 'face.npy'), F)

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('-i', '--input', type=str, default='/home/shunsuke/Downloads/rp_dennis_posed_004_OBJ')

+ parser.add_argument('-n', '--n_sample', type=int, default=40, help='square root of the number of sampled directions (n*n rays per vertex); higher is more accurate but slower')

+ args = parser.parse_args()

+

+ testPRT(args.input, args.n_sample)
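computePRT estimates, for every vertex, the transfer coefficients (the integral over the sphere of visibility * max(n . omega, 0) * Y_lm(omega)) by summing over n*n sampled directions with the constant weight w = 4*pi/n^2, i.e. each sample is treated as covering an equal share of the sphere's solid angle. The sketch below applies the same estimator to an unoccluded vertex with normal +z, where the l=0 and (l=1, m=0) coefficients have closed forms (sqrt(pi)/2 and sqrt(pi/3)), which makes it a quick sanity check. The helper name `unoccluded_prt_band01` is hypothetical, and the stratified cos(theta) sampling is a simple stand-in: the exact scheme of `sampleSphericalDirections` is not shown in this excerpt.

```python
import math
import random


def unoccluded_prt_band01(n, seed=0):
    """Hypothetical helper: Monte Carlo estimate of the l=0 and (l=1, m=0) transfer
    coefficients of an unoccluded vertex with normal +z, i.e. the integral over the
    sphere of max(cos(theta), 0) * Y(omega), using n*n directions."""
    rng = random.Random(seed)
    num = n * n
    w = 4.0 * math.pi / num                 # solid angle per sample, as in computePRT
    y00 = 0.5 / math.sqrt(math.pi)          # Y_0^0 is constant
    k10 = math.sqrt(3.0 / (4.0 * math.pi))  # Y_1^0 = k10 * cos(theta)
    acc0 = acc1 = 0.0
    for k in range(num):
        # Stratified cos(theta), uniform on [-1, 1]. With a uniform azimuth this gives
        # uniformly distributed directions; the azimuth is not drawn because neither
        # basis function used here depends on it.
        cos_t = 1.0 - 2.0 * (k + rng.random()) / num
        if cos_t > 0.0:                     # front-facing; there are no occluders here
            acc0 += cos_t * y00
            acc1 += cos_t * k10 * cos_t
    return w * acc0, w * acc1


c0, c1 = unoccluded_prt_band01(40)
print(c0, c1)  # compare with sqrt(pi)/2 (about 0.886) and sqrt(pi/3) (about 1.023)
```

In computePRT the `nohits` mask plays the role of the `cos_t > 0.0` test combined with ray-traced visibility; here visibility is always 1.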

diff --git a/PIFu/apps/render_data.py b/PIFu/apps/render_data.py

new file mode 100755

index 0000000000000000000000000000000000000000..563c03fba6e304eced73ca283152a968a65c3b8e

--- /dev/null

+++ b/PIFu/apps/render_data.py

@@ -0,0 +1,290 @@

+#from data.config import raw_dataset, render_dataset, archive_dataset, model_list, zip_path

+

+from lib.renderer.camera import Camera

+import numpy as np

+from lib.renderer.mesh import load_obj_mesh, compute_tangent, compute_normal, load_obj_mesh_mtl

+import os

+import cv2

+import time

+import math

+import random

+import pyexr

+import argparse

+from tqdm import tqdm

+

+

+def make_rotate(rx, ry, rz):

+ sinX = np.sin(rx)

+ sinY = np.sin(ry)

+ sinZ = np.sin(rz)

+

+ cosX = np.cos(rx)

+ cosY = np.cos(ry)

+ cosZ = np.cos(rz)

+

+ Rx = np.zeros((3,3))

+ Rx[0, 0] = 1.0

+ Rx[1, 1] = cosX

+ Rx[1, 2] = -sinX

+ Rx[2, 1] = sinX

+ Rx[2, 2] = cosX

+

+ Ry = np.zeros((3,3))

+ Ry[0, 0] = cosY

+ Ry[0, 2] = sinY

+ Ry[1, 1] = 1.0

+ Ry[2, 0] = -sinY

+ Ry[2, 2] = cosY

+

+ Rz = np.zeros((3,3))

+ Rz[0, 0] = cosZ

+ Rz[0, 1] = -sinZ

+ Rz[1, 0] = sinZ

+ Rz[1, 1] = cosZ

+ Rz[2, 2] = 1.0

+

+ R = np.matmul(np.matmul(Rz,Ry),Rx)

+ return R

+

+def rotateSH(SH, R):

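+ SHn = SH.copy() # copy, so the band-1 formulas below read unrotated values and the caller's array is left untouched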

+

+ # 1st order

+ SHn[1] = R[1,1]*SH[1] - R[1,2]*SH[2] + R[1,0]*SH[3]

+ SHn[2] = -R[2,1]*SH[1] + R[2,2]*SH[2] - R[2,0]*SH[3]

+ SHn[3] = R[0,1]*SH[1] - R[0,2]*SH[2] + R[0,0]*SH[3]

+

+ # 2nd order

+ SHn[4:,0] = rotateBand2(SH[4:,0],R)

+ SHn[4:,1] = rotateBand2(SH[4:,1],R)

+ SHn[4:,2] = rotateBand2(SH[4:,2],R)

+

+ return SHn

+

+def rotateBand2(x, R):

+ s_c3 = 0.94617469575

+ s_c4 = -0.31539156525

+ s_c5 = 0.54627421529

+

+ s_c_scale = 1.0/0.91529123286551084

+ s_c_scale_inv = 0.91529123286551084

+

+ s_rc2 = 1.5853309190550713*s_c_scale

+ s_c4_div_c3 = s_c4/s_c3

+ s_c4_div_c3_x2 = (s_c4/s_c3)*2.0

+

+ s_scale_dst2 = s_c3 * s_c_scale_inv

+ s_scale_dst4 = s_c5 * s_c_scale_inv

+

+ sh0 = x[3] + x[4] + x[4] - x[1]

+ sh1 = x[0] + s_rc2*x[2] + x[3] + x[4]

+ sh2 = x[0]

+ sh3 = -x[3]

+ sh4 = -x[1]

+

+ r2x = R[0][0] + R[0][1]

+ r2y = R[1][0] + R[1][1]

+ r2z = R[2][0] + R[2][1]

+

+ r3x = R[0][0] + R[0][2]

+ r3y = R[1][0] + R[1][2]

+ r3z = R[2][0] + R[2][2]

+

+ r4x = R[0][1] + R[0][2]

+ r4y = R[1][1] + R[1][2]

+ r4z = R[2][1] + R[2][2]

+

+ sh0_x = sh0 * R[0][0]

+ sh0_y = sh0 * R[1][0]

+ d0 = sh0_x * R[1][0]

+ d1 = sh0_y * R[2][0]

+ d2 = sh0 * (R[2][0] * R[2][0] + s_c4_div_c3)

+ d3 = sh0_x * R[2][0]

+ d4 = sh0_x * R[0][0] - sh0_y * R[1][0]

+

+ sh1_x = sh1 * R[0][2]

+ sh1_y = sh1 * R[1][2]

+ d0 += sh1_x * R[1][2]

+ d1 += sh1_y * R[2][2]

+ d2 += sh1 * (R[2][2] * R[2][2] + s_c4_div_c3)

+ d3 += sh1_x * R[2][2]

+ d4 += sh1_x * R[0][2] - sh1_y * R[1][2]

+

+ sh2_x = sh2 * r2x

+ sh2_y = sh2 * r2y

+ d0 += sh2_x * r2y

+ d1 += sh2_y * r2z

+ d2 += sh2 * (r2z * r2z + s_c4_div_c3_x2)

+ d3 += sh2_x * r2z

+ d4 += sh2_x * r2x - sh2_y * r2y

+

+ sh3_x = sh3 * r3x

+ sh3_y = sh3 * r3y

+ d0 += sh3_x * r3y

+ d1 += sh3_y * r3z

+ d2 += sh3 * (r3z * r3z + s_c4_div_c3_x2)

+ d3 += sh3_x * r3z

+ d4 += sh3_x * r3x - sh3_y * r3y

+

+ sh4_x = sh4 * r4x

+ sh4_y = sh4 * r4y

+ d0 += sh4_x * r4y

+ d1 += sh4_y * r4z

+ d2 += sh4 * (r4z * r4z + s_c4_div_c3_x2)

+ d3 += sh4_x * r4z

+ d4 += sh4_x * r4x - sh4_y * r4y

+

+ dst = x.copy() # do not overwrite the input coefficients in place

+ dst[0] = d0

+ dst[1] = -d1

+ dst[2] = d2 * s_scale_dst2

+ dst[3] = -d3

+ dst[4] = d4 * s_scale_dst4

+

+ return dst

+

+def render_prt_ortho(out_path, folder_name, subject_name, shs, rndr, rndr_uv, im_size, angl_step=4, n_light=1, pitch=[0]):

+ cam = Camera(width=im_size, height=im_size)

+ cam.ortho_ratio = 0.4 * (512 / im_size)

+ cam.near = -100

+ cam.far = 100

+ cam.sanity_check()

+

+ # set path for obj, prt

+ mesh_file = os.path.join(folder_name, subject_name + '_100k.obj')

+ if not os.path.exists(mesh_file):

+ print('ERROR: obj file does not exist!!', mesh_file)

+ return

+ prt_file = os.path.join(folder_name, 'bounce', 'bounce0.txt')

+ if not os.path.exists(prt_file):

+ print('ERROR: prt file does not exist!!!', prt_file)

+ return

+ face_prt_file = os.path.join(folder_name, 'bounce', 'face.npy')

+ if not os.path.exists(face_prt_file):

+ print('ERROR: face prt file does not exist!!!', face_prt_file)

+ return

+ text_file = os.path.join(folder_name, 'tex', subject_name + '_dif_2k.jpg')

+ if not os.path.exists(text_file):

+ print('ERROR: dif file does not exist!!', text_file)

+ return

+

+ texture_image = cv2.imread(text_file)

+ texture_image = cv2.cvtColor(texture_image, cv2.COLOR_BGR2RGB)

+

+ vertices, faces, normals, faces_normals, textures, face_textures = load_obj_mesh(mesh_file, with_normal=True, with_texture=True)

+ vmin = vertices.min(0)

+ vmax = vertices.max(0)

+ up_axis = 1 if (vmax-vmin).argmax() == 1 else 2

+

+ vmed = np.median(vertices, 0)

+ vmed[up_axis] = 0.5*(vmax[up_axis]+vmin[up_axis])

+ y_scale = 180/(vmax[up_axis] - vmin[up_axis])

+

+ rndr.set_norm_mat(y_scale, vmed)

+ rndr_uv.set_norm_mat(y_scale, vmed)

+

+ tan, bitan = compute_tangent(vertices, faces, normals, textures, face_textures)

+ prt = np.loadtxt(prt_file)

+ face_prt = np.load(face_prt_file)

+ rndr.set_mesh(vertices, faces, normals, faces_normals, textures, face_textures, prt, face_prt, tan, bitan)

+ rndr.set_albedo(texture_image)

+

+ rndr_uv.set_mesh(vertices, faces, normals, faces_normals, textures, face_textures, prt, face_prt, tan, bitan)

+ rndr_uv.set_albedo(texture_image)

+

+ os.makedirs(os.path.join(out_path, 'GEO', 'OBJ', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'PARAM', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'RENDER', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'MASK', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'UV_RENDER', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'UV_MASK', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'UV_POS', subject_name),exist_ok=True)

+ os.makedirs(os.path.join(out_path, 'UV_NORMAL', subject_name),exist_ok=True)

+

+ if not os.path.exists(os.path.join(out_path, 'val.txt')):

+ f = open(os.path.join(out_path, 'val.txt'), 'w')

+ f.close()

+

+ # copy obj file

+ cmd = 'cp %s %s' % (mesh_file, os.path.join(out_path, 'GEO', 'OBJ', subject_name))

+ print(cmd)

+ os.system(cmd)

+

+ for p in pitch:

+ for y in tqdm(range(0, 360, angl_step)):

+ R = np.matmul(make_rotate(math.radians(p), 0, 0), make_rotate(0, math.radians(y), 0))

+ if up_axis == 2:

+ R = np.matmul(R, make_rotate(math.radians(90),0,0))

+

+ rndr.rot_matrix = R

+ rndr_uv.rot_matrix = R

+ rndr.set_camera(cam)

+ rndr_uv.set_camera(cam)

+

+ for j in range(n_light):

+ sh_id = random.randint(0,shs.shape[0]-1)

+ sh = shs[sh_id]

+ sh_angle = 0.2*np.pi*(random.random()-0.5)

+ sh = rotateSH(sh, make_rotate(0, sh_angle, 0).T)

+

+ dic = {'sh': sh, 'ortho_ratio': cam.ortho_ratio, 'scale': y_scale, 'center': vmed, 'R': R}

+

+ rndr.set_sh(sh)

+ rndr.analytic = False

+ rndr.use_inverse_depth = False

+ rndr.display()

+

+ out_all_f = rndr.get_color(0)

+ out_mask = out_all_f[:,:,3]

+ out_all_f = cv2.cvtColor(out_all_f, cv2.COLOR_RGBA2BGR)

+

+ np.save(os.path.join(out_path, 'PARAM', subject_name, '%d_%d_%02d.npy'%(y,p,j)),dic)

+ cv2.imwrite(os.path.join(out_path, 'RENDER', subject_name, '%d_%d_%02d.jpg'%(y,p,j)),255.0*out_all_f)

+ cv2.imwrite(os.path.join(out_path, 'MASK', subject_name, '%d_%d_%02d.png'%(y,p,j)),255.0*out_mask)

+

+ rndr_uv.set_sh(sh)

+ rndr_uv.analytic = False

+ rndr_uv.use_inverse_depth = False

+ rndr_uv.display()

+

+ uv_color = rndr_uv.get_color(0)

+ uv_color = cv2.cvtColor(uv_color, cv2.COLOR_RGBA2BGR)

+ cv2.imwrite(os.path.join(out_path, 'UV_RENDER', subject_name, '%d_%d_%02d.jpg'%(y,p,j)),255.0*uv_color)

+

+ if y == 0 and j == 0 and p == pitch[0]:

+ uv_pos = rndr_uv.get_color(1)

+ uv_mask = uv_pos[:,:,3]

+ cv2.imwrite(os.path.join(out_path, 'UV_MASK', subject_name, '00.png'),255.0*uv_mask)

+

+ data = {'default': uv_pos[:,:,:3]} # default is a reserved name

+ pyexr.write(os.path.join(out_path, 'UV_POS', subject_name, '00.exr'), data)

+

+ uv_nml = rndr_uv.get_color(2)

+ uv_nml = cv2.cvtColor(uv_nml, cv2.COLOR_RGBA2BGR)

+ cv2.imwrite(os.path.join(out_path, 'UV_NORMAL', subject_name, '00.png'),255.0*uv_nml)

+

+

+if __name__ == '__main__':

+ shs = np.load('./env_sh.npy')

+

+ parser = argparse.ArgumentParser()

+ parser.add_argument('-i', '--input', type=str, default='/home/shunsuke/Downloads/rp_dennis_posed_004_OBJ')

+ parser.add_argument('-o', '--out_dir', type=str, default='/home/shunsuke/Documents/hf_human')

+ parser.add_argument('-m', '--ms_rate', type=int, default=1, help='multisampling rate; a higher rate gives less aliased output. The MESA renderer only supports ms_rate=1.')

+ parser.add_argument('-e', '--egl', action='store_true', help='use EGL for headless rendering, e.g. on a server with an NVIDIA GPU and no display')

+ parser.add_argument('-s', '--size', type=int, default=512, help='rendering image size')

+ args = parser.parse_args()

+

+ # NOTE: the GL context has to be created before any other OpenGL module is imported.

+ from lib.renderer.gl.init_gl import initialize_GL_context

+ initialize_GL_context(width=args.size, height=args.size, egl=args.egl)

+

+ from lib.renderer.gl.prt_render import PRTRender

+ rndr = PRTRender(width=args.size, height=args.size, ms_rate=args.ms_rate, egl=args.egl)

+ rndr_uv = PRTRender(width=args.size, height=args.size, uv_mode=True, egl=args.egl)

+

+ if args.input[-1] == '/':

+ args.input = args.input[:-1]

+ subject_name = args.input.split('/')[-1][:-4]

+ render_prt_ortho(args.out_dir, args.input, subject_name, shs, rndr, rndr_uv, args.size, 1, 1, pitch=[0])

\ No newline at end of file
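render_data.py saves `ortho_ratio`, `scale` (y_scale), `center` (vmed) and `R` into each PARAM file, and EvalDataset.get_render turns them into an extrinsic matrix, a pixel-scaling intrinsic and a pixel-to-[-1, 1] intrinsic. The pure-Python sketch below walks one point through those three steps, assuming they are applied in the order extrinsic, then scale_intrinsic, then uv_intrinsic (the composition itself is not part of this excerpt; trans_intrinsic is the identity here and is skipped). `project_point` and `matvec` are hypothetical helpers.

```python
def matvec(m, v):
    """3x3 matrix times 3-vector, with nested lists."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def project_point(p, R, center, scale, ortho_ratio, load_size):
    """Hypothetical helper: map a model-space point to normalized image coordinates,
    where [-1, 1] spans the load_size x load_size image."""
    # extrinsic [R | -R @ center]: rotate, then move `center` to the origin
    rp, rc = matvec(R, p), matvec(R, center)
    cam = [a - b for a, b in zip(rp, rc)]
    # scale_intrinsic: model units -> pixels; y and z are flipped (image y points down)
    s = scale / ortho_ratio
    pix = [s * cam[0], -s * cam[1], -s * cam[2]]
    # uv_intrinsic: pixels -> normalized coordinates
    half = float(load_size // 2)
    return [c / half for c in pix]


# A 1.8-unit-tall subject standing on y = 0, rendered at 512 x 512 with no rotation:
height, im_size = 1.8, 512
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
y_scale = 180 / height                # as in render_prt_ortho
ortho_ratio = 0.4 * (512 / im_size)   # as in render_prt_ortho
top = project_point([0.0, height, 0.0], identity, [0.0, height / 2, 0.0],
                    y_scale, ortho_ratio, im_size)
print(top)  # x is 0, y is close to -225/256 = -0.8789...
```

Because y_scale normalizes every subject to 180 units and ortho_ratio is 0.4 units per pixel at 512 px, the full height always spans 180 / 0.4 = 450 of 512 pixels (the same fraction for other im_size values), whatever the subject's real size.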

diff --git a/PIFu/apps/train_color.py b/PIFu/apps/train_color.py

new file mode 100644

index 0000000000000000000000000000000000000000..3c1aeb9f33ff7ebf95489cef9a3e96e8af7ee3d7

--- /dev/null

+++ b/PIFu/apps/train_color.py

@@ -0,0 +1,191 @@

+import sys

+import os

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..')))

+ROOT_PATH = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

+

+import time

+import json

+import numpy as np

+import cv2

+import random

+import torch

+import torch.nn as nn

+from torch.utils.data import DataLoader

+from tqdm import tqdm

+

+from lib.options import BaseOptions

+from lib.mesh_util import *

+from lib.sample_util import *

+from lib.train_util import *

+from lib.data import *

+from lib.model import *

+from lib.geometry import index

+

+# get options

+opt = BaseOptions().parse()

+

+def train_color(opt):

+ # set cuda

+ cuda = torch.device('cuda:%d' % opt.gpu_id)

+

+ train_dataset = TrainDataset(opt, phase='train')

+ test_dataset = TrainDataset(opt, phase='test')

+

+ projection_mode = train_dataset.projection_mode

+

+ # create data loader

+ train_data_loader = DataLoader(train_dataset,

+ batch_size=opt.batch_size, shuffle=not opt.serial_batches,

+ num_workers=opt.num_threads, pin_memory=opt.pin_memory)

+

+ print('train data size: ', len(train_data_loader))

+

+ # NOTE: batch size should be 1 and use all the points for evaluation

+ test_data_loader = DataLoader(test_dataset,

+ batch_size=1, shuffle=False,

+ num_workers=opt.num_threads, pin_memory=opt.pin_memory)

+ print('test data size: ', len(test_data_loader))

+

+ # create net

+ netG = HGPIFuNet(opt, projection_mode).to(device=cuda)

+

+ lr = opt.learning_rate

+

+ # Always use resnet for color regression

+ netC = ResBlkPIFuNet(opt).to(device=cuda)

+ optimizerC = torch.optim.Adam(netC.parameters(), lr=opt.learning_rate)

+

+ def set_train():

+ netG.eval()

+ netC.train()

+

+ def set_eval():

+ netG.eval()

+ netC.eval()

+

+ print('Using NetworkG: ', netG.name, 'networkC: ', netC.name)

+

+ # load checkpoints

+ if opt.load_netG_checkpoint_path is not None:

+ print('loading for net G ...', opt.load_netG_checkpoint_path)

+ netG.load_state_dict(torch.load(opt.load_netG_checkpoint_path, map_location=cuda))

+ else:

+ model_path_G = '%s/%s/netG_latest' % (opt.checkpoints_path, opt.name)

+ print('loading for net G ...', model_path_G)

+ netG.load_state_dict(torch.load(model_path_G, map_location=cuda))

+

+ if opt.load_netC_checkpoint_path is not None:

+ print('loading for net C ...', opt.load_netC_checkpoint_path)

+ netC.load_state_dict(torch.load(opt.load_netC_checkpoint_path, map_location=cuda))

+

+ if opt.continue_train:

+ if opt.resume_epoch < 0:

+ model_path_C = '%s/%s/netC_latest' % (opt.checkpoints_path, opt.name)

+ else:

+ model_path_C = '%s/%s/netC_epoch_%d' % (opt.checkpoints_path, opt.name, opt.resume_epoch)

+

+ print('Resuming from ', model_path_C)

+ netC.load_state_dict(torch.load(model_path_C, map_location=cuda))

+

+ os.makedirs(opt.checkpoints_path, exist_ok=True)

+ os.makedirs(opt.results_path, exist_ok=True)

+ os.makedirs('%s/%s' % (opt.checkpoints_path, opt.name), exist_ok=True)

+ os.makedirs('%s/%s' % (opt.results_path, opt.name), exist_ok=True)

+

+ opt_log = os.path.join(opt.results_path, opt.name, 'opt.txt')

+ with open(opt_log, 'w') as outfile:

+ outfile.write(json.dumps(vars(opt), indent=2))

+

+ # training

+ start_epoch = 0 if not opt.continue_train else max(opt.resume_epoch,0)

+ for epoch in range(start_epoch, opt.num_epoch):

+ epoch_start_time = time.time()

+

+ set_train()

+ iter_data_time = time.time()

+ for train_idx, train_data in enumerate(train_data_loader):

+ iter_start_time = time.time()

+ # retrieve the data

+ image_tensor = train_data['img'].to(device=cuda)

+ calib_tensor = train_data['calib'].to(device=cuda)

+ color_sample_tensor = train_data['color_samples'].to(device=cuda)

+

+ image_tensor, calib_tensor = reshape_multiview_tensors(image_tensor, calib_tensor)

+

+ if opt.num_views > 1:

+ color_sample_tensor = reshape_sample_tensor(color_sample_tensor, opt.num_views)

+

+ rgb_tensor = train_data['rgbs'].to(device=cuda)

+

+ with torch.no_grad():

+ netG.filter(image_tensor)

+ resC, error = netC.forward(image_tensor, netG.get_im_feat(), color_sample_tensor, calib_tensor, labels=rgb_tensor)

+

+ optimizerC.zero_grad()

+ error.backward()

+ optimizerC.step()

+

+ iter_net_time = time.time()

+ eta = ((iter_net_time - epoch_start_time) / (train_idx + 1)) * len(train_data_loader) - (

+ iter_net_time - epoch_start_time)

+

+ if train_idx % opt.freq_plot == 0:

+ print(

+ 'Name: {0} | Epoch: {1} | {2}/{3} | Err: {4:.06f} | LR: {5:.06f} | dataT: {6:.05f} | netT: {7:.05f} | ETA: {8:02d}:{9:02d}'.format(

+ opt.name, epoch, train_idx, len(train_data_loader),

+ error.item(),

+ lr,

+ iter_start_time - iter_data_time,

+ iter_net_time - iter_start_time, int(eta // 60),

+ int(eta - 60 * (eta // 60))))

+

+ if train_idx % opt.freq_save == 0 and train_idx != 0:

+ torch.save(netC.state_dict(), '%s/%s/netC_latest' % (opt.checkpoints_path, opt.name))

+ torch.save(netC.state_dict(), '%s/%s/netC_epoch_%d' % (opt.checkpoints_path, opt.name, epoch))

+

+ if train_idx % opt.freq_save_ply == 0:

+ save_path = '%s/%s/pred_col.ply' % (opt.results_path, opt.name)

+ rgb = resC[0].transpose(0, 1).cpu() * 0.5 + 0.5

+ points = color_sample_tensor[0].transpose(0, 1).cpu()

+ save_samples_rgb(save_path, points.detach().numpy(), rgb.detach().numpy())

+

+ iter_data_time = time.time()

+

+ #### test

+ with torch.no_grad():

+ set_eval()

+

+ if not opt.no_num_eval:

+ test_losses = {}

+ print('calc error (test) ...')

+ test_color_error = calc_error_color(opt, netG, netC, cuda, test_dataset, 100)

+ print('eval test | color error:', test_color_error)

+ test_losses['test_color'] = test_color_error

+

+ print('calc error (train) ...')

+ train_dataset.is_train = False

+ train_color_error = calc_error_color(opt, netG, netC, cuda, train_dataset, 100)

+ train_dataset.is_train = True

+ print('eval train | color error:', train_color_error)

+ test_losses['train_color'] = train_color_error

+

+ if not opt.no_gen_mesh:

+ print('generate mesh (test) ...')

+ for gen_idx in tqdm(range(opt.num_gen_mesh_test)):

+ test_data = random.choice(test_dataset)

+ save_path = '%s/%s/test_eval_epoch%d_%s.obj' % (

+ opt.results_path, opt.name, epoch, test_data['name'])

+ gen_mesh_color(opt, netG, netC, cuda, test_data, save_path)

+

+ print('generate mesh (train) ...')

+ train_dataset.is_train = False

+ for gen_idx in tqdm(range(opt.num_gen_mesh_test)):

+ train_data = random.choice(train_dataset)

+ save_path = '%s/%s/train_eval_epoch%d_%s.obj' % (

+ opt.results_path, opt.name, epoch, train_data['name'])

+ gen_mesh_color(opt, netG, netC, cuda, train_data, save_path)

+ train_dataset.is_train = True

+

+if __name__ == '__main__':

+ train_color(opt)

\ No newline at end of file
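Both training loops print an ETA computed as (average time per iteration so far) * (iterations per epoch) - (time already spent in the epoch), then split into minutes and seconds. A small sketch of that arithmetic, using a hypothetical helper `eta_mmss`:

```python
def eta_mmss(elapsed, iters_done, iters_total):
    """Hypothetical helper reproducing the ETA arithmetic of the training loops.

    elapsed: seconds since the epoch started (iter_net_time - epoch_start_time)
    iters_done: iterations finished so far (train_idx + 1)
    iters_total: iterations per epoch (len(train_data_loader))
    """
    # average time per iteration, extrapolated to the whole epoch, minus time already spent
    eta = (elapsed / iters_done) * iters_total - elapsed
    minutes = int(eta // 60)
    seconds = int(eta - 60 * (eta // 60))
    return '{0:02d}:{1:02d}'.format(minutes, seconds)


print(eta_mmss(30.0, 10, 100))  # 3 s/iter * 100 - 30 s = 270 s -> '04:30'
```

The format has no hour field, so epochs longer than an hour print minutes above 59 (e.g. '120:00').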

diff --git a/PIFu/apps/train_shape.py b/PIFu/apps/train_shape.py

new file mode 100644

index 0000000000000000000000000000000000000000..241ce543c956ce51f6f8445739ef41f4ddf7a7d5

--- /dev/null

+++ b/PIFu/apps/train_shape.py

@@ -0,0 +1,183 @@

+import sys

+import os

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..')))

+ROOT_PATH = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

+

+import time

+import json

+import numpy as np

+import cv2

+import random

+import torch

+from torch.utils.data import DataLoader

+from tqdm import tqdm

+

+from lib.options import BaseOptions

+from lib.mesh_util import *

+from lib.sample_util import *

+from lib.train_util import *

+from lib.data import *

+from lib.model import *

+from lib.geometry import index

+

+# get options

+opt = BaseOptions().parse()

+

+def train(opt):

+ # set cuda

+ cuda = torch.device('cuda:%d' % opt.gpu_id)

+

+ train_dataset = TrainDataset(opt, phase='train')

+ test_dataset = TrainDataset(opt, phase='test')

+

+ projection_mode = train_dataset.projection_mode

+

+ # create data loader

+ train_data_loader = DataLoader(train_dataset,

+ batch_size=opt.batch_size, shuffle=not opt.serial_batches,

+ num_workers=opt.num_threads, pin_memory=opt.pin_memory)

+

+ print('train data size: ', len(train_data_loader))

+

+ # NOTE: batch size should be 1 and use all the points for evaluation

+ test_data_loader = DataLoader(test_dataset,

+ batch_size=1, shuffle=False,

+ num_workers=opt.num_threads, pin_memory=opt.pin_memory)

+ print('test data size: ', len(test_data_loader))

+

+ # create net

+ netG = HGPIFuNet(opt, projection_mode).to(device=cuda)

+ optimizerG = torch.optim.RMSprop(netG.parameters(), lr=opt.learning_rate, momentum=0, weight_decay=0)

+ lr = opt.learning_rate

+ print('Using Network: ', netG.name)

+

+ def set_train():

+ netG.train()

+

+ def set_eval():

+ netG.eval()

+

+ # load checkpoints

+ if opt.load_netG_checkpoint_path is not None:

+ print('loading for net G ...', opt.load_netG_checkpoint_path)

+ netG.load_state_dict(torch.load(opt.load_netG_checkpoint_path, map_location=cuda))

+

+ if opt.continue_train:

+ if opt.resume_epoch < 0:

+ model_path = '%s/%s/netG_latest' % (opt.checkpoints_path, opt.name)

+ else:

+ model_path = '%s/%s/netG_epoch_%d' % (opt.checkpoints_path, opt.name, opt.resume_epoch)

+ print('Resuming from ', model_path)

+ netG.load_state_dict(torch.load(model_path, map_location=cuda))

+

+ os.makedirs(opt.checkpoints_path, exist_ok=True)

+ os.makedirs(opt.results_path, exist_ok=True)

+ os.makedirs('%s/%s' % (opt.checkpoints_path, opt.name), exist_ok=True)

+ os.makedirs('%s/%s' % (opt.results_path, opt.name), exist_ok=True)

+

+ opt_log = os.path.join(opt.results_path, opt.name, 'opt.txt')

+ with open(opt_log, 'w') as outfile:

+ outfile.write(json.dumps(vars(opt), indent=2))

+

+ # training

+ start_epoch = 0 if not opt.continue_train else max(opt.resume_epoch,0)

+ for epoch in range(start_epoch, opt.num_epoch):

+ epoch_start_time = time.time()

+

+ set_train()

+ iter_data_time = time.time()

+ for train_idx, train_data in enumerate(train_data_loader):

+ iter_start_time = time.time()

+

+ # retrieve the data

+ image_tensor = train_data['img'].to(device=cuda)

+ calib_tensor = train_data['calib'].to(device=cuda)

+ sample_tensor = train_data['samples'].to(device=cuda)

+

+ image_tensor, calib_tensor = reshape_multiview_tensors(image_tensor, calib_tensor)

+

+ if opt.num_views > 1:

+ sample_tensor = reshape_sample_tensor(sample_tensor, opt.num_views)

+

+ label_tensor = train_data['labels'].to(device=cuda)

+

+ res, error = netG.forward(image_tensor, sample_tensor, calib_tensor, labels=label_tensor)

+

+ optimizerG.zero_grad()

+ error.backward()

+ optimizerG.step()

+

+ iter_net_time = time.time()

+ eta = ((iter_net_time - epoch_start_time) / (train_idx + 1)) * len(train_data_loader) - (

+ iter_net_time - epoch_start_time)

+

+ if train_idx % opt.freq_plot == 0:

+ print(

+ 'Name: {0} | Epoch: {1} | {2}/{3} | Err: {4:.06f} | LR: {5:.06f} | Sigma: {6:.02f} | dataT: {7:.05f} | netT: {8:.05f} | ETA: {9:02d}:{10:02d}'.format(

+ opt.name, epoch, train_idx, len(train_data_loader), error.item(), lr, opt.sigma,

+ iter_start_time - iter_data_time,

+ iter_net_time - iter_start_time, int(eta // 60),

+ int(eta - 60 * (eta // 60))))

+

+ if train_idx % opt.freq_save == 0 and train_idx != 0:

+ torch.save(netG.state_dict(), '%s/%s/netG_latest' % (opt.checkpoints_path, opt.name))

+ torch.save(netG.state_dict(), '%s/%s/netG_epoch_%d' % (opt.checkpoints_path, opt.name, epoch))

+

+ if train_idx % opt.freq_save_ply == 0:

+ save_path = '%s/%s/pred.ply' % (opt.results_path, opt.name)

+ r = res[0].cpu()

+ points = sample_tensor[0].transpose(0, 1).cpu()

+ save_samples_truncted_prob(save_path, points.detach().numpy(), r.detach().numpy())

+

+ iter_data_time = time.time()

+

+ # update learning rate

+ lr = adjust_learning_rate(optimizerG, epoch, lr, opt.schedule, opt.gamma)

+

+ #### test

+ with torch.no_grad():

+ set_eval()

+

+ if not opt.no_num_eval:

+ test_losses = {}

+ print('calc error (test) ...')

+ test_errors = calc_error(opt, netG, cuda, test_dataset, 100)

+ print('eval test MSE: {0:06f} IOU: {1:06f} prec: {2:06f} recall: {3:06f}'.format(*test_errors))

+ MSE, IOU, prec, recall = test_errors

+ test_losses['MSE(test)'] = MSE

+ test_losses['IOU(test)'] = IOU

+ test_losses['prec(test)'] = prec

+ test_losses['recall(test)'] = recall

+

+ print('calc error (train) ...')

+ train_dataset.is_train = False

+ train_errors = calc_error(opt, netG, cuda, train_dataset, 100)

+ train_dataset.is_train = True

+ print('eval train MSE: {0:06f} IOU: {1:06f} prec: {2:06f} recall: {3:06f}'.format(*train_errors))

+ MSE, IOU, prec, recall = train_errors

+ test_losses['MSE(train)'] = MSE

+ test_losses['IOU(train)'] = IOU

+ test_losses['prec(train)'] = prec

+ test_losses['recall(train)'] = recall

+

+ if not opt.no_gen_mesh:

+ print('generate mesh (test) ...')

+ for gen_idx in tqdm(range(opt.num_gen_mesh_test)):

+ test_data = random.choice(test_dataset)

+ save_path = '%s/%s/test_eval_epoch%d_%s.obj' % (

+ opt.results_path, opt.name, epoch, test_data['name'])

+ gen_mesh(opt, netG, cuda, test_data, save_path)

+

+ print('generate mesh (train) ...')

+ train_dataset.is_train = False

+ for gen_idx in tqdm(range(opt.num_gen_mesh_test)):

+ train_data = random.choice(train_dataset)

+ save_path = '%s/%s/train_eval_epoch%d_%s.obj' % (

+ opt.results_path, opt.name, epoch, train_data['name'])

+ gen_mesh(opt, netG, cuda, train_data, save_path)

+ train_dataset.is_train = True

+

+

+if __name__ == '__main__':

+ train(opt)

\ No newline at end of file
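train_shape.py unpacks `calc_error` into MSE, IoU, precision and recall. `calc_error` lives in lib/train_util.py, which is not part of this excerpt, so the sketch below is only an assumption of how such metrics are commonly computed for occupancy predictions: threshold predictions and labels at 0.5, then count true positives, false positives and false negatives. The helper name `occupancy_metrics` and the handling of empty denominators are hypothetical.

```python
def occupancy_metrics(pred, label, thresh=0.5):
    """Hypothetical helper: MSE, IoU, precision and recall of occupancy
    predictions in [0, 1] against 0/1 labels (both flat sequences)."""
    n = len(pred)
    mse = sum((p - g) ** 2 for p, g in zip(pred, label)) / n
    p_in = [p > thresh for p in pred]   # predicted inside
    g_in = [g > thresh for g in label]  # ground-truth inside
    tp = sum(a and b for a, b in zip(p_in, g_in))
    fp = sum(a and not b for a, b in zip(p_in, g_in))
    fn = sum(b and not a for a, b in zip(p_in, g_in))
    # Empty denominators are reported as 1.0 here; this choice is an assumption.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    prec = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return mse, iou, prec, recall


print(occupancy_metrics([0.9, 0.8, 0.2, 0.6], [1, 1, 0, 0]))  # IoU = precision = 2/3, recall = 1.0
```

The return order matches the `MSE, IOU, prec, recall = test_errors` unpacking above.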

diff --git a/PIFu/env_sh.npy b/PIFu/env_sh.npy

new file mode 100755

index 0000000000000000000000000000000000000000..6841e757f9035f97d392925e09a5e202a45a7701

Binary files /dev/null and b/PIFu/env_sh.npy differ

diff --git a/PIFu/environment.yml b/PIFu/environment.yml

new file mode 100755

index 0000000000000000000000000000000000000000..80b2e05ef59c6f5377c70af584837e495c9cf690

--- /dev/null

+++ b/PIFu/environment.yml

@@ -0,0 +1,19 @@

+name: PIFu

+channels:

+- pytorch

+- defaults

+dependencies:

+- opencv

+- pytorch

+- pyexr

+- pillow

+- scikit-image

+- tqdm

+- pyembree

+- shapely

+- rtree

+- xxhash

+- trimesh

+- PyOpenGL

\ No newline at end of file

diff --git a/PIFu/lib/__init__.py b/PIFu/lib/__init__.py

new file mode 100755

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/PIFu/lib/colab_util.py b/PIFu/lib/colab_util.py

new file mode 100644

index 0000000000000000000000000000000000000000..608227b228647e7b1bc16676fadf22d68e381f57

--- /dev/null

+++ b/PIFu/lib/colab_util.py

@@ -0,0 +1,114 @@

+import io

+import os

+import torch

+from skimage.io import imread

+import numpy as np

+import cv2

+from tqdm import tqdm_notebook as tqdm

+import base64

+from IPython.display import HTML

+

+# Util function for loading meshes

+from pytorch3d.io import load_objs_as_meshes

+

+from base64 import b64encode

+

+# Data structures and functions for rendering

+from pytorch3d.structures import Meshes

+from pytorch3d.renderer import (

+ look_at_view_transform,

+ OpenGLOrthographicCameras,

+ PointLights,

+ DirectionalLights,

+ Materials,

+ RasterizationSettings,

+ MeshRenderer,

+ MeshRasterizer,

+ SoftPhongShader,

+ HardPhongShader,

+ TexturesVertex

+)

+

+def set_renderer():

+ # Setup

+ device = torch.device("cuda:0")

+ torch.cuda.set_device(device)

+

+ # Initialize an OpenGL orthographic camera.

+ R, T = look_at_view_transform(2.0, 0, 180)

+ cameras = OpenGLOrthographicCameras(device=device, R=R, T=T)

+

+ raster_settings = RasterizationSettings(

+ image_size=512,

+ blur_radius=0.0,

+ faces_per_pixel=1,

+ bin_size = None,

+ max_faces_per_bin = None

+ )

+

+ lights = PointLights(device=device, location=((2.0, 2.0, 2.0),))

+

+ renderer = MeshRenderer(

+ rasterizer=MeshRasterizer(

+ cameras=cameras,

+ raster_settings=raster_settings

+ ),

+ shader=HardPhongShader(

+ device=device,

+ cameras=cameras,

+ lights=lights

+ )

+ )

+ return renderer

+

+def get_verts_rgb_colors(obj_path):

+ rgb_colors = []

+

+ with open(obj_path) as f:

+ lines = f.readlines()

+ for line in lines:

+ ls = line.split()

+ # vertex lines with colors have the form 'v x y z r g b'

+ if len(ls) == 7 and ls[0] == 'v':

+ rgb_colors.append(ls[-3:])

+

+ return np.array(rgb_colors, dtype='float32')[None, :, :]

+

+def generate_video_from_obj(obj_path, video_path, renderer):

+ # Setup

+ device = torch.device("cuda:0")

+ torch.cuda.set_device(device)

+

+ # Load obj file

+ verts_rgb_colors = get_verts_rgb_colors(obj_path)

+ verts_rgb_colors = torch.from_numpy(verts_rgb_colors).to(device)

+ textures = TexturesVertex(verts_features=verts_rgb_colors)

+ wo_textures = TexturesVertex(verts_features=torch.ones_like(verts_rgb_colors)*0.75)

+

+ # Load obj

+ mesh = load_objs_as_meshes([obj_path], device=device)

+

+ # Set mesh

+ vers = mesh._verts_list

+ faces = mesh._faces_list

+ mesh_w_tex = Meshes(vers, faces, textures)

+ mesh_wo_tex = Meshes(vers, faces, wo_textures)

+

+ # create VideoWriter

+ fourcc = cv2.VideoWriter_fourcc(*'MP4V')

+ out = cv2.VideoWriter(video_path, fourcc, 20.0, (1024,512))

+

+ for i in tqdm(range(90)):

+ R, T = look_at_view_transform(1.8, 0, i*4, device=device)

+ images_w_tex = renderer(mesh_w_tex, R=R, T=T)

+ images_w_tex = np.clip(images_w_tex[0, ..., :3].cpu().numpy(), 0.0, 1.0)[:, :, ::-1] * 255

+ images_wo_tex = renderer(mesh_wo_tex, R=R, T=T)

+ images_wo_tex = np.clip(images_wo_tex[0, ..., :3].cpu().numpy(), 0.0, 1.0)[:, :, ::-1] * 255

+ image = np.concatenate([images_w_tex, images_wo_tex], axis=1)

+ out.write(image.astype('uint8'))

+ out.release()

+

+def video(path):

+ with open(path, 'rb') as f:

+ mp4 = f.read()

+ data_url = "data:video/mp4;base64," + b64encode(mp4).decode()

+ return HTML('<video controls><source src="%s" type="video/mp4"></video>' % data_url)
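get_verts_rgb_colors keeps the last three fields of every 'v x y z r g b' line. A stdlib-only sketch of the same parsing, assuming whitespace-separated OBJ vertex lines; `read_vertex_colors` is a hypothetical name, and unlike the original it returns nested Python lists rather than a [1, V, 3] float32 array:

```python
import io


def read_vertex_colors(stream):
    """Hypothetical helper: collect [r, g, b] from every 'v x y z r g b' line."""
    colors = []
    for line in stream:
        fields = line.split()  # no argument: tolerates repeated spaces and the trailing newline
        if len(fields) == 7 and fields[0] == 'v':
            colors.append([float(c) for c in fields[4:]])
    return colors


obj_text = "v 0 0 0 1.0 0.5 0.0\nv 1  0 0 0.0 0.0 1.0\nvn 0 0 1\nf 1 2 1\n"
print(read_vertex_colors(io.StringIO(obj_text)))  # [[1.0, 0.5, 0.0], [0.0, 0.0, 1.0]]
```

Splitting on a single space instead (`split(' ')`) would turn the double space in the second vertex line into an empty field, giving 8 fields and silently dropping that color.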

diff --git a/PIFu/lib/data/BaseDataset.py b/PIFu/lib/data/BaseDataset.py

new file mode 100755

index 0000000000000000000000000000000000000000..2d3e842341ecd51514ac96ce51a13fcaa12d1733

--- /dev/null

+++ b/PIFu/lib/data/BaseDataset.py

@@ -0,0 +1,46 @@

+from torch.utils.data import Dataset

+import random

+

+

+class BaseDataset(Dataset):

+ '''

+ This is the base dataset class.

+ It does nothing by itself and is not meant to be used directly.

+ See get_item for the structure each sample should return.

+ '''

+

+ @staticmethod

+ def modify_commandline_options(parser, is_train):

+ return parser

+

+ def __init__(self, opt, phase='train'):

+ self.opt = opt

+ self.phase = phase

+ self.is_train = self.phase == 'train'

+ self.projection_mode = 'orthogonal' # Declare projection mode here

+

+ def __len__(self):

+ return 0

+

+ def get_item(self, index):

+ # In case of a missing file or IO error, switch to a random sample instead

+ try:

+ res = {

+ 'name': None, # name of this subject

+ 'b_min': None, # Bounding box (x_min, y_min, z_min) of target space

+ 'b_max': None, # Bounding box (x_max, y_max, z_max) of target space

+

+ 'samples': None, # [3, N] samples

+ 'labels': None, # [1, N] labels

+

+ 'img': None, # [num_views, C, H, W] input images

+ 'calib': None, # [num_views, 4, 4] calibration matrix

+ 'extrinsic': None, # [num_views, 4, 4] extrinsic matrix

+ 'mask': None, # [num_views, 1, H, W] segmentation masks

+ }

+ return res

+ except Exception:

+ print("Requested index %s has missing files. Using a random sample instead." % index)

+ return self.get_item(index=random.randint(0, self.__len__() - 1))

+

+ def __getitem__(self, index):

+ return self.get_item(index)
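BaseDataset.get_item falls back to a random index when a sample cannot be loaded by calling itself recursively under a bare `except`. If many files are missing, that recursion has no bound, and a bare `except` also hides programming errors. The following is a hypothetical variant (`RetryingDataset`, plain Python rather than a torch Dataset) that retries a fixed number of times and only catches I/O-style errors:

```python
import random


class RetryingDataset:
    """Hypothetical sketch: fall back to a random sample when loading fails,
    but give up after a fixed number of attempts."""

    def __init__(self, items, max_retries=20, seed=0):
        self.items = items  # None marks a sample whose files are missing
        self.max_retries = max_retries
        self.rng = random.Random(seed)

    def __len__(self):
        return len(self.items)

    def load(self, index):
        item = self.items[index]
        if item is None:
            raise FileNotFoundError('missing files for index %d' % index)
        return item

    def __getitem__(self, index):
        for _ in range(self.max_retries):
            try:
                return self.load(index)
            except (OSError, ValueError):
                print('Requested index %s has missing files. Using a random sample instead.' % index)
                index = self.rng.randint(0, len(self) - 1)
        raise RuntimeError('no loadable sample after %d attempts' % self.max_retries)


ds = RetryingDataset(['a', None, 'c'])
print(ds[0], ds[1])  # ds[1] falls back to 'a' or 'c'
```

FileNotFoundError is a subclass of OSError, so missing files are caught while, for example, a KeyError from a bug in `load` still propagates.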

diff --git a/PIFu/lib/data/EvalDataset.py b/PIFu/lib/data/EvalDataset.py

new file mode 100755

index 0000000000000000000000000000000000000000..ad42b46459aa099ed48780b5cff0cb9099f82b71

--- /dev/null

+++ b/PIFu/lib/data/EvalDataset.py

@@ -0,0 +1,166 @@

+from torch.utils.data import Dataset

+import numpy as np

+import os

+import random

+import torchvision.transforms as transforms

+from PIL import Image, ImageOps

+import cv2

+import torch

+from PIL.ImageFilter import GaussianBlur

+import trimesh

+

+

+class EvalDataset(Dataset):

+ @staticmethod

+ def modify_commandline_options(parser):

+ return parser

+

+ def __init__(self, opt, root=None):

+ self.opt = opt

+ self.projection_mode = 'orthogonal'

+

+ # Path setup

+ self.root = self.opt.dataroot

+ if root is not None:

+ self.root = root

+ self.RENDER = os.path.join(self.root, 'RENDER')

+ self.MASK = os.path.join(self.root, 'MASK')

+ self.PARAM = os.path.join(self.root, 'PARAM')

+ self.OBJ = os.path.join(self.root, 'GEO', 'OBJ')

+

+ self.phase = 'val'

+ self.load_size = self.opt.loadSize

+

+ self.num_views = self.opt.num_views

+

+ self.max_view_angle = 360

+ self.interval = 1

+ self.subjects = self.get_subjects()

+

+ # PIL to tensor

+ self.to_tensor = transforms.Compose([

+ transforms.Resize(self.load_size),

+ transforms.ToTensor(),

+ transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

+ ])

+

+ def get_subjects(self):

+ val_file = os.path.join(self.root, 'val.txt')

+ if os.path.exists(val_file):

+ val_subjects = np.loadtxt(val_file, dtype=str)

+ return sorted(np.atleast_1d(val_subjects).tolist())

+ all_subjects = os.listdir(self.RENDER)

+ return sorted(list(all_subjects))

+

+ def __len__(self):

+ return len(self.subjects) * self.max_view_angle // self.interval

+

+ def get_render(self, subject, num_views, view_id=None, random_sample=False):

+ '''

+ Return the render data

+ :param subject: subject name

+ :param num_views: how many views to return

+ :param view_id: the first view_id. If None, select a random one.

+ :return:

+ 'img': [num_views, C, H, W] images

+ 'calib': [num_views, 4, 4] calibration matrix

+ 'extrinsic': [num_views, 4, 4] extrinsic matrix

+ 'mask': [num_views, 1, H, W] masks

+ '''

+ # For now we only have pitch = 00. Hard code it here

+ pitch = 0

+ # Select a random view_id from self.max_view_angle if not given

+ if view_id is None:

+ view_id = np.random.randint(self.max_view_angle)

+ # The ids are an even distribution of num_views around view_id

+ view_ids = [(view_id + self.max_view_angle // num_views * offset) % self.max_view_angle

+ for offset in range(num_views)]

+ if random_sample:

+ view_ids = np.random.choice(self.max_view_angle, num_views, replace=False)

+

+ calib_list = []

+ render_list = []

+ mask_list = []

+ extrinsic_list = []

+

+ for vid in view_ids:

+ param_path = os.path.join(self.PARAM, subject, '%d_%02d.npy' % (vid, pitch))

+ render_path = os.path.join(self.RENDER, subject, '%d_%02d.jpg' % (vid, pitch))

+ mask_path = os.path.join(self.MASK, subject, '%d_%02d.png' % (vid, pitch))

+

+ # loading calibration data

+ param = np.load(param_path)

+ # pixel unit / world unit

+ ortho_ratio = param.item().get('ortho_ratio')

+ # world unit / model unit

+ scale = param.item().get('scale')

+ # camera center world coordinate

+ center = param.item().get('center')

+ # model rotation

+ R = param.item().get('R')

+

+ translate = -np.matmul(R, center).reshape(3, 1)

+ extrinsic = np.concatenate([R, translate], axis=1)

+ extrinsic = np.concatenate([extrinsic, np.array([0, 0, 0, 1]).reshape(1, 4)], 0)

+ # Match camera space to image pixel space

+ scale_intrinsic = np.identity(4)

+ scale_intrinsic[0, 0] = scale / ortho_ratio

+ scale_intrinsic[1, 1] = -scale / ortho_ratio

+ scale_intrinsic[2, 2] = -scale / ortho_ratio

+ # Match image pixel space to image uv space

+ uv_intrinsic = np.identity(4)

+ uv_intrinsic[0, 0] = 1.0 / float(self.opt.loadSize // 2)

+ uv_intrinsic[1, 1] = 1.0 / float(self.opt.loadSize // 2)

+ uv_intrinsic[2, 2] = 1.0 / float(self.opt.loadSize // 2)

+ # Transform under image pixel space

+ trans_intrinsic = np.identity(4)

+

+ mask = Image.open(mask_path).convert('L')

+ render = Image.open(render_path).convert('RGB')

+

+ intrinsic = np.matmul(trans_intrinsic, np.matmul(uv_intrinsic, scale_intrinsic))

+ calib = torch.Tensor(np.matmul(intrinsic, extrinsic)).float()

+ extrinsic = torch.Tensor(extrinsic).float()

+

+ mask = transforms.Resize(self.load_size)(mask)

+ mask = transforms.ToTensor()(mask).float()

+ mask_list.append(mask)

+

+ render = self.to_tensor(render)

+ render = mask.expand_as(render) * render

+

+ render_list.append(render)

+ calib_list.append(calib)

+ extrinsic_list.append(extrinsic)

+

+ return {

+ 'img': torch.stack(render_list, dim=0),

+ 'calib': torch.stack(calib_list, dim=0),

+ 'extrinsic': torch.stack(extrinsic_list, dim=0),

+ 'mask': torch.stack(mask_list, dim=0)

+ }

+

+    def get_item(self, index):
+        # In case of a missing file or IO error, switch to a random sample instead
+        try:
+            sid = index % len(self.subjects)
+            vid = (index // len(self.subjects)) * self.interval
+            # name of the subject 'rp_xxxx_xxx'
+            subject = self.subjects[sid]
+            res = {
+                'name': subject,
+                'mesh_path': os.path.join(self.OBJ, subject + '.obj'),
+                'sid': sid,
+                'vid': vid,
+            }
+            render_data = self.get_render(subject, num_views=self.num_views, view_id=vid,
+                                          random_sample=self.opt.random_multiview)
+            res.update(render_data)
+            return res
+        except Exception as e:
+            print(e)
+            return self.get_item(index=random.randint(0, self.__len__() - 1))
+
+    def __getitem__(self, index):
+        return self.get_item(index)
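The calibration matrix assembled in `get_render` can be reproduced outside the dataset for sanity checks, together with its evenly spaced view-id rule. A minimal NumPy sketch, assuming `center` is a 3-vector and the rendered image is square; `ortho_calib` and `evenly_spaced_view_ids` are hypothetical helper names, not part of PIFu, and the identity `trans_intrinsic` is left out because it does not change the product:

```python
import numpy as np


def evenly_spaced_view_ids(view_id, num_views, max_view_angle=360):
    # Same spacing rule as get_render: step = max_view_angle // num_views,
    # wrapped around modulo max_view_angle.
    step = max_view_angle // num_views
    return [(view_id + step * k) % max_view_angle for k in range(num_views)]


def ortho_calib(R, center, scale, ortho_ratio, load_size):
    """Hypothetical helper mirroring get_render's calib = intrinsic @ extrinsic.

    R: (3, 3) model rotation; center: camera center in world coordinates
    (assumed to be a 3-vector); scale: world unit / model unit;
    ortho_ratio: pixel unit / world unit; load_size: image side in pixels.
    """
    R = np.asarray(R, dtype=np.float64)
    center = np.asarray(center, dtype=np.float64).reshape(3)

    # Extrinsic [R | -R c], padded to 4x4 with a [0, 0, 0, 1] row.
    extrinsic = np.identity(4)
    extrinsic[:3, :3] = R
    extrinsic[:3, 3] = -R @ center

    # Camera space -> pixel space, flipping y and z as get_render does.
    s = scale / ortho_ratio
    scale_intrinsic = np.diag([s, -s, -s, 1.0])

    # Pixel space -> uv space: divide by half the image size.
    half = float(load_size // 2)
    uv_intrinsic = np.diag([1.0 / half, 1.0 / half, 1.0 / half, 1.0])

    return uv_intrinsic @ scale_intrinsic @ extrinsic


if __name__ == "__main__":
    print(evenly_spaced_view_ids(350, 4))
    calib = ortho_calib(np.identity(3), [0.0, 0.0, 0.0], 1.0, 0.5, 512)
    print(calib @ np.array([64.0, 32.0, 0.0, 1.0]))
```

Dividing by `load_size // 2` means a point at the image border lands at uv = ±1, assuming pixel coordinates are measured from the image center; the y and z sign flips come straight from `scale_intrinsic` in the loader.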

diff --git a/PIFu/lib/data/TrainDataset.py b/PIFu/lib/data/TrainDataset.py

new file mode 100644

index 0000000000000000000000000000000000000000..47a639bc644ba7a26e0f2799ffb5f170eed93318

--- /dev/null

+++ b/PIFu/lib/data/TrainDataset.py

@@ -0,0 +1,390 @@

+from torch.utils.data import Dataset
+import numpy as np
+import os
+import random
+import torchvision.transforms as transforms
+from PIL import Image, ImageOps
+import cv2
+import torch
+from PIL.ImageFilter import GaussianBlur
+import trimesh
+import logging
+
+log = logging.getLogger('trimesh')
+log.setLevel(logging.ERROR)  # silence trimesh messages below ERROR (level 40)
+
+def load_trimesh(root_dir):
+    folders = os.listdir(root_dir)
+    meshs = {}
+    for i, f in enumerate(folders):
+        sub_name = f
+        meshs[sub_name] = trimesh.load(os.path.join(root_dir, f, '%s_100k.obj' % sub_name))
+
+    return meshs
+
+def save_samples_truncted_prob(fname, points, prob):
+    '''
+    Save a visualization of the sampled points to an ASCII PLY file.
+    Red points represent positive predictions (prob > 0.5).
+    Green points represent negative predictions (prob < 0.5); prob exactly 0.5 is black.
+    :param fname: File name to save
+    :param points: [N, 3] array of points
+    :param prob: [N, 1] array of predictions in the range [0, 1]
+    :return:
+    '''
+    r = (prob > 0.5).reshape([-1, 1]) * 255
+    g = (prob < 0.5).reshape([-1, 1]) * 255
+    b = np.zeros(r.shape)
+
+    to_save = np.concatenate([points, r, g, b], axis=-1)
+    return np.savetxt(fname,
+                      to_save,
+                      fmt='%.6f %.6f %.6f %d %d %d',
+                      comments='',
+                      header=(
+                          'ply\nformat ascii 1.0\nelement vertex {:d}\nproperty float x\nproperty float y\nproperty float z\nproperty uchar red\nproperty uchar green\nproperty uchar blue\nend_header').format(
+                          points.shape[0])
+                      )
+
+