+

+🐸TTS is a library for advanced Text-to-Speech generation. It's built on the latest research, was designed to achieve the best trade-off among ease-of-training, speed and quality.

+🐸TTS comes with pretrained models, tools for measuring dataset quality and already used in **20+ languages** for products and research projects.

+

+[](https://gitter.im/coqui-ai/TTS?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+[](https://gitter.im/coqui-ai/TTS?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+[ +

+## 💬 Where to ask questions

+Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly so that more people can benefit from it.

+

+| Type | Platforms |

+| ------------------------------- | --------------------------------------- |

+| 🚨 **Bug Reports** | [GitHub Issue Tracker] |

+| 🎁 **Feature Requests & Ideas** | [GitHub Issue Tracker] |

+| 👩💻 **Usage Questions** | [Github Discussions] |

+| 🗯 **General Discussion** | [Github Discussions] or [Gitter Room] |

+

+[github issue tracker]: https://github.com/coqui-ai/tts/issues

+[github discussions]: https://github.com/coqui-ai/TTS/discussions

+[gitter room]: https://gitter.im/coqui-ai/TTS?utm_source=share-link&utm_medium=link&utm_campaign=share-link

+[Tutorials and Examples]: https://github.com/coqui-ai/TTS/wiki/TTS-Notebooks-and-Tutorials

+

+

+## 🔗 Links and Resources

+| Type | Links |

+| ------------------------------- | --------------------------------------- |

+| 💼 **Documentation** | [ReadTheDocs](https://tts.readthedocs.io/en/latest/)

+| 💾 **Installation** | [TTS/README.md](https://github.com/coqui-ai/TTS/tree/dev#install-tts)|

+| 👩💻 **Contributing** | [CONTRIBUTING.md](https://github.com/coqui-ai/TTS/blob/main/CONTRIBUTING.md)|

+| 📌 **Road Map** | [Main Development Plans](https://github.com/coqui-ai/TTS/issues/378)

+| 🚀 **Released Models** | [TTS Releases](https://github.com/coqui-ai/TTS/releases) and [Experimental Models](https://github.com/coqui-ai/TTS/wiki/Experimental-Released-Models)|

+

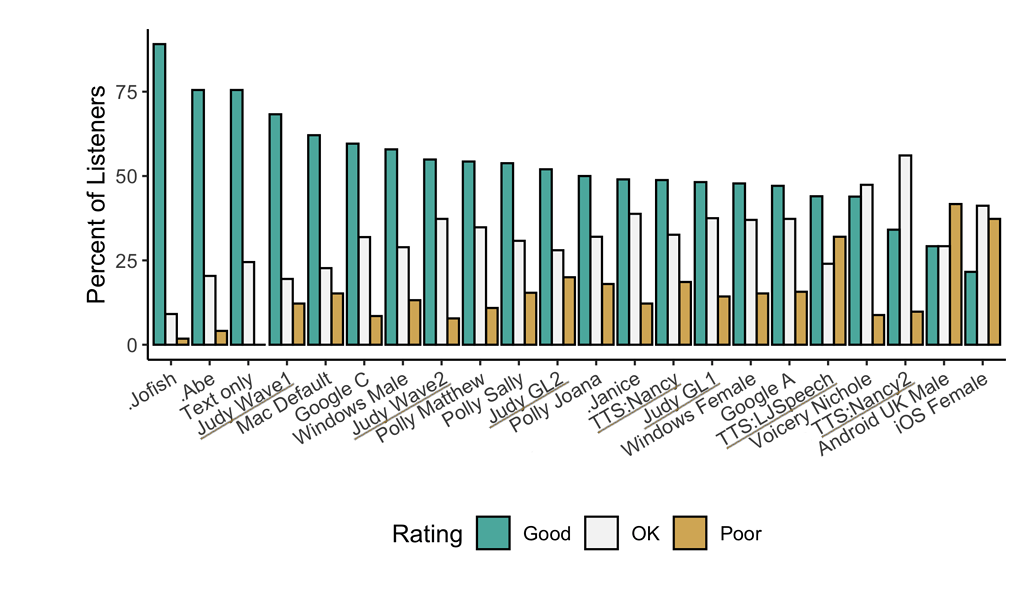

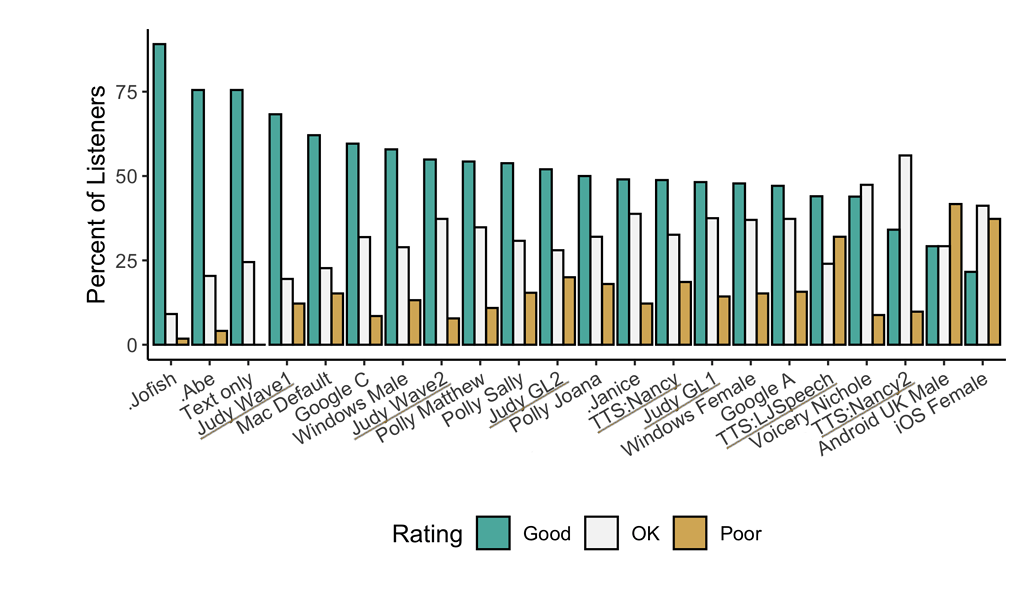

+## 🥇 TTS Performance

+

+

+## 💬 Where to ask questions

+Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly so that more people can benefit from it.

+

+| Type | Platforms |

+| ------------------------------- | --------------------------------------- |

+| 🚨 **Bug Reports** | [GitHub Issue Tracker] |

+| 🎁 **Feature Requests & Ideas** | [GitHub Issue Tracker] |

+| 👩💻 **Usage Questions** | [Github Discussions] |

+| 🗯 **General Discussion** | [Github Discussions] or [Gitter Room] |

+

+[github issue tracker]: https://github.com/coqui-ai/tts/issues

+[github discussions]: https://github.com/coqui-ai/TTS/discussions

+[gitter room]: https://gitter.im/coqui-ai/TTS?utm_source=share-link&utm_medium=link&utm_campaign=share-link

+[Tutorials and Examples]: https://github.com/coqui-ai/TTS/wiki/TTS-Notebooks-and-Tutorials

+

+

+## 🔗 Links and Resources

+| Type | Links |

+| ------------------------------- | --------------------------------------- |

+| 💼 **Documentation** | [ReadTheDocs](https://tts.readthedocs.io/en/latest/)

+| 💾 **Installation** | [TTS/README.md](https://github.com/coqui-ai/TTS/tree/dev#install-tts)|

+| 👩💻 **Contributing** | [CONTRIBUTING.md](https://github.com/coqui-ai/TTS/blob/main/CONTRIBUTING.md)|

+| 📌 **Road Map** | [Main Development Plans](https://github.com/coqui-ai/TTS/issues/378)

+| 🚀 **Released Models** | [TTS Releases](https://github.com/coqui-ai/TTS/releases) and [Experimental Models](https://github.com/coqui-ai/TTS/wiki/Experimental-Released-Models)|

+

+## 🥇 TTS Performance

+

+

+🐸TTS is a library for advanced Text-to-Speech generation. It's built on the latest research, was designed to achieve the best trade-off among ease-of-training, speed and quality.

+🐸TTS comes with pretrained models, tools for measuring dataset quality and already used in **20+ languages** for products and research projects.

+

+[](https://gitter.im/coqui-ai/TTS?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+[](https://gitter.im/coqui-ai/TTS?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+[ +

+## 💬 Where to ask questions

+Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly so that more people can benefit from it.

+

+| Type | Platforms |

+| ------------------------------- | --------------------------------------- |

+| 🚨 **Bug Reports** | [GitHub Issue Tracker] |

+| 🎁 **Feature Requests & Ideas** | [GitHub Issue Tracker] |

+| 👩💻 **Usage Questions** | [Github Discussions] |

+| 🗯 **General Discussion** | [Github Discussions] or [Gitter Room] |

+

+[github issue tracker]: https://github.com/coqui-ai/tts/issues

+[github discussions]: https://github.com/coqui-ai/TTS/discussions

+[gitter room]: https://gitter.im/coqui-ai/TTS?utm_source=share-link&utm_medium=link&utm_campaign=share-link

+[Tutorials and Examples]: https://github.com/coqui-ai/TTS/wiki/TTS-Notebooks-and-Tutorials

+

+

+## 🔗 Links and Resources

+| Type | Links |

+| ------------------------------- | --------------------------------------- |

+| 💼 **Documentation** | [ReadTheDocs](https://tts.readthedocs.io/en/latest/)

+| 💾 **Installation** | [TTS/README.md](https://github.com/coqui-ai/TTS/tree/dev#install-tts)|

+| 👩💻 **Contributing** | [CONTRIBUTING.md](https://github.com/coqui-ai/TTS/blob/main/CONTRIBUTING.md)|

+| 📌 **Road Map** | [Main Development Plans](https://github.com/coqui-ai/TTS/issues/378)

+| 🚀 **Released Models** | [TTS Releases](https://github.com/coqui-ai/TTS/releases) and [Experimental Models](https://github.com/coqui-ai/TTS/wiki/Experimental-Released-Models)|

+

+## 🥇 TTS Performance

+

+

+## 💬 Where to ask questions

+Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly so that more people can benefit from it.

+

+| Type | Platforms |

+| ------------------------------- | --------------------------------------- |

+| 🚨 **Bug Reports** | [GitHub Issue Tracker] |

+| 🎁 **Feature Requests & Ideas** | [GitHub Issue Tracker] |

+| 👩💻 **Usage Questions** | [Github Discussions] |

+| 🗯 **General Discussion** | [Github Discussions] or [Gitter Room] |

+

+[github issue tracker]: https://github.com/coqui-ai/tts/issues

+[github discussions]: https://github.com/coqui-ai/TTS/discussions

+[gitter room]: https://gitter.im/coqui-ai/TTS?utm_source=share-link&utm_medium=link&utm_campaign=share-link

+[Tutorials and Examples]: https://github.com/coqui-ai/TTS/wiki/TTS-Notebooks-and-Tutorials

+

+

+## 🔗 Links and Resources

+| Type | Links |

+| ------------------------------- | --------------------------------------- |

+| 💼 **Documentation** | [ReadTheDocs](https://tts.readthedocs.io/en/latest/)

+| 💾 **Installation** | [TTS/README.md](https://github.com/coqui-ai/TTS/tree/dev#install-tts)|

+| 👩💻 **Contributing** | [CONTRIBUTING.md](https://github.com/coqui-ai/TTS/blob/main/CONTRIBUTING.md)|

+| 📌 **Road Map** | [Main Development Plans](https://github.com/coqui-ai/TTS/issues/378)

+| 🚀 **Released Models** | [TTS Releases](https://github.com/coqui-ai/TTS/releases) and [Experimental Models](https://github.com/coqui-ai/TTS/wiki/Experimental-Released-Models)|

+

+## 🥇 TTS Performance

+

+

+ {% if show_details == true %}

+

+

+

+ {% if show_details == true %}

+

+

+ Model details

+

+

+

+

+

+

+

+

+

+ CLI arguments:

+| CLI key | +Value | +

| {{ key }} | +{{ value }} | +

+

+ {% if model_config != None %}

+

+

+

+

+

+

+

+

+

+

+ {% endif %}

+

+ Model config:

+ +| Key | +Value | +

| {{ key }} | +{{ value }} | +

+ {% if vocoder_config != None %}

+

+

+ {% else %}

+

+

+

+

+ {% endif %}

+ Vocoder model config:

+ +| Key | +Value | +

| {{ key }} | +{{ value }} | +

+ Please start server with --show_details=true to see details.

+

+

+ {% endif %}

+

+

+

+

\ No newline at end of file

diff --git a/Indic-TTS/TTS/TTS/server/templates/index.html b/Indic-TTS/TTS/TTS/server/templates/index.html

new file mode 100644

index 0000000000000000000000000000000000000000..b0eab291a2c78e678709aba7dddb2b97b8e94b0f

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/server/templates/index.html

@@ -0,0 +1,143 @@

+

+

+

+

+

+

+

+

+

+

+  +

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

\ No newline at end of file

diff --git a/Indic-TTS/TTS/TTS/tts/__init__.py b/Indic-TTS/TTS/TTS/tts/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/Indic-TTS/TTS/TTS/tts/__pycache__/__init__.cpython-37.pyc b/Indic-TTS/TTS/TTS/tts/__pycache__/__init__.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..a8eeabe3dc13f757056639de5f79c9ed06508b3e

Binary files /dev/null and b/Indic-TTS/TTS/TTS/tts/__pycache__/__init__.cpython-37.pyc differ

diff --git a/Indic-TTS/TTS/TTS/tts/configs/__init__.py b/Indic-TTS/TTS/TTS/tts/configs/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..3146ac1c116cb807a81889b7a9ab223b9a051036

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/__init__.py

@@ -0,0 +1,17 @@

+import importlib

+import os

+from inspect import isclass

+

+# import all files under configs/

+# configs_dir = os.path.dirname(__file__)

+# for file in os.listdir(configs_dir):

+# path = os.path.join(configs_dir, file)

+# if not file.startswith("_") and not file.startswith(".") and (file.endswith(".py") or os.path.isdir(path)):

+# config_name = file[: file.find(".py")] if file.endswith(".py") else file

+# module = importlib.import_module("TTS.tts.configs." + config_name)

+# for attribute_name in dir(module):

+# attribute = getattr(module, attribute_name)

+

+# if isclass(attribute):

+# # Add the class to this package's variables

+# globals()[attribute_name] = attribute

diff --git a/Indic-TTS/TTS/TTS/tts/configs/__pycache__/__init__.cpython-37.pyc b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/__init__.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..a667a9aef2594250788e230cebb267264cfed7a2

Binary files /dev/null and b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/__init__.cpython-37.pyc differ

diff --git a/Indic-TTS/TTS/TTS/tts/configs/__pycache__/fast_pitch_config.cpython-37.pyc b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/fast_pitch_config.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..d89fa20499dddde1fa7b1db19ce52a1e9225f062

Binary files /dev/null and b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/fast_pitch_config.cpython-37.pyc differ

diff --git a/Indic-TTS/TTS/TTS/tts/configs/__pycache__/shared_configs.cpython-37.pyc b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/shared_configs.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..39c35d5d335e2dcbf15a0a8505cc4b2ee7efdfce

Binary files /dev/null and b/Indic-TTS/TTS/TTS/tts/configs/__pycache__/shared_configs.cpython-37.pyc differ

diff --git a/Indic-TTS/TTS/TTS/tts/configs/align_tts_config.py b/Indic-TTS/TTS/TTS/tts/configs/align_tts_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..317a01af53ce26914d83610a913eb44b5836dac2

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/align_tts_config.py

@@ -0,0 +1,107 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+from TTS.tts.models.align_tts import AlignTTSArgs

+

+

+@dataclass

+class AlignTTSConfig(BaseTTSConfig):

+ """Defines parameters for AlignTTS model.

+ Example:

+

+ >>> from TTS.tts.configs.align_tts_config import AlignTTSConfig

+ >>> config = AlignTTSConfig()

+

+ Args:

+ model(str):

+ Model name used for selecting the right model at initialization. Defaults to `align_tts`.

+ positional_encoding (bool):

+ enable / disable positional encoding applied to the encoder output. Defaults to True.

+ hidden_channels (int):

+ Base number of hidden channels. Defines all the layers expect ones defined by the specific encoder or decoder

+ parameters. Defaults to 256.

+ hidden_channels_dp (int):

+ Number of hidden channels of the duration predictor's layers. Defaults to 256.

+ encoder_type (str):

+ Type of the encoder used by the model. Look at `TTS.tts.layers.feed_forward.encoder` for more details.

+ Defaults to `fftransformer`.

+ encoder_params (dict):

+ Parameters used to define the encoder network. Look at `TTS.tts.layers.feed_forward.encoder` for more details.

+ Defaults to `{"hidden_channels_ffn": 1024, "num_heads": 2, "num_layers": 6, "dropout_p": 0.1}`.

+ decoder_type (str):

+ Type of the decoder used by the model. Look at `TTS.tts.layers.feed_forward.decoder` for more details.

+ Defaults to `fftransformer`.

+ decoder_params (dict):

+ Parameters used to define the decoder network. Look at `TTS.tts.layers.feed_forward.decoder` for more details.

+ Defaults to `{"hidden_channels_ffn": 1024, "num_heads": 2, "num_layers": 6, "dropout_p": 0.1}`.

+ phase_start_steps (List[int]):

+ A list of number of steps required to start the next training phase. AlignTTS has 4 different training

+ phases. Thus you need to define 4 different values to enable phase based training. If None, it

+ trains the whole model together. Defaults to None.

+ ssim_alpha (float):

+ Weight for the SSIM loss. If set <= 0, disables the SSIM loss. Defaults to 1.0.

+ duration_loss_alpha (float):

+ Weight for the duration predictor's loss. Defaults to 1.0.

+ mdn_alpha (float):

+ Weight for the MDN loss. Defaults to 1.0.

+ spec_loss_alpha (float):

+ Weight for the MSE spectrogram loss. If set <= 0, disables the L1 loss. Defaults to 1.0.

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+ noam_schedule (bool):

+ enable / disable the use of Noam LR scheduler. Defaults to False.

+ warmup_steps (int):

+ Number of warm-up steps for the Noam scheduler. Defaults 4000.

+ lr (float):

+ Initial learning rate. Defaults to `1e-3`.

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-7`.

+ min_seq_len (int):

+ Minimum input sequence length to be used at training.

+ max_seq_len (int):

+ Maximum input sequence length to be used at training. Larger values result in more VRAM usage."""

+

+ model: str = "align_tts"

+ # model specific params

+ model_args: AlignTTSArgs = field(default_factory=AlignTTSArgs)

+ phase_start_steps: List[int] = None

+

+ ssim_alpha: float = 1.0

+ spec_loss_alpha: float = 1.0

+ dur_loss_alpha: float = 1.0

+ mdn_alpha: float = 1.0

+

+ # multi-speaker settings

+ use_speaker_embedding: bool = False

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+

+ # optimizer parameters

+ optimizer: str = "Adam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = None

+ lr_scheduler_params: dict = None

+ lr: float = 1e-4

+ grad_clip: float = 5.0

+

+ # overrides

+ min_seq_len: int = 13

+ max_seq_len: int = 200

+ r: int = 1

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

diff --git a/Indic-TTS/TTS/TTS/tts/configs/fast_pitch_config.py b/Indic-TTS/TTS/TTS/tts/configs/fast_pitch_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..26ccfdd54037a63c4d5d638109cd30524f8f22ca

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/fast_pitch_config.py

@@ -0,0 +1,182 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+from TTS.tts.models.forward_tts import ForwardTTSArgs

+

+

+@dataclass

+class FastPitchConfig(BaseTTSConfig):

+ """Configure `ForwardTTS` as FastPitch model.

+

+ Example:

+

+ >>> from TTS.tts.configs.fast_pitch_config import FastPitchConfig

+ >>> config = FastPitchConfig()

+

+ Args:

+ model (str):

+ Model name used for selecting the right model at initialization. Defaults to `fast_pitch`.

+

+ base_model (str):

+ Name of the base model being configured as this model so that 🐸 TTS knows it needs to initiate

+ the base model rather than searching for the `model` implementation. Defaults to `forward_tts`.

+

+ model_args (Coqpit):

+ Model class arguments. Check `FastPitchArgs` for more details. Defaults to `FastPitchArgs()`.

+

+ data_dep_init_steps (int):

+ Number of steps used for computing normalization parameters at the beginning of the training. GlowTTS uses

+ Activation Normalization that pre-computes normalization stats at the beginning and use the same values

+ for the rest. Defaults to 10.

+

+ speakers_file (str):

+ Path to the file containing the list of speakers. Needed at inference for loading matching speaker ids to

+ speaker names. Defaults to `None`.

+

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+

+ d_vector_dim (int):

+ Dimension of the external speaker embeddings. Defaults to 0.

+

+ optimizer (str):

+ Name of the model optimizer. Defaults to `Adam`.

+

+ optimizer_params (dict):

+ Arguments of the model optimizer. Defaults to `{"betas": [0.9, 0.998], "weight_decay": 1e-6}`.

+

+ lr_scheduler (str):

+ Name of the learning rate scheduler. Defaults to `Noam`.

+

+ lr_scheduler_params (dict):

+ Arguments of the learning rate scheduler. Defaults to `{"warmup_steps": 4000}`.

+

+ lr (float):

+ Initial learning rate. Defaults to `1e-3`.

+

+ grad_clip (float):

+ Gradient norm clipping value. Defaults to `5.0`.

+

+ spec_loss_type (str):

+ Type of the spectrogram loss. Check `ForwardTTSLoss` for possible values. Defaults to `mse`.

+

+ duration_loss_type (str):

+ Type of the duration loss. Check `ForwardTTSLoss` for possible values. Defaults to `mse`.

+

+ use_ssim_loss (bool):

+ Enable/disable the use of SSIM (Structural Similarity) loss. Defaults to True.

+

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-7`.

+

+ ssim_loss_alpha (float):

+ Weight for the SSIM loss. If set 0, disables the SSIM loss. Defaults to 1.0.

+

+ dur_loss_alpha (float):

+ Weight for the duration predictor's loss. If set 0, disables the huber loss. Defaults to 1.0.

+

+ spec_loss_alpha (float):

+ Weight for the L1 spectrogram loss. If set 0, disables the L1 loss. Defaults to 1.0.

+

+ pitch_loss_alpha (float):

+ Weight for the pitch predictor's loss. If set 0, disables the pitch predictor. Defaults to 1.0.

+

+ binary_align_loss_alpha (float):

+ Weight for the binary loss. If set 0, disables the binary loss. Defaults to 1.0.

+

+ binary_loss_warmup_epochs (float):

+ Number of epochs to gradually increase the binary loss impact. Defaults to 150.

+

+ min_seq_len (int):

+ Minimum input sequence length to be used at training.

+

+ max_seq_len (int):

+ Maximum input sequence length to be used at training. Larger values result in more VRAM usage.

+ """

+

+ model: str = "fast_pitch"

+ base_model: str = "forward_tts"

+

+ # model specific params

+ model_args: ForwardTTSArgs = ForwardTTSArgs()

+

+ # data loader params

+ return_wav: bool = False

+ compute_linear_spec: bool = False

+

+ # multi-speaker settings

+ num_speakers: int = 0

+ speakers_file: str = None

+ use_speaker_embedding: bool = False

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+ d_vector_dim: int = 0

+

+ # optimizer parameters

+ optimizer: str = "Adam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = "NoamLR"

+ lr_scheduler_params: dict = field(default_factory=lambda: {"warmup_steps": 4000})

+ lr: float = 1e-4

+ grad_clip: float = 5.0

+

+ # loss params

+ spec_loss_type: str = "mse"

+ duration_loss_type: str = "mse"

+ use_ssim_loss: bool = True

+ ssim_loss_alpha: float = 1.0

+ spec_loss_alpha: float = 1.0

+ aligner_loss_alpha: float = 1.0

+ pitch_loss_alpha: float = 0.1

+ dur_loss_alpha: float = 0.1

+ binary_align_loss_alpha: float = 0.1

+ spk_encoder_loss_alpha: float = 0.1

+ binary_loss_warmup_epochs: int = 150

+ aligner_epochs: int = 1000

+

+ # overrides

+ min_seq_len: int = 13

+ max_seq_len: int = 200

+ r: int = 1 # DO NOT CHANGE

+

+ # dataset configs

+ compute_f0: bool = True

+ f0_cache_path: str = None

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

+

+ def __post_init__(self):

+ # Pass multi-speaker parameters to the model args as `model.init_multispeaker()` looks for it there.

+ if self.num_speakers > 0:

+ self.model_args.num_speakers = self.num_speakers

+

+ # speaker embedding settings

+ if self.use_speaker_embedding:

+ self.model_args.use_speaker_embedding = True

+ if self.speakers_file:

+ self.model_args.speakers_file = self.speakers_file

+

+ # d-vector settings

+ if self.use_d_vector_file:

+ self.model_args.use_d_vector_file = True

+ if self.d_vector_dim is not None and self.d_vector_dim > 0:

+ self.model_args.d_vector_dim = self.d_vector_dim

+ if self.d_vector_file:

+ self.model_args.d_vector_file = self.d_vector_file

diff --git a/Indic-TTS/TTS/TTS/tts/configs/fast_speech_config.py b/Indic-TTS/TTS/TTS/tts/configs/fast_speech_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..16a76e215f4d47d086bea827d2b6ccc61524e5c1

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/fast_speech_config.py

@@ -0,0 +1,177 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+from TTS.tts.models.forward_tts import ForwardTTSArgs

+

+

+@dataclass

+class FastSpeechConfig(BaseTTSConfig):

+ """Configure `ForwardTTS` as FastSpeech model.

+

+ Example:

+

+ >>> from TTS.tts.configs.fast_speech_config import FastSpeechConfig

+ >>> config = FastSpeechConfig()

+

+ Args:

+ model (str):

+ Model name used for selecting the right model at initialization. Defaults to `fast_pitch`.

+

+ base_model (str):

+ Name of the base model being configured as this model so that 🐸 TTS knows it needs to initiate

+ the base model rather than searching for the `model` implementation. Defaults to `forward_tts`.

+

+ model_args (Coqpit):

+ Model class arguments. Check `FastSpeechArgs` for more details. Defaults to `FastSpeechArgs()`.

+

+ data_dep_init_steps (int):

+ Number of steps used for computing normalization parameters at the beginning of the training. GlowTTS uses

+ Activation Normalization that pre-computes normalization stats at the beginning and use the same values

+ for the rest. Defaults to 10.

+

+ speakers_file (str):

+ Path to the file containing the list of speakers. Needed at inference for loading matching speaker ids to

+ speaker names. Defaults to `None`.

+

+

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+

+ d_vector_dim (int):

+ Dimension of the external speaker embeddings. Defaults to 0.

+

+ optimizer (str):

+ Name of the model optimizer. Defaults to `Adam`.

+

+ optimizer_params (dict):

+ Arguments of the model optimizer. Defaults to `{"betas": [0.9, 0.998], "weight_decay": 1e-6}`.

+

+ lr_scheduler (str):

+ Name of the learning rate scheduler. Defaults to `Noam`.

+

+ lr_scheduler_params (dict):

+ Arguments of the learning rate scheduler. Defaults to `{"warmup_steps": 4000}`.

+

+ lr (float):

+ Initial learning rate. Defaults to `1e-3`.

+

+ grad_clip (float):

+ Gradient norm clipping value. Defaults to `5.0`.

+

+ spec_loss_type (str):

+ Type of the spectrogram loss. Check `ForwardTTSLoss` for possible values. Defaults to `mse`.

+

+ duration_loss_type (str):

+ Type of the duration loss. Check `ForwardTTSLoss` for possible values. Defaults to `mse`.

+

+ use_ssim_loss (bool):

+ Enable/disable the use of SSIM (Structural Similarity) loss. Defaults to True.

+

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-7`.

+

+ ssim_loss_alpha (float):

+ Weight for the SSIM loss. If set 0, disables the SSIM loss. Defaults to 1.0.

+

+ dur_loss_alpha (float):

+ Weight for the duration predictor's loss. If set 0, disables the huber loss. Defaults to 1.0.

+

+ spec_loss_alpha (float):

+ Weight for the L1 spectrogram loss. If set 0, disables the L1 loss. Defaults to 1.0.

+

+ pitch_loss_alpha (float):

+ Weight for the pitch predictor's loss. If set 0, disables the pitch predictor. Defaults to 1.0.

+

+ binary_loss_alpha (float):

+ Weight for the binary loss. If set 0, disables the binary loss. Defaults to 1.0.

+

+ binary_loss_warmup_epochs (float):

+ Number of epochs to gradually increase the binary loss impact. Defaults to 150.

+

+ min_seq_len (int):

+ Minimum input sequence length to be used at training.

+

+ max_seq_len (int):

+ Maximum input sequence length to be used at training. Larger values result in more VRAM usage.

+ """

+

+ model: str = "fast_speech"

+ base_model: str = "forward_tts"

+

+ # model specific params

+ model_args: ForwardTTSArgs = ForwardTTSArgs(use_pitch=False)

+

+ # multi-speaker settings

+ num_speakers: int = 0

+ speakers_file: str = None

+ use_speaker_embedding: bool = False

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+ d_vector_dim: int = 0

+

+ # optimizer parameters

+ optimizer: str = "Adam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = "NoamLR"

+ lr_scheduler_params: dict = field(default_factory=lambda: {"warmup_steps": 4000})

+ lr: float = 1e-4

+ grad_clip: float = 5.0

+

+ # loss params

+ spec_loss_type: str = "mse"

+ duration_loss_type: str = "mse"

+ use_ssim_loss: bool = True

+ ssim_loss_alpha: float = 1.0

+ dur_loss_alpha: float = 1.0

+ spec_loss_alpha: float = 1.0

+ pitch_loss_alpha: float = 0.0

+ aligner_loss_alpha: float = 1.0

+ binary_align_loss_alpha: float = 1.0

+ binary_loss_warmup_epochs: int = 150

+

+ # overrides

+ min_seq_len: int = 13

+ max_seq_len: int = 200

+ r: int = 1 # DO NOT CHANGE

+

+ # dataset configs

+ compute_f0: bool = False

+ f0_cache_path: str = None

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

+

+ def __post_init__(self):

+ # Pass multi-speaker parameters to the model args as `model.init_multispeaker()` looks for it there.

+ if self.num_speakers > 0:

+ self.model_args.num_speakers = self.num_speakers

+

+ # speaker embedding settings

+ if self.use_speaker_embedding:

+ self.model_args.use_speaker_embedding = True

+ if self.speakers_file:

+ self.model_args.speakers_file = self.speakers_file

+

+ # d-vector settings

+ if self.use_d_vector_file:

+ self.model_args.use_d_vector_file = True

+ if self.d_vector_dim is not None and self.d_vector_dim > 0:

+ self.model_args.d_vector_dim = self.d_vector_dim

+ if self.d_vector_file:

+ self.model_args.d_vector_file = self.d_vector_file

diff --git a/Indic-TTS/TTS/TTS/tts/configs/glow_tts_config.py b/Indic-TTS/TTS/TTS/tts/configs/glow_tts_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..f42f3e5a510bacf1b2312ccea7d46201bbcb774f

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/glow_tts_config.py

@@ -0,0 +1,182 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+

+

+@dataclass

+class GlowTTSConfig(BaseTTSConfig):

+ """Defines parameters for GlowTTS model.

+

+ Example:

+

+ >>> from TTS.tts.configs.glow_tts_config import GlowTTSConfig

+ >>> config = GlowTTSConfig()

+

+ Args:

+ model(str):

+ Model name used for selecting the right model at initialization. Defaults to `glow_tts`.

+ encoder_type (str):

+ Type of the encoder used by the model. Look at `TTS.tts.layers.glow_tts.encoder` for more details.

+ Defaults to `rel_pos_transformers`.

+ encoder_params (dict):

+ Parameters used to define the encoder network. Look at `TTS.tts.layers.glow_tts.encoder` for more details.

+ Defaults to `{"kernel_size": 3, "dropout_p": 0.1, "num_layers": 6, "num_heads": 2, "hidden_channels_ffn": 768}`

+ use_encoder_prenet (bool):

+ enable / disable the use of a prenet for the encoder. Defaults to True.

+ hidden_channels_enc (int):

+ Number of base hidden channels used by the encoder network. It defines the input and the output channel sizes,

+ and for some encoder types internal hidden channels sizes too. Defaults to 192.

+ hidden_channels_dec (int):

+ Number of base hidden channels used by the decoder WaveNet network. Defaults to 192 as in the original work.

+ hidden_channels_dp (int):

+ Number of layer channels of the duration predictor network. Defaults to 256 as in the original work.

+ mean_only (bool):

+ If true predict only the mean values by the decoder flow. Defaults to True.

+ out_channels (int):

+ Number of channels of the model output tensor. Defaults to 80.

+ num_flow_blocks_dec (int):

+ Number of decoder blocks. Defaults to 12.

+ inference_noise_scale (float):

+ Noise scale used at inference. Defaults to 0.33.

+ kernel_size_dec (int):

+ Decoder kernel size. Defaults to 5

+ dilation_rate (int):

+ Rate to increase dilation by each layer in a decoder block. Defaults to 1.

+ num_block_layers (int):

+ Number of decoder layers in each decoder block. Defaults to 4.

+ dropout_p_dec (float):

+ Dropout rate for decoder. Defaults to 0.1.

+ num_speaker (int):

+ Number of speaker to define the size of speaker embedding layer. Defaults to 0.

+ c_in_channels (int):

+ Number of speaker embedding channels. It is set to 512 if embeddings are learned. Defaults to 0.

+ num_splits (int):

+ Number of split levels in inversible conv1x1 operation. Defaults to 4.

+ num_squeeze (int):

+ Number of squeeze levels. When squeezing channels increases and time steps reduces by the factor

+ 'num_squeeze'. Defaults to 2.

+ sigmoid_scale (bool):

+ enable/disable sigmoid scaling in decoder. Defaults to False.

+ mean_only (bool):

+ If True, encoder only computes mean value and uses constant variance for each time step. Defaults to true.

+ encoder_type (str):

+ Encoder module type. Possible values are`["rel_pos_transformer", "gated_conv", "residual_conv_bn", "time_depth_separable"]`

+ Check `TTS.tts.layers.glow_tts.encoder` for more details. Defaults to `rel_pos_transformers` as in the original paper.

+ encoder_params (dict):

+ Encoder module parameters. Defaults to None.

+ d_vector_dim (int):

+ Channels of external speaker embedding vectors. Defaults to 0.

+ data_dep_init_steps (int):

+ Number of steps used for computing normalization parameters at the beginning of the training. GlowTTS uses

+ Activation Normalization that pre-computes normalization stats at the beginning and use the same values

+ for the rest. Defaults to 10.

+ style_wav_for_test (str):

+ Path to the wav file used for changing the style of the speech. Defaults to None.

+ inference_noise_scale (float):

+ Variance used for sampling the random noise added to the decoder's input at inference. Defaults to 0.0.

+ length_scale (float):

+ Multiply the predicted durations with this value to change the speech speed. Defaults to 1.

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+ noam_schedule (bool):

+ enable / disable the use of Noam LR scheduler. Defaults to False.

+ warmup_steps (int):

+ Number of warm-up steps for the Noam scheduler. Defaults 4000.

+ lr (float):

+ Initial learning rate. Defaults to `1e-3`.

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-7`.

+ min_seq_len (int):

+ Minimum input sequence length to be used at training.

+ max_seq_len (int):

+ Maximum input sequence length to be used at training. Larger values result in more VRAM usage.

+ """

+

+ model: str = "glow_tts"

+

+ # model params

+ num_chars: int = None

+ encoder_type: str = "rel_pos_transformer"

+ encoder_params: dict = field(

+ default_factory=lambda: {

+ "kernel_size": 3,

+ "dropout_p": 0.1,

+ "num_layers": 6,

+ "num_heads": 2,

+ "hidden_channels_ffn": 768,

+ }

+ )

+ use_encoder_prenet: bool = True

+ hidden_channels_enc: int = 192

+ hidden_channels_dec: int = 192

+ hidden_channels_dp: int = 256

+ dropout_p_dp: float = 0.1

+ dropout_p_dec: float = 0.05

+ mean_only: bool = True

+ out_channels: int = 80

+ num_flow_blocks_dec: int = 12

+ inference_noise_scale: float = 0.33

+ kernel_size_dec: int = 5

+ dilation_rate: int = 1

+ num_block_layers: int = 4

+ num_speakers: int = 0

+ c_in_channels: int = 0

+ num_splits: int = 4

+ num_squeeze: int = 2

+ sigmoid_scale: bool = False

+ encoder_type: str = "rel_pos_transformer"

+ encoder_params: dict = field(

+ default_factory=lambda: {

+ "kernel_size": 3,

+ "dropout_p": 0.1,

+ "num_layers": 6,

+ "num_heads": 2,

+ "hidden_channels_ffn": 768,

+ "input_length": None,

+ }

+ )

+ d_vector_dim: int = 0

+

+ # training params

+ data_dep_init_steps: int = 10

+

+ # inference params

+ style_wav_for_test: str = None

+ inference_noise_scale: float = 0.0

+ length_scale: float = 1.0

+

+ # multi-speaker settings

+ use_speaker_embedding: bool = False

+ speakers_file: str = None

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+

+ # optimizer parameters

+ optimizer: str = "RAdam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = "NoamLR"

+ lr_scheduler_params: dict = field(default_factory=lambda: {"warmup_steps": 4000})

+ grad_clip: float = 5.0

+ lr: float = 1e-3

+

+ # overrides

+ min_seq_len: int = 3

+ max_seq_len: int = 500

+ r: int = 1 # DO NOT CHANGE - TODO: make this immutable once coqpit implements it.

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

diff --git a/Indic-TTS/TTS/TTS/tts/configs/shared_configs.py b/Indic-TTS/TTS/TTS/tts/configs/shared_configs.py

new file mode 100644

index 0000000000000000000000000000000000000000..4704687c268780ed518f8c6a4ca64808dbab8e65

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/shared_configs.py

@@ -0,0 +1,335 @@

+from dataclasses import asdict, dataclass, field

+from typing import Dict, List

+

+from coqpit import Coqpit, check_argument

+

+from TTS.config import BaseAudioConfig, BaseDatasetConfig, BaseTrainingConfig

+

+

+@dataclass

+class GSTConfig(Coqpit):

+ """Defines the Global Style Token Module

+

+ Args:

+ gst_style_input_wav (str):

+ Path to the wav file used to define the style of the output speech at inference. Defaults to None.

+

+ gst_style_input_weights (dict):

+ Defines the weights for each style token used at inference. Defaults to None.

+

+ gst_embedding_dim (int):

+ Defines the size of the GST embedding vector dimensions. Defaults to 256.

+

+ gst_num_heads (int):

+ Number of attention heads used by the multi-head attention. Defaults to 4.

+

+ gst_num_style_tokens (int):

+ Number of style token vectors. Defaults to 10.

+ """

+

+ gst_style_input_wav: str = None

+ gst_style_input_weights: dict = None

+ gst_embedding_dim: int = 256

+ gst_use_speaker_embedding: bool = False

+ gst_num_heads: int = 4

+ gst_num_style_tokens: int = 10

+

+ def check_values(

+ self,

+ ):

+ """Check config fields"""

+ c = asdict(self)

+ super().check_values()

+ check_argument("gst_style_input_weights", c, restricted=False)

+ check_argument("gst_style_input_wav", c, restricted=False)

+ check_argument("gst_embedding_dim", c, restricted=True, min_val=0, max_val=1000)

+ check_argument("gst_use_speaker_embedding", c, restricted=False)

+ check_argument("gst_num_heads", c, restricted=True, min_val=2, max_val=10)

+ check_argument("gst_num_style_tokens", c, restricted=True, min_val=1, max_val=1000)

+

+

+@dataclass

+class CapacitronVAEConfig(Coqpit):

+ """Defines the capacitron VAE Module

+ Args:

+ capacitron_capacity (int):

+ Defines the variational capacity limit of the prosody embeddings. Defaults to 150.

+ capacitron_VAE_embedding_dim (int):

+ Defines the size of the Capacitron embedding vector dimension. Defaults to 128.

+ capacitron_use_text_summary_embeddings (bool):

+ If True, use a text summary embedding in Capacitron. Defaults to True.

+ capacitron_text_summary_embedding_dim (int):

+ Defines the size of the capacitron text embedding vector dimension. Defaults to 128.

+ capacitron_use_speaker_embedding (bool):

+ if True use speaker embeddings in Capacitron. Defaults to False.

+ capacitron_VAE_loss_alpha (float):

+ Weight for the VAE loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ capacitron_grad_clip (float):

+ Gradient clipping value for all gradients except beta. Defaults to 5.0

+ """

+

+ capacitron_loss_alpha: int = 1

+ capacitron_capacity: int = 150

+ capacitron_VAE_embedding_dim: int = 128

+ capacitron_use_text_summary_embeddings: bool = True

+ capacitron_text_summary_embedding_dim: int = 128

+ capacitron_use_speaker_embedding: bool = False

+ capacitron_VAE_loss_alpha: float = 0.25

+ capacitron_grad_clip: float = 5.0

+

+ def check_values(

+ self,

+ ):

+ """Check config fields"""

+ c = asdict(self)

+ super().check_values()

+ check_argument("capacitron_capacity", c, restricted=True, min_val=10, max_val=500)

+ check_argument("capacitron_VAE_embedding_dim", c, restricted=True, min_val=16, max_val=1024)

+ check_argument("capacitron_use_speaker_embedding", c, restricted=False)

+ check_argument("capacitron_text_summary_embedding_dim", c, restricted=False, min_val=16, max_val=512)

+ check_argument("capacitron_VAE_loss_alpha", c, restricted=False)

+ check_argument("capacitron_grad_clip", c, restricted=False)

+

+

+@dataclass

+class CharactersConfig(Coqpit):

+ """Defines arguments for the `BaseCharacters` or `BaseVocabulary` and their subclasses.

+

+ Args:

+ characters_class (str):

+ Defines the class of the characters used. If None, we pick ```Phonemes``` or ```Graphemes``` based on

+ the configuration. Defaults to None.

+

+ vocab_dict (dict):

+ Defines the vocabulary dictionary used to encode the characters. Defaults to None.

+

+ pad (str):

+ characters in place of empty padding. Defaults to None.

+

+ eos (str):

+ characters showing the end of a sentence. Defaults to None.

+

+ bos (str):

+ characters showing the beginning of a sentence. Defaults to None.

+

+ blank (str):

+ Optional character used between characters by some models for better prosody. Defaults to `_blank`.

+

+ characters (str):

+ character set used by the model. Characters not in this list are ignored when converting input text to

+ a list of sequence IDs. Defaults to None.

+

+ punctuations (str):

+ characters considered as punctuation as parsing the input sentence. Defaults to None.

+

+ phonemes (str):

+ characters considered as parsing phonemes. This is only for backwards compat. Use `characters` for new

+ models. Defaults to None.

+

+ is_unique (bool):

+ remove any duplicate characters in the character lists. It is a bandaid for compatibility with the old

+ models trained with character lists with duplicates. Defaults to True.

+

+ is_sorted (bool):

+ Sort the characters in alphabetical order. Defaults to True.

+ """

+

+ characters_class: str = None

+

+ # using BaseVocabulary

+ vocab_dict: Dict = None

+

+ # using on BaseCharacters

+ pad: str = None

+ eos: str = None

+ bos: str = None

+ blank: str = None

+ characters: str = None

+ punctuations: str = None

+ phonemes: str = None

+ is_unique: bool = True # for backwards compatibility of models trained with char sets with duplicates

+ is_sorted: bool = True

+

+

+@dataclass

+class BaseTTSConfig(BaseTrainingConfig):

+ """Shared parameters among all the tts models.

+

+ Args:

+

+ audio (BaseAudioConfig):

+ Audio processor config object instance.

+

+ use_phonemes (bool):

+ enable / disable phoneme use.

+

+ phonemizer (str):

+ Name of the phonemizer to use. If set None, the phonemizer will be selected by `phoneme_language`.

+ Defaults to None.

+

+ phoneme_language (str):

+ Language code for the phonemizer. You can check the list of supported languages by running

+ `python TTS/tts/utils/text/phonemizers/__init__.py`. Defaults to None.

+

+ compute_input_seq_cache (bool):

+ enable / disable precomputation of the phoneme sequences. At the expense of some delay at the beginning of

+ the training, It allows faster data loader time and precise limitation with `max_seq_len` and

+ `min_seq_len`.

+

+ text_cleaner (str):

+ Name of the text cleaner used for cleaning and formatting transcripts.

+

+ enable_eos_bos_chars (bool):

+ enable / disable the use of eos and bos characters.

+

+ test_senteces_file (str):

+ Path to a txt file that has sentences used at test time. The file must have a sentence per line.

+

+ phoneme_cache_path (str):

+ Path to the output folder caching the computed phonemes for each sample.

+

+ characters (CharactersConfig):

+ Instance of a CharactersConfig class.

+

+ batch_group_size (int):

+ Size of the batch groups used for bucketing. By default, the dataloader orders samples by the sequence

+ length for a more efficient and stable training. If `batch_group_size > 1` then it performs bucketing to

+ prevent using the same batches for each epoch.

+

+ loss_masking (bool):

+ enable / disable masking loss values against padded segments of samples in a batch.

+

+ sort_by_audio_len (bool):

+ If true, dataloder sorts the data by audio length else sorts by the input text length. Defaults to `False`.

+

+ min_text_len (int):

+ Minimum length of input text to be used. All shorter samples will be ignored. Defaults to 0.

+

+ max_text_len (int):

+ Maximum length of input text to be used. All longer samples will be ignored. Defaults to float("inf").

+

+ min_audio_len (int):

+ Minimum length of input audio to be used. All shorter samples will be ignored. Defaults to 0.

+

+ max_audio_len (int):

+ Maximum length of input audio to be used. All longer samples will be ignored. The maximum length in the

+ dataset defines the VRAM used in the training. Hence, pay attention to this value if you encounter an

+ OOM error in training. Defaults to float("inf").

+

+ compute_f0 (int):

+ (Not in use yet).

+

+ compute_linear_spec (bool):

+ If True data loader computes and returns linear spectrograms alongside the other data.

+

+ precompute_num_workers (int):

+ Number of workers to precompute features. Defaults to 0.

+

+ use_noise_augment (bool):

+ Augment the input audio with random noise.

+

+ start_by_longest (bool):

+ If True, the data loader will start loading the longest batch first. It is useful for checking OOM issues.

+ Defaults to False.

+

+ add_blank (bool):

+ Add blank characters between each other two characters. It improves performance for some models at expense

+ of slower run-time due to the longer input sequence.

+

+ datasets (List[BaseDatasetConfig]):

+ List of datasets used for training. If multiple datasets are provided, they are merged and used together

+ for training.

+

+ optimizer (str):

+ Optimizer used for the training. Set one from `torch.optim.Optimizer` or `TTS.utils.training`.

+ Defaults to ``.

+

+ optimizer_params (dict):

+ Optimizer kwargs. Defaults to `{"betas": [0.8, 0.99], "weight_decay": 0.0}`

+

+ lr_scheduler (str):

+ Learning rate scheduler for the training. Use one from `torch.optim.Scheduler` schedulers or

+ `TTS.utils.training`. Defaults to ``.

+

+ lr_scheduler_params (dict):

+ Parameters for the generator learning rate scheduler. Defaults to `{"warmup": 4000}`.

+

+ test_sentences (List[str]):

+ List of sentences to be used at testing. Defaults to '[]'

+

+ eval_split_max_size (int):

+ Number maximum of samples to be used for evaluation in proportion split. Defaults to None (Disabled).

+

+ eval_split_size (float):

+ If between 0.0 and 1.0 represents the proportion of the dataset to include in the evaluation set.

+ If > 1, represents the absolute number of evaluation samples. Defaults to 0.01 (1%).

+

+ use_speaker_weighted_sampler (bool):

+ Enable / Disable the batch balancer by speaker. Defaults to ```False```.

+

+ speaker_weighted_sampler_alpha (float):

+ Number that control the influence of the speaker sampler weights. Defaults to ```1.0```.

+

+ use_language_weighted_sampler (bool):

+ Enable / Disable the batch balancer by language. Defaults to ```False```.

+

+ language_weighted_sampler_alpha (float):

+ Number that control the influence of the language sampler weights. Defaults to ```1.0```.

+

+ use_length_weighted_sampler (bool):

+ Enable / Disable the batch balancer by audio length. If enabled the dataset will be divided

+ into 10 buckets considering the min and max audio of the dataset. The sampler weights will be

+ computed forcing to have the same quantity of data for each bucket in each training batch. Defaults to ```False```.

+

+ length_weighted_sampler_alpha (float):

+ Number that control the influence of the length sampler weights. Defaults to ```1.0```.

+ """

+

+ audio: BaseAudioConfig = field(default_factory=BaseAudioConfig)

+ # phoneme settings

+ use_phonemes: bool = False

+ phonemizer: str = None

+ phoneme_language: str = None

+ compute_input_seq_cache: bool = False

+ text_cleaner: str = None

+ enable_eos_bos_chars: bool = False

+ test_sentences_file: str = ""

+ phoneme_cache_path: str = None

+ # vocabulary parameters

+ characters: CharactersConfig = None

+ add_blank: bool = False

+ # training params

+ batch_group_size: int = 0

+ loss_masking: bool = None

+ # dataloading

+ sort_by_audio_len: bool = False

+ min_audio_len: int = 1

+ max_audio_len: int = float("inf")

+ min_text_len: int = 1

+ max_text_len: int = float("inf")

+ compute_f0: bool = False

+ compute_linear_spec: bool = False

+ precompute_num_workers: int = 0

+ use_noise_augment: bool = False

+ start_by_longest: bool = False

+ # dataset

+ datasets: List[BaseDatasetConfig] = field(default_factory=lambda: [BaseDatasetConfig()])

+ # optimizer

+ optimizer: str = "radam"

+ optimizer_params: dict = None

+ # scheduler

+ lr_scheduler: str = ""

+ lr_scheduler_params: dict = field(default_factory=lambda: {})

+ # testing

+ test_sentences: List[str] = field(default_factory=lambda: [])

+ # evaluation

+ eval_split_max_size: int = None

+ eval_split_size: float = 0.01

+ # weighted samplers

+ use_speaker_weighted_sampler: bool = False

+ speaker_weighted_sampler_alpha: float = 1.0

+ use_language_weighted_sampler: bool = False

+ language_weighted_sampler_alpha: float = 1.0

+ use_length_weighted_sampler: bool = False

+ length_weighted_sampler_alpha: float = 1.0

diff --git a/Indic-TTS/TTS/TTS/tts/configs/speedy_speech_config.py b/Indic-TTS/TTS/TTS/tts/configs/speedy_speech_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..4bf5101fcad2479e87836c827658c88addfd7cc6

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/speedy_speech_config.py

@@ -0,0 +1,192 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+from TTS.tts.models.forward_tts import ForwardTTSArgs

+

+

+@dataclass

+class SpeedySpeechConfig(BaseTTSConfig):

+ """Configure `ForwardTTS` as SpeedySpeech model.

+

+ Example:

+

+ >>> from TTS.tts.configs.speedy_speech_config import SpeedySpeechConfig

+ >>> config = SpeedySpeechConfig()

+

+ Args:

+ model (str):

+ Model name used for selecting the right model at initialization. Defaults to `speedy_speech`.

+

+ base_model (str):

+ Name of the base model being configured as this model so that 🐸 TTS knows it needs to initiate

+ the base model rather than searching for the `model` implementation. Defaults to `forward_tts`.

+

+ model_args (Coqpit):

+ Model class arguments. Check `FastPitchArgs` for more details. Defaults to `FastPitchArgs()`.

+

+ data_dep_init_steps (int):

+ Number of steps used for computing normalization parameters at the beginning of the training. GlowTTS uses

+ Activation Normalization that pre-computes normalization stats at the beginning and use the same values

+ for the rest. Defaults to 10.

+

+ speakers_file (str):

+ Path to the file containing the list of speakers. Needed at inference for loading matching speaker ids to

+ speaker names. Defaults to `None`.

+

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+

+ d_vector_dim (int):

+ Dimension of the external speaker embeddings. Defaults to 0.

+

+ optimizer (str):

+ Name of the model optimizer. Defaults to `RAdam`.

+

+ optimizer_params (dict):

+ Arguments of the model optimizer. Defaults to `{"betas": [0.9, 0.998], "weight_decay": 1e-6}`.

+

+ lr_scheduler (str):

+ Name of the learning rate scheduler. Defaults to `Noam`.

+

+ lr_scheduler_params (dict):

+ Arguments of the learning rate scheduler. Defaults to `{"warmup_steps": 4000}`.

+

+ lr (float):

+ Initial learning rate. Defaults to `1e-3`.

+

+ grad_clip (float):

+ Gradient norm clipping value. Defaults to `5.0`.

+

+ spec_loss_type (str):

+ Type of the spectrogram loss. Check `ForwardTTSLoss` for possible values. Defaults to `l1`.

+

+ duration_loss_type (str):

+ Type of the duration loss. Check `ForwardTTSLoss` for possible values. Defaults to `huber`.

+

+ use_ssim_loss (bool):

+ Enable/disable the use of SSIM (Structural Similarity) loss. Defaults to True.

+

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-7`.

+

+ ssim_loss_alpha (float):

+ Weight for the SSIM loss. If set 0, disables the SSIM loss. Defaults to 1.0.

+

+ dur_loss_alpha (float):

+ Weight for the duration predictor's loss. If set 0, disables the huber loss. Defaults to 1.0.

+

+ spec_loss_alpha (float):

+ Weight for the L1 spectrogram loss. If set 0, disables the L1 loss. Defaults to 1.0.

+

+ binary_loss_alpha (float):

+ Weight for the binary loss. If set 0, disables the binary loss. Defaults to 1.0.

+

+ binary_loss_warmup_epochs (float):

+ Number of epochs to gradually increase the binary loss impact. Defaults to 150.

+

+ min_seq_len (int):

+ Minimum input sequence length to be used at training.

+

+ max_seq_len (int):

+ Maximum input sequence length to be used at training. Larger values result in more VRAM usage.

+ """

+

+ model: str = "speedy_speech"

+ base_model: str = "forward_tts"

+

+ # set model args as SpeedySpeech

+ model_args: ForwardTTSArgs = ForwardTTSArgs(

+ use_pitch=False,

+ encoder_type="residual_conv_bn",

+ encoder_params={

+ "kernel_size": 4,

+ "dilations": 4 * [1, 2, 4] + [1],

+ "num_conv_blocks": 2,

+ "num_res_blocks": 13,

+ },

+ decoder_type="residual_conv_bn",

+ decoder_params={

+ "kernel_size": 4,

+ "dilations": 4 * [1, 2, 4, 8] + [1],

+ "num_conv_blocks": 2,

+ "num_res_blocks": 17,

+ },

+ out_channels=80,

+ hidden_channels=128,

+ positional_encoding=True,

+ detach_duration_predictor=True,

+ )

+

+ # multi-speaker settings

+ num_speakers: int = 0

+ speakers_file: str = None

+ use_speaker_embedding: bool = False

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+ d_vector_dim: int = 0

+

+ # optimizer parameters

+ optimizer: str = "Adam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = "NoamLR"

+ lr_scheduler_params: dict = field(default_factory=lambda: {"warmup_steps": 4000})

+ lr: float = 1e-4

+ grad_clip: float = 5.0

+

+ # loss params

+ spec_loss_type: str = "l1"

+ duration_loss_type: str = "huber"

+ use_ssim_loss: bool = False

+ ssim_loss_alpha: float = 1.0

+ dur_loss_alpha: float = 1.0

+ spec_loss_alpha: float = 1.0

+ aligner_loss_alpha: float = 1.0

+ binary_align_loss_alpha: float = 0.3

+ binary_loss_warmup_epochs: int = 150

+

+ # overrides

+ min_seq_len: int = 13

+ max_seq_len: int = 200

+ r: int = 1 # DO NOT CHANGE

+

+ # dataset configs

+ compute_f0: bool = False

+ f0_cache_path: str = None

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

+

+ def __post_init__(self):

+ # Pass multi-speaker parameters to the model args as `model.init_multispeaker()` looks for it there.

+ if self.num_speakers > 0:

+ self.model_args.num_speakers = self.num_speakers

+

+ # speaker embedding settings

+ if self.use_speaker_embedding:

+ self.model_args.use_speaker_embedding = True

+ if self.speakers_file:

+ self.model_args.speakers_file = self.speakers_file

+

+ # d-vector settings

+ if self.use_d_vector_file:

+ self.model_args.use_d_vector_file = True

+ if self.d_vector_dim is not None and self.d_vector_dim > 0:

+ self.model_args.d_vector_dim = self.d_vector_dim

+ if self.d_vector_file:

+ self.model_args.d_vector_file = self.d_vector_file

diff --git a/Indic-TTS/TTS/TTS/tts/configs/tacotron2_config.py b/Indic-TTS/TTS/TTS/tts/configs/tacotron2_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..95b65202218cf3aa0dd70c8d8cd55a3f913ed308

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/tacotron2_config.py

@@ -0,0 +1,21 @@

+from dataclasses import dataclass

+

+from TTS.tts.configs.tacotron_config import TacotronConfig

+

+

+@dataclass

+class Tacotron2Config(TacotronConfig):

+ """Defines parameters for Tacotron2 based models.

+

+ Example:

+

+ >>> from TTS.tts.configs.tacotron2_config import Tacotron2Config

+ >>> config = Tacotron2Config()

+

+ Check `TacotronConfig` for argument descriptions.

+ """

+

+ model: str = "tacotron2"

+ out_channels: int = 80

+ encoder_in_features: int = 512

+ decoder_in_features: int = 512

diff --git a/Indic-TTS/TTS/TTS/tts/configs/tacotron_config.py b/Indic-TTS/TTS/TTS/tts/configs/tacotron_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..e25609ffcf685fae91fc40eaa2201e728ebc73c4

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/tacotron_config.py

@@ -0,0 +1,235 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig, CapacitronVAEConfig, GSTConfig

+

+

+@dataclass

+class TacotronConfig(BaseTTSConfig):

+ """Defines parameters for Tacotron based models.

+

+ Example:

+

+ >>> from TTS.tts.configs.tacotron_config import TacotronConfig

+ >>> config = TacotronConfig()

+

+ Args:

+ model (str):

+ Model name used to select the right model class to initilize. Defaults to `Tacotron`.

+ use_gst (bool):

+ enable / disable the use of Global Style Token modules. Defaults to False.

+ gst (GSTConfig):

+ Instance of `GSTConfig` class.

+ gst_style_input (str):

+ Path to the wav file used at inference to set the speech style through GST. If `GST` is enabled and

+ this is not defined, the model uses a zero vector as an input. Defaults to None.

+ use_capacitron_vae (bool):

+ enable / disable the use of Capacitron modules. Defaults to False.

+ capacitron_vae (CapacitronConfig):

+ Instance of `CapacitronConfig` class.

+ num_chars (int):

+ Number of characters used by the model. It must be defined before initializing the model. Defaults to None.

+ num_speakers (int):

+ Number of speakers for multi-speaker models. Defaults to 1.

+ r (int):

+ Initial number of output frames that the decoder computed per iteration. Larger values makes training and inference

+ faster but reduces the quality of the output frames. This must be equal to the largest `r` value used in

+ `gradual_training` schedule. Defaults to 1.

+ gradual_training (List[List]):

+ Parameters for the gradual training schedule. It is in the form `[[a, b, c], [d ,e ,f] ..]` where `a` is

+ the step number to start using the rest of the values, `b` is the `r` value and `c` is the batch size.

+ If sets None, no gradual training is used. Defaults to None.

+ memory_size (int):

+ Defines the number of previous frames used by the Prenet. If set to < 0, then it uses only the last frame.

+ Defaults to -1.

+ prenet_type (str):

+ `original` or `bn`. `original` sets the default Prenet and `bn` uses Batch Normalization version of the

+ Prenet. Defaults to `original`.

+ prenet_dropout (bool):

+ enables / disables the use of dropout in the Prenet. Defaults to True.

+ prenet_dropout_at_inference (bool):

+ enable / disable the use of dropout in the Prenet at the inference time. Defaults to False.

+ stopnet (bool):

+ enable /disable the Stopnet that predicts the end of the decoder sequence. Defaults to True.

+ stopnet_pos_weight (float):

+ Weight that is applied to over-weight positive instances in the Stopnet loss. Use larger values with

+ datasets with longer sentences. Defaults to 10.

+ max_decoder_steps (int):

+ Max number of steps allowed for the decoder. Defaults to 50.

+ encoder_in_features (int):

+ Channels of encoder input and character embedding tensors. Defaults to 256.

+ decoder_in_features (int):

+ Channels of decoder input and encoder output tensors. Defaults to 256.

+ out_channels (int):

+ Channels of the final model output. It must match the spectragram size. Defaults to 80.

+ separate_stopnet (bool):

+ Use a distinct Stopnet which is trained separately from the rest of the model. Defaults to True.

+ attention_type (str):

+ attention type. Check ```TTS.tts.layers.attentions.init_attn```. Defaults to 'original'.

+ attention_heads (int):

+ Number of attention heads for GMM attention. Defaults to 5.

+ windowing (bool):

+ It especially useful at inference to keep attention alignment diagonal. Defaults to False.

+ use_forward_attn (bool):

+ It is only valid if ```attn_type``` is ```original```. Defaults to False.

+ forward_attn_mask (bool):

+ enable/disable extra masking over forward attention. It is useful at inference to prevent

+ possible attention failures. Defaults to False.

+ transition_agent (bool):

+ enable/disable transition agent in forward attention. Defaults to False.

+ location_attn (bool):

+ enable/disable location sensitive attention as in the original Tacotron2 paper.

+ It is only valid if ```attn_type``` is ```original```. Defaults to True.

+ bidirectional_decoder (bool):

+ enable/disable bidirectional decoding. Defaults to False.

+ double_decoder_consistency (bool):

+ enable/disable double decoder consistency. Defaults to False.

+ ddc_r (int):

+ reduction rate used by the coarse decoder when `double_decoder_consistency` is in use. Set this

+ as a multiple of the `r` value. Defaults to 6.

+ speakers_file (str):

+ Path to the speaker mapping file for the Speaker Manager. Defaults to None.

+ use_speaker_embedding (bool):

+ enable / disable using speaker embeddings for multi-speaker models. If set True, the model is

+ in the multi-speaker mode. Defaults to False.

+ use_d_vector_file (bool):

+ enable /disable using external speaker embeddings in place of the learned embeddings. Defaults to False.

+ d_vector_file (str):

+ Path to the file including pre-computed speaker embeddings. Defaults to None.

+ optimizer (str):

+ Optimizer used for the training. Set one from `torch.optim.Optimizer` or `TTS.utils.training`.

+ Defaults to `RAdam`.

+ optimizer_params (dict):

+ Optimizer kwargs. Defaults to `{"betas": [0.8, 0.99], "weight_decay": 0.0}`

+ lr_scheduler (str):

+ Learning rate scheduler for the training. Use one from `torch.optim.Scheduler` schedulers or

+ `TTS.utils.training`. Defaults to `NoamLR`.

+ lr_scheduler_params (dict):

+ Parameters for the generator learning rate scheduler. Defaults to `{"warmup": 4000}`.

+ lr (float):

+ Initial learning rate. Defaults to `1e-4`.

+ wd (float):

+ Weight decay coefficient. Defaults to `1e-6`.

+ grad_clip (float):

+ Gradient clipping threshold. Defaults to `5`.

+ seq_len_norm (bool):

+ enable / disable the sequnce length normalization in the loss functions. If set True, loss of a sample

+ is divided by the sequence length. Defaults to False.

+ loss_masking (bool):

+ enable / disable masking the paddings of the samples in loss computation. Defaults to True.

+ decoder_loss_alpha (float):

+ Weight for the decoder loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ postnet_loss_alpha (float):

+ Weight for the postnet loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ postnet_diff_spec_alpha (float):

+ Weight for the postnet differential loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ decoder_diff_spec_alpha (float):

+

+ Weight for the decoder differential loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ decoder_ssim_alpha (float):

+ Weight for the decoder SSIM loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ postnet_ssim_alpha (float):

+ Weight for the postnet SSIM loss of the Tacotron model. If set less than or equal to zero, it disables the

+ corresponding loss function. Defaults to 0.25

+ ga_alpha (float):

+ Weight for the guided attention loss. If set less than or equal to zero, it disables the corresponding loss

+ function. Defaults to 5.

+ """

+

+ model: str = "tacotron"

+ # model_params: TacotronArgs = field(default_factory=lambda: TacotronArgs())

+ use_gst: bool = False

+ gst: GSTConfig = None

+ gst_style_input: str = None

+

+ use_capacitron_vae: bool = False

+ capacitron_vae: CapacitronVAEConfig = None

+

+ # model specific params

+ num_speakers: int = 1

+ num_chars: int = 0

+ r: int = 2

+ gradual_training: List[List[int]] = None

+ memory_size: int = -1

+ prenet_type: str = "original"

+ prenet_dropout: bool = True

+ prenet_dropout_at_inference: bool = False

+ stopnet: bool = True

+ separate_stopnet: bool = True

+ stopnet_pos_weight: float = 10.0

+ max_decoder_steps: int = 500

+ encoder_in_features: int = 256

+ decoder_in_features: int = 256

+ decoder_output_dim: int = 80

+ out_channels: int = 513

+

+ # attention layers

+ attention_type: str = "original"

+ attention_heads: int = None

+ attention_norm: str = "sigmoid"

+ attention_win: bool = False

+ windowing: bool = False

+ use_forward_attn: bool = False

+ forward_attn_mask: bool = False

+ transition_agent: bool = False

+ location_attn: bool = True

+

+ # advance methods

+ bidirectional_decoder: bool = False

+ double_decoder_consistency: bool = False

+ ddc_r: int = 6

+

+ # multi-speaker settings

+ speakers_file: str = None

+ use_speaker_embedding: bool = False

+ speaker_embedding_dim: int = 512

+ use_d_vector_file: bool = False

+ d_vector_file: str = False

+ d_vector_dim: int = None

+

+ # optimizer parameters

+ optimizer: str = "RAdam"

+ optimizer_params: dict = field(default_factory=lambda: {"betas": [0.9, 0.998], "weight_decay": 1e-6})

+ lr_scheduler: str = "NoamLR"

+ lr_scheduler_params: dict = field(default_factory=lambda: {"warmup_steps": 4000})

+ lr: float = 1e-4

+ grad_clip: float = 5.0

+ seq_len_norm: bool = False

+ loss_masking: bool = True

+

+ # loss params

+ decoder_loss_alpha: float = 0.25

+ postnet_loss_alpha: float = 0.25

+ postnet_diff_spec_alpha: float = 0.25

+ decoder_diff_spec_alpha: float = 0.25

+ decoder_ssim_alpha: float = 0.25

+ postnet_ssim_alpha: float = 0.25

+ ga_alpha: float = 5.0

+

+ # testing

+ test_sentences: List[str] = field(

+ default_factory=lambda: [

+ "It took me quite a long time to develop a voice, and now that I have it I'm not going to be silent.",

+ "Be a voice, not an echo.",

+ "I'm sorry Dave. I'm afraid I can't do that.",

+ "This cake is great. It's so delicious and moist.",

+ "Prior to November 22, 1963.",

+ ]

+ )

+

+ def check_values(self):

+ if self.gradual_training:

+ assert (

+ self.gradual_training[0][1] == self.r

+ ), f"[!] the first scheduled gradual training `r` must be equal to the model's `r` value. {self.gradual_training[0][1]} vs {self.r}"

+ if self.model == "tacotron" and self.audio is not None:

+ assert self.out_channels == (

+ self.audio.fft_size // 2 + 1

+ ), f"{self.out_channels} vs {self.audio.fft_size // 2 + 1}"

+ if self.model == "tacotron2" and self.audio is not None:

+ assert self.out_channels == self.audio.num_mels

diff --git a/Indic-TTS/TTS/TTS/tts/configs/vits_config.py b/Indic-TTS/TTS/TTS/tts/configs/vits_config.py

new file mode 100644

index 0000000000000000000000000000000000000000..a8c7f91dcd965e0d1f1e3f2b78de321e27af3b95

--- /dev/null

+++ b/Indic-TTS/TTS/TTS/tts/configs/vits_config.py

@@ -0,0 +1,155 @@

+from dataclasses import dataclass, field

+from typing import List

+

+from TTS.tts.configs.shared_configs import BaseTTSConfig

+from TTS.tts.models.vits import VitsArgs

+

+

+@dataclass

+class VitsConfig(BaseTTSConfig):

+ """Defines parameters for VITS End2End TTS model.

+

+ Args:

+ model (str):

+ Model name. Do not change unless you know what you are doing.

+

+ model_args (VitsArgs):

+ Model architecture arguments. Defaults to `VitsArgs()`.

+

+ grad_clip (List):

+ Gradient clipping thresholds for each optimizer. Defaults to `[1000.0, 1000.0]`.

+

+ lr_gen (float):

+ Initial learning rate for the generator. Defaults to 0.0002.

+

+ lr_disc (float):

+ Initial learning rate for the discriminator. Defaults to 0.0002.

+

+ lr_scheduler_gen (str):

+ Name of the learning rate scheduler for the generator. One of the `torch.optim.lr_scheduler.*`. Defaults to

+ `ExponentialLR`.

+

+ lr_scheduler_gen_params (dict):

+ Parameters for the learning rate scheduler of the generator. Defaults to `{'gamma': 0.999875, "last_epoch":-1}`.

+

+ lr_scheduler_disc (str):

+ Name of the learning rate scheduler for the discriminator. One of the `torch.optim.lr_scheduler.*`. Defaults to

+ `ExponentialLR`.

+

+ lr_scheduler_disc_params (dict):

+ Parameters for the learning rate scheduler of the discriminator. Defaults to `{'gamma': 0.999875, "last_epoch":-1}`.

+

+ scheduler_after_epoch (bool):

+ If true, step the schedulers after each epoch else after each step. Defaults to `False`.

+

+ optimizer (str):

+ Name of the optimizer to use with both the generator and the discriminator networks. One of the

+ `torch.optim.*`. Defaults to `AdamW`.

+

+ kl_loss_alpha (float):

+ Loss weight for KL loss. Defaults to 1.0.

+

+ disc_loss_alpha (float):

+ Loss weight for the discriminator loss. Defaults to 1.0.

+

+ gen_loss_alpha (float):

+ Loss weight for the generator loss. Defaults to 1.0.

+

+ feat_loss_alpha (float):

+ Loss weight for the feature matching loss. Defaults to 1.0.

+

+ mel_loss_alpha (float):

+ Loss weight for the mel loss. Defaults to 45.0.

+

+ return_wav (bool):